Abstract

Microarray data analysis is a relatively new technology that aims to support precise medical diagnosis and the selection of proper treatments for various diseases by analyzing a massive number of genes under various experimental conditions. Conventional data classification techniques suffer from overfitting and from the high dimensionality of gene expression data. Therefore, feature (gene) selection plays a vital role in handling high-dimensional data. Data science concepts are widely employed in data classification problems to identify different class labels. In this context, we developed a novel red fox optimizer with deep-learning-enabled microarray gene expression classification (RFODL-MGEC) model. The presented RFODL-MGEC model aims to improve classification performance by selecting appropriate features. It uses a novel red fox optimizer (RFO)-based feature selection approach to derive an optimal subset of features. Moreover, the RFODL-MGEC model employs a bidirectional cascaded deep neural network (BCDNN) for data classification, and the parameters of the BCDNN were tuned using the chaos game optimization (CGO) algorithm. Comprehensive experiments on benchmark datasets indicated that the RFODL-MGEC model accomplished superior results for subtype classification. Therefore, the RFODL-MGEC model was found to be effective for identifying various classes in high-dimensional and small-scale microarray data.

1. Introduction

DNA microarray technology makes it possible to monitor a huge number of genes simultaneously [1]. Earlier works indicated that DNA microarray technology could be useful for the classification of cancer [2]. Several techniques and methods with satisfactory outcomes have been introduced to classify microarray gene expression [3]. In a microarray dataset, the gene expression values are organized as a matrix in which samples are rows and genes (features) are columns. Each gene expression value is a real number that defines the expression level of a gene under certain criteria [4]. Because gene expression data contain a limited number of samples and an enormous number of features, conventional machine learning (ML) techniques do not work well for cancer classification [5].

A microarray experiment produces a large amount of gene expression data for each sample. The ratio of the number of genes (features) to the number of patients (samples) is highly skewed, which leads to the well-known curse-of-dimensionality problem [6]. This imposes two limitations on existing methods: (i) processing all the information may not be possible, and (ii) processing such data might lead to overfitting, local optima, and loss of information. These two problems affect the reliability and accuracy of machine learning techniques. Several studies have been conducted to identify an effective feature set [7], and both statistical and evolutionary approaches have been introduced for this purpose. Feature subset selection (FSS) methods such as joint mutual information (JMI), joint mutual information maximization (JMIM), and minimum redundancy maximum relevance (mRMR) are among the main statistical methods [8].

Literature reviews have shown that recent techniques such as the genetic algorithm (GA), mining techniques, transfer learning, deep neural networks (DNNs), and particle swarm optimization (PSO) generate precise results [9]. Microarray data classification is generally performed in two stages. Feature selection (FS) chooses the most important features from a large dataset to decrease computation overhead, overfitting, and noise. Classifier training then builds a model on the selected features to accurately categorize microarray samples. Techniques such as convolutional neural networks (CNNs), image processing, ant miner, transfer learning, and experimental methods were reviewed in a previous study [10]. Even though these techniques for FS and classifier training can produce high accuracy, they must be tuned to the underlying dataset in a controlled setup to achieve good outcomes.

In this study, we developed a novel red fox optimizer with deep-learning-enabled microarray gene expression classification (RFODL-MGEC) model. The RFODL-MGEC model uses a novel RFO-based feature selection (FS) approach to derive an optimal subset of features. Moreover, the proposed model employs a bidirectional cascaded deep neural network (BCDNN) for data classification, and the parameters of the BCDNN were optimally tuned using a chaos game optimization (CGO) algorithm.

2. Related Works

In [11], a novel bacterial colony optimization method with a multidimensional population, named BCO-MDP, was proposed for FS in classification problems. To address the combinatorial problem associated with FS, populations with several dimensionalities were used to represent feature subsets of different sizes. Zeebaree et al. [12] examined a deep learning (DL) method based on a CNN for the classification of microarray data. Compared with approaches such as multiple support vector machine recursive feature elimination with improved random forest (mSVM-RFE-iRF) and varSelRF, the CNN did not achieve higher efficiency on every dataset. In [13], a two-stage sparse logistic regression (LR) was presented to obtain an effective subset of genes with high classification ability by integrating a screening method as a filter and an adaptive lasso with a new weight as an embedded process. In the first stage, the independence screening method retained individual genes that showed maximal individual correlation with the cancer class label. In the second stage, the adaptive lasso with the new weight was applied to address high correlations among the genes screened in the first stage.

Shukla et al. [14] developed a novel hybrid framework named CMIMAGA, which integrates conditional mutual information maximization (CMIM) and an adaptive genetic algorithm (AGA), to determine important biomarkers in gene expression data. CMIM acts as a filter to remove irrelevant genes, and AGA acts as a wrapper to choose the most discriminative genes.

In [15], elephant search algorithm (ESA)-based optimization was presented for selecting optimal genes from a huge volume of microarray data. Firefly search (FFS) was used as a comparison to assess the ESA's efficiency in the FS procedure. A stochastic gradient descent (SGD)-based DNN with the Softmax activation function was then applied to the reduced set of features (genes) to classify samples according to their gene expression levels. Sayed et al. [16] proposed an ensemble FS approach based on a t-test and a GA. After preprocessing the data with a t-test, a nested GA (Nested-GA) was used to obtain the optimal subset of features using two distinct datasets. Nested-GA consists of two nested GAs (outer and inner) that run on two different types of datasets. Li et al. [17] developed a more efficient implementation of linear SVMs, improving the recursive feature elimination approach and combining the selected informative genes. In addition, they presented a simple resampling approach for preprocessing the dataset that balances the data distribution across sample types and improves classification performance.

3. The Proposed Model

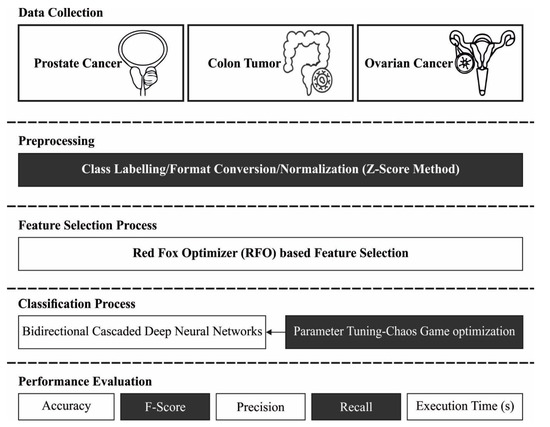

This study proposes a novel RFODL-MGEC model for microarray gene expression classification. The RFODL-MGEC model first employed an RFO-FS approach to derive the optimal subset of features. Next, the BCDNN model was used for data classification, and the parameters involved in the BCDNN technique were optimally tuned using a CGO algorithm. Figure 1 shows the overall block diagram of the proposed RFODL-MGEC technique.

Figure 1.

Block diagram of RFODL-MGEC technique.

3.1. Data Preprocessing

The z-score normalization approach was applied in the initial phase; it computes the arithmetic mean and standard deviation of the given gene data. This normalization approach performs effectively when prior knowledge of the average score and the score variation is available. The normalized scores were obtained as follows:

$$z = \frac{x - \mu}{\sigma}$$

where $x$ denotes a gene expression value, $\sigma$ implies the standard deviation, and $\mu$ indicates the arithmetic mean of the provided data. In this study, the normalization of the smoothed data was carried out via z-score normalization.
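The z-score step described in this subsection can be sketched in NumPy as follows. This is an illustration under assumptions the text does not state: statistics are computed per gene (per column of the samples × genes matrix), the population standard deviation is used, constant genes are left at zero, and the statistics are estimated on the training split and reused for the test split. The names `zscore_fit` and `zscore_apply` are hypothetical.

```python
import numpy as np


def zscore_fit(X_train):
    """Return per-gene (column-wise) mean and standard deviation of the training samples."""
    mu = X_train.mean(axis=0)
    sigma = X_train.std(axis=0)       # population standard deviation (ddof=0)
    sigma[sigma == 0] = 1.0           # constant genes: avoid division by zero
    return mu, sigma


def zscore_apply(X, mu, sigma):
    """z = (x - mu) / sigma, applied gene by gene."""
    return (X - mu) / sigma


# Toy matrix: 4 samples (rows) x 3 genes (columns); values are illustrative only.
X = np.array([[2.0, 10.0, 5.0],
              [4.0, 12.0, 5.0],
              [6.0, 14.0, 5.0],
              [8.0, 16.0, 5.0]])
mu, sigma = zscore_fit(X)
Z = zscore_apply(X, mu, sigma)
```

Fitting the statistics on the training samples only keeps test-set information out of preprocessing; whether the original pipeline did this is not stated.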

3.2. Design of RFO-Based Feature Selection Approach

During the feature selection process, the RFO-FS model was executed and the optimal set of features was chosen. RFO is a metaheuristic approach based on the hunting behavior of red foxes [18]. First, the red foxes search for food in their territories, which is modelled as an exploration phase for global search. Next, they move through the territory to get close to their prey before attacking, which is modelled as an exploitation phase for local search. The process starts with a constant number of random candidates, each of which is a point $\bar{x} = (x_0, x_1, \ldots, x_{n-1})$ with $n$ coordinates. To distinguish every fox in iteration $t$, we use the notation $(\bar{x}_j^{\,i})^t$, where $i$ indicates the fox number in the population and $j$ indexes the coordinate according to the dimension of the solution space. The criterion function $f$ of $n$ variables depends on the dimension of the search space, and $(\bar{x})^{(i)}$ denotes a point in the space $\langle a, b \rangle^n$, where $a, b \in \mathbb{R}$. Then, $(\bar{x})^{(i)}$ is the optimal solution when $f((\bar{x})^{(i)})$ is a global optimum on $\langle a, b \rangle$. The candidates are first sorted according to their fitness values, and for the best candidate $(\bar{x}^{best})^t$, the distance to each candidate is estimated as follows:

$$d\left((\bar{x}^{\,i})^t, (\bar{x}^{best})^t\right) = \sqrt{\left\| (\bar{x}^{\,i})^t - (\bar{x}^{best})^t \right\|}$$

and the candidate moves towards the best one as:

$$(\bar{x}^{\,i})^t = (\bar{x}^{\,i})^t + \alpha \, \operatorname{sign}\left((\bar{x}^{best})^t - (\bar{x}^{\,i})^t\right)$$

where $\alpha \in \left(0, d\left((\bar{x}^{\,i})^t, (\bar{x}^{best})^t\right)\right)$ is a random number.
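The global-search step described above (sort by fitness, measure the distance of each fox to the best one, then take a sign-based step of random length α towards it) can be sketched as below. This is a minimal illustration assuming a minimization problem, the distance measure d = sqrt(||x_i − x_best||) of the published RFO formulation, and no boundary handling; `rfo_global_step` and the sphere objective are hypothetical stand-ins, not the paper's code or fitness.

```python
import numpy as np


def rfo_global_step(pop, fitness, rng):
    """One RFO exploration step for a minimisation problem.

    pop: (n_foxes, dim) array of positions; fitness: callable returning a scalar.
    Returns the population sorted by fitness, with every fox except the best
    moved towards the best fox.
    """
    scores = np.array([fitness(x) for x in pop])
    pop = pop[np.argsort(scores)]          # sort candidates by fitness, best first
    best = pop[0]
    new_pop = pop.copy()
    for i in range(1, len(pop)):
        # distance measure d = sqrt(||x_i - x_best||)
        d = np.sqrt(np.linalg.norm(pop[i] - best))
        alpha = rng.uniform(0.0, d)        # random step length in (0, d)
        new_pop[i] = pop[i] + alpha * np.sign(best - pop[i])
    return new_pop


rng = np.random.default_rng(0)
pop = rng.uniform(-5.0, 5.0, size=(6, 3))
sphere = lambda x: float(np.sum(x ** 2))   # toy objective, not the paper's fitness
moved = rfo_global_step(pop, sphere, rng)
```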

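In the local search phase of RFO, the fox approaches its prey while moving and observing it so as to deceive it. To simulate the probability that a fox approaches the prey, a random number $\mu \in [0, 1]$ is drawn in each iteration for each candidate:

$$\begin{cases} \text{move closer}, & \text{if } \mu > 0.75 \\ \text{stay and disguise}, & \text{if } \mu \le 0.75 \end{cases}$$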

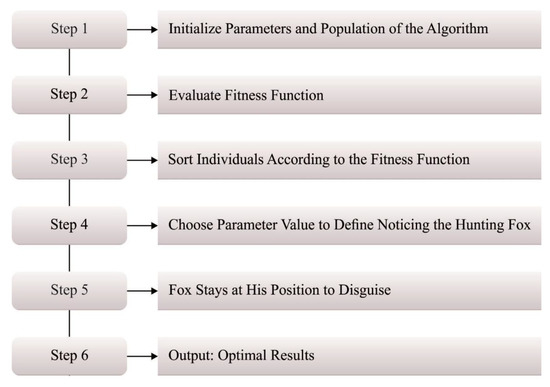

Figure 2 depicts the steps involved in RFO.

Figure 2.

Steps involved in RFO technique.

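When a fox approaches the prey, its observation radius $r$ is computed as:

$$r = \begin{cases} a \, \dfrac{\sin(\phi_0)}{\phi_0}, & \text{if } \phi_0 \neq 0 \\ \theta, & \text{if } \phi_0 = 0 \end{cases}$$

where $a$ is a random scaling number within 0 and 0.2, $\phi_0$ is a random number within 0 and $2\pi$ that defines the fox observation angle, and $\theta$ represents a random number within 0 and 1. The approach of the fox is modelled as follows, with each $\phi_k$ a random angle within 0 and $2\pi$:

$$\begin{aligned}
x_0^{new} &= a r \cos(\phi_1) + x_0^{actual} \\
x_1^{new} &= a r \sin(\phi_1) + a r \cos(\phi_2) + x_1^{actual} \\
x_2^{new} &= a r \sin(\phi_1) + a r \sin(\phi_2) + a r \cos(\phi_3) + x_2^{actual} \\
&\;\;\vdots \\
x_{n-2}^{new} &= a r \sum_{k=1}^{n-2} \sin(\phi_k) + a r \cos(\phi_{n-1}) + x_{n-2}^{actual} \\
x_{n-1}^{new} &= a r \sin(\phi_1) + a r \sin(\phi_2) + \cdots + a r \sin(\phi_{n-1}) + x_{n-1}^{actual}
\end{aligned}$$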
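The local-search (approach) step can be sketched as follows. It is a minimal illustration, assuming the standard RFO formulation: the observation radius r = a·sin(φ0)/φ0 (or a random θ when φ0 = 0), a cumulative-sine update in which coordinate j receives a·r·(sin φ1 + … + sin φj + cos φj+1) and the last coordinate receives only the sine sum, and an assumed move-closer threshold of 0.75 on μ. The function names are hypothetical.

```python
import numpy as np


def rfo_local_move(x, rng):
    """Move one fox x (length n >= 2) using the RFO observation-radius formulation."""
    n = len(x)
    a = rng.uniform(0.0, 0.2)                        # scaling factor a in (0, 0.2)
    phi0 = rng.uniform(0.0, 2.0 * np.pi)             # observation angle
    r = a * np.sin(phi0) / phi0 if phi0 != 0 else rng.uniform(0.0, 1.0)  # theta branch
    phi = rng.uniform(0.0, 2.0 * np.pi, size=n - 1)  # phi_1 .. phi_{n-1}
    # sin_cum[j] = sin(phi_1) + ... + sin(phi_j), with sin_cum[0] = 0
    sin_cum = np.concatenate(([0.0], np.cumsum(np.sin(phi))))
    step = np.empty(n)
    step[:-1] = sin_cum[:-1] + np.cos(phi)           # coordinates 0 .. n-2
    step[-1] = sin_cum[-1]                           # last coordinate: sines only
    return x + a * r * step


def rfo_local_search(pop, rng, threshold=0.75):
    """Each fox moves closer only when mu > threshold; otherwise it stays and disguises."""
    new_pop = pop.copy()
    for i in range(len(pop)):
        if rng.uniform(0.0, 1.0) > threshold:
            new_pop[i] = rfo_local_move(pop[i], rng)
    return new_pop


rng = np.random.default_rng(1)
pop = rng.uniform(-1.0, 1.0, size=(8, 4))
local = rfo_local_search(pop, rng)
```

Because |a·r| is at most 0.2 and each step entry has magnitude at most n, a fox moves by at most 0.2·n per coordinate, which keeps this phase local.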

In the reproduction phase, the worst 5% of candidates are removed and replaced with new candidates. In addition, the two best individuals, $(\bar{x}^{(1)})^t$ and $(\bar{x}^{(2)})^t$, form the alpha couple in iteration $t$, and the center of their habitat is expressed as:

$$(habitat^{(center)})^t = \frac{(\bar{x}^{(1)})^t + (\bar{x}^{(2)})^t}{2}$$

Moreover, the diameter of the habitat, based on the Euclidean distance, is obtained by Equation (8):

$$habitat^{(diameter)} = \sqrt{\left\| (\bar{x}^{(1)})^t - (\bar{x}^{(2)})^t \right\|}$$

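A random number $\kappa \in [0, 1]$ then determines how each replacement candidate is generated:

$$\begin{cases} \text{new nomadic candidate (random position outside the habitat)}, & \text{if } \kappa \ge 0.45 \\ \text{reproduction of the alpha couple}, & \text{if } \kappa < 0.45 \end{cases}$$

In the latter case, the new candidate is produced by the alpha couple as follows:

$$(\bar{x}^{(reproduced)})^t = \kappa \, \frac{(\bar{x}^{(1)})^t + (\bar{x}^{(2)})^t}{2}$$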
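The reproduction phase (drop the worst 5%, form the alpha couple, then create nomadic or reproduced foxes depending on κ) can be sketched as below. This sketch assumes a minimization problem, the 0.45 threshold on κ from the standard RFO description, and one possible reading of "outside the habitat": a random point in the bounds whose distance from the habitat center exceeds half the diameter, redrawn up to a fixed number of times. `rfo_reproduction` and its parameters are hypothetical.

```python
import numpy as np


def rfo_reproduction(pop, fitness, lower, upper, rng, frac=0.05, tries=100):
    """Replace the worst `frac` of foxes using the alpha couple (minimisation)."""
    scores = np.array([fitness(x) for x in pop])
    pop = pop[np.argsort(scores)].copy()            # best first
    x1, x2 = pop[0], pop[1]                         # alpha couple
    center = (x1 + x2) / 2.0                        # habitat center
    diameter = np.sqrt(np.linalg.norm(x1 - x2))     # habitat diameter
    n_replace = max(1, int(round(frac * len(pop))))
    for i in range(len(pop) - n_replace, len(pop)):
        kappa = rng.uniform(0.0, 1.0)
        if kappa >= 0.45:
            # nomadic fox: random point in the bounds, redrawn (up to `tries` times)
            # until it lies farther than diameter / 2 from the habitat center
            for _ in range(tries):
                cand = rng.uniform(lower, upper)
                if np.linalg.norm(cand - center) > diameter / 2.0:
                    break
            pop[i] = cand
        else:
            pop[i] = kappa * center                 # reproduction of the alpha couple
    return pop


rng = np.random.default_rng(2)
lower, upper = np.full(4, -5.0), np.full(4, 5.0)
pop = rng.uniform(lower, upper, size=(20, 4))
sphere = lambda x: float(np.sum(x ** 2))            # toy objective
new_pop = rfo_reproduction(pop, sphere, lower, upper, rng)
```

With 20 foxes, 5% means a single fox is replaced, so the 19 best positions survive unchanged.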

3.3. Process Involved in BCDNN-Based Classification

The BCDNN model was developed for microarray gene expression classification [19]. The DNN is divided into an encoder, a decoder, a translator, and a simulator. The target is the amplitude and phase response obtained with the finite-difference time-domain (FDTD) method, and the prediction is the output of the simulator. Once the module is trained, the simulator predicts the response from an input image of a meta-atom structure much faster than its numerical counterpart. For the backward (inverse) calculation, a low-dimensional spectrum is converted into a much higher-dimensional image, so the regression has far fewer input parameters than output parameters. This large discrepancy makes it difficult for a network to generalize and converge well, particularly when the input spectra vary strongly near the resonant frequency. Approaches that include a generative adversarial network or a bilinear tensor layer have been used to avoid this problem; in the BCDNN, every meta-atom is instead first characterized by a low-dimensional eigenvector obtained from a pretrained autoencoder. In the architecture of [19], the size of each tensor is marked below each block. Successive CNN layers are interconnected by convolution operations: the kernel multiplies the tensor values within the kernel region and sums them to produce a new value in the output tensor. Two FC layers are attached to the CNN to estimate a spectral tensor. Leaky ReLU activations were employed for all the convolution layers, with a separate setting for all the FC layers. The convolution layer maps the input tensor $T_{in}$ to the output tensor $T_{out}$:

$$T_{out} = \text{LeakyReLU}\left(\text{CONV}_i\left(T_{in}\right)\right)$$

where LeakyReLU denotes the leaky rectified linear unit activation, CONV denotes the convolution operator (including bias terms), and the subscript $i$ indexes the network. Strides of two are used in the second, fourth, and sixth convolutions in place of max-pooling layers. A dropout layer is applied after every FC layer except the output layer to prevent the network from overfitting. Mean absolute error (MAE) was adopted as the loss for calculating the weight gradients. MAE is defined as:

$$\text{MAE} = \frac{1}{N} \sum_{j=1}^{N} \left| y_j - \hat{y}_j \right|$$

where $y$ denotes the target response, $\hat{y}$ the prediction, and $N$ the number of entries of $y$. As a cost function, MAE is insensitive to outliers but is less conducive to convergence. To guarantee the stability of the module, the learning rate declines with the number of iterations.
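The individual operations named in this subsection (leaky ReLU, a stride-2 convolution used instead of max pooling, the MAE loss, and a learning rate that declines with the iteration count) can be illustrated with the NumPy sketch below. It does not implement the BCDNN itself. The leaky-ReLU slope is a placeholder because the paper's values are not given here, and the step-wise exponential decay schedule (factor 0.96 every 100 iterations) is a hypothetical choice, since the text only says the rate declines.

```python
import numpy as np


def leaky_relu(x, slope):
    """Leaky ReLU; `slope` is a placeholder for the negative-side slope."""
    return np.where(x > 0, x, slope * x)


def conv1d_strided(x, kernel, bias=0.0, stride=2):
    """'Valid' 1-D cross-correlation (what CNN layers compute) with the given stride."""
    k = len(kernel)
    return np.array([np.dot(x[s:s + k], kernel) + bias
                     for s in range(0, len(x) - k + 1, stride)])


def mae(y_true, y_pred):
    """Mean absolute error over all N entries."""
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))


def decayed_lr(lr0, iteration, decay=0.96, every=100):
    """Hypothetical step-wise exponential decay of the learning rate."""
    return lr0 * decay ** (iteration // every)


x = np.arange(8.0)
# A stride of 2 halves the length (8 -> 4), playing the role of a pooling layer.
features = leaky_relu(conv1d_strided(x, np.array([1.0, -1.0])), slope=0.1)
```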

3.4. Parameter Optimization Using CGO Algorithm

In order to optimally tune the parameters involved in the BCDNN method, the CGO approach was employed [20]. The CGO approach is based on the principles of chaos theory: fractals and the chaos game were used to formulate its mathematical model. CGO considers a number of solution candidates $X$, which represent eligible seeds inside a Sierpinski triangle. This can be expressed as follows:

$$X = \begin{bmatrix} X_1 \\ X_2 \\ \vdots \\ X_n \end{bmatrix} = \begin{bmatrix} x_1^1 & x_1^2 & \cdots & x_1^d \\ x_2^1 & x_2^2 & \cdots & x_2^d \\ \vdots & \vdots & \ddots & \vdots \\ x_n^1 & x_n^2 & \cdots & x_n^d \end{bmatrix}, \quad i = 1, \ldots, n; \; j = 1, \ldots, d$$

In this case, $n$ signifies the number of eligible seeds (candidate solutions) inside the Sierpinski triangle (search space), and $d$ defines the dimension of each seed. The initial positions of these eligible seeds are determined randomly in the search space as:

$$x_i^j(0) = x_{i,\min}^j + r \cdot \left(x_{i,\max}^j - x_{i,\min}^j\right)$$

where $r$ is a random number in the interval of zero and one. The first seed is generated as:

$$Seed_i^1 = X_i + \alpha_i \times \left(\beta_i \times GB - \gamma_i \times MG_i\right)$$

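where $GB$ is the global best seed, $MG_i$ is the mean of a randomly selected group of seeds, $\alpha_i$ is a randomly generated factor, and $\beta_i$ and $\gamma_i$ are random integers of zero or one that represent the possibility of rolling a die. The second seed is then defined as:

$$Seed_i^2 = GB + \alpha_i \times \left(\beta_i \times MG_i - \gamma_i \times X_i\right)$$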

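The third and fourth seeds are described as:

$$Seed_i^3 = MG_i + \alpha_i \times \left(\beta_i \times GB - \gamma_i \times X_i\right)$$

$$Seed_i^4 = X_i\left(x_i^k = x_i^k + R\right), \quad k \in \{1, 2, \ldots, d\}$$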

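in which $R$ signifies a random number in the interval of zero and one, added to a randomly selected dimension $k$ of $X_i$. In the CGO approach, different constructions are presented for $\alpha_i$, which control the movement limitations of the seeds:

$$\alpha_i = \begin{cases} Rand \\ 2 \times Rand \\ (\delta \times Rand) + 1 \\ (\varepsilon \times Rand) + (\sim \varepsilon) \end{cases}$$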

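In this case, $Rand$ implies a uniformly distributed random number in the interval of zero and one, $\delta$ and $\varepsilon$ are random integers in the interval of zero and one, and $\sim\varepsilon$ denotes the complement $1 - \varepsilon$. To select the best parameters for the BCDNN technique, the CGO method derives a fitness function that returns a positive value representing the quality of a candidate solution. In this process, the classification error rate is used as the fitness function, and the solution with the lowest error is regarded as the optimum. It can be defined as:

$$fitness(x_i) = ClassifierErrorRate(x_i) = \frac{\text{number of misclassified samples}}{\text{total number of samples}} \times 100$$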
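A compact CGO loop with an error-rate fitness can be sketched as below. It follows the published CGO seed rules (GB as the global best, MG_i as the mean of a randomly chosen group, random 0/1 "die rolls" β and γ, four α constructions, and a fourth seed that shifts one dimension by R), but the group-size rule, clipping to the bounds, and the elitist "keep the best n seeds" selection are simplifying assumptions. In the paper's setting, `f` would train the BCDNN with the hyperparameters in x and return `error_rate_fitness` on held-out data; a sphere function stands in for that here, and all names are hypothetical.

```python
import numpy as np


def error_rate_fitness(y_true, y_pred):
    """Percentage of misclassified samples (lower is better)."""
    wrong = sum(int(t != p) for t, p in zip(y_true, y_pred))
    return 100.0 * wrong / len(y_true)


def cgo_alpha(rng, d):
    """Pick one of the four CGO alpha constructions at random."""
    rand = rng.random(d)
    delta, eps = rng.integers(0, 2, size=2)          # random integers 0 or 1
    options = (rand, 2.0 * rand, delta * rand + 1.0, eps * rand + (1 - eps))
    return options[rng.integers(4)]


def cgo_minimize(f, lower, upper, n_seeds=10, iters=50, seed=0):
    """Minimal CGO loop: four new seeds per candidate, then keep the best n_seeds."""
    rng = np.random.default_rng(seed)
    d = len(lower)
    X = lower + rng.random((n_seeds, d)) * (upper - lower)   # random initial seeds
    scores = np.array([f(x) for x in X])
    for _ in range(iters):
        gb = X[np.argmin(scores)]                            # global best (GB)
        new = []
        for i in range(n_seeds):
            group = rng.choice(n_seeds, size=rng.integers(1, n_seeds + 1), replace=False)
            mg = X[group].mean(axis=0)                       # mean group (MG_i)
            for base, pos, neg in ((X[i], gb, mg), (gb, mg, X[i]), (mg, gb, X[i])):
                beta, gamma = rng.integers(0, 2, size=2)     # "die rolls": 0 or 1
                new.append(base + cgo_alpha(rng, d) * (beta * pos - gamma * neg))
            s4 = X[i].copy()
            s4[rng.integers(d)] += rng.random()              # seed 4: shift one dimension by R
            new.append(s4)
        new = np.clip(np.array(new), lower, upper)
        pool = np.vstack([X, new])
        pool_scores = np.concatenate([scores, [f(x) for x in new]])
        keep = np.argsort(pool_scores)[:n_seeds]            # elitist survivor selection
        X, scores = pool[keep], pool_scores[keep]
    best = int(np.argmin(scores))
    return X[best], scores[best]


lower, upper = np.full(3, -5.0), np.full(3, 5.0)
sphere = lambda x: float(np.sum(x ** 2))   # stand-in for "train BCDNN, return error rate"
x_start, f_start = cgo_minimize(sphere, lower, upper, iters=0)
x_best, f_best = cgo_minimize(sphere, lower, upper, iters=30)
```

Because survivors are chosen from the old and new seeds together, the best fitness never gets worse from one iteration to the next.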

4. Experimental Validation

The performance of the RFODL-MGEC model was validated using three benchmark datasets [21], namely, the prostate cancer, colon tumor, and ovarian cancer datasets. The details of the datasets are provided in Table 1. The proposed model selected 6145, 984, and 8424 features for the prostate cancer, colon tumor, and ovarian cancer datasets, respectively.
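The text reports how many genes were selected but not how a continuous fox position is turned into a gene subset or how subsets are scored. One common wrapper convention, assumed here and not taken from the paper, thresholds each coordinate to decide whether the corresponding gene is kept and scores a subset by a weighted sum of the classification error and the fraction of genes kept. `position_to_mask`, `fs_fitness`, the 0.5 threshold, and the 0.99 weight are all hypothetical.

```python
import numpy as np


def position_to_mask(position, threshold=0.5):
    """Hypothetical binarisation: keep gene j when coordinate j exceeds the threshold."""
    return np.asarray(position) > threshold


def fs_fitness(mask, error_rate, w=0.99):
    """Hypothetical wrapper fitness (lower is better): weighted error plus subset size."""
    n_kept = int(mask.sum())
    if n_kept == 0:
        return float("inf")                # an empty gene subset cannot be classified
    return w * error_rate + (1.0 - w) * n_kept / mask.size


position = np.array([0.1, 0.7, 0.9, 0.4])  # one fox over a toy 4-gene dataset
mask = position_to_mask(position)          # genes 1 and 2 are kept
score = fs_fitness(mask, error_rate=0.1)
```

Under this convention, the number of selected genes reported for each dataset would simply be `mask.sum()` for the best fox.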

Table 1.

Dataset details.

4.1. Resulting Analysis of RFODL-MGEC Technique on Prostate Cancer Dataset

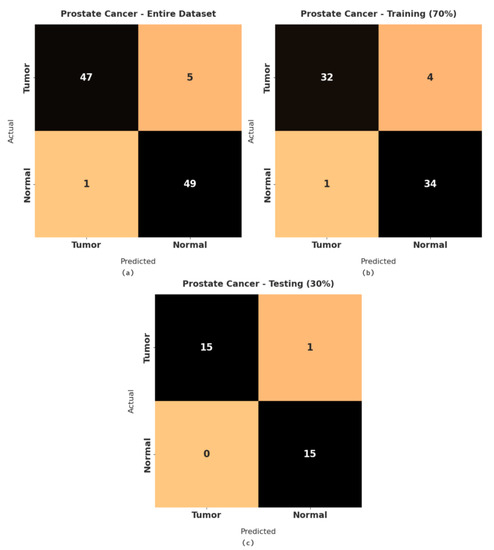

Figure 3 illustrates the confusion matrices generated by the RFODL-MGEC model on the prostate cancer dataset. On the entire dataset, the RFODL-MGEC model correctly classified 47 samples as tumor and 49 samples as normal. Similarly, on the 70% training split, it correctly classified 32 samples as tumor and 34 samples as normal. In addition, on the 30% testing split, it correctly classified 15 samples as tumor and 15 samples as normal.
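The 70%/30% split sizes can be checked with the short sketch below. It assumes the sample counts implied by the reported whole-dataset accuracies (96/102 = 94.12%, 59/62 = 95.16%, and 246/253 = 97.23%) and rounds the test size up, which is the convention scikit-learn's `train_test_split` uses for a float `test_size`. The text does not say whether the split was stratified, so plain shuffling is used; `split_70_30` is a hypothetical helper.

```python
import math
import random


def split_70_30(n_samples, test_frac=0.3, seed=0):
    """Shuffle sample indices and split them into (train, test); test size rounded up."""
    n_test = math.ceil(test_frac * n_samples)
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return idx[n_test:], idx[:n_test]


for name, n in (("prostate", 102), ("colon", 62), ("ovarian", 253)):
    train, test = split_70_30(n)
    print(name, len(train), len(test))
# prostate 71 31
# colon 43 19
# ovarian 177 76
```

These sizes are consistent with the reported accuracies: for the prostate cancer dataset, 66/71 = 92.96% on the training split and 30/31 = 96.77% on the testing split.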

Figure 3.

Confusion matrices of RFODL-MGEC technique for prostate cancer dataset. (a) Entire dataset, (b) 70% of training dataset, and (c) 30% of testing dataset.

Table 2 reports the classification performance of the RFODL-MGEC model on the prostate cancer dataset. The experimental results indicated that the RFODL-MGEC model performed effectively. On the entire dataset, the RFODL-MGEC model obtained an average accuracy, precision, recall, and F-score of 94.12%, 94.33%, 94.19%, and 94.12%, respectively. On the 70% training split, it obtained an average accuracy, precision, recall, and F-score of 92.96%, 93.22%, 93.02%, and 92.95%, respectively. On the 30% testing split, it obtained an average accuracy, precision, recall, and F-score of 96.77%, 96.88%, 96.88%, and 96.77%, respectively.
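The averaged measures can be computed from a confusion matrix as accuracy plus macro-averaged (per-class mean) precision, recall, and F-score, as in the sketch below. The text reports only the correctly classified counts, so the test-split matrices here are reconstructions and should be treated as assumptions: each places its single misclassification so that the reported averages are reproduced, and none is read from the figures. `macro_metrics` is a hypothetical helper.

```python
def macro_metrics(cm):
    """Accuracy and macro-averaged precision, recall, F-score (all in %).

    cm[t][p] is the number of samples of true class t predicted as class p.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[c][c] for c in range(k))
    precisions, recalls, f_scores = [], [], []
    for c in range(k):
        tp = cm[c][c]
        predicted = sum(cm[t][c] for t in range(k))   # column sum
        actual = sum(cm[c])                            # row sum
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precisions.append(p)
        recalls.append(r)
        f_scores.append(2 * p * r / (p + r) if p + r else 0.0)
    to_pct = lambda values: 100.0 * sum(values) / k
    return 100.0 * correct / total, to_pct(precisions), to_pct(recalls), to_pct(f_scores)


# Reconstructed (assumed) test-split matrices, one error each; not read from the figures.
prostate_test = [[15, 1], [0, 15]]   # rows/cols: tumor, normal
colon_test = [[11, 0], [1, 7]]       # rows/cols: negative, positive
ovarian_test = [[57, 0], [1, 18]]    # rows/cols: ovarian cancer, normal
```

For example, the colon matrix gives (11/12 + 7/7)/2 = 95.83% precision and (11/11 + 7/8)/2 = 93.75% recall, matching the testing-split values quoted in Section 4.2.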

Table 2.

Resulting analysis of RFODL-MGEC technique with various measures on prostate cancer dataset.

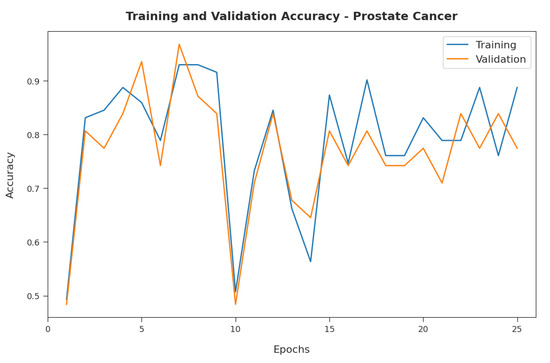

Figure 4 illustrates the training and validation accuracy of the RFODL-MGEC model on the prostate cancer dataset. The figure shows that the RFODL-MGEC model achieved high training and validation accuracy during the classification process.

Figure 4.

Accuracy analysis of RFODL-MGEC technique on prostate cancer dataset.

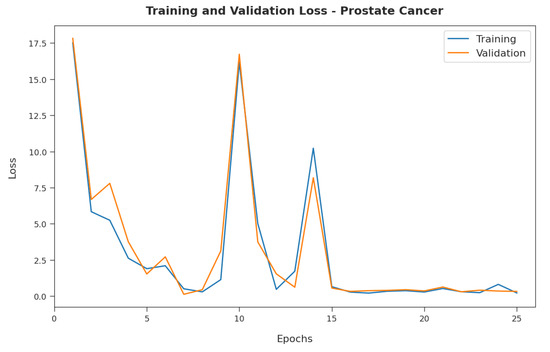

Figure 5 shows the training and validation loss of the RFODL-MGEC model on the prostate cancer dataset. The figure shows that the RFODL-MGEC model achieved reduced training and validation loss during the classification process.

Figure 5.

Loss analysis of RFODL-MGEC technique on prostate cancer dataset.

4.2. Resulting Analysis of RFODL-MGEC Technique on Colon Tumor Dataset

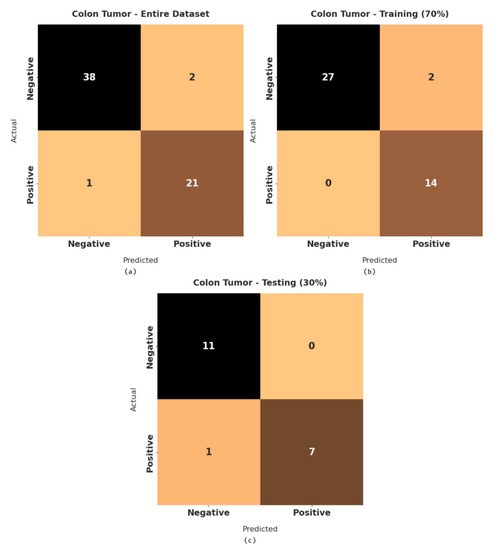

Figure 6 shows the confusion matrices generated by the RFODL-MGEC model on the colon tumor dataset. On the entire dataset, the RFODL-MGEC technique correctly classified 38 samples as negative and 21 samples as positive. Likewise, on the 70% training split, it correctly classified 27 samples as negative and 14 samples as positive. Furthermore, on the 30% testing split, it correctly classified 11 samples as negative and 7 samples as positive.

Figure 6.

Confusion matrices of RFODL-MGEC technique on colon tumor dataset. (a) Entire dataset, (b) 70% of training dataset, and (c) 30% of testing dataset.

Table 3 reports the classification performance of the RFODL-MGEC model on the colon tumor dataset. The experimental results indicated that the RFODL-MGEC model performed effectively. On the entire dataset, the RFODL-MGEC model obtained an average accuracy, precision, recall, and F-score of 95.16%, 94.37%, 95.23%, and 94.77%, respectively. On the 70% training split, it attained an average accuracy, precision, recall, and F-score of 95.35%, 93.75%, 96.55%, and 94.88%, respectively. Additionally, on the 30% testing split, it obtained an average accuracy, precision, recall, and F-score of 94.74%, 95.83%, 93.75%, and 94.49%, respectively.

Table 3.

Resulting analysis of RFODL-MGEC technique with various measures on colon tumor dataset.

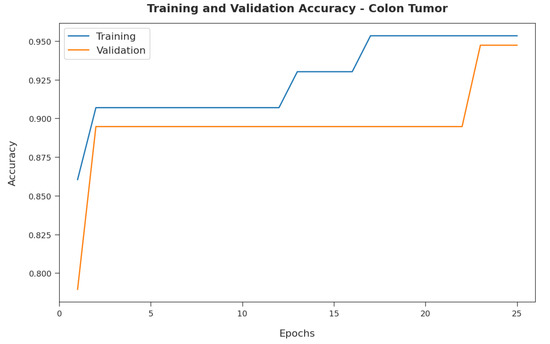

Figure 7 shows the training and validation accuracy of the RFODL-MGEC model on the colon tumor dataset. The figure shows that the RFODL-MGEC technique achieved high training and validation accuracy during the classification process.

Figure 7.

Accuracy analysis of RFODL-MGEC technique on colon tumor dataset.

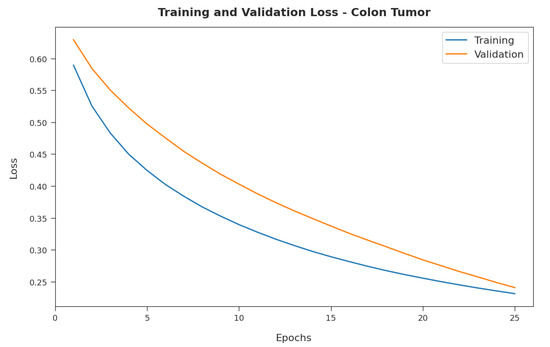

Figure 8 illustrates the training and validation loss of the RFODL-MGEC model on the colon tumor dataset. The figure shows that the RFODL-MGEC approach achieved low training and validation loss during the classification process.

Figure 8.

Loss analysis of RFODL-MGEC technique on colon tumor dataset.

4.3. Resulting Analysis of RFODL-MGEC Technique on Ovarian Cancer Dataset

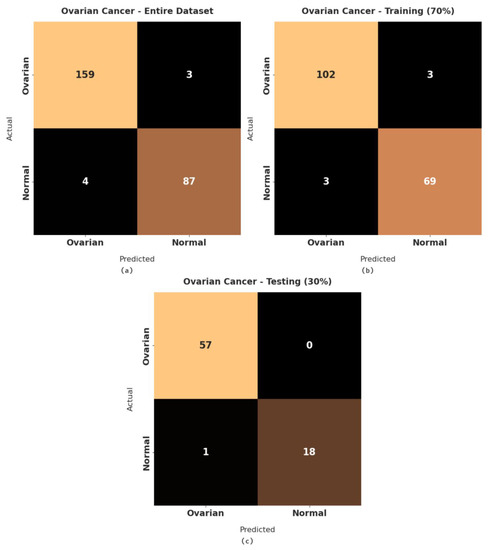

Figure 9 illustrates the confusion matrices generated by the RFODL-MGEC algorithm on the ovarian cancer dataset. On the entire dataset, the RFODL-MGEC technique correctly classified 159 samples as ovarian cancer and 87 samples as normal. On the 70% training split, it correctly classified 102 samples as ovarian cancer and 69 samples as normal. On the 30% testing split, it correctly classified 57 samples as ovarian cancer and 18 samples as normal.

Figure 9.

Confusion matrices of RFODL-MGEC technique on ovarian cancer dataset. (a) Entire dataset, (b) 70% of training dataset, and (c) 30% of testing dataset.

Table 4 reports the classification performance of the RFODL-MGEC technique on the ovarian cancer dataset. The experimental results indicated that the RFODL-MGEC technique performed effectively. On the entire dataset, the RFODL-MGEC system obtained an average accuracy, precision, recall, and F-score of 97.23%, 97.11%, 96.88%, and 96.99%, respectively. On the 70% training split, it obtained an average accuracy, precision, recall, and F-score of 96.61%, 96.49%, 96.49%, and 96.49%, respectively. Finally, on the 30% testing split, it obtained an average accuracy, precision, recall, and F-score of 98.68%, 99.14%, 97.37%, and 98.21%, respectively.

Table 4.

Resulting analysis of RFODL-MGEC technique with various measures on ovarian cancer dataset.

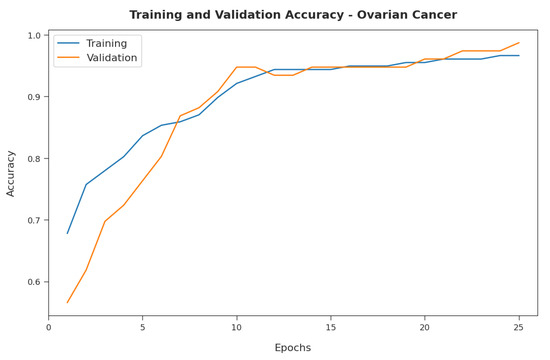

Figure 10 illustrates the training and validation accuracy of the RFODL-MGEC algorithm on the ovarian cancer dataset. The figure shows that the RFODL-MGEC technique achieved high training and validation accuracy during the classification process.

Figure 10.

Accuracy analysis of RFODL-MGEC technique on ovarian cancer dataset.

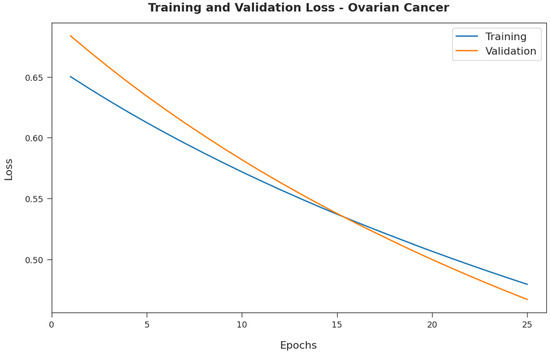

Figure 11 shows the training and validation loss of the RFODL-MGEC technique on the ovarian cancer dataset. The figure shows that the RFODL-MGEC system achieved reduced training and validation loss during the classification process.

Figure 11.

Loss analysis of RFODL-MGEC technique on ovarian cancer dataset.

4.4. Discussion

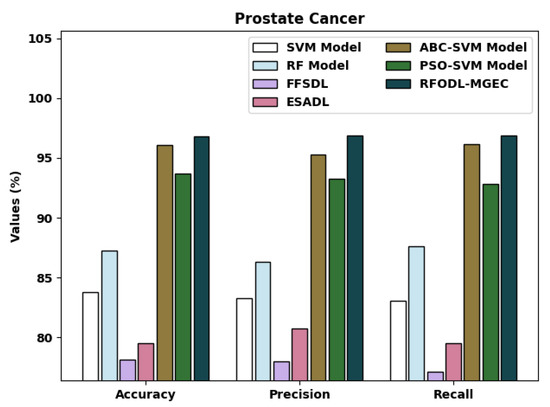

A detailed comparison of the RFODL-MGEC model with recent approaches [15] on the prostate cancer dataset is provided in Table 5 and Figure 12. The experimental outcomes indicated that the FFSDL and ESADL models yielded lower classification performance than the other approaches, while the SVM and RF models achieved slightly better results than the FFSDL and ESADL models. The ABC-SVM and PSO-SVM models came closer, with accuracies of 96.06% and 93.71%, respectively.

Table 5.

Comparative analysis of RFODL-MGEC technique with recent algorithms for prostate cancer dataset.

Figure 12.

Comparative analysis of RFODL-MGEC technique on prostate cancer dataset.

The proposed RFODL-MGEC model achieved the highest classification performance, with an accuracy, precision, and recall of 96.77%, 96.88%, and 96.88%, respectively.

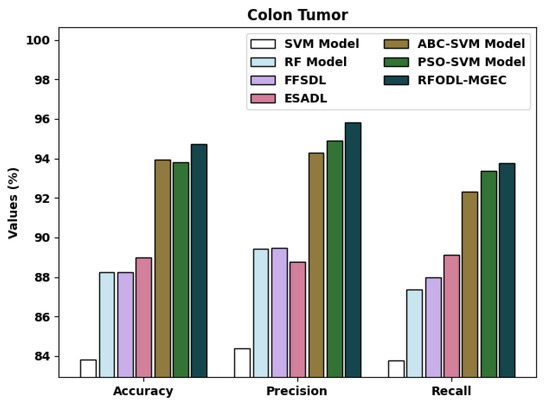

A brief comparison of the RFODL-MGEC approach with recent approaches on the colon tumor dataset is given in Table 6 and Figure 13. The experimental outcomes indicated that the FFSDL and ESADL approaches yielded lower classification performance than the other approaches. Likewise, the SVM and RF approaches achieved somewhat better classification results than the FFSDL and ESADL approaches.

Table 6.

Comparative analysis of RFODL-MGEC technique with recent algorithms for colon tumor dataset.

Figure 13.

Comparative analysis of RFODL-MGEC technique on colon tumor dataset.

In addition, the ABC-SVM and PSO-SVM models came closer, with accuracies of 93.94% and 93.80%, respectively. Finally, the RFODL-MGEC model achieved higher classification performance, with an accuracy, precision, and recall of 94.74%, 95.83%, and 93.75%, respectively.

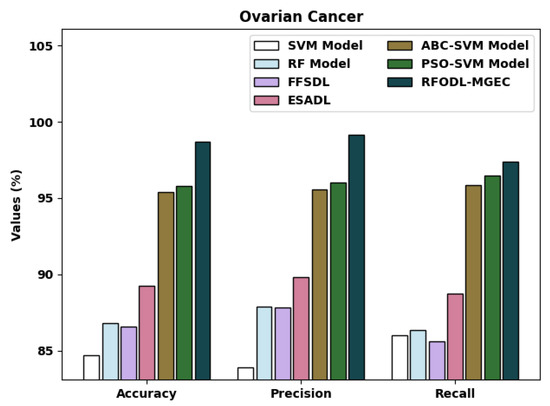

A detailed comparison of the RFODL-MGEC algorithm with recent approaches on the ovarian cancer dataset is given in Table 7 and Figure 14. The experimental outcomes indicated that the FFSDL and ESADL methods yielded lower classification performance than the other approaches.

Table 7.

Comparative analysis of RFODL-MGEC technique with recent algorithms for ovarian cancer dataset.

Figure 14.

Comparative analysis of RFODL-MGEC technique on ovarian cancer dataset.

The SVM and RF models achieved somewhat better classification results than the FFSDL and ESADL models. They were followed by the ABC-SVM and PSO-SVM techniques, which came closer, with accuracies of 95.42% and 95.81%, respectively. The RFODL-MGEC methodology achieved the highest classification performance, with an accuracy, precision, and recall of 98.68%, 99.14%, and 97.37%, respectively.

Finally, the computation time (CT) of the RFODL-MGEC technique and recent models on the three datasets is compared in Table 8. The RFODL-MGEC technique required less CT than the other methods, namely 1.231, 0.432, and 1.542 s on the test prostate cancer, colon tumor, and ovarian cancer datasets, respectively.

Table 8.

Comparative CT analysis of RFODL-MGEC technique with recent algorithms.

These tables and figures show that the RFODL-MGEC model achieved better classification performance than the other methods.

5. Conclusions

In this study, a novel RFODL-MGEC model was established for microarray gene expression classification. The presented RFODL-MGEC model primarily employed an RFO-FS technique for deriving an optimal subset of features. Next, the BCDNN model was utilized for data classification, and the parameters involved in the BCDNN technique were optimally tuned by utilizing a CGO algorithm. Comprehensive experiments on benchmark datasets showed that the RFODL-MGEC model accomplished superior results for subtype classifications. Therefore, the RFODL-MGEC model was found to be effective for the identification of different classes for high-dimensional and small-scale microarray data. Future directions involve the use of data clustering and feature reduction approaches to enhance classification performance. The proposed model should be tested on large-scale datasets.

Author Contributions

Conceptualization, T.V. and H.A.; methodology, T.V. and L.; software and investigation, T.V. and H.A.; validation, L., E.A. and S.S.; data curation, H.A.; writing—T.V., L. and S.S.; review and editing, H.A., E.A. and A.H.; funding acquisition, H.A. and A.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work is funded by Prince Sattam bin Abdulaziz University, KSA under grant number: 2020/01/1174.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article as no datasets were generated during this study.

Acknowledgments

The authors would like to thank Prince Sattam Bin Abdulaziz University for providing technical support during this research work. This project was supported by the Deanship of Scientific Research at Prince Sattam Bin Abdulaziz University (project no. 2020/01/1174).

Conflicts of Interest

The authors declare that they have no conflict of interest.

References

- Ahmed, O.; Brifcani, A. Gene expression classification based on deep learning. In Proceedings of the 2019 4th Scientific International Conference Najaf (SPICN), Al-Najef, Iraq, 29–30 April 2019; pp. 145–149.

- Almugren, N.; Alshamlan, H. A survey on hybrid feature selection methods in microarray gene expression data for cancer classification. IEEE Access 2019, 7, 78533–78548.

- Maniruzzaman, M.; Rahman, M.J.; Ahammed, B.; Abedin, M.M.; Suri, H.S.; Biswas, M.; El-Baz, A.; Bangeas, P.; Tsoulfas, G.; Suri, J.S. Statistical characterization and classification of colon microarray gene expression data using multiple machine learning paradigms. Comput. Methods Programs Biomed. 2019, 176, 173–193.

- Tabares-Soto, R.; Orozco-Arias, S.; Romero-Cano, V.; Bucheli, V.S.; Rodríguez-Sotelo, J.L.; Jiménez-Varón, C.F. A comparative study of machine learning and deep learning algorithms to classify cancer types based on microarray gene expression data. PeerJ Comput. Sci. 2020, 6, e270.

- Adiwijaya, W.U.; Lisnawati, E.; Aditsania, A.; Kusumo, D.S. Dimensionality reduction using principal component analysis for cancer detection based on microarray data classification. J. Comput. Sci. 2018, 14, 1521–1530.

- Alanni, R.; Hou, J.; Azzawi, H.; Xiang, Y. A novel gene selection algorithm for cancer classification using microarray datasets. BMC Med. Genom. 2019, 12, 10.

- Daoud, M.; Mayo, M. A survey of neural network-based cancer prediction models from microarray data. Artif. Intell. Med. 2019, 97, 204–214.

- Aydadenta, H.; Adiwijaya, A. A clustering approach for feature selection in microarray data classification using random forest. J. Inf. Process. Syst. 2018, 14, 1167–1175.

- Cilia, N.D.; De Stefano, C.; Fontanella, F.; Raimondo, S.; Scotto di Freca, A. An experimental comparison of feature-selection and classification methods for microarray datasets. Information 2019, 10, 109.

- Alhenawi, E.A.; Al-Sayyed, R.; Hudaib, A.; Mirjalili, S. Feature selection methods on gene expression microarray data for cancer classification: A systematic review. Comput. Biol. Med. 2022, 140, 105051.

- Wang, H.; Tan, L.; Niu, B. Feature selection for classification of microarray gene expression cancers using Bacterial Colony Optimization with multi-dimensional population. Swarm Evol. Comput. 2019, 48, 172–181.

- Zeebaree, D.Q.; Haron, H.; Abdulazeez, A.M. Gene selection and classification of microarray data using convolutional neural network. In Proceedings of the 2018 International Conference on Advanced Science and Engineering (ICOASE), Duhok, Iraq, 9–11 October 2018; pp. 145–150.

- Algamal, Z.Y.; Lee, M.H. A two-stage sparse logistic regression for optimal gene selection in high-dimensional microarray data classification. Adv. Data Anal. Classif. 2019, 13, 753–771.

- Shukla, A.K.; Singh, P.; Vardhan, M. A two-stage gene selection method for biomarker discovery from microarray data for cancer classification. Chemom. Intell. Lab. Syst. 2018, 183, 47–58.

- Panda, M. Elephant search optimization combined with deep neural network for microarray data analysis. J. King Saud Univ. Comput. Inf. Sci. 2020, 32, 940–948.

- Sayed, S.; Nassef, M.; Badr, A.; Farag, I. A nested genetic algorithm for feature selection in high-dimensional cancer microarray datasets. Expert Syst. Appl. 2019, 121, 233–243.

- Li, Z.; Xie, W.; Liu, T. Efficient feature selection and classification for microarray data. PLoS ONE 2018, 13, e0202167.

- Khorami, E.; Mahdi Babaei, F.; Azadeh, A. Optimal diagnosis of COVID-19 based on convolutional neural network and red Fox optimization algorithm. Comput. Intell. Neurosci. 2021, 2021, 4454507.

- Kong, W.; Chen, J.; Huang, Z.; Kuang, D. Bidirectional cascaded deep neural networks with a pretrained autoencoder for dielectric metasurfaces. Photonics Res. 2021, 9, 1607–1615.

- Talatahari, S.; Azizi, M. Chaos Game Optimization: A novel metaheuristic algorithm. Artif. Intell. Rev. 2021, 54, 917–1004.

- Zhu, Z.; Ong, Y.S.; Dash, M. Markov Blanket-Embedded Genetic Algorithm for Gene Selection. Pattern Recognit. 2007, 40, 3236–3248. Available online: http://csse.szu.edu.cn/staff/zhuzx/Datasets.html (accessed on 21 January 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).