Exploiting Diverse Information in Pre-Trained Language Model for Multi-Choice Machine Reading Comprehension

Abstract

1. Introduction

- We propose a simple but effective multi-decision transformer model that adaptively selects information from different layers to answer different reading comprehension questions. To the best of our knowledge, this is the first work to explicitly exploit the information at different layers of pre-trained language models for machine reading comprehension (MRC).

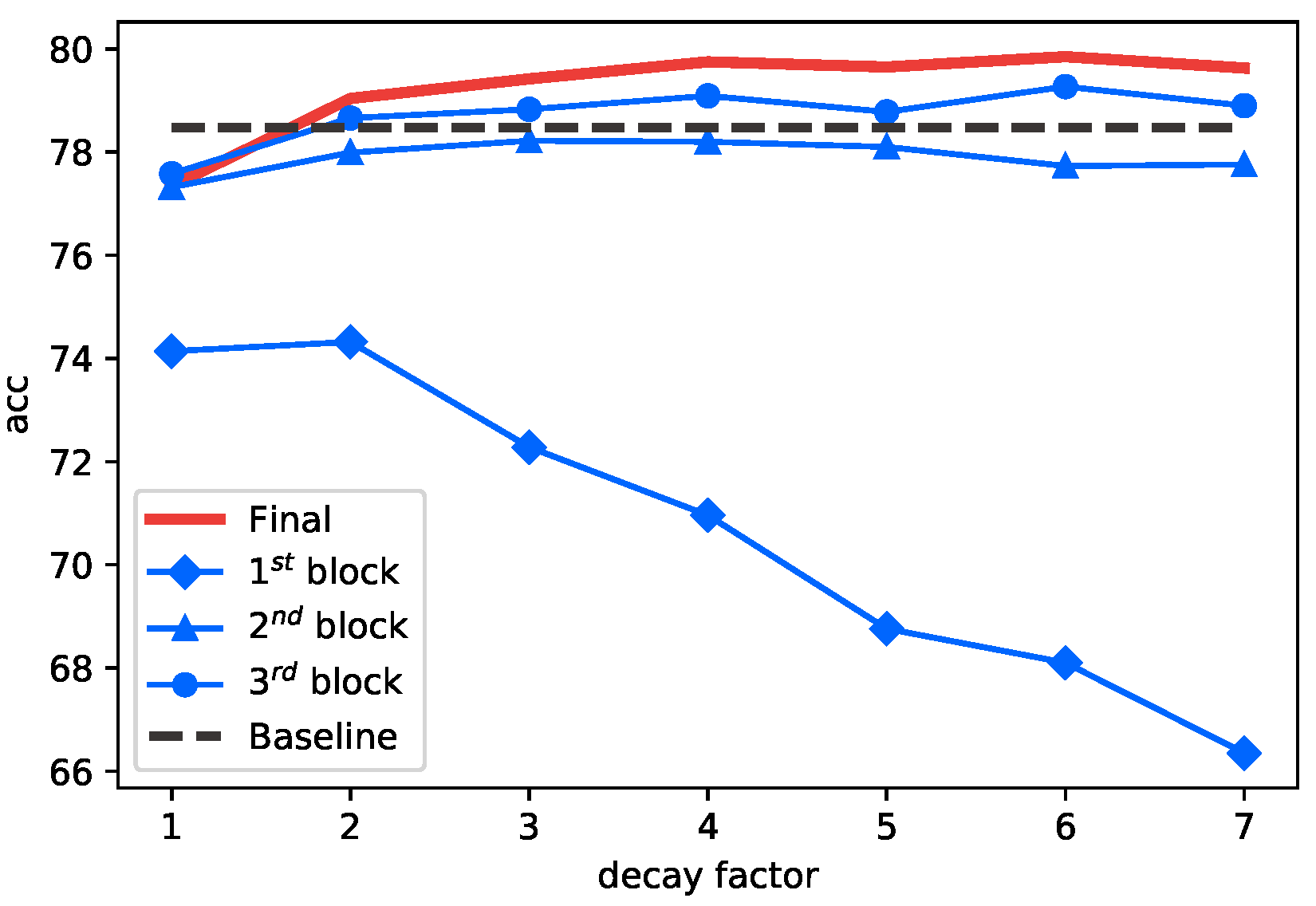

- We propose a learning rate decay method that preserves the diversity of information across the layers of pre-trained models, preventing it from being damaged by the multiple similar supervisory signals applied during fine-tuning.

- We conduct a detailed analysis to show which types of reading comprehension tasks each block can address. Moreover, experimental results on five public datasets demonstrate that our model increases inference speed without sacrificing accuracy.
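
The first two contributions can be illustrated with a minimal sketch. It is not the authors' implementation: the exit rule (normalized entropy at or below a `threshold`), the geometric per-block `decay` factor, and the function names `early_exit_predict` and `blockwise_learning_rates` are all assumptions made for illustration, since the text above does not specify them. The sketch only shows the general shape of the idea: each block's decision module produces answer scores, inference stops at the first confident block, and lower blocks are fine-tuned with smaller learning rates.

```python
import math
from typing import Iterable, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over answer-option scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def normalized_entropy(probs: Sequence[float]) -> float:
    """Entropy scaled to [0, 1]: 0 is fully confident, 1 is uniform."""
    if len(probs) < 2:
        return 0.0
    entropy = -sum(p * math.log(p) for p in probs if p > 0.0)
    return entropy / math.log(len(probs))


def early_exit_predict(
    block_logits: Iterable[Sequence[float]], threshold: float
) -> Tuple[int, int]:
    """Return (chosen option, index of the block that answered).

    `block_logits` yields the scores of each block's decision module in
    order. Assumed exit rule: stop at the first block whose normalized
    entropy is <= `threshold`; otherwise fall back to the last block.
    """
    answer = None
    for block_idx, logits in enumerate(block_logits):
        probs = softmax(logits)
        answer = (probs.index(max(probs)), block_idx)
        if normalized_entropy(probs) <= threshold:
            break  # confident enough: later blocks are never evaluated
    if answer is None:
        raise ValueError("block_logits must yield at least one block")
    return answer


def blockwise_learning_rates(
    base_lr: float, num_blocks: int, decay: float
) -> List[float]:
    """Assumed schedule: the top block uses `base_lr`; every block below
    it is scaled by `decay` once more, so lower blocks change less."""
    return [base_lr * decay ** (num_blocks - 1 - b) for b in range(num_blocks)]


if __name__ == "__main__":
    # Hypothetical scores for a four-option question from three blocks.
    blocks = [
        [0.1, 0.0, 0.2, 0.1],  # block 0: nearly uniform, not confident
        [4.0, 0.0, 0.0, 0.0],  # block 1: confident in option 0
        [0.0, 9.0, 0.0, 0.0],  # block 2: only reached if block 1 is not trusted
    ]
    print(early_exit_predict(blocks, threshold=0.5))
    print(blockwise_learning_rates(2e-5, num_blocks=3, decay=0.5))
```

Passing a generator as `block_logits` makes the saving concrete: once a block exits, the generator is never advanced, so the deeper blocks are not computed. In a real fine-tuning setup, the per-block rates would typically be passed to the optimizer as separate parameter groups.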

2. Related Work

3. Models

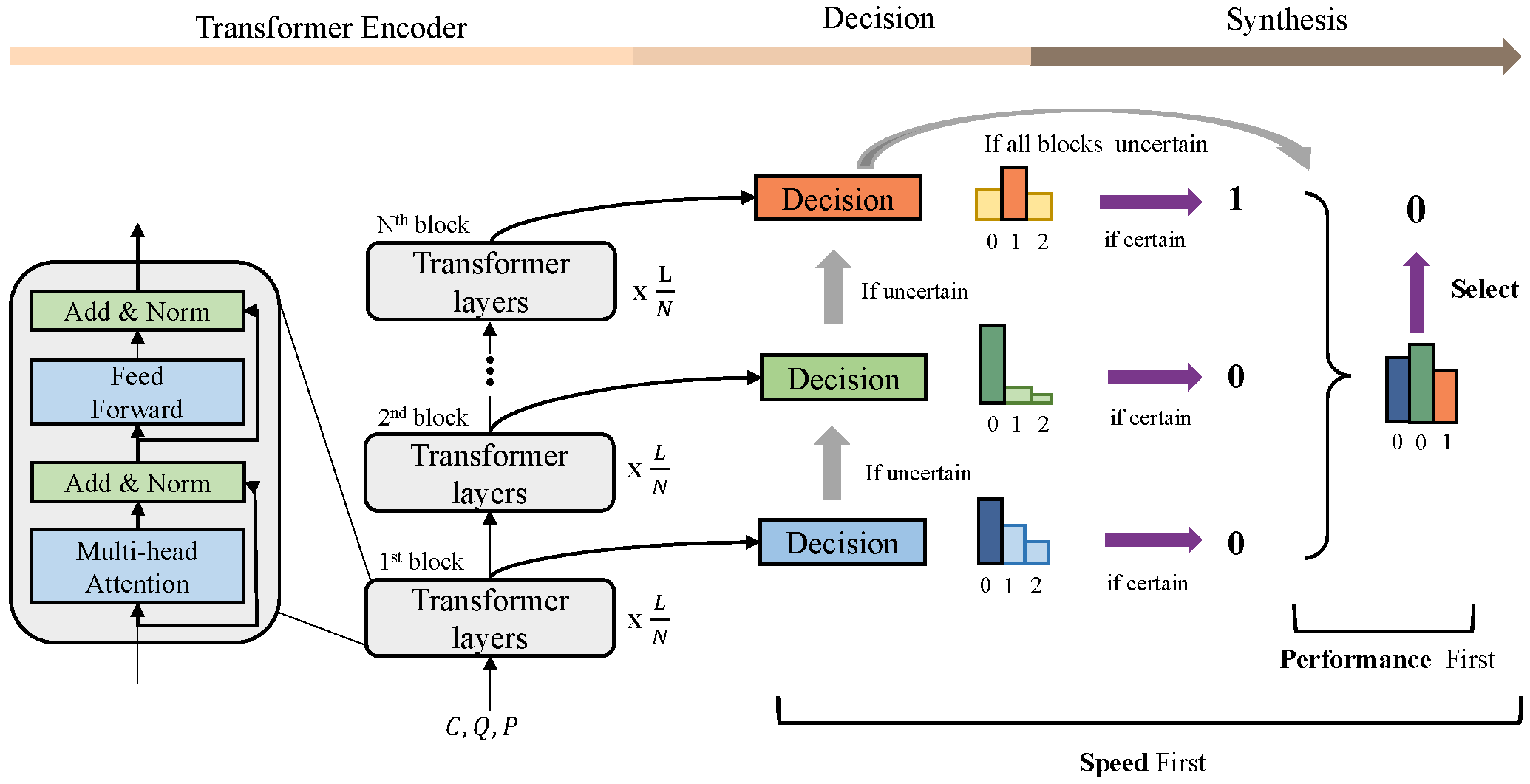

3.1. Model Architecture

3.1.1. Embedding

3.1.2. Backbone

3.1.3. Decision Module

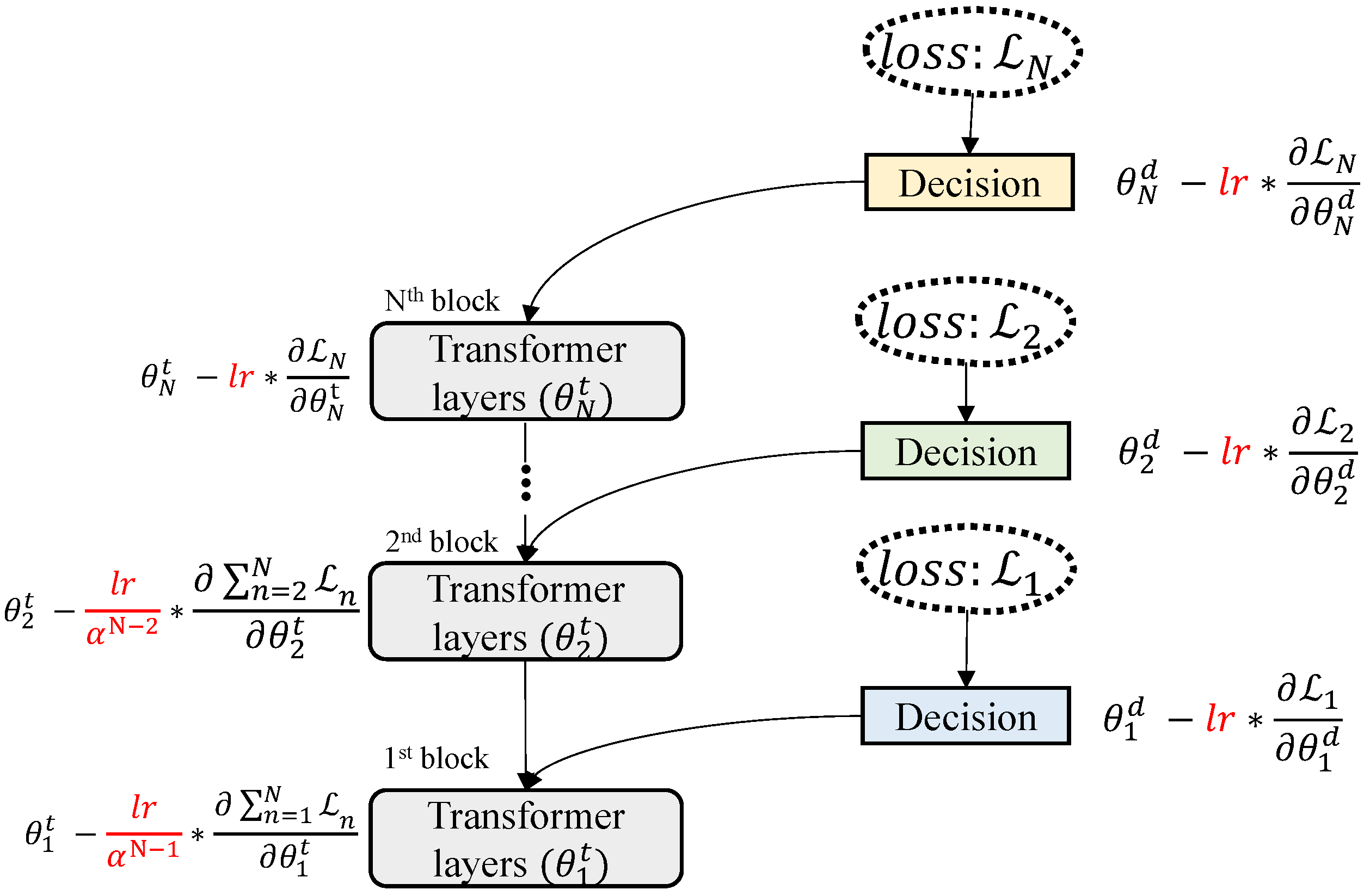

3.2. Training with Learning Rate Decaying

3.2.1. Loss Function

3.2.2. Learning Rate Decaying

3.3. For Specific Task

4. Experiments

4.1. Implementation Details

4.2. Datasets

4.3. Evaluation Metrics

4.4. Main Results

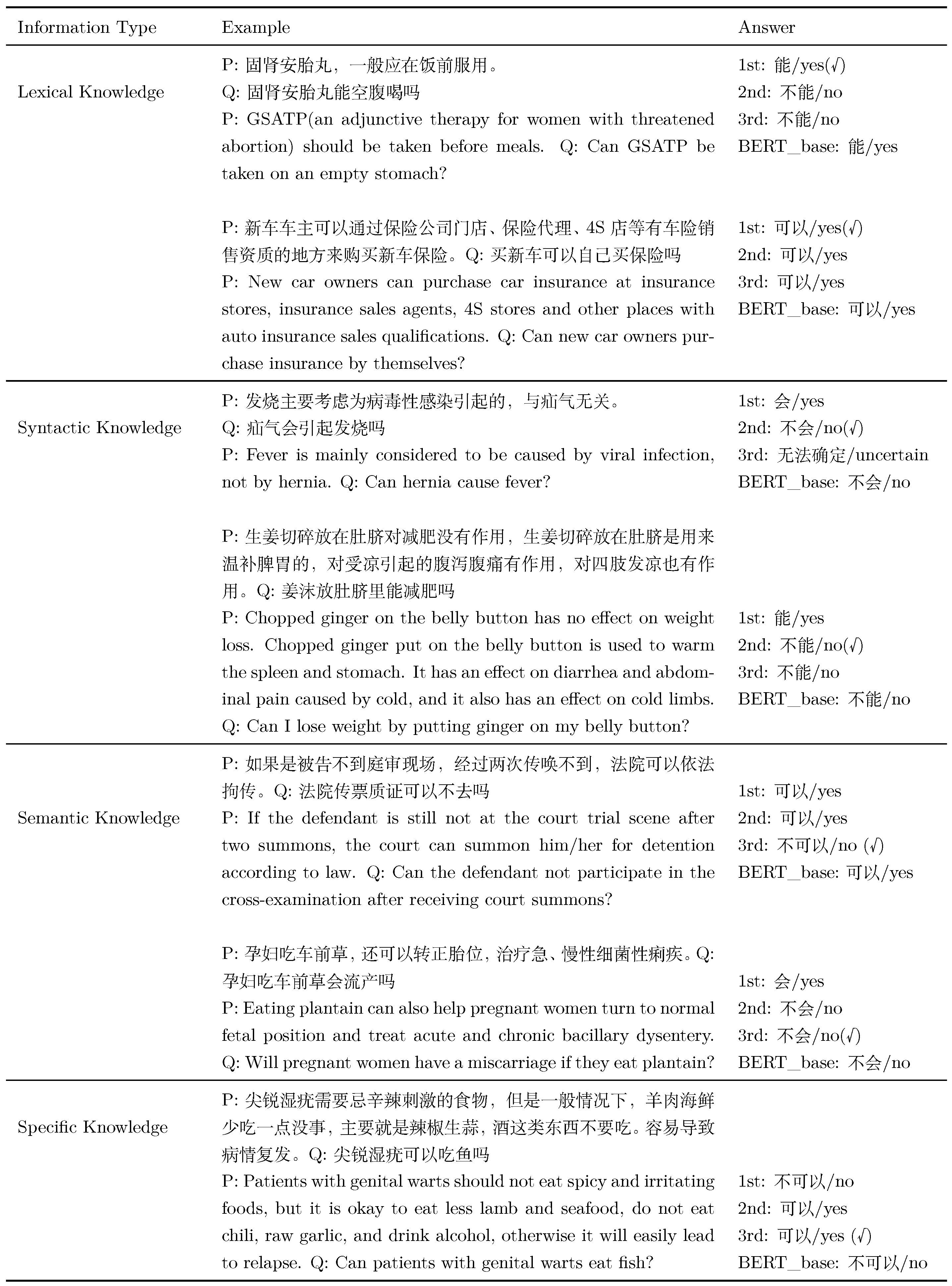

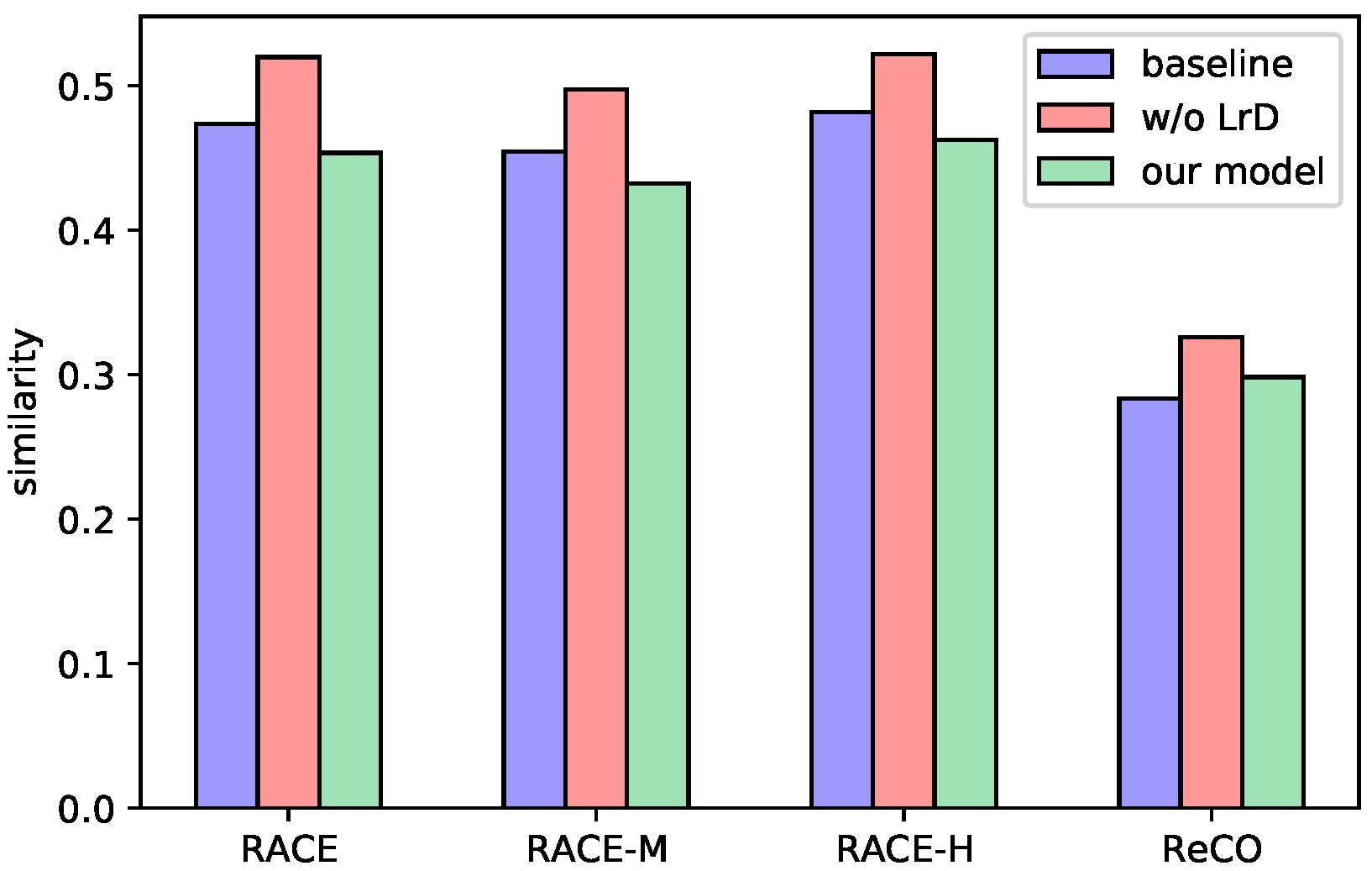

4.5. Information Type Analysis

4.6. Ablation Study

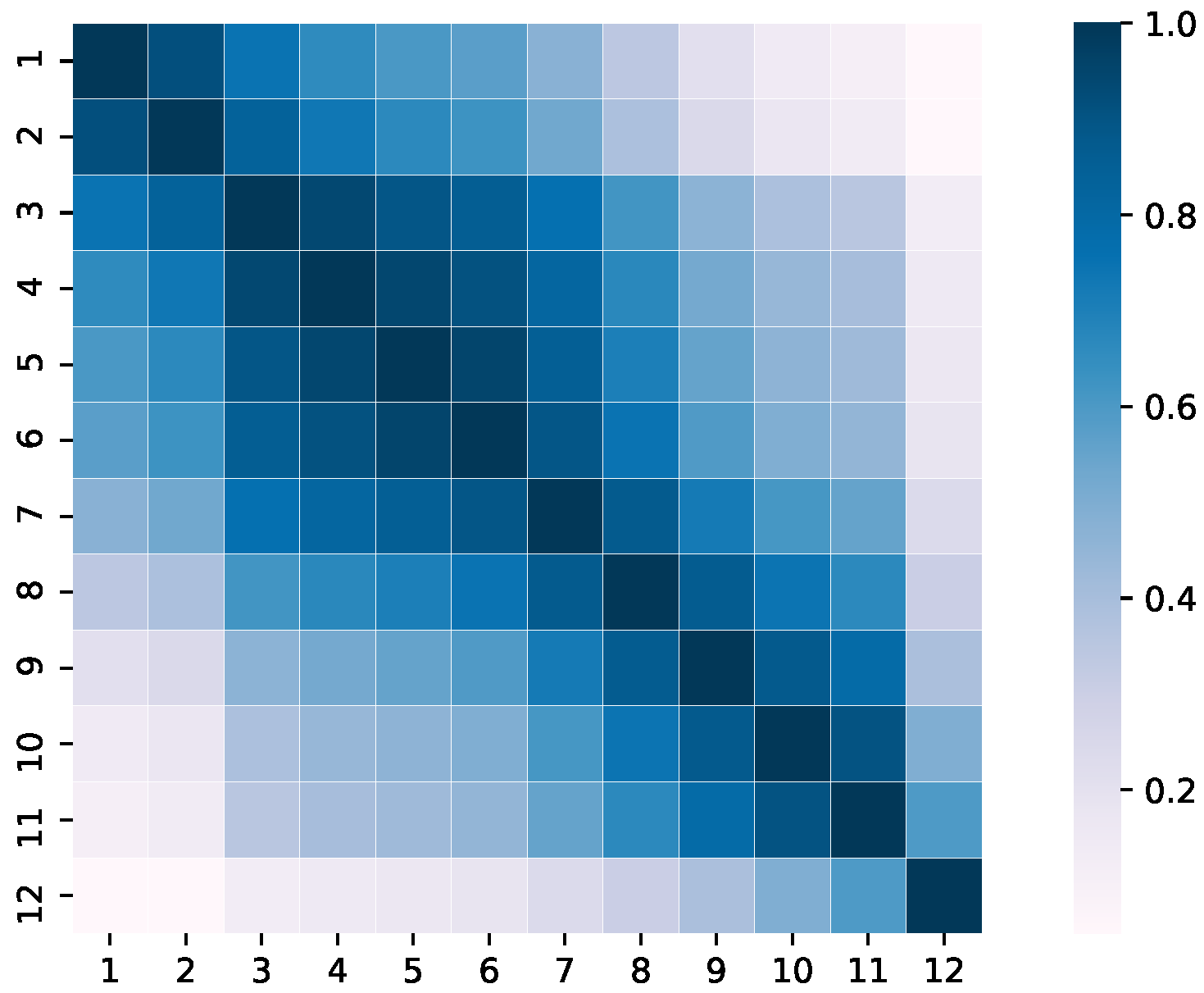

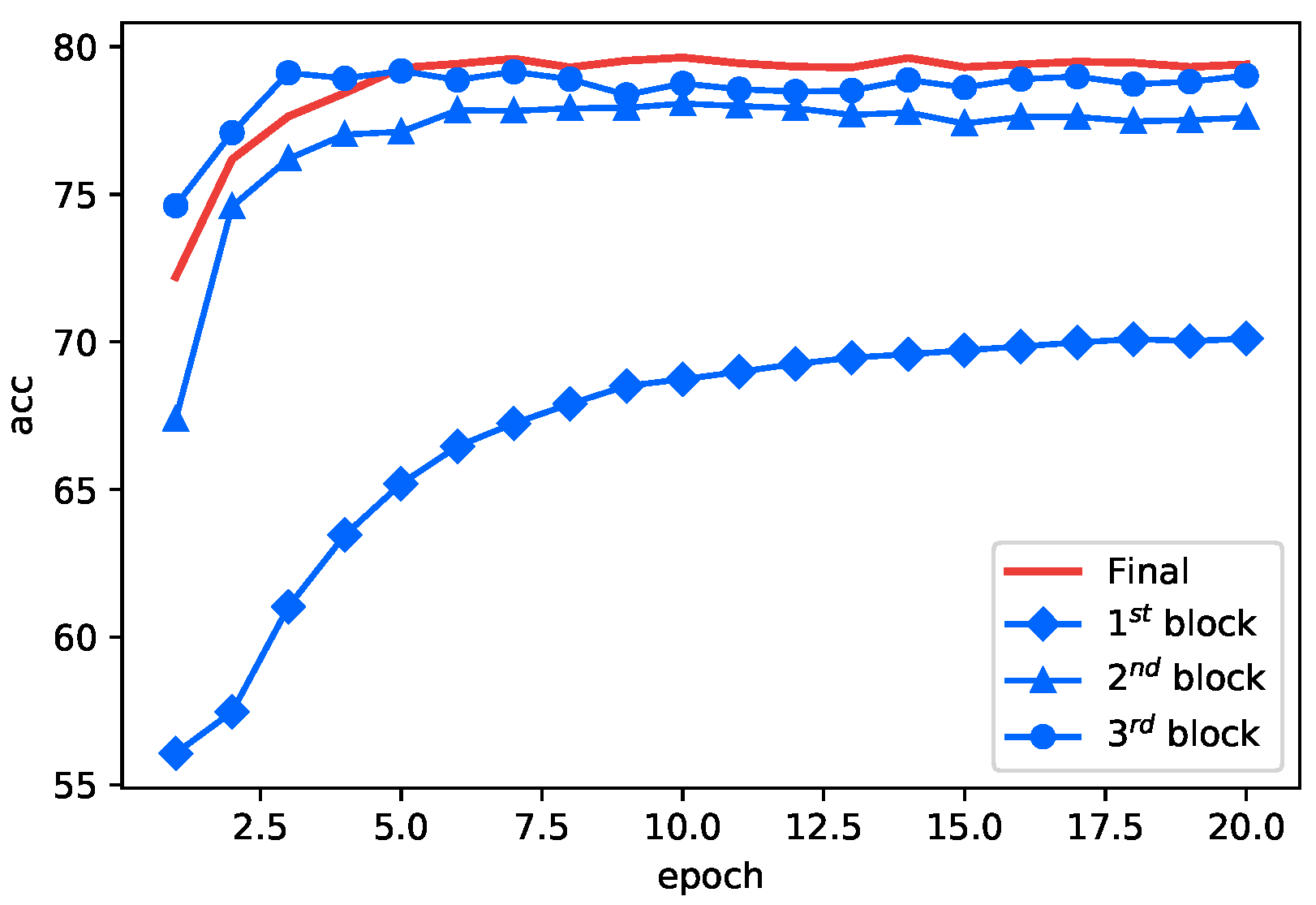

4.7. More Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Model | RACE (All) | RACE (Middle) | RACE (High) | ReCO | Dream |
|---|---|---|---|---|---|
| BERT_base | 65.0 | 71.7 | 62.3 | 77.1 | 63.2 |
| RoBERTa_base | 74.1* | 78.1* | 72.5* | 79.8* | 70.0* |
| RoBERTa_large | 83.2 | 86.5 | 81.8 | 79.2 | 85.0 |
| DUMA | 66.1* | 71.8* | 63.7* | 79.1* | 62.0* |
| Ours | | | | | |
| BERT_base | 65.5 ± 0.04 | 71.0 ± 0.07 | 63.3 ± 0.07 | 79.8 ± 0.02 | 63.5 ± 0.18 |
| RoBERTa_base | 74.3 ± 0.09 | 78.2 ± 0.10 | 72.7 ± 0.11 | 79.8 ± 0.11 | 70.1 ± 0.06 |
| RoBERTa_large | 83.5 ± 0.21 | 87.7 ± 0.17 | 81.8 ± 0.23 | 81.6 ± 0.11 | 85.3 ± 0.09 |
| DUMA | 65.9 ± 0.18 | 71.4 ± 0.22 | 63.6 ± 0.17 | 79.7 ± 0.02 | 63.4 ± 0.02 |

| Model | Ag.news | Book Review |
|---|---|---|
| BERT_base | 94.5 | 86.9 |
| RoBERTa_base | 95.0* | 87.1* |
| RoBERTa_large | 95.4* | 88.3* |
| Ours | | |
| BERT_base | 95.0 ± 0.02 | 87.8 ± 0.04 |
| RoBERTa_base | 95.2 ± 0.01 | 88.3 ± 0.03 |
| RoBERTa_large | 95.5 ± 0.04 | 88.7 ± 0.02 |

| Model | RACE Acc | RACE FLOPs | ReCO Acc | ReCO FLOPs | Dream Acc | Dream FLOPs |
|---|---|---|---|---|---|---|
| BERT_base | 65.0 | 173,975 M | 77.1 | 43,486 M | 63.2 | 130,464 M |
| Ours | | | | | | |
| | 65.6 | 173,953 M | 79.9 | 43,487 M | 64.1 | 130,465 M |
| | 65.6 | 171,344 M | 79.9 | 39,306 M | 64.1 | 130,465 M |
| | 66.4 | 165,233 M | 79.8 | 35,111 M | 64.1 | 130,103 M |
| | 66.3 | 155,256 M | 79.2 | 31,200 M | 63.9 | 128,441 M |
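
To make the accuracy/FLOPs trade-off in the table above easier to read, the short calculation below computes the relative FLOPs saving of the last "Ours" row against the BERT_base baseline. The numbers are copied from the table; the helper name `flops_saving` is introduced here for illustration only.

```python
def flops_saving(baseline_mflops: float, model_mflops: float) -> float:
    """Fraction of the baseline's FLOPs that the model saves."""
    return (baseline_mflops - model_mflops) / baseline_mflops


# FLOPs in millions (M), taken from the BERT_base row and the last "Ours" row.
baseline = {"RACE": 173_975, "ReCO": 43_486, "Dream": 130_464}
fastest = {"RACE": 155_256, "ReCO": 31_200, "Dream": 128_441}

savings = {name: flops_saving(baseline[name], fastest[name]) for name in baseline}
for name, saving in savings.items():
    print(f"{name}: {saving:.1%} fewer FLOPs")
# RACE: 10.8% fewer FLOPs
# ReCO: 28.3% fewer FLOPs
# Dream: 1.6% fewer FLOPs
```

In that row the accuracy is still above the baseline on all three datasets (66.3 vs. 65.0, 79.2 vs. 77.1, 63.9 vs. 63.2), so the saving does not come at the cost of accuracy.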

| Model | Book Review Acc | Book Review FLOPs | Ag.news Acc | Ag.news FLOPs |
|---|---|---|---|---|
| BERT_base | 86.9 | 21,785 M | 94.5 | 21,785 M |
| Ours | | | | |
| | 87.9 | 21,785 M | 95.0 | 21,785 M |
| | 87.8 | 16,856 M | 95.0 | 10,584 M |
| | 87.8 | 13,942 M | 94.6 | 8,705 M |
| | 87.7 | 12,045 M | 93.6 | 7,738 M |

| Information Type | 1st Layer | 2nd Layer | 3rd Layer |
|---|---|---|---|
| Lexical Knowledge | 51.0 | 32.0 | 10.0 |
| Syntactic Knowledge | 37.0 | 46.0 | 26.0 |
| Semantic Knowledge | 13.0 | 23.0 | 60.0 |
| Specific Knowledge | 1.0 | 4.0 | 10.0 |

| Model | RACE (All) | RACE (Middle) | RACE (High) | ReCO | Ag.news |
|---|---|---|---|---|---|
| 1-block | 65.2 | 71.8 | 62.4 | 78.5 | 94.6 |
| 2-block | 66.4 | 72.1 | 64.0 | 78.9 | 94.9 |
| 3-block | 66.7 | 71.9 | 64.5 | 80.2 | 95.0 |
| 4-block | 66.0 | 71.1 | 63.9 | 79.4 | 94.7 |
| 6-block | 65.4 | 70.1 | 63.5 | 79.7 | 94.9 |
| 12-block | 64.8 | 70.5 | 62.4 | 79.2 | 94.7 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bai, Z.; Liu, J.; Wang, M.; Yuan, C.; Wang, X. Exploiting Diverse Information in Pre-Trained Language Model for Multi-Choice Machine Reading Comprehension. Appl. Sci. 2022, 12, 3072. https://doi.org/10.3390/app12063072
