Abstract

In this paper, a recursive form of the optimal finite impulse response (FIR) filter is proposed for discrete time-varying state-space models. The recursive form is derived by employing finite horizon Kalman filtering with optimally estimated initial conditions. The horizon initial state and its error covariance are optimally estimated from the most recent finite measurements in the maximum likelihood sense, and they then initiate the finite horizon Kalman filter. The optimality and unbiasedness of the proposed filter are proved by comparison with the conventional optimal FIR filter in batch form. Moreover, as an application, an adaptive FIR filter is proposed by applying an adaptive estimation scheme to the proposed recursive optimal FIR filter. To evaluate the performance of the proposed algorithms, a computer simulation is performed to compare them with the conventional Kalman filter and adaptive Kalman filters for a gas turbine aircraft engine model.

1. Introduction

The Kalman filter has been used as a standard tool for state estimation of linear state-space models. However, since the Kalman filter has an infinite impulse response (IIR) structure, which makes use of all measurements from the initial time to the current time, errors arising from model uncertainties (limited knowledge of the system model and of the noise statistics) and from computation may accumulate in the estimated state. These errors can cause the divergence problem in the Kalman filter [1,2,3]. In order to prevent divergence, finite impulse response (FIR) filters have been used as an alternative to the Kalman filter [4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20]. Since FIR filters estimate the states by using finite measurements on the most recent time interval, they are known to be more robust against the modeling uncertainties and numerical errors that cause the divergence problem in the Kalman filter. Moreover, due to their FIR structure, FIR filters have good properties such as built-in bounded input/bounded output (BIBO) stability and fast tracking speed.

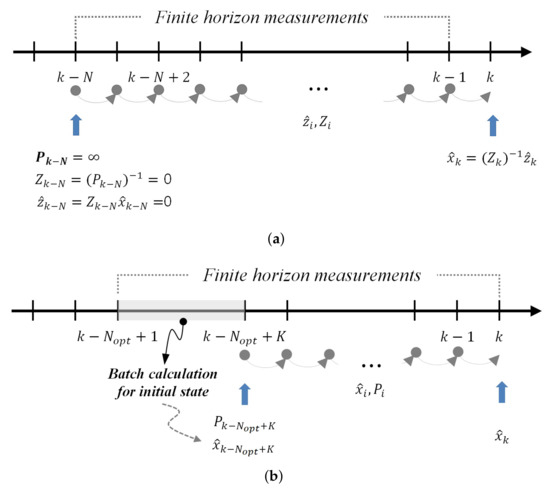

However, despite the aforementioned advantages of FIR filters, their complicated derivation and batch form might lead to computational inefficiency and limit further developments. Since the Kalman filter is well known and has a recursive form, which can provide effective computation methods for the FIR filter, recursive forms of FIR filters were introduced by modifying the Kalman filter [7,8,9,10,11,12,13,14,15,16]. In [7,8], the receding horizon Kalman (RHK) filter, whose concept is illustrated in Figure 1a, and its fast iteration method are proposed for time-invariant systems. Because RHK filters are derived by combining the Kalman filter algorithm with the receding horizon strategy, their filter equations are easy to understand, and many useful Kalman filtering methods can be directly applied to FIR filtering problems to improve the performance of FIR filters. Since RHK filters have exactly the same structure as the Kalman filter on the finite estimation horizon, the RHK filtering problem can be thought of as a recursive finite horizon Kalman filtering problem with special initial conditions. Thus, the initial state and its error covariance are very important factors for the estimation performance of RHK filters. However, RHK filters were derived with heuristic assumptions on the initial conditions, such as an infinite initial error covariance. In the derivation of the RHK filter, the inverse of the state transition matrix is used in the iterative calculation of the state estimate and the error covariance matrix. For an infinite covariance, the inverse matrix becomes singular and the estimation problem may not be feasible. Furthermore, the optimality of RHK filters is not clear from their derivations, and they cannot be applied to time-varying systems.

On the other hand, Kalman-like unbiased FIR (KUFIR) filters, whose concept is illustrated in Figure 1b, have also been proposed for recursive FIR filtering [9,10,11,12,13,14,15,16]. KUFIR filtering is a recursive Kalman-like algorithm that ignores the noise statistics and initial conditions. These are ignored by determining the optimal horizon length that minimizes the mean-square estimation error; the recursive prediction and correction procedure is then repeated without using noise statistics. Since the optimal horizon length is the only design parameter of the KUFIR filter, its determination is a major problem, and several algorithms have been developed to find it. In [9], the optimal horizon length was derived for the l-degree polynomial model by minimizing the mean-square estimation error. In [11], it was measured using the correlation method, and in [12], it was determined by using a bank of KUFIR filters operating in parallel. For fast computation of the optimal horizon length, an adaptive KUFIR filter was also suggested for time-invariant systems in [15]. However, even though the horizon initial state can be ignored, the state at the time indicated in Figure 1b, which is regarded as the actual initial state, is required at each estimation horizon, and it is obtained by calculating the batch form of the filter equation. In addition to this computational inefficiency, these approaches share a common disadvantage: a heavy computational load is also required to specify the optimal horizon length, because it must be obtained for each horizon in time-varying systems. Moreover, the optimality of KUFIR filters is not guaranteed and the horizon length cannot be adjusted.

Figure 1. (a) The concept of the RHK filter. (b) The concept of the KUFIR filter.

Therefore, in this paper, a new recursive optimal FIR (ROFIR) filter is proposed for linear time-varying systems in order to overcome the disadvantages of previous methods for recursive FIR filtering. The ROFIR filter is derived by employing the finite horizon Kalman filter together with an optimal and unbiased initial state estimate. The initial state and its corresponding error covariance on the estimation horizon are obtained by solving a maximum likelihood estimation problem, and they then initiate the finite horizon Kalman filter. Since the initial state is estimated from the measurements on each finite estimation horizon, the ROFIR filter does not require any a priori initial information. In addition, the proposed ROFIR filter is derived without assuming a nonsingular state-transition matrix and has a lower computational burden than the KUFIR filters for time-varying systems. Furthermore, the ROFIR filter provides the best linear unbiased estimate (BLUE) of the state on the finite estimation horizon. Moreover, since the adaptive FIR (AFIR) filters in previous studies were designed in batch form, they mostly focused on how to adjust the horizon length [17,18,19]. To the best of the authors' knowledge, there are no results on AFIR filters that consider noise statistics. Thus, we propose a new adaptive FIR filtering algorithm by employing a sequential noise statistics estimation technique as an application of the proposed ROFIR filter.

This paper is organized as follows: In Section 2, the ROFIR filter is proposed for linear time-varying state-space models and its optimality and unbiasedness are proved. Moreover, the AFIR filter is also proposed by applying the modified sequential noise statistics estimation method to the proposed ROFIR filter. In Section 3, the performance and effectiveness of the proposed ROFIR and AFIR filters are shown and discussed via computer simulations. Finally, our conclusions are presented in Section 4.

2. Recursive Optimal FIR Filter and Adaptive FIR Filter

2.1. Recursive Optimal FIR Filter with Optimally Estimated Initial Conditions

Consider the following discrete time-varying state-space model:

where is the state vector, is the measurement, and are the process noise and measurement noise, respectively. We assume that and are zero-mean white Gaussian and mutually uncorrelated. These noises are uncorrelated with the initial state and and denote the covariance matrices of and , respectively. The pair of the system (1) and (2) is assumed to be observable so that all modes are observed at the output and stabilized observers can be constructed.

On the horizon , the finite number of measurements is expressed as a batch form as follows:

is the finite number of measurements defined as:

The finite measurement noise vector and the finite process noise vector are defined by replacing in (4) with and , respectively, and and are defined as:

where .

The estimate of the horizon initial state at time k can be expressed as a linear function of the finite measurements on the recent horizon as

where is the gain matrix of initial state estimator.

The optimally estimated initial state can be obtained by the following maximum likelihood criterion:

To maximize , we can equivalently maximize , or minimize the following cost function

By taking the derivative of with respect to as

then the optimal estimate of the horizon initial state can be obtained as

By taking the expectation on estimation error , we have

which shows that the maximum likelihood estimate of the initial state on the horizon is unbiased. Furthermore, the initial error covariance can be obtained with the aid of (11) as

In order to obtain recursive form of the optimally estimated initial conditions, define the following matrices:

Then, the optimal estimate of the horizon initial state in (16) and error covariance in (19) can be rewritten as

Although the optimal initial conditions are obtained, they have computationally inefficient batch forms. In order to obtain the recursive form of and in (25) and (26), and should be calculated recursively.
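The batch-form initial conditions discussed above can be illustrated with a minimal sketch. It is not the paper's implementation: it assumes a scalar state with the model x_{j+1} = a_j x_j + w_j, y_j = c_j x_j + v_j on one horizon, constant noise variances q and r, and the standard maximum likelihood (weighted least squares) solution for a linear Gaussian batch model. The function names `solve` and `ml_initial_state` are hypothetical.

```python
def solve(m, b):
    """Solve m z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda i: abs(aug[i][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for i in range(col + 1, n):
            f = aug[i][col] / aug[col][col]
            for j in range(col, n + 1):
                aug[i][j] -= f * aug[col][j]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(aug[i][j] * z[j] for j in range(i + 1, n))
        z[i] = (aug[i][n] - s) / aug[i][i]
    return z


def ml_initial_state(a, c, q, r, y):
    """Batch ML estimate of the horizon initial state (scalar state, assumed model).

    Stacked model: Y = H x0 + G W + V, so Y has covariance Pi = q G G^T + r I.
    Returns (x0_hat, p0) with p0 = (H^T Pi^-1 H)^-1 and
    x0_hat = p0 * H^T Pi^-1 Y.
    """
    n = len(y)
    # H[j] = c_j * a_{j-1} * ... * a_0 maps the initial state to measurement j.
    h, phi = [], 1.0
    for j in range(n):
        h.append(c[j] * phi)
        phi *= a[j]
    # G[j][l] = c_j * a_{j-1} * ... * a_{l+1} maps process noise w_l to measurement j.
    g = [[0.0] * n for _ in range(n)]
    for j in range(n):
        for l in range(j):
            prod = 1.0
            for mm in range(l + 1, j):
                prod *= a[mm]
            g[j][l] = c[j] * prod
    pi = [[q * sum(g[i][l] * g[j][l] for l in range(n)) + (r if i == j else 0.0)
           for j in range(n)] for i in range(n)]
    u = solve(pi, h)                       # u = Pi^-1 H (Pi is symmetric)
    info = sum(h[j] * u[j] for j in range(n))
    x0_hat = sum(u[j] * y[j] for j in range(n)) / info
    return x0_hat, 1.0 / info
```

With noise-free measurements the estimate returns the true initial state exactly, which is the unbiasedness property in this simplified setting. The cost of forming and solving with the stacked covariance on every horizon is what motivates a recursive form.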

The recursive equations of and can be obtained as follows:

with , , and .

2.2. Optimality and Unbiasedness of Recursive Optimal FIR Filter

In this section, the optimality and unbiasedness of the proposed ROFIR filter are verified. Since the conventional optimal FIR filter is optimal and provides an unbiased estimate on the finite horizon, the optimality and unbiasedness of the proposed ROFIR filter can be verified by showing its equivalence to the conventional optimal FIR filter.

To begin with, the best linear unbiased FIR filter for linear time-varying state-space models is introduced. This filter is designed to be unbiased and optimal, as stated in the following lemma.

Lemma 1.

Next, the finite horizon Kalman filter on the horizon can be represented as in the following theorem.

Theorem 1.

On the horizon , a batch form of finite horizon Kalman filter can be obtained as

Proof of Theorem 1.

By using the induction method, we can obtain a batch form of the finite horizon Kalman filter as follows.

and can be obtained from and by substituting the Kalman gain matrix and covariance matrix into the dynamic equation of the Kalman filter as

where i is used instead of for simple notation.

By defining notations , , and as

where is defined by removing the last zero column from , the Equations (39) and (40) can be rewritten as

For , can be represented with initial state and covariance as

and is calculated as

For , can be calculated from as

and can be obtained from as

This completes the proof. □
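The recursion that Theorem 1 unrolls into batch form is the standard Kalman correction and prediction run over one horizon. A minimal scalar sketch is given below. It assumes the model x_{i+1} = a_i x_i + w_i, y_i = c_i x_i + v_i and a convention in which each measurement is processed before the state is propagated; the paper's exact indexing may differ, and the function name `finite_horizon_kalman` is hypothetical.

```python
def finite_horizon_kalman(x0, p0, a, c, q, r, y):
    """Run a scalar Kalman filter over one horizon.

    x0, p0 : initial state estimate and error variance at the horizon start
    a, c   : per-step state-transition and measurement coefficients
    q, r   : per-step process and measurement noise variances
    y      : measurements on the horizon
    Returns the predicted estimate and error variance at the horizon end.
    """
    x, p = x0, p0
    for a_i, c_i, q_i, r_i, y_i in zip(a, c, q, r, y):
        # Measurement update (correction) with the Kalman gain.
        k = p * c_i / (c_i * p * c_i + r_i)
        x = x + k * (y_i - c_i * x)
        p = (1.0 - k * c_i) * p
        # Time update (prediction) to the next step.
        x = a_i * x
        p = a_i * p * a_i + q_i
    return x, p
```

For example, with a constant state, unit measurement noise, and a very large initial variance, three measurements 1, 2, 3 give an estimate close to their mean 2 with variance close to 1/3. The result depends on x0 and p0, which is why the choice of horizon initial conditions matters.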

Finally, it can be shown that the finite horizon Kalman filter with estimated initial conditions is equivalent to the conventional optimal FIR filter (32) by applying the estimated initial state (16) and error covariance (19) to the finite horizon Kalman filter (38) as per the following theorem.

Theorem 2.

2.3. Adaptive FIR Filter with Sequential Noise Statistics Estimation

Since the structure of the proposed ROFIR filter is exactly the same as that of the Kalman filter on the horizon, many useful techniques of Kalman filtering can be applied to the proposed ROFIR filter to improve the performance of the FIR filter. In this section, we propose an AFIR filter as an application of the proposed ROFIR filter.

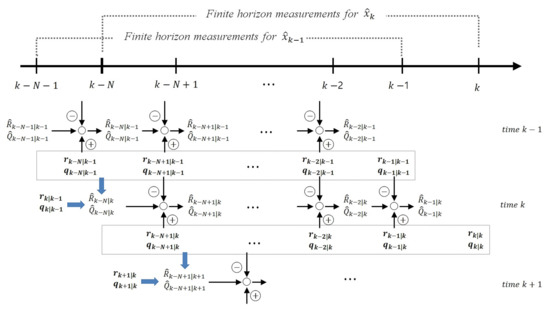

By applying the modified sequential noise statistics estimation method illustrated in Figure 2 to the ROFIR filter, an AFIR filter can be obtained as follows.

Figure 2. The concept of modified sequential noise statistics estimation in the AFIR filter.

Firstly, consider the linear measurement-state relationship to estimate the measurement noise statistics. On the horizon , the i-th approximation sample of the measurement noise, can be represented as

An unbiased estimate of the initial mean of the measurement noise at time k can be defined as

where .

Then, the unbiased estimation of initial variance can be obtained as

By using the expectation of as

the unbiased estimate of the initial measurement noise covariance can be obtained as

where .

The mean and covariance of the measurement noise can be obtained on the horizon as

for . For , and can be obtained by replacing and in (58) and (59) with and , respectively.
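The measurement noise statistics described above can be sketched for a scalar measurement. The sketch follows the windowed estimator of Myers and Tapley [23] rather than the paper's exact expressions, which may differ in indexing and in how the samples are formed. It assumes the noise samples are residuals r_j = y_j - c_j x_j with x_j a predicted state of error variance P_j; the function names are hypothetical.

```python
def measurement_noise_stats(residuals, c, p_pred):
    """Estimate the mean and variance of scalar measurement noise on a window.

    residuals : noise samples r_j = y_j - c_j * x_pred_j
    c         : measurement coefficients c_j
    p_pred    : error variances of the predicted states used in r_j
    """
    n = len(residuals)
    mean = sum(residuals) / n
    # Sample variance of the residuals, with the part explained by the
    # state prediction error (c_j * P_j * c_j) removed to avoid bias.
    var = sum((r_j - mean) ** 2 - (n - 1) / n * c_j * p_j * c_j
              for r_j, c_j, p_j in zip(residuals, c, p_pred)) / (n - 1)
    return mean, var


def slide_mean(mean, newest, oldest, n):
    """Update a window mean sequentially when one sample enters and one leaves."""
    return mean + (newest - oldest) / n
```

The sequential update in `slide_mean` avoids recomputing the full sum at each step, which is the sense in which the estimates can be propagated along the horizon.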

Secondly, for the process noise statistics, define the approximation of state noise sample on the horizon as

where is defined as the i-th process noise sample at time k. In the same way as the process of measurement noise statistics, an unbiased estimate for horizon initial sampled mean at time k can be represented as

where .

Then, the unbiased estimation of the initial process noise covariance can be represented as

where . Then, the mean and covariance of the process noise on the horizon can be calculated sequentially as

With the above sequential noise statistics estimates, the AFIR filter can be obtained on the horizon as:

where the filter gain and prediction covariance matrix are obtained as

where the optimal unbiased estimate of the horizon initial state and the state covariance are obtained by (25) and (26) with estimated noise statistics and in (59) and (64), respectively.

Since the noise statistics estimated in the AFIR filter are obtained by using the measurements a step ahead of the estimation time, the modified sequential noise statistics estimation method in the proposed AFIR filter may provide more adaptive estimation results than results given by previous sequential noise statistics estimation methods in adaptive Kalman filtering.
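The process noise statistics used in this subsection can be sketched in the same way as the measurement noise statistics. The sketch again follows the windowed estimator of Myers and Tapley [23] for a scalar state and is not the paper's exact formulation. It assumes the noise samples are q_j = x_{j+1} - a_j x_j formed from filtered estimates with error variances P_j; the function name is hypothetical.

```python
def process_noise_stats(x_est, a, p_est):
    """Estimate the mean and variance of scalar process noise on a window.

    x_est : filtered state estimates x_0 .. x_n
    a     : state-transition coefficients a_0 .. a_{n-1}
    p_est : filtered error variances P_0 .. P_n
    """
    samples = [x_est[j + 1] - a[j] * x_est[j] for j in range(len(a))]
    n = len(samples)
    mean = sum(samples) / n
    # Sample variance of the noise samples, corrected by the change in
    # estimation error variance across each step (a P_j a - P_{j+1}).
    var = sum((s - mean) ** 2
              - (n - 1) / n * (a[j] * p_est[j] * a[j] - p_est[j + 1])
              for j, s in enumerate(samples)) / (n - 1)
    return mean, var
```

In an adaptive filter, the returned mean and variance would replace the nominal process noise statistics in the gain and covariance computations on the next horizon.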

3. Simulation Results and Discussion

To demonstrate the validity of the proposed filters, the estimation performance of the proposed algorithms is compared with that of the conventional Kalman filter, the modified Sage-Husa adaptive Kalman (SHAK) filter [22], and the limited memory adaptive Kalman (LMAK) filter [23] for the F-404 gas turbine aircraft engine model in [19]. The discrete-time nominal F-404 gas turbine aircraft engine model can be represented as follows:

where the covariance matrices of the process noise and measurement noise are set as and , respectively.

Even if dynamic systems and signals are well represented by the state-space model, they may undergo unpredictable changes, such as jumps in frequency, phase, and velocity. These effects typically occur over a short time horizon, so they are called temporary uncertainties. Even so, the filter should be robust enough to diminish their effects. Due to its structure and the way it processes measurements, an FIR estimator is believed to be robust against numerical errors and temporary modeling uncertainties that may cause divergence in an IIR filter. To illustrate this robustness and the fast convergence, the proposed filters and the Kalman filter are compared for the following temporarily uncertain model, where temporary uncertainties are added to the nominal models (68) and (69), as

where

and process and measurement noise covariance matrices are taken as and , respectively.

Filters are designed for the nominal state-space models (68) and (69), then they are applied to the temporarily uncertain system (70) and (71). Additionally, the horizon length is taken as and for the proposed FIR filters and LMAK filter, respectively, and the forgetting factor of SHAK filter is set as .
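The effect of a temporary uncertainty on finite-memory and growing-memory estimators can be seen in a deliberately simplified, noise-free toy example. It is not the F-404 simulation: it assumes a constant scalar state with nominal model x_{k+1} = x_k, y_k = x_k, and an unmodeled measurement bias on a short interval. For this model the finite-memory estimate is the mean of the last N measurements, and the Kalman filter with zero process noise and a diffuse prior reduces to the mean of all measurements so far. All names and parameter values are hypothetical.

```python
def simulate(n_steps=150, horizon=10, start=50, stop=100, bias=1.0):
    """Compare growing-memory (IIR) and finite-memory (FIR) estimation errors.

    The true state is the constant 1.0. On start <= k < stop the measurement
    carries a bias that the nominal model does not account for.
    """
    truth = 1.0
    ys, iir_err, fir_err = [], [], []
    running_sum = 0.0
    for k in range(n_steps):
        y = truth + (bias if start <= k < stop else 0.0)
        ys.append(y)
        running_sum += y
        # Growing memory: mean of every measurement received so far.
        iir = running_sum / (k + 1)
        # Finite memory: mean of the most recent `horizon` measurements.
        window = ys[-horizon:]
        fir = sum(window) / len(window)
        iir_err.append(iir - truth)
        fir_err.append(fir - truth)
    return iir_err, fir_err
```

In this toy setting the finite-memory error returns to zero once the biased measurements leave the window (here 10 steps after the bias ends), whereas the growing-memory error decays only slowly because the biased samples are never discarded.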

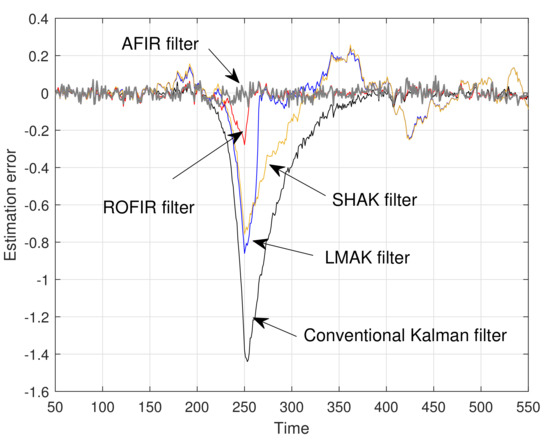

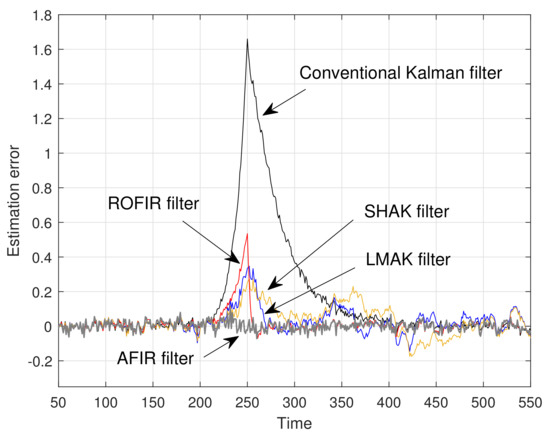

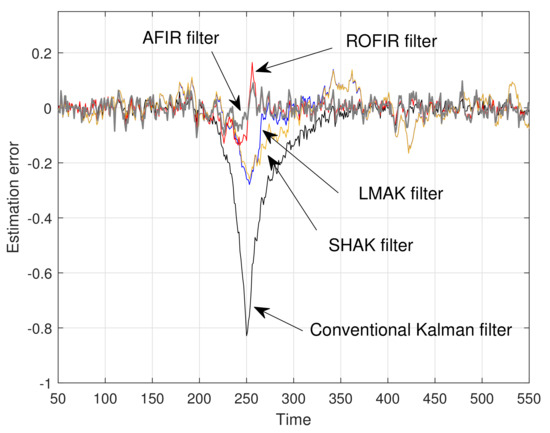

Figure 3, Figure 4 and Figure 5 show estimation errors for the states , , and , respectively, for five filters. In addition, mean relative estimation errors (MREE) are also compared in Table 1, Table 2 and Table 3. The MREE is defined as

where is the real state, is the estimate of the filter, and are the initial time and the end time of simulation, respectively.
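A minimal sketch of an MREE computation is given below. The exact expression used in this paper is not reproduced here, so the sketch assumes one common form: the absolute estimation error divided by the magnitude of the true state, averaged over the simulation interval. The function name `mree` is hypothetical, and the true state is assumed to be nonzero at every sample.

```python
def mree(true_states, estimates):
    """Mean relative estimation error, assuming the form mean(|x - x_hat| / |x|)."""
    ratios = [abs(x - x_hat) / abs(x) for x, x_hat in zip(true_states, estimates)]
    return sum(ratios) / len(ratios)
```

For instance, true states 2 and 4 with estimates 1 and 5 give relative errors 0.5 and 0.25, so the assumed MREE is 0.375.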

Figure 3. Estimation error for the first state.

Figure 4. Estimation error for the second state.

Figure 5. Estimation error for the third state.

Table 1. Comparison of the MREE for the first state.

Table 2. Comparison of the MREE for the second state.

Table 3. Comparison of the MREE for the third state.

Since the conventional Kalman filter provides optimal estimates, the estimation errors of the conventional Kalman filter are smaller than those of other filters during the time interval , when there are no system uncertainties. However, from the simulation results in time intervals and , it can be seen that the proposed ROFIR and AFIR filters have smaller estimation errors and faster convergence speed than the conventional Kalman filter and adaptive Kalman filters. These results show that when model uncertainties exist, the proposed ROFIR and AFIR filters can work well and have better performance than the Kalman filters due to their FIR structure.

By comparing the simulation results of the adaptive filters in time interval , it can be seen that the estimation errors of the proposed AFIR filter are remarkably smaller than those of the LMAK filter, even though the horizon length of the proposed AFIR filter is larger than that of the LMAK filter. In addition, the estimation errors of the proposed AFIR filter rapidly converge to zero after the temporary model uncertainty disappears, whereas those of the adaptive Kalman filters do not. Moreover, the estimation errors of the adaptive Kalman filters fluctuate and oscillate during time interval , which is caused by the accumulation of estimation errors. From these results, it can be inferred that the modified sequential noise statistics estimation method, combined with recursive FIR filtering, yields more adaptive estimation and faster convergence than sequential noise statistics estimation with Kalman filtering.

4. Conclusions

In this paper, an optimal FIR filter in recursive form was proposed for discrete time-varying state-space models by employing the Kalman filtering technique and a moving horizon estimation strategy. The initial state and its error covariance were optimally estimated in the maximum likelihood sense over the horizon, and they then initiated the finite horizon Kalman filter. The proposed recursive optimal FIR filter was designed without assuming a nonsingular state-transition matrix or any a priori initial information. In addition, it was proved that the proposed ROFIR filter is the best linear unbiased estimator on the finite estimation horizon. Furthermore, by applying the modified sequential noise statistics estimation method to the ROFIR filter, an AFIR filter was proposed as an application of the ROFIR filter, which shows that many useful techniques of Kalman filtering can be applied to the proposed ROFIR filter to improve the estimation performance of FIR filters. To validate the proposed filters, a computer simulation was performed, and it showed that the proposed filters were more accurate and robust than the conventional Kalman filter and adaptive Kalman filters.

Author Contributions

Conceptualization, B.K.; methodology, B.K.; software, B.K.; validation, B.K. and S.-i.K.; formal analysis, B.K.; investigation, B.K. and S.-i.K.; resources, B.K. and S.-i.K.; data curation, B.K. and S.-i.K.; writing—original draft preparation, B.K.; writing—review and editing, S.-i.K.; visualization, B.K.; All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| IIR | Infinite Impulse Response |
| FIR | Finite Impulse Response |
| BIBO | Bounded Input Bounded Output |
| RHK | Receding Horizon Kalman |
| KUFIR | Kalman-like Unbiased Finite Impulse Response |
| ROFIR | Recursive Optimal Finite Impulse Response |
| AFIR | Adaptive Finite Impulse Response |
| SHAK | Sage-Husa Adaptive Kalman |
| LMAK | Limited Memory Adaptive Kalman |

References

1. Fitzgerald, R.J. Divergence of the Kalman filter. IEEE Trans. Autom. Control 1971, 6, 736–747.
2. Sangsuk-Iam, S.; Bullock, T.E. Analysis of discrete-time Kalman filtering under incorrect noise covariances. IEEE Trans. Autom. Control 1990, 35, 1304–1309.
3. Grewal, M.S.; Andrews, A.P. Kalman Filtering: Theory and Practice; Prentice-Hall: Englewood Cliffs, NJ, USA, 1993.
4. Kwon, W.H.; Lee, K.S.; Kwon, O.K. Optimal FIR Filters for Time-Varying State-Space Models. IEEE Trans. Aerosp. Electron. Syst. 1990, 26, 1011–1021.
5. Kwon, B.; Quan, Z.; Han, S. A robust fixed-lag receding horizon smoother for uncertain state space models. Int. J. Adapt. Control Signal Process. 2015, 29, 1354–1366.
6. Kwon, B.; Han, S.; Han, S. Improved Receding Horizon Fourier Analysis for Quasi-periodic Signals. J. Electr. Eng. Technol. 2017, 12, 378–384.
7. Kwon, W.H.; Kim, P.S.; Park, P. A receding horizon Kalman FIR filter for discrete time-invariant systems. IEEE Trans. Autom. Control 1999, 44, 1787–1791.
8. Kwon, W.H.; Kim, P.S.; Han, S.H. A Receding Horizon Unbiased FIR Filter for Discrete-Time State Space Models. Automatica 2002, 38, 545–551.
9. Shmaliy, Y.S.; Munoz-Diaz, J.; Arceo-Miquel, L. Optimal horizons for a one-parameter family of unbiased FIR filter. Digit. Signal Process. 2008, 18, 739–750.
10. Shmaliy, Y.S. An iterative Kalman-like algorithm ignoring noise and initial conditions. IEEE Trans. Signal Process. 2011, 59, 2465–2473.
11. Kou, Y.; Jiao, Y.; Xu, D.; Zhang, M.; Liu, Y.; Li, X. Low-cost precise measurement of oscillator frequency instability based on GNSS carrier observation. Adv. Space Res. 2012, 51, 969–977.
12. Pak, J.M.; Ahn, C.K.; Shmaliy, Y.S.; Shi, P.; Lim, M.T. Switching extensible FIR filter bank for adaptive horizon state estimation with application. IEEE Trans. Control Syst. Technol. 2016, 24, 1052–1058.
13. Shmaliy, Y.S.; Khan, S.; Zhao, S. Ultimate iterative UFIR filtering algorithm. Measurement 2016, 92, 236–242.
14. Zhao, S.; Shmaliy, Y.S.; Liu, F. Fast Kalman-Like Optimal Unbiased FIR Filtering with Applications. IEEE Trans. Signal Process. 2016, 64, 2284–2297.
15. Zhao, S.; Shmaliy, Y.S.; Ahn, C.K.; Liu, F. Adaptive-Horizon Iterative UFIR Filtering Algorithm with Applications. IEEE Trans. Ind. Electron. 2018, 65, 6393–6402.
16. Zhao, S.; Shmaliy, Y.S.; Ahn, C.K.; Liu, F. Self-Tuning Unbiased Finite Impulse Response Filtering Algorithm for Processes with Unknown Measurement Noise Covariance. IEEE Trans. Control Syst. Technol. 2021, 29, 1372–1379.
17. Pak, J.M.; Yoo, S.Y.; Lim, M.T.; Song, M.K. Weighted Average Extended FIR Filter Bank to Manage the Horizon Size in Nonlinear FIR Filtering. Int. J. Control Autom. Syst. 2014, 13, 138–145.
18. Pak, J.M.; Kim, P.S.; You, S.H.; Lee, S.S.; Song, M.K. Extended Least Square Unbiased FIR Filter for Target Tracking Using the Constant Velocity Motion Model. Int. J. Control Autom. Syst. 2017, 15, 947–951.
19. Kim, P.S. Selective Finite Memory Structure Filtering Using the Chi-Square Test Statistic for Temporarily Uncertain Systems. Appl. Sci. 2019, 9, 4257.
20. Kwon, B.; Han, S.; Lee, K. Robust Estimation and Tracking of Power System Harmonics Using an Optimal Finite Impulse Response Filter. Energies 2018, 11, 1811.
21. Kwon, B. An Optimal FIR Filter for Discrete Time-varying State Space Models. J. Inst. Control Robot. Syst. 2011, 17, 1183–1187.
22. Akhlaghi, S.; Zhou, N.; Huang, Z. Adaptive Adjustment of Noise Covariance in Kalman Filter for Dynamic State Estimation. In Proceedings of the 2017 IEEE Power and Energy Society General Meeting, Chicago, IL, USA, 16–20 July 2017.
23. Myers, K.; Tapley, B. Adaptive sequential estimation with unknown noise statistics. IEEE Trans. Autom. Control 1976, 21, 520–523.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).