Nanovised Control Flow Attestation

Abstract

1. Introduction

- Single thread: C-FLAT is available only for a single thread.

- Single process: C-FLAT is available only for a single process.

- Multi-core: C-FLAT is utilized only for a single process.

2. Related Work

3. Threat Model

4. Background

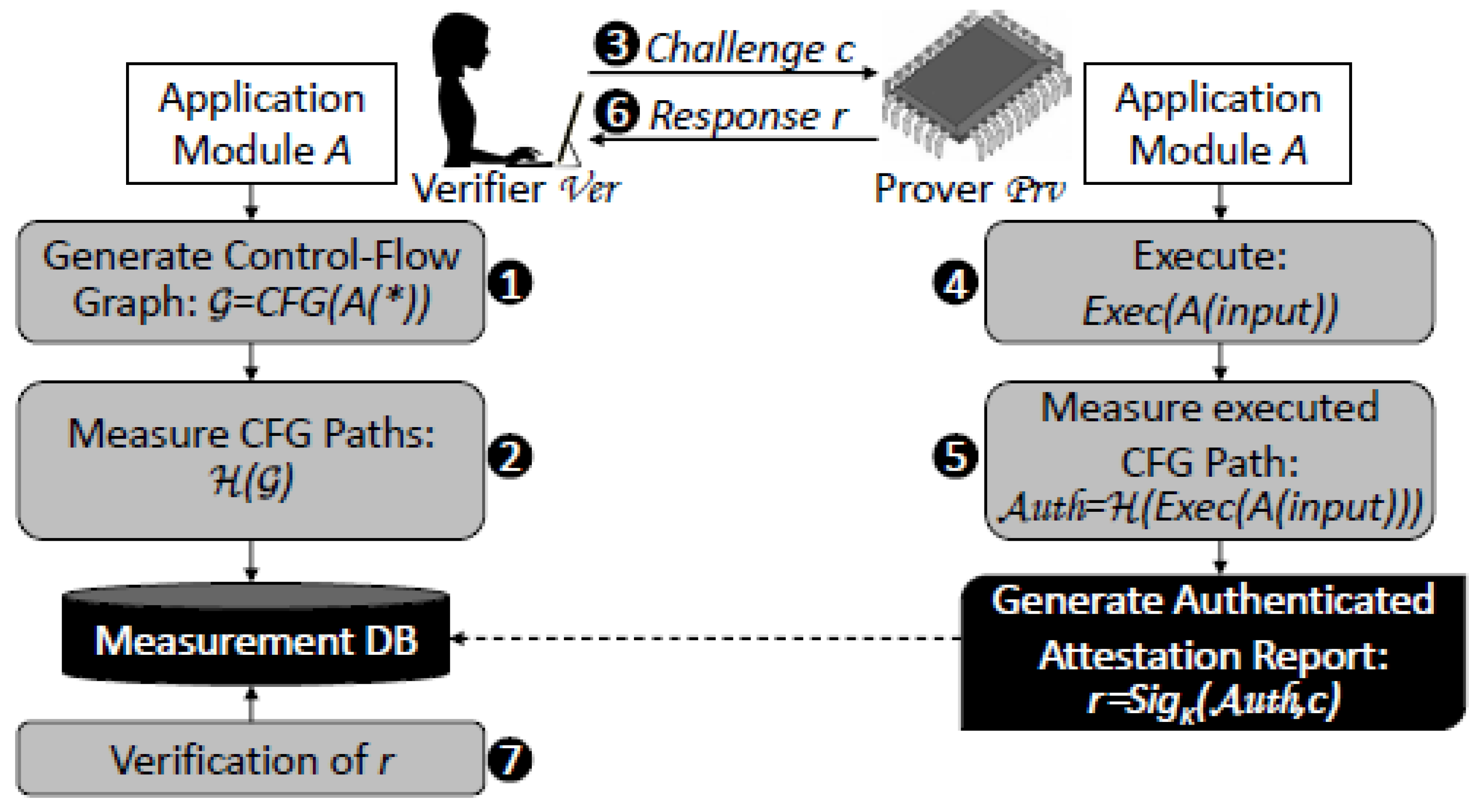

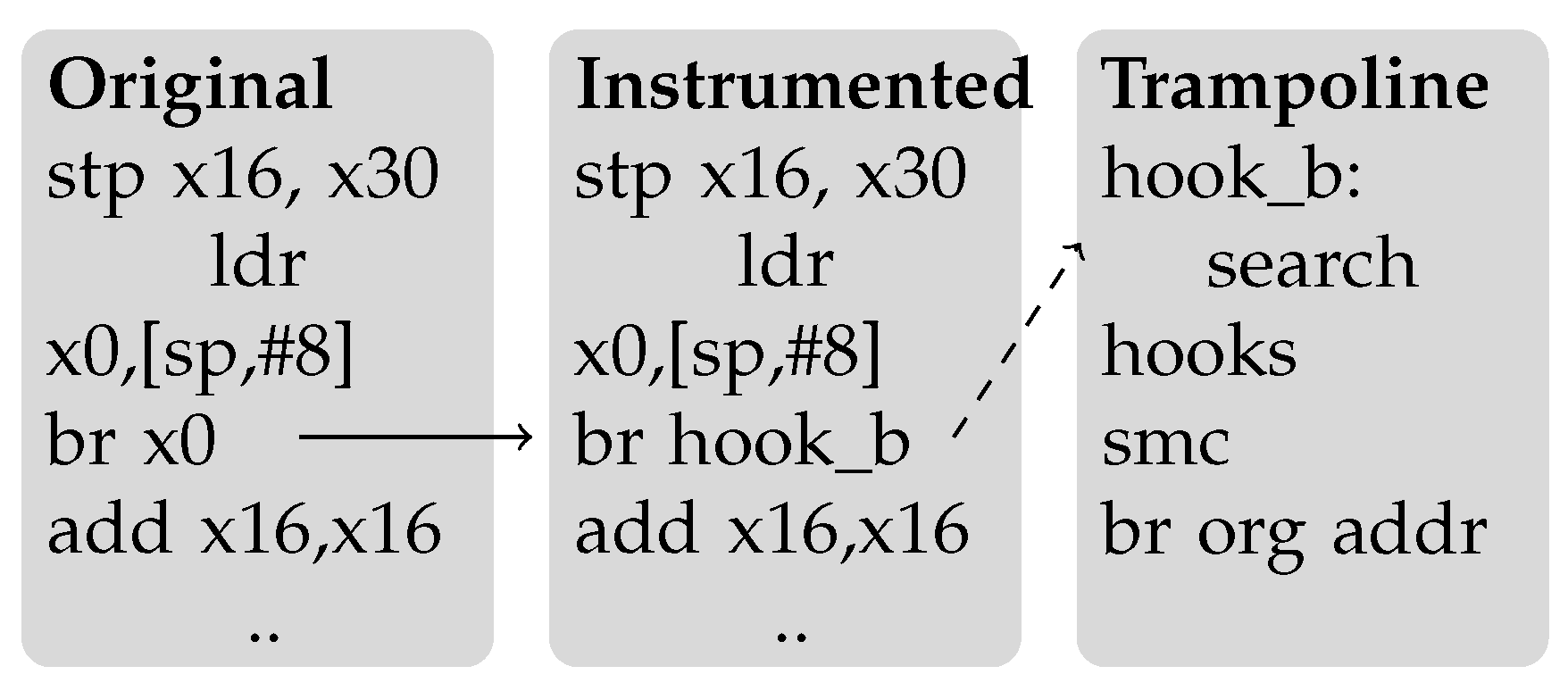

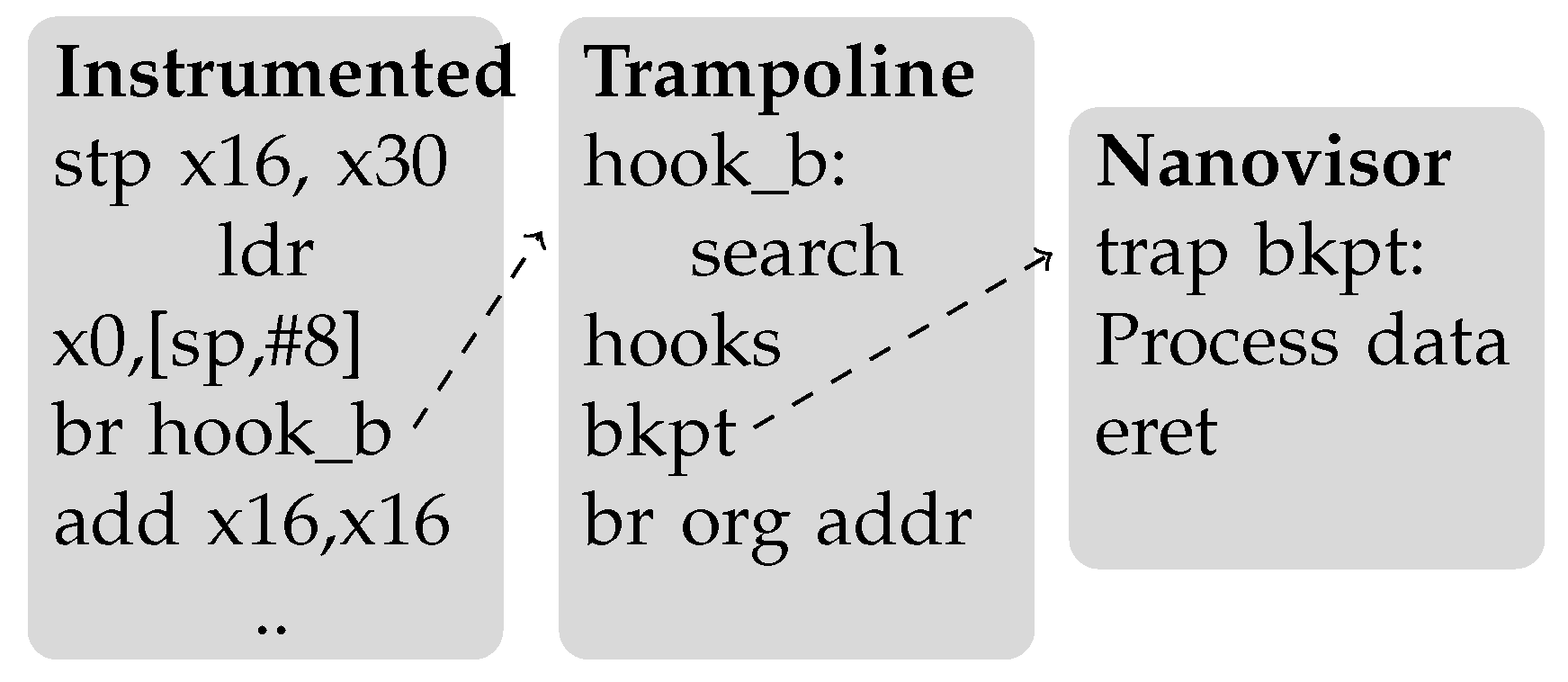

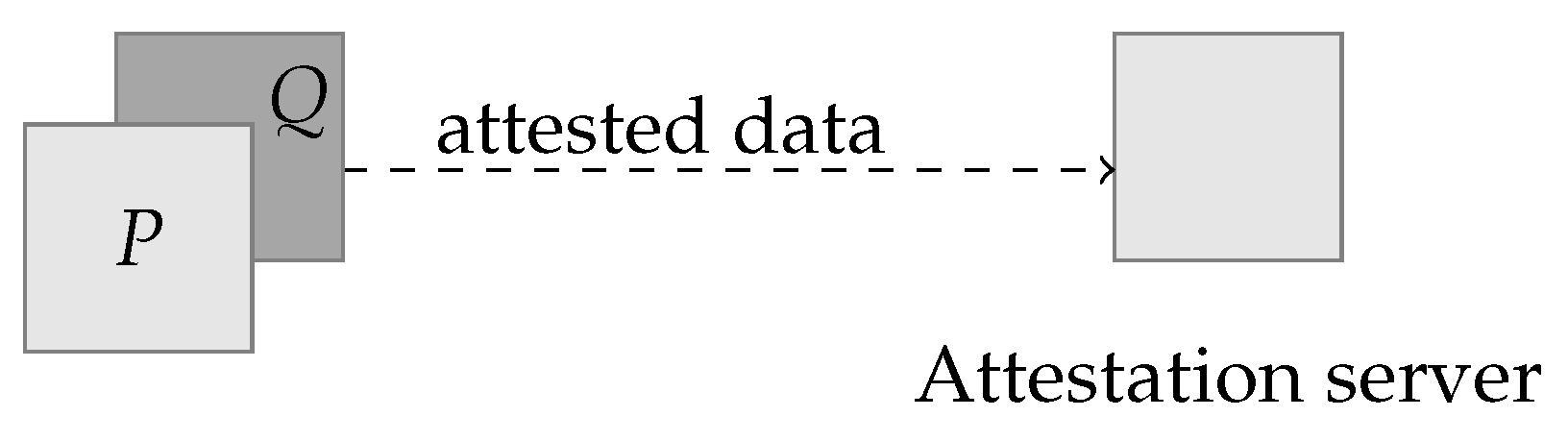

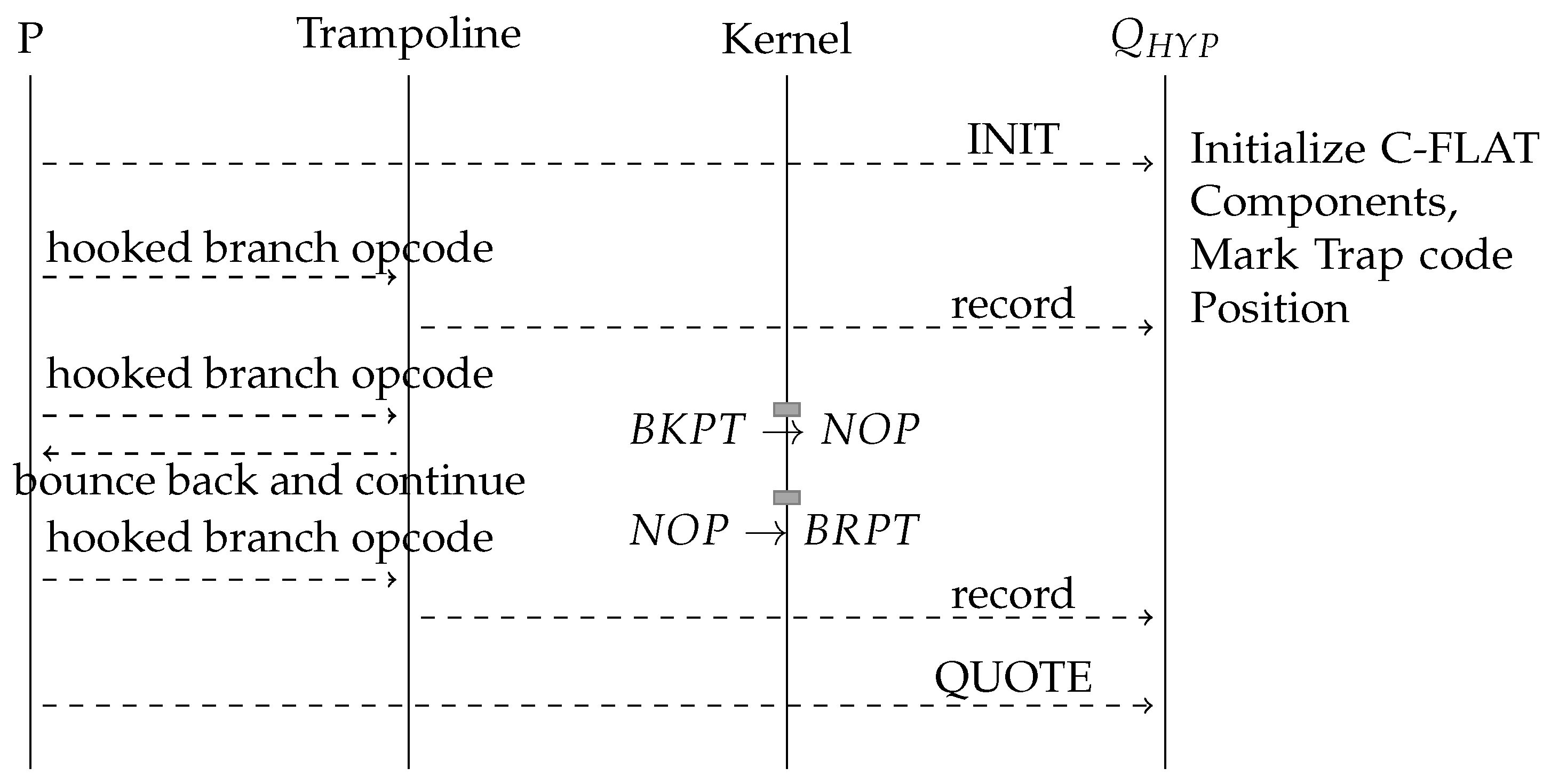

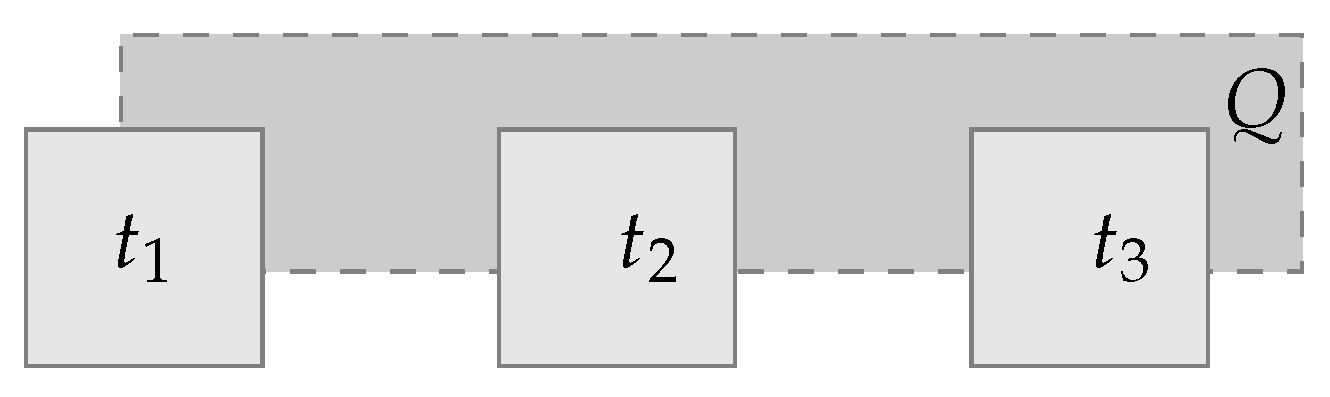

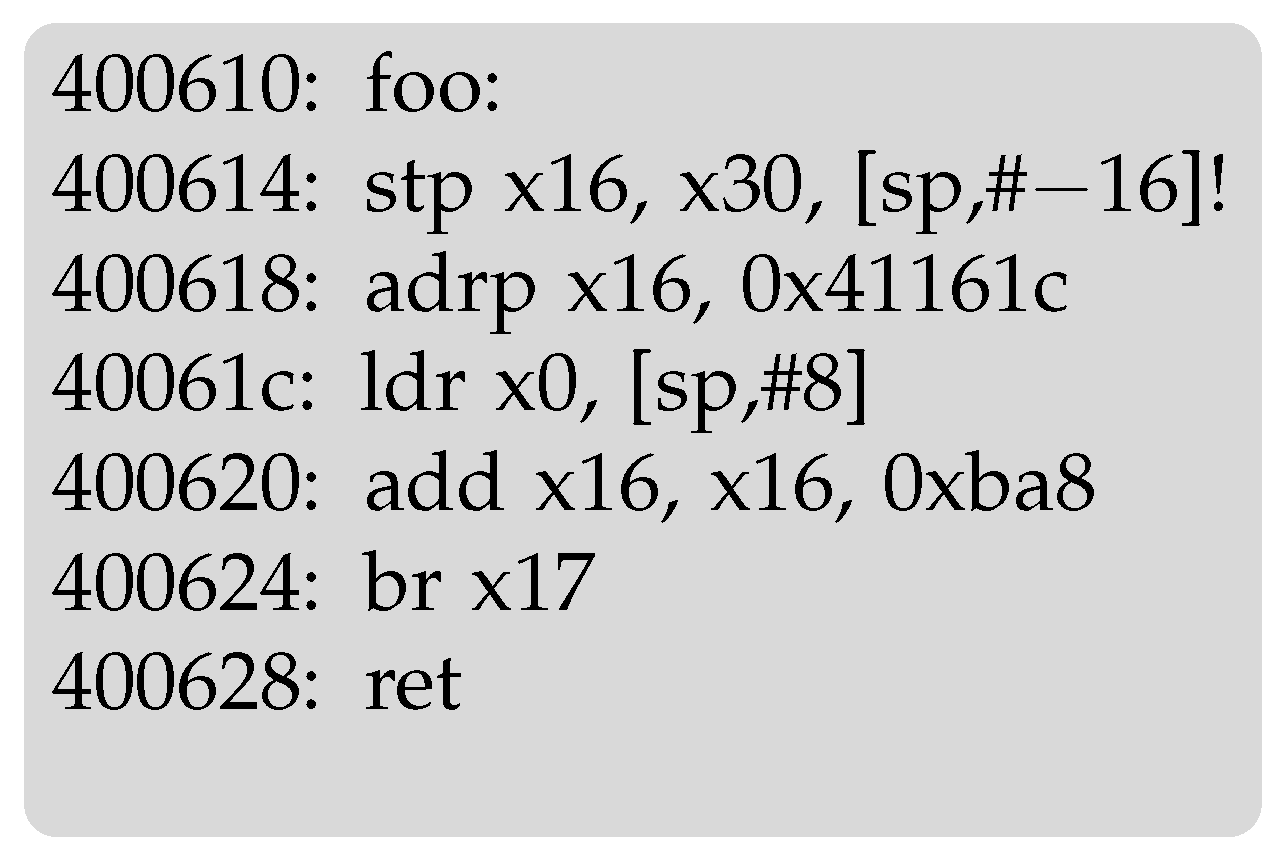

5. Control Flow Attestation for Linux

5.1. Performance

1. The size of the BKPT opcode (16 bit or 32 bit);

2. The position of the opcode in the program’s address space;

3. Identify that a protected program runs (or sleeps) without the need to wait for traps.
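To make the 16-bit versus 32-bit distinction concrete, the following minimal sketch builds the two breakpoint encodings defined by the Arm architecture: the 16-bit Thumb `BKPT` and the 32-bit A32 `BKPT`. The function names are hypothetical and chosen for illustration only; the sketch does not reproduce the instrumentation code of C-FLAT Linux.

```python
def thumb_bkpt(imm8: int) -> bytes:
    """Encode the 16-bit Thumb instruction BKPT #imm8 (little-endian)."""
    if not 0 <= imm8 <= 0xFF:
        raise ValueError("Thumb BKPT takes an 8-bit immediate")
    # Thumb encoding: 1011 1110 imm8
    return (0xBE00 | imm8).to_bytes(2, "little")


def a32_bkpt(imm16: int) -> bytes:
    """Encode the 32-bit A32 instruction BKPT #imm16 (little-endian)."""
    if not 0 <= imm16 <= 0xFFFF:
        raise ValueError("A32 BKPT takes a 16-bit immediate")
    # A32 encoding: the upper 12 immediate bits go to bits 19:8,
    # the lower 4 immediate bits go to bits 3:0.
    word = 0xE1200070 | ((imm16 >> 4) << 8) | (imm16 & 0xF)
    return word.to_bytes(4, "little")


def opcode_size_bits(opcode: bytes) -> int:
    """Return the opcode size in bits (16 or 32 for the encoders above)."""
    return len(opcode) * 8


if __name__ == "__main__":
    for name, op in (("Thumb", thumb_bkpt(0)), ("A32", a32_bkpt(0))):
        print(name, op.hex(), opcode_size_bits(op), "bit")
```

Whoever patches or restores such an opcode has to know its size, since a 16-bit and a 32-bit breakpoint overwrite a different number of bytes at the same address.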

5.2. GPOS Considerations

5.3. The Hyplet Nanovisor

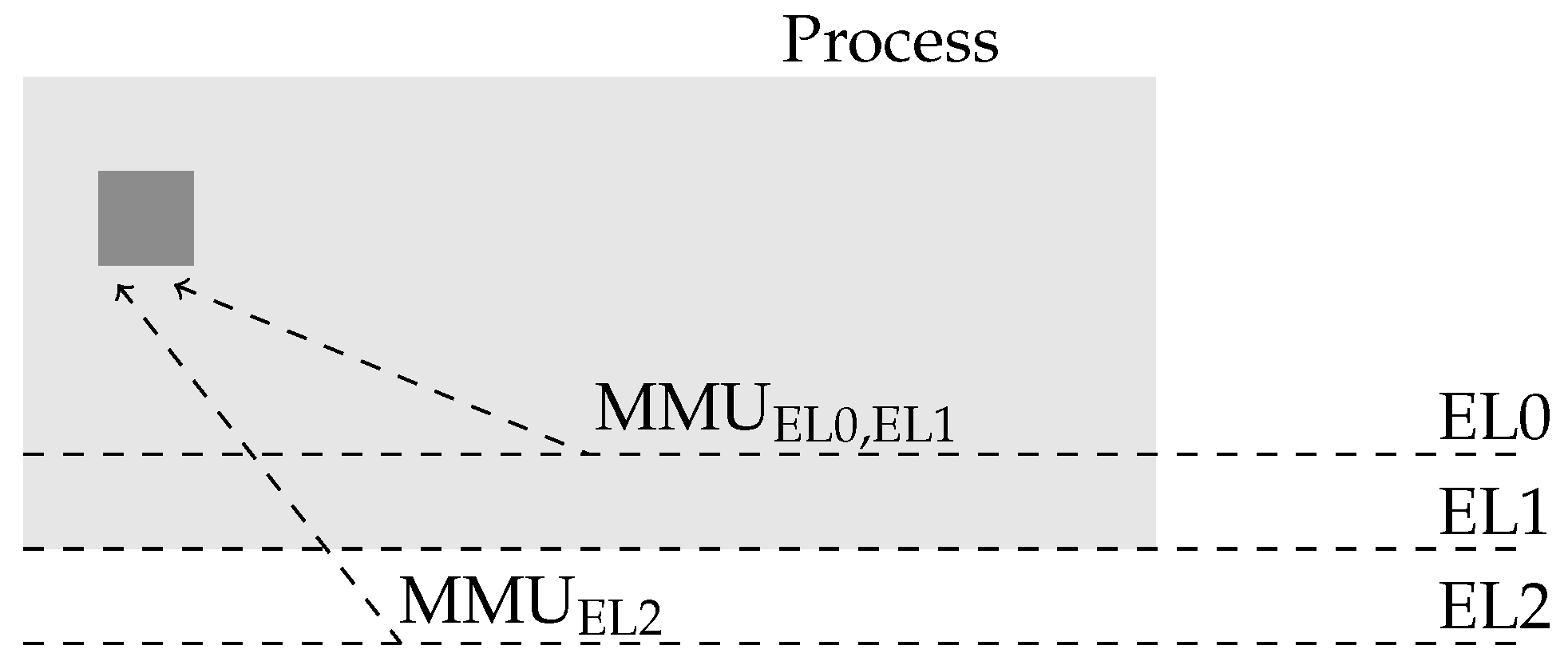

5.3.1. The Hyplet Protection

5.3.2. The Hyplet Security

5.3.3. Static Analysis to Eliminate Security Concerns

6. Evaluation

6.1. Test 1: Transition Latency

6.2. Test 2: Latency Dissection

- Without C-FLAT Linux—to establish the baseline performance;

- With C-FLAT Linux with its BKPT opcodes replaced by the NOP opcode—to measure the degradation associated with the presence of C-FLAT Linux;

- With C-FLAT Linux and full instrumentation—to measure the maximum performance degradation.

- In each iteration, the program jumps to the trampoline twice: once for the condition of the for loop and once for the condition of the if statement;

- The number of iterations is 100,000;

- The number of trampoline invocations is therefore 200,000;

- According to Table 2, a single entry to the Nanovisor takes 92 nanoseconds on average, so 200,000 entries take 18,400 µs.

- 148,056 represents the total execution time in the “C-FLAT with BKPT” configuration;

- 5188 represents the total execution time in the “No C-FLAT” configuration;

- 37,097 represents the total execution time in the “C-FLAT with NOPs” configuration;

- 18,400 represents the total time required for the 200,000 entries to the Nanovisor.
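The arithmetic behind these figures can be reproduced with a short sketch. It assumes that the execution-time totals are in microseconds, which is consistent with 200,000 entries at 92 ns each. It also assumes that the configurations can be compared by simple subtraction, which is one plausible reading of the listed quantities and not necessarily the authors' exact formula. The function and key names are hypothetical.

```python
ITERATIONS = 100_000
TRAMPOLINES_PER_ITERATION = 2   # the for condition and the if condition
ENTRY_NS = 92                   # average Nanovisor entry latency (Table 2)

# Assumption: totals are in microseconds.
NO_CFLAT_US = 5_188
CFLAT_NOPS_US = 37_097
CFLAT_BKPT_US = 148_056


def dissect_latency() -> dict:
    """Break the measured totals into coarse components by subtraction."""
    invocations = ITERATIONS * TRAMPOLINES_PER_ITERATION
    # Total time spent entering the Nanovisor, converted from ns to µs.
    entry_total_us = invocations * ENTRY_NS // 1000
    # Cost of having the instrumentation present but inactive (NOPs).
    presence_us = CFLAT_NOPS_US - NO_CFLAT_US
    # Extra cost once the breakpoints actually trap.
    trap_related_us = CFLAT_BKPT_US - CFLAT_NOPS_US
    # Part of the trap-related cost not explained by the entry latency.
    remainder_us = trap_related_us - entry_total_us
    return {
        "invocations": invocations,
        "entry_total_us": entry_total_us,
        "presence_us": presence_us,
        "trap_related_us": trap_related_us,
        "remainder_us": remainder_us,
    }


if __name__ == "__main__":
    for key, value in dissect_latency().items():
        print(f"{key}: {value}")
```

Under these assumptions, the entry latency alone accounts for 18,400 of the 110,959 units that separate the “C-FLAT with BKPT” and “C-FLAT with NOPs” configurations.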

6.3. Test 3: Real-World Performance

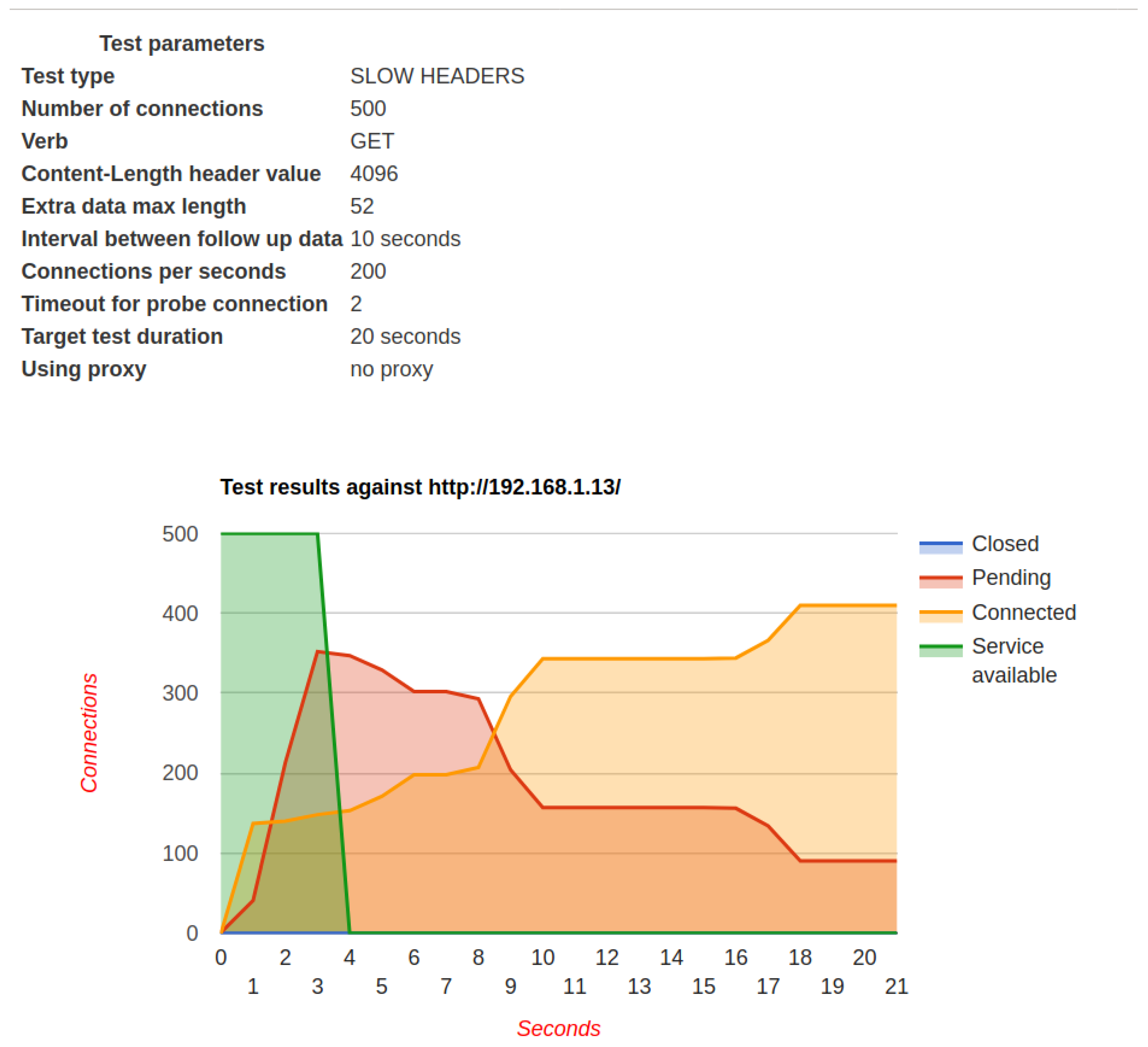

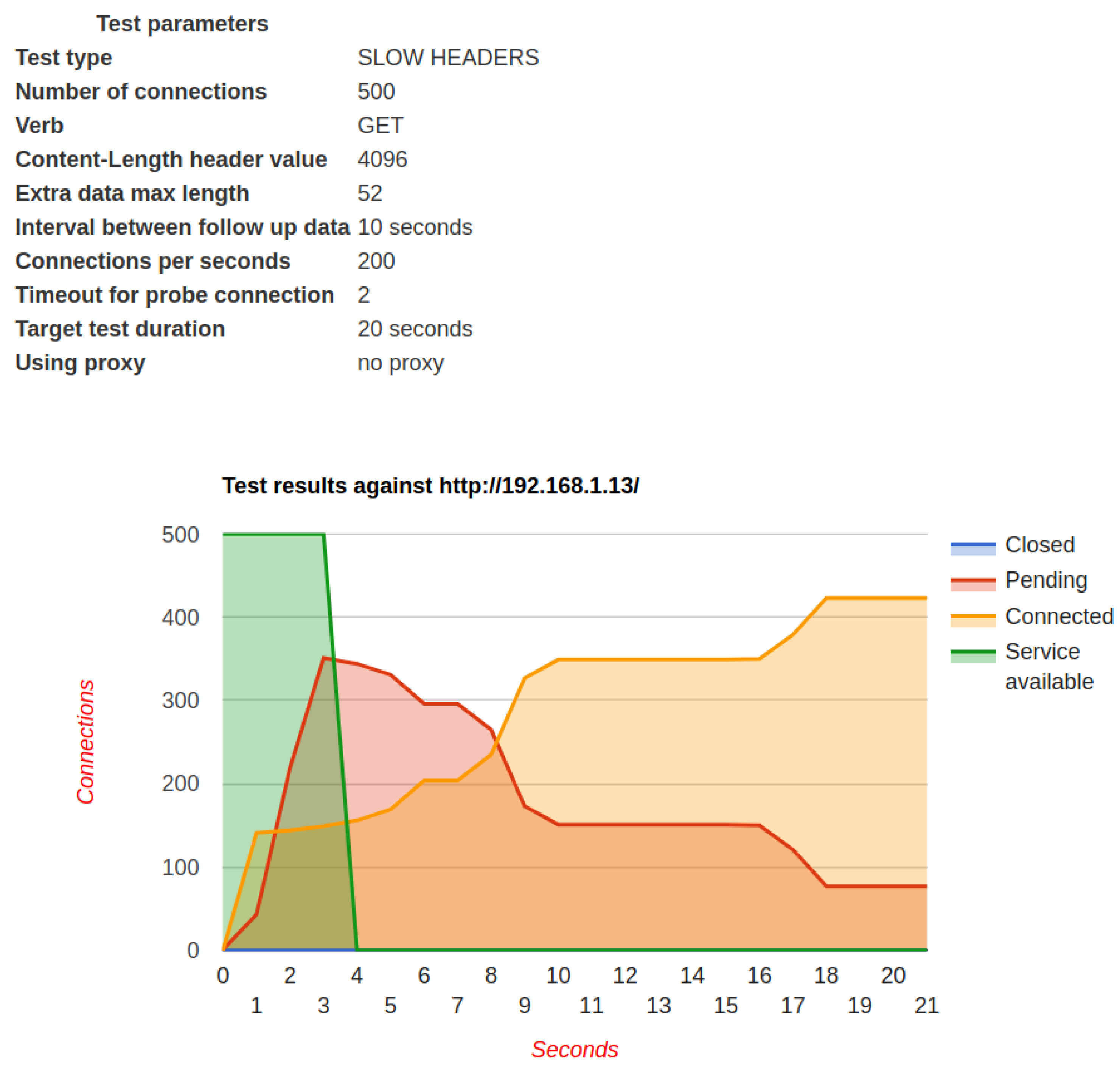

6.4. Test 4: Web Server Performance

- unixd_accept;

- default_handler;

- core_create_req;

- core_create_conn;

- core_pre_connection.

6.5. Test 5: Security

7. Discussion

8. Summary

8.1. Future Work

8.2. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Glossary: | |

| VHE | Virtual Host Extension |

| EL | Exception Level |

| BKPT | Breakpoint |

| TEE | Trusted Execution Environment |

| RPC | Remote Procedure Call |

| ISR | Interrupt Service Routine |

| ELF | Executable and Linking Format |

| SMC | System Monitor Call |

| SVC | System Supervisor Call |

| Ver | Verifier |

| Prv | Prover |

| MOSKG | Multiple Operating Systems Kernel Guard |

| DKOM | Dynamic Kernel Object Manipulation |

| ROP | Return Oriented Programming |

| KSP | Kernel Stack Protect |

| DOS | Denial Of Service |

| CFI | Control Flow Inspection |

| CPI | Control Pointer Integrity |

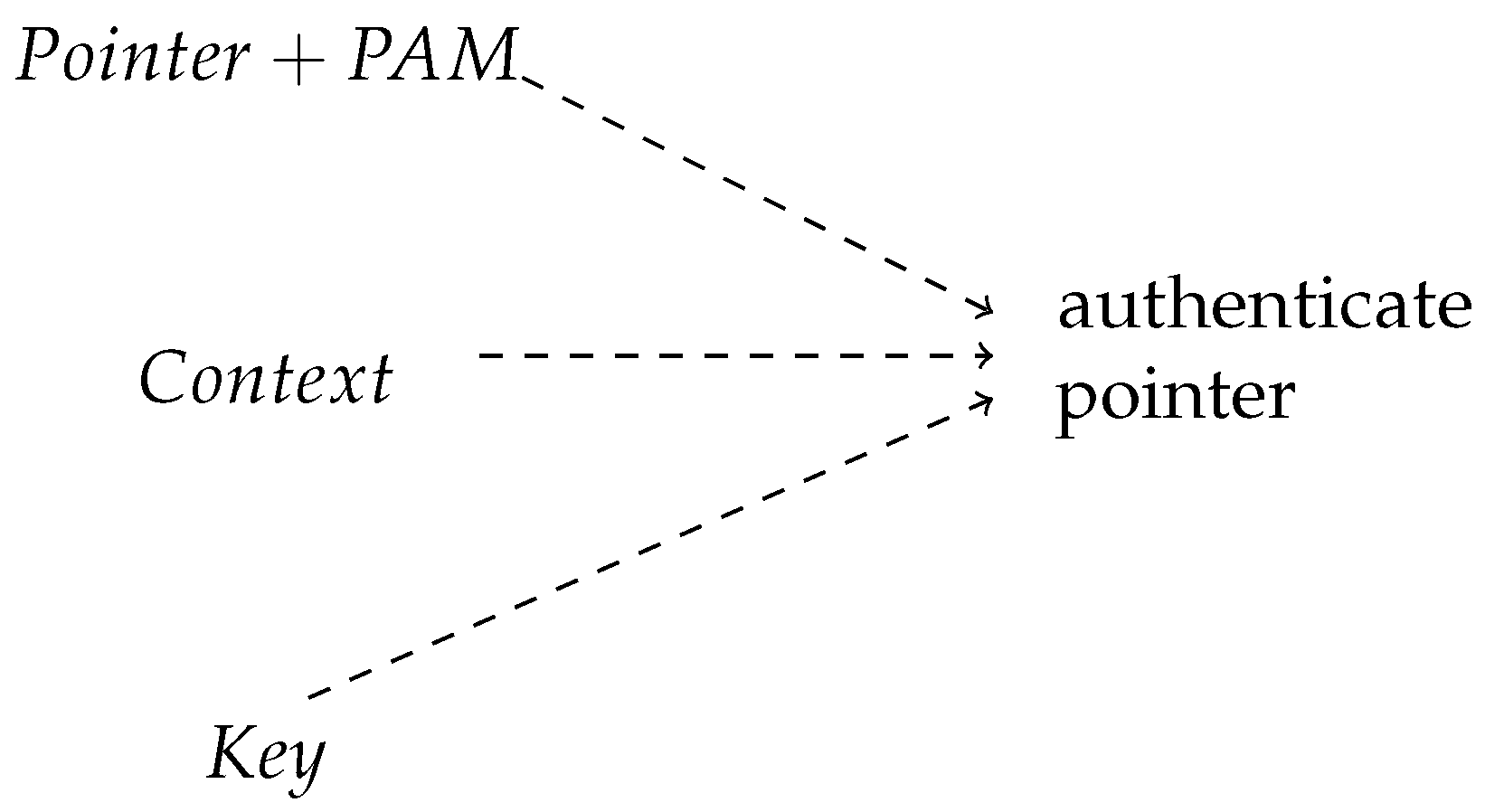

| PAC | Pointer Authentication Code |

| NOP | No Operation |

| TLS | Thread Local Storage |

| QSEE | Qualcomm Secure Execution Environment |

| OP-TEE | Open Portable Trusted Execution Environment |

| Component | Specification |
|---|---|
| SoC | Broadcom BCM2837 |
| CPU | 4 cores, ARM Cortex-A53, 1.2 GHz (clocked to 700 MHz) |
| RAM | 1 GB LPDDR2 (900 MHz) |
| Clock | 19.2 MHz |

| Measure | BRK Trap |
|---|---|
| Avg | 92 ns |
| StdDev | 41 ns |
| Max | 156 ns |
| Min | 52 ns |

| Test | Avg | Max | Min | Std Dev |
|---|---|---|---|---|
| No C-FLAT | 5188 | 5823 | 5055 | 241 |
| C-FLAT with NOPs | 37,097 | 37,237 | 36,979 | 92 |
| C-FLAT with BKPT | 148,056 | 154,086 | 146,994 | 2174 |

| Input | Test | Avg | Max | Min | Std Dev |
|---|---|---|---|---|---|
| Legal | No C-FLAT | 1628 | 1688 | 1606 | 31.8 |
| Legal | C-FLAT with BKPT | 2655 | 2795 | 2625 | 52 |
| Erroneous | No C-FLAT | 21.7 | 23 | 21 | 0.67 |
| Erroneous | C-FLAT with BKPT | 113 | 119 | 111 | 3 |

| SlowLoris | b | b | b | b | p | b | b | p | b |

| Browser | b | b | b | b | b | b | b | ||

| wget | b | b | b | b | b |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ben Yehuda, R.; Kiperberg, M.; Zaidenberg, N.J. Nanovised Control Flow Attestation. Appl. Sci. 2022, 12, 2669. https://doi.org/10.3390/app12052669
