The Application of Hyperspectral Remote Sensing Imagery (HRSI) for Weed Detection Analysis in Rice Fields: A Review

Abstract

1. Introduction

2. Methodology

3. Weeds in Malaysia’s Rice Field

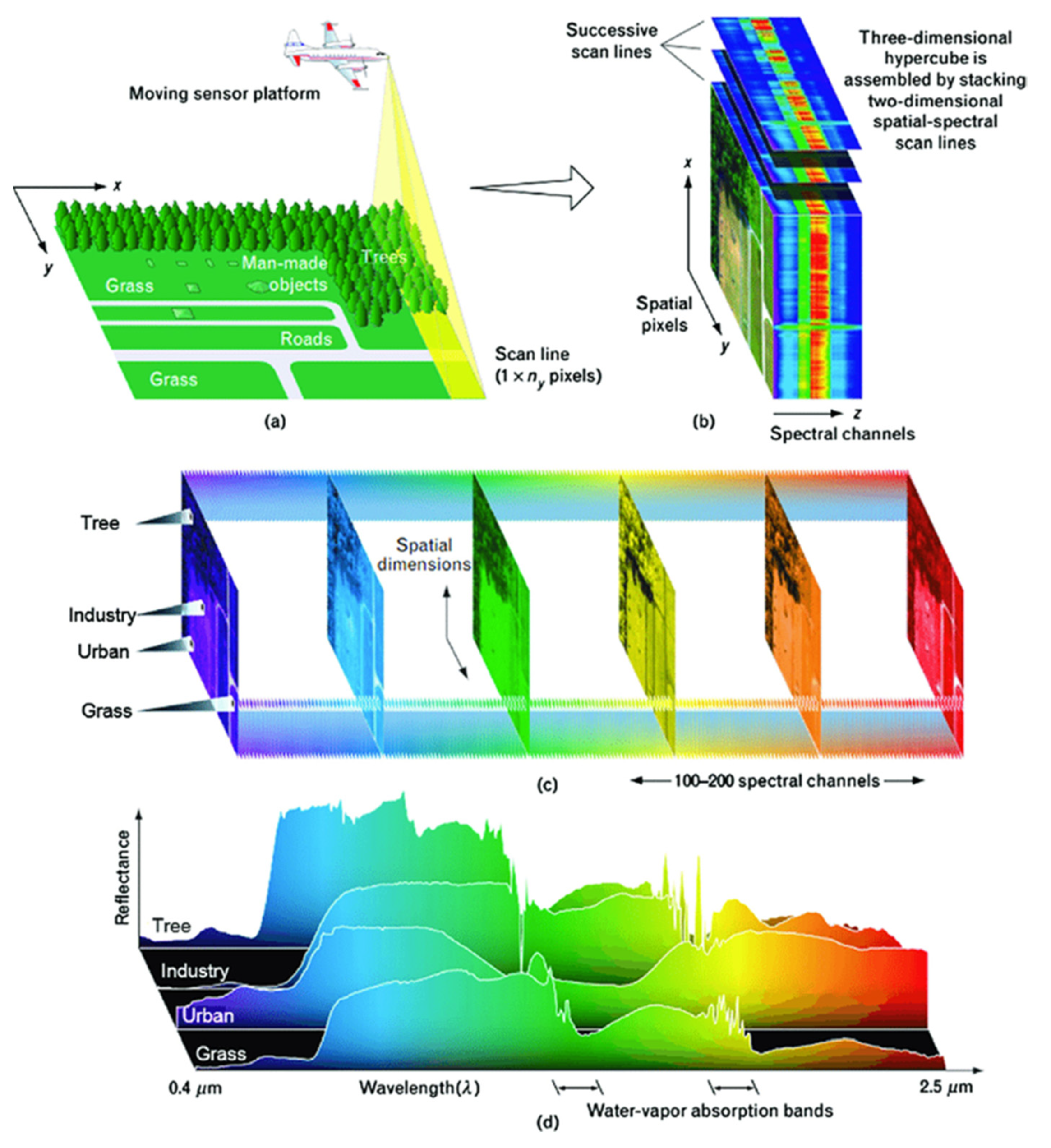

4. Hyperspectral Remote Sensing: A Brief Overview

5. Hyperspectral Remote Sensing Imagery (HRSI) Data Processing and Analysing

5.1. Data Preprocessing

5.2. Hyperspectral Image Classification

6. HRSI Application in Weed Detection Analysis

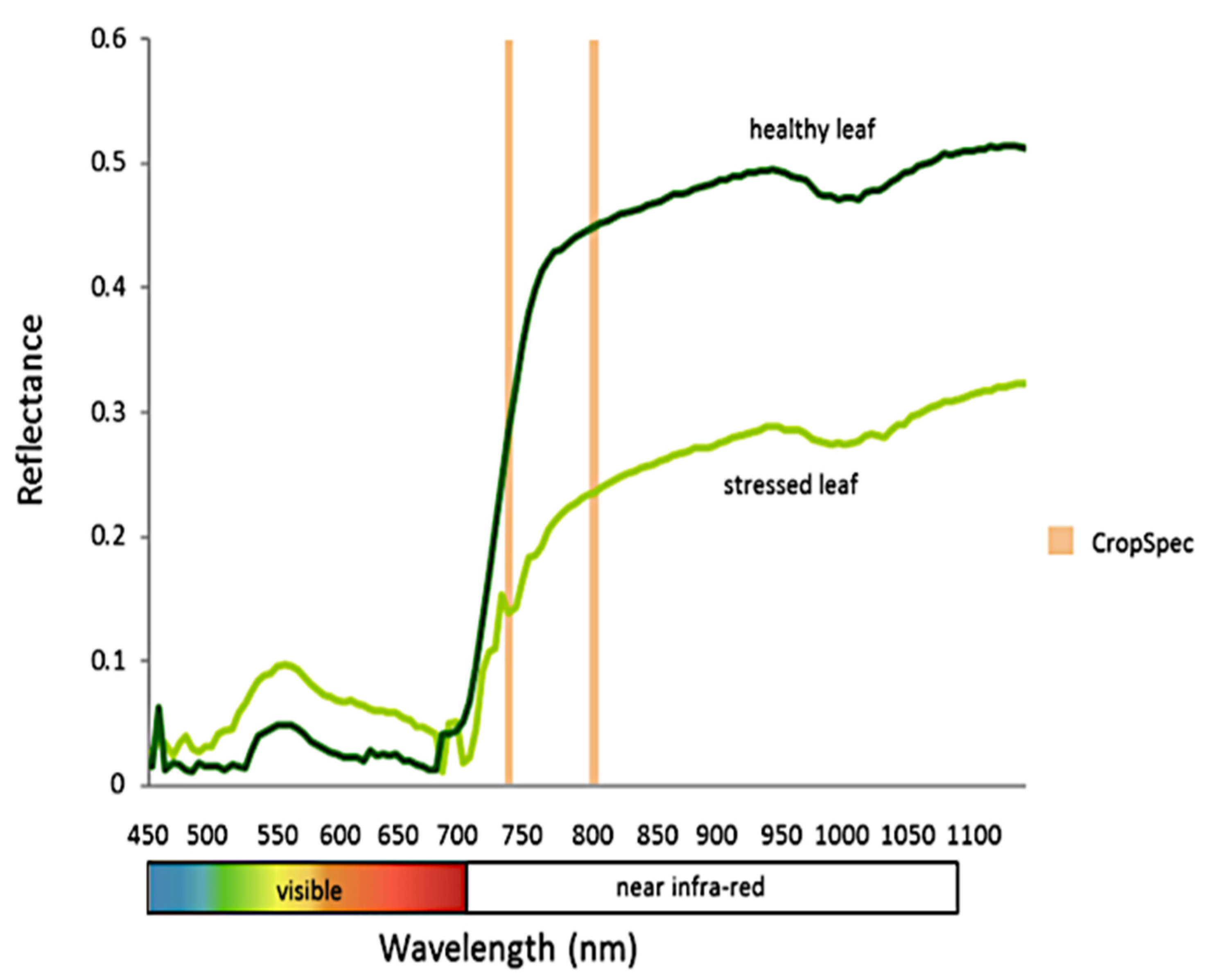

6.1. Weed Classification Using the Spectral Reflectance

6.2. Algorithms and Modelling for Weed Detection Analysis

7. Direction for Future Work and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Afzal, N.; Ahmad, S. Agricultural input use efficiency in Pakistan: Key issues and reform areas. Manag. Nat. Resour. Sustain. Future Agric. Res. Brief. 2009, 1, 1–12.

- Ali, H.H.; Peerzada, A.M.; Hanif, Z.; Hashim, S.; Chauhan, B.S. Weed management using crop competition in Pakistan: A review. Crop Prot. 2017, 95, 22–30.

- Hassan, A.A.G.; Ngah, I.; Applanaidu, S.D. Agricultural Transformation in Malaysia: The Role of Smallholders And Area Development. Development 2018, 15, 2.

- Dilipkumar, M.; Chuah, T.S.; Goh, S.S.; Sahid, I. Weed management issues, challenges, and opportunities in Malaysia. Crop Prot. 2020, 134, 104347.

- Chauhan, B.; Johnson, D.E. Row spacing and weed control timing affect yield of aerobic rice. Field Crop. Res. 2011, 121, 226–231.

- Kang, Y.; Khan, S.; Ma, X. Climate change impacts on crop yield, crop water productivity and food security–A review. Prog. Nat. Sci. 2009, 19, 1665–1674.

- Khan, M.A.; Marwat, K.B.; Umm-e-Kalsoom Hussain, Z.; Hashim, S.; Rab, A.; Nawab, K. Weed control effects on the wheat-pea intercropping. Pak. J. Bot. 2013, 45, 1743–1748.

- Hussain, S.; Khaliq, A.; Matloob, A.; Fahad, S.; Tanveer, A. Interference and economic threshold level of little seed canary grass in wheat under different sowing times. Environ. Sci. Pollut. Res. 2014, 22, 441–449.

- El Pebrian, D.; Ismail, M.I. The characteristics of the repair and maintenance costs distribution of rice combine harvester in Malaysian paddy fields. Agric. Eng. Int. CIGR J. 2019, 20, 132–138.

- Yusof, Z.M.; Misiran, M.; Baharin, N.F.; Yaacob, M.F.; Aziz, N.A.B.A.; Sanan, N.H.B. Projection of paddy production in Kedah Malaysia: A case study. Asian J. Adv. Agric. Res. 2019, 3, 1–6.

- Karim, R.S.; Man, A.B.; Sahid, I.B. Weed problems and their management in rice fields of Malaysia: An overview. Weed Biol. Manag. 2004, 4, 177–186.

- Chauhan, B.S. Weed Management in Direct Seeded Rice Systems; International Rice Research Institute: Los Banos, Philippines, 2012; p. 20.

- Hossain, K.; Timsina, J.; Johnson, D.E.; Gathala, M.K.; Krupnik, T.J. Multi-year weed community dynamics and rice yields as influenced by tillage, crop establishment, and weed control: Implications for rice-maize rotations in the eastern Gangetic plains. Crop Prot. 2020, 138, 105334.

- Singh, M.; Nagargade, M.; Tyagi, V. Ecologically sustainable integrated weed management in dry and irrigated direct-seeded rice. Adv. Plants Agric. Res. 2018, 8, 319–331.

- Moody, K.; De Datta, S.K. Integration of weed control practices for rice in tropical Asia. In Proceedings of the Workshop on Weed Control in Small Farms, Jakarta, Indonesia, 15–16 July 1977; pp. 37–47.

- Shekhawat, K.; Rathore, S.S.; Chauhan, B.S. Weed management in dry direct-seeded rice: A review on challenges and opportunities for sustainable rice production. Agronomy 2020, 10, 1264.

- Toriyama, K. (Ed.) Rice is Life: Scientific Perspectives for the 21st Century; International Rice Research Institute: Los Banos, Philippines; Japan International Research Center for Agricultural Sciences: Tsukuba, Japan, 2005.

- Dadashzadeh, M.; Abbaspour-Gilandeh, Y.; Mesri-Gundoshmian, T.; Sabzi, S.; Hernández-Hernández, J.L.; Hernández-Hernández, M.; Arribas, J.I. Weed Classification for Site-Specific Weed Management Using an Automated Stereo Computer-Vision Machine-Learning System in Rice Fields. Plants 2020, 9, 559.

- Paap, A.J. Development of an Optical Sensor for Real-Time Weed Detection Using Laser Based Spectroscopy. Ph.D. Dissertation, Edith Cowan University, Perth, Australia. Available online: https://ro.ecu.edu.au/cgi/viewcontent.cgi?referer=https://scholar.google.com/&httpsredir=1&article=2284&context=theses (accessed on 15 May 2021).

- Ali, H.H.; Tanveer, A.; Naeem, M.; Jamil, M.; Iqbal, M.; Javaid, M.M.; Kashif, M.S. Efficacy of pre-emergence herbicides in controlling Rhynchosia capitata, an emerging summer weed in Pakistan. Philipp. Agric. Sci. 2015, 98, 301–311.

- Kandhro, M.N.; Tunio, S.; Rajpar, I.; Chachar, Q. Allelopathic impact of sorghum and sunflower intercropping on weed management and yield enhancement in cotton. Sarhad J. Agric. 2014, 30, 311–318.

- Partel, V.; Kakarla, S.C.; Ampatzidis, Y. Development and evaluation of a low-cost and smart technology for precision weed management utilizing artificial intelligence. Comput. Electron. Agric. 2019, 157, 339–350.

- Huang, Y.; Lee, M.A.; Thomson, S.J.; Reddy, K.N. Ground-based hyperspectral remote sensing for weed management in crop production. Int. J. Agric. Biol. Eng. 2016, 9, 98–109.

- Yao, H.; Huang, Y. Remote sensing applications to precision farming. Remote Sens. Nat. Resour. 2013, 358–377.

- Hunter, J.E.; Gannon, T.W.; Richardson, R.J.; Yelverton, F.H.; Leon, R.G. Integration of remote-weed mapping and an autonomous spraying unmanned aerial vehicle for site-specific weed management. Pest Manag. Sci. 2019, 76, 1386–1392.

- Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sens. Environ. 2020, 236, 111402.

- Campbell, J.B.; Wynne, R.H. Introduction to Remote Sensing; Guilford Press: New York, NY, USA, 2011.

- Bioucas-Dias, J.M.; Plaza, A.; Camps-Valls, G.; Scheunders, P.; Nasrabadi, N.M.; Chanussot, J. Hyperspectral remote sensing data analysis and future challenges. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–36.

- Camps-Valls, G.; Tuia, D.; Gómez-Chova, L.; Jiménez, S.; Malo, J. Remote sensing image processing. Synth. Lect. Image Video Multimed. Process. 2011, 5, 1–192.

- Qian, S.-E. Optical Satellite Signal Processing and Enhancement; SPIE Press: Bellingham, WA, USA; Cardiff, Wales, 2013.

- Qian, S.E. Hyperspectral Satellites and System Design; CRC Press: Boca Raton, FL, USA, 2020.

- Govender, M.; Chetty, K.; Naiken, V.; Bulcock, H. A comparison of satellite hyperspectral and multispectral remote sensing imagery for improved classification and mapping of vegetation. Water SA 2019, 34, 147.

- Wendel, A. Hyperspectral Imaging from Ground Based Mobile Platforms and Applications in Precision Agriculture; School of Aerospace, Mechanical and Mechatronic Engineering, The University of Sydney: Sydney, Australia, 2018.

- Chen, Y.; Guerschman, J.; Cheng, Z.; Guo, L. Remote sensing for vegetation monitoring in carbon capture storage regions: A review. Appl. Energy 2019, 240, 312–326.

- Shaw, G.A.; Burke, H.K. Spectral imaging for remote sensing. Linc. Lab. J. 2003, 14, 3–28.

- Lu, B.; Dao, P.; Liu, J.; He, Y.; Shang, J. Recent Advances of Hyperspectral Imaging Technology and Applications in Agriculture. Remote Sens. 2020, 12, 2659.

- Kate, S.H.; Rocchini, D.; Neteler, M.; Nagendra, H. Benefits of hyperspectral remote sensing for tracking plant invasions. Divers. Distrib. 2011, 17, 381–392.

- Vorovencii, I. The hyperspectral sensors used in satellite and aerial remote sensing. Bull. Transilv. Univ. Brasov. For. Wood Ind. Agric. Food Engineering. Ser. II 2009, 2, 51.

- Lv, W.; Wang, X. Overview of Hyperspectral Image Classification. J. Sens. 2020, 2020, 4817234.

- Rosle, R.; Che’Ya, N.N.; Ang, Y.; Rahmat, F.; Wayayok, A.; Berahim, Z.; Omar, M.H. Weed Detection in Rice Fields Using Remote Sensing Technique: A Review. Appl. Sci. 2021, 11, 10701.

- Chang, C.I. Hyperspectral Data Processing: Algorithm Design and Analysis; Wiley-Interscience: Hoboken, NJ, USA, 2013.

- Park, B.; Lu, R. (Eds.) Hyperspectral Imaging Technology in Food and Agriculture; Springer: New York, NY, USA, 2015.

- Vidal, M.; Amigo, J.M. Pre-processing of hyperspectral images. Essential steps before image analysis. Chemom. Intell. Lab. Syst. 2012, 117, 138–148.

- Burger, J.; Geladi, P. Hyperspectral NIR image regression part I: Calibration and correction. J. Chemom. A J. Chemom. Soc. 2005, 19, 355–363.

- Ahmad, M.; Shabbir, S.; Raza, R.A.; Mazzara, M.; Distefano, S.; Khan, A.M. Hyperspectral Image Classification: Artifacts of Dimension Reduction on Hybrid CNN. arXiv 2021, arXiv:2101.10532, preprint.

- Basantia, N.C.; Nollet, L.M.; Kamruzzaman, M. (Eds.) Hyperspectral Imaging Analysis and Applications for Food Quality; CRC Press: Boca Raton, FL, USA, 2018.

- Tamilarasi, R.; Prabu, S. Application of Machine Learning Techniques for Hyperspectral Image Dimensionality: A Review. J. Crit. Rev. 2020, 7, 3499–3516.

- Sawant, S.S.; Prabukumar, M. Semi-supervised techniques based hyper-spectral image classification: A survey. In Proceedings of the 2017 Innovations in Power and Advanced Computing Technologies (i-PACT), Vellore, India, 21–22 April 2017; pp. 1–8.

- Freitas, S.; Almeida, C.; Silva, H.; Almeida, J.; Silva, E. Supervised classification for hyperspectral imaging in UAV maritime target detection. In Proceedings of the 2018 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Torres Vedras, Portugal, 25–27 April 2018; pp. 84–90.

- Romaszewski, M.; Głomb, P.; Cholewa, M. Semi-supervised hyperspectral classification from a small number of training samples using a co-training approach. ISPRS J. Photogramm. Remote Sens. 2016, 121, 60–76.

- Su, W.H. Advanced Machine Learning in Point Spectroscopy, RGB-and hyperspectral-imaging for automatic discriminations of crops and weeds: A review. Smart Cities 2020, 3, 767–792.

- Sarvini, T.; Sneha, T.; Sukanya Gowthami, S.G.; Sushmitha, S.; Kumaraswamy, R. Performance comparison of weed detection algorithms. In Proceedings of the 2019 International Conference on Communication and Signal Processing (ICCSP), Melmaruvathur, India, 4–6 April 2019; pp. 0843–0847.

- Li, Y.; Al-Sarayreh, M.; Irie, K.; Hackell, D.; Bourdot, G.; Reis, M.M.; Ghamkhar, K. Identification of Weeds Based on Hyperspectral Imaging and Machine Learning. Front. Plant Sci. 2021, 11, 2324.

- Atzberger, C. Advances in Remote Sensing of Agriculture: Context Description, Existing Operational Monitoring Systems and Major Information Needs. Remote Sens. 2013, 5, 949–981.

- Pandey, P.C.; Balzter, H.; Srivastava, P.K.; Petropoulos, G.P.; Bhattacharya, B. Future perspectives and challenges in hyperspectral remote sensing. In Hyperspectral Remote Sensing; Elsevier: Amsterdam, The Netherlands, 2020; pp. 429–439.

- Feng, Y.-Z.; Sun, D.-W. Application of Hyperspectral Imaging in Food Safety Inspection and Control: A Review. Crit. Rev. Food Sci. Nutr. 2012, 52, 1039–1058.

- Singh, V.; Rana, A.; Bishop, M.; Filippi, A.M.; Cope, D.; Rajan, N.; Bagavathiannan, M. Unmanned aircraft systems for precision weed detection and management: Prospects and challenges. Adv. Agron. 2020, 159, 93–134.

- Pott, L.P.; Amado, T.J.; Schwalbert, R.A.; Sebem, E.; Jugulam, M.; Ciampitti, I.A. Pre-planting weed detection based on ground field spectral data. Pest Manag. Sci. 2020, 76, 1173–1182.

- Nursyazyla, S.; Syarifah, N.I.S.S.A.; Zaid, R.; Nik, N.C.; Muhammad, H.M.R. A Review on Hyperspectral Remote Sensing for Weed Detection Analysis in Agricultural Crop. Southeast Asian Agricultural Engineering Student Chapter Annual Regional Convention (2021: Sarawak) E-PROCEEDING. 2021. Available online: https://btu.upm.edu.my/upload/dokumen/20211105082434E-Proceeding_ARC2021_eISBN.pdf (accessed on 19 January 2022).

- Bradter, U.; O’Connell, J.; Kunin, W.E.; Boffey, C.W.; Ellis, R.J.; Benton, T.G. Classifying grass-dominated habitats from remotely sensed data: The influence of spectral resolution, acquisition time and the vegetation classification system on accuracy and thematic resolution. Sci. Total Environ. 2019, 711, 134584.

- Che’Ya, N.; Dunwoody, E.; Gupta, M. Assessment of Weed Classification Using Hyperspectral Reflectance and Optimal Multispectral UAV Imagery. Agronomy 2021, 11, 1435.

- Zhang, G.; Xu, T.; Tian, Y.; Xu, H.; Song, J.; Lan, Y. Assessment of rice leaf blast severity using hyperspectral imaging during late vegetative growth. Australas. Plant Pathol. 2020, 49, 571–578.

- Thenkabail, P.S.; Lyon, J.G.; Huete, A. Hyperspectral remote sensing of vegetation and agricultural crops: Knowledge gain and knowledge gap after 40 years of research. In Hyperspectral Remote Sensing of Vegetation; Thenkabail, P.S., Lyon, J.G., Huete, A., Eds.; CRC Press: Boca Raton, FL, USA, 2010; p. 705.

- Casa, R.; Pascucci, S.; Pignatti, S.; Palombo, A.; Nanni, U.; Harfouche, A.; Fantozzi, P. UAV-based hyperspectral imaging for weed discrimination in maize. In Precision Agriculture’19; Wageningen Academic Publishers: Wageningen, The Netherlands, 2019; pp. 24–35.

- Martin, M.P.; Barreto, L.; Riano, D.; Fernandez-Quintanilla, C.; Vaughan, P. Assessing the potential of hyperspectral remote sensing for the discrimination of grassweeds in winter cereal crops. Int. J. Remote Sens. 2011, 32, 49–67.

- Lass, L.W.; Prather, T.; Glenn, N.; Weber, K.; Mundt, J.T.; Pettingill, J. A review of remote sensing of invasive weeds and example of the early detection of spotted knapweed (Centaurea maculosa) and babysbreath (Gypsophila paniculata) with a hyperspectral sensor. Weed Sci. 2005, 53, 242–251.

- Scherrer, B.; Sheppard, J.; Jha, P.; Shaw, J.A. Hyperspectral imaging and neural networks to classify herbicide-resistant weeds. J. Appl. Remote Sens. 2019, 13, 044516.

- Zhang, Y.; Gao, J.; Cen, H.; Lu, Y.; Yu, X.; He, Y.; Pieters, J.G. Automated spectral feature extraction from hyperspectral images to differentiate weedy rice and barnyard grass from a rice crop. Comput. Electron. Agric. 2019, 159, 42–49.

- Tang, C.; Wei, X.; Wang, B.; Prasad, S. A Cross-Border Detection Algorithm for Agricultural Spraying UAV; American Society of Agricultural and Biological Engineers: St. Joseph, MI, USA, 2018.

- Huang, H.; Deng, J.; Lan, Y.; Yang, A.; Zhang, L.; Wen, S.; Zhang, H.; Zhang, Y.; Deng, Y. Detection of Helminthosporium Leaf Blotch Disease Based on UAV Imagery. Appl. Sci. 2019, 9, 558.

- Felegari, S.; Sharifi, A.; Moravej, K.; Amin, M.; Golchin, A.; Muzirafuti, A.; Tariq, A.; Zhao, N. Integration of Sentinel 1 and Sentinel 2 Satellite Images for Crop Mapping. Appl. Sci. 2021, 11, 10104.

- Hennessy, A.; Clarke, K.; Lewis, M. Hyperspectral classification of plants: A review of waveband selection generalisability. Remote Sens. 2020, 12, 113.

- Shannon, D.K.; Clay, D.E.; Kitchen, N.R. Precision Agriculture Basics; John Wiley & Sons: Hoboken, NJ, USA, 2020; Volume 176.

- Lan, Y.; Huang, Z.; Deng, X.; Zhu, Z.; Huang, H.; Zheng, Z.; Lian, B.; Zeng, G.; Tong, Z. Comparison of machine learning methods for citrus greening detection on UAV multispectral images. Comput. Electron. Agric. 2020, 171, 105234.

- Nguyen, M.L.; Ciesielski, V.; Song, A. Rice leaf detection with genetic programming. In Proceedings of the 2013 IEEE Congress on Evolutionary Computation, Cancun, Mexico, 20–23 June 2013; pp. 1146–1153.

- Pérez-Ortiz, M.; Gutiérrez, P.A.; Peña, J.M.; Torres-Sánchez, J.; López-Granados, F.; Hervás-Martínez, C. Machine learning paradigms for weed mapping via unmanned aerial vehicles. In Proceedings of the 2016 IEEE Symposium Series on Computational Intelligence (SSCI), Athens, Greece, 6–9 December 2016; pp. 1–8.

- Liakos, K.G.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine learning in agriculture: A review. Sensors 2018, 18, 2674.

- Zheng, Y.; Zhu, Q.; Huang, M.; Guo, Y.; Qin, J. Maize and weed classification using color indices with support vector data description in outdoor fields. Comput. Electron. Agric. 2017, 141, 215–222.

- Kamath, R.; Balachandra, M.; Prabhu, S. Paddy crop and weed discrimination: A multiple classifier system approach. Int. J. Agron. 2020, 2020, 6474536.

- Mehra, L.; Cowger, C.; Gross, K.; Ojiambo, P.S. Predicting Pre-planting Risk of Stagonospora nodorum blotch in Winter Wheat Using Machine Learning Models. Front. Plant Sci. 2016, 7, 390.

- Chen, Y.; Wu, Z.; Zhao, B.; Fan, C.; Shi, S. Weed and Corn Seedling Detection in Field Based on Multi Feature Fusion and Support Vector Machine. Sensors 2020, 21, 212.

- Chou, J.J.; Chen, C.P.; Yeh, J.T. Crop identification with wavelet packet analysis and weighted Bayesian distance. Comput. Electron. Agric. 2007, 57, 88–98.

- Bakhshipour, A.; Zareiforoush, H. Development of a fuzzy model for differentiating peanut plant from broadleaf weeds using image features. Plant Methods 2020, 16, 153.

- Bakhshipour, A.; Jafari, A.; Nassiri, S.M.; Zare, D. Weed segmentation using texture features extracted from wavelet sub-images. Biosyst. Eng. 2017, 157, 1–12.

- Tang, L.; Tian, L.; Steward, B.L. Classification of broadleaf and grass weeds using Gabor wavelets and an artificial neural network. Trans. ASAE 2003, 46, 1247.

- Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Chen, T. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

- Yu, J.; Sharpe, S.M.; Schumann, A.W.; Boyd, N.S. Deep learning for image-based weed detection in turfgrass. Eur. J. Agron. 2019, 104, 78–84.

- Alom, M.Z.; Taha, T.M.; Yakopcic, C.; Westberg, S.; Sidike, P.; Nasrin, M.S.; Hasan, M.; Van Essen, B.C.; Awwal, A.A.S.; Asari, V.K. A State-of-the-Art Survey on Deep Learning Theory and Architectures. Electronics 2019, 8, 292.

- Hasan, A.S.M.M.; Sohel, F.; Diepeveen, D.; Laga, H.; Jones, M.G. A survey of deep learning techniques for weed detection from images. Comput. Electron. Agric. 2021, 184, 106067.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Hosseini, M.P.; Lu, S.; Kamaraj, K.; Slowikowski, A.; Venkatesh, H.C. Deep learning architectures. In Deep Learning: Concepts and Architectures; Springer: Cham, Switzerland, 2020; pp. 1–24.

- Bah, M.D.; Hafiane, A.; Canals, R. Deep Learning with Unsupervised Data Labeling for Weed Detection in Line Crops in UAV Images. Remote Sens. 2018, 10, 1690.

- Lee, S.H.; Chan, C.S.; Mayo, S.J.; Remagnino, P. How deep learning extracts and learns leaf features for plant classification. Pattern Recognit. 2017, 71, 1–13.

| Grassy Weeds | Sedges | Broadleaf Weeds |
|---|---|---|
| Digitaria setigera | Cyperus iria | Commelina benghalensis |
| Digitaria sanguinalis | Cyperus difformis | Caesulia axillaris |
| Digitaria ciliaris | Cyperus rotundus | Eclipta prostrata |
| Echinochloa colonum | Fimbristylis miliacea | Ipomoea aquatica |
| Echinochloa crus-galli | | Ludwigia octovalvis |
| Eleusine indica | | Ludwigia adscendens |
| Ischaemum rugosum | | Monochoria vaginalis |
| Leptochloa chinensis | | Sphenoclea zeylanica |
| Oryza sativa | | |
| Paspalum | | |

| Irrigated Transplanting | Extensive Direct Seeding | Intensive Direct Seeding |
|---|---|---|
| Grasses | | |
| Isachne globosa, Leersia hexandra | Echinochloa crus-galli complex, Leptochloa chinensis, Ischaemum rugosum, Oryza sativa (weedy rice) | E. crus-galli, L. chinensis, I. rugosum, O. sativa (weedy rice) |
| Broadleaf weeds | | |
| Limnocharis flava, Monochoria vaginalis, M. minuta, Sphenoclea zeylanica | L. flava, M. vaginalis, L. hyssopifolia, M. minuta | L. flavaᵃ, S. guyanensisᵇ, Sphenoclea zeylanicaᵇ, M. crenataᵇ, Limnophila erectaᵃ |
| Sedges | | |
| Scirpus grossus | Cyperus iria, Fimbristylis miliacea | C. iria, F. miliaceaᵇ, C. difformis |

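| Parameters | Satellites | Airplanes | Helicopters | Fixed-Wing UAVs | Multi-Rotor UAVs | Close-Range Platforms |
|---|---|---|---|---|---|---|
| Operational altitude | 400–700 km | 1–20 km | 100 m–2 km | <150 m | <150 m | <10 m |
| Spatial coverage | 42 km × 7.7 km | ~100 km² | ~10 km² | ~5 km² | ~0.5 km² | ~0.005 km² |
| Spatial resolution | 20–60 m | 1–20 m | 0.1–1 m | 0.01–0.5 m | 0.01–0.5 m | 0.0001–0.01 m |
| Temporal resolution | Days to weeks | Depends on flight operations (hours to days) | Depends on flight operations (hours to days) | Depends on flight operations (hours to days) | Depends on flight operations (hours to days) | Depends on flight operations (hours to days) |
| Flexibility | Low (fixed by repeating cycles) | Medium (depends on availability of aviation company) | Medium (depends on availability of aviation company) | High | High | High |
| Operational complexity | Low (final data provided to users) | Medium (depends on users or vendors) | Medium (depends on users or vendors) | High (operated by users, who set up the hardware and software) | High (operated by users, who set up the hardware and software) | High (operated by users, who set up the hardware and software) |
| Applicable scales | Regional–global | Landscape–regional | Landscape–regional | Canopy–landscape | Canopy–landscape | Leaf–canopy |
| Major limiting factors | Weather | Unfavourable flight height/speed, unstable illumination conditions | Unfavourable flight height/speed, unstable illumination conditions | Short battery endurance, flight regulations | Short battery endurance, flight regulations | Platform design and operation |
| Image acquisition cost | Low to medium | High (typically need to hire an aviation company to fly) | High (typically need to hire an aviation company to fly) | Large (due to area coverage) | Large (due to area coverage) | Large (due to area coverage) |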

| Types of Sensors | Producer | Number of Bands | Spectral Range (μm) |
|---|---|---|---|
| Satellite-mounted hyperspectral sensors | | | |
| FTHSI on MightySat II | Air Force Research (OH, USA) | 256 | 0.35–1.05 |
| Hyperion on EO-1 | NASA Goddard Space Flight Center (Greenbelt, MD, USA) | 242 | 0.40–2.50 |
| Aircraft-mounted hyperspectral sensors | | | |
| AVIRIS (airborne visible infrared imaging spectrometer) | NASA Jet Propulsion Lab. (Pasadena, CA, USA) | 224 | 0.40–2.50 |
| HYDICE (hyperspectral digital imagery collection experiment) | Naval Research Lab (Washington, DC, USA) | 210 | 0.40–2.50 |
| PROBE-1 | Earth Search Sciences Inc. (Kalispell, MT, USA) | 128 | 0.40–2.50 |
| CASI (compact airborne spectrographic imager) | ITRES Research Limited (Calgary, AB, Canada) | Over 22 | 0.40–1.00 |
| HyMap | Integrated Spectronics | 100 to 200 | Visible to thermal infrared |
| EPS-H (environmental protection system) | GER Corporation | VIS/NIR (76), SWIR1 (32), SWIR2 (32), TIR (12) | VIS/NIR (0.43–1.05), SWIR1 (1.50–1.80), SWIR2 (2.00–2.50), TIR (8–12.50) |
| DAIS 7915 (digital airborne imaging spectrometer) | GER Corporation (geophysical and environmental research imaging spectrometer) | VIS/NIR (32), SWIR1 (8), SWIR2 (32), MIR (1), TIR (12) | VIS/NIR (0.43–1.05), SWIR1 (1.50–1.80), SWIR2 (2.00–2.50), MIR (3.00–5.00), TIR (8.70–12.30) |
| DAIS 21115 (digital airborne imaging spectrometer) | GER Corporation | VIS/NIR (76), SWIR1 (64), SWIR2 (64), MIR (1), TIR (6) | VIS/NIR (0.40–1.00), SWIR1 (1.00–1.80), SWIR2 (2.00–2.50), MIR (3.00–5.00), TIR (8.00–12.00) |
| AISA (airborne imaging spectrometer) | Spectral Imaging | Over 288 | 0.43–1.00 |

| No. | Crop | Weed | Model | Optimal Accuracy | Reference |
|---|---|---|---|---|---|
| 1. | Rice | Barnyard grass, weedy rice | RF, SVM | 100% | Zhang et al. (2019) |
| 2. | Maize | Caltrop, curly dock, barnyard grass, Ipomoea spp., Polymeria spp. | SVM, LDA | >98.35% | Wendel et al. (2016) |
| 3. | Soybean, cotton | Ryegrass | LDA | >90% | Huang et al. (2016) |
| 4. | Wheat | Broadleaf weeds, grass weeds | PLSDA | 85% | Hermann et al. (2013) |
| 5. | Broadbean, wheat | Cruciferous weeds | ANN | 100% | De Castro et al. (2012) |
| 6. | Sugar beet | Wild buckwheat, field horsetail, green foxtail, chickweed | LDA | 97.3% | Okamoto et al. (2007) |
| 7. | Wheat | Musk thistle | SVM | 91% | Mirik et al. (2013) |
| 8. | Maize | C. arvensis | RF | >90% | Gao et al. (2018) |

| Crop/Weed Mixture | Reflectance | Wavelength (nm) |
|---|---|---|
| Wheat system | | |
| Wheat (Triticum aestivum) | 0.42–0.45 | 720–850 |
| Broadleaved weeds | 0.6–0.8 | 720–850 |
| Grass weeds | 0.55–0.6 | 720–850 |
| Wheat stubbles | 0.2–0.3 | 1425–2250 |
| Wheat stubbles heavily grazed | 0.3–0.4 | 1425–2250 |
| Cruciferous weeds | 0.65–0.7 | 750–900 |
| Wheat | 0.4–0.5 | 720–900 |
| Soybean system | | |
| Soybean (Glycine max) | 0.85–0.90 | 750–900 |
| Prickly sida (Sida spinosa) | 0.78–0.82 | 750–900 |
| Pitted morning glory (Ipomoea lacunosa) | 0.6–0.65 | 750–900 |
| Sicklepod (Cassia obtusifolia) | 0.52–0.55 | 750–900 |
| Cotton system | | |
| Cotton (Gossypium hirsutum) | 0.48–0.52 | 400–600 |
| Cogongrass (Imperata cylindrica) | 0.5–0.6 | 400–750 |
| Johnsongrass (Sorghum halepense) | 0.45–0.52 | 400–750 |
| Sicklepod (C. obtusifolia) | 0.21–0.3 | 400–800 |
| Sorghum system | | |
| Sorghum (Sorghum bicolor) | 0.55–0.6 | 720–1000 |
| Common lambsquarters (Chenopodium album) | 0.39–0.41 | 720–1000 |
| Pigweed (Amaranthus spp.) | 0.48–0.52 | 720–1000 |
| Barnyardgrass (Echinochloa spp.) | 0.35 | 720–1000 |
| Mallow (Malva spp.) | 0.42–0.44 | 720–1000 |
| Purple nutsedge (Cyperus rotundus) | 0.28–0.30 | 720–1000 |
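
To illustrate how reflectance ranges such as those tabulated above might be used, the following is a minimal sketch that looks up which sorghum-system classes are consistent with a single measured near-infrared reflectance value. The range values are copied from the sorghum rows of the table; the names `SORGHUM_SYSTEM_NIR`, `candidates`, and `nearest` are hypothetical, and this range lookup is only an illustration, not a method taken from the reviewed studies, which generally classify full spectra with models such as SVM, RF, or LDA.

```python
# Reported reflectance ranges for the sorghum system, copied from the table.
# A single reported value (barnyardgrass) is stored as a degenerate range.
SORGHUM_SYSTEM_NIR = {
    "Sorghum (Sorghum bicolor)": (0.55, 0.60),
    "Common lambsquarters (Chenopodium album)": (0.39, 0.41),
    "Pigweed (Amaranthus spp.)": (0.48, 0.52),
    "Barnyardgrass (Echinochloa spp.)": (0.35, 0.35),
    "Mallow (Malva spp.)": (0.42, 0.44),
    "Purple nutsedge (Cyperus rotundus)": (0.28, 0.30),
}


def candidates(reflectance, ranges=SORGHUM_SYSTEM_NIR, tolerance=0.0):
    """Return the classes whose reported range contains the reflectance.

    `tolerance` widens every range on both sides; it is an illustrative
    parameter, not a value from the source.
    """
    return [
        name
        for name, (low, high) in ranges.items()
        if low - tolerance <= reflectance <= high + tolerance
    ]


def nearest(reflectance, ranges=SORGHUM_SYSTEM_NIR):
    """Return the class whose range midpoint is closest to the reflectance."""
    return min(
        ranges,
        key=lambda name: abs(sum(ranges[name]) / 2 - reflectance),
    )


if __name__ == "__main__":
    for value in (0.57, 0.50, 0.46):
        print(value, candidates(value), nearest(value))
```

A value that falls between two reported ranges (for example 0.46) matches no class exactly, which is one reason a single-band lookup like this is fragile and why the studies summarised in this review rely on many bands at once.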

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sulaiman, N.; Che’Ya, N.N.; Mohd Roslim, M.H.; Juraimi, A.S.; Mohd Noor, N.; Fazlil Ilahi, W.F. The Application of Hyperspectral Remote Sensing Imagery (HRSI) for Weed Detection Analysis in Rice Fields: A Review. Appl. Sci. 2022, 12, 2570. https://doi.org/10.3390/app12052570