Abstract

The applications of computer networks are increasingly extensive, and networks can be remotely controlled and monitored. Cyber hackers can exploit vulnerabilities and steal crucial data or conduct remote surveillance through malicious programs. The frequency of malware attacks is increasing, and malicious programs are constantly being updated. Therefore, more effective malware detection techniques are being developed. In this paper, a convolutional fuzzy neural network (CFNN) based on feature fusion and the Taguchi method is proposed for malware image classification; this network is referred to as FT-CFNN. Four fusion methods are proposed for the FT-CFNN, namely global max pooling fusion, global average pooling fusion, channel global max pooling fusion, and channel global average pooling fusion. Data are fed into this network architecture and then passed through two convolutional layers and two max pooling layers. The feature fusion layer is used to reduce the feature size and integrate the network information. Finally, a fuzzy neural network is used for classification. In addition, the Taguchi method is used to determine optimal parameter combinations to improve classification accuracy. This study used the Malimg dataset to evaluate the accuracy of the proposed classification method. The accuracy values exhibited by the proposed FT-CFNN, proposed CFNN, and original LeNet model in malware family classification were 98.61%, 98.13%, and 96.68%, respectively.

1. Introduction

With the rapid development of information technology, the progress of network technology has made our lives more convenient. However, malware can penetrate information technology devices through loopholes in program security, which can cause system damage, limited network bandwidth, and the theft of crucial files. The frequency of malware attacks has been increasing. For example, antivirus company Kaspersky Lab detected 69,277,289 unique malicious objects in 2016 [1]. McAfee Labs reported that 670 million malware samples were detected in 2017 [2]. According to Malwarebytes’ annual malware report, more than 50 million cyber threats were detected separately in 2018 and 2019 [3,4]. The 2020 Trend Micro Cybersecurity Report stated that 119,000 cyberattacks occur every minute [5]. Because of the wide variety of malware and the increasing number of malware attacks, various malware classification methods have been proposed by researchers.

Malware analysis can be broadly categorized into static analysis and dynamic analysis. Static analysis involves parsing and extracting the features of malicious programs without executing code. Common static analysis is a signature-based method that involves searching a signature database for matching signatures to determine whether the program is malware. A local signature database is required to store signatures extracted from malware by experts. The signature database must be regularly manually updated to effectively prevent new malware attacks. However, this approach has major limitations. Researchers and malware authors have demonstrated that malware can evade signature-based detection through new program encryption or obfuscation techniques [6,7,8,9]. Chen et al. [10] proposed the use of four easily extractable small-scale features to classify malware families and used machine learning methods to search for the best model and hyperparameters for each feature and parameter combination. Kazuki et al. [11] developed a malware analysis system that conducts control traffic analysis, antisink editing, feature extraction, and similarity calculation. Peyman [12] proposed the use of heuristic-based malware detection and simultaneous byte analysis based on static features.

In dynamic analysis, malicious programs (such as virtual machines or sandboxes) are executed in a controlled environment. Malware detection results are obtained from the collection and analysis of the system operating behavior, network packets, file storage, and download items [13]. Mohaisen et al. [14] proposed a malware classification technology called the automated malware and labeling scheme (AMAL), which is based on automated behavior analysis. AMAL primarily uses AutoMal and MaLabel to monitor the file system and network behavior. MaLabel classifies similar malware through extracted behavioral monitoring. Support vector machine (SVM), decision tree, and K-nearest neighbor (KNN) algorithms are used to classify specific malware families. Galal et al. [15] proposed a dynamic analysis method based on malware behavior. In this method, application programming interface (API) hooking technology is used to determine the parameters of the malware and collect relevant information; from the extracted API features, parameters, and sequences, the unique malware behavior is inferred. The decision tree, random forest, and SVM algorithms are then used to classify malware. Kolosnjaji et al. [16] used a recurrent neural network and convolutional neural network (CNN) for feature extraction and an n-gram for malware detection. Fahade and Wei [17] proposed the use of the longest common substring and longest common subsequence for character matching to detect malware. Damodaran et al. [18] used numerous malware samples for the comparison of static and dynamic malware analysis.

The aforementioned static and dynamic analyses have many limitations. Although static analysis is fast and safe, it cannot be used to classify unknown malware. Dynamic analysis can be used for accurately identifying the code and determining the functionality of a malicious program; however, this method might affect the application of the computer. Therefore, some researchers have advocated the conversion of malware from binary files to grayscale images and then utilizing various algorithmic techniques to classify these malware images [19]. In contrast to static and dynamic analysis, malware image classification does not require strong malware domain knowledge, and it bypasses the need for malware fine-tuning to overcome obfuscation techniques. Malware image classification thus allows for fast classification applicable to various malware types [20].

Machine learning technology has been widely used to address the malware detection problem. Narayanan et al. [21] used principal component analysis to extract features, and artificial neural networks (ANN), KNN, and SVM were then used to complete the classification of malware images. Garcia and Muga II [22] used random forest to classify malware images, and they reported satisfactory classification results. Gao et al. [23] proposed a malware classification framework based on malware visualization and semisupervised learning. This framework mainly includes three parts: the parts for malware visualization, feature extraction, and classification. Feature fusion methods are used to fuse local and global features to save time and improve feature correlation. Nataraj et al. [24] proposed a classification method based on standard image features. This method is simple and efficient and requires neither disassembly nor code execution. The above-mentioned methods require the user to define the features in advance.

In recent years, many scholars have conducted in-depth research with deep learning approaches on malware detection. Lin et al. [25] used LeNet for malware family classification. They used convolution operations to automatically extract malware features. Kalash et al. [26] proposed a deep learning architecture to classify malware samples. Qi et al. [27] used an adversarial learning framework for unsupervised domain adaptation to enable gradient boosting decision trees to learn domain-invariant features and to mitigate performance degradation in the target domain. Because CNNs have too many parameters and require high-performance hardware, Lin and Jhang [28] employed convolution operations with fuzzy neural network to reduce the number of network parameters in breast cancer classification and obtained good performance. Because CNNs require numerous parameters and these parameters are difficult to determine, the trial-and-error method is widely used for parameter selection. To reduce the time and cost of experiments, the Taguchi method [29] can be used to statistically optimize parameter selection by using an orthogonal array of influencing factors and their levels.

In this paper, a convolutional fuzzy neural network (CFNN) based on feature fusion and the Taguchi method (FT-CFNN) is proposed for malware image classification. The FT-CFNN comprises two parts: a CFNN and Taguchi-method-based optimal parameter combinations. The CFNN comprises convolutional, pooling, feature fusion, and fuzzy neural network (FNN) layers. Four feature fusion methods, namely global max pooling (GMP) fusion, global average pooling (GAP) fusion, channel global max pooling (CGMP) fusion, and channel global average pooling (CGAP) fusion, are proposed to reduce the feature size and integrate the network information. An FNN is used for classification. In addition, the Taguchi method is used to determine the affecting factors and the best parameter combination for achieving optimal accuracy. In this study, we focus on improving deep learning methods that require a large number of learning parameters and improving classification accuracy by optimizing parameter combinations. The major contributions of this study are as follows:

- An efficient FT-CFNN is proposed for malware image classification.

- Four feature fusion methods, namely GMP fusion, GAP fusion, CGMP fusion, and CGMP fusion, are proposed to reduce the feature size and integrate network information.

- The size of the adjustable parameters can be reduced by replacing a fully connected network with an FNN.

- To reduce the number of experiments required for the various parameter combinations, the Taguchi method is used to determine the affecting factors and levels.

The rest of this paper is organized as follows. Section 2 describes the structure of the proposed FT-CFNN and the Taguchi method. Section 3 presents the experimental results obtained when using the proposed FT-CFNN. Finally, Section 4 details the conclusions of this study and recommendations for future research.

2. Proposed FT-CFNN

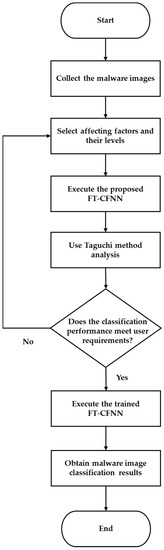

In this section, the classification method of the proposed FT-CFNN is described in detail. Figure 1 shows a flowchart detailing the process of malware image classification with the aforementioned network. First, the Taguchi method is used to select the affecting factors and their levels. Next, the training image set is input into the FT-CFNN to implement the Taguchi experiment. The network then determines whether the experimental results meet the requirements of users. If the results do not meet the requirements of users, the affecting factor and its level are reselected. If the results meet the requirements of users, the experiment is concluded. Finally, the malware images are classified into their corresponding categories.

Figure 1.

Flowchart of malware image classification with the FT-CFNN.

2.1. Convolutional Fuzzy Neural Network

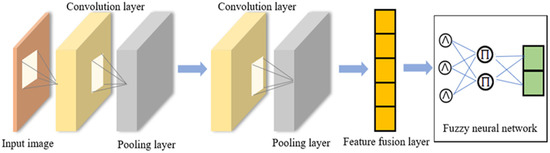

The architecture of the CFNN of the FT-CFNN is displayed in Figure 2. It comprises convolution, pooling, feature fusion, and FNN layers. Two convolution layers and two pooling layers are used in this network. Four feature fusion methods, namely GMP fusion, GAP fusion, CGMP fusion, and CGMP fusion, are proposed to reduce the feature size and integrate the network information. To reduce the size of adjustable parameters, an FNN is used to replace a fully connected network.

Figure 2.

Architecture of the CFNN used in the FT-CFNN.

2.1.1. Convolution Layers

The convolutional layers of the CFNN contain multiple convolution kernels. A convolution kernel is used to extract the features of an image by moving it from left to right and from top to bottom through a sliding action. The inner product operation is performed on overlapping positions to obtain the feature values for these points.

2.1.2. Pooling Layers

The main function of a pooling layer is to reduce the size of input image features and retain only important features. Commonly used pooling operations are of two types: maximum pooling and average pooling. Maximum pooling is used to determine the maximum value in a convolutional kernel. Average pooling is used to compute the average value in a convolution kernel.

2.1.3. Feature Fusion Layer

In this study, four feature fusion methods (GMP fusion, GAP fusion, CGMP fusion, and CGMP fusion) were used (Figure 3). Figure 3a illustrates the global pooling fusion method. First, two feature maps are completed, and each feature map (width and height) is then fused separately. Finally, a feature point is obtained. Figure 3b illustrates channel global pooling fusion. All channels between feature maps are fused (depth) in this method. The operations used in the aforementioned methods are of two types: maximum value operations and average value operations.

Figure 3.

Feature fusion methods: (a) GMP fusion and GAP fusion as well as (b) CGMP fusion and CGAP fusion.

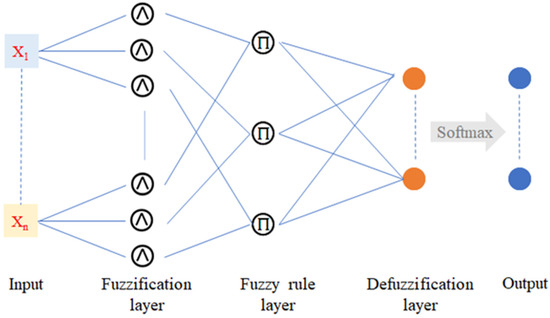

2.1.4. FNN Layer

An FNN combines the logical reasoning of humans and the learning abilities of neural networks. FNNs have been successfully used in classification systems [30,31]. Figure 4 illustrates the architecture of an FNN, which primarily contains a fuzzification layer, fuzzy rule layer, and defuzzification layer. Fuzzy rules are inferred using IF–THEN representations. These rules are expressed as follows:

Figure 4.

Architecture of an FNN.

In the aforementioned rule, is the input, is the membership function, and is the output of the jth rule.

In the fuzzification layer, the Gaussian membership function used by each input is fuzzified to obtain the corresponding membership values. The relevant formula is as follows:

where is the input, is the mean value, and is the deviation.

Subsequently, the AND operation is completed for the attribution values corresponding to each input to obtain the excitation intensity of each fuzzy rule. The most commonly used AND operations are minimum and product. To facilitate the derivation of the backpropagation algorithm, the product operation is used in this study. The excitation intensity of each fuzzy rule is calculated as follows:

where is the excitation value of each membership function.

Finally, each rule is input into the defuzzification layer to obtain a crisp output. The relevant formula is as follows:

where is the kth output, is the excitation intensity of the jth fuzzy rule, r is the number of fuzzy rules, and is the weight of the jth fuzzy rule and kth output.

2.2. Taguchi Method

The Taguchi method is a statistical method for experimental design. This method is a low-cost, high-efficiency, and high-quality engineering method. In a complete factorial experiment design, the number of experiments to be conducted increases as the number of factors increases. Therefore, an orthogonal array (OA) is developed to analyze the signal-to-noise (S/N) ratio of various parameter combinations by conducting the least number of experiments. The S/N ratio is selected as the indicator of quality optimization and is used to analyze the experimental effect of each factor and level to achieve quality optimization. In this study, the measured values constitute the experimental evaluation index. When the measured value is higher, the quality loss is lower. The relevant formula is as follows:

Because orthogonal tables are reliable, they are widely used in several fields [32]. The Taguchi method reduces the required number of experiments to be conducted and the time required to complete an experiment, thereby reducing engineering costs and enabling a reliable impact factor to be obtained. From the various types of orthogonal tables, an appropriate orthogonal table is selected according to the number of factors and the number of levels. The notation used for orthogonal tables is , where L represents the abbreviation of the orthogonal table (a Latin square), x represents the number of experiments, y represents the number of levels, and z represents the maximum number of factors that can be accommodated.

3. Experimental Results

We designed experiments to evaluate the accuracy of malware image classification with the developed FT-CFNN. In this section, the source of the dataset is stated, and the selection of FT-CFNN parameters and the network settings are then described. The Taguchi method was used to determine the optimal parameter combination. The experimental results of the proposed FT-CNN were then compared with those obtained using other methods.

3.1. Dataset

The Malimg dataset, which is a public database obtained from Virus-Share.com, was adopted in this study. Currently, samples on approximately 47 million types of viruses are available on this website. A total of 9339 images of 25 types of viruses [33] were used in this study (Table 1).

Table 1.

Numbers of images of various types of viruses adopted in this study [33].

3.2. Experimental Results Obtained with the CFNN

In the adopted CFNN, four fusion methods (GMP fusion, GAP fusion, CGMP fusion, and CGAP fusion) are used to perform malware image classification. This network contains two convolutional layers and two pooling layers, and an FNN is used in it to replace a fully connected network. Therefore, the CFNN has a small architecture, a small number of parameters, and a high operation speed. Table 2 presents the learning parameter settings of the CFNN, and Table 3 presents the network architecture parameter settings of the CFNN. The test loops of TensorFlow and Keras were used for deep learning in this study. The parameters of the CFNN were the same in the experiments conducted using the four fusion methods. The adopted dataset contained 9339 malware images, 80% of which were randomly used as the training set, and the remaining 20% of the images were used as the test set. In the first convolution and pooling layers, the filter size was 32, the size of the convolution kernel was 3 × 3, and the stride was 2. In the second convolution and pooling layers, the filter size was 64, the size of the convolution kernel was 3 × 3, and the stride was 2. The feature fusion layer was used to reduce the dimensions of the feature layer. Finally, the FNN was used for classification.

Table 2.

Learning parameter settings of the CFNN.

Table 3.

Network architecture parameter settings of the CFNN.

Accuracy was used as the evaluation index of the proposed network. This parameter is determined as follows:

where TP, FP, TN, and FN represent the numbers of true positives, false positives, true negatives, and false negatives, respectively. Table 4 presents the accuracy achieved with the four fusion methods. The accuracy values achieved by the CFNN with the GMP, GAP, CGMP, and CGAP fusion methods were 96.68%, 97.86%, 98.13%, and 97.64%, respectively. The experimental results indicate that the proposed CFNN achieved the highest accuracy with the CGAP fusion method.

Table 4.

Accuracy of the CFNN with various fusion methods.

3.3. Experimental Results Obtained with the FT-CFNN

As presented in Table 4, the accuracy obtained with CGAP fusion was higher than that achieved with the other three fusion methods. Because the network architecture parameters could not be easily determined, the Taguchi method was used to determine the impact factor and optimize the parameter combinations. Therefore, the developed FT-CFNN uses the CGAP fusion method to apply the Taguchi method.

In the conducted experiment, six factors were selected: Conv1-Filter (F1), Conv1-Kernel size (K1), Conv1-Padding (P1), Conv2-Filter (F2), Conv2-Kernel size (K2), and Conv2-Padding (P2). Four of these factors (F1, K1, F2, and K2) are three-level factors, and the other two factors (P1 and P2) are two-level factors. The affecting factors and their levels are presented in Table 5. According to the total number of factors and levels in the experiment, we used the L36 orthogonal table, which is composed of different factors and levels.

Table 5.

Affecting factors and their levels.

The selected three- and two-level factors required 22 × 34 = 324 traditional experiments. After the Taguchi method was applied, only 36 experiments were required. Therefore, the Taguchi method reduced the number of experiments required. To ensure the stability of the experiments, three experiments were performed for each parameter combination in the OA, and the average of the three accuracy values was used to calculate the S/N ratio, as presented in Table 6.

Table 6.

Accuracy values and S/N ratios for different parameter combinations.

The S/N ratio for each factor and level combination was obtained from the results of the 36 experiments based on larger-the-better characteristic. Table 7 presents the difference of S/N ratio, significance ranking, optimal level, and optimal parameter combination for each factor. If the difference value of S/N ratio for each factor was higher, the corresponding factor had a stronger influence. The significance ranking represents the ranking of the influence degree of each factor. A higher difference of S/N ratio for P2 indicated that a factor had a stronger influence (Table 7). The final optimal parameter combination was as follows: F1 = 16, K1 = 5, P1 = 0, F2 = 16, K2 = 6, and P2 = 0.

Table 7.

Results obtained for each factor.

The results of analysis of variance (ANOVA) are presented in Table 8, and these results indicate whether the screened experimental factors are significant with respect to the experimental results. In Table 8, the degree of freedom is the number of levels minus 1, SS is the error sum of squares, the size of the F value determines the significance of the factors affecting the overall experiment, and the contribution degree represents the proportion of each factor to the overall experimental results. Table 8 indicates that the factor P2 (i.e., the padding in second layer) has the strongest influence on and highest contribution to the experiments.

Table 8.

Results of ANOVA.

Table 9 presents the optimal parameter combinations obtained using the Taguchi method. These parameters were set for the FT-CFNN, which was then used for malware image classification. The accuracy and sensitivity values obtained with the CFNN and FT-CFNN are presented in Table 10. Experimental results show that the accuracy and sensitivity of the proposed FT-CFNN with CGAP fusion method are 98.61% and 97.96%, which are 0.48% and 0.45% higher than CFNN, respectively.

Table 9.

Optimal parameter combination for the FT-CFNN.

Table 10.

Accuracy and sensitivity values obtained with the CFNN and FT-CFNN.

3.4. Comparison of the Results Obtained with Various Methods

To verify the effectiveness of the FT-CFNN, we compared its accuracy with the accuracy values reported in other studies [22,23,24,25,26,27] conducted on the Malimg dataset. In this experiment, Garcia and Muga II [22], Gao et al. [23], and Nataraj et al. [24] classified malware images using traditional machine learning methods such as random forest, KNN, and logistic regression. Although these methods require only a small number of learning parameters and obtain good malware image classification results (Table 11), the user is required to define the malware image features in advance. Lin et al. [25], Kalash et al. [26], and Qi et al. [27] used deep learning technology to obtain good malware image classification results. However, these methods require a large number of learning parameters (Table 11). The experimental results indicated that the proposed FT-CFNN is more accurate than the methods used in the previous relevant studies [22,23,24,25,26,27] (Table 11). Furthermore, the proposed FT-CFNN requires only 13,609 parameters and achieves 98.61% accuracy.

Table 11.

Accuracy values obtained by various methods and the number of parameters required by these methods.

4. Conclusions

In this paper, an FT-CFNN is proposed for malware image classification. This network comprises two parts: a CFNN and Taguchi-method-based optimal parameter combinations. Four feature fusion methods (GMP, GAP, CGMP, and CGMP) are proposed for the CFNN to reduce the feature size and integrate network information. An FNN is used to replace a fully connected network to reduce the size of adjustable parameters. The proposed CFNN achieved accuracy values of 96.68%, 97.86%, 98.13%, and 97.64% with the GMP, GAP, CGMP, and CGMP fusion methods, respectively, for the Malimg dataset. The experimental results indicated that the CFNN achieved the highest accuracy with the CGAP fusion method. To reduce the number of experiments for parameter combinations, the Taguchi method was used to determine the affecting factors and levels. The experimental results indicated that the accuracy of the FT-CFNN, proposed CFNN, and original LeNet model in malware family classification was 98.61%, 98.13%, and 96.68%, respectively.

Because the Malimg dataset only contains 9339 images, generative adversarial networks can be used in the future to increase the number of images and thus improve stability. Indicators such as accuracy, sensitivity, and specificity have been widely used by scholars for comparing the classification and identification performance of deep learning networks. However, these parameters have different values in each network learning training step; therefore, the use of a single value or index average to assess a network’s image classification performance might lead to inaccurate assessments. Therefore, future research should address this problem by using statistical methods to define a performance recognition index. Furthermore, in this study, we only use the existing malware database for analysis and classification, and do not apply the proposed method to actual cyberattack problems. Therefore, we will also apply the proposed method to practically any malicious programs that steal crucial data in future research.

Author Contributions

Conceptualization, C.-J.L. and M.-S.H.; Methodology, C.-J.L., M.-S.H. and C.-L.L.; Software, C.-J.L. and M.-S.H.; Data Curation, C.-J.L. and C.-L.L.; Writing—Original Draft Preparation, C.-J.L. and C.-L.L.; Supervision, C.-J.L.; Funding Acquisition, C.-J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science and Technology of the Republic of China, grant number MOST 110-2221-E-167-031-MY2.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kaspersky Report: Kaspersky Security Bulletin: Overall Statistics for 2016. Available online: https://media.kasperskycontenthub.com/wp-content/uploads/sites/43/2018/03/07182326/Kaspersky_Security_Bulletin_2016_Statistics_ENG.pdf (accessed on 2 October 2022).

- McAfee Report: McAfee Labs Threats Report in June 2017. Available online: https://www.mcafee.com/enterprise/en-us/assets/reports/rp-quarterly-threats-jun-2017.pdf (accessed on 15 August 2022).

- Gao, X.; Hu, H.; Shan, C.; Liu, B.; Niu, Z.; Xie, H. Malware classification for the cloud via semi-supervised transfer learning. J. Inf. Secur. Appl. 2020, 55, 102661. [Google Scholar] [CrossRef]

- Malwarebytes Labs. 2020 State of Malware Report. Available online: https://www.malwarebytes.com/resources/files/2020/02/2020_state-of-malware-report.pdf (accessed on 7 August 2022).

- A Constant State of Flux: Trend Micro 2020 Annual Cybersecurity Report. Available online: https://www.trendmicro.com/zh_tw/about/newsroom/press-releases/2021/2021-03-16.html (accessed on 22 July 2022).

- Yan, J.; Yong, Q.; Rao, Q. Detecting malware with an ensemble method based on deep neural network. Secur. Commun. Netw. 2018, 2018, 7247095. [Google Scholar] [CrossRef]

- Moser, A.; Kruegel, C.; Kirda, E. Limits of static analysis for malware detection. In Proceedings of the IEEE Twenty-Third Annual Computer Security Applications Conference (ACSAC 2007), Miami Beach, FL, USA, 10–14 December 2007; pp. 421–430. [Google Scholar]

- Zakeri, M.; Daneshgar, F.F.; Abbaspour, M. A static heuristic approach to detecting malware targets. Secur. Commun. Netw. 2015, 8, 3015–3027. [Google Scholar] [CrossRef]

- Alsmadi, T.; Alqudah, N. A Survey on malware detection techniques. In Proceedings of the 2021 International Conference on Information Technology (ICIT), Amman, Jordan, 14–15 July 2021; pp. 371–376. [Google Scholar]

- Chen, Z.; Brophy, E.; Ward, T. Malware classification using static disassembly and machine learning. arXiv 2021. [Google Scholar] [CrossRef]

- Iwamoto, K.; Wasaki, K. Malware classification based on extracted API sequences using static analysis. In Proceedings of the Asian Internet Engineeering Conference, Bangkok, Thailand, 14 November 2012; pp. 31–38. [Google Scholar]

- Khodamoradi, P.; Fazlali, M.; Mardukhi, F.; Nosrati, M. Heuristic metamorphic malware detection based on statistics of assembly instructions using classification algorithms. In Proceedings of the 2015 18th CSI International Symposium on Computer Architecture and Digital Systems (CADS), Tehran, Iran, 7–8 October 2015; pp. 1–6. [Google Scholar]

- Willems, C.; Holz, T.; Freiling, F. Toward automated dynamic malware analysis using cwsandbox. IEEE Secur. Priv. 2007, 5, 32–39. [Google Scholar] [CrossRef]

- Mohaisen, A.; Alrawi, O.; Mohaisen, M. AMAL: High-fidelity, behavior-based automated malware analysis and classification. Comput. Secur. 2015, 52, 251–266. [Google Scholar] [CrossRef]

- Galal, H.S.; Mahdy, Y.B.; Atiea, M.A. Behavior-based features model for malware detection. J. Comput. Virol. Hacking Tech. 2015, 12, 59–67. [Google Scholar] [CrossRef]

- Kolosnjaji, B.; Zarras, A.; Webster, G.; Eckert, C. Deep learning for classification of malware system call sequences. In Proceedings of the 29th Australasian Joint Conference on Artificial Intelligence (AI), Hobart, Australia, 5–9 December 2016; pp. 137–149. [Google Scholar]

- Mira, F.; Huang, W. Performance Evaluation of String Based Malware Detection Methods. In Proceedings of the 2018 24th International Conference on Automation and Computing (ICAC), Newcastle Upon Tyne, UK, 6–7 September 2018; pp. 1–6. [Google Scholar]

- Damodaran, A.; Troia, F.D.; Visaggio, C.A.; Austin, T.H.; Stamp, M. A comparison of static dynamic and hybrid analysis for malware detection. J. Comput. Virol. Hacking Tech. 2015, 13, 1–12. [Google Scholar] [CrossRef]

- Han, K.S.; Kang, B.J.; Im, E.G. Malware analysis using visualized image matrices. Sci. World J. 2014, 1. [Google Scholar] [CrossRef] [PubMed]

- Azab, A.; Khasawneh, M. Msic: Malware spectrogram image classification. IEEE Access 2020, 8, 102007–102021. [Google Scholar] [CrossRef]

- Narayanan, B.N.; Djaneye-Boundjou, O.; Kebede, T.M. Performance analysis of machine learning and pattern recognition algorithms for Malware classification. In Proceedings of the 2016 IEEE National Aerospace and Electronics Conference (NAECON) and Ohio Innovation Summit (OIS), Dayton, OH, USA, 16 February 2017; pp. 338–342. [Google Scholar]

- Garcia, F.C.C.; Muga, F.P. Random forest for malware classification. arXiv 2016. [Google Scholar] [CrossRef]

- Gao, T.; Zhao, L.; Li, X.; Chen, W. Malware detection based on semi-supervised learning with malware visualization. Math. Biosci. Eng. 2021, 18, 5995–6011. [Google Scholar] [CrossRef] [PubMed]

- Nataraj, L.; Karthikeyan, S.; Jacob, G.; Manjunath, B.S. Malware images: Visualization and automatic classification. In Proceedings of the 8th International Symposium on Visualization for Cyber Security, Article No. 4, New York, NY, USA, 20 July 2011; pp. 1–7. [Google Scholar]

- Lin, C.J.; Lin, X.Y.; Jhang, J.Y. Malware classification using a Taguchi-based deep learning network. Sens. Mater. 2022, 34, 3569–3580. [Google Scholar] [CrossRef]

- Kalash, M.; Rochan, M.; Mohammed, N.; Bruce, N.D.B.; Wang, Y.; Iqbal, F. Malware classification with deep convolutional neural networks. In Proceedings of the 2018 9th IFIP International Conference on New Technologies, Mobility and Security (NTMS), Paris, France, 26–28 February 2018; pp. 1–5. [Google Scholar]

- Qi, P.; Wang, W.; Zhu, L.; Ng, S.K. Unsupervised domain adaptation for static malware detection based on gradient boosting trees. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management (CIKM ’21), Queensland, Australia, 1–5 February 2021; pp. 1457–1466. [Google Scholar]

- Lin, C.J.; Jhang, J.Y. Intelligent traffic-monitoring system based on YOLO and convolutional fuzzy neural networks. IEEE Access 2022, 10, 14120–14133. [Google Scholar] [CrossRef]

- Rezania, A.; Atouei, S.A.; Rosendahl, L. Critical parameters in integration of thermoelectric generators and phase change materials by numerical and Taguchi methods. Mater. Today Energy 2020, 16, 100376. [Google Scholar] [CrossRef]

- Shalaginov, A.; Grini, L.S.; Franke, K. Understanding neuro-fuzzy on a class of multinomial malware detection problems. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 684–691. [Google Scholar]

- Shihabudheen, K.V.; Pillai, G.N. Recent advances in neuro-fuzzy system: A survey. Knowl. Based Syst. 2018, 152, 136–162. [Google Scholar] [CrossRef]

- Lin, C.J.; Li, Y.C. Lung nodule classification using Taguchi-based convolutional neural networks for computer tomography images. Electronics 2020, 29, 1066. [Google Scholar] [CrossRef]

- Verma, V.; Muttoo, S.K.; Singh, V.B. Multiclass malware classification via first- and second-order texture statistics. Comput. Secur. 2002, 97, 101895. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).