Implementation and Evaluation of Dynamic Task Allocation for Human–Robot Collaboration in Assembly

Abstract

1. Introduction

1.1. Human–Robot Collaboration

1.1.1. Challenges for Introducing Collaborative Robots

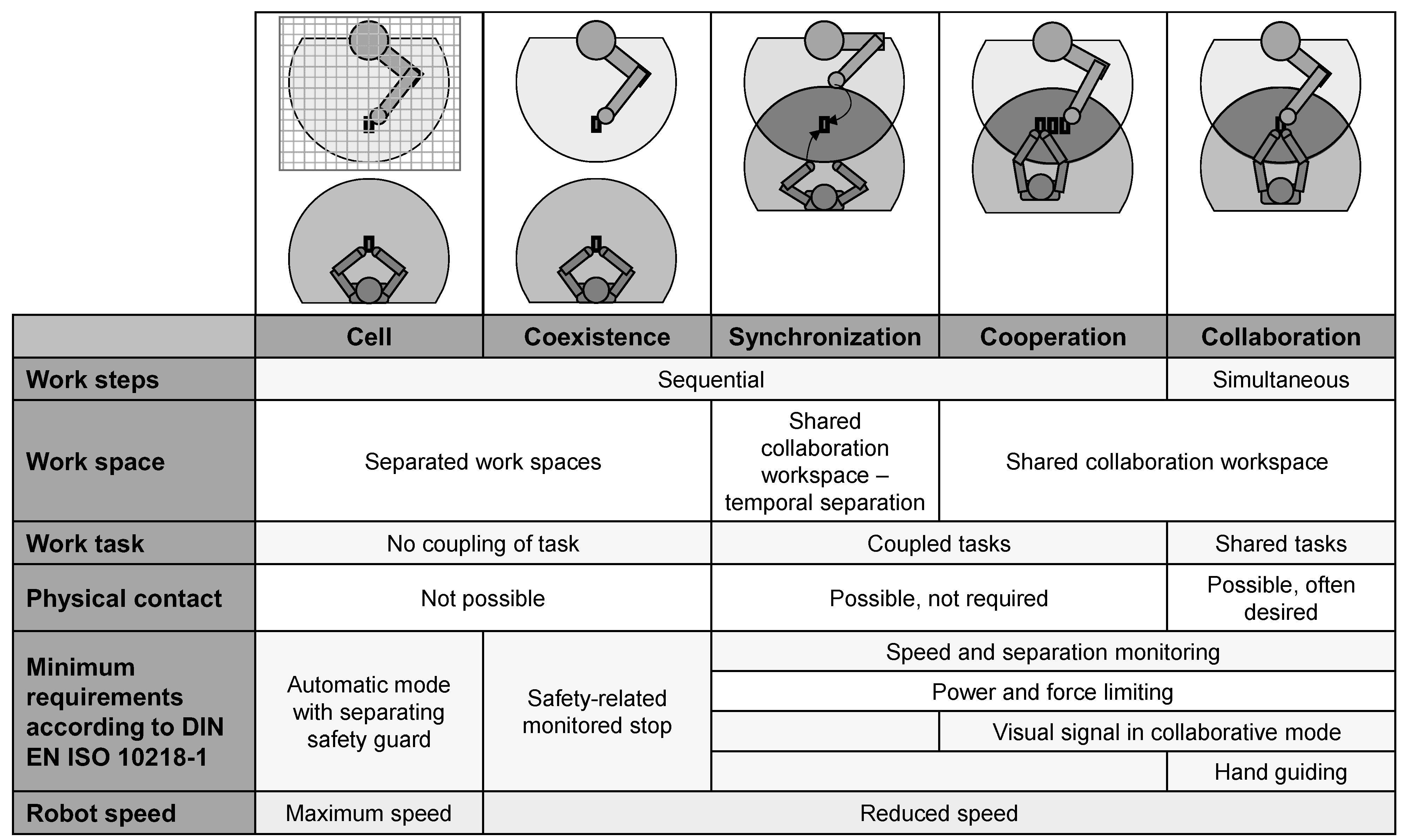

1.1.2. Classification of Cooperation Levels between Humans and Robots

1.2. Task Allocation for Human–Robot Collaboration in Assembly

1.3. Scope and Contributions of the Article

2. Materials and Methods

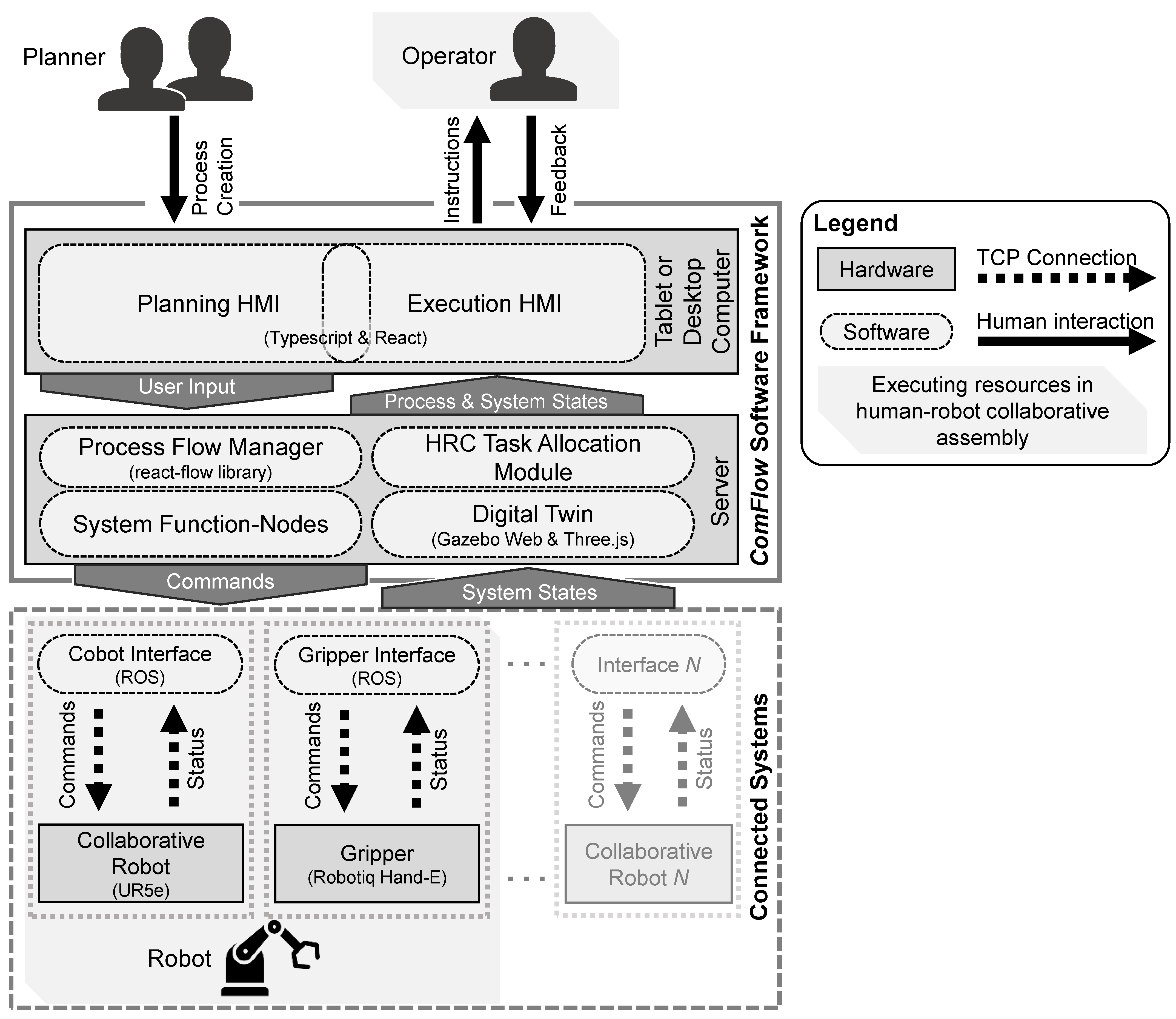

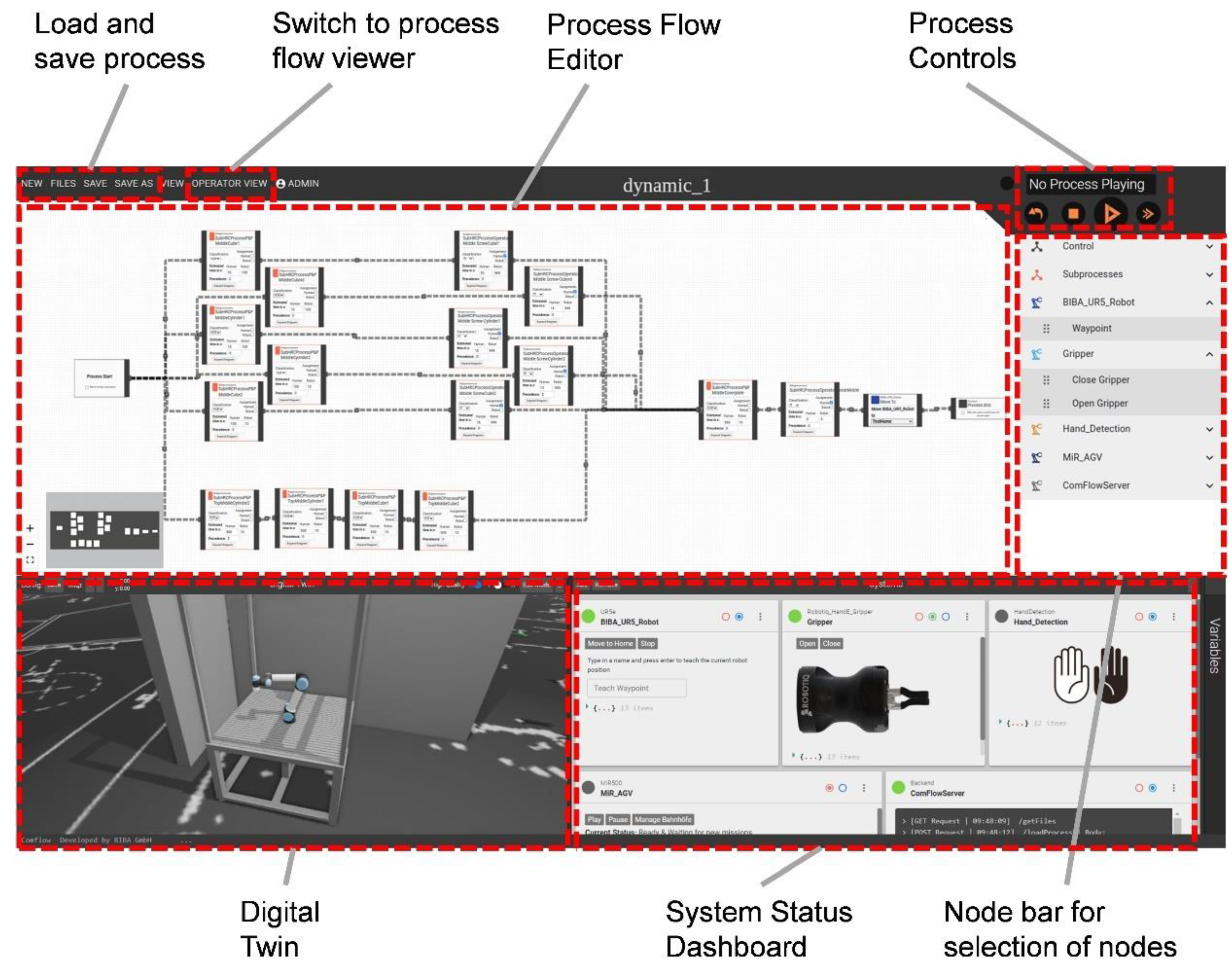

2.1. Software Framework for Block-Based No-Code Programming

2.2. Dynamic Task Allocation Module

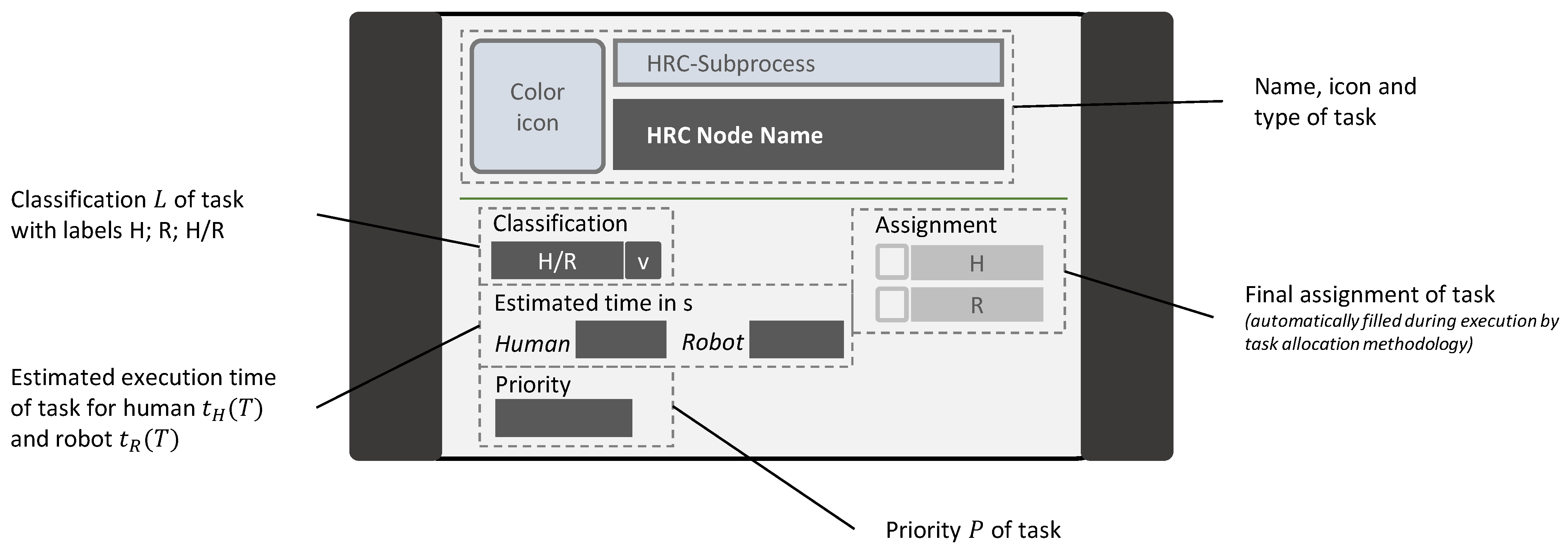

2.2.1. Planning Procedure

- Assembly priority chart;

- Task time estimation;

- Task classification.

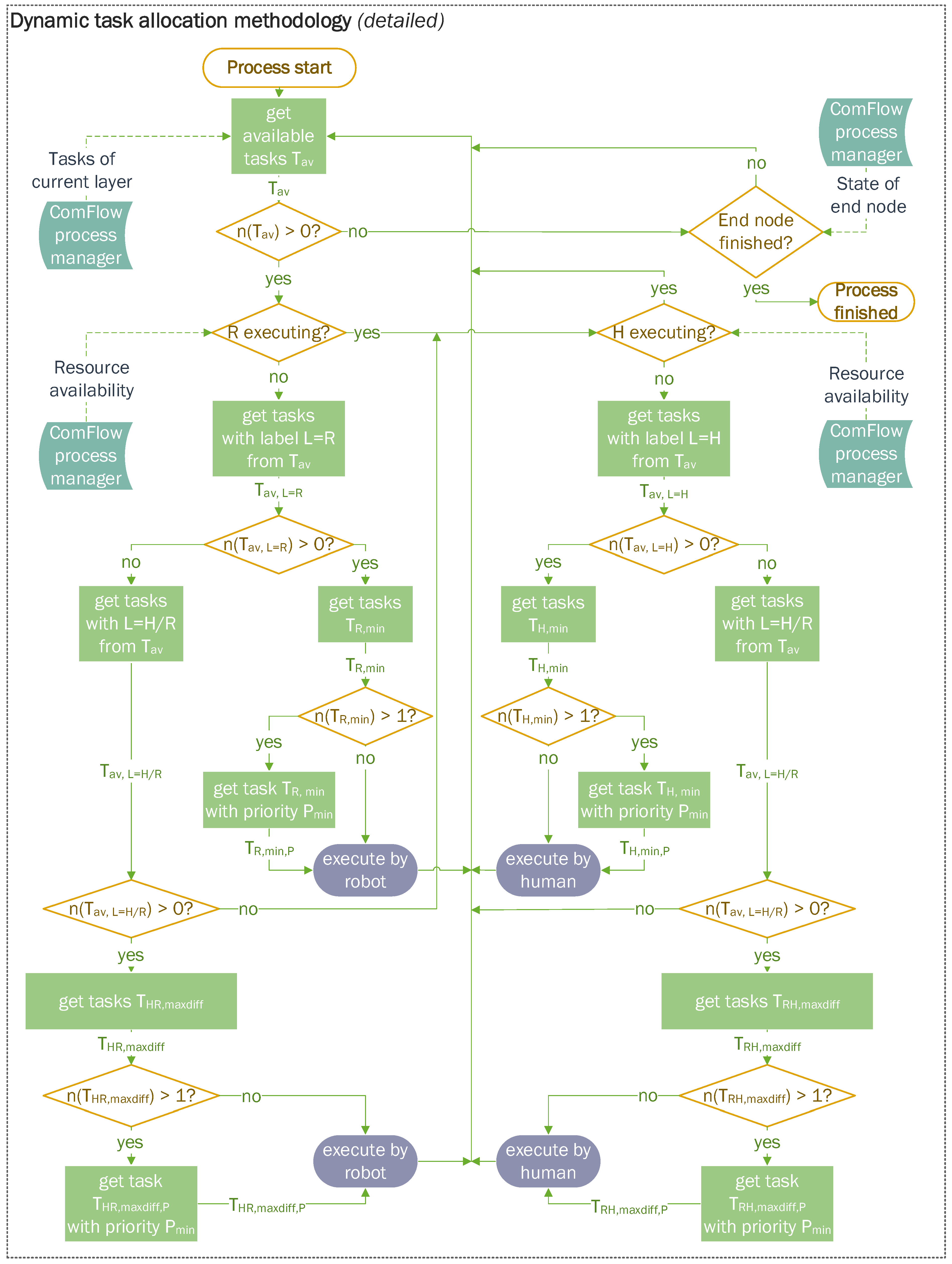

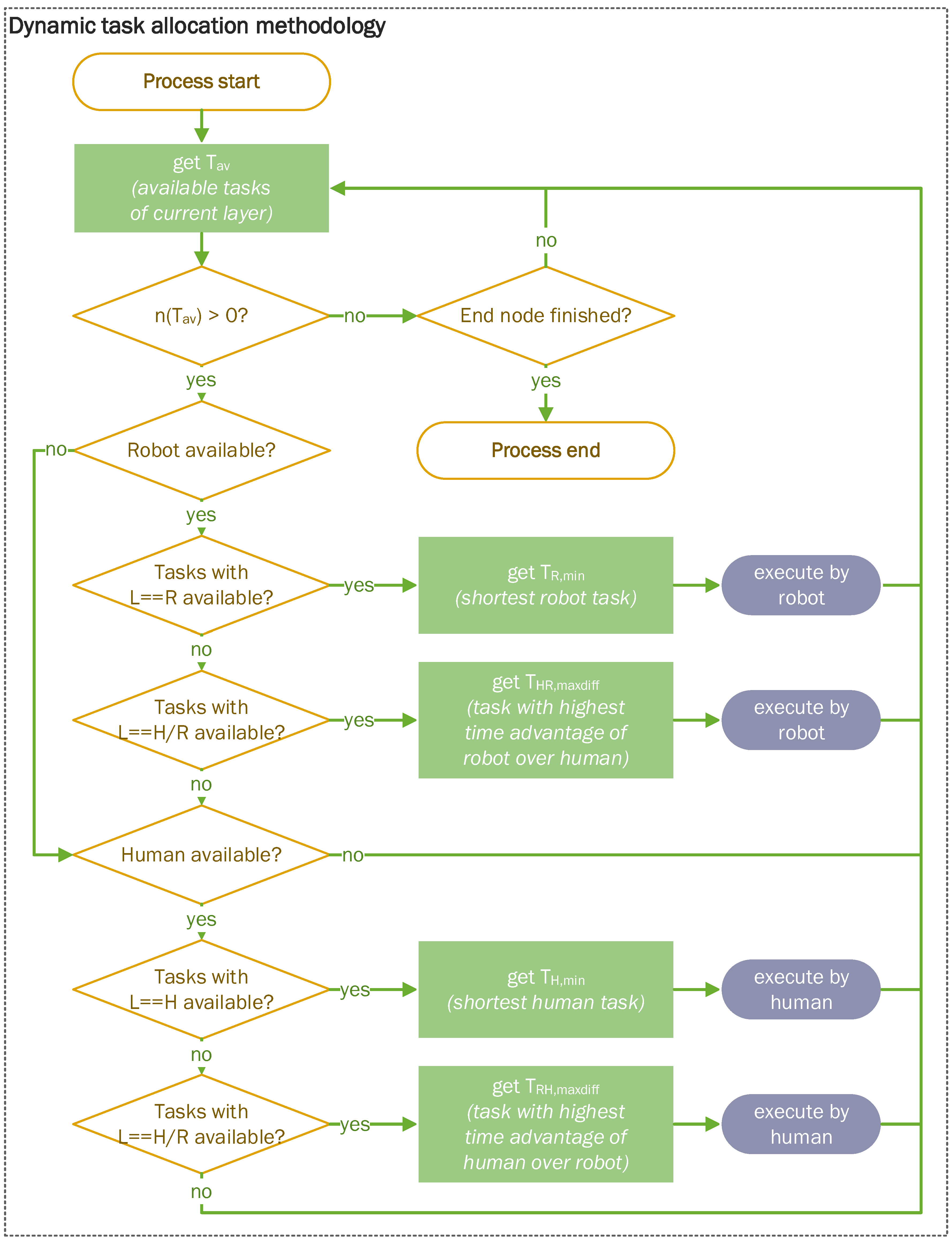

2.2.2. Methodology for Dynamic Task Allocation

- Not executed—not yet available (remaining tasks; highlighted in gray);

- Not executed—available (currently available tasks Tav; yellow);

- In execution by robot (blue), or in execution by human (purple);

- Completed (finished tasks; green).
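The four task states listed above can be modeled as a small state enumeration. The following is a minimal sketch under stated assumptions, not the framework's implementation: the names `TaskState` and `refresh_states` are hypothetical, and the rule that a task becomes available once all of its predecessors in the assembly priority chart are completed is an assumption made for illustration.

```python
from enum import Enum
from typing import Dict, List


class TaskState(Enum):
    """Task states with the highlight colors named in the list above."""
    NOT_AVAILABLE = "gray"          # not executed, not yet available
    AVAILABLE = "yellow"            # not executed, available
    IN_EXECUTION_ROBOT = "blue"
    IN_EXECUTION_HUMAN = "purple"
    COMPLETED = "green"


def refresh_states(
    states: Dict[str, TaskState],
    predecessors: Dict[str, List[str]],
) -> Dict[str, TaskState]:
    """Return a copy in which waiting tasks become available.

    Assumption: a task is available once all of its predecessors
    are completed. Tasks without predecessors are available at once.
    """
    updated = dict(states)
    for task, state in states.items():
        if state is not TaskState.NOT_AVAILABLE:
            continue
        if all(states[p] is TaskState.COMPLETED for p in predecessors.get(task, [])):
            updated[task] = TaskState.AVAILABLE
    return updated


# Hypothetical three-step chain: a -> b -> c
states = {
    "a": TaskState.COMPLETED,
    "b": TaskState.NOT_AVAILABLE,
    "c": TaskState.NOT_AVAILABLE,
}
predecessors = {"b": ["a"], "c": ["b"]}
states = refresh_states(states, predecessors)
```

In this example, task `b` becomes available because `a` is completed, while `c` stays unavailable until `b` is finished.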

| Symbol | Description |
|---|---|
| Tav | Available tasks of the current layer (see Figure 6, yellow) |
| | A single task from the available tasks of the current layer, identified by its index |
| | Number of tasks |
| | Classification of a task; the available resource labels are human, robot, and human or robot |
| | Available tasks with a given resource label (human, robot, or human or robot) |
| P | Priority of a task |
| | Task with the minimal execution time among the tasks with a given label |
| | Task with the highest priority P, i.e., the minimum numeric value of P, among the tasks fulfilling the condition term |
| | Task with the maximum difference between the execution times of resources X and Y among the tasks with label XY |
| | Estimated execution time of resource X (H: human or R: robot) for task T |

- The availability of the resources is checked.

- For each available resource, the currently available task with an unambiguous, matching task classification and minimum execution time (see Equations (1) and (2) for calculation) is assigned.

- If no more tasks with unambiguous task classification are currently available, tasks which are eligible for both the human and robot are considered, and the task in which the particular resource has the highest advantage in execution time over the other (see Equations (3) and (4) for calculation) is assigned to the respective available resource.
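The three steps above can be sketched as a selection function that is called whenever a resource becomes available. This is a hedged illustration, not the authors' implementation: the `Task` dataclass, the label strings `"H"`, `"R"`, and `"HR"`, and the function `select_task` are hypothetical names, and Equations (1) to (4) are paraphrased from the verbal description only. Applying the priority before the execution-time criterion is also an assumption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Task:
    name: str
    label: str          # "H", "R", or "HR" (hypothetical encoding of the classification)
    t_human: float      # estimated human execution time in s
    t_robot: float      # estimated robot execution time in s
    priority: int = 1   # lower numeric value means higher priority


def exec_time(task: Task, resource: str) -> float:
    """Estimated execution time of `task` for resource "H" or "R"."""
    return task.t_human if resource == "H" else task.t_robot


def select_task(available: List[Task], resource: str) -> Optional[Task]:
    """Choose the next task for an idle resource from the available tasks."""
    other = "R" if resource == "H" else "H"

    # Tasks classified unambiguously for this resource:
    # take the one with minimum execution time (priority first, by assumption).
    own = [t for t in available if t.label == resource]
    if own:
        return min(own, key=lambda t: (t.priority, exec_time(t, resource)))

    # Otherwise consider tasks eligible for both resources and take the one
    # where this resource has the largest time advantage over the other,
    # i.e., the smallest value of (own time - other time).
    shared = [t for t in available if t.label == "HR"]
    if shared:
        return min(
            shared,
            key=lambda t: (t.priority, exec_time(t, resource) - exec_time(t, other)),
        )
    return None  # nothing suitable is currently available


# Hypothetical example data
tasks = [
    Task("screw_plate", "H", t_human=12.0, t_robot=float("inf")),
    Task("place_block_a", "HR", t_human=8.0, t_robot=10.0),
    Task("place_block_b", "HR", t_human=6.0, t_robot=14.0),
]
print(select_task(tasks, "H").name)  # screw_plate
print(select_task(tasks, "R").name)  # place_block_a
```

In the example, the human receives the only human-labeled task, while the robot, having no robot-labeled task, receives the shared task in which it loses the least time compared with the human.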

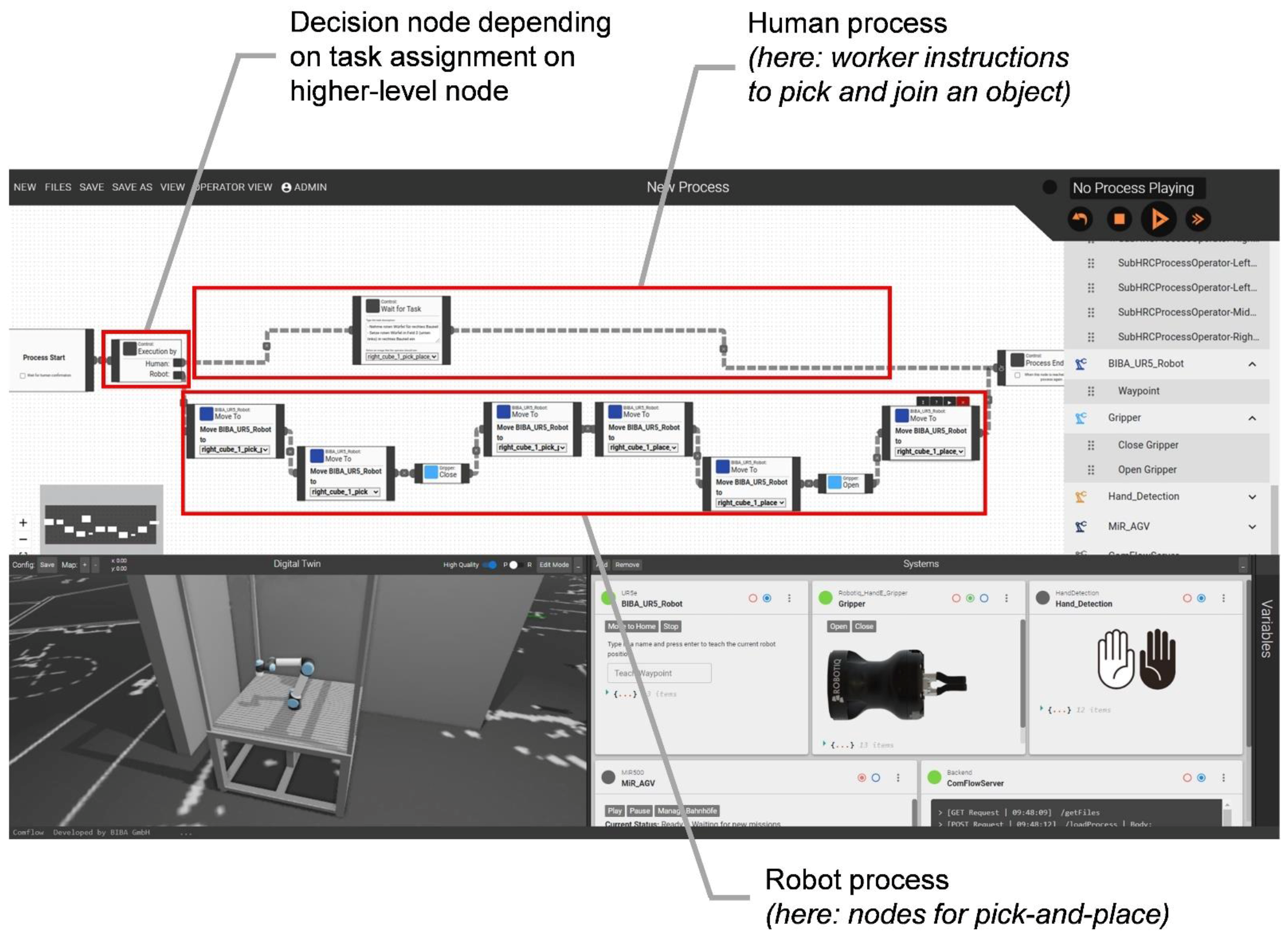

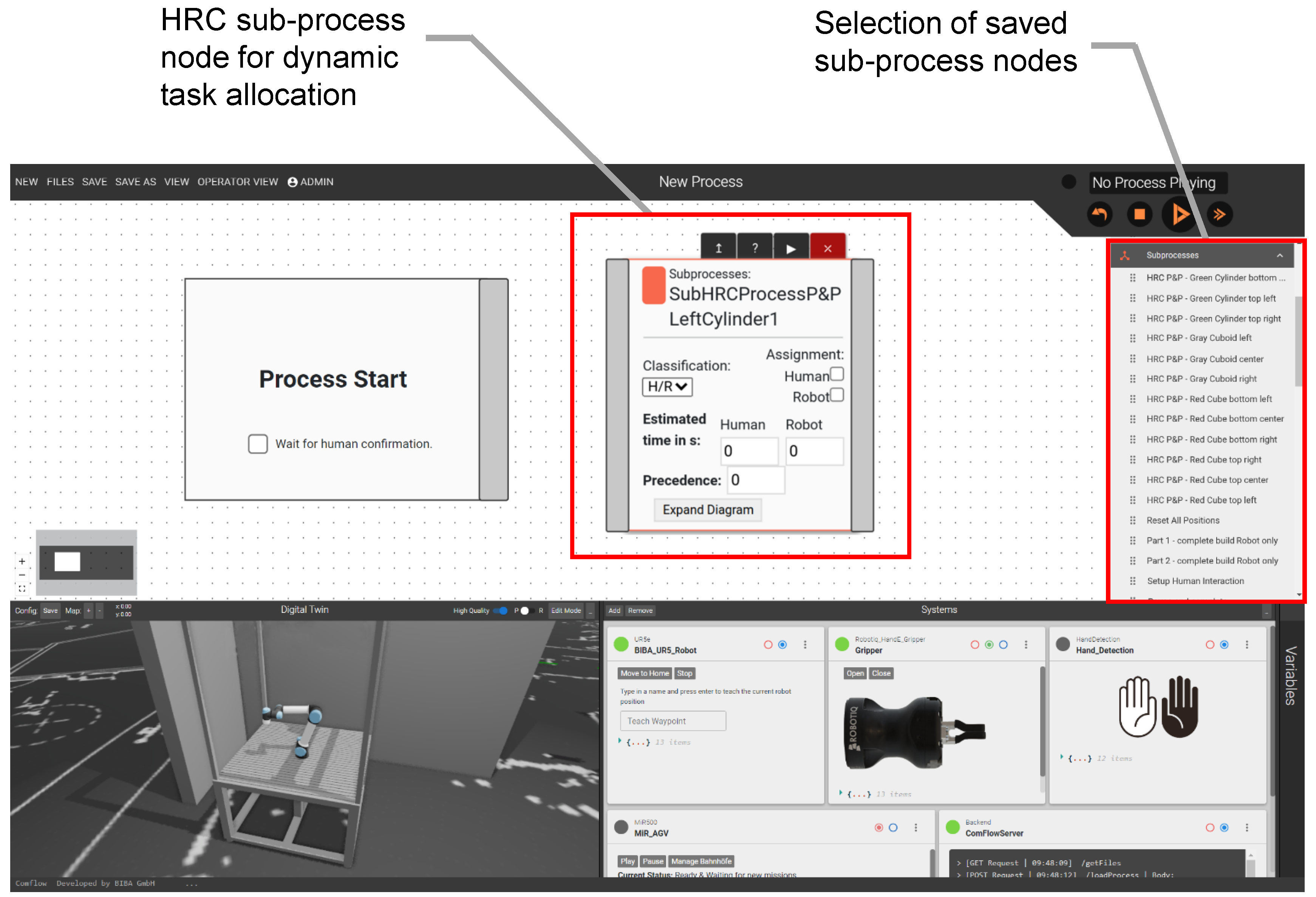

2.2.3. Implementation of Task Allocation Module into Software Framework

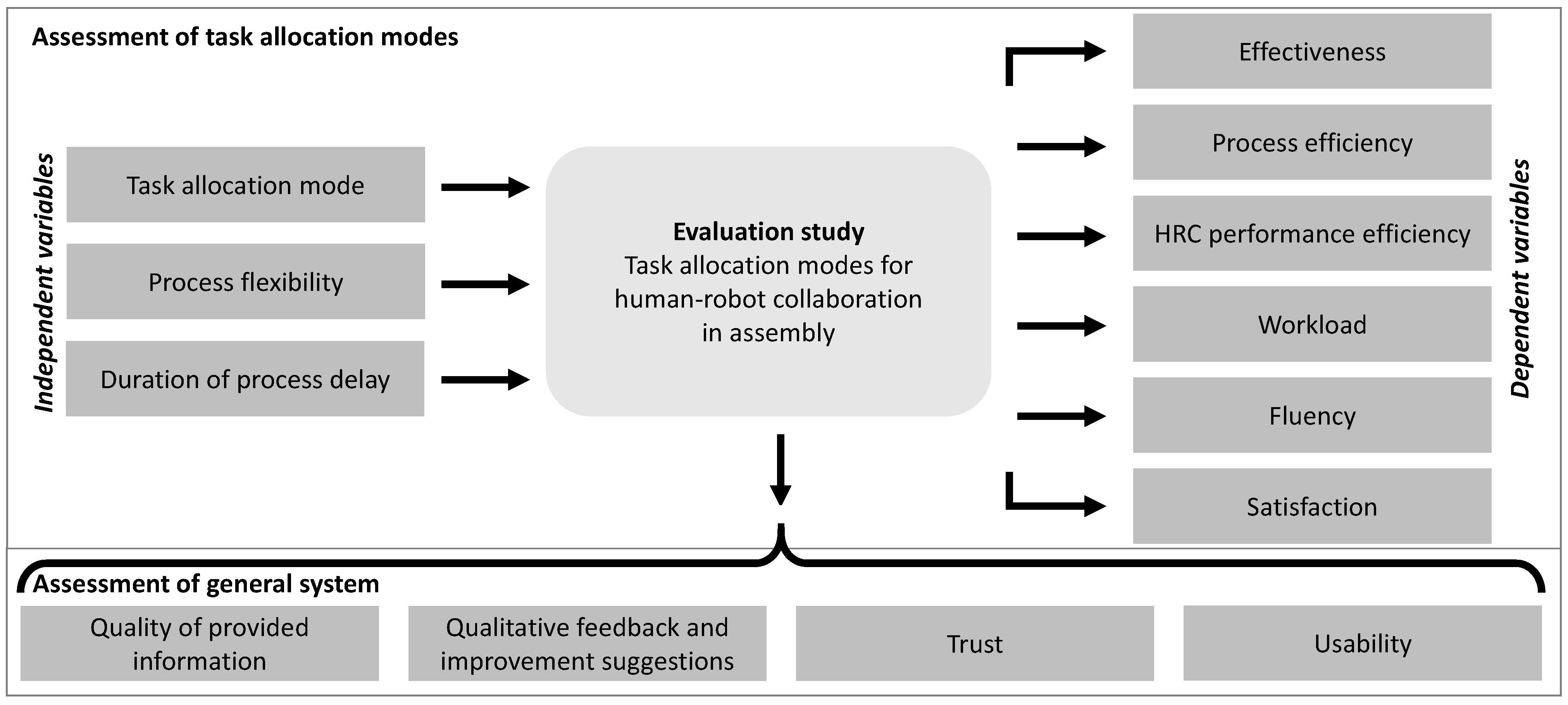

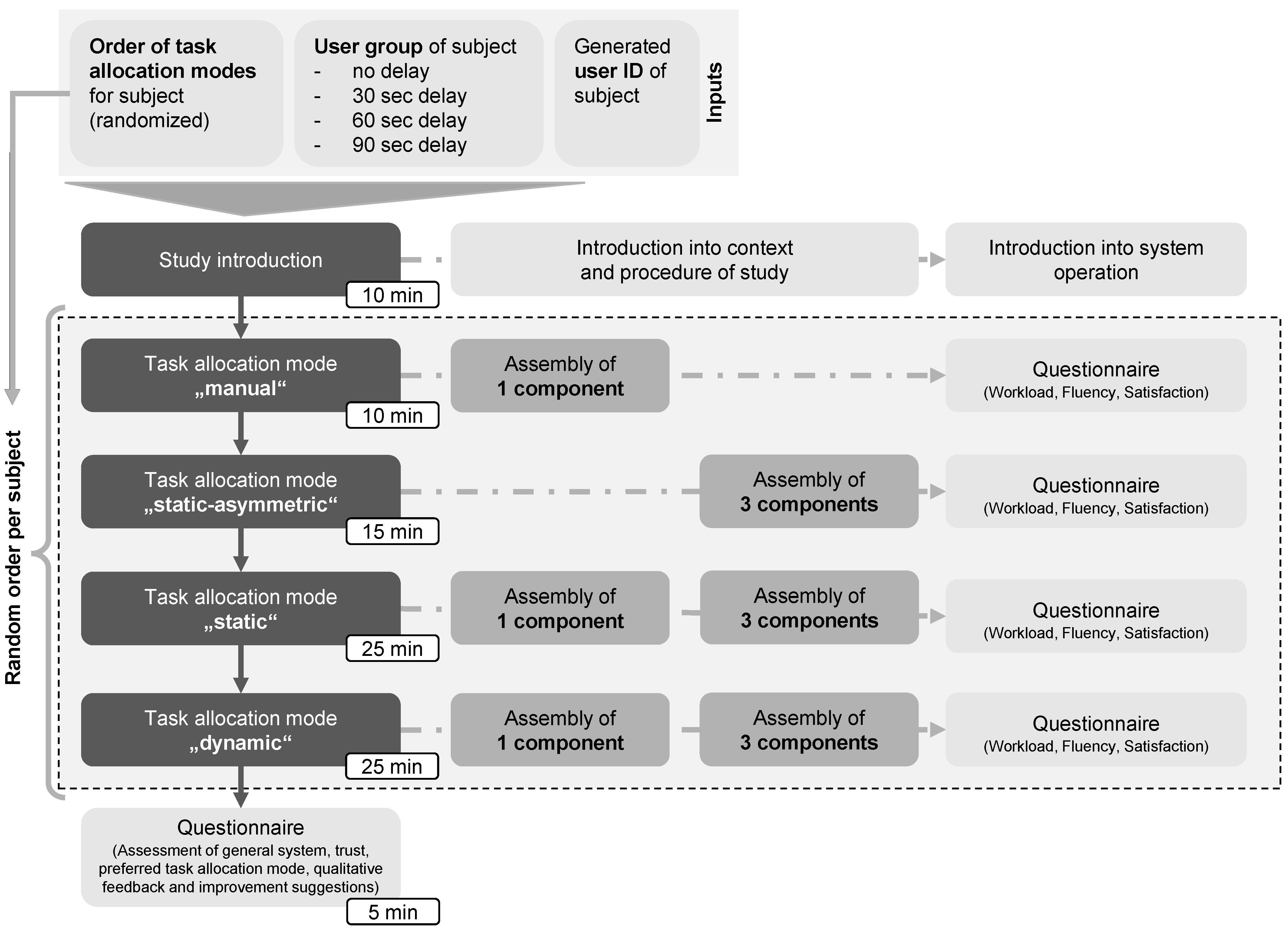

2.3. Study Design and Evaluation Methodology

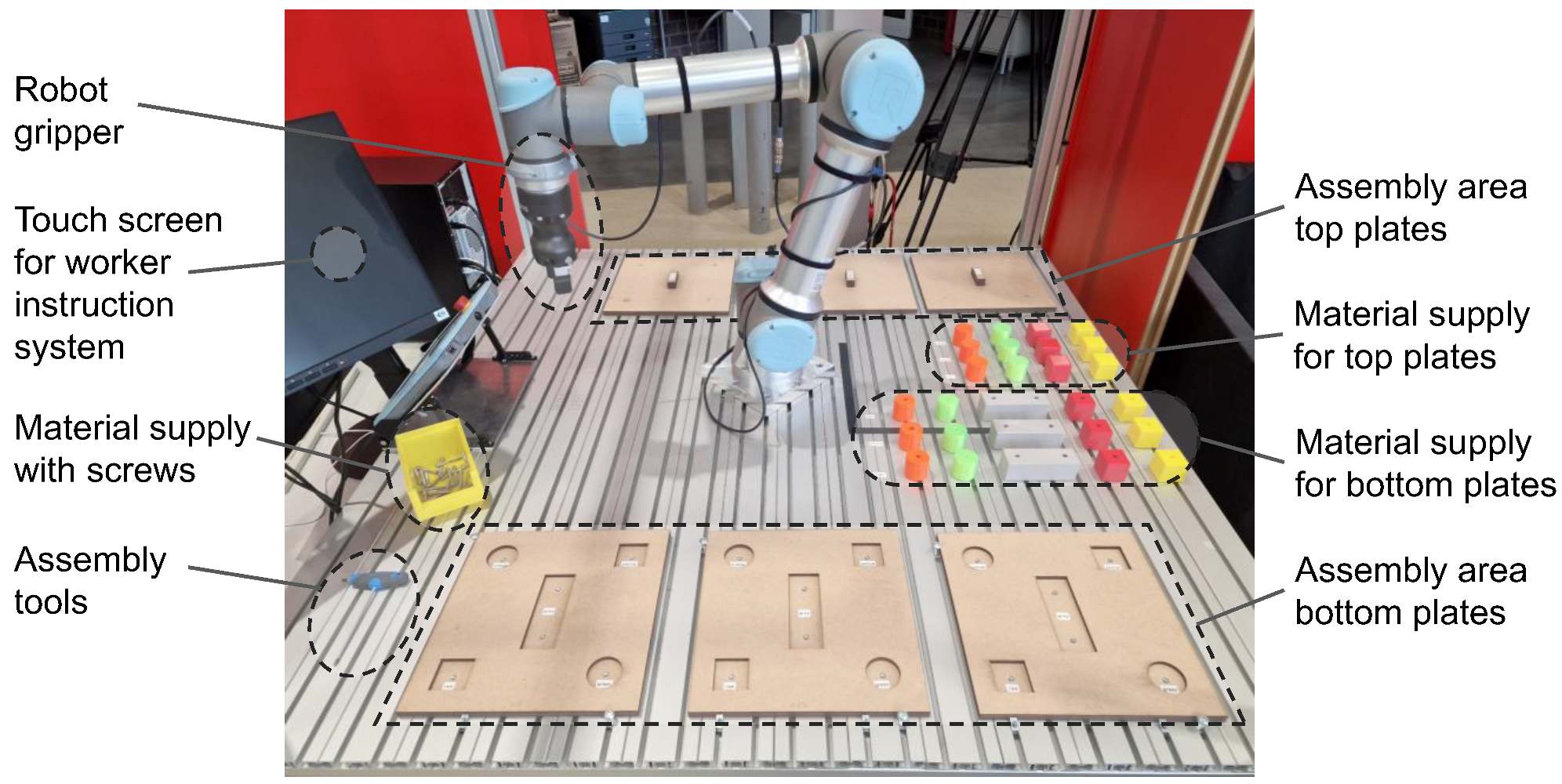

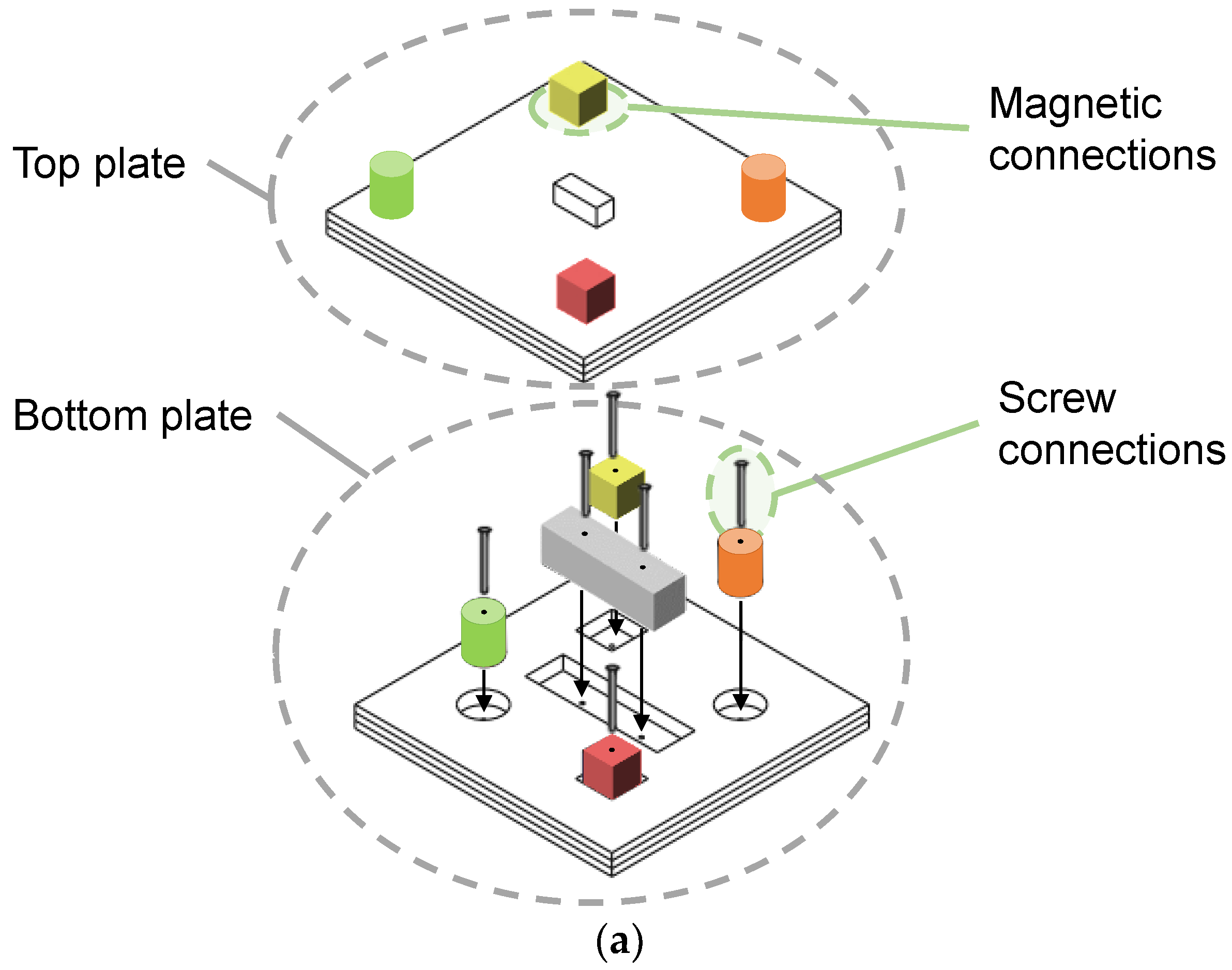

2.3.1. Experimental Setup and Task Allocation Modes

- Manual assembly: Execution of the assembly process exclusively by humans. For this purpose, all HRC process nodes are classified as “human”, so the corresponding worker assembly instructions are displayed on the screen for all steps.

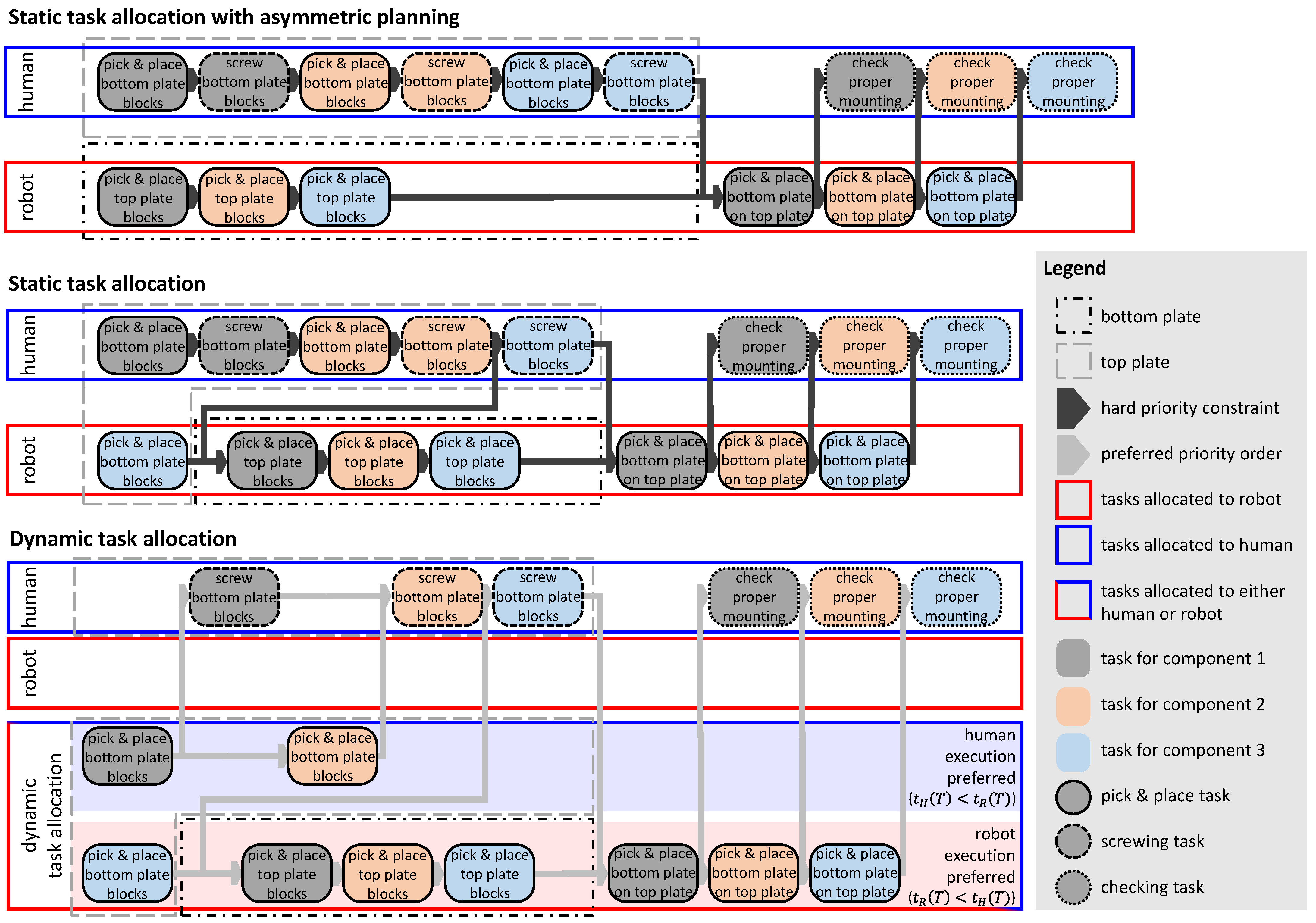

- Collaborative assembly with static task allocation and asymmetric planning: Execution of the assembly in human–robot collaboration, with an intuitive distribution of the tasks between the resources. The human performs all assembly operations on the bottom plate and the robot performs all assembly operations on the top plate. Because the bottom plate requires additional screwing tasks, the parallel sequences differ in length; for simplicity, this mode is referred to as static-asymmetric in the following. Each HRC node of the assembly process is classified as either human or robot during process creation, which leaves no flexibility in task assignment. The order in which the tasks are performed is also fixed.

- Collaborative assembly with static task allocation, time-optimized planning, and iterative experimental fine-tuning: Execution of the assembly in human–robot collaboration, with the task assignment planned on the basis of the estimated task times, followed by testing and experimental fine-tuning of the order of the sub-processes by the first three authors. Here, the robot additionally places the blocks on the right bottom plate, so that the estimated times of the parallel assembly sequences are harmonized. This task allocation mode requires a higher planning effort and is called static in the following.

- Collaborative assembly with dynamic task allocation: Execution of the assembly in human–robot collaboration, where the task allocation decision is made dynamically according to the approach described in Section 2.2.2. Sub-processes that can be executed in any order are therefore not given a fixed sequence but are created in parallel, in accordance with the assembly precedence graph in Figure 13c. The task sequence, i.e., the selection of the sub-process to be executed next, is likewise determined automatically by the logic of the dynamic task allocation approach. However, we set a priority P of one to three for the bottom plate preassembly tasks so that the algorithm prefers to assemble the bottom plates from left to right. Each sub-process is classified according to which resource can execute it (human, robot, or human/robot for tasks that can be executed by both resources). The cognitive planning effort in this mode is therefore low, since only the input data need to be entered into the user interface.

2.3.2. Metrics and Data Collection

2.3.3. Procedure of User Study

2.3.4. Data Analysis and Evaluation Process

3. Results

3.1. Effectiveness

3.2. Efficiency

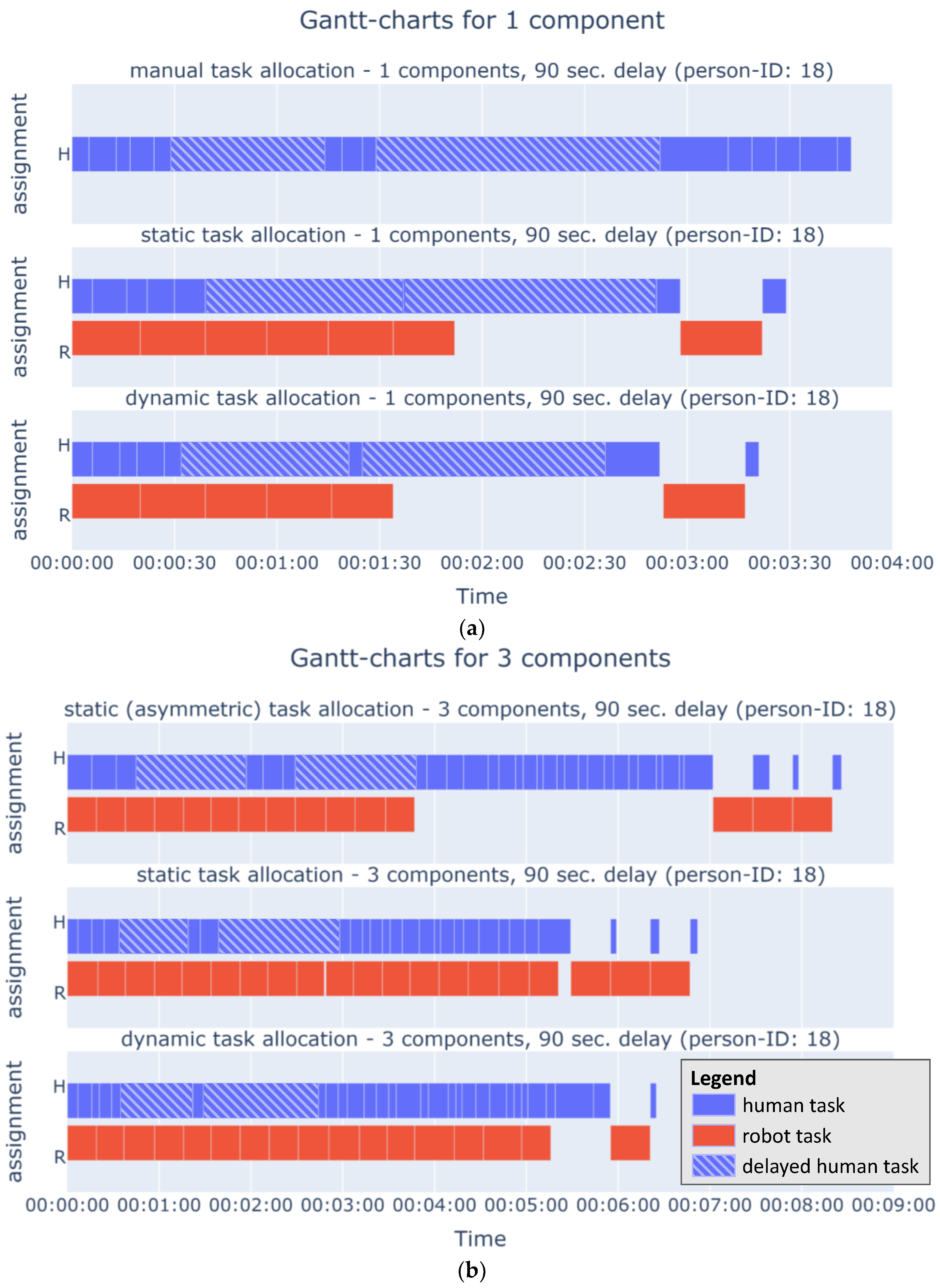

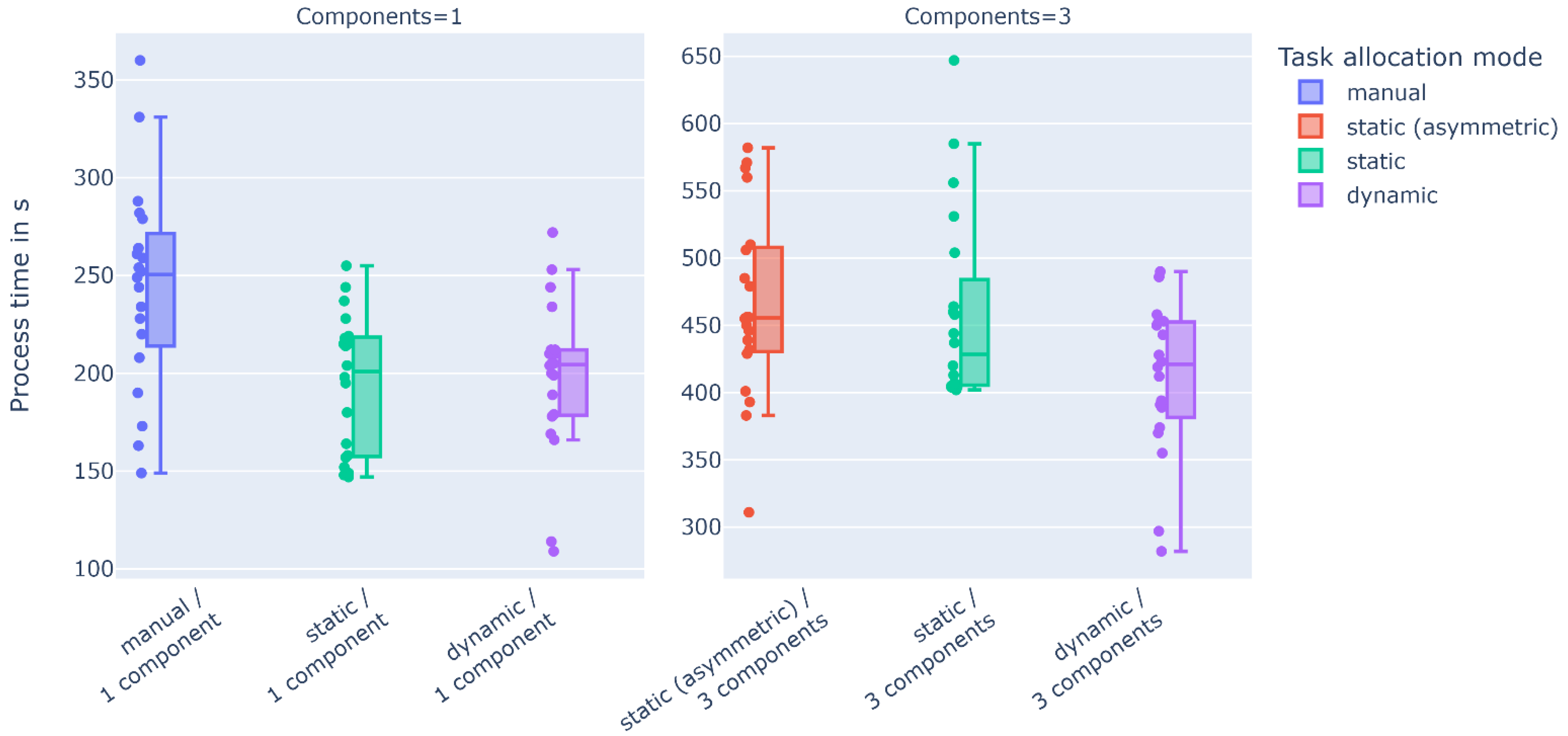

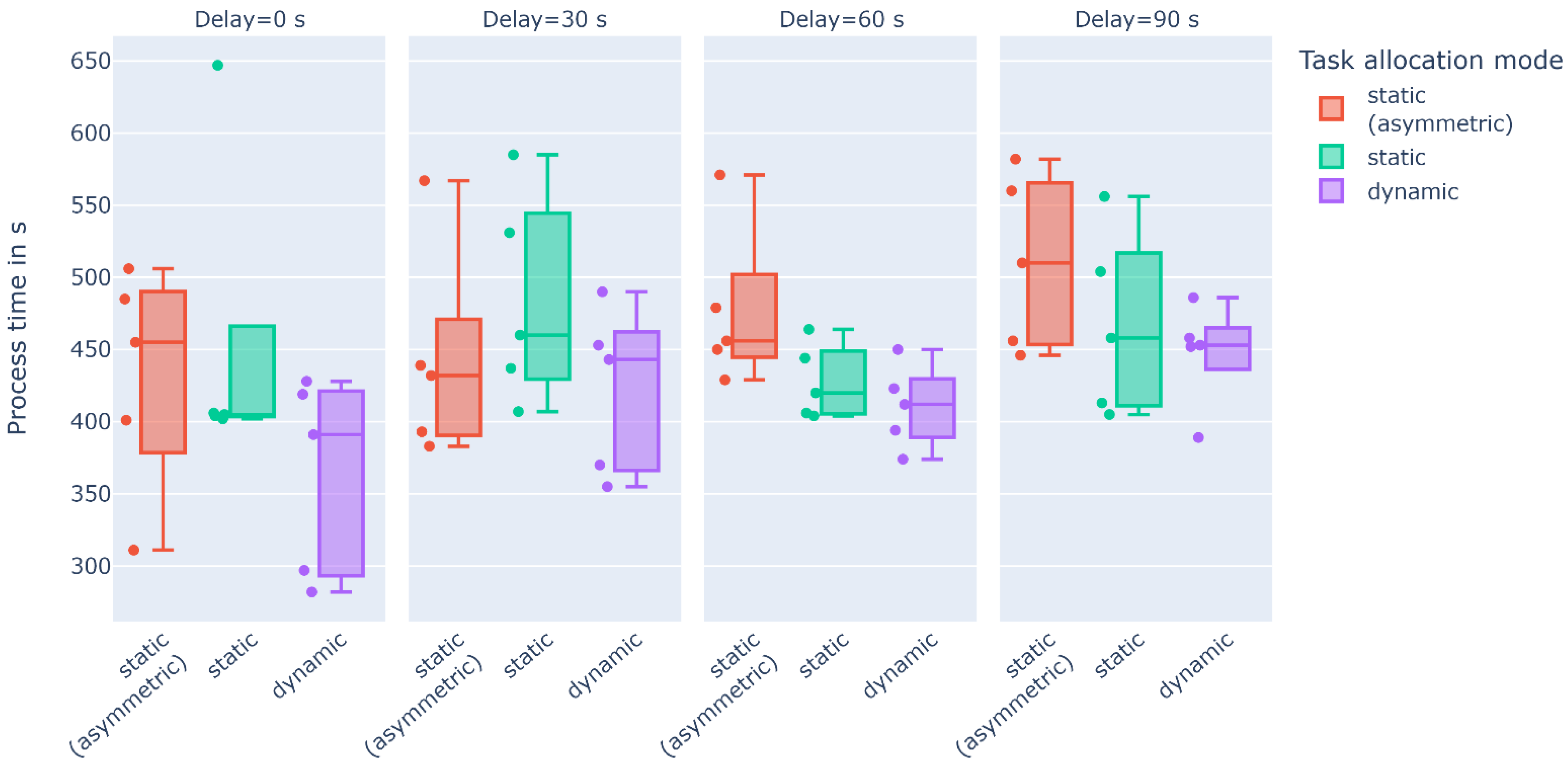

3.2.1. Assembly Process Time

3.2.2. Quantitative Assessment of HRC Performance and Fluency

3.3. Human Factors and User Satisfaction

3.3.1. Variables Depending on Task Allocation Mode

3.3.2. General Assessment of the System

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| No. | Ref. | Classification | Year | Proposed Task Allocation Approach | Evaluation Scenario | No. of Tasks | Evaluation Approach | Main Evaluation Result |
|---|---|---|---|---|---|---|---|---|
| 1 | [36] | static | 2022 | optimization algorithm for planning | Mold assembly use case including required tool changes (scenario extracted from online video) | 13 | Simulation-based evaluation comparing different assembly setups: | H-R/H-2R process: |
| 2 | [35] | static | 2019 | planning method with guided assistance software | Industrial use case of linear actuator assembly | 10 | Demonstration of planning process | Validation of planning approach |
| 3 | [27] | static | 2016 | planning method | Industrial use case of aircraft fuselage shell element assembly | 16 | Demonstration of planning process | Validation of planning approach |
| 4 | [26] | static | 2022 | planning method | Industrial use case of touch-screen cash register assembly | 35 | Demonstration of planning process | Validation of planning approach |
| 5 | [41] | static | 2018 | planning method with simulation tool | Industrial use case of brake disc assembly | 28 | Demonstration of planning process; validation of planning result in simulation and laboratory setup | Validation of planning approach; H-R process: |
| 6 | [37] | static | 2022 | optimization algorithm for planning | Laboratory use case of HDD disassembly | 14 | Demonstration of process planning algorithm for disassembly scenario with different processing periods | Validation of implemented optimization algorithm for task allocation |
| 7 | [38] | static | 2018 | optimization algorithm for planning with simulation tool | Industrial use case of a workplace in automotive final assembly line | 14 | Demonstration of task allocation algorithm in simulation | Validation of simulation tool and optimization algorithm for task allocation; H-R process: |
| 8 | [39] | dynamic | 2019 | reactive system for online execution | Laboratory use case of gearbox assembly | 34 | Demonstration of dynamic task allocation system for different workload limits | Validation of implemented system |
| 9 | [42] | dynamic | 2018 | reactive system for online execution | Laboratory use case of LEGO blocks assembly | 12 | Evaluation of different conditions of trust consideration in user study (20 subjects) | Validation of implemented system; trust between H and R: |
| 10 | [44,66] | dynamic | 2018 | planning method with software support; reactive system for online execution | Laboratory use case of flange assembly; industrial use case of snowplow mill assembly | 26; 65 | Demonstration of planning process; demonstration of dynamic task reassignment system | Validation of implemented system |
| 11 | [40] | dynamic | 2021 | reactive system for online execution | Laboratory pick-and-place packaging scenario | 9 | Demonstration of static and dynamic task allocation for delays: | Validation of implemented system |
| 12 | [67] | dynamic | 2016 | optimization algorithm for planning; re-planning for online execution | Industrial use case of automotive hydraulic pump assembly | 5 | Demonstration of task allocation algorithm | Validation of implemented system |
| 13 | [68] | dynamic | 2014 | optimization algorithm for planning or online execution | Power module box assembly use case | 18 | Demonstration of task allocation optimization algorithm for human–two-robot assembly setup | Validation of implemented system |
| 14 | [43] | dynamic | 2022 | reactive system for online execution | Laboratory pick-and-place packaging scenario | 24 | Evaluation of static and dynamic task allocation in user study (14 subjects) with: | Validation of implemented system and fatigue estimation strategy; dynamic task allocation: |
| 15 | [25] | dynamic | 2018 | reactive system for online execution | Laboratory use case of table assembly | 6 | Demonstration of dynamic task allocation system for human–two-robot assembly setup | Validation of implemented system |
| 16 | [69] | dynamic | 2017 | optimization algorithm for planning; reactive system for online execution | Theoretical process; laboratory use case | 8; 6 | Simulation-based demonstration of planning system in human–two-robot setup; demonstration of dynamic task allocation system | Validation of implemented planning algorithm; validation of implemented system |

Appendix B

Appendix C

Appendix C.1. Questionnaire after Completion of a Task Allocation Mode

Appendix C.1.1. Fluency

| No. | Statement | Scale; (F/R) ¹ |
|---|---|---|
| 1 | The human-robot team worked fluently together. | 5-scale; (F) |
| 2 | The robot was unintelligent. | 5-scale; (R) |
| 3 | The robot and I were working towards the same goal. | 5-scale; (F) |
| 4 | The robot was uncooperative. | 5-scale; (R) |
| 5 | The robot contributed to the fluency of the collaboration. | 5-scale; (F) |
| 6 | I needed to adapt my movements to the robot’s movements. | 5-scale; (R) |
| 7 | The robot reacted flexibly to changes in task execution. | 5-scale; (F) |
| 8 | I had the feeling that the robot is a team player. | 5-scale; (F) |
| 9 | During the whole process, I always knew what I was requested to do. | 5-scale; (F) |
| 10 | During the whole process, I always knew what the robot was going to do. | 5-scale; (F) |

- Rating from 1 to 5 (1 = strongly disagree; 5 = strongly agree).

- Reversal of the scores on statements with a reversed scale (1→5; 2→4; 3→3; 4→2; 5→1).

- For each task allocation mode: calculation of mean value over all subjects for each statement and the mean fluency value over all statements.

- Higher fluency score indicates better fluency and teamwork.
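The scoring steps above can be illustrated with a short sketch. It assumes that the responses of each subject are available as a dictionary mapping the statement number (1 to 10) to a rating from 1 to 5; the function name `fluency_scores` and this data layout are hypothetical. Statements 2, 4, and 6 are treated as reversed, following the (R) marks in the table.

```python
from statistics import mean
from typing import Dict, List, Tuple

REVERSED = {2, 4, 6}  # statements marked (R) in the fluency table


def adjust(statement: int, rating: int) -> int:
    """Reverse the rating (1→5, 2→4, 3→3, 4→2, 5→1) for reversed statements."""
    return 6 - rating if statement in REVERSED else rating


def fluency_scores(responses: List[Dict[int, int]]) -> Tuple[Dict[int, float], float]:
    """Return the mean per statement over all subjects and the overall fluency value."""
    per_statement = {
        no: mean(adjust(no, subject[no]) for subject in responses)
        for no in range(1, 11)
    }
    overall = mean(per_statement.values())
    return per_statement, overall


# Hypothetical responses of two subjects for one task allocation mode
subject_1 = {no: (1 if no in REVERSED else 5) for no in range(1, 11)}
subject_2 = {no: 3 for no in range(1, 11)}
per_statement, overall = fluency_scores([subject_1, subject_2])
```

After reversal, the first subject scores 5 on every statement and the second scores 3, so every statement mean and the overall fluency value equal 4.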

Appendix C.1.2. Satisfaction

| No. | Statement | Scale; (F/R) ¹ |
|---|---|---|
| 1 | I am satisfied with the collaboration with the robot. | 5-scale; (F) |
| 2 | I am satisfied with the way the allocation decision has been made. | 5-scale; (F) |
| 3 | I am satisfied with how the tasks are allocated to me and the robot. | 5-scale; (F) |
| 4 | I am satisfied with the result of our work. | 5-scale; (F) |

- Rating from 1 to 5 (1 = strongly disagree; 5 = strongly agree).

- For each task allocation mode: calculation of mean value over all subjects for each statement and the mean satisfaction value over all statements.

- Higher satisfaction score indicates higher satisfaction with the collaboration and task allocation.

Appendix C.1.3. Workload

- Rating from 1 to 5 (1 = very low; 5 = very high; for statement 4: 1 = good; 5 = poor).

- Mapping of scores to the original point scale from 0 to 100 (1→0; 2→25; 3→50; 4→75; 5→100).

- For each task allocation mode: calculation of mean value over all subjects for each statement and the mean raw TLX score over all statements.

- Lower raw TLX score indicates lower subjective workload.
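The mapping and averaging described above amount to simple arithmetic, sketched below. The function names `to_tlx_points` and `raw_tlx` are hypothetical, and the example ratings are invented for illustration; the sketch assumes one rating from 1 to 5 per workload statement.

```python
from statistics import mean
from typing import List


def to_tlx_points(rating: int) -> int:
    """Map a 1-5 rating to the 0-100 point scale (1→0; 2→25; 3→50; 4→75; 5→100)."""
    if rating not in (1, 2, 3, 4, 5):
        raise ValueError("rating must be an integer from 1 to 5")
    return (rating - 1) * 25


def raw_tlx(ratings: List[int]) -> float:
    """Raw TLX score: unweighted mean of the mapped ratings over all statements."""
    return mean(to_tlx_points(r) for r in ratings)


# Hypothetical ratings of one subject for the workload statements
score = raw_tlx([1, 2, 3, 4, 5, 3])
```

For these ratings, the mapped values are 0, 25, 50, 75, 100, and 50, which gives a raw TLX score of 50.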

Appendix C.2. Final Questionnaire after Completion of All Runs of the Study

Appendix C.2.1. Affinity for Technical Interaction

- See [64].

Appendix C.2.2. Experience in Fields Related to the Study

| No. | Statement | Scale; (F/R) ¹ |
|---|---|---|
| 1 | How familiar with technical systems are you? | 5-scale; (F) |
| 2 | I have experience in manual assembly. | 5-scale; (F) |
| 3 | I have experience in the usage of assistance systems. | 5-scale; (F) |
| 4 | I have experience in working with collaborative robots. | 5-scale; (F) |
| 5 | I have experience in programming of collaborative robots. | 5-scale; (F) |

- Rating from 1 to 5 (1 = strongly disagree; 5 = strongly agree).

- For each subject: calculation of mean value per statement and over all statements.

- Higher score indicates higher experience in field.

Appendix C.2.3. System Usability

Appendix C.2.4. Preferred Task Allocation Mode

| No. | Mode | Scale ¹ |
|---|---|---|
| 1 | Manual assembly | 3-scale; best to worst |
| 2 | Collaborative assembly with static task allocation | 3-scale; best to worst |
| 3 | Collaborative assembly with dynamic task allocation | 3-scale; best to worst |

Appendix C.2.5. Trust

- See [59].

Appendix C.2.6. Quality of Provided Information

| No. | Category [62] ¹ | Statement | Scale; (F/R) ² |
|---|---|---|---|
| 1 | Accuracy | The displayed information on the assembly instructions was correct and complete. | 5-scale; (F) |
| 2 | Relevancy | The displayed assembly instruction information was relevant and shown for a reasonable amount of time. | 5-scale; (F) |
| 3 | Representation | The displayed information on the assembly instructions was presented in an understandable and comprehensible way. | 5-scale; (F) |
| 4 | Accessibility | The required information on the assembly instructions could be easily recognized and quickly extracted. | 5-scale; (F) |

- Rating from 1 to 5 (1 = strongly disagree; 5 = strongly agree).

- Calculation of mean value per category over all subjects.

- Higher score indicates better provision of information in the respective category.

Appendix D

References

- Zanchettin, A.M.; Casalino, A.; Piroddi, L.; Rocco, P. Prediction of Human Activity Patterns for Human-Robot Collaborative Assembly Tasks. IEEE Trans. Ind. Inform. 2019, 15, 3934–3942.

- Andolfatto, L.; Thiébaut, F.; Lartigue, C.; Douilly, M. Quality- and Cost-Driven Assembly Technique Selection and Geometrical Tolerance Allocation for Mechanical Structure Assembly. J. Manuf. Syst. 2014, 33, 103–115.

- Spena, P.R.; Holzner, P.; Rauch, E.; Vidoni, R.; Matt, D.T. Requirements for the Design of Flexible and Changeable Manufacturing and Assembly Systems: A SME-Survey. Procedia CIRP 2016, 41, 207–212.

- Fast-Berglund, Å.; Palmkvist, F.; Nyqvist, P.; Ekered, S.; Åkerman, M. Evaluating Cobots for Final Assembly. Procedia CIRP 2016, 44, 175–180.

- Scholz-Reiter, B.; Freitag, M. Autonomous Processes in Assembly Systems. CIRP Ann. 2007, 56, 712–729.

- ElMaraghy, H.; ElMaraghy, W. Smart Adaptable Assembly Systems. Procedia CIRP 2016, 44, 4–13.

- Antonelli, D.; Astanin, S.; Bruno, G. Applicability of Human-Robot Collaboration to Small Batch Production. In IFIP Advances in Information and Communication Technology; Springer New York LLC: Torino, Italy, 2017; Volume 480, pp. 24–32.

- Gaede, C.; Ranz, F.; Hummel, V.; Echelmeyer, W. A Study on Challenges in the Implementation of Human-Robot Collaboration. J. Eng. Manag. Oper. 2018, 1, 29–39, ISBN 978-3-643-99768-5.

- Lorenz, M.; Rüßmann, M.; Strack, R.; Lueth, K.L.; Bolle, M. Man and Machine in Industry 4.0: How will Technology Transform the Industrial Workforce Through 2025? Bost. Consult. Group 2015. Available online: https://www.bcg.com/de-de/publications/2015/technology-business-transformation-engineered-products-infrastructure-man-machine-industry-4 (accessed on 6 December 2019).

- Müller, R.; Franke, J.; Henrich, D.; Kuhlenkötter, B.; Raatz, A.; Verl, A. Handbuch Mensch-Roboter-Kollaboration; Müller, R., Franke, J., Henrich, D., Kuhlenkötter, B., Raatz, A., Verl, A., Eds.; Carl Hanser Verlag GmbH & Co. KG: München, Germany, 2019; ISBN 978-3-446-45016-5.

- Leng, J.; Sha, W.; Wang, B.; Zheng, P.; Zhuang, C.; Liu, Q.; Wuest, T.; Mourtzis, D.; Wang, L. Industry 5.0: Prospect and Retrospect. J. Manuf. Syst. 2022, 65, 279–295.

- Romero, D.; Stahre, J. Towards the Resilient Operator 5.0: The Future of Work in Smart Resilient Manufacturing Systems. Procedia CIRP 2021, 104, 1089–1094.

- Matheson, E.; Minto, R.; Zampieri, E.G.G.; Faccio, M.; Rosati, G. Human-Robot Collaboration in Manufacturing Applications: A Review. Robotics 2019, 8, 100.

- Hold, P.; Ranz, F.; Sihn, W.; Hummel, V. Planning Operator Support in Cyber-Physical Assembly Systems. IFAC-PapersOnLine 2016, 49, 60–65.

- Petzoldt, C.; Keiser, D.; Beinke, T.; Freitag, M. Functionalities and Implementation of Future Informational Assistance Systems for Manual Assembly. In Subject-Oriented Business Process Management. The Digital Workplace—Nucleus of Transformation. Proc. of S-BPM ONE 2020; Freitag, M., Kinra, A., Kotzab, H., Kreowski, H.J., Thoben, K.D., Eds.; Springer: Cham, Switzerland, 2020; Volume 1278, pp. 88–109. ISBN 978-3-030-64350-8.

- Mark, B.G.; Rauch, E.; Matt, D.T. Worker Assistance Systems in Manufacturing: A Review of the State of the Art and Future Directions. J. Manuf. Syst. 2021, 59, 228–250.

- Markets and Markets. Research Collaborative Robot Market Size, Growth, Trend and Forecast to 2025. Available online: https://www.marketsandmarkets.com/Market-Reports/collaborative-robot-market-194541294.html (accessed on 25 March 2020).

- Bauer, W.; Bender, M.; Braun, M.; Rally, P.; Scholtz, O. Lightweight Robots in Manual Assembly—Best to Start Simply! Examining Companies’ Initial Experiences with Lightweight Robots; Fraunhofer Institute for Industrial Engineering IAO: Stuttgart, Germany, 2016.

- Fast-Berglund, Å.; Romero, D. Strategies for Implementing Collaborative Robot Applications for the Operator 4.0. In IFIP Advances in Information and Communication Technology; Springer New York LLC: New York, NY, USA, 2019; Volume 566, pp. 682–689.

- Michalos, G.; Karagiannis, P.; Dimitropoulos, N.; Andronas, D.; Makris, S. Human Robot Collaboration in Industrial Environments. In Intelligent Systems, Control and Automation: Science and Engineering; Springer Science and Business Media B.V.: Berlin/Heidelberg, Germany, 2022; Volume 81, pp. 17–39.

- Statistisches Bundesamt. Industrie 4.0: Roboter in 16% Der Unternehmen Im Verarbeitenden Gewerbe; Statistisches Bundesamt: Wiesbaden, Germany, 2018.

- Schnell, M.; Holm, M. Challenges for Manufacturing SMEs in the Introduction of Collaborative Robots. In Proceedings of the SPS 2022: Proceedings of the 10th Swedish Production Symposium, Skövde, Sweden, 26–29 April 2022; pp. 173–183.

- Kildal, J.; Tellaeche, A.; Fernández, I.; Maurtua, I. Potential Users’ Key Concerns and Expectations for the Adoption of Cobots. Procedia CIRP 2018, 72, 21–26.

- Ranz, F.; Hummel, V.; Sihn, W. Capability-Based Task Allocation in Human-Robot Collaboration. Procedia Manuf. 2017, 9, 182–189.

- Darvish, K.; Bruno, B.; Simetti, E.; Mastrogiovanni, F.; Casalino, G. Interleaved Online Task Planning, Simulation, Task Allocation and Motion Control for Flexible Human-Robot Cooperation. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; pp. 58–65.

- Gualtieri, L.; Rauch, E.; Vidoni, R. Human-Robot Activity Allocation Algorithm for the Redesign of Manual Assembly Systems into Human-Robot Collaborative Assembly. Int. J. Comput. Integr. Manuf. 2022, 1–26.

- Müller, R.; Vette, M.; Mailahn, O. Process-Oriented Task Assignment for Assembly Processes with Human-Robot Interaction. Procedia CIRP 2016, 44, 210–215.

- Bughin, J.; Hazan, E.; Lund, S.; Dahlström, P.; Wiesinger, A.; Subramaniam, A. Skill Shift: Automation and the Future of the Workforce. McKinsey Glob. Inst. 2018, 1, 3–84.

- Villani, V.; Pini, F.; Leali, F.; Secchi, C. Survey on Human–Robot Collaboration in Industrial Settings: Safety, Intuitive Interfaces and Applications. Mechatronics 2018, 55, 248–266.

- Schmidbauer, C.; Schlund, S.; Ionescu, T.B.; Hader, B. Adaptive Task Sharing in Human-Robot Interaction in Assembly. In Proceedings of the IEEE International Conference on Industrial Engineering and Engineering Management (IEEM), Singapore, 14–17 December 2020; pp. 546–550.

- Kopp, T.; Baumgartner, M.; Kinkel, S. Success Factors for Introducing Industrial Human-Robot Interaction in Practice: An Empirically Driven Framework. Int. J. Adv. Manuf. Technol. 2020, 112, 685–704.

- Kumar, S.; Savur, C.; Sahin, F. Survey of Human-Robot Collaboration in Industrial Settings: Awareness, Intelligence, and Compliance. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 280–297.

- Kopp, T.; Schäfer, A.; Kinkel, S. Kollaborierende Oder Kollaborationsfähige Roboter?—Welche Rolle Spielt Die Mensch-Roboter-Kollaboration in Der Praxis? Ind. 4.0 Manag. 2020, 36, 19–23.

- Petzoldt, C.; Keiser, D.; Siesenis, H.; Beinke, T.; Freitag, M. Ermittlung Und Bewertung von Einsatzpotentialen Der Mensch-Roboter-Kollaboration—Methodisches Vorgehensmodell Für Die Industrielle Montage. Zeitschrift für wirtschaftlichen Fabrikbetr. 2021, 116, 8–15.

- Malik, A.A.; Bilberg, A. Complexity-Based Task Allocation in Human-Robot Collaborative Assembly. Ind. Robot Int. J. Robot. Res. Appl. 2019, 46, 471–480.

- Liau, Y.Y.; Ryu, K. Genetic Algorithm-Based Task Allocation in Multiple Modes of Human–Robot Collaboration Systems with Two Cobots. Int. J. Adv. Manuf. Technol. 2022, 119, 7291–7309.

- Lee, M.L.; Behdad, S.; Liang, X.; Zheng, M. Task Allocation and Planning for Product Disassembly with Human–Robot Collaboration. Robot. Comput. Integr. Manuf. 2022, 76, 102306.

- Bänziger, T.; Kunz, A.; Wegener, K. Optimizing Human–Robot Task Allocation Using a Simulation Tool Based on Standardized Work Descriptions. J. Intell. Manuf. 2018, 31, 1635–1648.

- El Makrini, I.; Merckaert, K.; De Winter, J.; Lefeber, D.; Vanderborght, B. Task Allocation for Improved Ergonomics in Human-Robot Collaborative Assembly. Interact. Stud. Soc. Behav. Commun. Biol. Artif. Syst. 2019, 20, 102–133.

- Pupa, A.; Secchi, C. A Safety-Aware Architecture for Task Scheduling and Execution for Human-Robot Collaboration. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Prague, Czech Republic, 27 September–1 October 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2021; pp. 1895–1902.

- Heydaryan, S.; Bedolla, J.S.; Belingardi, G. Safety Design and Development of a Human-Robot Collaboration Assembly Process in the Automotive Industry. Appl. Sci. 2018, 8, 344.

- Rahman, S.M.M.; Wang, Y. Mutual Trust-Based Subtask Allocation for Human–Robot Collaboration in Flexible Lightweight Assembly in Manufacturing. Mechatronics 2018, 54, 94–109.

- Messeri, C.; Bicchi, A.; Zanchettin, A.M.; Rocco, P. A Dynamic Task Allocation Strategy to Mitigate the Human Physical Fatigue in Collaborative Robotics. IEEE Robot. Autom. Lett. 2022, 7, 2178–2185.

- Antonelli, D.; Bruno, G. Dynamic Distribution of Assembly Tasks in a Collaborative Workcell of Humans and Robots. FME Trans. 2019, 47, 723–730.

- Hevner, A.R.; March, S.T.; Park, J.; Ram, S. Design Science in Information Systems Research. Manag. Inf. Syst. Q. MIS Q. 2004, 28, 75–105.

- Peffers, K.; Tuunanen, T.; Rothenberger, M.A.; Chatterjee, S. A Design Science Research Methodology for Information Systems Research. J. Manag. Inf. Syst. 2007, 24, 45–77.

- Niermann, D.; Petzoldt, C.; Dörnbach, T.; Isken, M.; Freitag, M. Towards a Novel Software Framework for the Intuitive Generation of Process Flows for Multiple Robotic Systems. Procedia CIRP 2022, 107, 137–142.

- Petzoldt, C.; Panter, L.; Niermann, D.; Vur, B.; Freitag, M.; Doernbach, T.; Isken, M.; Sharma, A. Intuitive Interaktionsschnittstelle Für Technische Logistiksysteme—Konfiguration Und Überwachung von Prozessabläufen Mittels Multimodaler Mensch-Technik-Interaktion Und Digitalem Zwilling. Ind. 4.0 Manag. 2021, 37, 42–46.

- Konold, P.; Reger, H. Praxis Der Montagetechnik, 2nd ed.; Springer Fachmedien: Wiesbaden, Germany, 2003; ISBN 9783663016106.

- Schröter, D. Entwicklung Einer Methodik Zur Planung von Arbeitssystemen in Mensch-Roboter-Kooperation; Universität Stuttgart: Stuttgart, Germany, 2018.

- Beumelburg, K. Fähigkeitsorientierte Montageablaufplanung in Der Direkten Mensch-Roboter-Kooperation (Engl. Skill-Oriented Assembly Sequence Planning for the Direct Man-Robot-Cooperation); Jost Jetter Verlag: Heimsheim, Germany, 2005; ISBN 393694752X.

- Chacón, A.; Ponsa, P.; Angulo, C. Usability Study through a Human-Robot Collaborative Workspace Experience. Designs 2021, 5, 35.

- DIN EN ISO 9241-11; Ergonomics of Human-System Interaction—Part 11: Usability: Definitions and Concepts (ISO 9241-11:2018). 2018. Available online: https://www.iso.org/standard/63500.html (accessed on 25 May 2022).

- Hoffman, G. Evaluating Fluency in Human-Robot Collaboration. IEEE Trans. Hum. Mach. Syst. 2019, 49, 209–218.

- Gervasi, R.; Mastrogiacomo, L.; Franceschini, F. A Conceptual Framework to Evaluate Human-Robot Collaboration. Int. J. Adv. Manuf. Technol. 2020, 108, 841–865.

- Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. Adv. Psychol. 1988, 52, 139–183.

- Hart, S.G. NASA-Task Load Index (NASA-TLX); 20 Years Later. Proc. Hum. Factors Ergon. Soc. 2006, 50, 904–908.

- Tausch, A.; Kluge, A. The Best Task Allocation Process Is to Decide on One’s Own: Effects of the Allocation Agent in Human–Robot Interaction on Perceived Work Characteristics and Satisfaction. Cogn. Technol. Work 2020, 24, 39–55.

- Charalambous, G.; Fletcher, S.; Webb, P. The Development of a Scale to Evaluate Trust in Industrial Human-Robot Collaboration. Int. J. Soc. Robot. 2016, 8, 193–209.

- Brooke, J. SUS: A “Quick and Dirty” Usability Scale. In Usability Evaluation in Industry; Jordan, P.W., Thomas, B., McClelland, I.L., Weerdmeester, B., Eds.; CRC Press: Boca Raton, FL, USA, 1996; pp. 207–212.

- Lewis, J.R. The System Usability Scale: Past, Present, and Future. Int. J. Hum.–Comput. Interact. 2018, 34, 577–590.

- Marvel, J.A.; Bagchi, S.; Zimmerman, M.; Antonishek, B. Towards Effective Interface Designs for Collaborative HRI in Manufacturing: Metrics and Measures. ACM Trans. Hum. Robot Interact. 2020, 9, 1–55.

- Knight, S.A.; Burn, J. Developing a Framework for Assessing Information Quality on the World Wide Web. Inf. Sci. 2005, 8, 159–172.

- Franke, T.; Attig, C.; Wessel, D. Assessing Affinity for Technology Interaction—The Affinity for Technology Interaction (ATI). 2017; Unpublished.

- Prabaswari, A.D.; Basumerda, C.; Utomo, B.W. The Mental Workload Analysis of Staff in Study Program of Private Educational Organization. In Proceedings of the IOP Conference Series: Materials Science and Engineering; Institute of Physics Publishing, Makasar, Indonesia, 27–29 November 2018; Volume 528, p. 012018.

- Bruno, G.; Antonelli, D. Dynamic Task Classification and Assignment for the Management of Human-Robot Collaborative Teams in Workcells. Int. J. Adv. Manuf. Technol. 2018, 98, 2415–2427.

- Tsarouchi, P.; Matthaiakis, A.S.S.; Makris, S.; Chryssolouris, G. On a Human-Robot Collaboration in an Assembly Cell. Int. J. Comput. Integr. Manuf. 2016, 30, 580–589.

- Chen, F.; Sekiyama, K.; Cannella, F.; Fukuda, T. Optimal Subtask Allocation for Human and Robot Collaboration within Hybrid Assembly System. IEEE Trans. Autom. Sci. Eng. 2014, 11, 1065–1075.

- Johannsmeier, L.; Haddadin, S. A Hierarchical Human-Robot Interaction-Planning Framework for Task Allocation in Collaborative Industrial Assembly Processes. IEEE Robot. Autom. Lett. 2017, 2, 41–48.

- Smyk, A. The System Usability Scale & How It’s Used in UX. 2020. Available online: https://xd.adobe.com/ideas/process/user-testing/sus-system-usability-scale-ux/ (accessed on 4 November 2022).

| Category | [8,24] 1 | [29] | [30] | [22] 2 |

|---|---|---|---|---|

| Safety |

| Safe interaction

|

| Safety

|

| Planning |

| Intuitive interfaces

|

| Performance

|

| Technology |

| Design methods

|

| Smart technology

|

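| Evaluation Aspect | Variable | Metrics | Symbol | Data Collection |
|---|---|---|---|---|
| Effectiveness | Process effectiveness | Task completion rate | | notes during study |
| Effectiveness | Process effectiveness | Number of errors | | notes during study |
| Efficiency | Process efficiency | Process time | | process data logging |
| Efficiency | HRC performance efficiency | Number of human tasks | | process data logging |
| Efficiency | HRC performance efficiency | Number of robot tasks | | process data logging |
| Efficiency | HRC performance efficiency | Human wait time | | process data logging |
| Efficiency | HRC performance efficiency | Robot wait time | | process data logging |
| Efficiency | HRC performance efficiency | Concurrent activity time | | process data logging |
| User satisfaction (human factors) | Workload | NASA Raw TLX | | questionnaire |
| User satisfaction (human factors) | Fluency and satisfaction | Fluency score | | questionnaire |
| User satisfaction (human factors) | Fluency and satisfaction | Satisfaction score | | questionnaire |
| User satisfaction (human factors) | User preference | Preferred task allocation mode | - | questionnaire |
| User satisfaction (human factors) | General system assessment | System usability scale score | | questionnaire |
| User satisfaction (human factors) | General system assessment | Trust evaluation score | | questionnaire |
| User satisfaction (human factors) | General system assessment | Quality of provided information | | questionnaire |
| User satisfaction (human factors) | General system assessment | Qualitative feedback | - | free text input |
| User satisfaction (human factors) | General system assessment | Suggestions for improvement | - | free text input |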
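The efficiency metrics in the table above that are obtained via process data logging can be derived from logged task intervals. The following is a minimal sketch under stated assumptions, not the evaluation code of the study: the log format (one `(start, end)` pair in seconds per executed task), the function names, and the definition of wait time as process time minus active time are assumptions made for illustration. The intervals of a single resource are assumed not to overlap each other.

```python
from typing import Dict, List, Tuple

Interval = Tuple[float, float]  # (start, end) of one executed task, in s


def active_time(intervals: List[Interval]) -> float:
    """Total time a resource spent executing tasks."""
    return sum(end - start for start, end in intervals)


def concurrent_time(human: List[Interval], robot: List[Interval]) -> float:
    """Total time during which human and robot were active simultaneously."""
    return sum(
        max(0.0, min(h_end, r_end) - max(h_start, r_start))
        for h_start, h_end in human
        for r_start, r_end in robot
    )


def hrc_metrics(human: List[Interval], robot: List[Interval]) -> Dict[str, float]:
    """Compute the logged efficiency metrics for one assembly run."""
    all_intervals = human + robot
    process_time = max(e for _, e in all_intervals) - min(s for s, _ in all_intervals)
    return {
        "process_time": process_time,
        "n_human_tasks": len(human),
        "n_robot_tasks": len(robot),
        "human_wait_time": process_time - active_time(human),
        "robot_wait_time": process_time - active_time(robot),
        "concurrent_activity_time": concurrent_time(human, robot),
    }


# Hypothetical log of one run
human_log = [(0.0, 10.0), (15.0, 20.0)]
robot_log = [(5.0, 15.0)]
metrics = hrc_metrics(human_log, robot_log)
```

For this hypothetical log, the process time is 20 s, the human waits 5 s, the robot waits 10 s, and both are active concurrently for 5 s.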

| (a) Gender | | (b) Age | | (c) Vocational Education | | (d) Experience | | (e) Technical Affinity |
|---|---|---|---|---|---|---|---|---|
| F | 25% | 20–24 | 40% | Academic | 50% | Assembly | 60% ¹ | ATI score |
| M | 75% | 25–29 | 40% | Undergraduate | 50% | Assistance systems | 40% ¹ | 4.74 ± 0.68 |
| N/D | −/− | 30–34 | 10% | | | Collaborative robots | 25% ¹ | |
| | | 35–49 | 10% | | | | | |

| Task Allocation Mode | Components | Process Time in s (Delay = 0 s) | Process Time in s (Delay = 30 s) | Process Time in s (Delay = 60 s) | Process Time in s (Delay = 90 s) |
|---|---|---|---|---|---|
| Manual | 1 | 192.6 ± 55.4 | 254.0 ± 6.7 | 251.4 ± 49.2 | 279.6 ± 50.2 |
| Static | 1 | 153.0 ± 7.3 | 183.2 ± 31.0 | 209.6 ± 9.9 | 233.6 ± 17.4 |
| Dynamic | 1 | 152.0 ± 37.7 | 191.0 ± 18.6 | 208.2 ± 3.5 | 241.6 ± 24.8 |
| Static (asymmetric) | 3 | 431.6 ± 78.1 | 442.8 ± 73.5 | 477.0 ± 55.5 | 510.8 ± 60.6 |
| Static | 3 | 452.8 ± 108.6 | 484.0 ± 72.7 | 427.6 ± 25.9 | 467.2 ± 63.5 |
| Dynamic | 3 | 363.4 ± 69.0 | 422.2 ± 57.5 | 410.6 ± 28.8 | 447.6 ± 35.6 |

| Data | Manual | Static (Asymmetric) | Static | Dynamic |
|---|---|---|---|---|
| NASA RTLX score, lower is better (adjective rating) | 17.7 ± 7.1 (medium workload) | 12.5 ± 2.6 (medium workload) | 11.3 ± 4.1 (medium workload) | 18.5 ± 7.6 (medium workload) |
| Fluency score, higher is better | - | 3.5 ± 0.9 ¹,² | 3.5 ± 0.8 ¹,² | 3.7 ± 0.8 ¹,² |
| Satisfaction score, higher is better | - | 3.6 ± 0.6 ¹ | 3.9 ± 0.4 ¹ | 4.4 ± 0.2 ¹ |

| (a) Usability | | (b) Quality of Provided Information | | (c) Permanent Use of System ² | | (d) Trust | |
|---|---|---|---|---|---|---|---|
| SUS score | 79.4 ± 12.5 | Correctness and completeness | 4.5 ± 0.9 ¹ | Yes | 85% | Trust score | 43.3 ± 4.1 |
| Acceptability rating | Acceptable | Relevance and timing | 4.25 ± 0.8 ¹ | No | 5% | | |
| Adjective rating | Good | Understandability and comprehensibility | 4.2 ± 1.0 ¹ | Not sure | 10% | | |
| | | Easy and quick information extraction | 3.5 ± 1.3 ¹ | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Petzoldt, C.; Niermann, D.; Maack, E.; Sontopski, M.; Vur, B.; Freitag, M. Implementation and Evaluation of Dynamic Task Allocation for Human–Robot Collaboration in Assembly. Appl. Sci. 2022, 12, 12645. https://doi.org/10.3390/app122412645
