1. Introduction

Recent years have witnessed rapid development in robotics, and the role of robots in society is gradually increasing. This has raised the importance of emotion detection, as future robots are expected to converse with human-like emotions. Similarly, the growing participation of mute persons in society has increased the demand for precise emotion detection, and several approaches have been put forward. Different classifications of human emotions have been proposed. The study in [1] asserts that there are six basic, or universal, emotions: happiness, sadness, fear, surprise, disgust, and anger. These emotions are experienced across human cultures throughout the world, and they are commonly grouped into two broad categories: positive and negative. Further emotions, such as embarrassment, excitement, shame, pride, satisfaction, and amusement, are included and discussed in [2].

Over the past decade, researchers have generally agreed that facial expressions can be predicted by observing the movement, shape, and position of the eyes, eyebrows, and mouth. However, building a system that distinguishes emotions raises further challenges [3]. The most common issue when detecting emotions in images or videos is occlusion. Occlusion happens when facial features are hidden behind an object, such as a hand covering the face, glasses hiding the eyes, or a microphone hiding the lips. The second most common issue is illumination. Changes in lighting can cause variations between images that are significantly larger than the actual differences between expressions, which can lead to misclassification when evaluation is based on comparison. Head pose is also a challenge, because the same face can be assigned different emotions at different positions. Typical systems can detect expressions only within about 30° to 35° of head rotation, and it is hard to detect emotions from other angles. To detect emotions, both eyes and the mouth should be visible and the face should be frontal. Tilting the head up or down makes emotion detection harder. If the background has the same color as the skin, it is difficult to separate the face from the background. Finally, people differ in skin color and in the shapes of their eyes, noses, lips, and jawlines. These inter-subject variations make it hard to detect the face and its expression.

Three kinds of methods are used to identify a person's feelings with a computer: computer vision, machine learning, and signal processing. The facial action coding system (FACS) [4], proposed by Paul Ekman [1], has been employed by many researchers, for example, to predict depression, anxiety, and stress levels. There are two main approaches to expression analysis. The first requires a full frontal face photo to be selected before categorization can be performed. The second partitions the face image into sub-segments and then processes those sub-segments. The techniques used to determine a person's expression broadly follow a three-step process: face tracking, feature extraction, and classification. Face detection locates a face in a frame and is viewed as the preprocessing step in emotion detection [5,6].

The ability of computers to recognize human actions is one of the most important applications of computer vision. It can be used for a variety of purposes, such as monitoring children and the elderly, building sophisticated surveillance systems, and facilitating human–computer interaction. After the face has been detected, the next step is feature extraction, which gathers the main feature points of the face as a representation of its features. The main goal of feature extraction is to convert the important aspects of the data into numerical characteristics that a machine-learning algorithm can then use. The final phase is classification, which assigns images to informational categories by using a decision rule to partition the space of spectral or spatial features into different classes.
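The idea of a decision rule that partitions the feature space can be illustrated with one of the simplest classifiers, the nearest-centroid rule. Each class is represented by the mean of its training feature vectors, and a new vector is assigned to the class whose mean is closest. This is a toy sketch, not the classifier used in this paper. The two-dimensional feature values, the labels, and the function names are hypothetical.

```python
import math

def fit_centroids(features, labels):
    """Compute the mean feature vector (centroid) of each class."""
    groups = {}
    for x, y in zip(features, labels):
        groups.setdefault(y, []).append(x)
    return {
        label: [sum(col) / len(vectors) for col in zip(*vectors)]
        for label, vectors in groups.items()
    }

def classify(x, centroids):
    """Decision rule: assign x to the class with the nearest centroid (Euclidean)."""
    return min(centroids, key=lambda label: math.dist(x, centroids[label]))

# Hypothetical 2-D features, e.g. (lip-corner raise, eyebrow raise), scaled to [0, 1].
train = [[0.9, 0.2], [0.8, 0.3], [0.1, 0.9], [0.2, 0.8]]
labels = ["happy", "happy", "surprise", "surprise"]
centroids = fit_centroids(train, labels)
print(classify([0.7, 0.3], centroids))  # happy
```

The boundary between the two classes is the perpendicular bisector of the segment joining the centroids. Classifiers such as SVMs or CNNs learn more flexible boundaries, but they play the same role in the pipeline.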

There are four main techniques for detecting a face in a single image: knowledge-based, feature-based, template-based, and appearance-based methods. Some hybrid techniques are also used for emotion detection.

The knowledge-based method is a top-down approach. The face is located with the help of human-coded rules based on, for example, facial features, skin color, and template matching. These basic rules are easy to implement. For example, a face contains two eyes that are symmetric to each other, a nose, and a mouth [6]. Skin color is a useful cue because it does not change with position or occlusion. However, it varies from person to person and across regions. The main problem with this method is converting human knowledge into coded rules. If the rules are too strict, faces will not be detected; if they are too general, the rate of false detection will increase. Another problem is that this approach cannot detect faces in different positions or poses [7].

The feature-based method is a bottom-up approach [6]. It finds basic facial features in order to locate faces in various poses, viewpoints, or lighting conditions, and it is designed for face localization. Feature-based methods are subdivided into four kinds: facial-feature, texture-feature, skin-color-feature, and multiple-feature-based methods. Their problems are illumination, noise, and occlusion. These corrupt the features, making feature edges harder to detect or producing many spurious edges, which renders the algorithm inoperable [6].
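The skin-color rules used by knowledge-based methods can be made concrete with an explicit RGB rule of the kind commonly applied to daylight images. The thresholds below are illustrative assumptions, not values from this paper, and the function names are hypothetical.

```python
def is_skin(r, g, b):
    """Explicit RGB skin rule; thresholds are illustrative values for daylight images."""
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15  # enough colour spread (not grey)
        and abs(r - g) > 15                   # red clearly differs from green
        and r > g and r > b                   # red is the dominant channel
    )

def skin_fraction(pixels):
    """Fraction of (r, g, b) pixels that the rule classifies as skin."""
    if not pixels:
        return 0.0
    return sum(is_skin(*p) for p in pixels) / len(pixels)

print(is_skin(200, 140, 110))  # True
print(is_skin(100, 100, 100))  # False: grey pixel
```

Tightening any threshold lowers false detections but misses more faces, and loosening it does the opposite. This is the strict-versus-general trade-off described above, and it is why a single fixed rule struggles with the variation in skin color across people and regions.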

Although template-based approaches are simple to use, they do not capture the overall facial structure. In [8], the face in each video from the BioVid Emo database is detected, and spatial and temporal characteristics (points of interest) are extracted. These are used to distinguish among five emotional expressions: amusement, anger, disgust, fear, and sadness.

In the appearance-based method, templates are prepared from a number of training images that capture the various forms of facial appearance. In the template-based method, the template is designed by experts. The appearance-based method instead adopts a learning approach that analyzes images to build templates, which then serve as the models for face detection. Multiple techniques and analyses are performed to find different characteristics of images, and these procedures are designed primarily to separate face frames from non-face frames [6].

The most popular face detection algorithm today is the Viola–Jones method, which consists of four stages:

Haar-like features;

Integral image;

AdaBoost algorithm;

Cascade of classifiers.

Alternatively, a pre-trained cascade can be used directly to detect faces or other objects within an image.
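The first two Viola–Jones stages listed above can be sketched in pure Python. The integral image lets the sum over any rectangle be computed with four lookups. A two-rectangle Haar-like feature is then the difference between two such sums. AdaBoost feature selection and the cascade are not shown. In practice, OpenCV's `cv2.CascadeClassifier` loads a pre-trained cascade (for example, `haarcascade_frontalface_default.xml`), and its `detectMultiScale` method returns face boxes. The helper names below are hypothetical.

```python
def integral_image(img):
    """ii[y][x] = sum of img rows < y and columns < x (zero-padded first row/column)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii

def rect_sum(ii, x, y, w, h):
    """Sum of the w × h rectangle with top-left corner (x, y): four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]

def haar_two_rect_vertical(ii, x, y, w, h):
    """Two-rectangle Haar-like feature: upper half sum minus lower half sum.

    A large magnitude indicates a strong horizontal intensity edge, such as
    the darker eye region above the brighter cheeks.
    """
    half = h // 2
    return rect_sum(ii, x, y, w, half) - rect_sum(ii, x, y + half, w, half)

ii = integral_image([[1, 2], [3, 4]])
print(rect_sum(ii, 0, 0, 2, 2))  # 10
```

Because every rectangle sum costs the same four lookups regardless of its size, thousands of candidate features can be evaluated at every window position. This constant cost is what makes the cascade fast enough for real-time detection.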

With advances in technology, the scope of human–computer interaction should be widened. Challenges such as occlusion, illumination variations, and changes in physical appearance should be taken into account in order to develop novel and practical solutions that detect emotions with good accuracy. Therefore, this paper proposes a new convolutional neural network (CNN) architecture for facial emotion recognition. In the proposed framework, face detection uses the Viola–Jones cascade, followed by face cropping and image resizing. The proposed model consists of five convolutional layers, one fully connected layer, and a SoftMax layer. Furthermore, feature map enhancement is employed to achieve higher precision and to detect more emotions. Several experiments are performed to detect anger, disgust, fear, happiness, neutral, sadness, and surprise, and performance is compared with two test models selected for the experiments.

The rest of the paper is organized as follows. Section 2 presents related work. It compares recent studies on the basis of face detection, preprocessing, feature extraction, classification, database, number of emotions, and accuracy, and it establishes the motivation of our research. Section 3 discusses the proposed framework in detail. Section 4 presents the implementation of the proposed framework and the results. Finally, Section 5 presents the conclusions and future directions of this research.

2. Related Work

Prior research placed a strong emphasis on the projection of facial expressions, highlighting and identifying the most prevalent emotional traits. Over time, however, human–computer interaction and artificial intelligence increased the importance of emotion recognition. Researchers have suggested using local binary pattern histograms and Haar-like features with a cascade classifier to recognize a person's face in real-time videos [9], but that work did not determine emotions.

Horizontal projection can be used to locate the mouth within the detected face region, and vertical projection can then find the boundaries of the lips. The Viola–Jones algorithm is used for face detection under a variety of conditions, including different camera distances, background colors, and object orientations. Accordingly, [10,11] propose multi-level systems that include feature extraction, feature reduction, and face detection using the Viola–Jones algorithm. Feature extraction determines and extracts the region of interest (ROI), that is, the feature-bearing portion of the image. This stage is the most crucial one, yet much of its technical detail is often overlooked. The method chosen at this stage determines the efficiency of the system [12].

Many combinations of feature extraction and classification methods are used. Feature extraction can be divided into two groups: learned and pre-designed [12]. Pre-designed features are handcrafted, whereas learned features are extracted automatically. Pre-designed features are further divided into appearance-based features and geometric features. A combination of both, called the hybrid technique, is also frequently used [13,14,15].

The most common facial feature extraction techniques are local binary patterns (LBP), Gabor features, and principal component analysis (PCA), although PCA is mostly used for dimensionality reduction. Landmarks and facial points are used for face localization. They are used alone or combined with Gabor, LBP, or histogram of oriented gradients (HOG) features to extract more accurate features [13]. Classification is the final phase of expression analysis, in which computational methods are used to make accurate predictions. An expression can be classified directly or by first recognizing certain action units. The study in [14] employs a support vector machine (SVM) in an e-learning system to identify emotions. The achieved accuracy ranges from 89% to 100%, depending on the dataset used for testing.
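The basic LBP operator compares each pixel with its 8 neighbours and packs the comparison results into an 8-bit code. A histogram of the codes then serves as the texture descriptor. This minimal sketch implements the basic 3 × 3 variant. It assumes a clockwise bit order starting at the top-left neighbour; any fixed order works. The pyramid and uniform-pattern variants used in the cited works are not shown, and the function names are hypothetical.

```python
# Offsets (dy, dx) of the 8 neighbours, clockwise from the top-left pixel.
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_code(img, y, x):
    """8-bit LBP code: bit i is 1 if neighbour i is >= the centre pixel."""
    center = img[y][x]
    code = 0
    for bit, (dy, dx) in enumerate(NEIGHBOURS):
        if img[y + dy][x + dx] >= center:
            code |= 1 << bit
    return code

def lbp_histogram(img):
    """256-bin histogram of LBP codes over all interior pixels of a 2-D image."""
    h, w = len(img), len(img[0])
    hist = [0] * 256
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            hist[lbp_code(img, y, x)] += 1
    return hist

patch = [[9, 0, 0],
         [0, 5, 0],
         [0, 0, 0]]
print(lbp_code(patch, 1, 1))  # 1: only the top-left neighbour is >= 5
```

For faces, the image is usually divided into a grid of cells. A histogram is computed per cell, and the cell histograms are concatenated so that the descriptor keeps some spatial layout.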

Test samples are used to examine classifier performance [15]. In [15], during the training phase, the machine-learning algorithm builds a model of the input and creates a hypothesis function for data prediction. One way for machines to recognize facial expressions is to examine the changes in the face when the expressions are shown. The optical flow technique is used to obtain the deformation or vibration vectors that facial expressions cause in the face. The analysis is then performed on the gathered vectors, whose positions and orientations are exploited for automatic facial expression recognition using a variety of data-mining techniques.

Ref. [14] presents a robust approach for facial expression classification using pyramid HOG and LBP features. Hybrid features are extracted from patches of the face that change significantly when the expression changes. Experimental results using SVM show a 94.63% expression recognition rate on the CK+ dataset. In [16], SVM-based active learning improves the robustness and accuracy of recognizing female expressions more than male expressions. Surprise and fear, on the other hand, have lower recognition rates.

Recent academic research on emotion recognition typically uses convolutional neural networks (CNNs) [17,18]. CNNs have proved promising for face detection, feature extraction, and classification. They automatically extract features and classify them, eliminating the need for handcrafted methods. The four fundamental components of a CNN are convolutional layers, activation functions, subsampling (pooling), and dense (fully connected) layers. However, a CNN model based on pre-trained deep learning misclassified several face images containing occlusions.
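The activation, flattening, and dense components listed above can be illustrated with a minimal pure-Python sketch; convolution and pooling are left out for brevity. The sketch also shows why ReLU and SoftMax play different roles. ReLU acts element-wise on hidden activations, whereas SoftMax turns the final layer's scores into class probabilities that sum to 1. The function names and example values are hypothetical and are not taken from any model in this paper.

```python
import math

def relu(xs):
    """Element-wise ReLU: negative activations become 0."""
    return [max(0.0, v) for v in xs]

def softmax(xs):
    """Convert scores to probabilities; the max is subtracted for numerical stability."""
    m = max(xs)
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]

def flatten(feature_maps):
    """Flatten channels × rows × cols feature maps into one vector."""
    return [v for fmap in feature_maps for row in fmap for v in row]

def dense(x, weights, biases):
    """Fully connected layer: out[j] = sum_i x[i] * weights[j][i] + biases[j]."""
    return [sum(xi * wi for xi, wi in zip(x, w_row)) + b
            for w_row, b in zip(weights, biases)]

print(relu([-1.0, 2.0]))                                     # [0.0, 2.0]
print(dense([1.0, 2.0], [[1.0, 0.0], [0.5, 0.5]], [0.0, 1.0]))  # [1.0, 2.5]
```

In a classifier, the last dense layer produces one score per emotion, and `softmax` is applied only to those final scores.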

In [19], the authors used a CNN model to extract features from depth information. The model is based on two layers. The first has 6 feature maps with a kernel size of 5, followed by max-pooling. The second has 6 feature maps with a kernel size of 5 and max-pooling of size 2, followed by 12 feature maps and finally a Softmax layer. The approach is illumination-invariant and obtains an accuracy of 87.98% with 1000 epochs. In [20], the authors present a fusion of two models for emotion recognition. A multi-signal convolutional neural network (MSCNN) extracts static spatial features, and a part-based hierarchical recurrent neural network (PHRNN) extracts dynamic temporal features; the two are then combined. The PHRNN model has 12 layers, whereas the MSCNN model has 6.

The study in [21] presents a FER model based on a CNN with 3 convolutional layers using 5 × 5 filters. The authors used a dropout layer for regularization. The model obtains an emotion recognition accuracy of 96% in 3 min. In [22], two convolutional layers are used, the first with a filter size of 5 and the second with a filter size of 7. The max-pooling layer has a 2 × 2 kernel to reduce the size, and the dense layer has 256 hidden neurons. The learning rate is 0.01, and training was performed for 2000 epochs. The study obtained promising results but ignored occlusions and illumination variations. The paper [23] proposes a CNN architecture for emotion recognition using the FER2013 database, which includes 32,298 photos of 90 × 50 pixels. To improve performance and generalization, the authors used regularization techniques such as dropout after each dense layer, with a batch size of 128. An accuracy of 74% was attained with 40 training epochs.

Table 1 presents a comparative review of the discussed research works. It describes the process used to detect the face, the preprocessing involved in each approach, the feature extraction approach, the classifiers used for emotion classification, and the reported accuracy. The most common classifiers used for emotion detection are decision trees [13,14,15], SVM [24,25,26,27,28,29], and neural networks [23,30]. SVM is memory-efficient and effective in high-dimensional spaces. However, its performance suffers with larger datasets, which need longer training times, and with noisy data. SVM also does not directly provide probability estimates; these have to be computed separately.

The objective of this review is to examine research trends over the past years and see how emotions are detected from facial expressions. The reviewed research mainly detects six to seven basic emotions. Predominantly, the Viola–Jones method is adopted for face detection in a frame, and landmarks or LBP descriptors are then used for feature extraction. PCA is applied for dimensionality reduction, and SVM is used for emotion classification. The average accuracy of the reviewed works (15 different methods) is 81.77%. The RBF error reduction method was observed to be the most efficient. Most of the work focused on feature extraction, but research is moving toward CNNs. CNNs are more efficient and do not need handcrafted methods to improve performance; they detect features automatically but require larger datasets for training.
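PCA, mentioned above as the usual dimensionality-reduction step, can be computed from the singular value decomposition (SVD) of the mean-centred feature matrix. The following NumPy sketch is illustrative only. Using SVD rather than an explicit eigen-decomposition of the covariance matrix is our choice, and the function names and toy data are hypothetical.

```python
import numpy as np

def pca_fit(X, n_components):
    """Return the feature mean and the top principal directions of X (samples × features)."""
    mean = X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by decreasing variance.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components]

def pca_transform(X, mean, components):
    """Project mean-centred samples onto the retained principal directions."""
    return (X - mean) @ components.T

# Toy data: all variance lies along the diagonal direction (1, 1) / sqrt(2).
X = np.array([[1.0, 1.0], [2.0, 2.0], [3.0, 3.0], [4.0, 4.0]])
mean, components = pca_fit(X, n_components=1)
Z = pca_transform(X, mean, components)  # shape (4, 1)
```

In a handcrafted pipeline, each row of `X` would be one image's feature vector, for example concatenated LBP histograms. The reduced vectors `Z` would then be passed to the classifier, such as an SVM. The sign of each principal direction is arbitrary.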

For the current study, we use the extended Cohn–Kanade (CK+) [31] and Japanese Female Facial Expression (JAFFE) [32] datasets, which are frequently used in the literature. Our main focus is to mitigate the effects of occlusions in images. We focus on the human emotions of happiness, sadness, surprise, fear, disgust, anger, and neutral.

3. Materials and Methods

In this section, the proposed approach is presented. Its aim is to address challenges such as occlusions, illumination variations, and changes in the physical appearance of mute persons' images, and to mitigate their effects. The model is designed to identify the basic human emotions of happiness, sadness, surprise, fear, disgust, anger, and neutral. The proposed CNN has 6 layers: 5 convolutional layers, each followed by a max-pooling layer, and one dense layer with dropout.

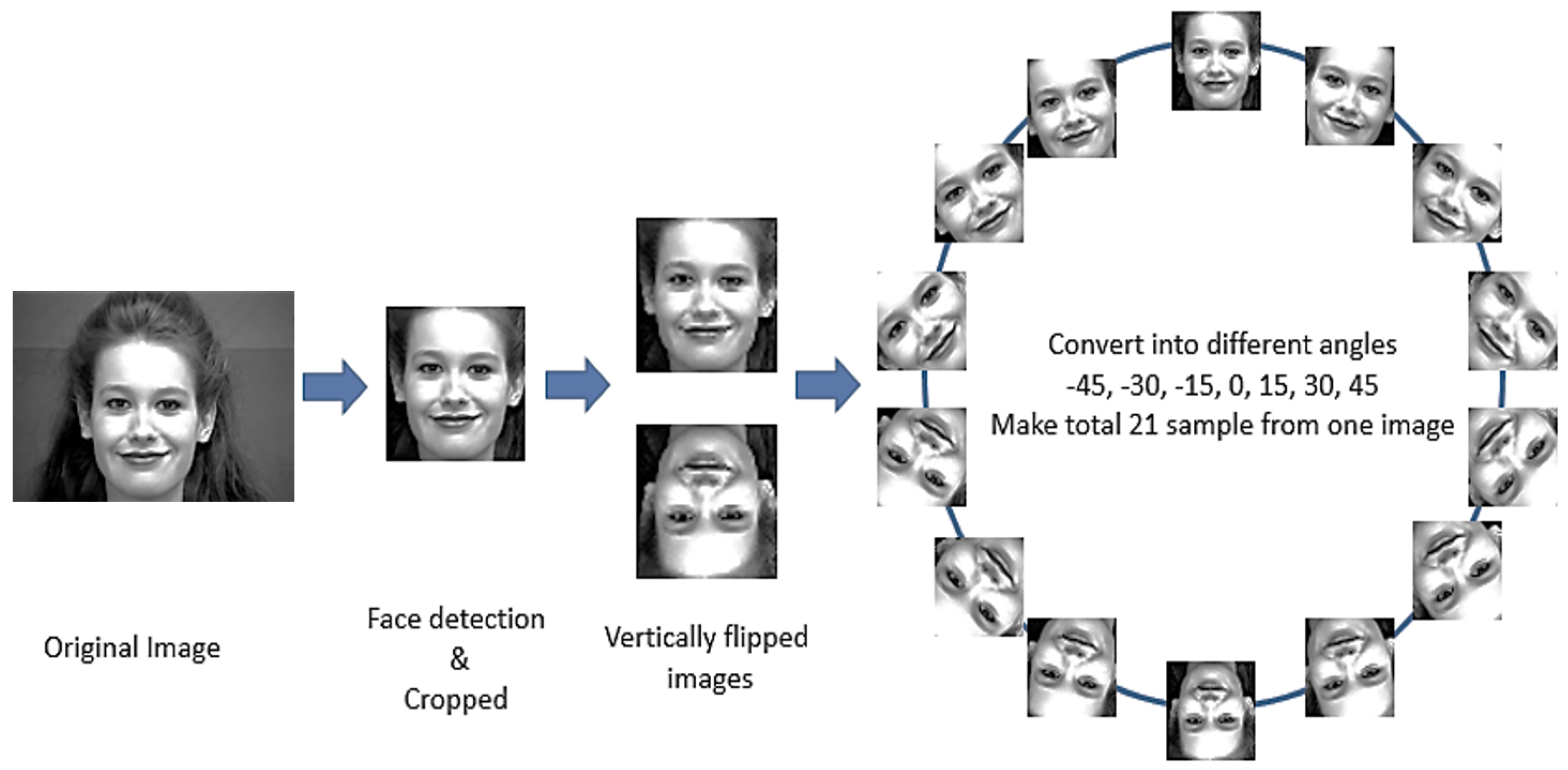

Figure 1 shows the flow of the proposed model. In the first step, the obtained image set is preprocessed. In the second step, faces are detected and cropped. In the third step, each image is flipped vertically to produce 2 images. In the final step, each of these is rotated to 7 angles, producing a total of 14 images per original image.

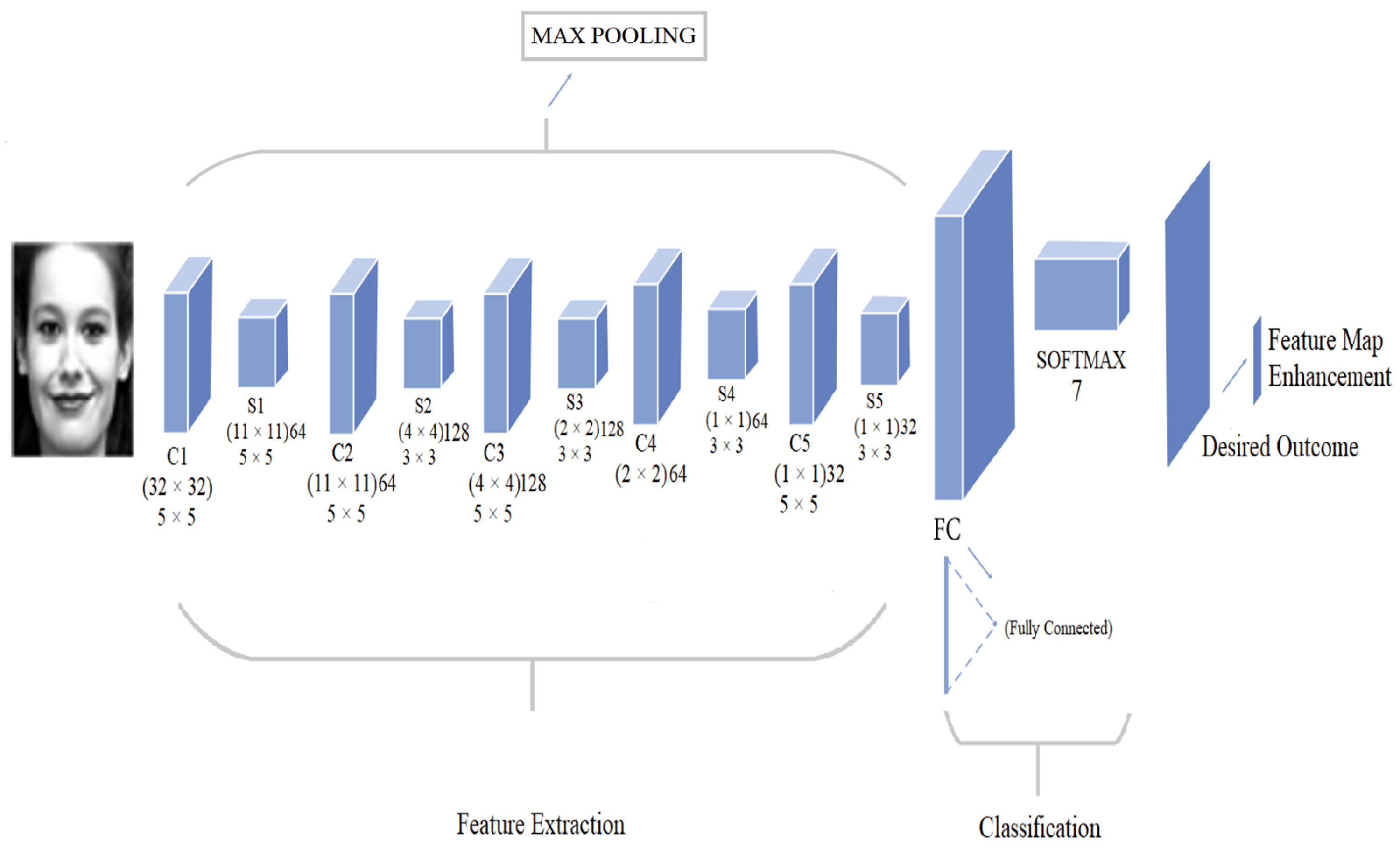

In the proposed framework, the first convolutional layer takes a 32 × 32 grayscale image (one channel) as input. It uses 5 × 5 filters, processing the image in small 5 × 5 subsections, and outputs 32 feature maps. To reduce the amount of data, max-pooling is then applied, keeping the maximum value in each region, as shown in Figure 2. After max-pooling, the spatial size becomes 11 × 11, while the number of feature maps (32) stays the same as the output of the convolutional layer.

In the second layer, the input size is 11 × 11, and the number of feature maps increases from 32 to 64 with the same filter size. After max-pooling, the volume becomes [4 × 4 × 64]. The third convolutional layer produces a volume of [4 × 4 × 128], which max-pooling reduces to [2 × 2 × 128]. From this point on, the number of feature maps decreases. The fourth convolutional layer produces 64 feature maps, and max-pooling reduces the volume to [1 × 1 × 64]. The fifth convolutional layer produces 32 feature maps, giving a volume of [1 × 1 × 32]. The output is then flattened into a vector and passed to a dense (fully connected) layer with 1024 hidden neurons. The convolutional and dense layers use the ReLU activation function, while the final output layer uses the SoftMax function.
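The reported volumes can be checked with a short calculation. The text does not state the pooling window, stride, or padding. The sketch below therefore assumes two things: 'same'-padded, stride-1 convolutions, which leave the spatial size unchanged, and max-pooling with a 3 × 3 window, stride 3, and 'same' padding, which gives an output size of ⌈n / 3⌉. These assumptions are ours, chosen because they reproduce every size listed above: 32 → 11 → 4 → 2 → 1 → 1. The function names are hypothetical.

```python
import math

def pooled_size(n, k=3):
    """Output size of max-pooling with window k, stride k and 'same' padding."""
    return math.ceil(n / k)

def trace_shapes(input_size=32, feature_maps=(32, 64, 128, 64, 32), pool_k=3):
    """Return the (height, width, channels) volume after each conv + max-pool block.

    Assumes every convolution uses 'same' padding and stride 1, so only the
    pooling step changes the spatial size; the channel count equals the
    number of feature maps produced by that convolution.
    """
    size, shapes = input_size, []
    for n_maps in feature_maps:
        size = pooled_size(size, pool_k)
        shapes.append((size, size, n_maps))
    return shapes

shapes = trace_shapes()
flat_length = math.prod(shapes[-1])  # length of the vector fed to the dense layer
print(shapes)       # [(11, 11, 32), (4, 4, 64), (2, 2, 128), (1, 1, 64), (1, 1, 32)]
print(flat_length)  # 32
```

Under these assumptions, the flattened input to the 1024-neuron dense layer has only 32 values, because the spatial size has already collapsed to 1 × 1 by the fourth block.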

Machine-learning-based models have two phases: training and testing (execution). In the training phase, all the data, along with their labels, are provided to the classifier so that it learns the pattern between data and labels and produces a suitable mapping function. In the testing phase, the trained model is validated. Initially, the image is preprocessed, and features are extracted. The CNN is then applied to find the pattern, and the trained model is saved. In the next phase, the trained model and its weights are loaded to predict the labels of the test samples.

3.1. Dataset Description

This study uses the publicly available CK+ and JAFFE datasets, which have been widely used in the existing literature.

Table 2 shows the number of samples for each dataset.

CK+ has 123 subjects who posed eight emotions: anger, contempt, disgust, fear, happiness, neutral, sadness, and surprise. Each emotion is recorded as a sequence of images that starts with a neutral face and ends with the extreme expression. Images are therefore picked manually, neutral images are separated from the original dataset, and the remaining images are sorted into their respective folders. This yields 9591 images in total, including duplicates; after the duplicates are removed, the set is reduced to 6362 images. JAFFE is based on 10 female subjects with 213 images in total, which are only sorted into their respective folders. The number of images for each emotion is given in Table 3. The first column lists the emotions. The second column has two sub-columns showing the number of original CK+ images per emotion and the number of CK+ images after duplicates are removed. The third column gives the JAFFE counts, and the last column presents the total number of images for each emotion. The total number of images after removing duplicates is 6575.
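The text does not say how duplicates were identified. A minimal sketch, assuming that "duplicate" means a byte-identical image file, hashes each file with SHA-256 and keeps only the first file seen for each hash. Visually identical images saved with different encodings would need a perceptual hash instead. The function names are hypothetical.

```python
import hashlib

def file_digest(path, chunk_size=1 << 16):
    """SHA-256 hex digest of a file's bytes, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def remove_exact_duplicates(paths):
    """Keep the first path for each distinct file content; later copies are dropped."""
    seen, kept = set(), []
    for path in paths:
        d = file_digest(path)
        if d not in seen:
            seen.add(d)
            kept.append(path)
    return kept
```

As a consistency check on the counts above, 6362 CK+ images plus 213 JAFFE images gives the reported total of 6575.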

3.2. Preprocessing Dataset

In the preprocessing phase, each image is converted into a format appropriate for the CNN model. Preprocessing is divided into four main steps: detecting the face, cropping it, flipping it vertically, and generating samples at different angles. OpenCV [33] is used for preprocessing.

First, the image is converted to grayscale, which turns the 3-channel RGB image into a single channel. To detect the face, the Viola–Jones method [34] with Haar-like features is applied using the pre-trained frontal-face cascade files provided by OpenCV, which return the face area. The face area is cropped and resized to 32 × 32, and a vertically flipped copy is made, which doubles the number of images. Each image is then rotated to 7 different angles (−45°, −30°, −15°, 0°, 15°, 30°, 45°). This generates a large amount of image data and provides more samples for training and testing. It also exposes the model to faces at different angles. The process is applied to both the training set and the testing set, with the aim of making the model more robust and precise in detecting emotions from different angles.
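The crop, resize, flip, and rotate steps can be sketched with NumPy alone so that the example is self-contained. With OpenCV, the face box would come from `cv2.CascadeClassifier(cv2.data.haarcascades + "haarcascade_frontalface_default.xml").detectMultiScale(gray)`, which returns `(x, y, w, h)` rectangles. The remaining steps would typically use `cv2.resize`, `cv2.flip`, `cv2.getRotationMatrix2D`, and `cv2.warpAffine`. Here the face box is assumed to be given already. "Flipped vertically" is interpreted as a left-right mirror (a flip about the vertical axis), and nearest-neighbour sampling is used throughout. Both choices are our assumptions, and the function names are hypothetical.

```python
import numpy as np

ANGLES = (-45, -30, -15, 0, 15, 30, 45)  # rotation angles in degrees, as in the text

def resize_nearest(img, size=32):
    """Nearest-neighbour resize of a 2-D array to size × size."""
    h, w = img.shape
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows[:, None], cols[None, :]]

def rotate_nearest(img, angle_deg):
    """Rotate a 2-D array about its centre by angle_deg (nearest neighbour).

    Each output pixel is mapped back to a source pixel; pixels whose source
    falls outside the image are set to 0 (black corners).
    """
    h, w = img.shape
    t = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.indices((h, w))
    src_x = np.rint(np.cos(t) * (xs - cx) + np.sin(t) * (ys - cy) + cx).astype(int)
    src_y = np.rint(-np.sin(t) * (xs - cx) + np.cos(t) * (ys - cy) + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out

def augment_face(gray, box, size=32, angles=ANGLES):
    """Crop the face box, resize, add a mirrored copy, and rotate both to every angle."""
    x, y, w, h = box  # (x, y, width, height), the format detectMultiScale returns
    face = resize_nearest(gray[y:y + h, x:x + w], size)
    mirrored = face[:, ::-1]  # flip about the vertical axis (left-right mirror)
    return [rotate_nearest(img, a) for img in (face, mirrored) for a in angles]
```

Each detected face yields 2 × 7 = 14 images of 32 × 32 pixels. The first 7 are the original face at each angle and the last 7 are the mirrored copy.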

CNNs are well suited to pattern classification problems. A CNN is very similar to a conventional neural network, with the same neurons, activation functions, weights, and learning rates. The key difference is its structure: a CNN takes images as input and is specially designed to handle 2-dimensional data [35,38]. Every CNN model requires some hyperparameters to be set, such as the learning rate, regularization value, filter sizes, size of the feature maps, and number of hidden neurons. The performance of the CNN depends on these parameters and on the arrangement of its layers.

A convolutional layer is computed by sliding a window, called a filter, over the input image one pixel at a time (a stride of 1). At each position, the filter values are multiplied element-wise with the underlying pixels and summed. Each sum forms one element of the resulting matrix, which is called a feature map, convolved map, or activation map. The values of a feature map depend on the filter values, as different filters generate different feature maps. The filter values are learned, so only their initial values have to be set before training. Some parameters related to the convolutional layers are described next.
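The sliding-window computation described above can be written out directly. This pure-Python sketch uses a stride of 1 and no padding ('valid' convolution). Like CNN libraries, it does not flip the kernel, so it is strictly a cross-correlation. A non-overlapping max-pooling helper is included for the subsampling step. The filter values are fixed for illustration, whereas in a CNN they are learned. The function names are hypothetical.

```python
def conv2d_valid(image, kernel, stride=1):
    """Slide the kernel over the image; each output value is the sum of
    element-wise products between the kernel and the covered pixels."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])
    out = []
    for y in range(0, h - kh + 1, stride):
        row = []
        for x in range(0, w - kw + 1, stride):
            s = 0
            for i in range(kh):
                for j in range(kw):
                    s += image[y + i][x + j] * kernel[i][j]
            row.append(s)
        out.append(row)
    return out

def max_pool(fmap, k=2):
    """Non-overlapping k × k max-pooling (stride k); incomplete edge windows are dropped."""
    return [[max(fmap[y + i][x + j] for i in range(k) for j in range(k))
             for x in range(0, len(fmap[0]) - k + 1, k)]
            for y in range(0, len(fmap) - k + 1, k)]

image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
fmap = conv2d_valid(image, [[1, 0], [0, 1]])  # [[6, 8], [12, 14]]
print(max_pool(fmap))                          # [[14]]
```

Without padding, a k × k filter shrinks each spatial dimension by k − 1. Frameworks therefore offer 'same' padding when the feature map should keep the input size.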

Hyperparameters are values that must be set before training. In the current study, they include the batch size, learning rate, number of hidden neurons, input shape, and output size of each layer. Weights and biases, by contrast, are only initialized before training and are then learned. Among the hyperparameters, the learning rate and the number of hidden neurons are crucial. The batch size is set to 'None' so that it is allocated dynamically. For regularization, dropout with a value of 0.8 is applied only once, after the fully connected layer, which has 1024 hidden neurons. The learning rate of the proposed model is set to 0.0001, as listed in Table 4.

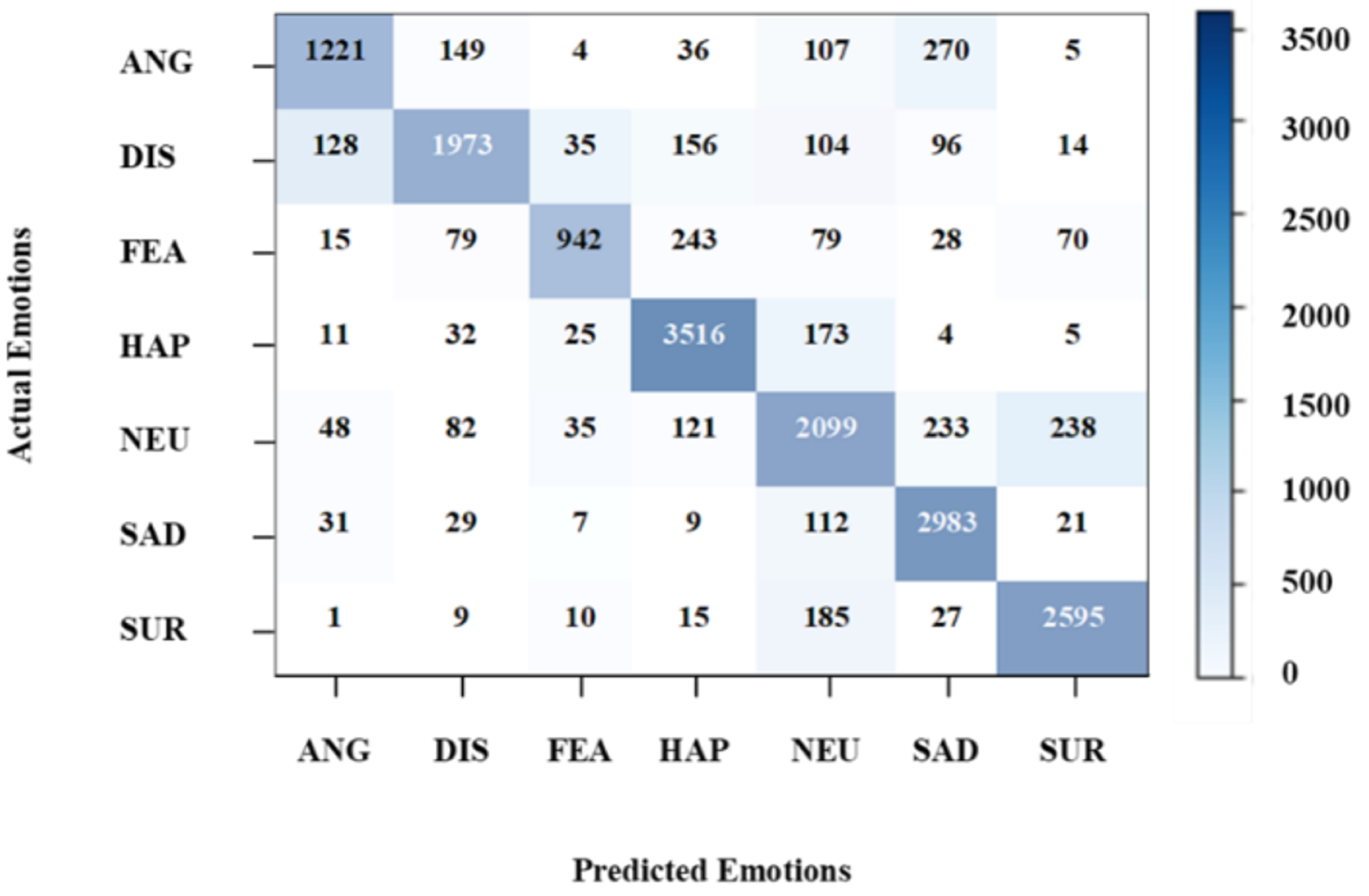

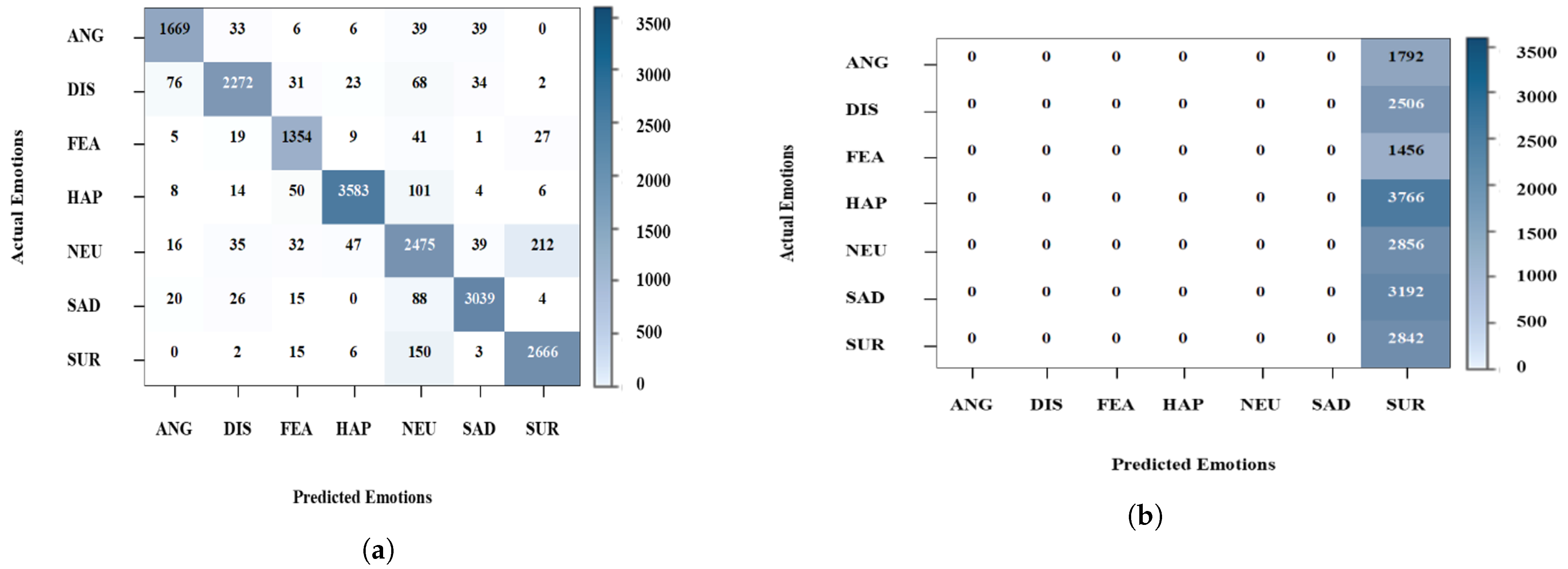

3.3. Evaluation and Analysis

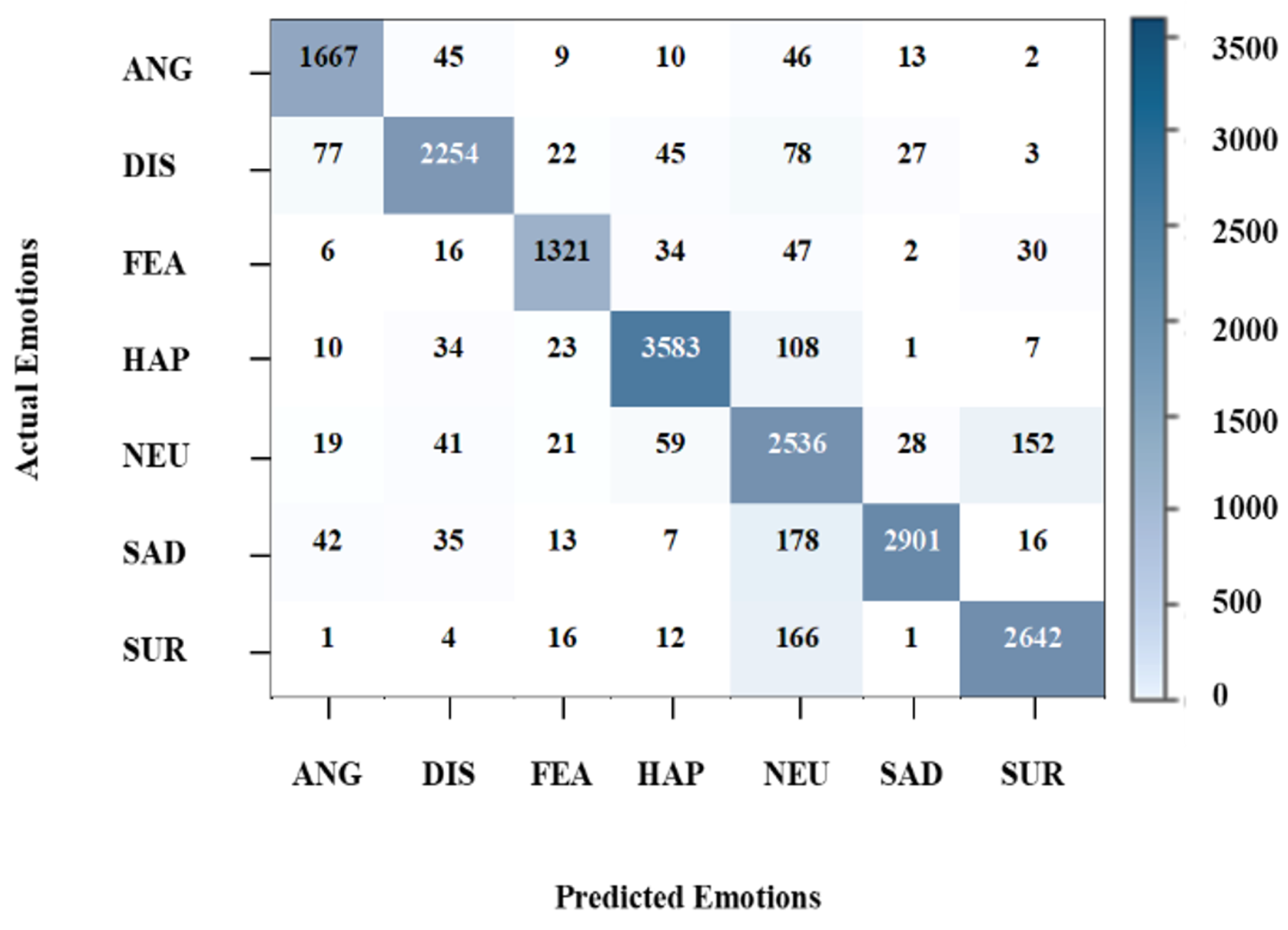

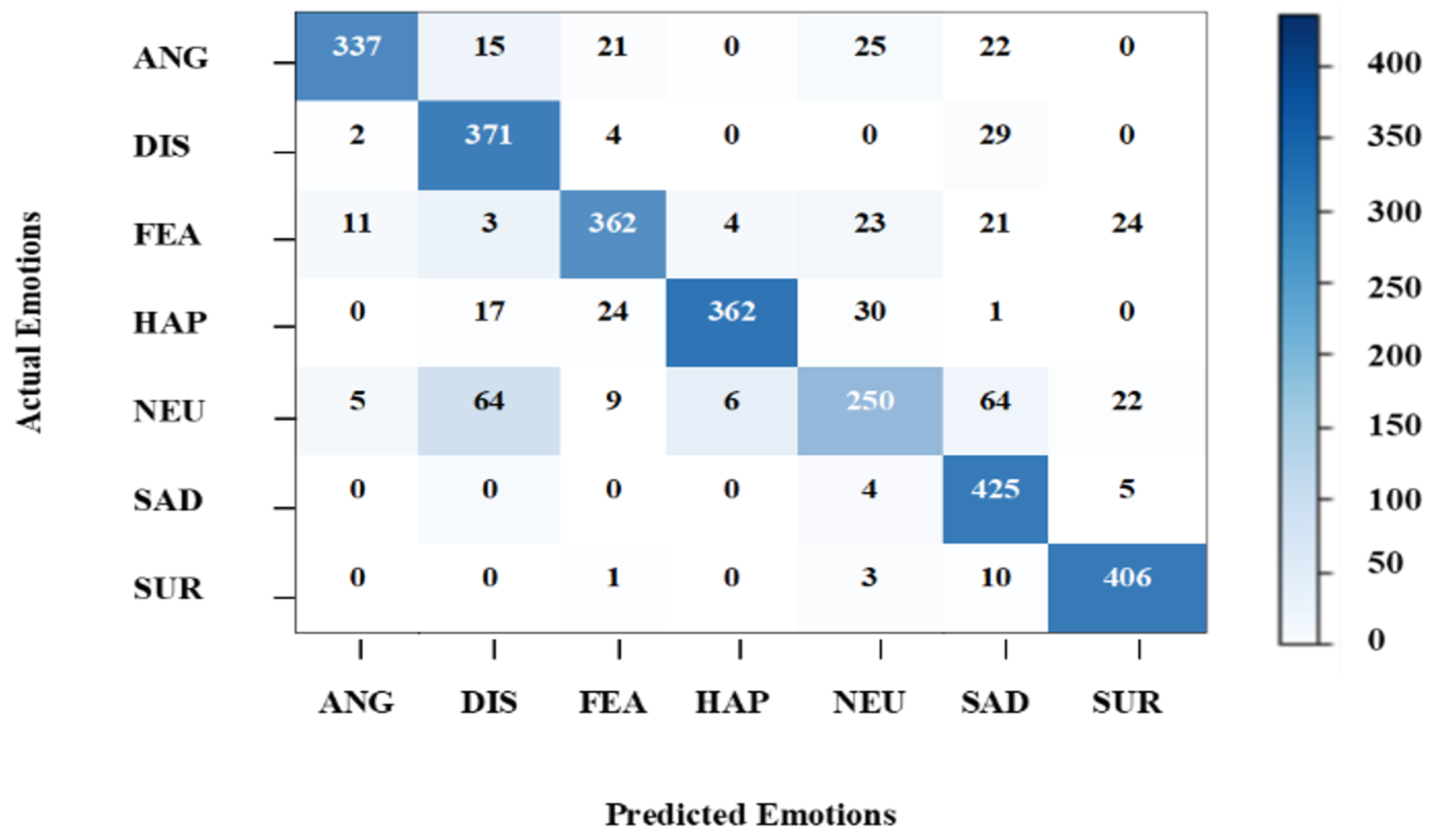

The performance of the proposed model is evaluated on the testing and validation sets in terms of accuracy. Accuracy is calculated from the true positive (TP), true negative (TN), false positive (FP), and false negative (FN) values in the confusion matrix.
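The text names TP, TN, FP, and FN but does not give the formula. For a binary problem, accuracy = (TP + TN) / (TP + TN + FP + FN). With seven emotion classes, a common convention, assumed here, is to report overall accuracy as the sum of the confusion-matrix diagonal divided by the total number of samples. TP, FP, FN, and TN are then defined per class in a one-vs-rest manner. The confusion matrix and function names in this sketch are hypothetical.

```python
def overall_accuracy(cm):
    """Correct predictions (the diagonal) divided by all predictions; cm[true][pred]."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / sum(map(sum, cm))

def one_vs_rest_counts(cm, k):
    """TP, TN, FP, FN for class k, treating every other class as 'negative'."""
    total = sum(map(sum, cm))
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                 # class-k samples predicted as another class
    fp = sum(row[k] for row in cm) - tp  # other samples predicted as class k
    tn = total - tp - fn - fp
    return tp, tn, fp, fn

def binary_accuracy(tp, tn, fp, fn):
    return (tp + tn) / (tp + tn + fp + fn)

# Hypothetical 3-class confusion matrix (rows: true class, columns: predicted class).
cm = [[5, 1, 0],
      [2, 3, 1],
      [0, 0, 8]]
print(overall_accuracy(cm))                         # 0.8
print(binary_accuracy(*one_vs_rest_counts(cm, 0)))  # 0.85
```

The per-class one-vs-rest accuracy is usually higher than the overall multiclass accuracy because true negatives dominate. When comparing models, it therefore matters which definition is reported.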

3.4. Performance Comparison

Two recently published models are chosen for comparison with the proposed model. The first, called Test Model 1 [21], consists of 3 convolutional layers. The first two layers have 32 feature maps each, and the third has 64. It has two fully connected layers, each with 1000 hidden neurons, and max-pooling with a 3 × 3 kernel is performed after every convolutional layer. The dropout rate is 0.5. Because the learning rate is not mentioned in the paper, we use our own learning rate of 1 × 10⁻⁴ (0.0001). The original paper reports an accuracy of 96% for this model. Test Model 2 [22] is based on two convolutional layers with 32 and 64 feature maps, respectively, and one fully connected layer with 256 hidden neurons. Max-pooling with a 2 × 2 kernel is performed after every convolutional layer. The parameters of Test Model 1 and Test Model 2 are given in Table 5.

5. Conclusions and Future Work

This study presents a new convolutional neural network architecture for facial expression recognition. By changing the arrangement of the layers and using a learning rate of 1 × 10⁻⁴, a substantial improvement in the precision of the model has been achieved. Extensive experiments are performed on the CK+ and JAFFE datasets using two strategies. The first combines CK+ and JAFFE into one dataset, while the second uses CK+ for training and validation and JAFFE for testing. Performance is evaluated at different numbers of epochs and with other hyperparameter settings. Experimental results suggest that the proposed model outperforms both models used for comparison, achieving average accuracy scores of 92.66% and 94.94% for Experiments 1 and 2, respectively. To deal with occlusion and posture changes, images are generated at different angles, and the results indicate that the proposed model is able to detect emotions even at 45°. Despite these results, the proposed model is limited in that it has not been applied to dark-skinned faces or dark images.

In the future, we intend to build an application based on the proposed model to detect emotions in patients with autism spectrum disorder, who have difficulty expressing emotions and interacting socially. Such an application could help them communicate with others and support diagnostic and therapeutic services. It would scan the person and convey their emotions to other people. It could be used widely by medical practitioners, therapists, and psychologists who work primarily with people with mental illnesses, developmental disabilities, and neurological disorders, providing a valuable service to society.