Abstract

Microservice architecture is the latest trend in software systems development and transformation. In microservice systems, databases are deployed in their corresponding services. To better optimize runtime deployment and improve system stability, system administrators need to know the contributions of databases in the system. Given the high dynamism and complexity of microservice systems, distributed tracing can be introduced to observe the behavior of business scenarios on databases. However, it is challenging to evaluate the database contribution by combining the importance weight of business scenarios with their behaviors on databases. To solve this problem, we propose a business-scenario-oriented database contribution assessment approach (DBCAMS) via distributed tracing, which consists of three steps: (1) determining the importance weight of business scenarios in the microservice system by the analytic hierarchy process (AHP); (2) reproducing business scenarios and aggregating the same operations on the same database via distributed tracing; (3) calculating database contribution by formalizing the task as a nonlinear programming problem based on the defined operators and solving it. To the best of our knowledge, our work is the first to study this issue. The results of a series of experiments on two open-source benchmark microservice systems show the effectiveness and rationality of our proposed method.

1. Introduction

Microservice architecture [1] is an architectural style and approach that develops a single large and complex application as a series of small services, each running in its own process and communicating with lightweight mechanisms such as HTTP APIs. Application systems based on microservice architecture (referred to as microservice systems hereafter) have the advantages of independent development, easy continuous delivery, maintenance, scalability, and autonomy compared with monolithic applications [1,2,3,4]. Therefore, in recent years, microservice architecture has become the mainstream for cloud-native application systems, and more and more companies and organizations have chosen to transform their application systems from traditional monolithic architecture to microservice architecture [5,6].

However, software systems adopting microservice architecture have inherent problems, i.e., a highly dynamic and complex runtime environment, which makes them more difficult to understand, diagnose, and debug than monolithic software [3]. Specifically, microservice systems usually contain many business scenarios, and the databases that support these business scenarios are deployed in different corresponding services. Each business scenario contains a series of business requests, and the execution of a request usually involves a complex service call chain with a large number of service interactions [7], where each service in the call chain records its own logs. In addition, asynchronous or multithreaded calls are also widespread in microservice systems. These factors make it hard to obtain the support information of the databases for business scenarios by analyzing the log sequence, as is done for monolithic applications [8,9]. However, when running and maintaining a microservice system, the support information of databases is important for operation engineers and developers.

We define the support of databases to the business scenarios as the contribution of databases, and the task of this paper is to study how to assess the contributions of business-related databases to upper-level business scenarios in microservice systems. As far as we know, there is little previous research on assessing database contribution in microservice systems, but it is meaningful and valuable to study this issue from the perspective of runtime deployment optimization and system stability. For example, when cluster resources are relatively sufficient, appropriately increasing the number of instances of the services that host databases with high contribution can reduce the average response time of businesses to a certain extent [10]. In addition, multiservice hot standby can be applied to the services that host databases with high contribution to improve the stability of the entire application [11].

To evaluate the contributions of databases to business scenarios in the microservice system, given the dynamic and complex features of the microservice system mentioned above, distributed tracing [12] can be introduced to observe the invocations between the services, which include behavior information on the databases (tables). At the same time, business scenarios in a system always have different importance weights [13] (for example, for an online shopping store, purchasing goods is more important than viewing historical orders). The challenge of this study is determining how to consider both the importance weight of business scenarios and their operations on databases in assessing database contribution.

In this paper, we propose a business-scenario-oriented database contribution assessment approach via distributed tracing for microservice systems. First, the importance weight of the main business scenarios of the microservice system is determined by the analytic hierarchy process (AHP) [14]. Second, we reproduce the business scenarios of the system. At the same time, based on the traces obtained by distributed tracing, for each business scenario, the numbers of records with the same operation on the same database are aggregated. Finally, we define operators for four basic database operations (creating, retrieving, updating and deleting) to formalize the task of database contribution assessment as a nonlinear programming problem. By solving this nonlinear programming problem, the contributions of databases in the microservice system can be gained. It should be noted that each database in the system could contain one or more tables, and the granularity of our method is the database. Our main contributions are summarized as follows:

- To the best of our knowledge, this paper is the first research to assess the contributions of the databases to business scenarios for microservice systems based on the information observed by distributed tracing.

- The method proposed in this paper considers the importance weight of business scenarios and defines operators for the four basic database operations (creating, retrieving, updating, and deleting) so as to skillfully formulate this task as a nonlinear programming problem.

- The experimental results on two open-source benchmark microservice systems (i.e., Sock-Shop [15] and TrainTicket [16]) demonstrate the effectiveness and rationality of our proposed method.

The rest of this paper is structured as follows. Section 2 introduces the related work about distributed tracing and contribution assessment. Section 3 defines the task and details our proposed method. Section 4 introduces the experimental settings and analyzes the experimental results in detail. Section 5 discusses some issues about the method and experiments in this paper. Section 6 provides the conclusion and future work of our study.

2. Related Work

This work is based on the information obtained from distributed tracing to assess the contributions of databases in microservice systems. Hence, related work is divided into two categories: the background, basic concepts, and related research and applications of distributed tracing; and the related research of contribution assessment.

2.1. Distributed Tracing

Due to the complexity, dynamics, and uncertainty at runtime, it becomes more difficult to understand, diagnose, and debug the microservice system [4]. Therefore, observability [17] is regarded as the basic requirement of microservice systems. As a significant means to achieve observability, distributed tracing has been widely accepted and practiced in the industry. According to the industrial research in [12], distributed tracing is mainly used in timeline analysis, service dependency analysis, aggregation analysis, root cause analysis, and anomaly detection for microservice systems. Google introduced the first distributed tracing system, Dapper [18], in 2010, which led to the rapid development of distributed tracing. In recent years, many active distributed tracing projects in the open-source community, such as Pinpoint [19], Zipkin [20], Jaeger [21], and SkyWalking [22], have followed fundamental principles and working mechanisms of Dapper. At the same time, specifications such as OpenTracing [23], OpenCensus [24], and OpenTelemetry [25] have been formulated within the industry.

The data model adopted by the OpenTracing specification, shown in Figure 1, mainly involves two core concepts:

Figure 1. Traces and spans in distributed tracing.

- Span: A call between two services or threads is called a span. Several properties are recorded in the span, including the operation name, start and end timestamps, span tags, span logs, span context, etc.

- Trace: A directed acyclic graph composed of multiple spans is called a trace.

For a specific system, a trace represents a complete process of processing business requests; a span represents a specific call in this business request. A request generates a unique trace ID and propagates throughout the trace, i.e., spans belonging to the same trace have the same trace ID. At the same time, each span also has a unique span ID; except for the outermost span, each span has a span as its own parent and is marked with parent ID. In addition, span context is the information transmitted in the trace along with distributed transactions. It generally records two parts: one is the data necessary to implement the trace, such as the trace ID, the span ID, and the data required by downstream services; the other is the user-defined data recorded as key-value pairs for subsequent analysis.
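The relationship between trace IDs, span IDs, and parent IDs described above can be illustrated with a minimal Python sketch. It is not tied to any particular tracing library: the `Span` class, its field names, and the helper functions are hypothetical names chosen for illustration, and the tag key used in the usage note is likewise an assumption.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Span:
    """A simplified span record (hypothetical field names)."""
    trace_id: str                 # shared by all spans of one request
    span_id: str                  # unique per span
    parent_id: Optional[str]      # None only for the outermost span
    operation_name: str
    tags: Dict[str, str] = field(default_factory=dict)


def group_by_trace(spans: List[Span]) -> Dict[str, List[Span]]:
    """Collect spans that carry the same trace ID into one trace."""
    traces: Dict[str, List[Span]] = defaultdict(list)
    for span in spans:
        traces[span.trace_id].append(span)
    return dict(traces)


def children_of(trace: List[Span]) -> Dict[str, List[str]]:
    """Map each parent span ID to the IDs of its direct child spans."""
    tree: Dict[str, List[str]] = defaultdict(list)
    for span in trace:
        if span.parent_id is not None:
            tree[span.parent_id].append(span.span_id)
    return dict(tree)


def root_span(trace: List[Span]) -> Span:
    """Return the outermost span, i.e., the one without a parent ID."""
    roots = [span for span in trace if span.parent_id is None]
    if len(roots) != 1:
        raise ValueError("expected exactly one root span per trace")
    return roots[0]
```

For instance, two spans with trace ID `t1`, where the second has the first as its parent and carries a tag such as `{"db.table": "Catalogue"}`, are grouped into one trace whose root is the first span.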

At the same time, a lot of research has been conducted based on distributed tracing. Zhou et al. [26] proposed an approach, named MEPFL, of latent error prediction and fault localization for microservice applications by learning from system trace logs. Guo et al. [3] proposed a graph-based approach of microservice trace analysis, named GMTA, for understanding architecture and diagnosing various problems, which includes efficient processing of traces produced on the fly and has been implemented and deployed in eBay. Zhang et al. [9] proposed a deep-learning-based microservice anomaly detection approach named DeepTraLog, which uses a unified graph representation to describe the structure of a trace, and trains models by combining traces and logs. In [27], with their novel trace representation and the design of deep Bayesian networks with posterior flow, Liu et al. designed an unsupervised anomaly detection system called TraceAnomaly, which can accurately and robustly detect trace anomalies in a unified fashion. Bogner et al. [28] designed an approach to calculate service-based maintainability metrics from runtime data and implemented a prototype with a Zipkin integrator.

2.2. Contribution Assessment

Since there are few studies on the evaluation of database contribution, we introduce the research on contribution assessment in other fields.

Node importance ranking in complex networks has received more and more attention in recent years because of its great theoretical and application value. Common methods for identifying and evaluating vital nodes in a network can be classified into structural centralities (including neighborhood-based centralities [29,30,31] and path-based centralities [32,33,34]), iterative refinement centralities [35,36,37,38,39], node operation [40], and dynamics-sensitive methods [41]. Among them, PageRank [36] is a famous variant of eigenvector centrality and is used to rank websites in the Google search engine and other commercial scenarios. LeaderRank [37] later improved two problematic issues in PageRank.

For the evaluation of developers’ contributions in open-source projects, Gousios et al. [42] proposed a model that, by combining traditional contribution metrics with data mined from software repositories, can deliver accurate developer contribution measurements; Zhou et al. [43] suggested researching the contributions of developers from three aspects: motivation, capability, and environment.

To enable fair credit allocation for each party in federated machine learning (FML), Wang et al. [44] developed techniques to fairly calculate the contributions of multiple parties in FML, in the context of both horizontal FML and vertical FML. Boyer et al. [45] proposed an easy-to-apply, universally comparable, and fair metric named Author Contribution Index (ACI) to measure and report co-authors’ contributions in the scientific literature; ACI is based on contribution percentages provided by the authors.

3. Methodology

3.1. Problem Definition

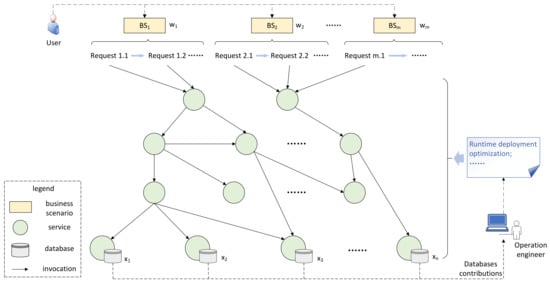

As shown in Figure 2, consider a microservice system with m business scenarios and n business-related databases deployed in the system. We denote the importance weight vector of the business scenarios as w. The task of this paper is to calculate the vector x, i.e., the contributions of the n databases to the m business scenarios. The invocations in Figure 2 are obtained by distributed tracing and contain the behavioral information of the business scenarios on the databases.

Figure 2. An example of a general microservice system. The main practical influence of the calculated database contribution is to provide information for runtime deployment optimization.

3.2. Method Framework

We propose a business-scenario-oriented database contribution assessment approach, and the framework is illustrated in Figure 3. First, the importance weight of business scenarios is determined by AHP, which includes constructing a business scenarios hierarchy, generating pairwise comparison matrices, hierarchical sorting, and a consistency test. Second, we reproduce business scenarios, and the corresponding traces are generated by distributed tracing; these traces contain behavioral information about databases in span tags or span logs. At the same time, for each business scenario and according to the aggregation rules, the numbers of records with the same operation on the same database are aggregated. Third, we define operators for four basic database operations (creating, retrieving, updating, and deleting). Based on the business scenarios’ importance weight and the aggregated matrices obtained in the second step, the database contribution assessment is then formalized as a nonlinear programming problem, which can be solved with suitable optimization algorithms or tools.

Figure 3. Framework of our method.

3.3. Determining the Importance Weight of Business Scenarios

We determine the importance weight of the m business scenarios through the analytic hierarchy process (AHP) [14,46]. AHP is a multicriteria decision-making approach, which was proposed by the American operations research scientist Saaty in the 1970s. It has been widely used in enterprise management, engineering scheme determination, resource allocation, conflict resolution, etc. [47]. For determining the importance weight of business scenarios, the four steps are as follows:

Step 1. Structuring hierarchy of business scenarios.

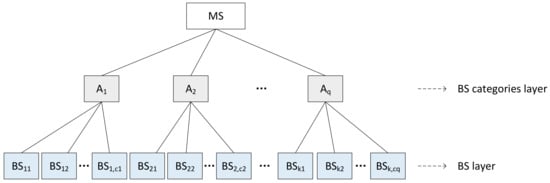

By combining the business scenarios of the microservice system, a hierarchical structure can be built, including the top layer, the middle layer (the m business scenarios are divided into q different categories, and the numbers of business scenarios in the q categories sum to m; in simple systems, this layer can be omitted), and the bottom layer (the layer of the m business scenarios), as shown in Figure 4. It is important to note that the business scenarios should cover at least all major business processes and functions.

Figure 4. Business scenarios hierarchy model.

Step 2. Constructing pairwise comparison matrices.

Construct a set of pairwise comparison matrices for each of the lower levels, with one matrix for each element in the level immediately above. For example, for the hierarchy model in Figure 4, a total of q + 1 pairwise comparison matrices need to be constructed: one comparing the q categories, and one per category comparing the business scenarios it contains. This step is usually achieved by inviting experts in related fields to score using the relative scale measurement shown in Table 1 [14,46]. Denote a pairwise comparison matrix as $A = (a_{ij})$, where $a_{ij}$ represents the comparison result of the i-th element relative to the j-th element (for the hierarchy model in Figure 4, the order of the category-level matrix is q, and the order of each category's matrix equals the number of business scenarios in that category).

Table 1. Pairwise comparison scale for AHP.

Step 3. Hierarchical single sorting and consistency test.

Hierarchical single sorting refers to calculating the importance weight of the elements in a lower layer relative to the corresponding element of the layer immediately above. Correspondingly, for the hierarchy shown in Figure 4, we need to execute hierarchical single sorting and the consistency test q + 1 times, and we illustrate this process using the middle layer (BS categories layer) as an example. Generally, hierarchical single sorting can be solved by the eigenvector method [48], as shown in Equation (1).

$$A v = \lambda_{\max} v \qquad (1)$$

where $A$ is the corresponding q-order pairwise comparison matrix, $\lambda_{\max}$ is its largest eigenvalue, and $v$ is the eigenvector corresponding to $\lambda_{\max}$. According to the properties of the pairwise comparison matrix [48], $\lambda_{\max}$ exists and is unique, the components of $v$ are all positive, and the normalized $v$ is the result of hierarchical single sorting. However, whether the calculated results are acceptable must be checked by a consistency test, which consists of the following two steps.

- Calculating the consistency index (CI). CI is determined from the eigenvalue, as illustrated in Equation (2):

  $$CI = \frac{\lambda_{\max} - q}{q - 1} \qquad (2)$$

  where q is the order of the pairwise comparison matrix $A$.

- Calculating the consistency ratio (CR). If q > 2, the average random consistency index (RI) is introduced to measure the size of CI, and the consistency ratio is computed as in Equation (3):

  $$CR = \frac{CI}{RI} \qquad (3)$$

  RI under different matrix sizes is provided in Table 2 [14,46]; it was calculated by Saaty as the average consistency of square matrices of various orders n filled with random entries. If the CR is less than 0.1, the pairwise comparison matrix is considered nearly consistent, i.e., the corresponding hierarchical single sorting result is accepted; otherwise, the pairwise comparison matrix should be reviewed and improved.

Table 2. Average random consistency (RI).
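The eigenvector method and the consistency test of Step 3 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `ahp_single_sort` is hypothetical, and the `RANDOM_INDEX` values are the commonly cited Saaty values, which are assumed here to correspond to Table 2.

```python
import numpy as np

# Commonly cited average random consistency index values by matrix order.
# Assumption: these correspond to the values listed in Table 2.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def ahp_single_sort(matrix):
    """Return (weights, lambda_max, CI, CR) for a pairwise comparison matrix."""
    a = np.asarray(matrix, dtype=float)
    q = a.shape[0]
    eigenvalues, eigenvectors = np.linalg.eig(a)
    k = int(np.argmax(eigenvalues.real))        # index of the largest eigenvalue
    lambda_max = float(eigenvalues[k].real)
    principal = np.abs(eigenvectors[:, k].real)  # fix the arbitrary sign
    weights = principal / principal.sum()        # normalize so weights sum to 1
    ci = (lambda_max - q) / (q - 1) if q > 1 else 0.0
    ri = RANDOM_INDEX[q]
    # Matrices of order 1 or 2 are always consistent, so CR is taken as 0.
    cr = ci / ri if ri > 0 else 0.0
    return weights, lambda_max, ci, cr
```

A result would be accepted when the returned CR is below 0.1. For a perfectly consistent matrix, the largest eigenvalue equals the matrix order, so both CI and CR are zero up to floating-point error.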

Step 4. Hierarchical total sorting and consistency test.

Hierarchical total sorting refers to calculating the relative importance of all elements of a certain level with respect to the top level. Calculating a hierarchical total sorting requires knowing the hierarchical total sorting of the elements in the level above, so it is a top-down calculation process. For the hierarchy model in Figure 4, denote the hierarchical total sorting result of the middle layer as $a = (a_1, \dots, a_q)$, and denote the hierarchical single sorting result of the i-th business scenario within the j-th category as $b_i^{(j)}$; then the hierarchical total sorting of the i-th business scenario (assume it belongs to the j-th category) is

$$w_i = a_j \, b_i^{(j)} \qquad (4)$$

where $i = 1, \dots, m$ and $j = 1, \dots, q$.

Similarly, a consistency test is required to ensure that the hierarchical total sorting result is acceptable. For the hierarchy model in Figure 4, denote the consistency index of the hierarchical single sorting of the bottom layer with respect to the j-th element of the upper layer as $CI_j$, and the corresponding average random consistency index as $RI_j$; then the consistency ratio of the hierarchical total sorting can be calculated by Equation (5).

$$CR = \frac{\sum_{j=1}^{q} a_j \, CI_j}{\sum_{j=1}^{q} a_j \, RI_j} \qquad (5)$$

If CR < 0.1, the hierarchical total sorting result is acceptable, and the importance weight vector w of the bottom-layer elements can be obtained; otherwise, the pairwise comparison matrices with a higher consistency ratio need to be adjusted. When multiple experts participate in scoring, the final result can be obtained by averaging the hierarchical total sorting results of all experts.
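As a minimal sketch of Step 4, the following Python code combines category weights with within-category weights, computes the consistency ratio of the total sorting as the weighted CI sum divided by the weighted RI sum (the standard AHP form), and averages the results of several experts. All function and argument names are hypothetical, and the numbers in the usage note are made up for illustration.

```python
import numpy as np


def total_sort(category_weights, scenario_weights_by_category):
    """Multiply each scenario's within-category weight by its category weight."""
    total = []
    for a_j, b_j in zip(category_weights, scenario_weights_by_category):
        total.extend(a_j * np.asarray(b_j, dtype=float))
    return np.array(total)


def total_consistency_ratio(category_weights, ci_by_category, ri_by_category):
    """Weighted CI sum divided by weighted RI sum over the categories."""
    a = np.asarray(category_weights, dtype=float)
    numerator = float(a @ np.asarray(ci_by_category, dtype=float))
    denominator = float(a @ np.asarray(ri_by_category, dtype=float))
    # If every category has order 1 or 2, all RI values are 0 and the
    # total sorting is treated as consistent.
    return numerator / denominator if denominator > 0 else 0.0


def average_experts(total_sorts):
    """Average the hierarchical total sorting results of several experts."""
    return np.mean(np.asarray(total_sorts, dtype=float), axis=0)
```

With two equally important categories (weights 0.5 and 0.5) and within-category weights (0.6, 0.4) and (0.2, 0.3, 0.5), `total_sort` yields the weights 0.3, 0.2, 0.1, 0.15, and 0.25, which again sum to 1.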

3.4. Aggregating Numbers of Records of the Same Operation

The method in this paper is oriented to business scenarios. Therefore, to reproduce a business scenario, a typical business process that contains a series of interface inputs or clicks can be executed automatically or manually. At the same time, we use a distributed tracing tool to generate corresponding traces which contain behavioral information on the database table in span tags or span logs. For example, from the span tags in Table 3, we can see the query operation of the current span on the Catalogue table.

Table 3. Span tags in a span of the Catalogue service. The span ID is 7b5af686fc795d70 and the span name is GET/catalogue/{id}.

Then, for each business scenario, we aggregate numbers of records based on the following rules:

- Aggregate the numbers of records of the same operation on the same database. When a database contains multiple tables, the aggregation result can be obtained by counting the number of records of the same operation for each table.

- For parallel behaviors in the typical process of a business scenario, adopt the average of their numbers of records of the same operation on the same database. For example, as illustrated in Figure 5, there are three steps in a typical process of purchasing socks and two parallel behaviors (i.e., catalogue menu and browse) in the first step. Assume that the numbers of records of retrieving operations on the Catalogue for catalogue menu, browse, and detail page are $r_1$, $r_2$, and $r_3$, respectively, and the third step does not have a retrieving operation on the Catalogue. Then, for Purchasing socks, the aggregated result of the number of records of retrieving operations on the Catalogue is $(r_1 + r_2)/2 + r_3$.

Figure 5. A typical process of Purchasing socks in Sock-Shop.

Finally, for the i-th business scenario, an aggregation matrix can be obtained based on the above rules. Here, n denotes the number of databases in the microservice system, and for each database k (k = 1, …, n) the matrix holds four entries: the numbers of records required to create, retrieve, update, and delete on the k-th database to complete the i-th business scenario.
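The two aggregation rules can be sketched in Python as follows. This is an illustration under assumptions rather than the authors' implementation: the nested-list input format, the function name `aggregate_scenario`, and the record counts in the usage note are hypothetical, and the sketch assumes that contributions from successive steps are summed while parallel behaviors within one step are averaged.

```python
OPERATIONS = ("create", "retrieve", "update", "delete")


def aggregate_scenario(steps, databases):
    """Aggregate record counts for one business scenario.

    steps: list of steps; each step is a list of parallel behaviors; each
           behavior is a list of (database, operation, n_records) tuples
           extracted from span tags or span logs.
    Returns {database: {operation: aggregated number of records}}.
    """
    matrix = {db: dict.fromkeys(OPERATIONS, 0.0) for db in databases}
    for parallel_behaviors in steps:
        share = 1.0 / len(parallel_behaviors)   # average over parallel behaviors
        for behavior in parallel_behaviors:
            for db, op, n_records in behavior:
                matrix[db][op] += share * n_records
    return matrix
```

For example, if the first step has two parallel behaviors that retrieve 9 and 3 records from the Catalogue, the second step retrieves 1 record, and the third step creates 1 record in the Order, the aggregated retrieving count on the Catalogue is (9 + 3)/2 + 1 = 7.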

3.5. Formalizing the Task as a Nonlinear Programming Problem

To transform this problem into a mathematical problem, we define corresponding operators for the four basic operations on the database (creating, retrieving, updating, and deleting), as shown in Table 4.

Table 4. The definitions of operators for the four basic database operations.

In our definition, four parameters represent the weights of the creating, retrieving, updating, and deleting operations, respectively. Based on the aggregated matrices of business scenarios, the number of records processed by each operation can be calculated by the equations in Table 5. The operations are then ranked by these numbers: the operation with the smallest number of records receives weight 1, the operation with the second smallest number receives weight 2, the operation with the second largest number receives weight 3, and the operation with the largest number receives weight 4.
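The rank-based weighting of the four operations can be sketched as follows. The sketch assumes that the number of records processed by an operation is its total over all business scenarios and all databases, and that ties are broken by the fixed order create, retrieve, update, delete; both choices, like the function name `operation_weights`, are assumptions made for illustration.

```python
OPERATIONS = ("create", "retrieve", "update", "delete")


def operation_weights(aggregated_matrices):
    """Rank operations by total records and assign weights 1 (fewest) to 4 (most).

    aggregated_matrices: one {database: {operation: count}} mapping per
    business scenario.
    """
    totals = dict.fromkeys(OPERATIONS, 0.0)
    for matrix in aggregated_matrices:
        for counts in matrix.values():
            for op in OPERATIONS:
                totals[op] += counts.get(op, 0.0)
    # sorted() is stable, so equal totals keep the order of OPERATIONS.
    ranked = sorted(OPERATIONS, key=lambda op: totals[op])
    return {op: rank for rank, op in enumerate(ranked, start=1)}
```

With totals of 2 created, 20 retrieved, 5 updated, and 0 deleted records, the resulting weights are 2, 4, 3, and 1, respectively.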

Table 5. The equations for counting the records operated on by the four basic operations.

The quantity h in the operators represents the number of operated records in the i-th business scenario, which can be calculated by Equation (7).

The structure of the defined operators in Table 4 ensures that the number of operated records h and the weights of the four basic database operations maintain a positive correlation with the database contribution x to a certain extent, while appropriately reducing the impact of large gaps between values of h on the calculated database contribution.

Based on the defined operators and the aggregation matrix of each business scenario, we can use the database contribution x to express the importance weight vector of business scenarios, denoted g:

Then, combined with the business scenario importance weight by AHP, the database contribution assessment problem can be formalized as the following nonlinear programming problem:

The optimization objective in Equation (9) is defined based on cosine similarity. According to the meaning of cosine similarity, Equation (9) essentially finds the x that minimizes the angle between vector g and vector w under constraints, which is a constrained nonlinear optimization problem.
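Cosine similarity, on which the objective is based, can be illustrated with a short Python sketch. The function below is a generic implementation; how g is computed from x depends on the operators in Table 4 and is not reproduced here.

```python
import numpy as np


def cosine_similarity(g, w):
    """Cosine of the angle between vectors g and w."""
    g = np.asarray(g, dtype=float)
    w = np.asarray(w, dtype=float)
    denominator = np.linalg.norm(g) * np.linalg.norm(w)
    if denominator == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return float(g @ w / denominator)
```

Because only the direction of the vectors matters, rescaling g by a positive constant leaves the objective unchanged; maximizing this value is equivalent to minimizing the angle between g and w.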

For the above nonlinear optimization problem, there are generally two kinds of solution methods: traditional methods based on mathematical analysis (such as sequential quadratic programming) and heuristic algorithms (such as the genetic algorithm and simulated annealing). Traditional methods usually depend on the initial value and on gradient information, and easily fall into local optima. In contrast, heuristic algorithms do not depend on the mathematical properties of the problem itself, have better adaptability, and are more likely to reach the optimal solution [49]. Therefore, we use a classic heuristic algorithm, the genetic algorithm, to optimize Equation (9).
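To make the optimization step concrete, the following Python sketch implements a small hand-written genetic algorithm that maximizes a fitness function over non-negative vectors summing to 1. It is a sketch under stated assumptions, not the solver used in the experiments: the simplex constraint, the function names, and all hyperparameters are assumptions, and the fitness in the usage note uses a placeholder linear map in place of the operators of Table 4.

```python
import numpy as np


def cosine(g, w):
    """Cosine similarity with a small constant to avoid division by zero."""
    return float(g @ w / (np.linalg.norm(g) * np.linalg.norm(w) + 1e-12))


def to_simplex(x):
    """Clip to positive values and rescale so the entries sum to 1 (assumed constraint)."""
    x = np.clip(x, 1e-9, None)
    return x / x.sum()


def genetic_maximize(fitness, n, pop_size=60, generations=200, sigma=0.05, seed=0):
    """Maximize fitness(x) over the n-dimensional simplex with a simple GA."""
    rng = np.random.default_rng(seed)
    pop = rng.dirichlet(np.ones(n), size=pop_size)     # random initial population
    for _ in range(generations):
        scores = np.array([fitness(x) for x in pop])
        elite = pop[np.argmax(scores)].copy()           # keep the best individual
        # Binary tournament selection.
        i, j = rng.integers(pop_size, size=(2, pop_size))
        parents = np.where((scores[i] >= scores[j])[:, None], pop[i], pop[j])
        # Blend crossover with randomly shuffled mates.
        mates = parents[rng.permutation(pop_size)]
        alpha = rng.random((pop_size, 1))
        children = alpha * parents + (1.0 - alpha) * mates
        # Gaussian mutation, then repair back onto the simplex.
        children += rng.normal(0.0, sigma, size=children.shape)
        pop = np.array([to_simplex(child) for child in children])
        pop[0] = elite                                   # elitism
    scores = np.array([fitness(x) for x in pop])
    best = int(np.argmax(scores))
    return pop[best], float(scores[best])
```

A call such as `genetic_maximize(lambda x: cosine(M @ x, w), n=3)`, with a placeholder matrix `M` standing in for the operator-based mapping from x to g, returns the best contribution vector found and its cosine similarity. Elitism guarantees that the best fitness never decreases between generations.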

4. Experiments

In this section, we conduct experiments on two open-source benchmark microservice systems to verify the effectiveness and rationality of the method proposed in this paper. Both microservice systems are deployed with Docker. Each service has one instance, and Docker Compose is used to orchestrate the cluster of Docker containers. The databases of both systems are deployed in their respective database services, and each database contains only one table (the database has the same name as the table). Additionally, there is no special requirement on the size of the database tables, as long as the typical business process of the corresponding business scenario can run.

4.1. Experimental Systems and Settings

4.1.1. Sock-Shop

Sock-Shop [15] is a small typical microservice application containing 13 services for online selling. Services in Sock-Shop are implemented in multiple languages (e.g., Java, .NET, and Go). The entire application is divided into services with a single function that can be independently developed, deployed, and extended. Sock-Shop has been widely used in microservice research [26,50,51]. It fully implements the primary online shopping businesses, and related business databases are deployed in the system. Therefore, this paper uses Sock-Shop as the experimental object.

Four databases (i.e., User, Order, Catalogue, and Cart) in the system and their descriptions and numbers of records are shown in Table 6.

Table 6. Descriptions and settings of databases in Sock-Shop.

In the system, there are three users, and each user has five records in the shopping cart and ten historical orders; at the same time, there are nine pairs of socks belonging to different categories.

4.1.2. TrainTicket

To verify the applicability of this method to larger-scale microservice systems, we conduct a study on the V0.2.0 of TrainTicket [16]. TrainTicket is a medium-scale open-source microservice system for train ticket booking that provides typical functions, including ticket query, reservation, rebooking, order management, etc. It contains 45 business services written in different languages (e.g., Java, JavaScript, Python), as well as basic services such as messaging middleware services, distributed caching services, and database services. The total number of services is over 70, and services communicate with synchronous REST calls and asynchronous messaging.

Sixteen business-related databases to be evaluated in the system and their descriptions and numbers of records are shown in Table 7.

Table 7. Descriptions and settings of databases in TrainTicket.

In the experiment, we registered twenty users; each user reserved twenty tickets, had an average of four pieces of consignment information, and had four contacts. Ten routes containing thirteen stations and ten corresponding travels are set in the system, including five high-speed trains (GaoTie/DongChe) and five ordinary trains, as well as the price ratio information of the corresponding ten travels. Five high-speed trains (GaoTie/DongChe) correspond to five pieces of train food information; the Food-map contains information about six restaurants. The system also contains a piece of configuration named DirectTicketAllocationProportion. In addition, since high-speed trains and ordinary trains have almost the same business logic, we calculate the contributions of their respective related databases (i.e., Order and Travel) uniformly.

4.2. Baseline Methods

To verify the effectiveness of DBCAMS, we choose the following two baselines for comparison.

- DBCAMS-MMD: Replace maximizing cosine similarity with minimizing maximum mean discrepancy [52] (MMD) in DBCAMS. MMD can be used to test the similarity between the two distributions. When MMD becomes smaller, the two distributions become more similar.

- DBCAMS-RN: Remove the of the defined operator in DBCAMS. For example, the operator of “Create h records on the k-th database in the i-th business scenario.” changes from to , and the rest is the same as DBCAMS.

The three methods (DBCAMS and two baselines) correspond to three different optimization objectives, all of which are solved by genetic algorithm. To compare the optimization effects of them, we select L2-Norm as the index. The method with a smaller L2-Norm has a better optimization effect.
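For reference, the two quantities mentioned here can be sketched in Python. The MMD function is the standard biased estimate of squared MMD with a Gaussian (RBF) kernel; the bandwidth and the treatment of vector components as one-dimensional samples are assumptions. The text does not spell out which vectors the L2-Norm compares, so `l2_distance` assumes it is the Euclidean distance between g and w after each is normalized to sum to 1.

```python
import numpy as np


def rbf_mmd2(x, y, bandwidth=1.0):
    """Biased estimate of squared MMD between samples x and y (RBF kernel)."""
    x = np.asarray(x, dtype=float).reshape(len(x), -1)
    y = np.asarray(y, dtype=float).reshape(len(y), -1)

    def kernel(a, b):
        squared = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
        return np.exp(-squared / (2.0 * bandwidth ** 2))

    return float(kernel(x, x).mean() + kernel(y, y).mean()
                 - 2.0 * kernel(x, y).mean())


def l2_distance(g, w):
    """Euclidean distance between sum-normalized g and w (assumed index)."""
    g = np.asarray(g, dtype=float)
    w = np.asarray(w, dtype=float)
    return float(np.linalg.norm(g / g.sum() - w / w.sum()))
```

Both quantities are zero when the two inputs coincide and grow as they diverge, which is why a smaller value indicates a better optimization result.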

4.3. Main Results

In this section, we present and analyze the main results according to the steps of our method described in Section 3.

4.3.1. Sock-Shop

Step 1. Determining the importance weight of business scenarios.

By analyzing the main functions and processes of Sock-Shop, the hierarchy structure is illustrated in Figure 6.

Figure 6. The business scenarios hierarchy of Sock-Shop, comprising four business scenarios: Purchasing socks, Cart management, User information management, and Historical orders query.

Then, we invited five related experts to score the business scenarios, and the pairwise comparison matrices are shown in Appendix A (Table A1, Table A2, Table A3, Table A4 and Table A5). Through hierarchical sorting and the consistency test (Table A6 in Appendix A), the final importance weights of business scenarios in Sock-Shop are calculated and shown in Table 8.

Table 8. The importance weight of business scenarios in Sock-Shop.

Step 2. Aggregating numbers of records of the same operation.

Since aggregation matrices are sparse, we present the aggregation results of business scenarios in Sock-Shop in tabular form (Table 9).

Table 9. The aggregation results of business scenarios in Sock-Shop.

Step 3. Calculating database contribution.

By solving the three corresponding nonlinear programming problems (using the genetic algorithm in the MATLAB global optimization toolbox), the optimization results are shown in Table 10. The L2-Norm of DBCAMS is 0.1456, which is better than the other two baseline methods.

Table 10. The main results of Sock-Shop. The best L2-Norm is underlined, and the database contributions of the corresponding method are in bold.

The difference in optimization effects between DBCAMS and DBCAMS-MMD is caused by the difference between maximizing cosine similarity and minimizing MMD. Cosine similarity measures the consistency of direction between two vectors and pays more attention to differences in direction than to differences in numerical values; MMD maps the two samples to be compared into a high-dimensional space (Reproducing Kernel Hilbert Space, RKHS) through a kernel function and measures the distance between the two samples in the RKHS. The reason for the better performance of DBCAMS is probably that g and w are more suitable for directional optimization in this situation; when the samples are mapped from low to high dimensions, the large dimension gap may introduce noise.

The difference in optimization effects between DBCAMS and DBCAMS-RN is caused by the difference between the operators. The role of is to reduce the influence of the gap between the numbers of operation records on the calculated contribution, which not only ensures the positive correlation between the number of operation records and the database contribution, but also reduces the error in the optimization process.

Meanwhile, according to the result from DBCAMS, Catalogue has the highest contribution (close to 0.5), mainly because the importance of Purchasing socks is high and the invocations to Catalogue account for a high proportion of the operations in Purchasing socks. The contributions of Cart and User are close (both are about 0.25). Order has the lowest contribution, mainly because the importance of Historical orders query is low (only 0.0769), and this business scenario only calls Order.

4.3.2. TrainTicket

Step 1. Determining the importance weight of business scenarios.

By combining and analyzing the business scenarios of TrainTicket, we build a three-tier hierarchy structure, shown in Figure 7.

Figure 7.

The business scenarios hierarchy of TrainTicket. The fourteen business scenarios are divided into two categories: user and administrator. We denote the fourteen business scenarios of Ticket reserve, User order management, …, and Config management as , , …, and , respectively.

According to the hierarchy model in Figure 7, we invited five experts familiar with the business to score the business scenarios. It was agreed in advance that the user category and the administrator category are of the same importance to the system, i.e., the experts only need to construct pairwise comparison matrices for the third layer of the hierarchy. The pairwise comparison matrices for user business scenarios () and administrator business scenarios () are shown in Appendix B (Table A8, Table A9, Table A10, Table A11, Table A12, Table A13, Table A14, Table A15, Table A16 and Table A17). Table 11 presents the final importance weights of business scenarios obtained through hierarchical sorting and consistency tests (Table A18, Table A19 and Table A20 in Appendix B).
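As an illustration of the hierarchical total sorting for this two-category hierarchy, the sketch below combines one expert's single-sorting results (the first rows of Table A18 and Table A19) using equal category weights of 0.5. The function name, the total consistency formula (category-weighted CI divided by category-weighted RI, the standard AHP form), and the RI values 1.12 and 1.45 are assumptions; they appear consistent with the first row of Table A20.

```python
def total_sorting(category_weights, local_weights, local_ci, local_ri):
    """Combine per-category AHP results into global weights and a total CR."""
    global_weights = [
        a * w
        for a, weights in zip(category_weights, local_weights)
        for w in weights
    ]
    weighted_ci = sum(a * ci for a, ci in zip(category_weights, local_ci))
    weighted_ri = sum(a * ri for a, ri in zip(category_weights, local_ri))
    return global_weights, weighted_ci / weighted_ri


# First expert's single-sorting results (first rows of Table A18 and Table A19).
user_weights = [0.2559, 0.1417, 0.0871, 0.4638, 0.0515]
admin_weights = [0.1555, 0.3121, 0.2223, 0.0247, 0.0183,
                 0.0739, 0.0507, 0.0350, 0.1075]

global_weights, cr_total = total_sorting(
    category_weights=[0.5, 0.5],   # user and administrator are equally important
    local_weights=[user_weights, admin_weights],
    local_ci=[0.0104, 0.0502],     # CI columns of Table A18 and Table A19
    local_ri=[1.12, 1.45],         # assumed Saaty RI values for n = 5 and n = 9
)
print([round(w, 4) for w in global_weights], round(cr_total, 4))
```

Because both categories carry the same weight, each global weight is simply half of the corresponding local weight, and the fourteen global weights still sum to one.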

Table 11.

The importance weight of business scenarios in TrainTicket.

Step 2. Aggregating numbers of records of the same operation.

Similarly, since aggregation matrices are sparse, we present the aggregation results in Table 12.

Table 12.

The aggregation results of business scenarios in TrainTicket.

Step 3. Calculating database contribution.

By solving the three corresponding nonlinear programming problems (using the genetic algorithm in the MATLAB Global Optimization Toolbox), we obtain the optimization results shown in Table 13. DBCAMS achieves a better optimization result (an L2-Norm of 0.3558) than the other two methods, for reasons similar to those analyzed in the main results of Sock-Shop.

Table 13.

The main results of TrainTicket. The best result of L2-Norm is underlined, and the databases’ contributions of the corresponding method are in bold ().

According to the result from DBCAMS, Route, Travel, Station, and Order have higher contributions, and the contributions of the other databases are between 0.02 and 0.05. Route and Station contribute more because both databases are invoked in, and account for higher proportions of the operated records of, the Ticket reserve, User/Admin order management, and Advanced search business scenarios. The low contributions of the other databases have three main causes: the primary business scenarios in which they are invoked have low importance (Consign, User, and Contact); the number of records operated on in the related business scenarios is small (Assurance, Security, Train-food, Consign-price, and Food-map); or other databases belonging to the same business scenarios have high contributions (Config and Train).

4.4. Results Analysis of Different Optimization Algorithms

In this section, we analyze the optimization effect of different algorithms on Equation (9), using the L2-Norm as the evaluation index. For traditional methods, we choose the interior point method and sequential quadratic programming (SQP) for comparison; for heuristic algorithms, we choose particle swarm optimization (PSO) and simulated annealing (SA) for comparison.

From the results in Table 14, we can see that for a single system, the optimization effect between traditional algorithms is basically the same, and the optimization effect between heuristic algorithms is basically the same, but the heuristic algorithms are generally better than the traditional methods.

Table 14.

Optimization effects of different algorithms. The best results are in bold, and the second best ones are underlined. The hyperparameters in the heuristic algorithm are set to empirical values that work well.

4.5. The Impact of Business Scenarios’ Importance Weight

In this section, we analyze the impact of business scenarios’ importance on database contribution through experiments on the two systems based on DBCAMS. Based on the aggregation matrix of each business scenario in the microservice system, we can calculate the sum of the number of records processed by the four basic operations (creating, retrieving, updating, and deleting) on each database by Equation (10).
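The record total described above can be sketched as follows. The nested-dictionary layout, the function names, and the numbers are hypothetical (they are not the values of Table 9 or Table 12); the sketch only shows the idea of summing, for each database, the records touched by the four basic operations across all business scenarios, without any scenario weighting.

```python
from collections import defaultdict

OPERATIONS = ("create", "retrieve", "update", "delete")


def total_records_per_database(aggregations):
    """Sum operated records per database over all scenarios and operations."""
    totals = defaultdict(int)
    for scenario in aggregations.values():
        for database, counts in scenario.items():
            totals[database] += sum(counts.get(op, 0) for op in OPERATIONS)
    return dict(totals)


def rank_by_total(totals):
    """Databases ordered from most to fewest operated records."""
    return sorted(totals, key=totals.get, reverse=True)


# Hypothetical aggregation results: scenario -> database -> operation -> records.
aggregations = {
    "scenario_a": {
        "db_x": {"retrieve": 10, "update": 2},
        "db_y": {"create": 1},
    },
    "scenario_b": {
        "db_y": {"retrieve": 20, "delete": 3},
    },
}

totals = total_records_per_database(aggregations)
print(totals, rank_by_total(totals))
```

A ranking obtained this way ignores how important each business scenario is, which is why it can differ from the contribution ranking produced by DBCAMS.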

As mentioned above, the database contribution is positively related to the number of records operated on it. Next, we analyze the experimental results of the two systems in detail.

4.5.1. Sock-Shop

Based on Table 9 and Equation (10), the total numbers of records operated on databases and corresponding contribution ranking in the Sock-Shop are shown in Table 15.

Table 15.

and contribution ranking of databases in Sock-Shop.

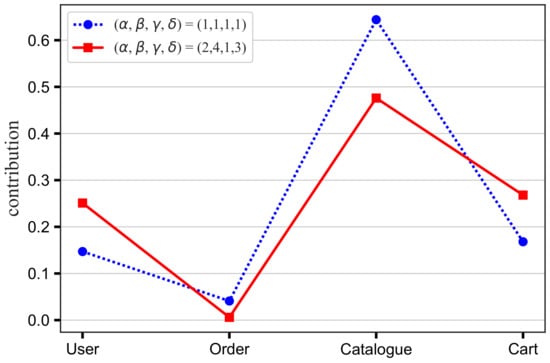

When considering business scenarios’ weight (Table 8), the database contributions are shown in Figure 8 (assuming that the four basic operations have the same importance, i.e., ). At this time, Catalogue, Cart, User, and Order are ranked from high to low in the order of database contribution. The main reason is that although the Order has the most records to be operated, the Historical order query is the least important, and it only calls the Order. At the same time, although the Catalogue has the lowest number of records to be operated, the Purchasing socks has the highest importance, and the Catalogue has a higher proportion of operational records in this business scenario.

Figure 8.

The database contributions to business scenarios in Sock-Shop.

4.5.2. TrainTicket

Based on Table 12 and Equation (10), the total numbers of records operated on databases and corresponding contribution ranking in the TrainTicket are shown in Table 16.

Table 16.

and contribution ranking of databases in TrainTicket.

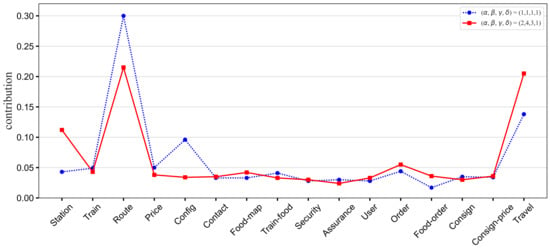

For TrainTicket, when considering business scenarios’ weight (Table 11), the database contributions are shown in Figure 9 (assuming that the four basic operations have the same importance, i.e., ). Although Travel ranks ninth by the number of records operated on, the business scenarios that call Travel (Advanced search and Travel management) are of high importance, which makes Travel highly contributive. Order ranks first by the number of operated records and is called in Ticket reserve and User/Admin order management. However, because Ticket collect & Enter station, in which only Order is called, has low importance, the contribution of Order is reduced. Contact and User rank fourth and eighth by the number of operated records, respectively, but their contributions are lower, mainly because of the lower importance of Contact management and User management.

Figure 9.

The database contributions to business scenarios in TrainTicket.

4.6. The Impact of Four Basic Operations’ Weight

In this section, we analyze the impact of basic operations’ weight on database contribution based on DBCAMS. As mentioned above, , , , and represent the importance weights of the creating, retrieving, updating, and deleting operations, respectively. According to the defined operators in Table 4, the larger the value, the more important the corresponding operation is, and the greater its impact on the database contribution.

We compare the database contributions with the same importance of four operations (i.e., ) and the database contributions when weighted according to the number of records processed by each operation. Next, we analyze the experimental results of the two systems in detail.

4.6.1. Sock-Shop

For Sock-Shop, based on Table 5 and Table 9, we obtain the importance weights for four basic operations (i.e., 2, 4, 1, 3). As shown in Figure 10, when the weight is set to , the ranking of database contributions remains unchanged, but the User and Cart contributions increase slightly, while the Order and Catalogue contributions decrease somewhat. The weight vector mainly increases the impact of retrieving and deleting operations on the database contribution, i.e., for a specific database, the more records that are queried and deleted, the easier it is to improve the contribution. Specifically, Cart management and User information management mainly perform query operations on User, and Purchasing socks and Cart management mainly perform query and deleting operations on Cart, which results in the increased contribution of these two databases. In addition, since both Catalogue and Cart are called by Purchasing socks, the Catalogue contribution decreases. Similarly, User, Order, and Cart are all called by Cart management, and the contributions of User and Cart increase, so the contribution of Order decreases.

Figure 10.

The database contributions to business scenarios in Sock-Shop.

4.6.2. TrainTicket

Similarly, for TrainTicket, based on Table 5 and Table 12, we obtain the importance weights for four basic operations (i.e., 2, 4, 3, 1). Figure 11 demonstrates the comparison of the four basic operations weight vector set to and . When the weight of the four basic operations is , which mainly increases the impact of retrieving and updating operations on the database contribution, the overall ranking of the database contribution is basically unchanged, but note that the contributions of Station and Travel increase significantly, the contributions of Route and Config decrease significantly, and the contributions of other databases fluctuate in a small range. Ticket reserve, User order management, and Advanced search all have a large number of retrieving operations on Station, while the retrieving operation has the highest weight, so the contribution of Station has increased notably. The increase in the contribution of Travel is mainly because the number of records retrieved and updated accounts for a high proportion of the number of records processed by all operations. At the same time, Advanced search only calls Station, Train, Route, Price, Config, and Travel, so the contribution of Train, Route, Price, and Config decreases. Because the original contribution of Route and Config is higher, the contribution decreases more.

Figure 11.

The database contributions to business scenarios in TrainTicket.

5. Discussion

In our experiment, we deployed two microservice systems on a single machine with only one instance per database (service). In industrial microservice systems, a service can have several to thousands of instances running on different containers and can be dynamically created or destroyed according to the scaling requirements at runtime [7,12], but this does not affect the effectiveness of our method because these databases (services) can achieve data consistency through related mechanisms [53], which has nothing to do with the database contributions.

By comparing the experimental processes and results of the two systems, we can see that the more business scenarios there are, the lower the efficiency of the AHP process (it is strenuous to construct pairwise comparison matrices). At the same time, an increase in the number of databases in the system may reduce the differentiation between database contributions. An industrial microservice system is usually a large-scale distributed system containing more business scenarios and databases. In this case, more appropriate weighting methods (e.g., the G1 method [54] or the combined weight method [55]) can be considered, and the databases can be classified so that contributions are calculated by category.

Since this method aggregates the number of records operated in the business scenario through trace information, the code logic will affect the contribution of the databases. For example, making a large number of redundant calls to a database while implementing a business function may increase the contribution of the database to a certain extent, and because our approach is oriented to business scenarios, different partitions of business scenarios also impact the database contribution.

6. Conclusions

In this study, we proposed a database contribution assessment approach via distributed tracing for microservice systems. This method considers the importance weight of business scenarios, the number of operated records of the database, and the weight of the four basic database operations, so as to skillfully convert the database contribution evaluation into a nonlinear programming problem. The experimental results on two open-source microservice systems demonstrate the validity and rationality of our approach. Future work will focus on the improvement of the optimization efficiency of genetic algorithms in this task and better runtime deployment optimization combined with database contribution.

Author Contributions

Conceptualization, Y.L., Z.Y. and W.K.; methodology, Y.L. and Z.Y.; software, X.Y. and T.D.; validation, X.Y. and W.K.; investigation, Z.F. and C.H.; resources, Z.Y. and C.H.; data curation, Y.L.; writing—original draft preparation, Y.L. and Z.Y.; writing—review and editing, Z.F., C.H. and T.D.; visualization, Z.F.; supervision, C.H.; project administration, T.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Supplementary Details for Experiment on Sock-Shop

Table A1, Table A2, Table A3, Table A4 and Table A5 are the five pairwise comparison matrices scored by experts. Table A6 shows the hierarchical sorting and consistency test of the five pairwise comparison matrices. Table A7 presents the importance weight of business scenarios represented by the database contribution of the DBCAMS method.

Table A1.

The pairwise comparison matrix .

| | | | |
|---|---|---|---|
| 1 | 2 | 3 | 4 |
| 1/2 | 1 | 2 | 3 |
| 1/3 | 1/2 | 1 | 2 |
| 1/4 | 1/3 | 1/2 | 1 |

Table A2.

The pairwise comparison matrix .

| | | | |
|---|---|---|---|
| 1 | 2 | 4 | 8 |
| 1/2 | 1 | 3 | 7 |
| 1/4 | 1/3 | 1 | 5 |
| 1/8 | 1/7 | 1/5 | 1 |

Table A3.

The pairwise comparison matrix .

| | | | |
|---|---|---|---|
| 1 | 3 | 7 | 5 |
| 1/3 | 1 | 5 | 3 |
| 1/7 | 1/5 | 1 | 1/3 |
| 1/5 | 1/3 | 3 | 1 |

Table A4.

The pairwise comparison matrix .

| | | | |
|---|---|---|---|
| 1 | 3 | 3 | 6 |
| 1/3 | 1 | 1 | 3 |
| 1/3 | 1 | 1 | 3 |
| 1/6 | 1/3 | 1/3 | 1 |

Table A5.

The pairwise comparison matrix .

| | | | |
|---|---|---|---|
| 1 | 5 | 3 | 7 |
| 1/5 | 1 | 1/3 | 3 |
| 1/3 | 3 | 1 | 5 |
| 1/7 | 1/3 | 1/5 | 1 |

Table A6.

The normalized eigenvector and CR of five pairwise comparison matrices. The CR of each matrix is in bold.

| | | | | CR |
|---|---|---|---|---|
| 0.4673 | 0.2772 | 0.1601 | 0.0954 | **0.0115** |
| 0.4977 | 0.3154 | 0.1434 | 0.0436 | **0.0445** |
| 0.5650 | 0.2622 | 0.0553 | 0.1175 | **0.0433** |
| 0.5346 | 0.1963 | 0.1963 | 0.0728 | **0.0076** |
| 0.5650 | 0.1175 | 0.2622 | 0.0553 | **0.0433** |
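One plausible way to combine the five experts' eigenvectors into final weights is a column-wise arithmetic mean, sketched below. The averaging rule is an assumption, since this appendix does not state how the experts' results are merged, but it reproduces the weight of 0.0769 reported in Section 4.3.1 for Historical orders query (the fourth business scenario).

```python
# Normalized eigenvectors of the five experts (Table A6, CR column omitted).
expert_weights = [
    [0.4673, 0.2772, 0.1601, 0.0954],
    [0.4977, 0.3154, 0.1434, 0.0436],
    [0.5650, 0.2622, 0.0553, 0.1175],
    [0.5346, 0.1963, 0.1963, 0.0728],
    [0.5650, 0.1175, 0.2622, 0.0553],
]

# Assumed aggregation rule: arithmetic mean of each scenario's weight over the experts.
final_weights = [sum(column) / len(column) for column in zip(*expert_weights)]
print([round(w, 4) for w in final_weights])
```

Other aggregation rules, such as a geometric mean of the experts' judgments, are also common in AHP and would give slightly different values.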

Table A7.

Business scenarios’ importance weights represented by the database contribution of the DBCAMS method.

| Business Scenario | Importance Weight Expression |
|---|---|

Appendix B. Supplementary Details for Experiment on TrainTicket

Table A8, Table A9, Table A10, Table A11 and Table A12 are the five pairwise comparison matrices scored by experts for user business scenarios, and Table A13, Table A14, Table A15, Table A16 and Table A17 are the five pairwise comparison matrices scored by experts for administrator business scenarios. Table A18, Table A19 and Table A20 show the hierarchical sorting and consistency test of the ten pairwise comparison matrices. Table A21 presents the importance weights of business scenarios represented by the database contribution of the DBCAMS method.

Table A8.

The pairwise comparison matrix .

| | | | | |
|---|---|---|---|---|
| 1 | 2 | 3 | 1/2 | 5 |
| 1/2 | 1 | 2 | 1/4 | 3 |
| 1/3 | 1/2 | 1 | 1/5 | 2 |
| 2 | 4 | 5 | 1 | 7 |
| 1/5 | 1/3 | 1/2 | 1/7 | 1 |

Table A9.

The pairwise comparison matrix .

| | | | | |
|---|---|---|---|---|
| 1 | 1 | 7 | 5 | 9 |
| 1 | 1 | 7 | 5 | 9 |
| 1/7 | 1/7 | 1 | 1/3 | 3 |
| 1/5 | 1/5 | 3 | 1 | 5 |
| 1/9 | 1/9 | 1/3 | 1/5 | 1 |

Table A10.

The pairwise comparison matrix .

| | | | | |
|---|---|---|---|---|
| 1 | 3 | 9 | 5 | 4 |
| 1/3 | 1 | 7 | 5 | 3 |
| 1/9 | 1/7 | 1 | 1/3 | 1/4 |
| 1/5 | 1/5 | 3 | 1 | 1/2 |
| 1/4 | 1/3 | 4 | 2 | 1 |

Table A11.

The pairwise comparison matrix .

| | | | | |
|---|---|---|---|---|
| 1 | 3 | 5 | 2 | 4 |
| 1/5 | 1 | 3 | 1/2 | 2 |
| 1/9 | 1/3 | 1 | 1/4 | 1/2 |
| 1/3 | 4 | 5 | 1 | 3 |
| 1/7 | 1/5 | 3 | 1/3 | 1 |

Table A12.

The pairwise comparison matrix .

| | | | | |
|---|---|---|---|---|
| 1 | 2 | 4 | 3 | 5 |
| 1/2 | 1 | 3 | 2 | 4 |
| 1/4 | 1/3 | 1 | 1/2 | 2 |
| 1/3 | 1/2 | 2 | 1 | 3 |
| 1/5 | 1/4 | 1/2 | 1/3 | 1 |

Table A13.

The pairwise comparison matrix .

| | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 1 | 1/3 | 1/2 | 6 | 7 | 3 | 4 | 5 | 2 |
| 3 | 1 | 2 | 8 | 9 | 5 | 6 | 7 | 4 |
| 2 | 1/2 | 1 | 7 | 8 | 4 | 5 | 6 | 3 |
| 1/6 | 1/8 | 1/7 | 1 | 2 | 1/4 | 1/3 | 1/2 | 1/5 |
| 1/7 | 1/9 | 1/8 | 1/2 | 1 | 1/5 | 1/4 | 1/3 | 1/6 |
| 1/3 | 1/5 | 1/4 | 4 | 5 | 1 | 2 | 3 | 1/2 |
| 1/4 | 1/6 | 1/5 | 3 | 4 | 1/2 | 1 | 2 | 1/3 |
| 1/5 | 1/7 | 1/6 | 2 | 3 | 1/3 | 1/2 | 1 | 1/4 |
| 1/2 | 1/4 | 1/3 | 5 | 6 | 2 | 3 | 4 | 1 |

Table A14.

The pairwise comparison matrix .

| | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 1 | 5 | 5 | 3 | 9 | 7 | 7 | 7 | 8 |
| 1/5 | 1 | 1 | 1/3 | 7 | 5 | 5 | 5 | 3 |
| 1/5 | 1 | 1 | 1/3 | 7 | 5 | 5 | 5 | 3 |
| 1/3 | 3 | 3 | 1 | 8 | 6 | 6 | 6 | 2 |
| 1/9 | 1/7 | 1/7 | 1/8 | 1 | 1/3 | 1/3 | 1/3 | 1/5 |
| 1/7 | 1/5 | 1/5 | 1/6 | 3 | 1 | 1 | 1 | 1/3 |
| 1/7 | 1/5 | 1/5 | 1/6 | 3 | 1 | 1 | 1 | 1/3 |
| 1/7 | 1/5 | 1/5 | 1/6 | 3 | 1 | 1 | 1 | 1/3 |
| 1/8 | 1/3 | 1/3 | 1/2 | 5 | 3 | 3 | 3 | 1 |

Table A15.

The pairwise comparison matrix .

| | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 1 | 4 | 2 | 6 | 3 | 7 | 8 | 9 | 5 |
| 1/4 | 1 | 1/3 | 3 | 1/2 | 4 | 5 | 6 | 2 |
| 1/2 | 3 | 1 | 5 | 2 | 6 | 7 | 8 | 4 |
| 1/6 | 1/3 | 1/5 | 1 | 1/4 | 2 | 3 | 4 | 1/2 |
| 1/3 | 2 | 1/2 | 4 | 1 | 5 | 6 | 7 | 3 |
| 1/7 | 1/4 | 1/6 | 1/2 | 1/5 | 1 | 2 | 3 | 1/3 |
| 1/8 | 1/5 | 1/7 | 1/3 | 1/6 | 1/2 | 1 | 3 | 1/4 |
| 1/9 | 1/6 | 1/8 | 1/4 | 1/7 | 1/3 | 1/3 | 1 | 1/5 |
| 1/5 | 1/2 | 1/4 | 2 | 1/3 | 3 | 4 | 5 | 1 |

Table A16.

The pairwise comparison matrix .

| | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 1 | 2 | 1/2 | 4 | 7 | 1/3 | 5 | 3 | 6 |
| 1/2 | 1 | 1/3 | 3 | 6 | 1/4 | 4 | 2 | 5 |
| 2 | 3 | 1 | 5 | 8 | 1/2 | 6 | 4 | 7 |
| 1/4 | 1/3 | 1/5 | 1 | 4 | 1/6 | 2 | 1/2 | 3 |
| 1/7 | 1/6 | 1/8 | 1/4 | 1 | 1/9 | 1/3 | 1/5 | 1/2 |
| 3 | 4 | 2 | 6 | 9 | 1 | 7 | 5 | 8 |
| 1/5 | 1/4 | 1/6 | 1/2 | 3 | 1/7 | 1 | 1/3 | 2 |
| 1/3 | 1/2 | 1/4 | 2 | 5 | 1/5 | 3 | 1 | 4 |
| 1/6 | 1/5 | 1/7 | 1/3 | 2 | 1/8 | 1/2 | 1/4 | 1 |

Table A17.

The pairwise comparison matrix .

| | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 1 | 4 | 2 | 3 | 7 | 5 | 8 | 6 | 9 |
| 1/4 | 1 | 1/3 | 1/2 | 4 | 2 | 5 | 3 | 6 |
| 1/2 | 3 | 1 | 2 | 6 | 4 | 7 | 5 | 8 |
| 1/3 | 2 | 1/2 | 1 | 5 | 3 | 6 | 4 | 7 |
| 1/7 | 1/4 | 1/6 | 1/5 | 1 | 1/3 | 2 | 1/2 | 3 |
| 1/5 | 1/2 | 1/4 | 1/3 | 3 | 1 | 4 | 2 | 5 |
| 1/8 | 1/5 | 1/7 | 1/6 | 1/2 | 1/4 | 1 | 1/3 | 2 |
| 1/6 | 1/3 | 1/5 | 1/4 | 2 | 1/2 | 3 | 1 | 4 |
| 1/9 | 1/6 | 1/8 | 1/7 | 1/3 | 1/5 | 1/2 | 1/4 | 1 |

Table A18.

The hierarchical single sorting results of . The CR of each matrix is in bold.

| | | | | | CI | CR |
|---|---|---|---|---|---|---|
| 0.2559 | 0.1417 | 0.0871 | 0.4638 | 0.0515 | 0.0104 | **0.0093** |
| 0.3969 | 0.3969 | 0.0584 | 0.1165 | 0.0312 | 0.0511 | **0.0457** |
| 0.4862 | 0.2786 | 0.0359 | 0.0769 | 0.1224 | 0.0455 | **0.0406** |
| 0.4116 | 0.1417 | 0.0531 | 0.3066 | 0.0869 | 0.0031 | **0.0028** |
| 0.4185 | 0.2625 | 0.0973 | 0.1599 | 0.0618 | 0.0170 | **0.0152** |

Table A19.

The hierarchical single sorting results of . The CR of each matrix is in bold.

| | | | | | | | | | CI | CR |
|---|---|---|---|---|---|---|---|---|---|---|
| 0.1555 | 0.3121 | 0.2223 | 0.0247 | 0.0183 | 0.0739 | 0.0507 | 0.0350 | 0.1075 | 0.0502 | **0.0346** |
| 0.3721 | 0.1238 | 0.1238 | 0.1986 | 0.0171 | 0.0317 | 0.0317 | 0.0317 | 0.0695 | 0.0782 | **0.0539** |
| 0.3113 | 0.1075 | 0.2219 | 0.0507 | 0.1553 | 0.0350 | 0.0265 | 0.0178 | 0.0739 | 0.0566 | **0.0391** |
| 0.1555 | 0.1075 | 0.2223 | 0.0507 | 0.0183 | 0.3121 | 0.0350 | 0.0739 | 0.0247 | 0.0502 | **0.0346** |
| 0.3121 | 0.1075 | 0.2223 | 0.1555 | 0.0350 | 0.0739 | 0.0247 | 0.0507 | 0.0183 | 0.0502 | **0.0346** |

Table A20.

The hierarchical total sorting results of all business scenarios. The CR_total of each hierarchical total sorting is in bold.

| | | | | | | | | | | | | | | CR_total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.1279 | 0.0708 | 0.0435 | 0.2319 | 0.0258 | 0.0777 | 0.1561 | 0.1112 | 0.0124 | 0.0092 | 0.0369 | 0.0253 | 0.0175 | 0.0538 | **0.0236** |
| 0.1985 | 0.1985 | 0.0292 | 0.0583 | 0.0156 | 0.1860 | 0.0619 | 0.0619 | 0.0993 | 0.0085 | 0.0159 | 0.0159 | 0.0159 | 0.0347 | **0.0503** |
| 0.2431 | 0.1393 | 0.0179 | 0.0385 | 0.0612 | 0.1556 | 0.0538 | 0.1110 | 0.0254 | 0.0777 | 0.0175 | 0.0133 | 0.0089 | 0.0370 | **0.0397** |
| 0.2058 | 0.0709 | 0.0266 | 0.1533 | 0.0434 | 0.0777 | 0.0538 | 0.1112 | 0.0253 | 0.0092 | 0.1561 | 0.0175 | 0.0369 | 0.0124 | **0.0207** |
| 0.2093 | 0.1313 | 0.0486 | 0.0800 | 0.0309 | 0.1561 | 0.0538 | 0.1112 | 0.0777 | 0.0175 | 0.0369 | 0.0124 | 0.0253 | 0.0092 | **0.0261** |

Table A21.

Business scenarios’ importance weights represented by the database contribution of the DBCAMS method.

| Business Scenario | Importance Weight Expression |
|---|---|

References

- Lewis, J.; Fowler, M. Microservices: A Definition of This New Architectural Term. 2014. Available online: http://martinfowler.com/articles/microservices.html (accessed on 24 October 2022).

- Richardson, C. Microservices Patterns: With Examples in Java; Manning: New York, NY, USA, 2018.

- Guo, X.; Peng, X.; Wang, H.; Li, W.; Jiang, H.; Ding, D.; Xie, T.; Su, L. Graph-Based Trace Analysis for Microservice Architecture Understanding and Problem Diagnosis. In ESEC/FSE 2020: Proceedings of the 28th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1387–1397.

- Zhou, X.; Peng, X.; Xie, T.; Sun, J.; Ji, C.; Li, W.; Ding, D. Fault Analysis and Debugging of Microservice Systems: Industrial Survey, Benchmark System, and Empirical Study. IEEE Trans. Softw. Eng. 2021, 47, 243–260.

- Francesco, P.D.; Malavolta, I.; Lago, P. Research on Architecting Microservices: Trends, Focus, and Potential for Industrial Adoption. In Proceedings of the 2017 IEEE International Conference on Software Architecture (ICSA), Gothenburg, Sweden, 3–7 April 2017; pp. 21–30.

- Xiang, Q.; Peng, X.; He, C.; Wang, H.; Xie, T.; Liu, D.; Zhang, G.; Cai, Y. No Free Lunch: Microservice Practices Reconsidered in Industry. arXiv 2021, arXiv:2106.07321.

- Liu, D.; He, C.; Peng, X.; Lin, F.; Zhang, C.; Gong, S.; Li, Z.; Ou, J.; Wu, Z. MicroHECL: High-Efficient Root Cause Localization in Large-Scale Microservice Systems. In Proceedings of the 2021 IEEE/ACM 43rd International Conference on Software Engineering: Software Engineering in Practice (ICSE-SEIP), Madrid, Spain, 25–28 May 2021; pp. 338–347.

- Ding, D.; Peng, X.; Guo, X.; Zhang, J.; Wu, Y. Scenario-driven and bottom-up microservice decomposition for monolithic systems. J. Softw. 2020, 31, 3461–3480.

- Zhang, C.; Peng, X.; Sha, C.; Zhang, K.; Fu, Z.; Wu, X.; Lin, Q.; Zhang, D. DeepTraLog: Trace-Log Combined Microservice Anomaly Detection through Graph-based Deep Learning. In Proceedings of the 44th International Conference on Software Engineering, Pittsburgh, PA, USA, 25–27 May 2022; pp. 623–634.

- Sampaio, A.R.; Rubin, J.; Beschastnikh, I.; Rosa, N.S. Improving microservice-based applications with runtime placement adaptation. J. Internet Serv. Appl. 2019, 10, 4.

- Ma, W.; Wang, R.; Gu, Y.; Meng, Q.; Huang, H.; Deng, S.; Wu, Y. Multi-objective microservice deployment optimization via a knowledge-driven evolutionary algorithm. Complex Intell. Syst. 2021, 7, 1153–1171.

- Li, B.; Peng, X.; Xiang, Q.; Wang, H.; Xie, T.; Sun, J.; Liu, X. Enjoy your observability: An industrial survey of microservice tracing and analysis. Empir. Softw. Eng. 2022, 27, 25.

- Wang, K.; Li, K.; Gao, J.; Liu, B.; Fang, Z.; Ke, W. A Quantitative Evaluation Method of Software Usability Based on Improved GOMS Model. In Proceedings of the 2021 IEEE 21st International Conference on Software Quality, Reliability and Security Companion (QRS-C), Hainan, China, 6–10 December 2021; pp. 691–697.

- Saaty, T.L. How to make a decision: The analytic hierarchy process. Eur. J. Oper. Res. 1990, 48, 9–26.

- Sockshop. Available online: https://github.com/microservices-demo/microservices-demo (accessed on 3 September 2022).

- TrainTicket. Available online: https://github.com/FudanSELab/train-ticket/releases/tag/v0.2.0 (accessed on 3 September 2022).

- Sridharan, C. Distributed Systems Observability: A Guide to Building Robust Systems; O’Reilly Media: Sebastopol, CA, USA, 2018.

- Sigelman, B.H.; Barroso, L.A.; Burrows, M.; Stephenson, P.; Plakal, M.; Beaver, D.; Jaspan, S.; Shanbhag, C. Dapper, a Large-Scale Distributed Systems Tracing Infrastructure; Technical Report; Google, Inc.: Mountain View, CA, USA, 2010.

- Pinpoint. Available online: https://github.com/pinpoint-apm/pinpoint (accessed on 4 September 2022).

- OpenZipkin. Available online: https://zipkin.io/ (accessed on 4 September 2022).

- Jaeger. Available online: https://www.jaegertracing.io/ (accessed on 4 September 2022).

- Apache Skywalking. Available online: https://skywalking.apache.org/ (accessed on 4 September 2022).

- The OpenTracing Project. Available online: https://opentracing.io/ (accessed on 4 September 2022).

- OpenCensus. Available online: https://opencensus.io/ (accessed on 4 September 2022).

- OpenTelemetry. Available online: https://opentelemetry.io/ (accessed on 4 September 2022).

- Zhou, X.; Peng, X.; Xie, T.; Sun, J.; Ji, C.; Liu, D.; Xiang, Q.; He, C. Latent Error Prediction and Fault Localization for Microservice Applications by Learning from System Trace Logs. In ESEC/FSE 2019: Proceedings of the 2019 27th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering; Association for Computing Machinery: New York, NY, USA, 2019; pp. 683–694.

- Liu, P.; Xu, H.; Ouyang, Q.; Jiao, R.; Chen, Z.; Zhang, S.; Yang, J.; Mo, L.; Zeng, J.; Xue, W.; et al. Unsupervised Detection of Microservice Trace Anomalies through Service-Level Deep Bayesian Networks. In Proceedings of the 2020 IEEE 31st International Symposium on Software Reliability Engineering (ISSRE), Coimbra, Portugal, 12–15 October 2020; pp. 48–58.

- Bogner, J.; Schlinger, S.; Wagner, S.; Zimmermann, A. A Modular Approach to Calculate Service-Based Maintainability Metrics from Runtime Data of Microservices. In Product-Focused Software Process Improvement. PROFES 2019; Franch, X., Männistö, T., Martínez-Fernández, S., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2019; pp. 489–496.

- Bonacich, P. Factoring and weighting approaches to status scores and clique identification. J. Math. Sociol. 1972, 2, 113–120.

- Chen, D.; Lü, L.; Shang, M.S.; Zhang, Y.C.; Zhou, T. Identifying influential nodes in complex networks. Phys. A Stat. Mech. Its Appl. 2012, 391, 1777–1787.

- Kitsak, M.; Gallos, L.K.; Havlin, S.; Liljeros, F.; Muchnik, L.; Stanley, H.E.; Makse, H.A. Identification of influential spreaders in complex networks. Nat. Phys. 2010, 6, 888–893.

- Hage, P.; Harary, F. Eccentricity and centrality in networks. Soc. Netw. 1995, 17, 57–63.

- Stephenson, K.; Zelen, M. Rethinking centrality: Methods and examples. Soc. Netw. 1989, 11, 1–37.

- Estrada, E.; Rodríguez-Velázquez, J.A. Subgraph centrality in complex networks. Phys. Rev. E 2005, 71, 056103.

- Poulin, R.; Boily, M.C.; Mâsse, B. Dynamical systems to define centrality in social networks. Soc. Netw. 2000, 22, 187–220.

- Brin, S.; Page, L. The anatomy of a large-scale hypertextual Web search engine. Comput. Netw. ISDN Syst. 1998, 30, 107–117.

- Lü, L.; Zhang, Y.C.; Yeung, C.H.; Zhou, T. Leaders in social networks, the delicious case. PLoS ONE 2011, 6, e21202.

- Kleinberg, J.M. Authoritative Sources in a Hyperlinked Environment. J. ACM 1999, 46, 604–632.

- Lempel, R.; Moran, S. The stochastic approach for link-structure analysis (SALSA) and the TKC effect. Comput. Netw. 2000, 33, 387–401.

- Dangalchev, C. Residual closeness in networks. Phys. A Stat. Mech. Its Appl. 2006, 365, 556–564.

- Lü, L.; Chen, D.; Ren, X.L.; Zhang, Q.M.; Zhang, Y.C.; Zhou, T. Vital nodes identification in complex networks. Phys. Rep. 2016, 650, 1–63. [Google Scholar] [CrossRef]

- Gousios, G.; Kalliamvakou, E.; Spinellis, D. Measuring Developer Contribution from Software Repository Data. In MSR ’08: Proceedings of the 2008 International Working Conference on Mining Software Repositories; Association for Computing Machinery: New York, NY, USA, 2008; pp. 129–132. [Google Scholar] [CrossRef]

- Zhou, M.; Mockus, A. Who Will Stay in the FLOSS Community? Modeling Participant’s Initial Behavior. IEEE Trans. Softw. Eng. 2015, 41, 82–99.

- Wang, G.; Dang, C.X.; Zhou, Z. Measure Contribution of Participants in Federated Learning. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 2597–2604.

- Boyer, S.; Ikeda, T.; Lefort, M.C.; Malumbres-Olarte, J.; Schmidt, J.M. Percentage-based Author Contribution Index: A universal measure of author contribution to scientific articles. Res. Integr. Peer Rev. 2017, 2, 18.

- Al-Harbi, K.M.S. Application of the AHP in project management. Int. J. Proj. Manag. 2001, 19, 19–27.

- Vaidya, O.S.; Kumar, S. Analytic hierarchy process: An overview of applications. Eur. J. Oper. Res. 2006, 169, 1–29.

- Saaty, T.L. A scaling method for priorities in hierarchical structures. J. Math. Psychol. 1977, 15, 234–281.

- Harada, T.; Alba, E. Parallel Genetic Algorithms: A Useful Survey. ACM Comput. Surv. 2020, 53, 86.

- Quint, P.; Kratzke, N. Towards a Lightweight Multi-Cloud DSL for Elastic and Transferable Cloud-native Applications. In Proceedings of the 8th International Conference on Cloud Computing and Services Science, CLOSER 2018, Funchal, Portugal, 19–21 March 2018; pp. 400–408.

- Brogi, A.; Rinaldi, L.; Soldani, J. TosKer: A synergy between TOSCA and Docker for orchestrating multicomponent applications. Softw. Pract. Exp. 2018, 48, 2061–2079.

- Gretton, A.; Borgwardt, K.; Rasch, M.; Schölkopf, B.; Smola, A. A Kernel Method for the Two-Sample-Problem. In Advances in Neural Information Processing Systems 19 (NIPS 2006); Schölkopf, B., Platt, J., Hoffman, T., Eds.; MIT Press: Cambridge, MA, USA, 2006; Volume 19.

- Viennot, N.; Lécuyer, M.; Bell, J.; Geambasu, R.; Nieh, J. Synapse: A Microservices Architecture for Heterogeneous-Database Web Applications. In EuroSys ’15: Proceedings of the Tenth European Conference on Computer Systems; Association for Computing Machinery: New York, NY, USA, 2015; pp. 1–16.

- Gu, Y.; Xie, J.; Liu, H.; Yang, Y.; Tan, Y.; Chen, L. Evaluation and analysis of comprehensive performance of a brake pedal based on an improved analytic hierarchy process. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2021, 235, 2636–2648.

- Wang, Q.; Zhao, D.; Yang, B.; Li, C. Risk assessment of the UPIoT construction in China using combined dynamic weighting method under IFGDM environment. Sustain. Cities Soc. 2020, 60, 102199.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).