A Reputation Model of OSM Contributor Based on Semantic Similarity of Ontology Concepts

Abstract

1. Introduction

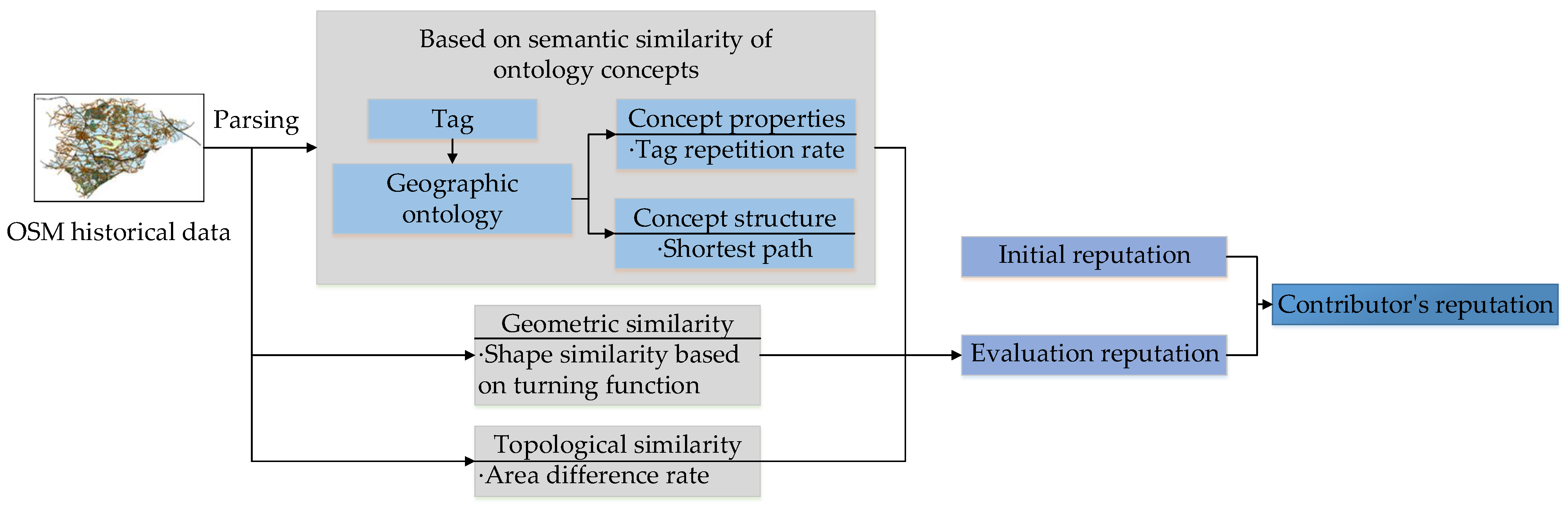

- (1) This study applies ontology research from the knowledge domain to construct a volunteered geographic information (VGI) ontology and to establish a semantic similarity evaluation model for the objects contributed by volunteers. The semantic similarity of ontology concepts is then combined with geometric similarity and topological similarity to obtain the contributor’s evaluation reputation;

- (2) A method for evaluating the contributor’s initial reputation is proposed. First, contributors are classified with an improved WPCA-based feature dimension reduction and classification method. Then, an initial reputation is assigned to every OSM user in each class according to these categories and related research results. Experiments verify the effectiveness of this method;

- (3) A comprehensive evaluation method of the contributor’s reputation is proposed, which combines the initial reputation with the evaluation reputation. As the number of reputation evaluations of a contributor increases, the weight of the initial reputation decreases;

- (4) The validity of the proposed contributor reputation model is verified by experiments on real historical OSM data. The experiments show that a contributor’s reputation is broadly positively correlated with the contributor’s initial reputation. Because the semantic similarity between object versions is taken into account, the quality of the tags each contributor adds can be evaluated more accurately.
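Contribution (3) states only that the weight of the initial reputation shrinks as evaluations accumulate. The minimal Python sketch below shows one weighting with that property. The schedule `w = k / (k + n)` and the smoothing constant `k` are assumptions for illustration, not the formula used in this paper.

```python
def combined_reputation(initial: float, evaluation: float,
                        n_evaluations: int, k: float = 5.0) -> float:
    """Blend initial and evaluation reputation with a decaying prior weight.

    Hypothetical schedule: w = k / (k + n). With n = 0 the result equals the
    initial reputation; as n grows, w -> 0 and the evaluation reputation
    dominates. k is an assumed constant, not a value from the paper.
    """
    if n_evaluations < 0 or k <= 0:
        raise ValueError("n_evaluations must be >= 0 and k must be > 0")
    w = k / (k + n_evaluations)
    return w * initial + (1.0 - w) * evaluation


if __name__ == "__main__":
    # A contributor who starts at 0.9 but whose edits evaluate at 0.6.
    for n in (0, 5, 50):
        print(n, round(combined_reputation(0.9, 0.6, n), 3))
```

With these assumed settings the combined value drifts from 0.9 toward 0.6 as the number of evaluations grows, which is the qualitative behaviour described in contribution (3).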

2. Related Work

2.1. The Quality of Crowdsourcing Geographic Data

2.2. The Contributor’s Reputation of Crowdsourcing Geographic Data

2.3. The Ontology of Crowdsourcing Geographic Data

3. Methods

3.1. Model Overview

3.2. Contributor’s Initial Reputation

3.3. Contributor’s Evaluation Reputation

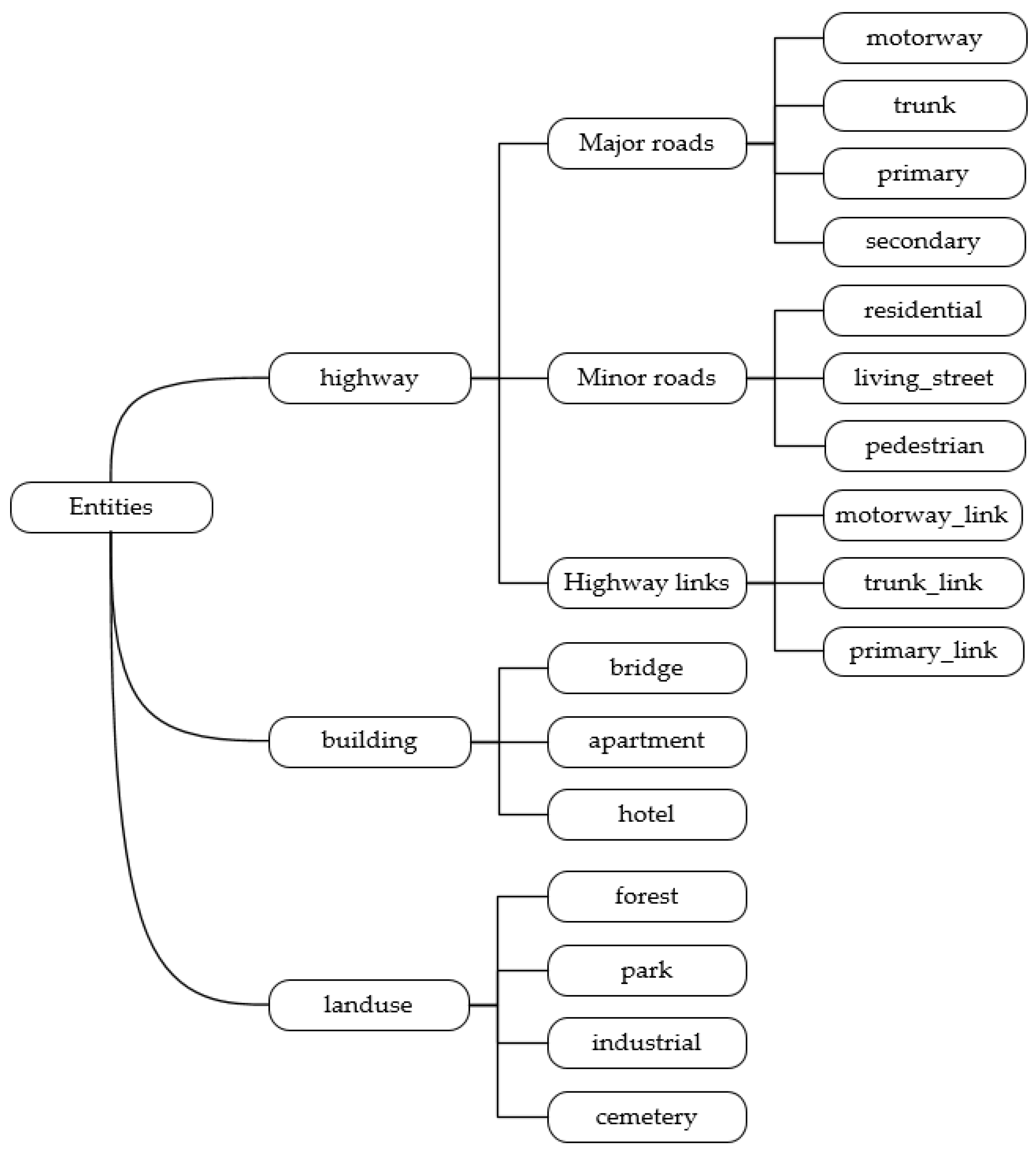

3.3.1. Constructing Geographic Ontology

For example, the ontology concept trunk is defined as follows:

trunk IS-A highway {Definition: Important roads, typically divided; Semantic relationship: IS-A relationship with highway; Nature: roads such as elevated expressways, airport access expressways, river-crossing tunnels, and expressways on bridges; Attributes: road width, road speed limit.}
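A concept record of this kind maps naturally onto a small data structure. The sketch below is an illustrative assumption, not the authors' implementation: the class name `OntologyConcept`, its field names, and the choice of `highway` as the root of the toy registry are hypothetical. The IS-A relationship is stored as a parent link so that a concept's ancestry can be followed.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class OntologyConcept:
    """One VGI ontology concept, mirroring the fields of the trunk example."""
    name: str
    parent: Optional[str]               # IS-A parent; None marks the root
    definition: str = ""
    nature: str = ""
    attributes: List[str] = field(default_factory=list)

    def is_a_chain(self, registry: Dict[str, "OntologyConcept"]) -> List[str]:
        """Follow IS-A links up to the root, e.g. ['trunk', 'highway']."""
        chain = [self.name]
        current = self
        while current.parent is not None:
            current = registry[current.parent]
            chain.append(current.name)
        return chain


# Toy registry; treating 'highway' as the root is an assumption.
REGISTRY: Dict[str, OntologyConcept] = {
    "highway": OntologyConcept("highway", None),
    "trunk": OntologyConcept(
        "trunk", "highway",
        definition="Important roads, typically divided",
        nature="roads such as elevated expressways, airport access expressways, "
               "river-crossing tunnels, and expressways on bridges",
        attributes=["road width", "road speed limit"],
    ),
}

print(REGISTRY["trunk"].is_a_chain(REGISTRY))  # ['trunk', 'highway']
```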

3.3.2. Semantic Similarity of Object Version

- (1) Similarity of concept attributes.

- (2) Semantic distance in the concept tree structure.
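For component (1), one common way to compare the attribute sets of two concepts is Tversky's ratio model from "Features of similarity". The sketch below uses that model as an assumed stand-in; the paper's exact attribute-similarity formula is not given in this section, and the `motorway` attribute set is hypothetical.

```python
def tversky_similarity(a: set, b: set, alpha: float = 0.5, beta: float = 0.5) -> float:
    """Tversky ratio model: |A∩B| / (|A∩B| + alpha*|A-B| + beta*|B-A|).

    With alpha = beta = 0.5 this reduces to the Dice coefficient; with
    alpha = beta = 1 it reduces to the Jaccard index.
    """
    common = len(a & b)
    only_a = len(a - b)
    only_b = len(b - a)
    denominator = common + alpha * only_a + beta * only_b
    if denominator == 0:
        return 1.0  # both attribute sets empty: treated as identical (design choice)
    return common / denominator


trunk = {"road width", "road speed limit"}
motorway = {"road width", "road speed limit", "number of lanes"}  # hypothetical attributes
print(tversky_similarity(trunk, motorway))
```

Setting `alpha` different from `beta` makes the measure asymmetric, which can be useful when the earlier version of an object should count as the reference.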

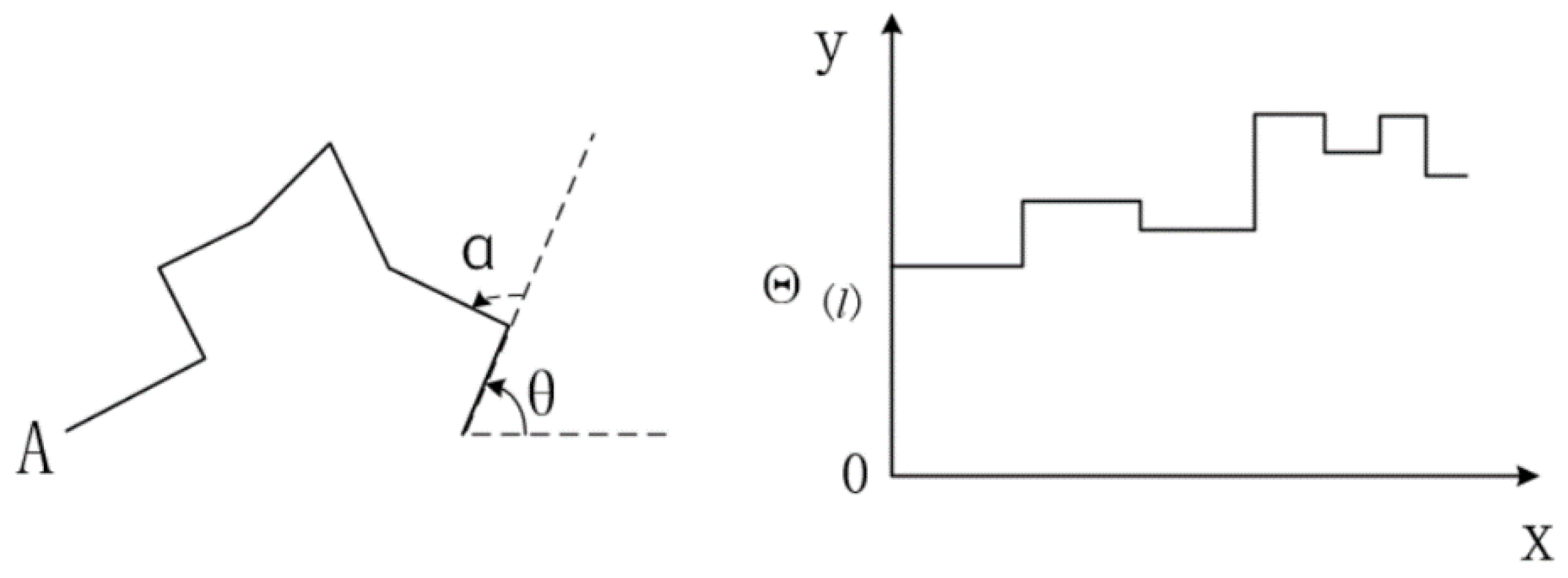
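For component (2), the position of two concepts in the IS-A tree can be turned into a distance and a similarity. The sketch below uses the edge count through the lowest common subsumer as the distance and the Wu–Palmer measure as the similarity; both are swapped-in standard techniques, not necessarily the paper's formula. The toy tree, including the root `feature`, is hypothetical.

```python
from typing import Dict, List, Optional

# Toy concept tree as child -> IS-A parent; 'feature' as root is an assumption.
PARENT: Dict[str, str] = {
    "highway": "feature", "landuse": "feature",
    "motorway": "highway", "trunk": "highway",
    "residential": "highway", "motorway_link": "highway",
    "forest": "landuse", "industrial": "landuse",
}


def path_to_root(concept: str, parent: Dict[str, str]) -> List[str]:
    """Return [concept, parent, ..., root]."""
    path = [concept]
    while path[-1] in parent:
        path.append(parent[path[-1]])
    return path


def depth(concept: str, parent: Dict[str, str]) -> int:
    """Depth with the root counted as 1."""
    return len(path_to_root(concept, parent))


def lowest_common_subsumer(a: str, b: str, parent: Dict[str, str]) -> Optional[str]:
    ancestors_b = set(path_to_root(b, parent))
    return next((c for c in path_to_root(a, parent) if c in ancestors_b), None)


def path_distance(a: str, b: str, parent: Dict[str, str]) -> int:
    """Number of IS-A edges on the path from a to b through their LCS."""
    lcs = lowest_common_subsumer(a, b, parent)
    if lcs is None:
        raise ValueError(f"{a!r} and {b!r} share no ancestor")
    return path_to_root(a, parent).index(lcs) + path_to_root(b, parent).index(lcs)


def wu_palmer(a: str, b: str, parent: Dict[str, str]) -> float:
    """2 * depth(LCS) / (depth(a) + depth(b)); 1.0 for identical concepts."""
    lcs = lowest_common_subsumer(a, b, parent)
    if lcs is None:
        raise ValueError(f"{a!r} and {b!r} share no ancestor")
    return 2 * depth(lcs, parent) / (depth(a, parent) + depth(b, parent))


print(path_distance("trunk", "forest", PARENT), wu_palmer("trunk", "motorway", PARENT))
```

Siblings under `highway` score higher than concepts that only meet at the root, which matches the intuition that a trunk retagged as a motorway is a smaller semantic change than one retagged as a forest.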

3.3.3. Geometric Similarity of Object Version

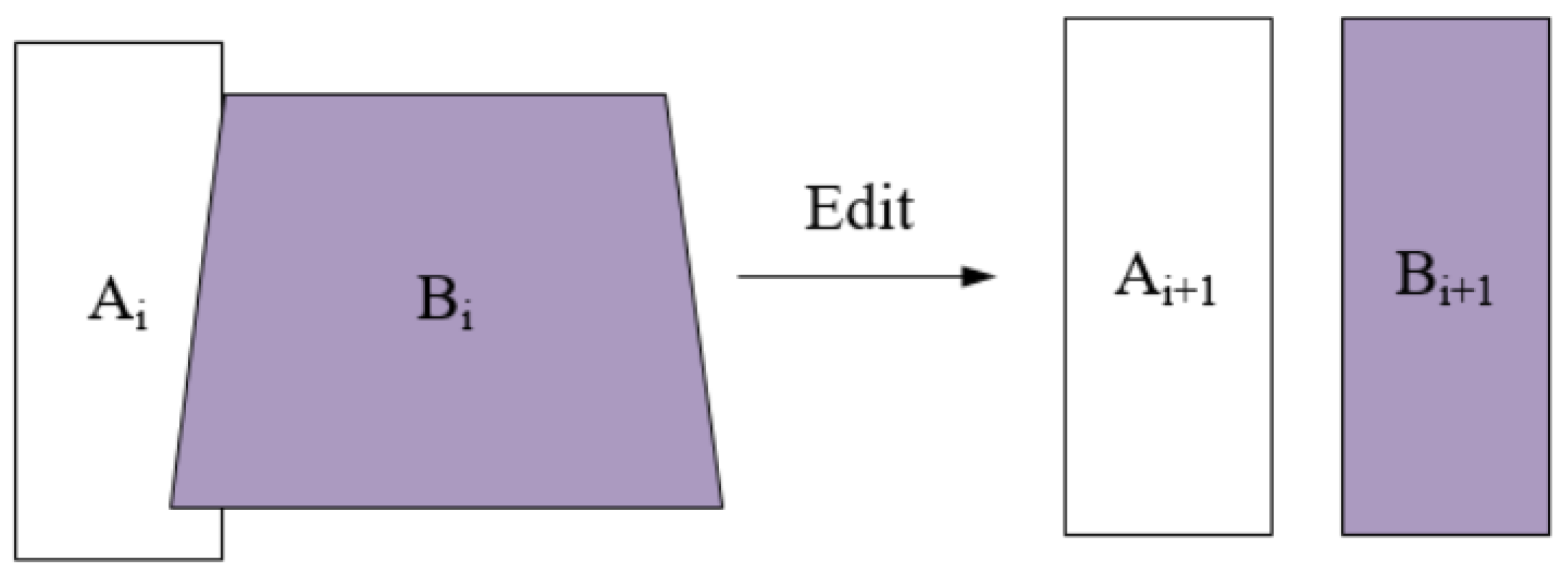
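The geometric similarity between two versions of an object can be illustrated with a distance between their geometries that is mapped to [0, 1]. The sketch below uses the discrete (vertex-only) Hausdorff distance with a linear cut-off. This is an assumed measure, not the paper's: the `tolerance` of 10 units is hypothetical, coordinates are assumed to be projected (for example metres), and a vertex-only distance ignores the interiors of segments.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def directed_hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Largest distance from a vertex of a to its nearest vertex of b."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric discrete Hausdorff distance between two vertex lists."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


def geometric_similarity(old: Sequence[Point], new: Sequence[Point],
                         tolerance: float = 10.0) -> float:
    """1.0 for identical vertex sets, falling linearly to 0.0 at `tolerance`."""
    if not old or not new:
        raise ValueError("both versions need at least one vertex")
    return max(0.0, 1.0 - hausdorff(old, new) / tolerance)


v1 = [(0.0, 0.0), (100.0, 0.0)]   # earlier version of a way (projected units)
v2 = [(0.0, 0.0), (100.0, 3.0)]   # later version: end node moved by 3 units
print(geometric_similarity(v1, v2))
```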

3.3.4. Topological Similarity of Object Version

3.4. Contributor’s Reputation

4. Experiment and Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Code | Layer | Class | Description | OSM Tag |
|---|---|---|---|---|
| 5111 | roads | motorway | Motorway/freeway | highway = motorway |
| 5112 | roads | trunk | Important roads, typically divided | highway = trunk |
| 5122 | roads | residential | Roads in residential areas | highway = residential |
| 5131 | roads | motorway_link | Roads that connect from one road to another of the same or lower category | highway = motorway_link |
| 2401 | buildings | hotel | A building designed with separate rooms available for overnight accommodation | tourism = hotel |
| 7201 | landuse | forest | A forest or woodland | landuse = forest, natural = wood |
| 7202 | landuse | park | A park | leisure = park, leisure = common |
| 7204 | landuse | industrial | An industrial area | landuse = industrial |
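The class table can be used as a lookup from an OSM object's tags to its code, layer and class. The sketch below transcribes the rows as listed (using the OSM key `natural` for wood); the function name `classify` and the rule that the first matching tag wins are illustrative assumptions.

```python
from typing import Dict, Optional, Tuple

# (key, value) -> (code, layer, class), transcribed from the class table.
TAG_TO_CLASS: Dict[Tuple[str, str], Tuple[int, str, str]] = {
    ("highway", "motorway"): (5111, "roads", "motorway"),
    ("highway", "trunk"): (5112, "roads", "trunk"),
    ("highway", "residential"): (5122, "roads", "residential"),
    ("highway", "motorway_link"): (5131, "roads", "motorway_link"),
    ("tourism", "hotel"): (2401, "buildings", "hotel"),
    ("landuse", "forest"): (7201, "landuse", "forest"),
    ("natural", "wood"): (7201, "landuse", "forest"),  # OSM key is 'natural'
    ("leisure", "park"): (7202, "landuse", "park"),
    ("leisure", "common"): (7202, "landuse", "park"),
    ("landuse", "industrial"): (7204, "landuse", "industrial"),
}


def classify(tags: Dict[str, str]) -> Optional[Tuple[int, str, str]]:
    """Return the class of the first tag (in dict order) found in the table."""
    for key, value in tags.items():
        hit = TAG_TO_CLASS.get((key, value))
        if hit is not None:
            return hit
    return None  # tags not covered by the table


print(classify({"highway": "trunk", "name": "example road"}))
```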

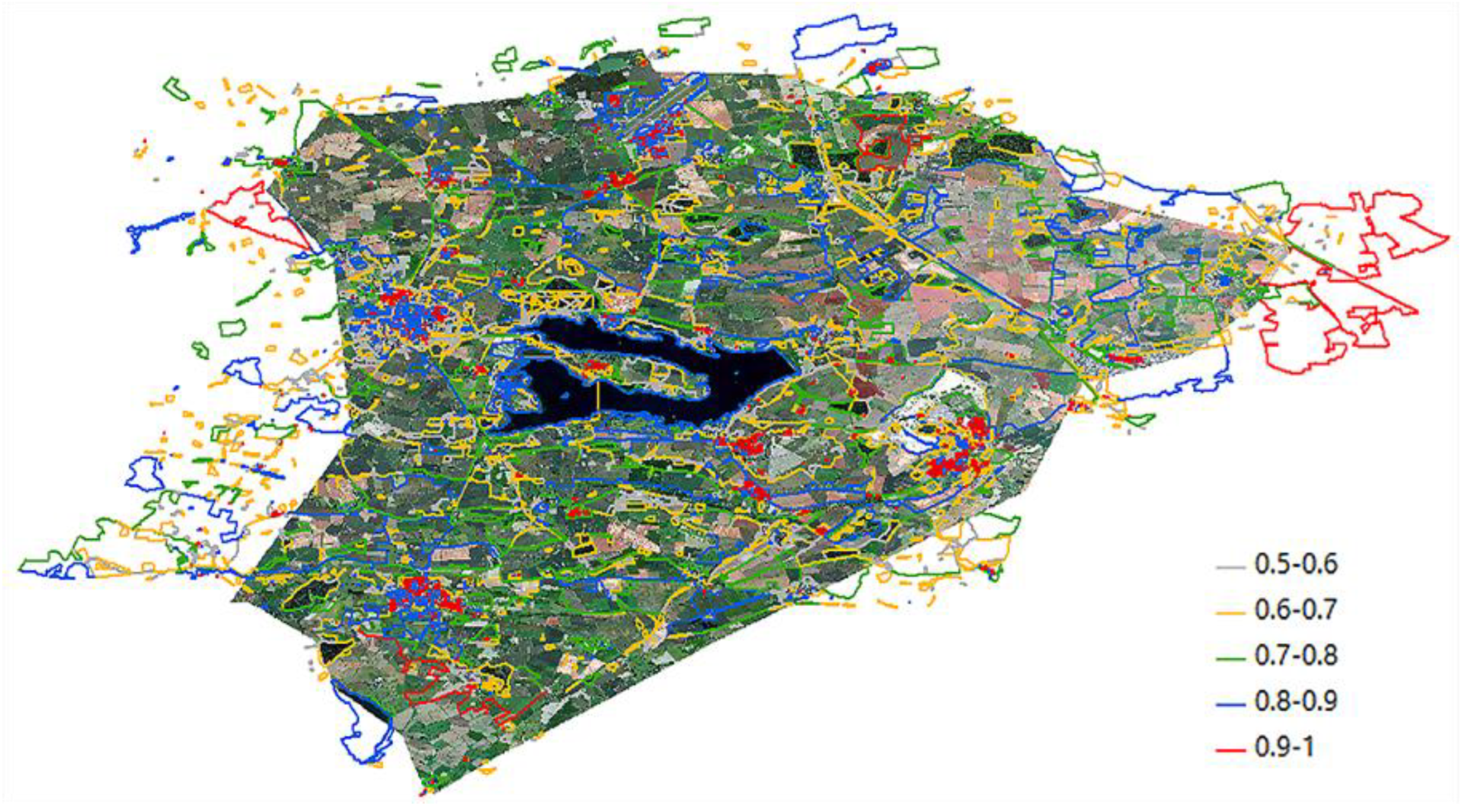

| Initial Reputation / Interval | 0.5–0.6 | 0.6–0.7 | 0.7–0.8 | 0.8–0.9 | 0.9–1 | Total/Person |
|---|---|---|---|---|---|---|
| 0.75 (Novice or unskilled contributors) | 5 | 21 | 116 | 19 | 0 | 161 |
| 0.9 (Major contributors) | 0 | 1 | 7 | 523 | 0 | 531 |
| 1 (Professional contributors) | 0 | 0 | 1 | 7 | 24 | 32 |
| Total/Person | 5 | 22 | 124 | 549 | 24 | 724 |
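The distribution table assigns each contributor class an initial reputation (0.75, 0.9 or 1) and counts contributors per reputation interval. The sketch below shows how such counts could be produced. Treating each interval as half-open [lower, upper) with the last one closed at 1 is an assumption, since the table does not state its boundary convention; the names `interval_label` and `histogram` are hypothetical.

```python
from collections import Counter
from typing import Iterable, Optional

# Initial reputation per contributor class, as listed in the table.
INITIAL_REPUTATION = {"novice": 0.75, "major": 0.9, "professional": 1.0}

# Interval edges and labels; [lower, upper) with the last interval closed at 1.
EDGES = (0.5, 0.6, 0.7, 0.8, 0.9, 1.0)
LABELS = ("0.5-0.6", "0.6-0.7", "0.7-0.8", "0.8-0.9", "0.9-1")


def interval_label(reputation: float) -> Optional[str]:
    """Map a reputation value to its interval label, or None if out of range."""
    if not EDGES[0] <= reputation <= EDGES[-1]:
        return None
    for upper, label in zip(EDGES[1:], LABELS):
        if reputation < upper:
            return label
    return LABELS[-1]  # reputation == 1.0


def histogram(reputations: Iterable[float]) -> Counter:
    """Count contributors per interval, as in one row of the table."""
    labels = (interval_label(r) for r in reputations)
    return Counter(label for label in labels if label is not None)


print(histogram([0.55, 0.85, 0.86, 1.0]))
```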

| Effect / Interval | 0.5–0.6 | 0.6–0.7 | 0.7–0.8 | 0.8–0.9 | 0.9–1 | Total/Version |
|---|---|---|---|---|---|---|
| Error | 10 | 5 | 1 | 1 | 0 | 17 |
| Poor | 3 | 10 | 78 | 175 | 71 | 337 |
| Good | 9 | 14 | 92 | 580 | 2368 | 3063 |
| Total/Version | 22 | 29 | 171 | 756 | 2439 | 3417 |

| Object Id | Semantic Similarity | Geometric Similarity | Topological Similarity | One-Time Evaluation |
|---|---|---|---|---|
| 4406271 | 0.333 | 0.333 | 0.228 | 0.298 |
| 43407756 | 1 | 0.871 | 0.812 | 0.894 |
| 3700148 | 0.235 | 1 | 1 | 0.745 |
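Each one-time evaluation in the table above equals, to three decimals, the unweighted mean of the three similarities; for example, (0.333 + 0.333 + 0.228) / 3 = 0.298. The sketch below reproduces the three rows under that reading. Equal weighting is inferred from these rows only and may not be the paper's exact formula, so the weights are left as a parameter.

```python
from typing import Sequence


def one_time_evaluation(semantic: float, geometric: float, topological: float,
                        weights: Sequence[float] = (1.0, 1.0, 1.0)) -> float:
    """Weighted mean of the three similarities (equal weights by default)."""
    ws, wg, wt = weights
    total = ws + wg + wt
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return (ws * semantic + wg * geometric + wt * topological) / total


# Rows of the table: (object id, semantic, geometric, topological, reported value)
ROWS = [
    (4406271, 0.333, 0.333, 0.228, 0.298),
    (43407756, 1.0, 0.871, 0.812, 0.894),
    (3700148, 0.235, 1.0, 1.0, 0.745),
]

for obj_id, s, g, t, reported in ROWS:
    print(obj_id, round(one_time_evaluation(s, g, t), 3), reported)
```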

| User Id | Evaluation Reputation | Initial Reputation | Reputation |
|---|---|---|---|
| 280348 | 0.316 | 0.75 | 0.526 |
| 12355 | 0.884 | 1 | 0.948 |
| 1377 | 0.627 | 0.9 | 0.736 |
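If the reputation in the table above is a convex combination of the initial and evaluation reputations, R = w·R0 + (1 − w)·Re, then the weight on the initial reputation can be back-solved as w = (R − Re) / (R0 − Re). Under that assumption the three users imply weights of roughly 0.48, 0.55 and 0.40. Differing weights fit the statement that the initial weight depends on each contributor's number of evaluations, although the table does not report those counts. Because the inputs are rounded to three decimals, the implied weights are approximate.

```python
def implied_initial_weight(evaluation: float, initial: float, reputation: float) -> float:
    """Solve reputation = w*initial + (1 - w)*evaluation for w (assumed model)."""
    if initial == evaluation:
        raise ValueError("weight is undetermined when initial == evaluation")
    return (reputation - evaluation) / (initial - evaluation)


# Rows of the table: (user id, evaluation reputation, initial reputation, reputation)
USERS = [
    (280348, 0.316, 0.75, 0.526),
    (12355, 0.884, 1.0, 0.948),
    (1377, 0.627, 0.9, 0.736),
]

for user_id, re, r0, r in USERS:
    print(user_id, round(implied_initial_weight(re, r0, r), 3))
```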

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, Y.; Wei, X.; Liu, Y.; Liao, Z. A Reputation Model of OSM Contributor Based on Semantic Similarity of Ontology Concepts. Appl. Sci. 2022, 12, 11363. https://doi.org/10.3390/app122211363

Chicago/Turabian Style: Zhao, Yijiang, Xingcai Wei, Yizhi Liu, and Zhuhua Liao. 2022. "A Reputation Model of OSM Contributor Based on Semantic Similarity of Ontology Concepts" Applied Sciences 12, no. 22: 11363. https://doi.org/10.3390/app122211363