Abstract

The optimal water flow in fish-breeding tanks is one of the crucial elements necessary for the well-being and proper growth of fish such as salmon or trout. Given the round shape of the tanks and the uneven distribution of water-flow velocity, ensuring a nearly optimal flow is an important task that may be performed using various sensors installed to monitor the water flow. Nevertheless, with the rapid development of video analysis methods and the increasing availability of relatively cheap cameras, video feedback has become an interesting alternative that limits the number of sensors inside the water tanks, in accordance with the requirements of fish breeders. In this paper, the use of optical flow algorithms for this purpose is analyzed and an estimation method based on their features is proposed. The flow estimates obtained with the proposed method are verified experimentally and compared with the measurements of a professional water-flow meter, demonstrating a high correlation (exceeding 0.9) and confirming the proposed solution as a good alternative to expensive sensors and meters.

1. Introduction

The application of video analysis has recently become one of the leading interdisciplinary trends in modern aquaculture. Evident reasons for this include the increasing availability and affordability of video cameras, as well as the growing computational power of popular devices that, in some applications, may replace typical personal computers, e.g., Raspberry Pi, FPGA boards, NVIDIA Jetson Nano and similar, more sophisticated hardware platforms. An analogous situation exists in the automotive industry and other areas of technology, reflecting the rapid development of Industry 4.0 solutions, which are now extending into Agriculture 4.0 [1].

The most typical applications of video analysis in fish farming seem to be motion tracking [2], as well as the recognition and classification of fish [3], which has recently also utilized popular convolutional neural networks (CNNs) [4]. The application of such video systems may also be helpful for the determination of fish behavior [5,6,7,8] as well as in the development of intelligent feeding systems [9,10,11]. Another interesting area of research related to these topics is water-quality monitoring [12]. Some other recent works focus on the applications of artificial-intelligence methods, including mobile remote-control systems and IoT solutions applied in fish farms [13,14].

Many of these research areas are motivated by the wish to limit the number of sensors and additional equipment mounted inside the ponds or tanks, which is also important for economic reasons. Such video-based approaches do not affect fish directly, reducing the risk of injuring individual fish, and they simplify the hardware architecture of systems that would otherwise contain sensors and their necessary connections. Therefore, a similar approach may also be beneficial for monitoring the water flow in recirculating aquaculture systems (RAS).

Proper water flow in fish-farming ponds and tanks is one of the most crucial requirements in fish-farming centers in which species such as salmon and trout are bred. These fish behave in a specific way, positioning themselves parallel to the water current. Therefore, the correct water-flow velocity determines the proper development of the fish as well as the welfare of the whole group present in the pond. Much work has been carried out in recent years to determine the optimal flow speeds for maintaining overall fish health, but the water-velocity distribution inside circular tanks is often very heterogeneous, particularly near the walls (edges). These works usually focus on analyzing the effect of design parameters on the distribution of water velocities inside circular aquaculture tanks [15]. An example of such a model can be found in [16], where the proposed model estimates the velocity distribution by determining the angular momentum per unit mass close to the tank wall and around the central axis. The model depends on the water inlet and outlet flow rates, the water inlet velocity, the reservoir radius, the water depth, and three reservoir-specific parameters that must be determined experimentally. It also takes into account the influence of wall roughness, the characteristics of the water inlet devices, and the presence of individual elements at the bottom of the tank that cause friction losses. However, such advanced models are not always necessary, as simplified models of the flow distribution are sufficient in most cases.

The optical flow methods analyzed in this paper may also be used for estimating the water-flow velocity in rivers [17,18,19], replacing previously used particle image velocimetry (PIV) methods [20] as well as typical motion-tracking methods based on point detectors [21]. Nevertheless, such video recordings provide no ground-truth data; hence, the obtained results may be evaluated using trajectory reconstruction. In recent years, the accuracy and efficiency of such techniques have improved significantly [22], making it possible to apply some of the methods in real-time applications [23]. Some of the proposed approaches utilize feature matching for orthorectification and velocimetry, such as airborne feature matching velocimetry (AFMV) [18], whereas the applicability of optical flow methods has also been verified at experimental irrigation stations. There, the time-averaged surface velocity of water flows, computed with the Farneback optical flow method, led to promising results, with average relative errors below 6.5% [24].

With an appropriate measuring environment, it is possible to determine spatial distributions of the flow in the tank [25]. Unfortunately, breeders are usually opposed to installing excessive numbers of sensors that can injure fish, so the most commonly available information is the amount of water delivered by the pump supplying the fish-farming tank. The spatial distribution is constant for a static configuration of the tank, i.e., for a fixed number and setting of nozzles, pump performance and tank shape. It changes as the amount of fish and floating biomass increases, which is problematic if the sensors cannot be placed in the water together with the fish.

The video measurement method developed and presented in the article is non-invasive, with the quality of results comparable to those obtained by sensors immersed in the tank and a flow meter measuring the performance of the pump feeding the tank.

2. Materials and Methods

2.1. Experimental Site

One of the essential parts of a system that uses vision feedback to determine the behavior of fish in a breeding tank is the estimation of the water-flow velocity. Such studies can be carried out using various image analysis methods, which, however, require verification with a calibrated water-flow meter. To verify the suitability of individual video-sequence analysis methods for this purpose, experimental tests were carried out by recording video material for preset water-flow values in a round tank (SDK RT 29-68 type). The experiments were initially carried out without fish in the tank in order to avoid disturbances caused by their movements.

Because the water in the tank is never perfectly clean and transparent, small objects are often visible on its surface, and tracking their movement makes it easier to estimate the flow velocity. Fixed elements of the tank, other objects attached to it, or reflections of the elements located above the tank may also be useful, as water flowing at different speeds causes visible undulations in their shapes.

The experimental study was performed at a rainbow-trout farm located in Garnki, in the northern part of Poland, about 35 km from the Baltic Sea coast. The reference measurements were conducted in the above-mentioned fish tank using the MTF-10 electromagnetic water-flow meter and the SonTek FlowTracker2, as illustrated in Figure 1. Video was acquired using the Dahua IPC-HFW2231T-ZS-27135-S2 color IP camera, typically applied in video surveillance and industrial monitoring systems. It is a varifocal IP camera of the Lite series that records video sequences at FullHD resolution (1920 × 1080 pixels) at 25 or 30 frames per second.

Figure 1.

Location of the RAS fish farm and the equipment used in experiments: (a) location of Garnki, Poland shown on the map; (b) fish tank type SDK-RT 29-68 mounted on site; (c) electromagnetic water-flow meter MTF-10 (source: http://www.mtfflow.pl accessed on 13 September 2022); (d) SonTek FlowTracker2 Handheld-ADV (source: https://www.ysi.com/flowtracker2 accessed on 13 September 2022).

The camera has a 2-megapixel, 1/2.8-inch progressive-scan CMOS sensor with a sensitivity of 0.002 lux at F1.5. It can also use an additional illuminator in the form of 4 IR LEDs with a range of 60 m and an automatic ICR infrared filter. It has a built-in 2.7–13.5 mm lens with a viewing angle of 28–109 degrees and a motorized zoom with autofocus, which enables remote adjustment of the focal length by a built-in electric motor and automatically adjusts the image sharpness. The native recording format is Dahua’s DAV; the camera also supports H.265+, H.265, H.264+ and MJPEG compression. The latter format, also referred to as Motion JPEG, may be used in applications where a high compression ratio is not necessary, since the video stream then consists of a series of frames compressed individually with the JPEG algorithm, without using inter-frame information. The housing has an IP67 ingress protection rating, and the camera can be powered from a 12 V DC supply or with 48 V via the Power over Ethernet interface (PoE 10/100 Base-T 802.3af).

Additional functionalities of the camera include the following technologies: 3D NR (a noise-reduction system that keeps the image clear when signal levels change, protecting the recordings against smearing), WDR (wide dynamic range, important where the observed scene has different lighting levels), BLC and HLC (back light compensation, important when the camera is directed towards a strong light source, and highlight compensation, which reduces the negative impact of point light sources, e.g., those reflected in water), an intelligent infrared lighting function, and region of interest (RoI) limitation to minimize the size of saved files.

Due to local constraints, the camera was mounted directly above the tank (about 1 m above the water surface), enabling the observation of a selected part of the tank. A very important technical issue during the installation of the camera was ensuring proper image sharpness. Another, quite obvious, requirement was a rigid mount preventing camera vibrations: to use vision techniques effectively for estimating the water-flow velocity, the camera must be stationary with respect to the housing of the rearing tank.

The set used in the research, consisting of a pump with an electromagnetic flow meter, allowed flow rates (understood as the volume of water inflow per unit time) in the range of 1.5–5.0 dm3 per second. Since the water flow must stabilize in the entire reservoir, the recording of the video material started some time after the desired flow had been set. Sample frames from the video sequences recorded for various water flows are presented in Figure 2. Some changes are clearly visible even in individual frames, owing to the reflections of ceiling beams in the water. Examples of video frames captured in the presence of fish for various water flows are illustrated in Figure 3.

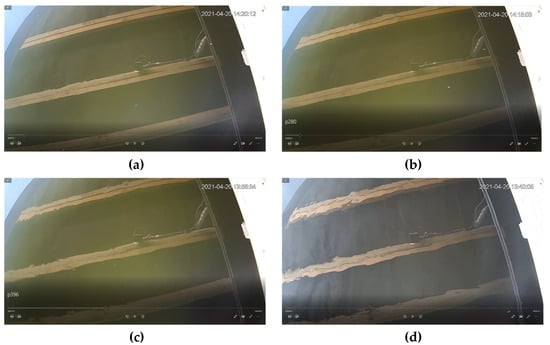

Figure 2.

Sample video frames acquired for various measured water-flow velocities: (a) 1.86 dm3/s; (b) 2.80 dm3/s; (c) 3.96 dm3/s; and (d) 5.03 dm3/s.

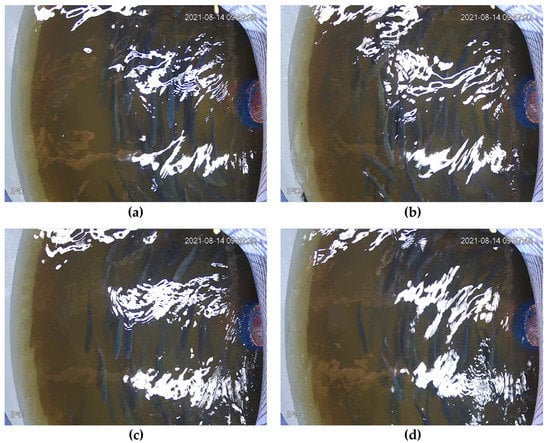

Figure 3.

Sample video frames acquired for various measured water flow velocities in the presence of fish: (a) 2.35 dm3/s; (b) 2.99 dm3/s; (c) 3.62 dm3/s; and (d) 4.01 dm3/s.

2.2. The Overview of the Applied Optical Flow Methods

The first approach considered was the use of the global entropy of the image and its local values determined for individual frames of the video sequence. According to the adopted assumption, for higher water-flow velocities, individual frames of the video sequence should be characterized by greater variability and, thus, higher image entropy. However, the observed changes in entropy for individual frames did not yield satisfactory results, mainly because this approach does not use inter-frame information. Therefore, subsequent studies focused on estimating the motion vectors between adjacent frames of the video sequence, for which several methods from the optical flow family may be used [26].
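The global image entropy mentioned above can be computed from the grey-level histogram of a single frame. The sketch below assumes 8-bit grayscale frames stored as NumPy arrays and uses the Shannon entropy in bits; the helper name `frame_entropy` is hypothetical and not taken from the authors' implementation.

```python
import numpy as np


def frame_entropy(gray):
    """Shannon entropy (in bits) of an 8-bit grayscale frame.

    Hypothetical helper: one histogram bin per grey level 0..255.
    """
    counts, _ = np.histogram(gray, bins=256, range=(0, 256))
    p = counts[counts > 0] / counts.sum()   # probabilities of occupied bins only
    return float(-np.sum(p * np.log2(p)))


# Usage: a flat frame carries 0 bits, a frame with two equally frequent levels 1 bit.
flat = np.full((4, 4), 128, dtype=np.uint8)
two_level = np.array([[0, 255], [255, 0]], dtype=np.uint8)
entropies = [frame_entropy(flat), frame_entropy(two_level)]
```

Because each value describes one frame in isolation, a sequence of such values carries no information about how the pattern moves between frames, which is consistent with the limitation noted above.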

To estimate the optical flow for individual frames of the recorded video sequences, three different methods were used:

- Horn–Schunck (HS) method;

- Farneback (F) method;

- Lucas–Kanade (LK) method.

Determining the optical flow between two frames of a video sequence requires solving the optical flow constraint equation:

$$I_x u + I_y v + I_t = 0,$$

where $I_x$, $I_y$ and $I_t$ are the spatial and temporal (spatiotemporal) derivatives of the image brightness, and $u$ and $v$ are the horizontal and vertical components of the optical flow vector, respectively.
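The optical flow constraint $I_x u + I_y v + I_t = 0$ follows from the brightness constancy assumption. A standard first-order derivation is given below for clarity; the time step $\delta t$ between frames is a symbol introduced only for this derivation:

$$I(x + u\,\delta t,\; y + v\,\delta t,\; t + \delta t) = I(x, y, t)$$

$$I(x, y, t) + I_x u\,\delta t + I_y v\,\delta t + I_t\,\delta t + O(\delta t^2) = I(x, y, t)$$

$$I_x u + I_y v + I_t = 0 \quad \text{(after dividing by } \delta t \text{ and neglecting higher-order terms)}$$

Since this gives a single equation for two unknowns at each pixel, an additional assumption is required: global smoothness of the flow field in the Horn–Schunck method, or a locally constant flow in the Lucas–Kanade method.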

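The Horn–Schunck (HS) method is based on the assumption that the optical flow is smooth over the entire image; hence, the velocity vector field determined by this method should minimize the functional:

$$E = \iint \left( I_x u + I_y v + I_t \right)^2 dx\,dy + \alpha^2 \iint \left[ \left( \frac{\partial u}{\partial x} \right)^2 + \left( \frac{\partial u}{\partial y} \right)^2 + \left( \frac{\partial v}{\partial x} \right)^2 + \left( \frac{\partial v}{\partial y} \right)^2 \right] dx\,dy,$$

where the global coefficient $\alpha$ controls the smoothness over the image surface, and the partial derivatives are taken with respect to the image pixel coordinates. Minimizing this expression in the HS method leads to the following iterative relationships:

$$u_{x,y}^{k+1} = \bar{u}_{x,y}^{k} - \frac{I_x \left( I_x \bar{u}_{x,y}^{k} + I_y \bar{v}_{x,y}^{k} + I_t \right)}{\alpha^2 + I_x^2 + I_y^2}$$

and

$$v_{x,y}^{k+1} = \bar{v}_{x,y}^{k} - \frac{I_y \left( I_x \bar{u}_{x,y}^{k} + I_y \bar{v}_{x,y}^{k} + I_t \right)}{\alpha^2 + I_x^2 + I_y^2}.$$

The vector $\left[ u_{x,y}^{k}\ v_{x,y}^{k} \right]^T$ is the estimate of the flow velocity in iteration $k$ for the image pixel with coordinates $(x, y)$, whereas $\left[ \bar{u}_{x,y}^{k}\ \bar{v}_{x,y}^{k} \right]^T$ contains its average values over the defined neighborhood.

To determine the flows $u$ and $v$, the gradient images $I_x$ and $I_y$ are first computed using the well-known Sobel convolution filter with the standard $3 \times 3$ pixel mask. The temporal derivative $I_t$, calculated as the difference between the images, is determined using the two-tap $[-1\ 1]$ mask, whereas the average velocity for each pixel (excluding the pixel itself) is determined by convolution with a mask corresponding to the 4-connected neighborhood (two horizontal and two vertical neighboring pixels). The flows $u$ and $v$ are then determined iteratively. The default value of the parameter $\alpha$ is 1.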
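The iterative Horn–Schunck scheme described above can be sketched in NumPy as follows. This is a minimal illustration, not the MATLAB or OpenCV implementation referred to in the paper. It assumes grayscale frames as arrays, replicates border pixels, scales the Sobel masks by 1/8 so that the flow comes out roughly in pixels per frame, starts from zero velocity, and runs a fixed number of iterations (`n_iter`, a hypothetical parameter) instead of a convergence test.

```python
import numpy as np

# Sobel masks scaled by 1/8 so that they approximate per-pixel derivatives
# (the scaling is an assumption of this sketch).
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float) / 8.0
SOBEL_Y = SOBEL_X.T
# Average of the 4-connected neighbors, excluding the center pixel.
AVG4 = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float) / 4.0


def filter3x3(img, kernel):
    """Correlate img with a 3x3 kernel, replicating border pixels."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def horn_schunck(frame1, frame2, alpha=1.0, n_iter=100):
    """Iterative Horn-Schunck estimate of the (u, v) field between two frames."""
    f1 = np.asarray(frame1, dtype=float)
    f2 = np.asarray(frame2, dtype=float)
    ix = filter3x3(f1, SOBEL_X)          # horizontal gradient of the first frame
    iy = filter3x3(f1, SOBEL_Y)          # vertical gradient of the first frame
    it = f2 - f1                         # temporal difference ([-1 1] mask)
    u = np.zeros_like(f1)                # start from zero velocity
    v = np.zeros_like(f1)
    denom = alpha ** 2 + ix ** 2 + iy ** 2
    for _ in range(n_iter):
        u_bar = filter3x3(u, AVG4)       # neighborhood averages
        v_bar = filter3x3(v, AVG4)
        common = (ix * u_bar + iy * v_bar + it) / denom
        u = u_bar - ix * common
        v = v_bar - iy * common
    return u, v


# Usage: a horizontal sine pattern shifted right by one pixel.
x = np.arange(64)
frame1 = np.tile(100 + 50 * np.sin(0.2 * x), (48, 1))
frame2 = np.tile(100 + 50 * np.sin(0.2 * (x - 1)), (48, 1))
u, v = horn_schunck(frame1, frame2)   # u averages roughly 1 px/frame, v stays near 0
```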

The Farneback (F) method generates an image pyramid in which each level has a lower resolution than the previous one. When more than one pyramid level is selected, the algorithm can track points at multiple resolutions, starting from the lowest-resolution level. Increasing the number of pyramid levels allows the algorithm to detect larger displacements of points between frames, at the cost of more computations. This is an example of a dense method, which estimates a flow vector for every pixel of the (grayscale) input frame. The resulting flow vectors are commonly visualized in the HSV color space, where the hue corresponds to the direction of the vector and the value (V) component reflects the flow magnitude, i.e., the length of the vector.
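The HSV visualization convention described above can be sketched as follows: the hue encodes the direction of each flow vector and the value channel encodes its magnitude, normalized here to the largest vector in the map. The normalization choice and the function name `flow_to_hsv` are assumptions; the returned array, with channels in [0, 1], can be converted to RGB, e.g., with `matplotlib.colors.hsv_to_rgb`.

```python
import numpy as np


def flow_to_hsv(u, v):
    """Encode a flow field as an HSV image with channels in [0, 1].

    Hue = direction of (u, v); value = magnitude scaled by the map maximum;
    saturation is fixed at 1. Image rows grow downwards, so positive v
    points down in the displayed frame.
    """
    angle = np.arctan2(v, u)                        # in (-pi, pi]
    hue = np.mod(angle, 2 * np.pi) / (2 * np.pi)    # wrap to the [0, 1] range
    magnitude = np.hypot(u, v)
    peak = magnitude.max()
    value = magnitude / peak if peak > 0 else np.zeros_like(magnitude)
    saturation = np.ones_like(magnitude)
    return np.stack([hue, saturation, value], axis=-1)


# Usage: a rightward vector of length 1 and a downward vector of length 2.
u = np.array([[1.0, 0.0]])
v = np.array([[0.0, 2.0]])
hsv = flow_to_hsv(u, v)   # hue [0, 0.25], value [0.5, 1]
```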

Motion tracking starts at the lowest-resolution level and continues until convergence is achieved. Point locations detected at this level are propagated as key points to the next level, so the Farneback method increases the tracking accuracy at each successive level. The pyramid decomposition enables the algorithm to detect pixel movements over distances greater than the size of the neighborhood under consideration. The default number of pyramid levels is 3, with a scale factor of 0.5 (each level has one quarter of the pixels of the previous one). By default, three iterations of the solution search are performed at each level, with a neighborhood size of 5 pixels. A Gaussian convolution filter with a default square mask is used as the averaging (smoothing) filter.
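The coarse-to-fine idea behind the pyramid can be illustrated with a short sketch. For simplicity, it replaces the Gaussian smoothing and subsampling of real implementations with 2 × 2 block averaging (an assumption), uses the 3 levels and the scale factor of 0.5 mentioned above, and shows how a flow field estimated at a coarse level is propagated to the next finer level by upsampling it and doubling its vectors. Both function names are hypothetical.

```python
import numpy as np


def build_pyramid(img, levels=3):
    """Level 0 is full resolution; each next level halves both dimensions."""
    pyramid = [np.asarray(img, dtype=float)]
    for _ in range(levels - 1):
        prev = pyramid[-1]
        h, w = prev.shape[0] // 2 * 2, prev.shape[1] // 2 * 2   # crop to even size
        p = prev[:h, :w]
        # 2x2 block average: a simple stand-in for Gaussian smoothing + subsampling.
        pyramid.append(0.25 * (p[0::2, 0::2] + p[1::2, 0::2] + p[0::2, 1::2] + p[1::2, 1::2]))
    return pyramid


def upsample_flow(flow):
    """Propagate a coarse (H, W, 2) flow field to the next finer level.

    Each vector is copied to a 2x2 block and doubled, because one coarse
    pixel corresponds to two fine pixels in each direction.
    """
    return 2.0 * np.repeat(np.repeat(flow, 2, axis=0), 2, axis=1)


# Usage: pyramid shapes for a 48 x 64 frame and propagation of a coarse flow.
shapes = [level.shape for level in build_pyramid(np.zeros((48, 64)))]
fine_flow = upsample_flow(np.ones((2, 3, 2)))
```

With 3 levels, a displacement of 8 pixels at full resolution corresponds to only 2 pixels at the coarsest level, which is why additional levels help the algorithm detect larger motions.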

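The widely used Lucas–Kanade (LK) method belongs to the group of sparse methods; hence, full calculations are performed only for selected points of the image. In this method, the image is divided into sections within which a constant flow velocity is assumed. To find a solution, a weighted least-squares fit of the optical flow constraint equation to a constant model is performed for each section $\Omega$ by minimizing the expression:

$$\sum_{\mathbf{x} \in \Omega} W^2(\mathbf{x}) \left[ I_x u + I_y v + I_t \right]^2,$$

where $W$ denotes a centrally weighted window function. The solution has the following form:

$$\begin{bmatrix} \sum W^2 I_x^2 & \sum W^2 I_x I_y \\ \sum W^2 I_x I_y & \sum W^2 I_y^2 \end{bmatrix} \begin{bmatrix} u \\ v \end{bmatrix} = - \begin{bmatrix} \sum W^2 I_x I_t \\ \sum W^2 I_y I_t \end{bmatrix}.$$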

As in the HS method, the value of $I_t$ is determined between video frames using the $[-1\ 1]$ mask. To determine the flows $u$ and $v$, the following actions are performed:

- Determining the directional gradients $I_x$ and $I_y$ using a convolutional filter with a derivative mask and its transposed version, respectively;

- Calculating the difference $I_t$ between the frames;

- Smoothing the gradients $I_x$, $I_y$ and $I_t$ using a convolutional smoothing filter;

- Solving the system of two linear equations for each pixel, $$A \begin{bmatrix} u \\ v \end{bmatrix} = - \begin{bmatrix} \sum W^2 I_x I_t \\ \sum W^2 I_y I_t \end{bmatrix}, \qquad A = \begin{bmatrix} a & b \\ b & c \end{bmatrix} = \begin{bmatrix} \sum W^2 I_x^2 & \sum W^2 I_x I_y \\ \sum W^2 I_x I_y & \sum W^2 I_y^2 \end{bmatrix},$$ using the eigenvalues of the matrix $A$, determined as $\lambda_{1,2} = \frac{a + c}{2} \pm \frac{\sqrt{4b^2 + (a - c)^2}}{2}$, with $\lambda_1 \geq \lambda_2$. Both values are compared with a threshold $\tau$ applied to reduce the effect of noise. If both eigenvalues reach at least the threshold ($\lambda_1 \geq \tau$ and $\lambda_2 \geq \tau$), the system of equations is solved using Cramer’s rule. When both eigenvalues are below $\tau$, the optical flow is zero, whereas if only $\lambda_1 \geq \tau$ (and $\lambda_2 < \tau$), the matrix $A$ is singular and the gradient flow is normalized to calculate the $u$ and $v$ values. A default value is defined for the threshold $\tau$.
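The per-window computation described in the list above can be sketched for a single window as follows. This is an illustration rather than the MATLAB implementation: `np.linalg.eigh` yields the same eigenvalues as the closed-form expression for the symmetric 2 × 2 matrix $A$, while the Gaussian window, the threshold value `tau` and both function names are assumptions. For the singular case, the sketch uses the normal flow along the dominant eigenvector, which is one possible reading of normalizing the gradient flow.

```python
import numpy as np


def gaussian_window(size=5, sigma=1.0):
    """Centrally weighted window W (assumed Gaussian, peak value 1)."""
    r = np.arange(size) - (size - 1) / 2
    yy, xx = np.meshgrid(r, r, indexing="ij")
    return np.exp(-(xx ** 2 + yy ** 2) / (2 * sigma ** 2))


def lk_window_flow(ix, iy, it, w, tau=1e-3):
    """Solve the weighted 2x2 LK system for one window, following the eigenvalue cases."""
    w2 = w ** 2
    a = np.sum(w2 * ix * ix)
    b = np.sum(w2 * ix * iy)
    c = np.sum(w2 * iy * iy)
    rhs = -np.array([np.sum(w2 * ix * it), np.sum(w2 * iy * it)])
    eigvals, eigvecs = np.linalg.eigh(np.array([[a, b], [b, c]]))
    lam2, lam1 = eigvals                      # eigh returns ascending order
    if lam2 >= tau:                           # both eigenvalues large: Cramer's rule
        det = a * c - b * b
        return (rhs[0] * c - b * rhs[1]) / det, (a * rhs[1] - b * rhs[0]) / det
    if lam1 >= tau:                           # A singular: normal flow along e1
        e1 = eigvecs[:, 1]
        s = (e1 @ rhs) / lam1
        return s * e1[0], s * e1[1]
    return 0.0, 0.0                           # both eigenvalues small: zero flow


# Usage: synthetic gradients consistent with a true flow of (0.5, -0.25).
w = gaussian_window()
rng = np.random.default_rng(0)
ix = rng.normal(size=(5, 5))
iy = rng.normal(size=(5, 5))
it = -(0.5 * ix - 0.25 * iy)
u, v = lk_window_flow(ix, iy, it, w)
```

When the window contains gradients in only one direction (the aperture problem), only the flow component along that direction can be recovered, so the second branch returns the normal flow.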

One of the possible extensions of the LK method is additional temporal filtering using a derivative of Gaussian (DoG) filter, leading to the Lucas–Kanade derivative of Gaussian (LKDoG) method. Nevertheless, in the initial experiments, this method led to significantly worse results; therefore, further experiments focused on the three algorithms described above, implementations of which are available, e.g., in MATLAB® and the OpenCV library.

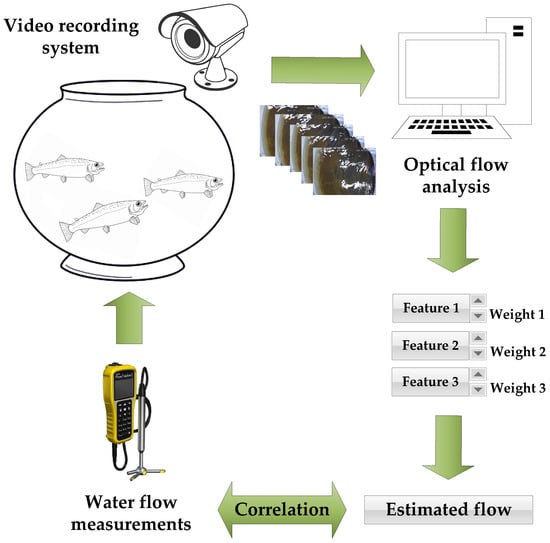

A simplified flowchart of the method proposed in the paper is shown in Figure 4.

Figure 4.

The simplified flowchart illustrating the idea of the proposed approach.

2.3. Experiments

In the experimental studies, the applicability of the above optical flow methods was verified in order to identify features of the video sequences, computed from the optical flows, that agree as closely as possible with the water flows measured with the water-flow meter. For this purpose, a set of video sequences was recorded for fixed flow values, and then the optical flow was calculated for each frame, together with selected parameters of it.

Since the water flow in the tank was assumed to be constant during the recording of each video sequence and the camera was rigidly mounted (fixed in relation to the tank housing), the time-averaged and median values determined for individual pixels were chosen for analysis. This assumption was made despite certain differences between the optical flows represented by the motion vectors determined for individual frames; nevertheless, it led to reliable results, as presented later.
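The per-pixel temporal aggregation can be sketched as below. The paper does not state whether the flow components or their magnitudes are aggregated, so this sketch assumes that the per-frame flow fields are stacked into a `(T, H, W, 2)` array and that the magnitude of each vector is averaged; the function name is hypothetical.

```python
import numpy as np


def temporal_flow_statistics(flows):
    """Per-pixel time-averaged and median flow magnitude.

    flows: array of shape (T, H, W, 2) holding (u, v) for T frame pairs.
    Returns two (H, W) maps: the temporal mean and the temporal median.
    """
    magnitude = np.hypot(flows[..., 0], flows[..., 1])   # shape (T, H, W)
    return magnitude.mean(axis=0), np.median(magnitude, axis=0)


# Usage: two frames with (3, 4) vectors (magnitude 5) and one frame with zero flow.
flows = np.zeros((3, 2, 2, 2))
flows[:2, ..., 0] = 3.0
flows[:2, ..., 1] = 4.0
mean_map, median_map = temporal_flow_statistics(flows)
```

The median is less affected than the mean by occasional frames with atypical motion (here, the single zero-flow frame), which is one reason to inspect both statistics.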

As the optical flow methods are based on the calculation of local gradients, slow global changes in brightness have little effect on the obtained results. The opposite may only occur under very strong lighting or in darkness, which is considered an emergency state. The method proposed in the article was developed primarily for fish-breeding farms located in closed halls, where controlled lighting conditions can be maintained. Since intensive fish fattening, justified for economic reasons, usually requires continuous 24-h lighting to intensify food intake, the lighting used in this case is sufficient for the proper functioning of the developed method.

3. Discussion of the Experimental Results

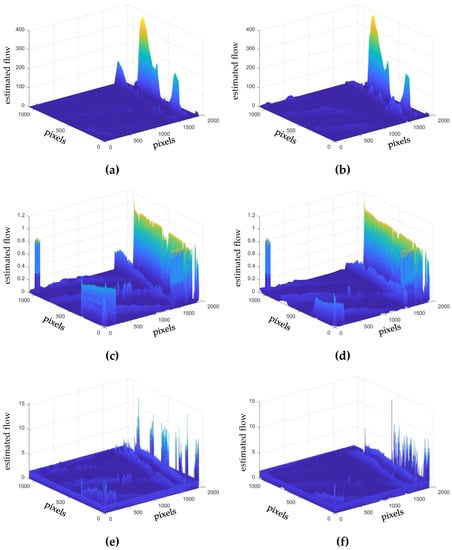

Individual flow maps were calculated using the three methods considered in the paper: Horn–Schunck, Farneback and Lucas–Kanade. Since all calculations were made for the recorded video sequences, the results obtained for individual pixels were averaged over time. Sample results obtained with the three methods for two exemplary water-flow velocities are illustrated in Figure 5. All the presented flow maps show the highest optical flow values for the reflections, visible on the water surface, of several elements located above the tank (wooden roof boards, an electric extension cord, the camera). Therefore, further analyses related to determining the flow characteristics were carried out for the central parts of the visible area. Nevertheless, it is worth noting that reflections of solid structural elements located above the tank are in fact beneficial for the methods applied to determine the optical flows.

Figure 5.

Sample averaged optical flows obtained for two different water flow velocities: (a) Farneback method for 2.17 dm3/s; (b) Farneback method for 5.03 dm3/s; (c) HS method for 2.17 dm3/s; (d) HS method for 5.03 dm3/s; (e) LK method for 2.17 dm3/s; and (f) LK method for 5.03 dm3/s.

As can be seen in the presented flow maps, the flow rates shown in the images did not always follow expectations, which is especially visible for the LK method, whereas the Farneback method yielded water-flow estimates more in line with the predictions. To improve the accuracy of the flow estimation and obtain a highly linear correlation with the velocities measured with the electromagnetic flow meter, a set of features computed from the flow-velocity maps for the central parts of the scene was selected. The goal of these features is the efficient use of visual feedback for detecting changes in the water flow through continuous analysis of the video sequence. Such an approach makes it possible to detect failures of, e.g., a pump or flow meter, indicated by a significant discrepancy between the value determined from the video sequence and the flow-meter reading. Ultimately, after calibration, the proposed vision approach may also be used for fish-farming tanks in which installing a water-flow meter is impossible.
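The failure-detection idea can be sketched as a simple consistency check between the calibrated video-based estimate and the flow-meter reading. The relative tolerance `rel_tol` and the requirement of `min_run` consecutive mismatches (to ignore isolated outliers) are hypothetical choices for illustration, not values from the paper.

```python
import numpy as np


def flag_discrepancies(video_estimate, meter_reading, rel_tol=0.15, min_run=3):
    """Mark samples where video and meter have disagreed for min_run samples in a row.

    A sample is a mismatch when |video - meter| > rel_tol * |meter|.
    """
    video = np.asarray(video_estimate, dtype=float)
    meter = np.asarray(meter_reading, dtype=float)
    mismatch = np.abs(video - meter) > rel_tol * np.abs(meter)
    flags = np.zeros(mismatch.shape, dtype=bool)
    run = 0
    for i, m in enumerate(mismatch):
        run = run + 1 if m else 0       # length of the current mismatch streak
        flags[i] = run >= min_run
    return flags


# Usage: the video estimate drops for three samples while the meter still reads 3.0.
meter = [3.0] * 6
video = [3.0, 3.1, 2.0, 2.0, 2.0, 3.0]
flags = flag_discrepancies(video, meter)
```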

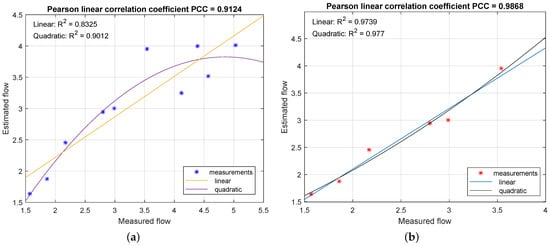

The achieved relationship, based on a non-linear combination of the three features with the fitting curves obtained for the linear and quadratic functions, is shown in Figure 6. The features used in this combination, which ensure the Pearson’s linear correlation coefficient greater than 0.9, are:

Figure 6.

The relationships between the uncalibrated estimates achieved using the proposed method and the velocities measured using the water-flow meter: (a) for the whole range 1.5–5.0 dm3/s; (b) for the typical range 1.5–3.7 dm3/s.

- Squared entropy of the motion vector map for the Farneback method;

- Standard deviation of the motion vector map for the Lucas–Kanade method;

- Variance of the local entropy of the motion vector map for the Lucas–Kanade method.
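The three features listed above can be computed from per-pixel motion-vector magnitude maps, e.g., the time-averaged maps of the Farneback and Lucas–Kanade methods. The paper does not specify the histogram binning, the size of the central region, or the neighborhood used for local entropy, so the 64 bins, the central half of the frame and the non-overlapping 8 × 8 blocks below are assumptions, as are all function names.

```python
import numpy as np


def central_roi(mag, fraction=0.5):
    """Keep the central part of the map (fraction of each dimension)."""
    h, w = mag.shape
    dh, dw = int(h * (1 - fraction) / 2), int(w * (1 - fraction) / 2)
    return mag[dh:h - dh, dw:w - dw]


def entropy_bits(values, bins=64, vmax=None):
    """Shannon entropy (bits) of a histogram of non-negative values on [0, vmax]."""
    vmax = float(values.max()) if vmax is None else vmax
    if vmax <= 0:
        return 0.0
    counts, _ = np.histogram(values, bins=bins, range=(0.0, vmax))
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def local_entropy_map(mag, block=8, bins=64):
    """Entropy of non-overlapping block x block tiles, sharing one histogram range."""
    vmax = float(mag.max())
    rows, cols = mag.shape[0] // block, mag.shape[1] // block
    out = np.empty((rows, cols))
    for i in range(rows):
        for j in range(cols):
            tile = mag[i * block:(i + 1) * block, j * block:(j + 1) * block]
            out[i, j] = entropy_bits(tile, bins, vmax)
    return out


def flow_features(farneback_mag, lk_mag):
    """The three features named in the text, computed on the central region."""
    f_roi, lk_roi = central_roi(farneback_mag), central_roi(lk_mag)
    return (
        entropy_bits(f_roi) ** 2,                 # squared entropy, Farneback map
        float(lk_roi.std()),                      # standard deviation, LK map
        float(local_entropy_map(lk_roi).var()),   # variance of local entropy, LK map
    )


# Usage with a synthetic map: left half static, right half moving.
mag = np.zeros((64, 64))
mag[:, 32:] = 1.0
features = flow_features(mag, mag)
```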

The final formula combining the three above features may be obtained by optimizing the weighting coefficients, leading to the following expression:

where the two parameters of the expression depend on the spatial configuration of the camera and the tank and may be adjusted as needed.

The dependence presented in the graph is approximately linear; however, the flow estimation error increases with the flow value. For this reason, the application of the proposed method in real conditions may be limited to lower flow values, for which the relationship between the estimated and measured values is clearly linear. Additional verification and calibration are required if a different type of tank is used.
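Calibrating the uncalibrated estimates against the flow-meter readings and computing the Pearson linear correlation coefficient can be sketched with standard NumPy functions (`np.polyfit`, `np.polyval`, `np.corrcoef`). The sample numbers below are made up for illustration and are not measurement data from the paper; the function names are hypothetical.

```python
import numpy as np


def calibrate(estimates, measured, degree=1):
    """Fit the measured flow as a polynomial of the uncalibrated estimate.

    Returns the polynomial coefficients (highest power first) and the
    Pearson linear correlation coefficient between the two series.
    """
    x = np.asarray(estimates, dtype=float)
    y = np.asarray(measured, dtype=float)
    coeffs = np.polyfit(x, y, degree)             # linear (1) or quadratic (2) fit
    r = float(np.corrcoef(x, y)[0, 1])            # Pearson linear correlation
    return coeffs, r


def apply_calibration(coeffs, estimates):
    """Map new uncalibrated estimates to flow values with the fitted polynomial."""
    return np.polyval(coeffs, np.asarray(estimates, dtype=float))


# Usage with made-up, perfectly linear data.
estimates = [1.0, 2.0, 3.0, 4.0]
measured = [1.5, 2.0, 2.5, 3.0]
coeffs, r = calibrate(estimates, measured)
predicted = apply_calibration(coeffs, [5.0])
```

Fitting both a linear and a quadratic curve, as in Figure 6, only requires changing `degree`; the correlation coefficient itself does not depend on the fitted curve.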

An additional factor affecting the results obtained in the video feedback loop is the presence of fish; however, since the fish do not move significantly most of the time, their sudden movements can be detected and filtered out. As verified experimentally by other researchers [25,27,28], the presence of fish may decrease the water velocity by 25–30% in comparison to a tank without fish, affecting the flow pattern. However, it has been confirmed that the trends of mean velocity in the radial direction remain unchanged [25]. Accordingly, as verified for some video sequences acquired in the presence of fish, the method proposed in the paper leads to similar results in the presence of fish biomass, assuming the necessary calibration that takes the expected velocity decrease into account.
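One way to filter out sudden fish movements, not described in the paper and given here only as an assumption, is to compute a per-frame score (e.g., the mean flow magnitude in the central region) and discard frames whose modified z-score, based on the median absolute deviation, exceeds a threshold. The threshold of 3.5 commonly used with this score and the function name are illustrative.

```python
import numpy as np


def frames_without_sudden_motion(frame_scores, threshold=3.5):
    """Boolean mask of frames to keep, rejecting outliers by modified z-score."""
    scores = np.asarray(frame_scores, dtype=float)
    med = np.median(scores)
    mad = np.median(np.abs(scores - med))      # median absolute deviation
    if mad == 0:
        # Most frames are identical: keep only those equal to the median.
        return np.isclose(scores, med)
    modified_z = 0.6745 * (scores - med) / mad
    return np.abs(modified_z) <= threshold


# Usage: the last frame contains a burst of motion (e.g., a fish passing the camera).
scores = [1.0, 1.1, 0.9, 1.05, 0.95, 8.0]
keep = frames_without_sudden_motion(scores)
```

A median-based score is used here because a few frames with large fish motion would inflate a mean and standard deviation, hiding the very outliers they should reveal.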

It is also worth noting that a typical water flow used in fish farming for this type of tank does not exceed 3.7 dm3/s; hence, the proposed method may be successfully applied for the typical range from 1.5 to about 3.7 dm3/s, yielding a Pearson linear correlation coefficient of 0.9868.

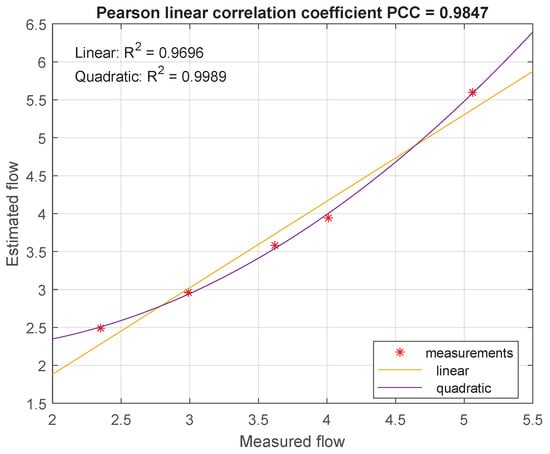

To further verify the universality of the proposed solution in fish-farming tanks, additional experiments were performed after recording several hours of video sequences for five water-flow velocities in the presence of fish, as illustrated in Figure 3. In this case, with the camera also mounted at a different location, the proposed method requires additional calibration and some changes in the parameters. Very good experimental results were then obtained using the fourth root of the entropy of the motion vector map for the Lucas–Kanade method instead of the squared entropy of the Farneback motion vector map. Since all three features then originate from the Lucas–Kanade method, the Farneback method is not needed at all. An additional advantage of this simplified approach is therefore the reduced number of computations, while a relatively high correlation with the measurement results is preserved (0.9847 in the experiments illustrated in Figure 7). Considering the four measurements obtained for water flows from 2.35 to 4.01 dm3/s, a high linearity of the obtained relationship may be noticed as well.

Figure 7.

The relationships between the uncalibrated estimates achieved using the proposed simplified approach utilizing the Lucas–Kanade method and the velocities measured using the water-flow meter in the presence of fish.

4. Conclusions

The video feedback method presented in the paper makes it possible to monitor the water flow in RAS fish-farming tanks and even to avoid the use of water-flow meters in some configurations. The possibility of detecting potential failures of water pumps or water-flow meters makes the proposed solution not only an interesting alternative to typical flow measurements but also a relatively cheap addition, owing to the increasing availability of affordable high-resolution video cameras.

The proposed solution may also limit the need to mount underwater sensors that may injure individual fish. The correctness of the water-flow estimation, verified for typical velocities, also allows its application in other tanks after the necessary calibration of the algorithm’s parameters. As the proposed method is based on the analysis of local gradients, it is expected to be largely insensitive to typical changes in lighting and weather conditions.

Further research on this topic will concentrate on verifying the sensitivity to lighting conditions as well as other constraints, in order to achieve greater universality of the proposed approach and allow it to work in unexpected conditions. Another direction of research will be the combination of the proposed method with fish-counting and fish-tracking methods.

Author Contributions

Conceptualization, P.L., K.O., A.T. and K.F.; methodology, P.L. and K.O.; software, K.O.; validation, D.A., D.N., A.T. and K.F.; formal analysis, P.L. and K.O.; investigation, P.L. and K.O.; resources, A.T., A.K.-O. and K.F.; data curation, P.L. and K.O.; writing—original draft preparation, P.L. and K.O.; writing—review and editing, K.O. and K.F.; visualization, D.A., D.N., A.T. and K.O.; supervision, K.F.; project administration, A.K.-O. and K.F.; funding acquisition, K.F. All authors have read and agreed to the published version of the manuscript.

Funding

The research was conducted within project no. 00002-6521.1-OR1600001/17/20, financed by the “Fisheries and the Sea” program.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| AFMV | Airborne feature matching velocimetry |
| BLC | Back light compensation |
| CMOS | Complementary metal-oxide-semiconductor |
| CNN | Convolutional neural network |
| DC | Direct current |
| FPGA | Field-programmable gate array |
| HLC | Highlight compensation |
| ICR | Infrared cut-filter removal |
| IoT | Internet of Things |
| IR | Infrared |
| JPEG | Joint Photographic Experts Group |
| LED | Light-emitting diode |
| MJPEG | Motion JPEG |
| PoE | Power over Ethernet |
| PIV | Particle image velocimetry |
| RAS | Recirculating aquaculture system |
| RoI | Region of interest |
| WDR | Wide dynamic range |

References

1. Liu, Y.; Ma, X.; Shu, L.; Hancke, G.P.; Abu-Mahfouz, A.M. From Industry 4.0 to Agriculture 4.0: Current Status, Enabling Technologies, and Research Challenges. IEEE Trans. Ind. Inform. 2021, 17, 4322–4334.

2. Spampinato, C.; Chen-Burger, Y.H.; Nadarajan, G.; Fisher, R.B. Detecting, tracking and counting fish in low quality unconstrained underwater videos. In Proceedings of the Third International Conference on Computer Vision Theory and Applications—Volume 1: VISAPP, (VISIGRAPP 2008), Funchal, Madeira, Portugal, 22–25 January 2008; SciTePress: Setúbal, Portugal, 2008; pp. 514–519.

3. Forczmański, P.; Nowosielski, A.; Marczeski, P. Video Stream Analysis for Fish Detection and Classification. In Soft Computing in Computer and Information Science, Advances in Intelligent Systems and Computing; Wiliński, A., ElFray, I., Pejaś, J., Eds.; Springer International Publishing: Cham, Switzerland, 2015; Volume 342, pp. 157–169.

4. Han, F.; Yao, J.; Zhu, H.; Wang, C. Underwater Image Processing and Object Detection Based on Deep CNN Method. J. Sensors 2020, 2020, 6707328.

5. Spampinato, C.; Giordano, D.; Salvo, R.D.; Chen-Burger, Y.H.J.; Fisher, R.B.; Nadarajan, G. Automatic fish classification for underwater species behavior understanding. In Proceedings of the First ACM International Workshop on Analysis and Retrieval of Tracked Events and Motion in Imagery Streams—ARTEMIS’10, Firenze, Italy, 29 October 2010; ACM Press: New York City, NY, USA, 2010; pp. 45–50.

6. Papadakis, V.M.; Papadakis, I.E.; Lamprianidou, F.; Glaropoulos, A.; Kentouri, M. A computer-vision system and methodology for the analysis of fish behavior. Aquac. Eng. 2012, 46, 53–59.

7. Zhao, J.; Gu, Z.; Shi, M.; Lu, H.; Li, J.; Shen, M.; Ye, Z.; Zhu, S. Spatial behavioral characteristics and statistics-based kinetic energy modeling in special behaviors detection of a shoal of fish in a recirculating aquaculture system. Comput. Electron. Agric. 2016, 127, 271–280.

8. Li, D.; Wang, Z.; Wu, S.; Miao, Z.; Du, L.; Duan, Y. Automatic recognition methods of fish feeding behavior in aquaculture: A review. Aquaculture 2020, 528, 735508.

9. Zhou, C.; Lin, K.; Xu, D.; Chen, L.; Guo, Q.; Sun, C.; Yang, X. Near infrared computer vision and neuro-fuzzy model-based feeding decision system for fish in aquaculture. Comput. Electron. Agric. 2018, 146, 114–124.

10. An, D.; Hao, J.; Wei, Y.; Wang, Y.; Yu, X. Application of computer vision in fish intelligent feeding system—A review. Aquac. Res. 2020, 52, 423–437.

11. Lech, P.; Okarma, K.; Korzelecka-Orkisz, A.; Tański, A.; Formicki, K. Monitoring the Uniformity of Fish Feeding Based on Image Feature Analysis. In Proceedings of the Computational Science—ICCS 2021, Krakow, Poland, 16–18 June 2021; Paszynski, M., Kranzlmüller, D., Krzhizhanovskaya, V.V., Dongarra, J.J., Sloot, P.M., Eds.; Lecture Notes in Computer Science. Springer International Publishing: Cham, Switzerland, 2021; pp. 68–74.

12. Xiao, G.; Feng, M.; Cheng, Z.; Zhao, M.; Mao, J.; Mirowski, L. Water quality monitoring using abnormal tail-beat frequency of crucian carp. Ecotoxicol. Environ. Saf. 2015, 111, 185–191.

13. Angani, A.; Lee, C.B.; Lee, S.M.; Shin, K.J. Realization of Eel Fish Farm with Artificial Intelligence Part 3: 5G based Mobile Remote Control. In Proceedings of the 2019 IEEE International Conference on Architecture, Construction, Environment and Hydraulics (ICACEH), Xiamen, China, 20–22 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 101–104.

14. Angani, A.; Lee, J.C.; Shin, K.J. Vertical Recycling Aquatic System for Internet-of-Things-based Smart Fish Farm. Sensors Mater. 2019, 31, 3987–3998.

15. Zhang, Q.; Ren, X.; Liu, C.; Shi, X.; Gui, J.; Bi, C.; Xue, B. The Influence Study of Inlet System in Recirculating Aquaculture Tank on Velocity Distribution. In Proceedings of the 29th International Ocean and Polar Engineering Conference, Honolulu, HI, USA, 16–21 June 2019. Paper No. ISOPE-I-19-332.

16. Oca, J.; Masalo, I. Flow pattern in aquaculture circular tanks: Influence of flow rate, water depth, and water inlet & outlet features. Aquac. Eng. 2013, 52, 65–72.

17. Khalid, M.; Pénard, L.; Mémin, E. Optical flow for image-based river velocity estimation. Flow Meas. Instrum. 2019, 65, 110–121.

18. Cao, L.; Weitbrecht, V.; Li, D.; Detert, M. Airborne Feature Matching Velocimetry for surface flow measurements in rivers. J. Hydraul. Res. 2020, 59, 637–650.

19. Sirenden, B.H.; Mursanto, P.; Wijonarko, S. Galois field transformation effect on space-time-volume velocimetry method for water surface velocity video analysis. Multimed. Tools Appl. 2022, 1–23.

20. Adrian, R.J. Particle-Imaging Techniques for Experimental Fluid Mechanics. Annu. Rev. Fluid Mech. 1991, 23, 261–304.

21. Guler, Z.; Cinar, A.; Ozbay, E. A New Object Tracking Framework for Interest Point Based Feature Extraction Algorithms. Elektron. Ir Elektrotechnika 2020, 26, 63–71.

22. Yagi, J.; Tani, K.; Fujita, I.; Nakayama, K. Application of Optical Flow Techniques for River Surface Flow Measurements. In Proceedings of the 22nd IAHR APD Congress, Sapporo, Japan, 14–17 September 2020; International Association for Hydro-Environment Engineering and Research-Asia Pacific Division: Beijing, China, 2020.

23. Ammar, A.; Fredj, H.B.; Souani, C. An efficient Real Time Implementation of Motion Estimation in Video Sequences on SOC. In Proceedings of the 18th International Multi-Conference on Systems, Signals and Devices (SSD), Monastir, Tunisia, 22–25 March 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1032–1037.

24. Wu, H.; Zhao, R.; Gan, X.; Ma, X. Measuring Surface Velocity of Water Flow by Dense Optical Flow Method. Water 2019, 11, 2320.

25. Gorle, J.; Terjesen, B.; Mota, V.; Summerfelt, S. Water velocity in commercial RAS culture tanks for Atlantic salmon smolt production. Aquac. Eng. 2018, 81, 89–100.

26. Sirenden, B.H.; Arymurthy, A.M.; Mursanto, P.; Wijonarko, S. Algorithm Comparisons among Space Time Volume Velocimetry, Horn-Schunk, and Lucas-Kanade for the Analysis of Water Surface Velocity Image Sequences. In Proceedings of the International Conference on Computer, Control, Informatics and its Applications (IC3INA), Tangerang, Indonesia, 23–24 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 47–52.

27. Timmons, M.B.; Summerfelt, S.T.; Vinci, B.J. Review of circular tank technology and management. Aquac. Eng. 1998, 18, 51–69.

28. Plew, D.R.; Klebert, P.; Rosten, T.W.; Aspaas, S.; Birkevold, J. Changes to flow and turbulence caused by different concentrations of fish in a circular tank. J. Hydraul. Res. 2015, 53, 364–383.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).