1. Introduction

A port crane is a large piece of construction machinery that is widely used in major ports and plays an important role in cargo transport. Because it works at high frequency and high intensity [1], its structural safety has attracted much attention [2]. To evaluate the safety of port machinery, it is necessary to measure the coordinates of its key points or its key dimensions. Port machinery works continuously for long periods and its structure is usually tall [3]. Some positions cannot be reached manually, so few tools can complete the measurement, and some key data cannot be measured directly.

At present, among the measurement methods that are effective within a certain range, the total station [4,5] is complicated to operate, and its efficiency is relatively low when many points need to be measured. For high-intensity, long-running construction machinery such as port cranes, the idle time between operations is relatively short. If a total station is used for measurement, the crane needs to stop working for a long time, which causes relatively large economic losses. The laser scanner [6] has a high cost and complex post-processing. The price of a single device usually exceeds USD 120,000, and it needs to work for a long time in one location to obtain a sufficient number of point clouds, so it is not practical for large-scale application. Installing sensors [7] requires too much manual work and is dangerous. The coordinate measuring machine (CMM) [8,9] has high measurement accuracy and good versatility, but it is a contact measurement method with high requirements on the environment, and its measurement range is small. The laser tracker [10] is also a contact measurement method, but each contact obtains the coordinates of only one point, so its measurement efficiency is low. Compared with the above methods, photogrammetry is simple and flexible in operation and low in cost, and with the continuous development of computer vision technology [11,12,13], image post-processing is very convenient.

Photogrammetry is non-contact and can be processed automatically, so it has become a new approach to traditional engineering measurement, especially given today's pursuit of automation and intelligent development [14]. The development of instruments, sensors, robots, electronic circuits, chips and other technologies has also injected new vitality into photogrammetry. With the continuous emergence of innovative technologies in recent years, close-range photogrammetry has been widely applied in fields such as deformation photogrammetry, architectural photogrammetry and engineering photogrammetry. Close-range photogrammetry is more advantageous than the traditional geodetic method for measuring the deformation of objects, especially high-rise buildings, bridges, machines and dams. Darmstadt Institute of Engineering published research results on deformation observation of a 43-story building with a height of 142 m, and Freiberg Institute of Mines in Germany published a report on the deformation of a transport bridge in an open-pit coal mine under load, both of which achieved good results [15]. Close-range photogrammetry is also used in reverse engineering, where it greatly shortens the development cycle of industrial products. Because of its high detection accuracy, it has many applications in automobile manufacturing, parts quality control, whole-machine assembly, automatic welding, automatic painting and so on [16].

2. Related Work

The application of photogrammetry to port machinery is still relatively rare. Aihua Li [17] tried to use photogrammetry to measure gantry cranes, but the results were not good. Qi Wang [18] developed a calibration method for the effective focal length of the camera for port cranes and proposed a new position and attitude estimation method to solve the problem of low measurement accuracy when the attitude angle is large. Enshun Lu [19] developed a multi-line intersection point-fitting optimization algorithm based on the structural characteristics of cranes. Most of the corners on a crane are the intersections of three straight lines. Taking advantage of this feature, Enshun Lu solved the problem that some key corners cannot be measured directly because of occlusion. The structure of port machinery has obvious characteristics, and suitable photogrammetry algorithms can be developed according to them.

Taking gantry crane [

20] as the representative of port machinery, after a long period of high-intensity work, its supporting cylinder will be deformed, the center of the circle will be offset, and the safety will be reduced. The coordinates of the center of the circle and other key points play a crucial role in the safety evaluation of mechanical structures [

21,

22]. Therefore, it is very important to measure the coordinates of the center of the circle. However, it is usually impossible to measure the center of a circle directly. In general, the coordinates of multiple points on a circle are measured first, and the center of a circle is fitted according to the spatial coordinates of each point [

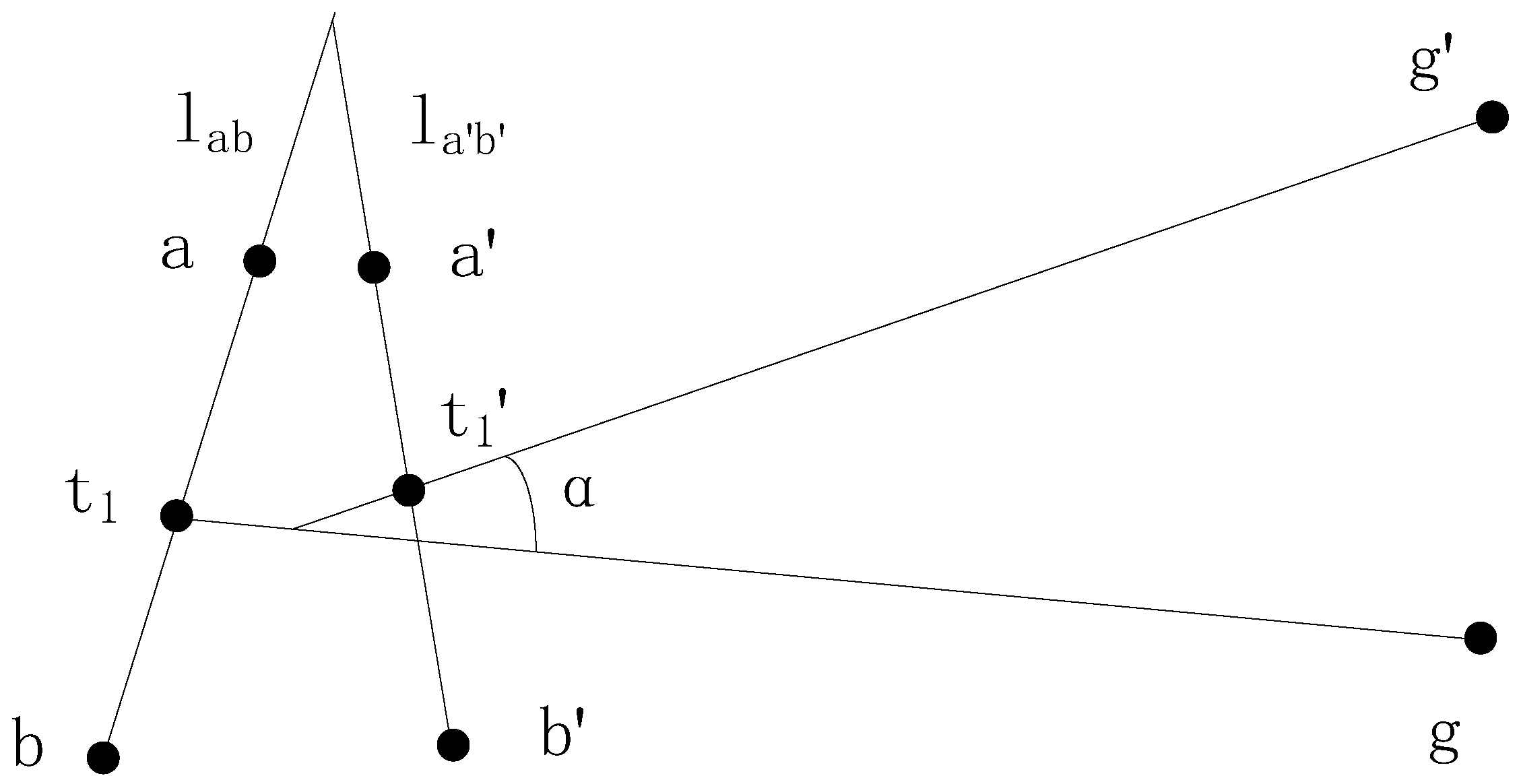

23]. In mathematical theory, the intersection point of midperpendicular of multiple strings on a circle is the center of the circle, but due to the existence of error, the multiple midperpendicular cannot strictly intersect at one point [

19]. Therefore, an approximate model of image point distortion [

24,

25] is established in the image plane. The Hough transform [

26,

27,

28] is used to determine the similarity between the actual and ideal midperpendicular, and then the weight of each midperpendicular is determined according to the similarity, and more accurate coordinates of the center of the circle are obtained through the above process.
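The equal-weight idea described above can be sketched in a few lines. This is a minimal illustration rather than the paper's implementation: it works in 2D (points already expressed in the plane of the circle), the function name `fit_center_equal_weight` and the sample coordinates are hypothetical, and every chord's midperpendicular gets the same weight. Each chord contributes one linear equation, d · c = d · m, where d is the unit chord direction and m the chord midpoint, and the center c is the least-squares solution.

```python
import itertools

import numpy as np


def fit_center_equal_weight(points):
    """Least-squares point closest to the midperpendiculars of all chords (2D)."""
    pts = np.asarray(points, dtype=float)
    rows, rhs = [], []
    for i, j in itertools.combinations(range(len(pts)), 2):
        d = pts[j] - pts[i]
        d = d / np.linalg.norm(d)          # unit direction of the chord
        m = 0.5 * (pts[i] + pts[j])        # midpoint of the chord
        # |d . (c - m)| is the distance from c to this midperpendicular.
        rows.append(d)
        rhs.append(d @ m)
    center, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return center


# Hypothetical test data: four points on a circle of radius 150.
true_center = np.array([30.0, -20.0])
angles = np.deg2rad([10.0, 95.0, 200.0, 290.0])
pts = true_center + 150.0 * np.column_stack((np.cos(angles), np.sin(angles)))

center_exact = fit_center_equal_weight(pts)

# With measurement noise the midperpendiculars no longer meet in one point,
# so the result is only the best compromise in the least-squares sense.
rng = np.random.default_rng(0)
noisy_pts = pts + rng.normal(scale=0.5, size=pts.shape)
center_noisy = fit_center_equal_weight(noisy_pts)
```

With exact points the six midperpendiculars intersect at the true center; with noisy points the solution minimizes the sum of squared distances to them.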

Few scholars have combined photogrammetry with the fitting of the center of a circle in space. This paper investigates this direction because supporting data are needed for crane safety assessment. Compared with previous research on the measurement of cranes, this paper makes the following contributions: (i) a new approximate model of image point distortion is proposed; (ii) an iterative optimization method based on reprojection is proposed; and (iii) the midperpendiculars are weighted by using the Hough transform. The research in this paper addresses the poor fitting accuracy obtained when measuring the center of a circle on a port crane.

The rest of this paper is structured as follows. The circle center fitting theory based on equal-weight midperpendiculars is presented in Section 3.1; building on Section 3.1, the circle center fitting theory based on weighted midperpendiculars is presented in Section 3.2.

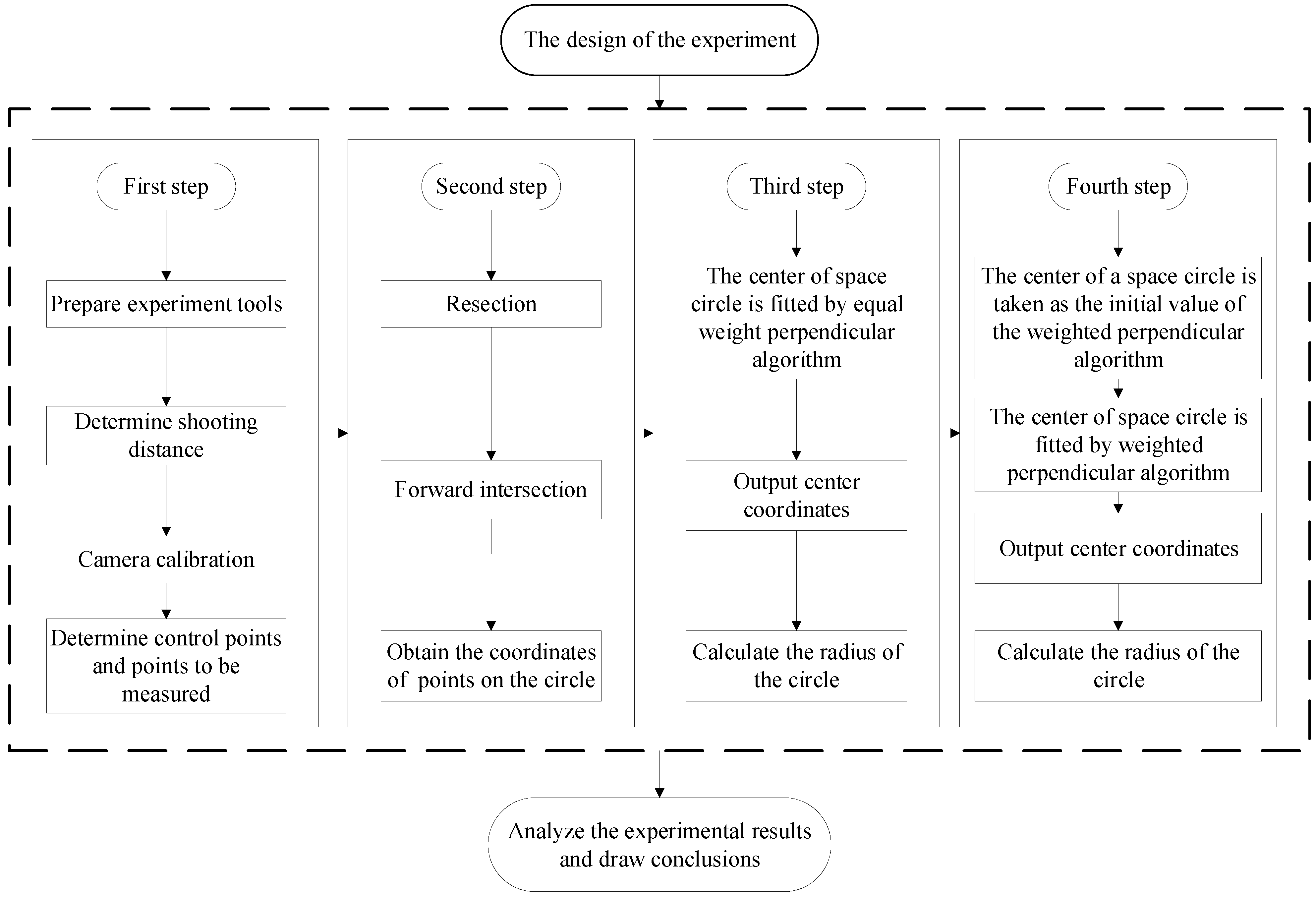

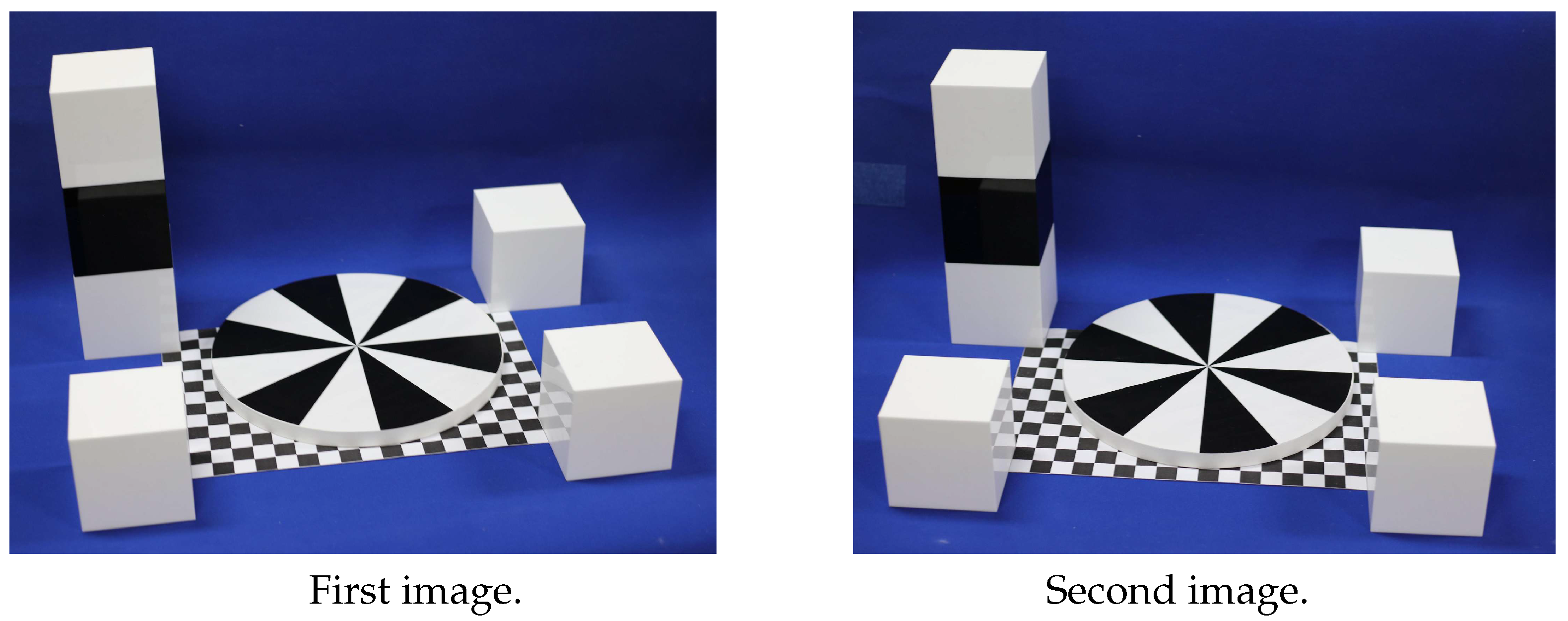

Section 4 introduces the experimental process. Section 5 analyzes the experimental results. Section 6 summarizes the paper and outlines prospective future research.

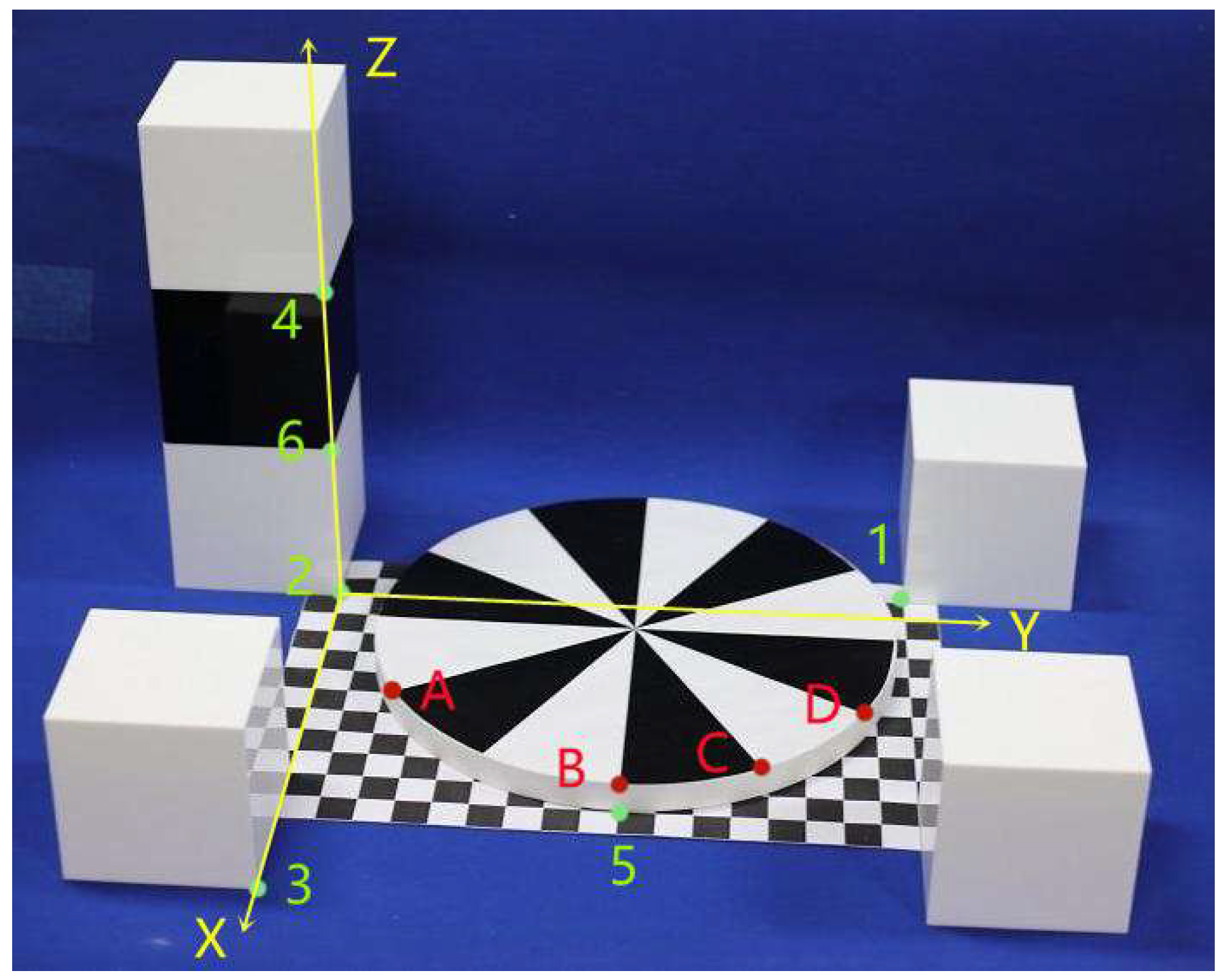

5. Results and Discussion

For the two experiments above, the changes in the weights and coordinates of each spatial line during the iterative process are shown in Table 11 and Table 12.

In the first experiment, the spatial coordinates of the center of the circle are known, so the results of the two algorithms can be compared with the true coordinates of the center. The comparison is shown in Table 13. It can be seen from the table that the precision of the WFA is clearly higher than that of the EWFA.

Since the second experiment is an engineering experiment, the center of the circle cannot be measured directly, and its true coordinates are unknown. Therefore, the accuracy of center fitting is evaluated by the error of multiple radii. RA, RB, RC and RD represent the distances from points A, B, C and D to the fitted center of the circle, respectively. The radii calculated in the two experiments are shown in Table 14 and Table 15. The true radius in the first experiment is 150 mm, and the true radius in the second experiment is 545 mm.
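The radius-based check described above is simple to express in code. The sketch below is an illustration only: the function name `radius_errors` and all coordinates are made up for the example (they are not the values in Table 14 or Table 15), and only the nominal radius of 150 mm is taken from the text.

```python
import numpy as np


def radius_errors(points, center, true_radius):
    """Distance from each measured point to the fitted center, and its error."""
    points = np.asarray(points, dtype=float)
    center = np.asarray(center, dtype=float)
    radii = np.linalg.norm(points - center, axis=1)
    return radii, radii - true_radius


# Hypothetical points A, B, C, D on a circle of radius 150 mm in the plane z = 0.
abcd = np.array([
    [150.0, 0.0, 0.0],
    [0.0, 150.0, 0.0],
    [-150.0, 0.0, 0.0],
    [0.0, -150.0, 0.0],
])

# A perfectly fitted center gives zero error for every radius.
radii_good, err_good = radius_errors(abcd, [0.0, 0.0, 0.0], 150.0)

# A center that is off by 1 mm along x makes the four radii disagree.
radii_bad, err_bad = radius_errors(abcd, [1.0, 0.0, 0.0], 150.0)
spread_bad = radii_bad.max() - radii_bad.min()
```

A small spread among the four radii and small deviations from the nominal radius indicate a well-fitted center, which is the criterion used when the true center is unknown.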

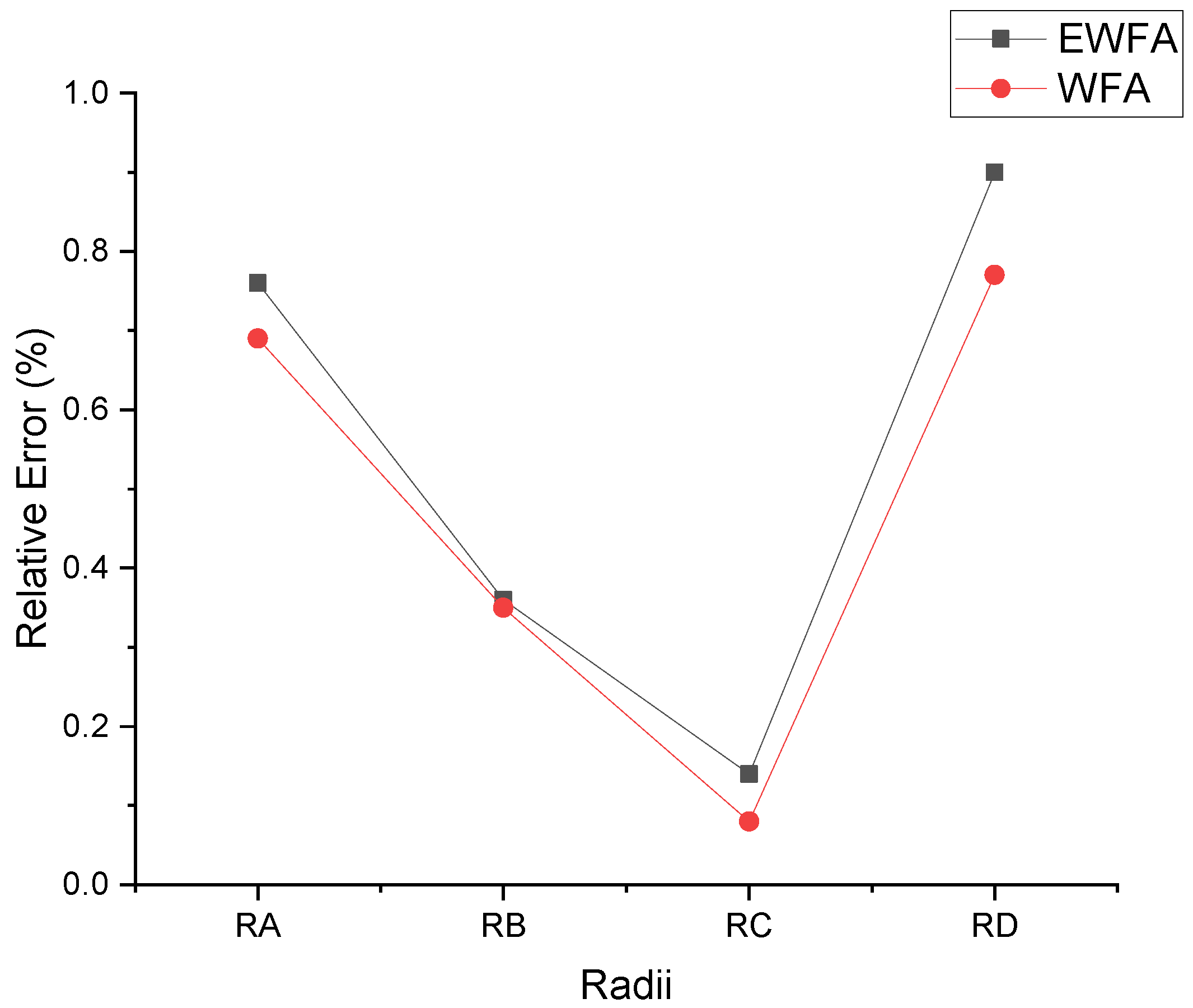

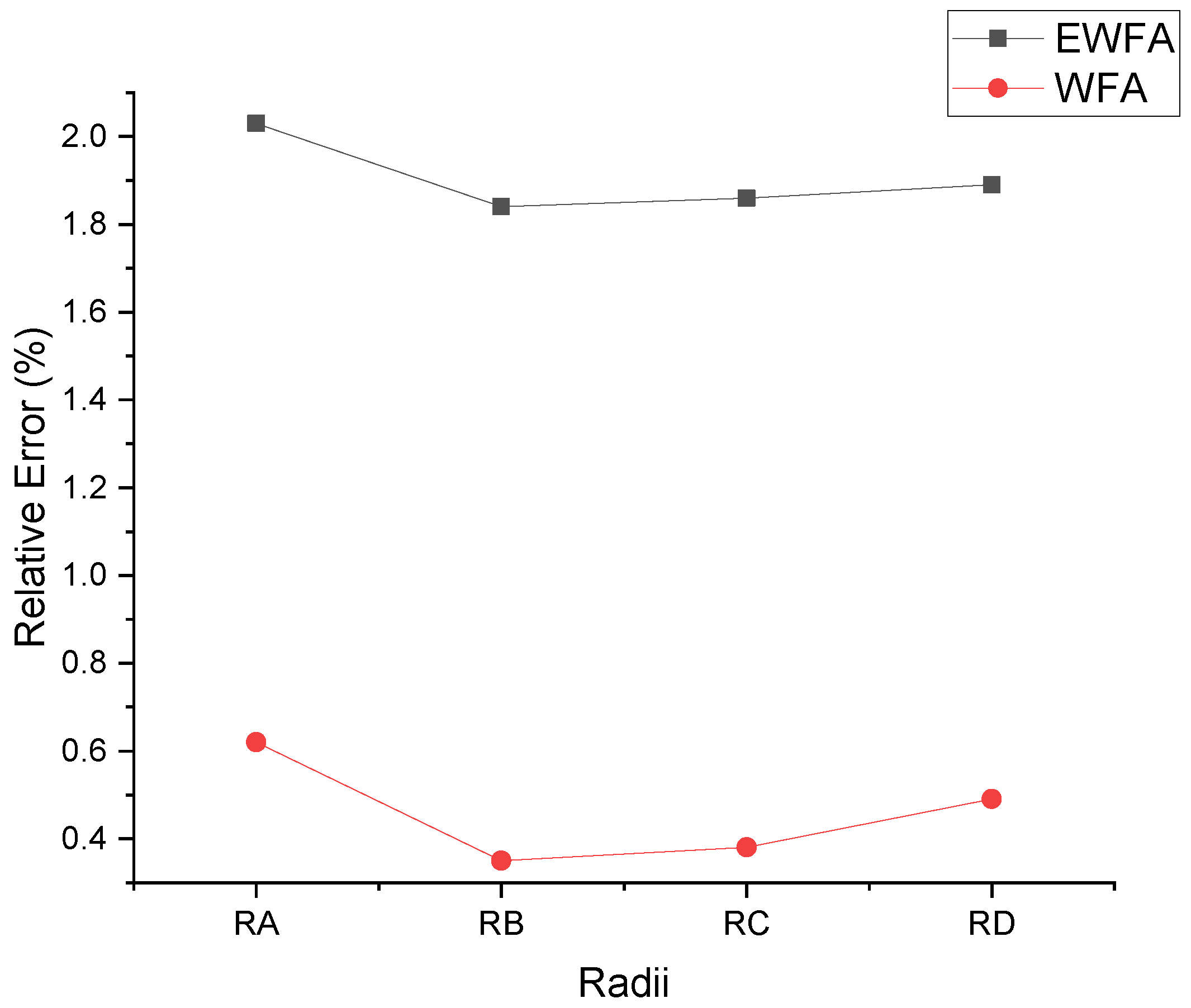

For the first and second experiments, the errors between the calculated radii and the true radii are shown in Figure 9 and Figure 10.

It can be seen from Table 11 and Table 12 that the algorithm converges quickly: it basically converges within 10 iterations and obtains the optimal value. As can be seen from Figure 9 and Figure 10, compared with the equal-weight midperpendicular algorithm, the four radii obtained by the weighted midperpendicular algorithm fluctuate less and differ less from the true radii. The optimization effect of the proposed algorithm is more obvious in the engineering experiment, where the error is larger. Therefore, according to the results of the theoretical verification experiment and the engineering experiment, it can be concluded that the center of the circle fitted by the weighted iterative algorithm is more accurate.

6. Conclusions

Few scholars have studied the combination of spatial center fitting and photogrammetry. This paper examines how to use the center coordinates of the cylinder cross section of the crane to complete the safety assessment of the structure.

Considering the distortion of image points, an algorithm for fitting the center of a circle by means of midperpendiculars is proposed based on the principle of photogrammetry. First, the coordinates of each point on the circle are calculated by photogrammetry, and then the plane of the circle is fitted using these spatial coordinates. Every two points on the circle define one midperpendicular, and the center is taken as the point for which the sum of squared distances to the midperpendiculars is smallest. Under this constraint, the intersection point of the multiple midperpendiculars is fitted, and this point is the center of the circle given by the equal-weight midperpendicular algorithm. On the basis of the equal-weight midperpendicular algorithm, the weighted midperpendicular algorithm is further proposed. The space coordinates obtained by the equal-weight midperpendicular algorithm are used as the initial value, and the center of the space circle is projected onto the image. The similarity between the actual midperpendicular and the ideal midperpendicular is calculated by the Hough transform on the image plane. The higher the similarity, the higher the weight of the corresponding midperpendicular in space, and vice versa. Finally, the weighted midperpendiculars are used to solve for the space coordinates of the center of the circle, and projection and iteration continue until convergence. The results of the two groups of experiments show that the proposed algorithm, based on the basic principle of photogrammetry and the Hough transform, has high accuracy, and that the weighted midperpendicular algorithm performs clearly better than the equal-weight midperpendicular algorithm. It is highly practical and solves the problem that the center of the circle cannot be measured directly.
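The spatial part of the procedure summarized above (plane fitting followed by a weighted midperpendicular solve) could look roughly like the following. This is a sketch under stated assumptions, not the authors' code: the function names `fit_plane` and `weighted_center` are hypothetical, the weights are passed in as plain numbers instead of being derived from Hough-transform similarity on the image plane, and the reprojection and iteration steps are omitted.

```python
import itertools

import numpy as np


def fit_plane(points):
    """Least-squares plane through 3D points: centroid plus two in-plane unit axes."""
    centroid = points.mean(axis=0)
    # Rows of vt are principal directions; the last one is the plane normal.
    _, _, vt = np.linalg.svd(points - centroid)
    return centroid, vt[0], vt[1]


def weighted_center(points, weights=None):
    """Weighted least-squares intersection of chord midperpendiculars in the fitted plane."""
    points = np.asarray(points, dtype=float)
    centroid, e1, e2 = fit_plane(points)
    rel = points - centroid
    uv = np.column_stack((rel @ e1, rel @ e2))   # in-plane 2D coordinates
    pairs = list(itertools.combinations(range(len(uv)), 2))
    if weights is None:
        weights = np.ones(len(pairs))            # equal-weight special case
    rows, rhs = [], []
    for (i, j), w in zip(pairs, weights):
        d = uv[j] - uv[i]
        d = d / np.linalg.norm(d)                # unit chord direction
        m = 0.5 * (uv[i] + uv[j])                # chord midpoint
        s = np.sqrt(w)                           # scaling a row by sqrt(w) weights its squared residual by w
        rows.append(s * d)
        rhs.append(s * (d @ m))
    c2, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return centroid + c2[0] * e1 + c2[1] * e2    # back to 3D coordinates


# Hypothetical data: four points on a tilted circle of radius 545.
c_true = np.array([100.0, 200.0, 300.0])
a = np.array([1.0, -1.0, 0.0]) / np.sqrt(2.0)
b = np.array([1.0, 1.0, -2.0]) / np.sqrt(6.0)
t = np.deg2rad([15.0, 100.0, 190.0, 280.0])
pts3d = c_true + 545.0 * (np.outer(np.cos(t), a) + np.outer(np.sin(t), b))

center_equal = weighted_center(pts3d)
center_weighted = weighted_center(pts3d, weights=[1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
```

With four points there are six chords, so `weights` needs six entries. On error-free data any positive weights return the same center; the weights only matter once the midperpendiculars disagree.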

The algorithm presented in this paper can be used not only for gantry cranes, but also for other large construction machinery on which the center of a circle needs to be measured. The algorithm is broadly applicable and has engineering application value. In the future, photogrammetry algorithms will be developed for other structural features of port machinery to solve more difficult problems in engineering measurement. Photogrammetry and computer vision technology will also be extended to more engineering fields.