Hierarchical Prototypes Polynomial Softmax Loss Function for Visual Classification

Abstract

1. Introduction

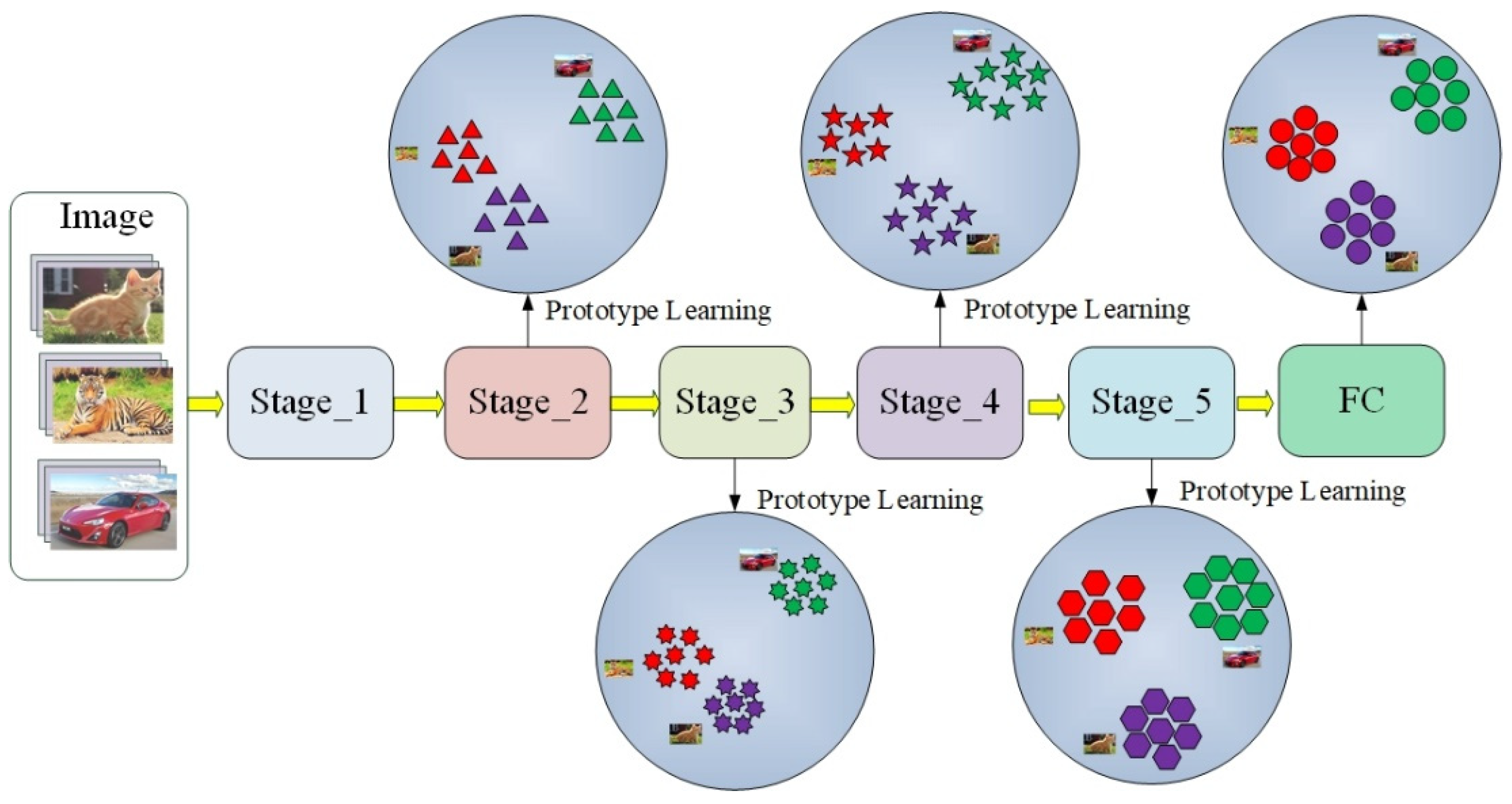

- A hierarchical prototype loss function is proposed. By attaching loss functions to several layers of the deep neural network, semantic feature extraction in the lower layers of the network is improved;

- The loss is computed with a polynomial function, a kernel method that can improve the linear separability of features in a low-dimensional space;

- Experiments on multiple public datasets show that the proposed method is effective.
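To make the first two contributions concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes the standard polynomial kernel form `(gamma * <x, p> + coef0) ** alpha` between a feature `x` and a class prototype `p`, and it assumes the per-stage losses are combined as a weighted sum. The function names, the `coef0` offset, and the `weights` argument are hypothetical and chosen only for illustration.

```python
import numpy as np


def polynomial_logits(features, prototypes, alpha=2, gamma=1.0, coef0=1.0):
    """Polynomial-kernel similarity between features and class prototypes.

    features:   array of shape (batch, dim)
    prototypes: array of shape (num_classes, dim)
    Returns an array of shape (batch, num_classes).
    The kernel form and the coef0 offset are assumptions of this sketch.
    """
    return (gamma * features @ prototypes.T + coef0) ** alpha


def softmax_cross_entropy(logits, labels):
    """Mean softmax cross-entropy, computed with a stable log-sum-exp."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


def hierarchical_polynomial_softmax_loss(stage_features, stage_prototypes,
                                         labels, weights=None, **kernel_kwargs):
    """Weighted sum of polynomial-softmax losses, one per network stage.

    stage_features[k] holds the pooled features of stage k and
    stage_prototypes[k] the prototypes attached to that stage.
    Equal weights are assumed when none are given.
    """
    if weights is None:
        weights = [1.0] * len(stage_features)
    total = 0.0
    for w, feats, protos in zip(weights, stage_features, stage_prototypes):
        logits = polynomial_logits(feats, protos, **kernel_kwargs)
        total += w * softmax_cross_entropy(logits, labels)
    return total
```

Each stage may have its own feature dimension, since every stage carries its own prototype matrix. With all-zero features every class receives the same logit, so each stage contributes `log(num_classes)` to the total, which is a convenient sanity check.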

2. Related Works

2.1. Loss Function Based on Sample Labels

2.2. Loss Function Based on Regularization Constraints

2.3. Loss Function Based on Sample Pair Label

3. The Proposed Method

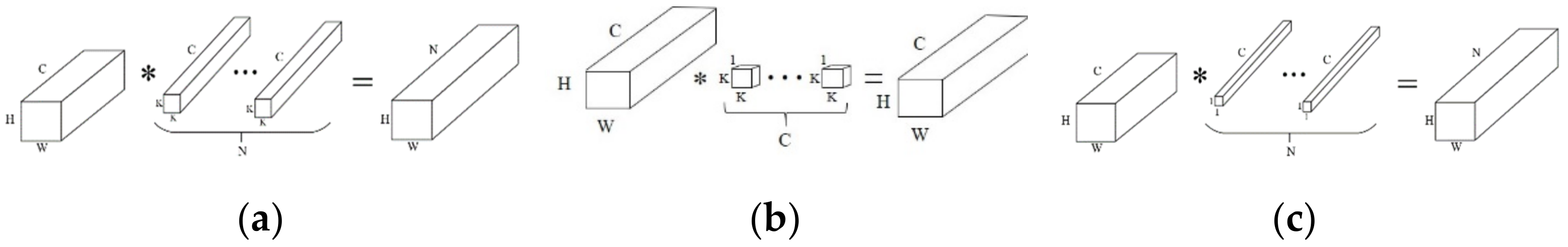

3.1. Lightweight Neural Networks

3.2. Prototype Learning

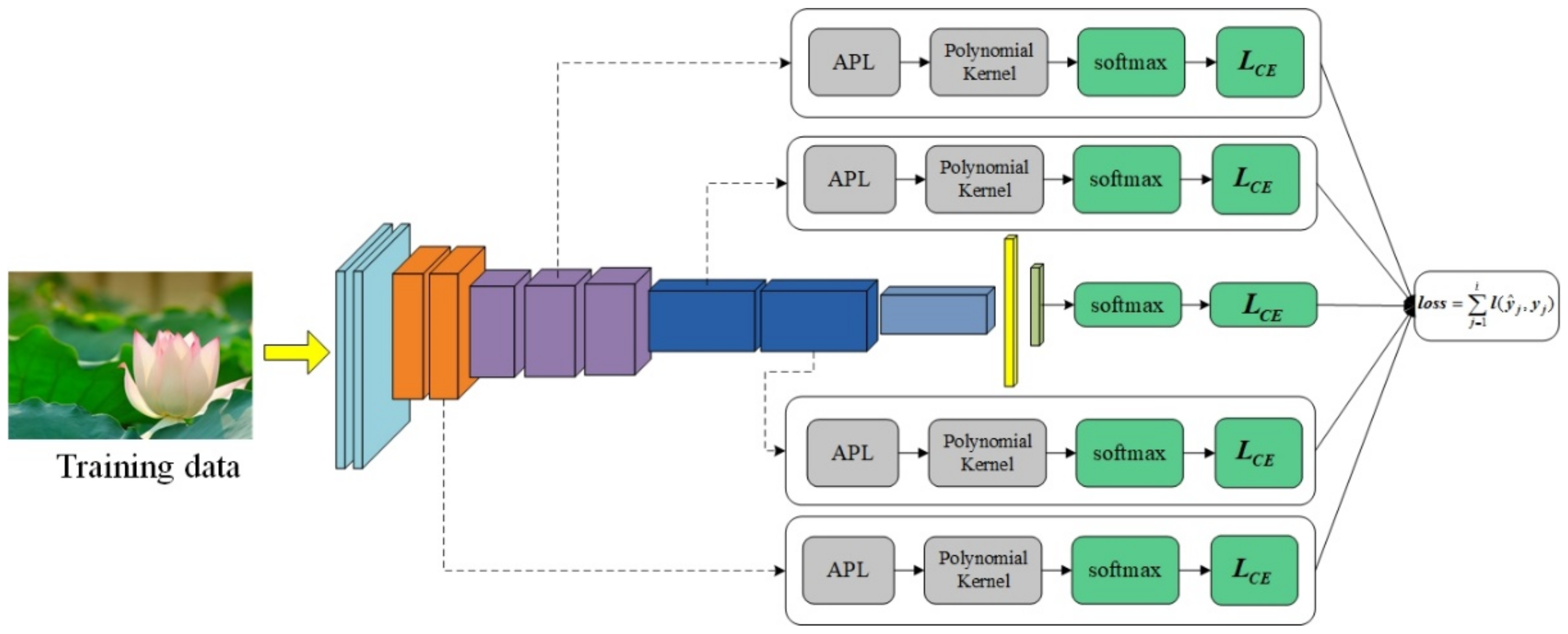

3.3. Hierarchical Prototypes Polynomial Softmax Loss Function

3.4. Optimization of Polynomial Softmax Loss Function

3.5. The Pseudo Code of the Algorithm

- Initialization, the number of iterations ;

- While not converged, do:

- ;

- Compute the total loss by ;

- Compute the standard backward propagation error ;

- Update the parameters by ;

- Update the parameters by ;

- End.
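The loop structure above can be sketched on a toy model. This is an illustrative sketch under stated assumptions, not the authors' procedure: the formulas of the listed steps are not available here, so the code assumes plain gradient descent, assumes that the two update steps refer to the network weights and to the prototypes, and replaces the deep network with a single linear layer `W`. The kernel form `(gamma * <h, p> + coef0) ** alpha` and all names (`loss_and_grads`, `train`, `lr`, `tol`) are hypothetical.

```python
import numpy as np


def loss_and_grads(X, y, W, P, alpha=2, gamma=1.0, coef0=1.0):
    """Polynomial-softmax loss and its gradients for a one-layer toy model."""
    n = X.shape[0]
    H = X @ W                               # features, shape (n, feat_dim)
    S = gamma * H @ P.T + coef0             # kernel argument, shape (n, classes)
    Z = S ** alpha                          # polynomial logits
    shifted = Z - Z.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    loss = -log_probs[np.arange(n), y].mean()

    dZ = np.exp(log_probs)                  # softmax probabilities
    dZ[np.arange(n), y] -= 1.0
    dZ /= n                                 # d loss / d logits
    dS = dZ * alpha * S ** (alpha - 1)      # chain rule through the power
    dH = gamma * dS @ P                     # backpropagated error at the features
    dP = gamma * dS.T @ H                   # gradient for the prototypes
    dW = X.T @ dH                           # gradient for the layer weights
    return loss, dW, dP


def train(X, y, num_classes, feat_dim=3, lr=0.05, max_iters=100,
          tol=1e-8, seed=0):
    """Gradient-descent loop mirroring the listed steps (assumed update rule)."""
    rng = np.random.default_rng(seed)
    # Initialization: small random weights and prototypes.
    W = 0.1 * rng.standard_normal((X.shape[1], feat_dim))
    P = 0.1 * rng.standard_normal((num_classes, feat_dim))
    history = []
    for _ in range(max_iters):
        # Forward pass, total loss, and backward pass.
        loss, dW, dP = loss_and_grads(X, y, W, P)
        history.append(loss)
        # Treat a negligible change in the loss as convergence.
        if len(history) > 1 and abs(history[-2] - loss) < tol:
            break
        W -= lr * dW                        # update the network parameters
        P -= lr * dP                        # update the prototypes
    return W, P, history
```

Calling `train(X, y, num_classes)` on a labeled array returns the learned weights, the prototypes, and the loss history. In a real network the manual gradients would be replaced by automatic differentiation, and the loss would be the sum over all stages that carry a prototype loss.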

4. Experimental Results and Discussion

4.1. Dataset

4.2. Experimental Setup

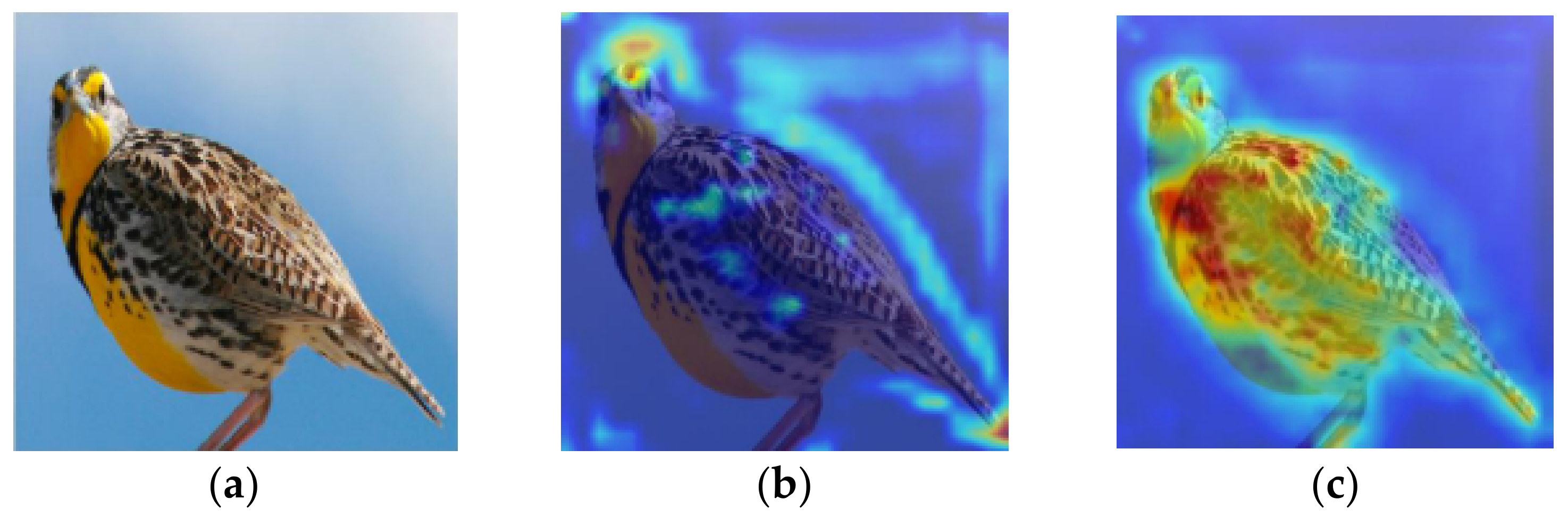

4.3. Ablation Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model | ImageNet-100 (Orig) | ImageNet-100 (Ours) | Stanford Dogs (Orig) | Stanford Dogs (Ours) | Stanford Cars (Orig) | Stanford Cars (Ours) | CUB-200-2011 (Orig) | CUB-200-2011 (Ours) |
|---|---|---|---|---|---|---|---|---|
| Mobilenetv2 (×0.5) | 74.17 | 74.84 | 44.56 | 52.63 | 83.73 | 85.25 | 52.36 | 54.46 |
| Mobilenetv2 (×0.75) | 76.87 | 78.71 | 45.81 | 56.33 | 85.81 | 86.62 | 59.47 | 61.60 |
| Mobilenetv2 (×1.0) | 78.97 | 81.05 | 48.43 | 60.42 | 87.07 | 87.97 | 61.28 | 66.08 |
| Mobilenetv3-small (×0.5) | 65.72 | 65.98 | 33.42 | 40.30 | 72.92 | 75.04 | 42.85 | 47.54 |
| Mobilenetv3-small (×0.75) | 67.87 | 68.40 | 38.59 | 44.15 | 74.99 | 77.94 | 52.15 | 55.63 |
| Mobilenetv3-small (×1.0) | 70.00 | 72.15 | 46.51 | 47.45 | 79.10 | 80.69 | 52.39 | 56.65 |
| Mobilenetv3-large (×0.5) | 71.58 | 74.37 | 41.83 | 51.42 | 80.45 | 84.80 | 49.59 | 58.76 |
| Mobilenetv3-large (×0.75) | 75.81 | 77.30 | 45.27 | 55.53 | 80.61 | 85.23 | 52.63 | 60.12 |
| Mobilenetv3-large (×1.0) | 76.91 | 78.56 | 47.01 | 57.31 | 82.48 | 86.01 | 55.73 | 62.68 |

| Model | ImageNet100: Original Softmax Loss | ImageNet100: RBF-Softmax | ImageNet100: Ours |
|---|---|---|---|
| Mobilenetv2 (×1.0) | 78.97 | 79.51 | 81.05 |
| Mobilenetv3-small (×1.0) | 70.00 | 70.68 | 72.15 |
| Mobilenetv3-large (×1.0) | 76.91 | 77.31 | 78.56 |

| Model | Prototype loss at FC | Prototype loss at Stage 5 | Prototype loss at Stage 4 | Prototype loss at Stage 3 | Prototype loss at Stage 2 | ImageNet100 (CE) | ImageNet100 (PS) | Stanford Dogs (CE) | Stanford Dogs (PS) |
|---|---|---|---|---|---|---|---|---|---|
| Mobilenetv2 (×1.0) | ◯ | ◯ | ◯ | ◯ | ◯ | 78.97 (Orig) | 78.97 (Orig) | 48.43 (Orig) | 48.43 (Orig) |
| Mobilenetv2 (×1.0) | ✓ | ◯ | ◯ | ◯ | ◯ | 78.07 | 78.68 | 53.99 | 53.05 |
| Mobilenetv2 (×1.0) | ✓ | ✓ | ◯ | ◯ | ◯ | 79.70 | 78.66 | 54.54 | 56.75 |
| Mobilenetv2 (×1.0) | ✓ | ✓ | ✓ | ◯ | ◯ | 79.85 | 81.05 | 57.61 | 60.42 |
| Mobilenetv2 (×1.0) | ✓ | ✓ | ✓ | ✓ | ◯ | 79.23 | 80.54 | 58.86 | 59.73 |
| Mobilenetv2 (×1.0) | ✓ | ✓ | ✓ | ✓ | ✓ | 79.30 | 78.91 | 59.42 | 58.76 |
| Mobilenetv3-small (×1.0) | ◯ | ◯ | ◯ | ◯ | ◯ | 70.00 (Orig) | 70.00 (Orig) | 42.75 (Orig) | 42.75 (Orig) |
| Mobilenetv3-small (×1.0) | ✓ | ◯ | ◯ | ◯ | ◯ | 70.03 | 71.37 | 45.16 | 43.97 |
| Mobilenetv3-small (×1.0) | ✓ | ✓ | ◯ | ◯ | ◯ | 70.21 | 70.37 | 45.03 | 45.31 |
| Mobilenetv3-small (×1.0) | ✓ | ✓ | ✓ | ◯ | ◯ | 70.64 | 72.15 | 47.05 | 44.92 |
| Mobilenetv3-small (×1.0) | ✓ | ✓ | ✓ | ✓ | ◯ | 69.92 | 70.21 | 47.75 | 47.53 |
| Mobilenetv3-small (×1.0) | ✓ | ✓ | ✓ | ✓ | ✓ | 68.95 | 70.43 | 46.71 | 46.51 |
| Mobilenetv3-large (×1.0) | ◯ | ◯ | ◯ | ◯ | ◯ | 76.91 (Orig) | 76.91 (Orig) | 47.01 (Orig) | 47.01 (Orig) |
| Mobilenetv3-large (×1.0) | ✓ | ◯ | ◯ | ◯ | ◯ | 76.45 | 76.89 | 50.61 | 52.51 |
| Mobilenetv3-large (×1.0) | ✓ | ✓ | ◯ | ◯ | ◯ | 76.22 | 76.71 | 51.13 | 51.90 |
| Mobilenetv3-large (×1.0) | ✓ | ✓ | ✓ | ◯ | ◯ | 78.14 | 78.56 | 56.76 | 56.40 |
| Mobilenetv3-large (×1.0) | ✓ | ✓ | ✓ | ✓ | ◯ | 77.42 | 77.73 | 56.40 | 57.31 |
| Mobilenetv3-large (×1.0) | ✓ | ✓ | ✓ | ✓ | ✓ | 76.93 | 77.83 | 55.68 | 57.14 |

| Model | Alpha | Gamma | ImageNet100 | Stanford Dogs |
|---|---|---|---|---|
| Mobilenetv2 (×1.0) | 2 | 0.5 | 79.39 | 59.10 |
| Mobilenetv2 (×1.0) | 2 | 0.8 | 79.91 | 59.75 |
| Mobilenetv2 (×1.0) | 2 | 1 | 81.05 | 60.42 |
| Mobilenetv2 (×1.0) | 3 | 0.5 | 78.91 | 57.32 |
| Mobilenetv2 (×1.0) | 3 | 0.8 | 79.61 | 59.51 |
| Mobilenetv2 (×1.0) | 3 | 1 | 79.97 | 59.37 |

| Model | GAP | GMP | ImageNet100 | Stanford Dogs |
|---|---|---|---|---|
| Mobilenetv2 (×1.0) | ✓ | ◯ | 81.05 | 60.42 |
| Mobilenetv2 (×1.0) | ◯ | ✓ | 80.29 | 58.94 |
| Mobilenetv3-small (×1.0) | ✓ | ◯ | 72.15 | 47.53 |
| Mobilenetv3-small (×1.0) | ◯ | ✓ | 71.52 | 46.60 |
| Mobilenetv3-large (×1.0) | ✓ | ◯ | 78.56 | 57.31 |
| Mobilenetv3-large (×1.0) | ◯ | ✓ | 77.48 | 57.02 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xiao, C.; Liu, X.; Sun, C.; Liu, Z.; Ding, E. Hierarchical Prototypes Polynomial Softmax Loss Function for Visual Classification. Appl. Sci. 2022, 12, 10336. https://doi.org/10.3390/app122010336
