Large-Scale Printed Chinese Character Recognition for ID Cards Using Deep Learning and Few Samples Transfer Learning

Abstract

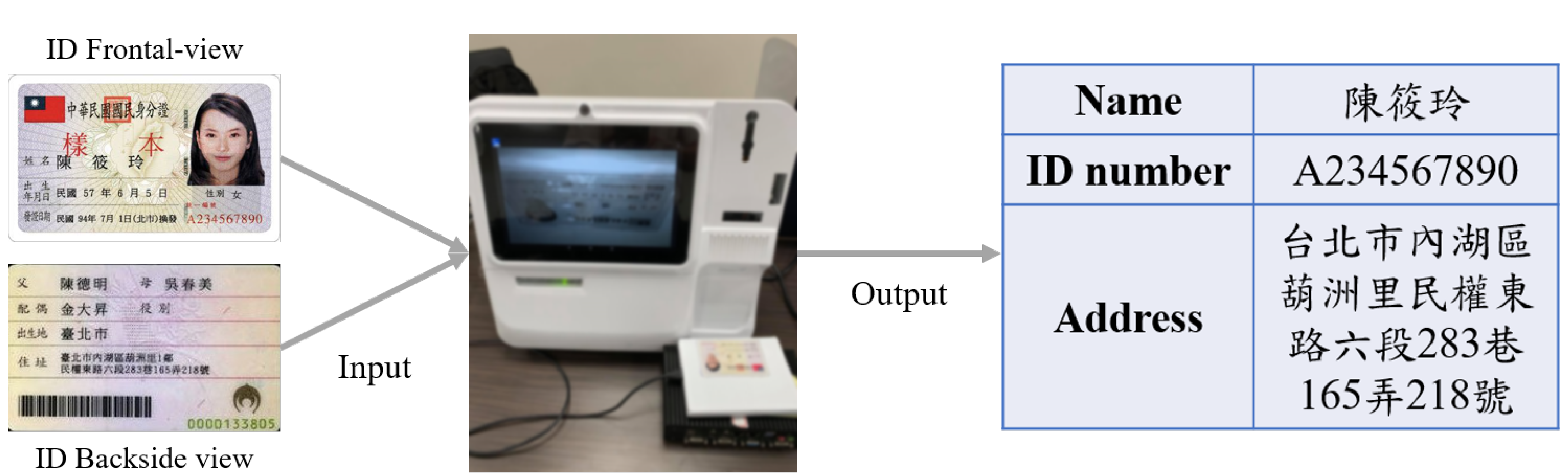

1. Introduction

- We developed a large-scale OCR system that recognizes printed Chinese characters using a deep neural network.

- We propose a new method for generating printed Chinese character images that simulates the background noise patterns found on ID cards. To handle the variability of input images and improve the recognition capability of a convolutional neural network (CNN) model, we built a massive dataset of more than 19.6 million images.

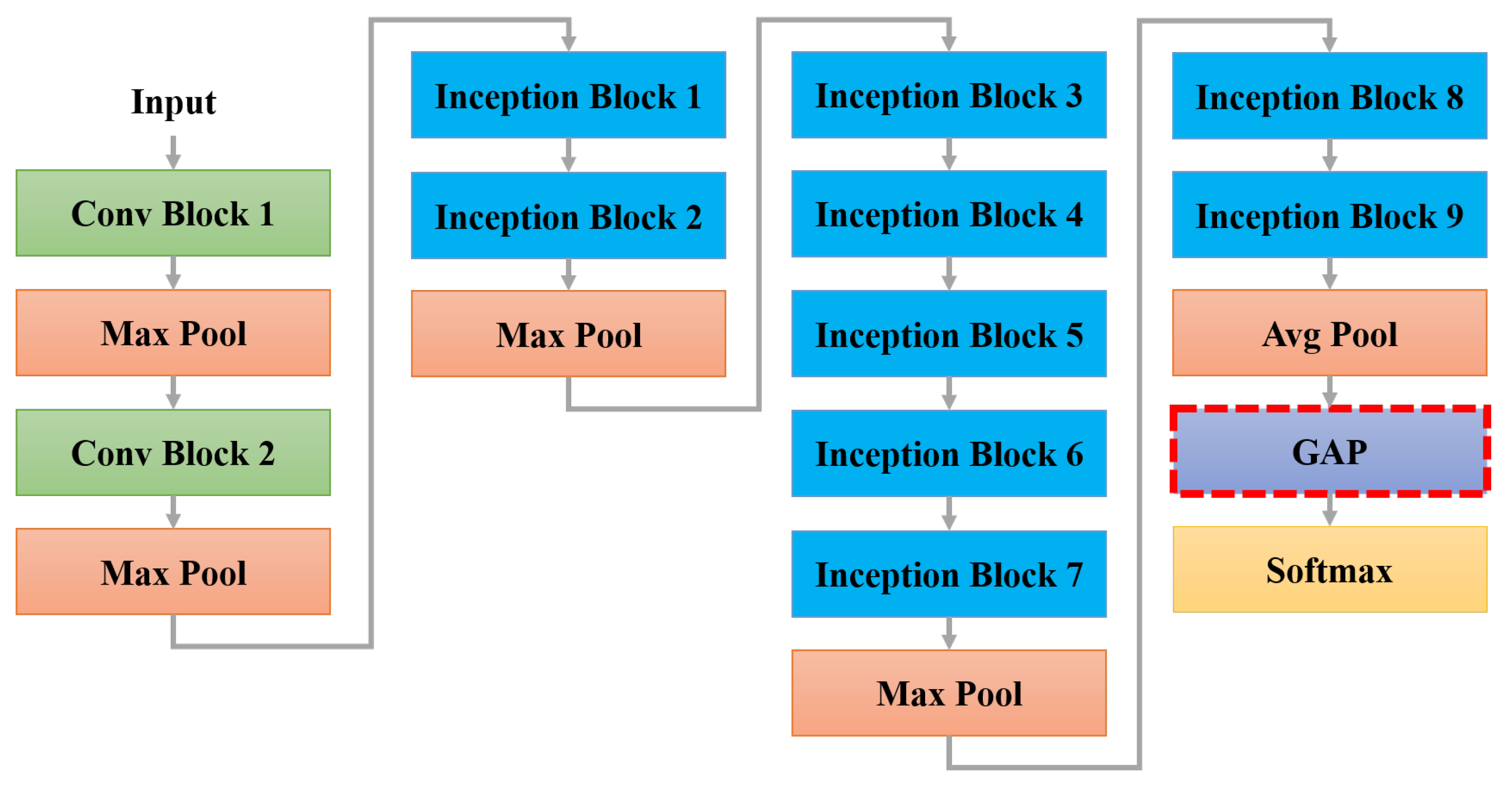

- We improved the recognition performance of the GoogLeNet-GAP model by incorporating transfer learning.

- The experimental results demonstrate the effectiveness of the proposed framework: the large-scale 13,070-character recognition system achieved an accuracy of up to 99.39% on our dataset of real ID card images.

2. Related Work

2.1. Deep Learning Chinese Character Recognition

2.2. Handwritten Chinese Character Dataset

2.3. Large-Scale Classification

2.4. Transfer Learning

3. Methodology

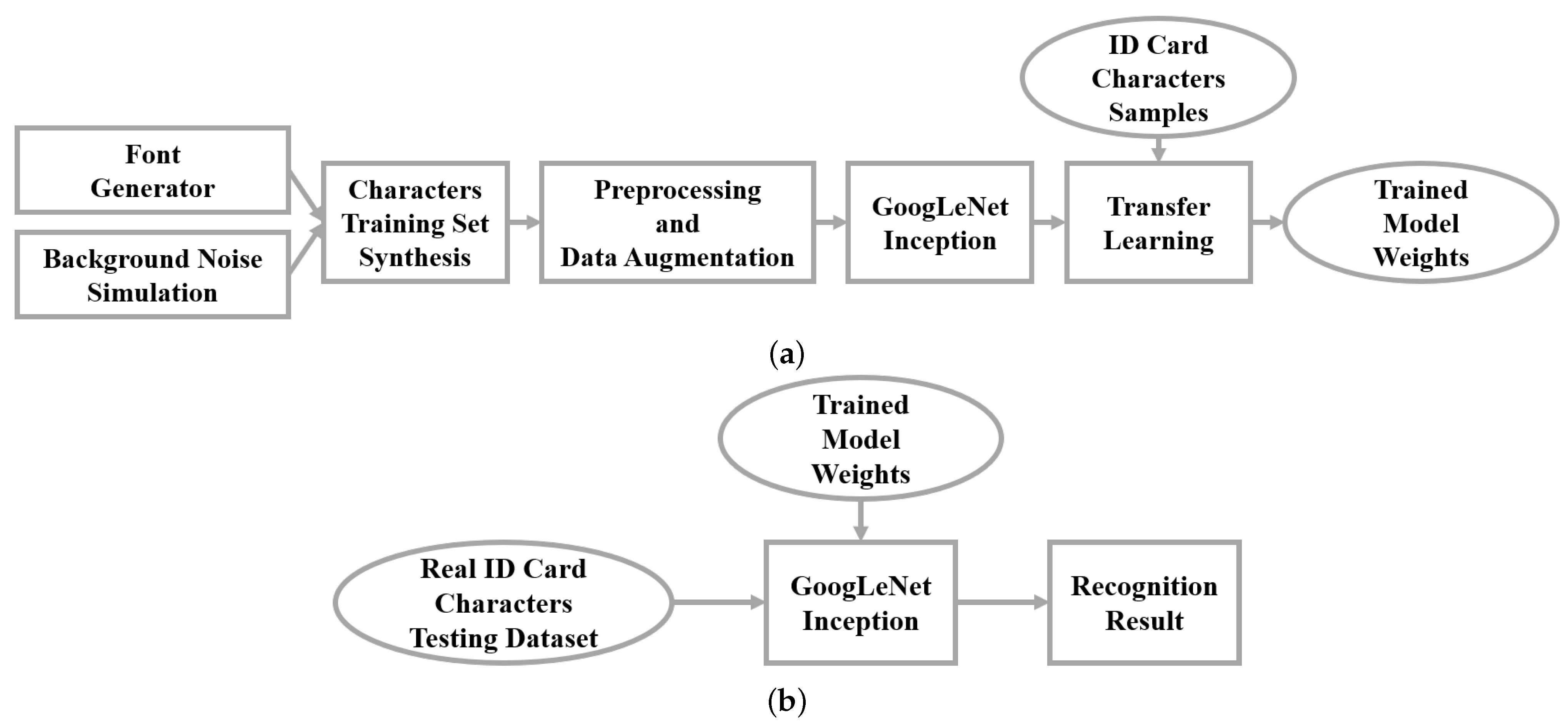

3.1. Training Dataset Synthesis

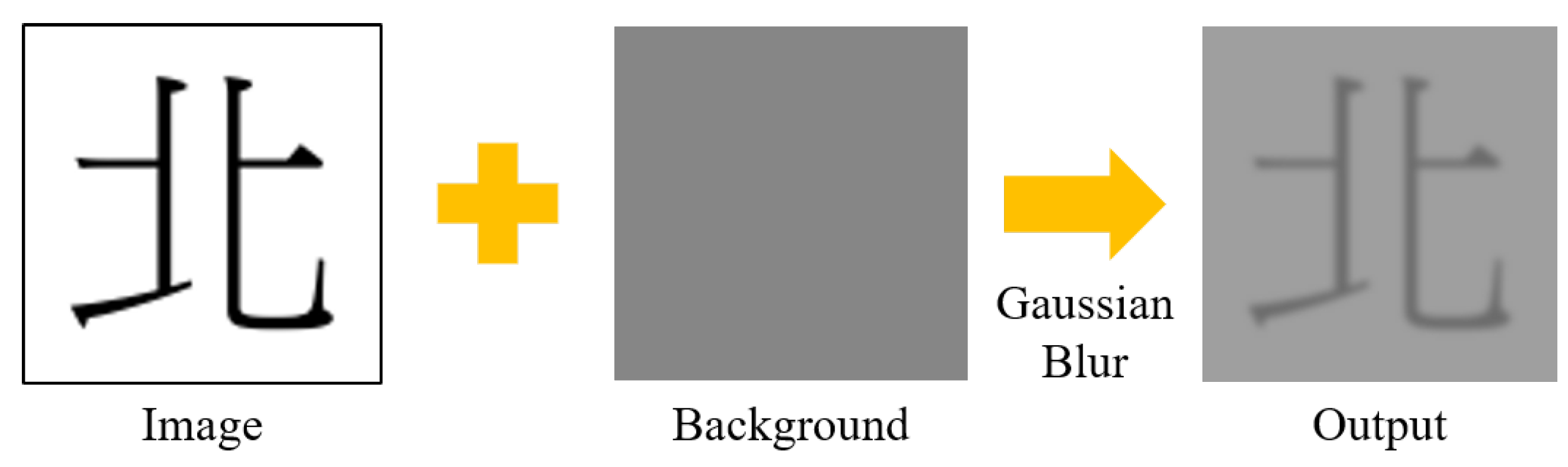

(1)

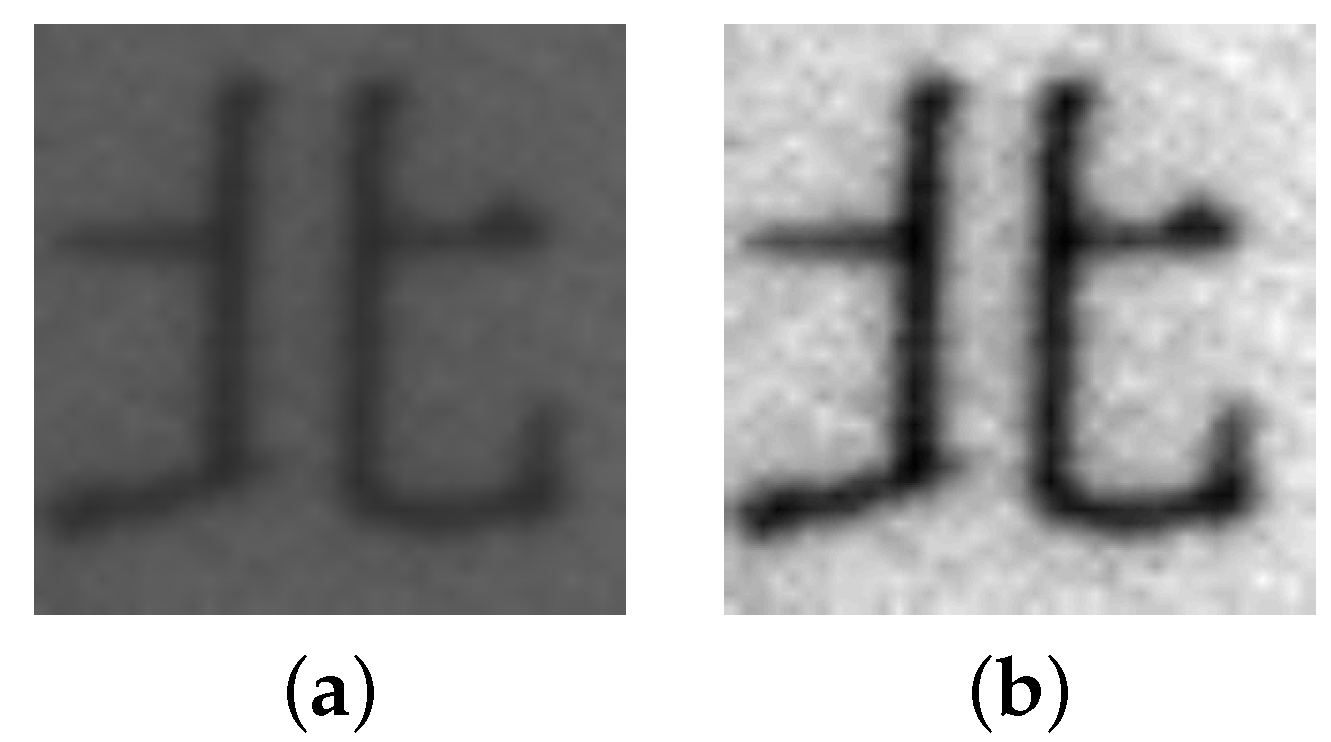

To simulate various environmental lighting conditions, we overlaid the generated character images on backgrounds of varying gray levels, as depicted in Figure 3. The gray level of each background was selected at random. The resulting character image was then blurred with a Gaussian filter to smooth its appearance.
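A minimal sketch of this first background type follows, in pure Python. It assumes a grayscale character rendering with dark ink on a white (255) canvas and merges it with the background by keeping the darker value at each pixel; the gray-level range of 120 to 230 and the blur sigma of 0.8 are hypothetical, since this section specifies neither the compositing rule nor the filter parameters.

```python
import math
import random

Image = list[list[int]]  # grayscale image, row-major, values 0..255


def gaussian_kernel(sigma: float) -> list[float]:
    """1-D Gaussian kernel truncated at 3 sigma and normalized to sum to 1."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_1d(values: list[float], kernel: list[float]) -> list[float]:
    """Convolve one row or column with the kernel, replicating edge pixels."""
    r, n = len(kernel) // 2, len(values)
    return [sum(k * values[min(max(i + j - r, 0), n - 1)] for j, k in enumerate(kernel))
            for i in range(n)]


def gaussian_blur(img: Image, sigma: float) -> Image:
    """Separable Gaussian blur: filter every row, then every column."""
    kernel = gaussian_kernel(sigma)
    rows = [blur_1d([float(v) for v in row], kernel) for row in img]
    cols = [blur_1d(list(col), kernel) for col in zip(*rows)]
    return [[int(round(v)) for v in row] for row in zip(*cols)]


def synthesize_uniform(char_img: Image, rng: random.Random,
                       gray_range: tuple[int, int] = (120, 230),  # hypothetical range
                       sigma: float = 0.8) -> Image:               # hypothetical blur strength
    """Overlay dark ink on a random uniform gray background, then blur."""
    gray = rng.randint(*gray_range)
    # Assumed compositing rule: keep the darker of ink and background at each pixel,
    # so white paper becomes `gray` while ink strokes stay dark.
    merged = [[min(p, gray) for p in row] for row in char_img]
    return gaussian_blur(merged, sigma)


# Usage: a toy 8x8 "character" with a two-pixel-wide vertical stroke.
rng = random.Random(0)
char = [[0 if c in (3, 4) else 255 for c in range(8)] for _ in range(8)]
sample = synthesize_uniform(char, rng)
```

Taking the per-pixel minimum is one simple way to place dark strokes on a lighter background without an alpha mask; a renderer that outputs an explicit alpha channel would blend with that instead.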

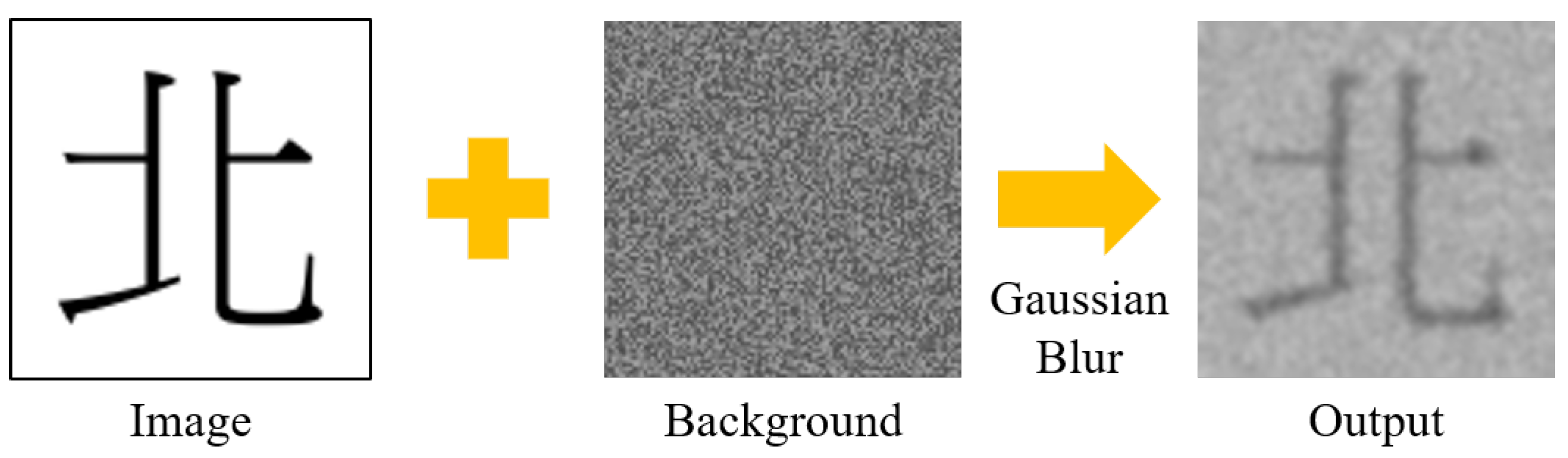

(2)

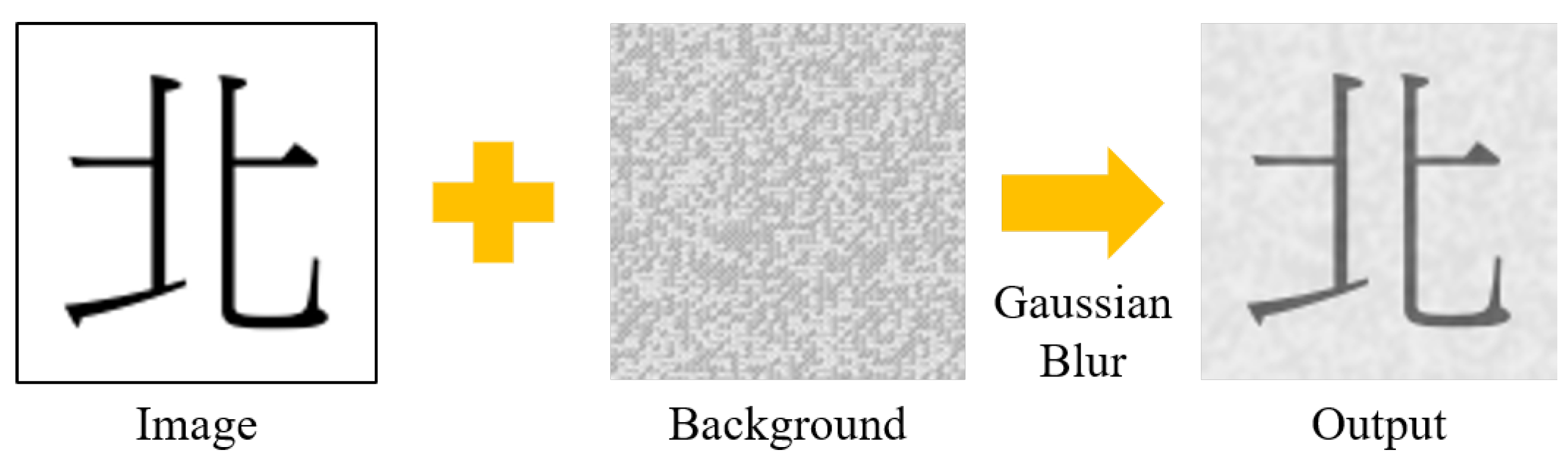

The second type of background was random noise. First, a uniform gray-level background was generated. Next, a random positive or negative value was added to the gray-level background. Then, the character image was merged with the noisy background, as shown in Figure 4. Finally, Gaussian blur was applied to smooth the appearance.
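The noisy background can be sketched as below. The sketch assumes the signed random value is drawn independently for every pixel from a uniform integer range of plus or minus 20 gray levels and clipped to 0..255, and it reuses the darker-pixel merge rule; the amplitude, the gray-level range and the merge rule are all hypothetical. The final Gaussian blur is the same as for the uniform background and is left out to keep the focus on the noise step.

```python
import random

Image = list[list[int]]  # grayscale image, row-major, values 0..255


def noisy_background(h: int, w: int, rng: random.Random,
                     gray_range: tuple[int, int] = (120, 230),  # hypothetical
                     amplitude: int = 20) -> Image:              # hypothetical
    """Uniform gray level plus an independent signed random offset at every pixel."""
    gray = rng.randint(*gray_range)
    return [[min(255, max(0, gray + rng.randint(-amplitude, amplitude)))
             for _ in range(w)]
            for _ in range(h)]


def merge(char_img: Image, background: Image) -> Image:
    """Assumed merge rule: keep the darker of ink and background at each pixel."""
    return [[min(p, b) for p, b in zip(char_row, bg_row)]
            for char_row, bg_row in zip(char_img, background)]


# Usage: a toy 8x8 "character" with a vertical stroke; Gaussian blur would follow.
rng = random.Random(1)
char = [[0 if c in (3, 4) else 255 for c in range(8)] for _ in range(8)]
bg = noisy_background(8, 8, rng)
sample = merge(char, bg)
```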

(3)

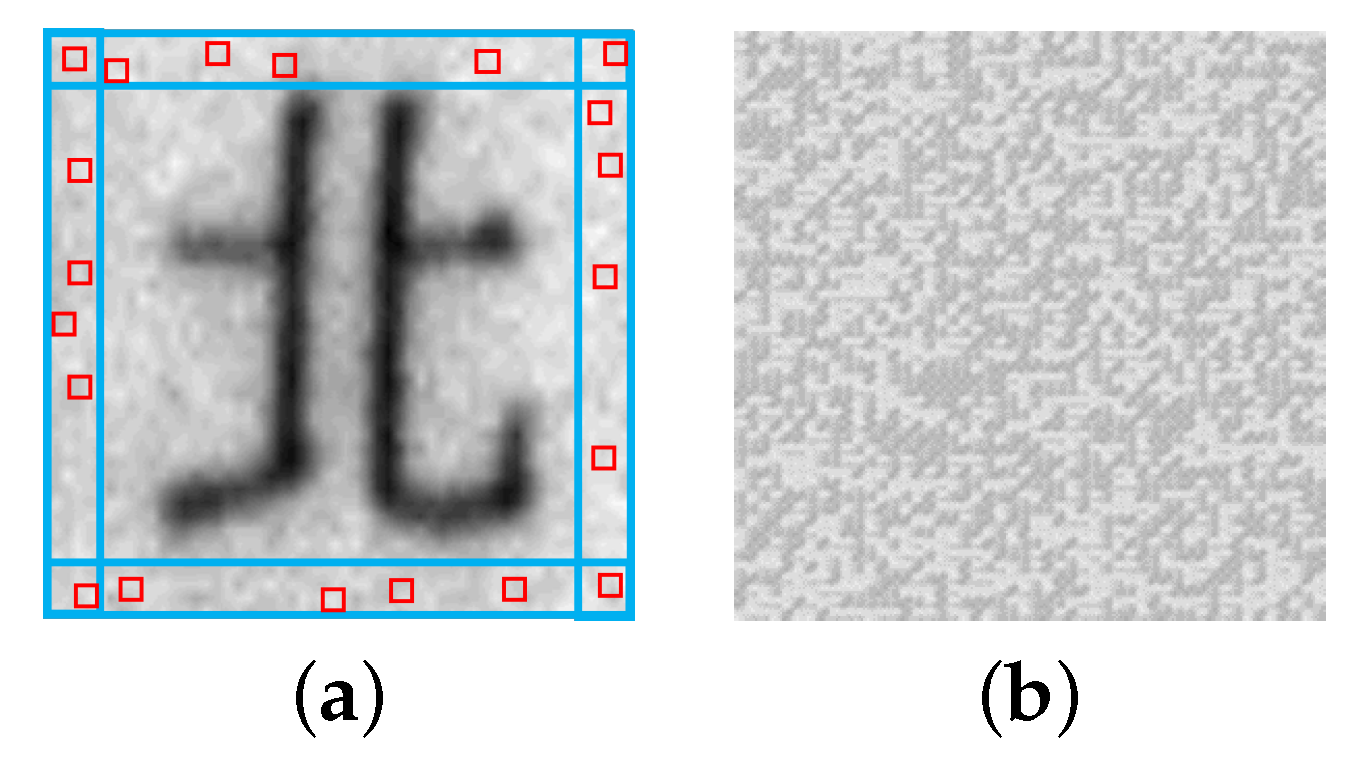

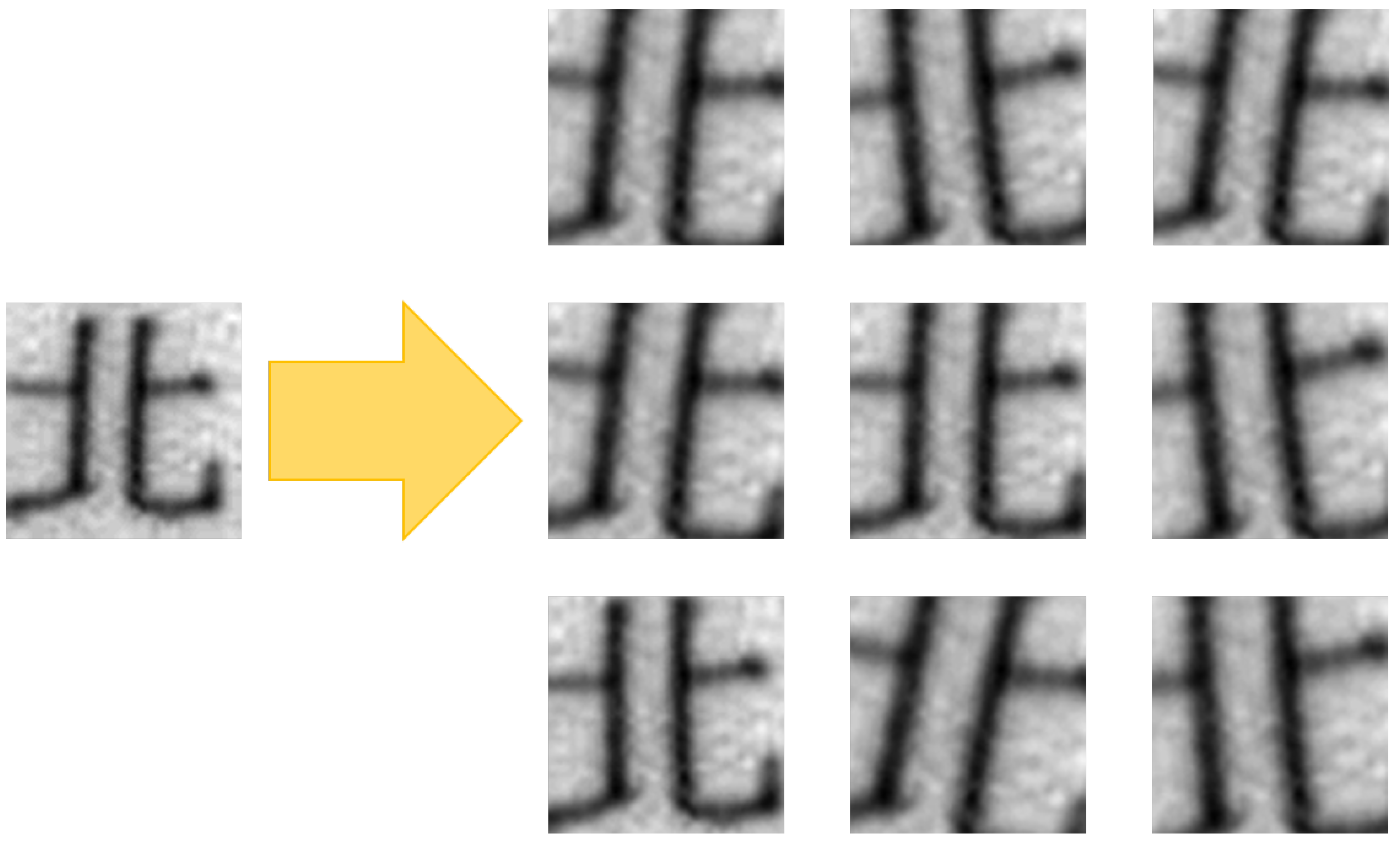

To reduce the instability caused by random noise, we further propose a patch stitching method that simulates the ID card background. First, we randomly selected 50 patches of 2 × 2 pixels from the surrounding areas of a real ID card image, as shown by the red boxes in Figure 5a. Next, these patches were stitched in order, from left to right and from top to bottom, into a 100 × 100-pixel background image, as shown in Figure 5b. The stitched background was then combined with the character image, and Gaussian blurring was applied. Figure 6 illustrates the process.
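The patch stitching step can be sketched as follows. Filling a 100 × 100 image with 2 × 2 tiles takes 2,500 tiles, more than the 50 sampled patches, and the text does not say how the pool is reused; this sketch assumes that each tile position, visited left to right and top to bottom, draws a patch from the pool at random. The `region` argument is a hypothetical stand-in for the cropped background area of a real card, and the subsequent compositing and Gaussian blur are omitted.

```python
import random

Image = list[list[int]]  # grayscale image, row-major, values 0..255


def sample_patches(region: Image, n: int, size: int, rng: random.Random) -> list[Image]:
    """Crop n random size x size patches from a background region of a card image."""
    h, w = len(region), len(region[0])
    patches = []
    for _ in range(n):
        top = rng.randrange(h - size + 1)
        left = rng.randrange(w - size + 1)
        patches.append([row[left:left + size] for row in region[top:top + size]])
    return patches


def stitch(patches: list[Image], out_size: int, rng: random.Random) -> Image:
    """Tile patches left to right, top to bottom into an out_size x out_size image.

    Assumption: there are fewer patches than tile positions, so each position
    draws a patch from the pool at random (with replacement).
    """
    size = len(patches[0])
    if out_size % size:
        raise ValueError("out_size must be a multiple of the patch size")
    out = [[0] * out_size for _ in range(out_size)]
    for top in range(0, out_size, size):        # tile rows, top to bottom
        for left in range(0, out_size, size):   # tiles within a row, left to right
            patch = rng.choice(patches)
            for y in range(size):
                out[top + y][left:left + size] = patch[y]
    return out


# Usage with a synthetic stand-in for the cropped card background.
rng = random.Random(0)
region = [[(r * 7 + c * 13) % 256 for c in range(40)] for r in range(30)]
patches = sample_patches(region, n=50, size=2, rng=rng)
background = stitch(patches, out_size=100, rng=rng)
```

Drawing at random rather than cycling through the 50 patches in a fixed order avoids repeating the same sequence on every tile row, which would otherwise produce visible vertical stripes when 50 tiles fit exactly in one row.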

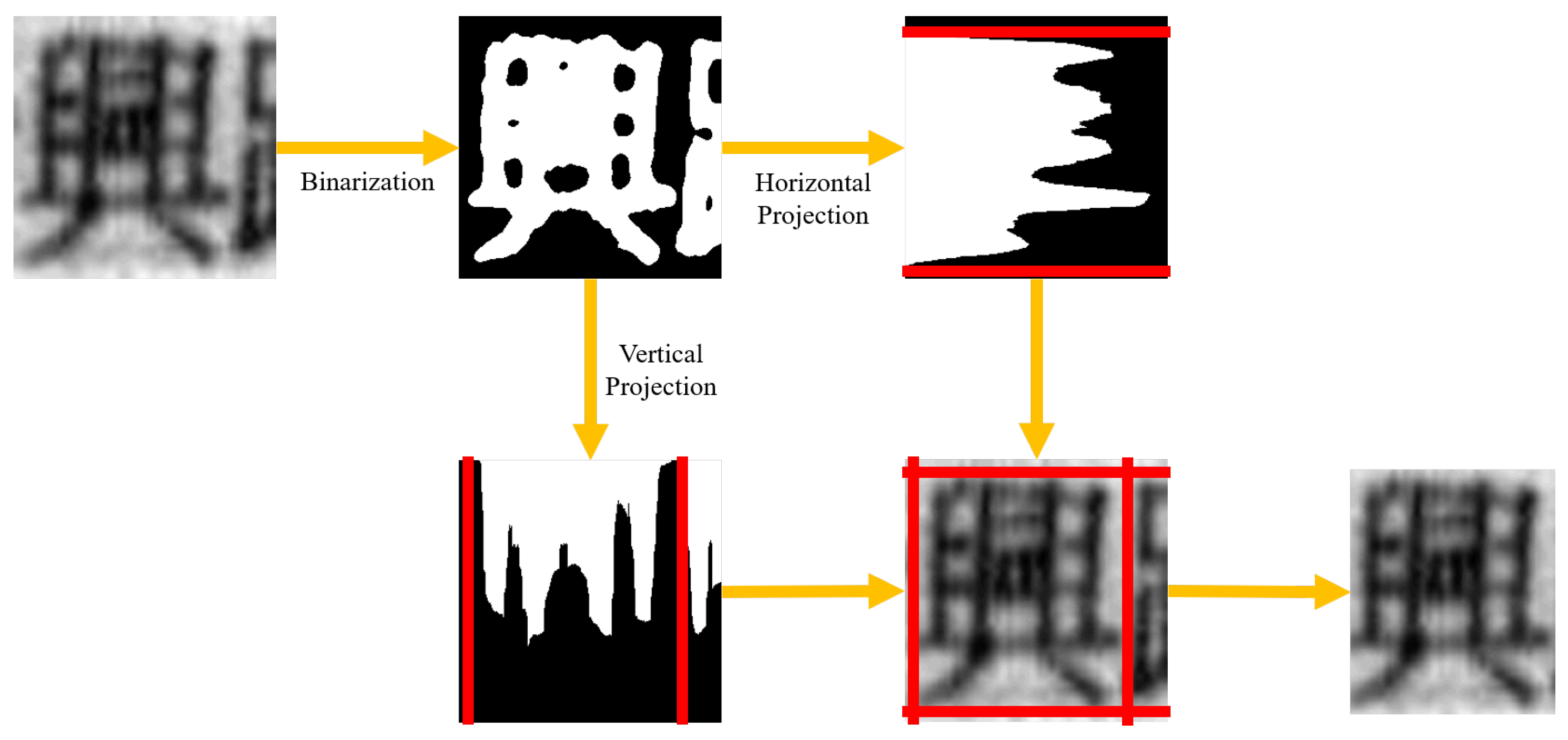

3.2. Image Preprocessing and Data Augmentation

3.3. Baseline Model Pre-Training

3.4. Mixed Data Model and Transfer Learning Model

4. Experimental Results

4.1. Dataset and Implementation Details

4.2. Ablation Study

4.3. Mixed Data Model and Transfer Learning Model Results

4.3.1. Mixed Data Model

4.3.2. Transfer Learning Model

4.4. Error Analysis and Performance Enhancement

4.5. Performance Comparison

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Aprillian, H.D.D.; Purnomo, H.D.; Purwanto, H. Utilization of Optical Character Recognition Technology in Reading Identity Cards. Int. J. Inf. Technol. Bus. 2019, 2, 38–46.

- Purba, A.M.; Harjoko, A.; Wibowo, M.E. Text Detection In Indonesian Identity Card Based On Maximally Stable Extremal Regions. Indones. J. Comput. Cybern. Syst. 2019, 13, 177–188.

- Satyawan, W.; Pratama, M.O.; Jannati, R.; Muhammad, G.; Fajar, B.; Hamzah, H.; Fikri, R.; Kristian, K. Citizen Id Card Detection using Image Processing and Optical Character Recognition. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2019; Volume 1235, p. 012049.

- Tavakolian, N.; Nazemi, A.; Fitzpatrick, D. Real-time information retrieval from Identity cards. arXiv 2020, arXiv:2003.12103.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Xu, Y.; Shan, S.; Qiu, Z.; Jia, Z.; Shen, Z.; Wang, Y.; Shi, M.; Eric, I.; Chang, C. End-to-end subtitle detection and recognition for videos in East Asian languages via CNN ensemble. Signal Process. Image Commun. 2018, 60, 131–143.

- Zhong, Z.; Jin, L.; Xie, Z. High performance offline handwritten Chinese character recognition using GoogLeNet and directional feature maps. In Proceedings of the 2015 13th International Conference on Document Analysis and Recognition (ICDAR), Tunis, Tunisia, 23–26 August 2015; pp. 846–850.

- Yin, F.; Wang, Q.F.; Zhang, X.Y.; Liu, C.L. ICDAR 2013 Chinese Handwriting Recognition Competition. In Proceedings of the 2013 12th International Conference on Document Analysis and Recognition, Washington, DC, USA, 25–28 August 2013; pp. 1464–1470.

- Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400.

- Li, Z.; Teng, N.; Jin, M.; Lu, H. Building efficient CNN architecture for offline handwritten Chinese character recognition. Int. J. Doc. Anal. Recognit. 2018, 21, 233–240.

- Xiao, X.; Jin, L.; Yang, Y.; Yang, W.; Sun, J.; Chang, T. Building fast and compact convolutional neural networks for offline handwritten Chinese character recognition. Pattern Recognit. 2017, 72, 72–81.

- Melnyk, P.; You, Z.; Li, K. A high-performance CNN method for offline handwritten Chinese character recognition and visualization. Soft Comput. 2019, 24, 7977–7987.

- Su, Y.S.; Chou, C.H.; Chu, Y.L.; Yang, Z.Y. A Finger-Worn Device for Exploring Chinese Printed Text With Using CNN Algorithm on a Micro IoT Processor. IEEE Access 2019, 7, 116529–116541.

- Yao, L.; Mao, C.; Luo, Y. Graph convolutional networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 7370–7377.

- Liu, B.; Xu, X.; Zhang, Y. Offline Handwritten Chinese Text Recognition with Convolutional Neural Networks. arXiv 2020, arXiv:2006.15619.

- Wang, J.; Wu, R.; Zhang, S. Robust Recognition of Chinese Text from Cellphone-acquired Low-quality Identity Card Images Using Convolutional Recurrent Neural Network. Sens. Mater. 2021, 33, 1187–1198.

- Du, Y.; Li, C.; Guo, R.; Yin, X.; Liu, W.; Zhou, J.; Bai, Y.; Yu, Z.; Yang, Y.; Dang, Q.; et al. PP-OCR: A practical ultra lightweight OCR system. arXiv 2020, arXiv:2009.09941.

- PaddleOCR. Available online: https://github.com/PaddlePaddle/PaddleOCR (accessed on 26 May 2021).

- EasyOCR. Available online: https://github.com/JaidedAI/EasyOCR (accessed on 11 September 2021).

- Shi, B.; Bai, X.; Yao, C. An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 2298–2304.

- Graves, A.; Fernández, S.; Gomez, F.; Schmidhuber, J. Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural networks. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 369–376.

- Chen, P.C. Traditional Chinese Handwriting Dataset. 2020. Available online: https://github.com/AI-FREE-Team/Traditional-Chinese-Handwriting-Dataset (accessed on 19 December 2021).

- Xu, Y.; Yin, F.; Wang, D.H.; Zhang, X.Y.; Zhang, Z.; Liu, C.L. CASIA-AHCDB: A Large-Scale Chinese Ancient Handwritten Characters Database. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 793–798.

- Zhong, Z.; Jin, L.; Feng, Z. Multi-font printed Chinese character recognition using multi-pooling convolutional neural network. In Proceedings of the 2015 13th International Conference on Document Analysis and Recognition (ICDAR), Tunis, Tunisia, 23–26 August 2015; pp. 96–100.

- Qiu, S. Global weighted average pooling bridges pixel-level localization and image-level classification. arXiv 2018, arXiv:1809.08264.

- Zhang, Q.; Lee, K.C.; Bao, H.; You, Y.; Li, W.; Guo, D. Large Scale Classification in Deep Neural Network with Label Mapping. In Proceedings of the 2018 IEEE International Conference on Data Mining Workshops (ICDMW), Singapore, 17–20 November 2018; pp. 1134–1143.

- Zhang, J.; Zhu, Y.; Du, J.; Dai, L. Radical Analysis Network for Zero-Shot Learning in Printed Chinese Character Recognition. In Proceedings of the 2018 IEEE International Conference on Multimedia and Expo (ICME), San Diego, CA, USA, 23–27 July 2018; pp. 1–6.

- Zhang, J.; Du, J.; Dai, L. Radical analysis network for learning hierarchies of Chinese characters. Pattern Recognit. 2020, 103, 107305.

- Wu, C.; Wang, Z.R.; Du, J.; Zhang, J.; Wang, J. Joint Spatial and Radical Analysis Network For Distorted Chinese Character Recognition. In Proceedings of the 2019 International Conference on Document Analysis and Recognition Workshops (ICDARW), Sydney, Australia, 22–25 September 2019; Volume 5, pp. 122–127.

- Hong, D.; Gao, L.; Yokoya, N.; Yao, J.; Chanussot, J.; Du, Q.; Zhang, B. More diverse means better: Multimodal deep learning meets remote-sensing imagery classification. IEEE Trans. Geosci. Remote Sens. 2020, 59, 4340–4354.

- Qiao, S.; Liu, C.; Shen, W.; Yuille, A. Few-Shot Image Recognition by Predicting Parameters from Activations. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7229–7238.

- Ao, X.; Zhang, X.Y.; Yang, H.M.; Yin, F.; Liu, C.L. Cross-Modal Prototype Learning for Zero-Shot Handwriting Recognition. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 589–594.

- Tang, Y.; Peng, L.; Xu, Q.; Wang, Y.; Furuhata, A. CNN Based Transfer Learning for Historical Chinese Character Recognition. In Proceedings of the 2016 12th IAPR Workshop on Document Analysis Systems (DAS), Santorini, Greece, 11–14 April 2016; pp. 25–29.

- Tang, Y.; Wu, B.; Peng, L.; Liu, C. Semi-Supervised Transfer Learning for Convolutional Neural Network Based Chinese Character Recognition. In Proceedings of the 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 1, pp. 441–447.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Clark, A. Pillow (PIL Fork) Documentation. 2015. Available online: https://pillow.readthedocs.io/en/stable/ (accessed on 19 December 2021).

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In European Conference on Computer Vision (ECCV); Springer: Berlin/Heidelberg, Germany, 2014; pp. 818–833.

- Hong, D.; Han, Z.; Yao, J.; Gao, L.; Zhang, B.; Plaza, A.; Chanussot, J. Spectralformer: Rethinking hyperspectral image classification with transformers. IEEE Trans. Geosci. Remote Sens. 2021.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A survey on visual transformer. arXiv 2020, arXiv:2012.12556.

- Kwon, H.; Baek, J.W. Adv-Plate Attack: Adversarially Perturbed Plate for License Plate Recognition System. J. Sens. 2021, 2021, 6473833.

| | Handwritten Text | Our Input Text |
|---|---|---|
| Sample Image “North” | | |
| Complexity | High | High |
| Background | Clean | Noisy |
| Image Size | Specify | Small |

| # of Training Samples per Character | MelnykNet-Res Accuracy | GoogLeNet-GAP Accuracy |
|---|---|---|
| 300 | 21.64% | 51.40% |
| 600 | 41.75% | 90.62% |
| 900 | 53.40% | 95.71% |
| 1500 | 68.95% | 95.35% |

| Dataset | # of Characters | # of Images | Type |
|---|---|---|---|
| CASIA-HWDB1.0 | 3866 | 1,609,136 | Simplified Chinese |
| CASIA-HWDB1.1 | 3755 | 1,121,749 | Simplified Chinese |
| CASIA-HWDB1.2 | 3319 | 990,989 | Simplified Chinese |
| Chinese MNIST | 13,065 | 587,925 | Traditional Chinese |
| CASIA-AHCDB | 10,350 | more than 2.2 million | Traditional Chinese |
| Ours | 13,070 | 19.6 million | Traditional Chinese |
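A quick way to compare these datasets is the average number of images per character class. The short script below only re-enters the counts from the table; the CASIA-AHCDB figure uses 2.2 million as a lower bound, and the 19.6 million entry is already rounded in the table.

```python
# Character and image counts from the dataset table above.
DATASETS = {
    "CASIA-HWDB1.0": (3866, 1_609_136),
    "CASIA-HWDB1.1": (3755, 1_121_749),
    "CASIA-HWDB1.2": (3319, 990_989),
    "Chinese MNIST": (13_065, 587_925),
    "CASIA-AHCDB": (10_350, 2_200_000),  # "more than 2.2 million": a lower bound
    "Ours": (13_070, 19_600_000),        # "19.6 million", rounded in the table
}


def images_per_character(datasets: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Average number of images available for each character class."""
    return {name: images / chars for name, (chars, images) in datasets.items()}


for name, avg in images_per_character(DATASETS).items():
    print(f"{name:>14}: {avg:7.1f} images per character")
```

This gives about 1,499.6 images per character for the proposed dataset, against 45.0 to 416.2 for the other datasets (about 212.6 or more for CASIA-AHCDB). The figure of roughly 1,500 is consistent with the largest training-set size per character (1500) used in the accuracy tables.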

| # of Training Samples per Character | GoogLeNet-FC Accuracy | GoogLeNet-GAP Accuracy |
|---|---|---|
| 300 | 41.59% | 51.40% |
| 600 | 87.74% | 90.62% |
| 900 | 85.56% | 95.71% |
| 1500 | 91.20% | 95.35% |

| # of Classes (Characters) | GoogLeNet-FC # of Parameters (M) | GoogLeNet-FC Training Time (s/epoch) | GoogLeNet-FC Recognition Time (s/char) | GoogLeNet-GAP # of Parameters (M) | GoogLeNet-GAP Training Time (s/epoch) | GoogLeNet-GAP Recognition Time (s/char) |
|---|---|---|---|---|---|---|
| 294 | 11.802 | 181.8 | 0.002582 | 11.716 | 180 | 0.002521 |
| 1812 | 16.557 | 1077.6 | 0.002603 | 13.271 | 1030.6 | 0.002569 |
| 4945 | 40.941 | 4824.5 | 0.002598 | 16.483 | 4014 | 0.002588 |
| 13,070 | 195.649 | 25,855 | 0.003418 | 24.811 | 23,443.5 | 0.002622 |
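The parameter columns can be checked against the size of the classification head. If the GAP head is a single linear layer on a 1024-dimensional pooled feature (the width of the original GoogLeNet's final pooled feature; an assumption here, since this section does not state the head design), each extra class should add 1024 weights plus one bias, i.e. 1025 parameters. The sketch below computes the per-class increment relative to the 294-class model from the table values only.

```python
# Parameter counts (millions) from the table above: classes -> (GoogLeNet-FC, GoogLeNet-GAP)
PARAMS_M = {
    294: (11.802, 11.716),
    1812: (16.557, 13.271),
    4945: (40.941, 16.483),
    13_070: (195.649, 24.811),
}
FC, GAP = 0, 1  # column indices into each tuple


def per_class_increment(params: dict[int, tuple[float, float]], model: int) -> dict[int, float]:
    """Extra parameters per added class, measured against the smallest (294-class) model."""
    base_classes = min(params)
    base = params[base_classes][model]
    return {
        classes: (counts[model] - base) * 1e6 / (classes - base_classes)
        for classes, counts in params.items()
        if classes != base_classes
    }


for label, model in (("GoogLeNet-FC", FC), ("GoogLeNet-GAP", GAP)):
    increments = per_class_increment(PARAMS_M, model)
    print(label, {c: round(v, 1) for c, v in increments.items()})
```

For GoogLeNet-GAP the increment is about 1024.4 to 1025.0 parameters per class, matching the 1025 predicted by the assumed head within the table's rounding. For GoogLeNet-FC the increment per class itself grows (about 3132 at 1812 classes, 6265 at 4945 and 14,390 at 13,070), so its parameter count grows faster than linearly with the number of classes.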

| # of Training Samples per Character | 294 Classes (Characters) | 1812 Classes (Characters) | 4945 Classes (Characters) | 13,070 Classes (Characters) |
|---|---|---|---|---|
| 300 | 51.40% | 77.91% | 76.52% | 66.51% |
| 600 | 90.62% | 88.21% | 89.99% | 83.72% |
| 900 | 95.71% | 91.91% | 89.42% | 88.51% |
| 1500 | 95.35% | 95.28% | 90.23% | 92.17% |

| # of Classes (Characters) | Original Model (600 Training Samples per Character) | Mixed Data Model (600 Training Samples per Character) | Original Model (900 Training Samples per Character) | Mixed Data Model (900 Training Samples per Character) |
|---|---|---|---|---|
| 294 | 90.62% | 96.84% | 95.71% | 98.51% |
| 1812 | 88.21% | 96.22% | 91.91% | 95.03% |
| 4945 | 89.99% | 93.54% | 89.42% | 96.16% |
| 13,070 | 83.72% | 91.95% | 88.51% | 93.93% |

| # of Augmentation Samples per Character | # of Output Classes | 0 Characters Fine-Tuned | 100 Characters Fine-Tuned | 200 Characters Fine-Tuned | 294 Characters Fine-Tuned |
|---|---|---|---|---|---|
| 1 | 294 | 90.62% | 96.91% | 97.25% | 97.90% |
| 1 | 1812 | 88.21% | 97.31% | 97.25% | 97.27% |
| 1 | 4945 | 89.99% | 95.79% | 96.97% | 98.06% |
| 1 | 13,070 | 83.72% | 95.00% | 96.50% | 96.06% |
| 5 | 294 | 90.62% | 99.25% | 99.27% | 99.41% |
| 5 | 1812 | 88.21% | 98.89% | 98.75% | 99.81% |
| 5 | 4945 | 89.99% | 98.40% | 98.26% | 99.31% |
| 5 | 13,070 | 83.72% | 97.61% | 98.18% | 99.19% |
| 10 | 294 | 90.62% | 99.13% | 99.47% | 99.13% |
| 10 | 1812 | 88.21% | 98.81% | 98.56% | 99.09% |
| 10 | 4945 | 89.99% | 98.00% | 98.56% | 98.50% |
| 10 | 13,070 | 83.72% | 98.16% | 98.50% | 98.77% |
| 15 | 294 | 90.62% | 98.85% | 99.56% | 99.33% |
| 15 | 1812 | 88.21% | 98.85% | 99.03% | 99.39% |
| 15 | 4945 | 89.99% | 98.26% | 98.75% | 99.37% |
| 15 | 13,070 | 83.72% | 98.34% | 98.56% | 99.11% |
| 20 | 294 | 90.62% | 98.75% | 99.45% | 99.33% |
| 20 | 1812 | 88.21% | 98.58% | 98.83% | 99.41% |
| 20 | 4945 | 89.99% | 98.87% | 98.93% | 99.47% |
| 20 | 13,070 | 83.72% | 97.34% | 98.85% | 99.39% |
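The 99.39% accuracy quoted for the 13,070-character system can be traced to this table. The script below re-enters only the 13,070-class rows, finds the best cell and compares its error rate with the 0-fine-tuned column, whose value matches the original model's accuracy. Reading the headline figure as this particular cell is our interpretation, since the section does not name the configuration explicitly.

```python
# Accuracies (%) for the 13,070-class model, from the table above.
FINE_TUNED = (0, 100, 200, 294)  # number of characters fine-tuned in transfer learning
ACC_13070: dict[int, tuple[float, ...]] = {  # augmentation samples per character -> row
    1: (83.72, 95.00, 96.50, 96.06),
    5: (83.72, 97.61, 98.18, 99.19),
    10: (83.72, 98.16, 98.50, 98.77),
    15: (83.72, 98.34, 98.56, 99.11),
    20: (83.72, 97.34, 98.85, 99.39),
}


def best_setting(table: dict[int, tuple[float, ...]]) -> tuple[float, int, int]:
    """Return (accuracy, augmentation samples, fine-tuned characters) of the best cell."""
    return max((acc, aug, ft)
               for aug, row in table.items()
               for ft, acc in zip(FINE_TUNED, row))


best_acc, best_aug, best_ft = best_setting(ACC_13070)
baseline = ACC_13070[1][0]  # 0 characters fine-tuned
error_ratio = (100 - baseline) / (100 - best_acc)
print(f"best {best_acc:.2f}% with {best_aug} augmentation samples and {best_ft} "
      f"fine-tuned characters; error rate cut {error_ratio:.1f}x")
```

It reports 99.39% with 20 augmentation samples per character and all 294 characters fine-tuned, which lowers the error rate from 16.28% to 0.61%, roughly a 26.7-fold reduction.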

| Model | Training Samples | Original | Projection |
|---|---|---|---|
| Font model | 600 | 83.72% | 85.70% |
| Mixed model | 600 | 91.95% | 96.54% |
| Transfer model | 400 | 95.01% | 97.53% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.-Q.; Chang, H.-S.; Lin, D.-T. Large-Scale Printed Chinese Character Recognition for ID Cards Using Deep Learning and Few Samples Transfer Learning. Appl. Sci. 2022, 12, 907. https://doi.org/10.3390/app12020907


Li, Yi-Quan, Hao-Sen Chang, and Daw-Tung Lin. 2022. "Large-Scale Printed Chinese Character Recognition for ID Cards Using Deep Learning and Few Samples Transfer Learning" Applied Sciences 12, no. 2: 907. https://doi.org/10.3390/app12020907
