Abstract

A visual dialog task requires an agent to hold a multi-round conversation about an image. A central challenge is capturing the semantic associations among multiple inputs, such as the question, the dialog history, and the image features. Many existing techniques model these associations with either token- or sentence-granularity semantic representations of the question and dialog history. However, they do not model the two granularities collaboratively, which limits their efficacy. To overcome this limitation, we propose a multi-granularity semantic collaborative reasoning network (MGSCRN) for visual dialog. It uses semantic representations of the question and dialog history at different granularities to collaboratively identify relevant information across the inputs through attention mechanisms. Specifically, the proposed method first collaboratively infers the question-related information in the dialog history from its representations at each granularity, and then collaboratively locates the question-related visual objects in the image using the refined question representations. Experiments on the VisDial v1.0 dataset verify the effectiveness of the proposed method. It improves the best normalized discounted cumulative gain (NDCG) score from 59.37 to 60.98 with a single model, from 60.92 to 62.25 with ensemble models, and from 63.15 to 64.13 with multitask learning.

1. Introduction

Advances at the intersection of vision and language have given rise to several vision–language tasks (e.g., image captioning [1], visual question answering (VQA) [2], referring expression comprehension [3], and visual dialog [4]) that have attracted considerable attention from the computer-vision community. In particular, visual dialog [4] requires an artificial intelligence (AI) agent to answer questions based on an image and its dialog history. The task has practical benefits for society, such as helping visually impaired users understand and enjoy electronic imagery.

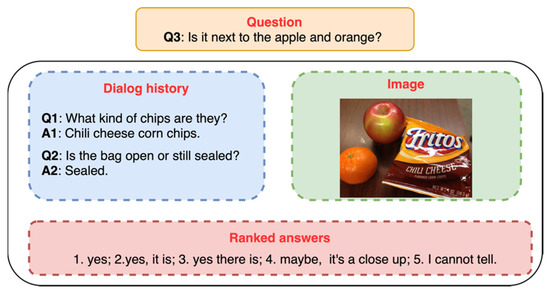

To answer a question accurately, the AI agent first browses the dialog history to identify passages related to the question. It then locates the specific visual objects that match the explicit semantic intent of the question. Figure 1 shows an example of the visual dialog task. To predict the correct answer to the current question Q3, “Is it next to the apple and orange?”, the agent traces the dialog history for clues about the meaning of “it”. Here, “it” refers to the “bag” mentioned in the Q2–A2 pair. The agent must also check whether the history mentions the “apple” and “orange”, and then ground these entities in the image. This requires the agent to comprehensively understand both the current question and the past question–answer pairs. In this example, it must correctly infer the spatial relationship between “it” and the pictured apple and orange.

Figure 1.

An example of the visual dialog task. Based on an image and the dialog history, an agent needs to answer a series of questions by ranking a list of 100 candidate answers. Only the top-5 ranked answers are shown.

To endow an AI agent with these capabilities, most methods use attention mechanisms to acquire clues from the dialog history and to select the visual objects that are relevant to the question. Dual attention networks (DAN) [5] use sentence-granularity semantic representations of the question and dialog history to compute weight distributions over all question–answer rounds, and then rely on multi-head attention to identify visual objects. ReDAN [6] and the dual-channel multi-hop reasoning model (DMRM) [7] use sentence-granularity semantic representations to iteratively capture information from the dialog history and the image over multiple reasoning steps. The lightweight transformer for many inputs (LTMI) [8] and transformer-based modular co-attention (MCA) [9] apply multi-head attention to token-granularity semantic representations to mine token-to-token and token-to-object interactions and assign weight distributions. However, these methods use only single-granularity semantic representations of the question and dialog history to model the multimodal interactions, which is intuitively insufficient for answering the question accurately. For example, when answering Q3 in Figure 1, an agent that understands only the individual tokens of the question, without grasping the meaning of the question as a whole, may infer an incorrect answer. To compensate for this deficiency, the multi-view attention network (MVAN) [10] aggregates question-relevant historical information from both token- and sentence-granularity semantic representations to facilitate visual object grounding. MVAN overcomes the single-granularity limitation, but it does not explicitly and collaboratively exploit the different granularities to locate the target visual objects.

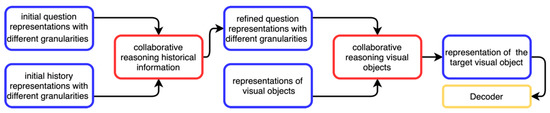

In summary, most of the approaches above rely on a single granularity, which limits their reasoning ability and ignores latent relational information among the question, the dialog history, and the image. To address this, we propose a novel multi-granularity semantic collaborative reasoning network (MGSCRN) for visual dialog. The model collaboratively extracts question-related information from the dialog history and the visual objects using multi-granularity encoding. Figure 2 shows a diagram of the proposed method. We first encode the question and dialog history into token- and sentence-granularity semantic representations. We then collaboratively identify the question-related information in the dialog history by considering the representations of the question and dialog history at both granularities, which yields refined question representations at each granularity. Next, we perform collaborative reasoning to align the visual objects accurately with these two refined question representations. Finally, the question features fused with the representation of the target visual objects are passed to the decoder to predict the answer.

Figure 2.

A diagram of the proposed MGSCRN method.

The major contributions of this work are as follows. First, MGSCRN jointly uses multi-granularity semantic representations of the current question and the dialog history to complete the visual dialog task. Collaborative reasoning about the coreference relations among the inputs exploits the heterogeneous information in the history (i.e., token- and sentence-granularity semantics), as well as the information about the various objects in the image. Second, experiments on the VisDial v1.0 dataset show results superior to those of other state-of-the-art methods. Third, a qualitative analysis shows that MGSCRN infers question-related historical and visual information more accurately than methods that rely on single-granularity semantic representations.

The remainder of this paper is organized as follows. Section 2 reviews related work. Section 3 describes the proposed MGSCRN model. Section 4 introduces the experimental setup and the evaluation metrics for the visual dialog task. Section 5 empirically evaluates the proposed model on the VisDial v1.0, VisPro, and VisDialConv datasets and analyzes the results. Section 6 concludes the paper.

2. Related Work

Visual Dialog

With advances in computing science and artificial intelligence, many researchers seek to build truly intelligent systems for complex real-life applications. Various machine learning techniques have been developed to tackle challenging real-world problems, such as unstructured data classification in social networks [11], sentiment analysis of short Chinese financial texts [12], and energy conservation in communications [13].

Recently, many works have addressed multimodal cognitive problems that involve both vision and language. Compared with most vision–language tasks, such as image captioning [1] and VQA [2], visual dialog [4], which involves multi-turn visually grounded conversations, is more engaging and challenging. Two separate research teams simultaneously published two types of visual dialog datasets. The first is the GuessWhat?! dataset collected by De Vries et al. [14], a goal-driven visual dialog in which the questioner must identify the target object by asking a series of questions, and an oracle answers with yes, no, or N/A. The second is VisDial [4], a large-scale free-form visual dialog dataset in which the questioner asks open-ended questions based on the image caption and the dialog history to better understand the visual content, and the answerer responds based on the image and the dialog history. This paper adopts the second setting.

Visual dialog has been studied from various technical perspectives. Das et al. [4] proposed three baselines alongside the VisDial dataset: the hierarchical recurrent encoder (HRE) placed a dialog-level RNN on top of a recurrent encoder and could select relevant history from previous rounds; late fusion (LF) directly concatenated the individual representations of the image, dialog history, and question and applied a linear transformation; and the memory network (MN) treated each previous question–answer pair as a fact and used a SoftMax to obtain probabilities over the stored facts.

Most later techniques utilized various attention mechanisms. At each step of its reasoning process, ReDAN [6] computed attention maps over the dialog history and the visual objects. To process visual and textual information in a parallel and adaptive way, the heterogeneous excitation-and-squeeze network (HESNet) [15] exploited multimodal attention. Recursive visual attention (RvA) [16] updated visual attention while recursively browsing the dialog history to address visual reference resolution. To use historical information in a balanced way, the reciprocal question representation learning network (RQRLN) [17] used transformer-based attention to reciprocally learn a new question representation from the interaction of two question representations, one with and one without the dialog history.

Other approaches combined neural networks with graph-structured representations to capture the semantic dependencies between cross-modal information. Aligning vision–language for graph inference (AVLGI) [18] built a visual graph to enhance visual features under the guidance of textual features, and then constructed a scene graph to incorporate external knowledge. To discover partially relevant contexts, the context-aware graph (CAG) [19] constructed a dynamic graph structure that was iteratively updated through an adaptive top-K message-passing mechanism.

Recent studies have used pretrained models with strong results. Wang et al. [20] initialized the encoder with BERT and used it to fuse the dialog history and visual content, so as to better leverage pretrained language representations. Murahari et al. [21] adapted a two-stream vision–language pretrained model (ViLBERT) to this task to improve the interactions between vision and language.

These techniques address visual dialog from several angles. However, they represent the inputs at a single semantic granularity (token or sentence), which limits their accuracy. In this study, we apply collaborative reasoning to the visual dialog task to gather more pertinent information from the semantic representations at each granularity.

3. Methodology

3.1. Problem Definition

The problem is stated as follows. We are given an image $I$, a dialog history $H = \{H_0, H_1, \ldots, H_{t-1}\}$, and the current question $Q_t$, where $H_0 = C$ is the caption describing the image and $H_r$ ($r \geq 1$) is the question–answer pair formed by concatenating question $Q_r$ and its ground-truth answer $A_r$. The goal of the dialog agent is to infer the best answer to $Q_t$ by discriminatively ranking a list of 100 answer candidates, $\mathcal{A}_t = \{A_t^1, A_t^2, \ldots, A_t^{100}\}$.

3.2. MGSCRN

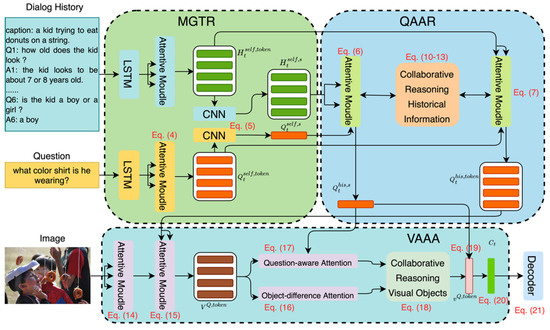

In this section, we formally describe the proposed method. Figure 3 illustrates the overall structure of MGSCRN, which consists of three main modules. First, the multi-granularity textual representation (MGTR) module obtains initial token- and sentence-granularity semantic representations using transformer self-attention and convolutional neural networks (CNNs), respectively. Second, the question-aware attention-refer (QAAR) module uses transformer-based cross-attention to relate the question and the dialog history at matching granularities, using the representations produced by the MGTR module. In this process, two distributions over the dialog history are learned: one gives the attention weights on all tokens in the dialog history, and the other gives the attention weights on all question–answer rounds. To identify the question-related historical information more accurately, each distribution is corrected by the other during training, so that if one distribution deviates from the truth, the deviation is reduced by the correction from the other. In this way, the QAAR module collaboratively infers the question-related information in the dialog history from the representations of the current question and dialog history at different granularities. Third, the visual-aware attention alignment (VAAA) module learns semantic alignments between the visual features and each of the two history-fused question representations obtained from the QAAR module, so that two distributions over the visual objects are learned simultaneously. As in the QAAR module, these two distributions collaboratively determine the question-related visual objects. The following subsections describe each module in detail.

Figure 3.

Model architecture of the multi-granularity semantic collaborative reasoning network (MGSCRN) for visual dialog task. CNN = Convolutional neural network; LSTM = Long short-term memory; MGTR = Multi-granularity textual representation; QAAR = Question-aware attention refer; VAAA = Visual-aware attention alignment.

3.2.1. MGTR

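To reason collaboratively over question and dialog history representations at different granularities, the MGTR module uses transformer-based attention [22] and a CNN to obtain the initial token- and sentence-granularity semantic representations. We first embed each token of the current question $Q_t$ with a pretrained GloVe embedding layer [23], giving $\mathbf{E}^Q = \{\mathbf{e}_1, \ldots, \mathbf{e}_L\}$, where $L$ denotes the number of tokens in $Q_t$. We then use a long short-term memory (LSTM) network to encode $\mathbf{E}^Q$ into a token sequence $\mathbf{X}^Q \in \mathbb{R}^{L \times d}$. Next, we feed $\mathbf{X}^Q$ into a standard transformer attentive module, $\mathrm{Attn}(\cdot)$, which consists of a multi-head attention layer, $\mathrm{MHA}(\cdot)$, and a position-wise fully connected feed-forward layer, $\mathrm{FFN}(\cdot)$. Taking a query matrix $\mathbf{Q}$, key matrix $\mathbf{K}$, and value matrix $\mathbf{V}$ as input, $\mathrm{MHA}$ is defined as in [22]:

$$\mathrm{MHA}(\mathbf{Q}, \mathbf{K}, \mathbf{V}) = [\mathrm{head}_1; \ldots; \mathrm{head}_h]\,\mathbf{W}^O, \qquad \mathrm{head}_i = \mathrm{softmax}\!\left(\frac{\mathbf{Q}\mathbf{W}_i^Q (\mathbf{K}\mathbf{W}_i^K)^{\top}}{\sqrt{d_h}}\right)\mathbf{V}\mathbf{W}_i^V,$$

where $\mathbf{W}_i^Q$, $\mathbf{W}_i^K$, $\mathbf{W}_i^V$, and $\mathbf{W}^O$ are learned parameter matrices, $h$ is the number of heads, and $d_h = d/h$. By setting all three inputs $\mathbf{Q}$, $\mathbf{K}$, and $\mathbf{V}$ of $\mathrm{Attn}(\cdot)$ to $\mathbf{X}^Q$ and stacking $N$ transformer blocks, we obtain the token-granularity semantic representation of the question, $\mathbf{T}^Q \in \mathbb{R}^{L \times d}$ (Equation (4)).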
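The multi-head attention formula above can be sketched in NumPy as follows. This is a minimal illustration of the standard formulation in [22], not the authors' implementation: it omits the feed-forward layer, residual connections, and layer normalization of the full $\mathrm{Attn}(\cdot)$ block, and the random weights are placeholders. The sizes (eight heads, 512 dimensions, 20 question tokens) follow Section 4.3.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_attention(Q, K, V, Wq, Wk, Wv, Wo):
    """Scaled dot-product multi-head attention.

    Q: (n_q, d); K, V: (n_k, d).
    Wq, Wk, Wv: (h, d, d_h) per-head projections; Wo: (h * d_h, d).
    Returns the output (n_q, d) and the per-head weights (h, n_q, n_k).
    """
    h, _, d_h = Wq.shape
    heads, weights = [], []
    for i in range(h):
        q, k, v = Q @ Wq[i], K @ Wk[i], V @ Wv[i]      # project into head i
        a = softmax(q @ k.T / np.sqrt(d_h), axis=-1)   # each row sums to 1
        heads.append(a @ v)
        weights.append(a)
    out = np.concatenate(heads, axis=-1) @ Wo          # concatenate heads, then mix
    return out, np.stack(weights)


# Self-attention over a question of L = 20 token vectors (Q = K = V = X).
rng = np.random.default_rng(0)
d, h, L = 512, 8, 20
d_h = d // h
X = rng.standard_normal((L, d))
Wq, Wk, Wv = (rng.standard_normal((h, d, d_h)) / np.sqrt(d) for _ in range(3))
Wo = rng.standard_normal((h * d_h, d)) / np.sqrt(d)
out, A = multi_head_attention(X, X, X, Wq, Wk, Wv, Wo)
print(out.shape, A.shape)  # (20, 512) (8, 20, 20)
```

Calling the same function with a different query than key/value (e.g., question tokens against history tokens) gives the cross-attention used later in the QAAR and VAAA modules.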

To obtain a more accurate sentence-granularity semantic representation of $Q_t$, we follow [24] and employ a CNN instead of average pooling over the token outputs of a transformer or of bidirectional encoder representations from transformers (BERT). We denote this representation as $\mathbf{s}^Q = \mathrm{CNN}(\mathbf{T}^Q) \in \mathbb{R}^{d}$ (Equation (5)).
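A CNN sentence encoder of this kind can be sketched as below. The architecture is not specified here, so this minimal sketch assumes a common text-CNN design: parallel 1-D convolutions of widths 2 to 5 over the token representations, ReLU, and max-over-time pooling, with 128 channels per width so that the concatenated output has 512 dimensions. The widths, channel counts, and random weights are illustrative assumptions, not values from the paper.

```python
import numpy as np


def conv_max_pool(T, W, b):
    """One filter bank. T: (L, d) tokens, W: (k, d, c) filters, b: (c,) -> (c,) features."""
    k = W.shape[0]
    # Valid convolution: one window of k consecutive tokens per output position.
    windows = [np.einsum("kd,kdc->c", T[i:i + k], W) + b for i in range(T.shape[0] - k + 1)]
    feats = np.maximum(np.stack(windows), 0.0)  # ReLU, shape (L - k + 1, c)
    return feats.max(axis=0)                    # max-over-time pooling


def cnn_sentence_repr(T, filter_banks):
    """Concatenate the pooled features of all filter widths into one sentence vector."""
    return np.concatenate([conv_max_pool(T, W, b) for W, b in filter_banks])


rng = np.random.default_rng(0)
L, d, c = 20, 512, 128
T_q = rng.standard_normal((L, d))  # stand-in for the token-granularity representation T^Q
banks = [(rng.standard_normal((k, d, c)) * 0.01, np.zeros(c)) for k in (2, 3, 4, 5)]
s_q = cnn_sentence_repr(T_q, banks)
print(s_q.shape)  # (512,)
```

Unlike average pooling, max-over-time pooling lets each filter respond to its most salient n-gram anywhere in the question.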

Similarly, for the dialog history $H$, we first concatenate the image caption and the question–answer pairs and embed them with the same embedding layer as the question. Next, we use another LSTM and $\mathrm{Attn}(\cdot)$ to obtain the token-granularity semantic representation of the dialog history, $\mathbf{T}^H \in \mathbb{R}^{tM \times d}$, where $M$ denotes the number of tokens in each round of the dialog history and $t$ is the number of rounds. Likewise, the sentence-granularity semantic representation of the dialog history, $\mathbf{S}^H \in \mathbb{R}^{t \times d}$ (one vector per round), is obtained using Equation (5).

3.2.2. QAAR

Unlike most existing works [4,6,17,19], which rely on single-granularity representations to relate the question and the dialog history, QAAR uses multi-granularity representations to collaboratively capture relevant historical information. The question attends to the dialog history at both the sentence and the token granularity to fetch the history features relevant to it. Two attention-weight distributions over the dialog history are computed simultaneously, one over all tokens and one over all rounds, and the two distributions then correct each other to align the features of the question and the dialog history accurately. Formally, the QAAR module applies $\mathrm{Attn}(\cdot)$ as cross-attention to learn the correlated history features at each granularity:

$$\mathbf{q}^s = \mathrm{Attn}(\mathbf{s}^Q, \mathbf{S}^H, \mathbf{S}^H), \tag{6}$$

$$\mathbf{Q}^w = \mathrm{Attn}(\mathbf{T}^Q, \mathbf{T}^H, \mathbf{T}^H), \tag{7}$$

where $\mathbf{q}^s \in \mathbb{R}^{d}$ and $\mathbf{Q}^w \in \mathbb{R}^{L \times d}$ represent the question fused with sentence- and token-granularity history information, respectively. When these representations are learned, the SoftMax function inside $\mathrm{Attn}(\cdot)$ in Equations (6) and (7) produces two distributions over the dialog history. We extract them and denote them as $\boldsymbol{\alpha} \in \mathbb{R}^{t}$ and $\mathbf{B} \in \mathbb{R}^{L \times tM}$, respectively (Equations (8) and (9)). Here, $\boldsymbol{\alpha}$ gives the attention weights on the $t$ rounds of dialog history, and the $i$-th row of $\mathbf{B}$, denoted $\mathbf{b}_i$, gives the attention weights on all history tokens that may depend on the $i$-th token of the question.
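The two distributions can be illustrated with a single-head, projection-free simplification of the cross-attention in Equations (6) and (7). This is a hypothetical sketch: the actual module uses multi-head attention with learned projections, and the sizes (t = 10 rounds, M = 40 tokens per round, L = 20 question tokens) are chosen from the dataset and training settings only for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def history_distributions(s_q, S_h, T_q, T_h):
    """Single-head sketch of the two QAAR attention distributions.

    s_q: (d,) sentence-level question;  S_h: (t, d) one vector per round.
    T_q: (L, d) question tokens;        T_h: (t * M, d) all history tokens.
    Returns alpha (t,) over rounds and B (L, t * M) over history tokens.
    """
    d = s_q.shape[-1]
    alpha = softmax(S_h @ s_q / np.sqrt(d))          # weights on the t rounds
    B = softmax(T_q @ T_h.T / np.sqrt(d), axis=-1)   # row i: weights of question token i
    return alpha, B


rng = np.random.default_rng(1)
t, M, L, d = 10, 40, 20, 512
alpha, B = history_distributions(
    rng.standard_normal(d), rng.standard_normal((t, d)),
    rng.standard_normal((L, d)), rng.standard_normal((t * M, d)),
)
print(alpha.shape, B.shape)  # (10,) (20, 400)
```

Both outputs are proper distributions: `alpha` sums to one over rounds, and each row of `B` sums to one over the history tokens.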

To better identify the question-related tokens and sentences in the dialog history, we dynamically update $\boldsymbol{\alpha}$ and $\mathbf{B}$ under their mutual guidance during training. To make the round-level distribution $\boldsymbol{\alpha}$ more accurate, we first sum, for each row $\mathbf{b}_i$ of $\mathbf{B}$, the weights of the tokens that belong to each round of the dialog history:

$$g_{i,r} = \sum_{j \in \mathcal{R}_r} B_{i,j}, \tag{10}$$

where $\mathcal{R}_r$ is the set of token positions in round $r$, and $\mathbf{g}_i = [g_{i,0}, \ldots, g_{i,t-1}]$ represents the contributions of the $t$ rounds of dialog history to the $i$-th token of the question. Thus, $\mathbf{G} = [\mathbf{g}_1; \ldots; \mathbf{g}_L] \in \mathbb{R}^{L \times t}$ indicates the contributions of the rounds to every question token. We then sum each column of $\mathbf{G}$ and normalize the result:

$$\tilde{\boldsymbol{\alpha}} = \mathrm{norm}\Big(\sum_{i=1}^{L} \mathbf{g}_i\Big), \tag{11}$$

where $\tilde{\boldsymbol{\alpha}}$ represents the contributions of the $t$ rounds of dialog history to the whole question, aggregated from token-granularity semantic information. Next, we modify $\boldsymbol{\alpha}$ with the help of $\tilde{\boldsymbol{\alpha}}$:

$$\hat{\boldsymbol{\alpha}} = \boldsymbol{\alpha} \odot \tilde{\boldsymbol{\alpha}}, \tag{12}$$

where $\odot$ denotes the elementwise product. To help assign the attention weights over all dialog history tokens accurately, we also modify $\mathbf{B}$ under the constraint of $\boldsymbol{\alpha}$:

$$\hat{\mathbf{b}}_{i,r} = \alpha_r \cdot \mathbf{b}_{i,r}, \tag{13}$$

where $\cdot$ denotes scalar–vector multiplication, and $\mathbf{b}_{i,r}$ is the subset of the $i$-th row of $\mathbf{B}$ that contains the attention weights assigned by the $i$-th question token to all tokens in the $r$-th round of the dialog history.
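Equations (10) to (13) can be sketched in NumPy as follows. The sketch assumes that the history tokens are stored round by round (round $r$ occupies columns $rM$ to $(r+1)M-1$ of $\mathbf{B}$), that the normalization in Equation (11) divides by the sum, and that Equation (13) scales by the uncorrected $\alpha_r$. These are assumptions for illustration; the text does not say whether $\hat{\boldsymbol{\alpha}}$ and $\hat{\mathbf{B}}$ are renormalized afterwards, so the sketch leaves them as computed.

```python
import numpy as np


def collaborative_correction(alpha, B, t, M):
    """Mutually correct round-level (alpha) and token-level (B) history attention.

    alpha: (t,) weights on the t dialog rounds.
    B: (L, t * M) weights of each question token on all history tokens,
       stored round by round (M tokens per round).
    Returns (alpha_hat, B_hat).
    """
    L = B.shape[0]
    B_rounds = B.reshape(L, t, M)        # B_rounds[i, r] is b_{i,r}
    G = B_rounds.sum(axis=-1)            # Eq. (10): g_{i,r}, shape (L, t)
    col = G.sum(axis=0)                  # Eq. (11): column sums of G ...
    alpha_tilde = col / col.sum()        # ... normalized to sum to 1 (assumed)
    alpha_hat = alpha * alpha_tilde      # Eq. (12): elementwise product
    # Eq. (13): scale every b_{i,r} by the scalar alpha_r.
    B_hat = (B_rounds * alpha[None, :, None]).reshape(L, t * M)
    return alpha_hat, B_hat


# Toy example: one question token, two rounds with two tokens each.
alpha = np.array([0.5, 0.5])
B = np.array([[0.1, 0.2, 0.3, 0.4]])
alpha_hat, B_hat = collaborative_correction(alpha, B, t=2, M=2)
print(alpha_hat)  # approximately [0.15 0.35]
print(B_hat)      # approximately [[0.05 0.1 0.15 0.2]]
```

In the toy example, the token-level view assigns 0.7 of its mass to the second round, so the product in Equation (12) shifts the round-level weights toward that round even though $\boldsymbol{\alpha}$ alone was uniform.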

Finally, during collaborative reasoning, $\boldsymbol{\alpha}$ and $\mathbf{B}$ are replaced with $\hat{\boldsymbol{\alpha}}$ and $\hat{\mathbf{B}}$, respectively, so that information is exchanged between the two granularities through Equations (12) and (13). After stacking $N$ transformer blocks, as in the MGTR module, we obtain two refined question representations, $\hat{\mathbf{q}}^s$ and $\hat{\mathbf{Q}}^w$, which leverage the multi-granularity representations of the question and dialog history to collaboratively select question-aware historical information.

3.2.3. VAAA

Given the output representations of the QAAR module, $\hat{\mathbf{q}}^s$ and $\hat{\mathbf{Q}}^w$, the VAAA module collaboratively aligns them with the visual features. The initial visual features are extracted with Faster R-CNN [25] pretrained on Visual Genome [26] and denoted as $\mathbf{O} \in \mathbb{R}^{K \times d_o}$, where $K$ is the number of visual objects in image $I$ and $d_o$ is the dimension of each object feature. A linear projection maps the object features to the token dimension $d$, giving the visual features $\mathbf{F}_0 \in \mathbb{R}^{K \times d}$. We first establish latent connections among the visual objects in image $I$ via self-attention, $\mathbf{F} = \mathrm{Attn}(\mathbf{F}_0, \mathbf{F}_0, \mathbf{F}_0)$.

We then apply a visual-aware attention mechanism to align the visual objects with the visually related tokens of the question. The visual representation $\mathbf{F}$ queries the token-granularity question representation $\hat{\mathbf{Q}}^w$ to obtain a new visual representation, $\mathbf{F}^w$, that fuses the attended token-granularity question features. We compute $\mathbf{F}^w$ with cross-attention: $\mathbf{F}^w = \mathrm{Attn}(\mathbf{F}, \hat{\mathbf{Q}}^w, \hat{\mathbf{Q}}^w)$.

However, $\mathbf{F}^w$ is obtained by attending to the most relevant question-token features, so it does not directly give the target visual features needed to answer $Q_t$. We therefore use an object-difference attention mechanism to locate the question-relevant visual objects in the image (Equation (16)). In this mechanism, $\mathbf{D}$ is the difference between the $\mathbf{F}$ and $\mathbf{F}^w$ representations under the guidance of the question, and $\mathbf{W}_g$ is a learned parameter matrix with $n_g$ glimpses. The SoftMax function transforms the glimpse results into attention weights over all visual objects, giving the final distribution $\boldsymbol{\beta}^w \in \mathbb{R}^{K}$ over the visual objects.

Because $\boldsymbol{\beta}^w$ is obtained by finding clues in the question tokens and comparing differences between visual object representations, it depends on the token-granularity representation of the question and may identify the relevant visual objects inaccurately. Therefore, we also align the sentence-granularity question representation $\hat{\mathbf{q}}^s$ with $\mathbf{F}$ through question-aware attention (Equation (17)), which yields a second distribution $\boldsymbol{\beta}^s \in \mathbb{R}^{K}$ over the visual objects.

The distributions $\boldsymbol{\beta}^w$ and $\boldsymbol{\beta}^s$ jointly determine the final distribution $\boldsymbol{\beta}$ over the visual objects (Equation (18)).

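Based on $\boldsymbol{\beta}$, the weighted visual object representation is $\tilde{\mathbf{f}} = \sum_{k=1}^{K} \beta_k \mathbf{f}_k$, where $\mathbf{f}_k$ is the representation of the $k$-th visual object.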
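The VAAA scoring steps can be illustrated with the hypothetical sketch below. The exact forms of Equations (16) to (18) are not given in the text, so the sketch makes explicit assumptions: the difference features are simply $\mathbf{F} - \mathbf{F}^w$ (the question guidance is omitted), the $n_g$ glimpse distributions are averaged, the question-aware attention is a scaled dot product with $\hat{\mathbf{q}}^s$, and the two distributions are combined by an elementwise product followed by renormalization, mirroring Equation (12). The value K = 36 objects is also only illustrative.

```python
import numpy as np


def softmax(x, axis=-1):
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def vaaa_sketch(F, F_w, q_s, W_g):
    """Hypothetical VAAA-style object scoring.

    F: (K, d) object features; F_w: (K, d) token-attended object features;
    q_s: (d,) sentence-level question vector; W_g: (d, n_g) glimpse weights.
    """
    D = F - F_w                                       # object-difference features (assumed form)
    beta_w = softmax(D @ W_g, axis=0).mean(axis=1)    # per-glimpse softmax over objects, averaged
    beta_s = softmax(F @ q_s / np.sqrt(F.shape[1]))   # question-aware attention (assumed dot product)
    beta = beta_w * beta_s
    beta = beta / beta.sum()                          # combined distribution (assumed)
    f_tilde = beta @ F                                # weighted visual object representation
    return beta_w, beta_s, beta, f_tilde


rng = np.random.default_rng(2)
K, d, n_g = 36, 512, 2
F, F_w = rng.standard_normal((K, d)), rng.standard_normal((K, d))
q_s = rng.standard_normal(d)
W_g = rng.standard_normal((d, n_g)) * 0.01
beta_w, beta_s, beta, f_tilde = vaaa_sketch(F, F_w, q_s, W_g)
print(beta.shape, f_tilde.shape)  # (36,) (512,)
```

The product-and-renormalize combination keeps only objects that both views rate highly; other combination rules (e.g., a weighted sum) would fit the description in the text equally well.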

Finally, we fuse the filtered visual feature $\tilde{\mathbf{f}}$ with $\hat{\mathbf{q}}^s$, which expresses the complete semantic intent of the question, into the final multimodal representation by concatenation, $[\tilde{\mathbf{f}}; \hat{\mathbf{q}}^s]$. The resulting context representation $\mathbf{e}$ is then fed to the discriminative decoder to predict the answer to the current question $Q_t$.

3.3. Discriminative Decoder

We encode the candidate answers in the same way as the questions and the dialog history, and use the last hidden states of the LSTMs as the candidate answer representations $\{\mathbf{a}_1, \ldots, \mathbf{a}_{100}\}$. The discriminative decoder ranks the candidates by the dot products between each $\mathbf{a}_i$ and the context representation $\mathbf{e}$, and a SoftMax function turns these scores into a probability distribution over the candidates:

$$\mathbf{p} = \mathrm{softmax}\big([\mathbf{a}_1^{\top}\mathbf{e}, \ldots, \mathbf{a}_{100}^{\top}\mathbf{e}]\big).$$

The multi-class cross-entropy loss is used as the discriminative objective:

$$\mathcal{L}_{D} = -\sum_{i=1}^{100} y_i \log p_i,$$

where $\mathbf{y}$ is the one-hot label vector of the ground-truth answer.
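The scoring and loss above amount to a few lines of NumPy. This minimal sketch uses random placeholder vectors for the 100 candidate representations and the context vector, and computes the SoftMax in log space for numerical stability, which is an implementation choice rather than something the paper specifies.

```python
import numpy as np


def log_softmax(z):
    # Shift by the maximum so that exp() cannot overflow.
    z = z - z.max()
    return z - np.log(np.exp(z).sum())


def discriminative_decoder(A, e):
    """A: (100, d) candidate answers, e: (d,) context -> (probabilities, logits)."""
    logits = A @ e                      # one dot-product score per candidate
    return np.exp(log_softmax(logits)), logits


def cross_entropy(logits, gt_index):
    """Multi-class cross-entropy with a one-hot label y: -sum_i y_i log p_i."""
    y = np.zeros_like(logits)
    y[gt_index] = 1.0
    return -np.sum(y * log_softmax(logits))


rng = np.random.default_rng(3)
A = rng.standard_normal((100, 512)) * 0.05
e = rng.standard_normal(512)
p, logits = discriminative_decoder(A, e)
loss = cross_entropy(logits, gt_index=7)
ranking = np.argsort(-p)                # candidate indices, best first
```

With a one-hot label, the loss reduces to the negative log-probability of the ground-truth candidate.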

4. Experimental Setup and Metrics

4.1. Datasets

We conducted experiments on the VisDial v1.0 dataset [4] to evaluate the proposed model. The train, validation, and test splits contain 1.23M, 20K, and 44K dialog rounds, respectively. In the train and validation splits, each image is paired with a 10-round dialog, whereas in the test split, each image is accompanied by a random number of question–answer rounds followed by the current question. The train split includes 123K images from the MS-COCO dataset [27], and the validation and test splits include 2K and 8K images from Flickr, respectively. Each question is paired with a list of 100 candidate answers, one of which is the ground truth.

4.2. Evaluation Metrics

Following Das et al. [4], we evaluate our model with several retrieval metrics. Specifically, recall@k (R@k) measures the proportion of questions whose ground-truth answer appears among the top-k ranked responses. The mean rank (Mean) is the average rank of the ground-truth answer:

$$\mathrm{Mean} = \frac{1}{|\mathcal{Q}|}\sum_{q \in \mathcal{Q}} \mathrm{rank}_q,$$

where $\mathcal{Q}$ is the set of all questions and $\mathrm{rank}_q$ is the rank of the ground-truth answer for question $q$.

The mean reciprocal rank (MRR) is the average reciprocal rank of the ground-truth answer:

$$\mathrm{MRR} = \frac{1}{|\mathcal{Q}|}\sum_{q \in \mathcal{Q}} \frac{1}{\mathrm{rank}_q}.$$
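Given the 1-based rank of the ground-truth answer for each question, R@k, Mean, and MRR can be computed as in the sketch below. It reports R@k and MRR as percentages, matching the scale of the results reported in Section 5, and uses the usual VisDial cut-offs k ∈ {1, 5, 10}; the input ranks are made up for illustration.

```python
def retrieval_metrics(ranks, ks=(1, 5, 10)):
    """ranks: 1-based rank of the ground-truth answer for each question."""
    n = len(ranks)
    metrics = {f"R@{k}": 100.0 * sum(r <= k for r in ranks) / n for k in ks}
    metrics["Mean"] = sum(ranks) / n                          # mean rank (lower is better)
    metrics["MRR"] = 100.0 * sum(1.0 / r for r in ranks) / n  # mean reciprocal rank
    return metrics


m = retrieval_metrics([1, 2, 4, 10])
print(m)
# R@1 = 25.0, R@5 = 75.0, R@10 = 100.0, Mean = 4.25, MRR = 46.25 (up to rounding)
```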

The normalized discounted cumulative gain (NDCG) penalizes rankings that place highly relevant answers at low positions. Note that NDCG accepts multiple comparable answers as correct, whereas the other metrics consider only the rank of the single ground-truth answer. NDCG is defined as:

$$\mathrm{NDCG@}K = \frac{\mathrm{DCG@}K}{\mathrm{IDCG@}K}, \qquad \mathrm{DCG@}K = \sum_{i=1}^{K} \frac{\mathrm{rel}_i}{\log_2(i+1)},$$

where DCG@K is computed over the submitted ranking, IDCG@K is the DCG@K of the ideal ranking, $K$ is the number of candidate answers with a relevance score greater than 0, and $\mathrm{rel}_i$ is the relevance score of the candidate answer at rank $i$.
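A per-question NDCG computation that follows this definition is sketched below. The relevance values are invented for illustration, and it is assumed here that the dataset-level score is the mean of the per-question values.

```python
import math


def ndcg(ranked_rel, all_rel):
    """NDCG@K for one question.

    ranked_rel: relevance scores of the candidates in the submitted order (rank 1 first).
    all_rel: relevance scores of all candidates, in any order.
    K is the number of candidates with relevance > 0.
    """
    K = sum(1 for r in all_rel if r > 0)
    if K == 0:
        return 0.0

    def dcg(rels):
        # Rank i (1-based) is discounted by log2(i + 1); enumerate starts at 0.
        return sum(rel / math.log2(i + 2) for i, rel in enumerate(rels[:K]))

    ideal = sorted(all_rel, reverse=True)
    return dcg(ranked_rel) / dcg(ideal)


# Four candidates with relevance 1.0, 0.5, 0, 0; the submission swaps the top two.
print(round(ndcg([0.5, 1.0, 0.0, 0.0], [1.0, 0.5, 0.0, 0.0]), 4))  # 0.8597
print(ndcg([1.0, 0.5, 0.0, 0.0], [1.0, 0.5, 0.0, 0.0]))            # 1.0
```

Swapping the two relevant answers costs about 14% of the ideal score, whereas R@k and MRR would only register whether the single ground-truth answer moved.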

4.3. Training Details

We implemented our model in PyTorch based on the open-source code of Das et al. [4]. The vocabulary consists of the words that appear at least five times in the training split, and these words are initialized with GloVe word vectors. Questions and dialog history were truncated or padded to 20 and 40 tokens, respectively. All LSTMs have a single layer with 512-dimensional hidden units. For the transformer blocks, we set the number of heads to eight and the hidden size to 512, and stacked six transformer blocks. The model was trained on a single Titan RTX GPU using the Adam optimizer with a batch size of 16. The learning rate was warmed up during the first epoch and then decreased by 0.2 at epochs 8 and 10.

5. Experimental Results and Analysis

5.1. Comparison with State-of-the-Art Methods

5.1.1. Results on Test-Standard v1.0 Split

Under the discriminative decoder setting on VisDial v1.0, we first compared our model with previously published methods: LF [4], HRE [4], MN [4], the co-reference neural module network (CorefNMN) [28], the graph neural network (GNN) [29], factor graph attention (FGA) [30], the dual visual attention network (DVAN) [31], RvA [16], dual encoding visual dialog (DualVD) [32], history-aware co-attention (HACAN) [33], the Synergistic network [34], DAN [5], the textual-visual reference-aware attention network (RAA-Net) [35], HESNet [15], CAG [19], LTMI-LG [36], VD-BERT [20], and MVAN [10].

As shown in Table 1, MGSCRN outperformed the other methods on the NDCG metric. Specifically, compared with VD-BERT, our model improved NDCG from 59.96 to 60.98 (+1.02) while achieving competitive results on most other metrics. Note that VD-BERT uses a pretrained BERT language model and unifies discriminative and generative training to improve performance. MGSCRN also outperformed MVAN on several metrics (e.g., NDCG from 59.37 to 60.98 (+1.61) and MRR from 64.84 to 65.40 (+0.56)).

Table 1.

Performance comparison on the test-standard split of VisDial v1.0 under the discriminative decoder setting. “↑” indicates that higher is better and “↓” indicates that lower is better. The best results are shown in bold.

To compare MGSCRN with more sophisticated approaches, Table 2 reports the performance of our ensemble model on the blind test-standard split of VisDial v1.0. For comparison, we chose the Synergistic [34], DAN [5], consensus dropout fusion (CDF) [37], and MVAN [10] models, because their ensemble results are available in the literature. For our ensemble, we trained six independent models with different random seeds and averaged the outputs of their discriminative decoders. Overall, our ensemble model outperformed all the others (e.g., 62.25 NDCG versus 60.92 for MVAN, and 67.42 MRR versus 66.38 for MVAN). This further validates the effectiveness of MGSCRN: it not only ranks the ground-truth answer highly (as indicated by MRR) but also ranks other plausible answers highly (as indicated by NDCG).

Table 2.

Performance comparison of ensemble models on the test-standard split of VisDial v1.0. “↑” indicates that higher is better and “↓” indicates that lower is better. The best results are shown in bold.

To further improve performance, we added multitask learning that combines the discriminative and generative decoders during training, and compared our model against methods that also use multitask learning (Table 3). Following the original visual dialog setting [4], the generative decoder predicts each token of the answer conditioned on the preceding ground-truth tokens and ranks the candidates by their log-likelihood scores. For evaluation, we averaged the ranking outputs of the discriminative and generative decoders. As shown in Table 3, our model was effective in the multitask setting and achieved the best results on all metrics. Note that ReDAN [6] and MVAN [10] use the same evaluation strategy as ours, whereas LTMI [8] uses only the discriminative decoder output for evaluation.
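One way to average the ranking outputs of the two decoders is sketched below. It assumes that averaging means averaging each candidate's rank position and then re-ranking by the average, with ties broken by candidate index. This is an illustrative assumption; averaging normalized scores instead would be an equally plausible reading.

```python
import numpy as np


def ranks_from_scores(scores):
    """Return the 1-based rank of each candidate (higher score -> better rank)."""
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    ranks = np.empty(len(order), dtype=int)
    ranks[order] = np.arange(1, len(order) + 1)
    return ranks


def average_rankings(disc_scores, gen_loglik):
    """Average the rank positions from both decoders, then re-rank."""
    avg_rank = (ranks_from_scores(disc_scores) + ranks_from_scores(gen_loglik)) / 2.0
    return ranks_from_scores(-avg_rank)   # smaller average rank -> better final rank


disc = [0.9, 0.5, 0.1]        # discriminative probabilities for 3 candidates
gen = [-1.0, -0.5, -3.0]      # generative log-likelihoods for the same candidates
final = average_rankings(disc, gen)
print(final)  # [1 2 3]
```

Averaging ranks rather than raw scores sidesteps the scale mismatch between probabilities and log-likelihoods.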

Table 3.

Performance comparison of methods using multitask learning on the test-standard split of VisDial v1.0. “↑” indicates that higher is better and “↓” indicates that lower is better. The best results are shown in bold.

5.1.2. Results on VisPro and VisDialConv Datasets

To examine our model further, we report its performance on the VisPro and VisDialConv datasets [9], in which humans needed to consult the dialog history to answer the current question. We compared our results with those of MCA-I-HGuidedQ, MCA-I-VGH, and MCA-I-H from Agarwal et al. [9], who incorporated the dialog history in different ways, on both datasets. Table 4 shows that our model yields better results on all metrics, which suggests that collaborative interaction among semantic information at different granularities gives it strong generalization and prediction abilities.

Table 4.

Results on the VisPro and VisDialConv datasets. “↑” indicates that higher is better and “↓” indicates that lower is better. The best results are shown in bold.

In contrast, the MCA methods above model the interactions between the question and the dialog history only at the token granularity, which may explain their lower scores on most metrics.

5.2. Ablation Study

To evaluate the influence of the key components of our model, we performed an ablation study on the VisDial v1.0 validation split, using the same discriminative decoder for all variants:

- Sentence-granularity semantic representations (SgSR): This model uses only sentence-granularity semantic representations of the question and dialog history to acquire clues via the attentive module and CNN (Equations (4)–(6)). It then locates the question-related visual objects among the visual features using question-aware attention (Equation (17));

- Token-granularity semantic representations (TgSR): This model uses only token-granularity semantic representations of the question and dialog history to acquire clues via the attentive module (Equations (4) and (7)). It then locates the question-related visual objects among the visual features: first, the object-difference attention mechanism (Equation (16)) produces a distribution over all visual objects; second, the final question-relevant visual representation is computed from this distribution. The question features fused with this visual representation are fed to the discriminative decoder to predict answers;

- TgSR without object-difference attention (TgSR w/o OdA): This model applies self-attention instead of object-difference attention to the visual features to locate question-related visual objects. All other settings match those of TgSR;

- SgSR+TgSR: This model combines SgSR and TgSR. Specifically, in the discriminative decoder, the two probability distributions over the answer candidates from SgSR and TgSR are normalized and added to produce the final distribution. It does not include the collaborative reasoning on historical information (CRHI) or collaborative reasoning on visual objects (CRVO) operations;

- MGSCRN: This is our full model, which combined SgSR and TgSR with the CRHI and CRVO operations.
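As a minimal sketch of the late fusion used in the SgSR+TgSR variant, the Python code below assumes that "normalization" means a softmax over each model's raw candidate scores; the function names and toy scores are hypothetical and not taken from the authors' implementation.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one model's raw candidate scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_answer_distributions(sgsr_scores: Sequence[float],
                              tgsr_scores: Sequence[float]) -> List[float]:
    """Normalize each model's scores, then add the two distributions element-wise.

    Assumption: normalization is a softmax. The fused values lie in [0, 2];
    dividing by 2 would give a proper distribution but not change the ranking.
    """
    if len(sgsr_scores) != len(tgsr_scores):
        raise ValueError("both models must score the same candidate list")
    p_sg = softmax(sgsr_scores)
    p_tg = softmax(tgsr_scores)
    return [a + b for a, b in zip(p_sg, p_tg)]


def rank_candidates(fused: Sequence[float]) -> List[int]:
    """Candidate indices sorted from most to least likely."""
    return sorted(range(len(fused)), key=lambda i: fused[i], reverse=True)
```

For example, with toy scores `[2.0, 1.0, 0.1]` (SgSR) and `[0.5, 2.5, 0.0]` (TgSR), SgSR alone would rank candidate 0 first, whereas the fused distribution ranks candidate 1 first, illustrating how the two granularities can jointly change the decision.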

Both SgSR and TgSR consider only single-granularity semantic representations of the question and dialog history when reasoning about the relational information among the question, dialog history, and visual objects. As shown in Table 5, both achieved lower performance on all of the evaluation metrics than SgSR+TgSR, which illustrates the effectiveness of joint decision-making that uses multi-granularity semantic information. With the introduction of the CRHI and CRVO operations, MGSCRN bridged the multi-granularity semantic information to collaboratively reason about the important information in the dialog history and visual objects, and it performed best on all of the metrics. This indicates that the collaborative interaction strategy with multi-granularity semantic information provides more accurate clues. TgSR also outperformed TgSR w/o OdA, which demonstrates the advantage of explicitly comparing different representations of the same visual object to identify the objects most related to the question.

Table 5.

Ablation study of MGSCRN using the VisDial v1.0 validation split. “↑” indicates that a higher value is better, and “↓” indicates that a lower value is better. The best results are shown in bold.

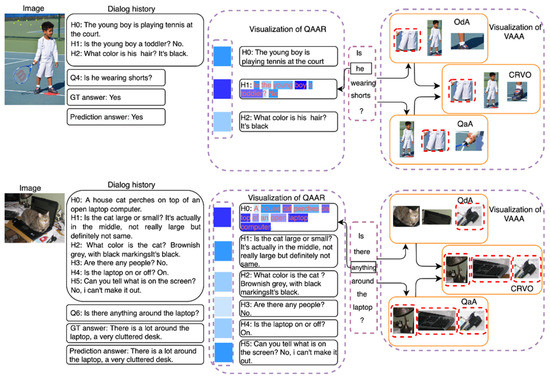

5.3. Qualitative Analysis

To further demonstrate the interpretability of our model, we visualized the attention weights generated by the QAAR and VAAA modules for the examples illustrated in Figure 4. For the QAAR module, two types of attention weights were shown. The first was the attention weights over the rounds of dialog history (as in Equation (12)), which reveal the relevance of each dialog history round. The second was the attention weights over all tokens in the selected round of dialog history, which reveal the relevance of each of these tokens to a given token in the current question. For both types of attention, we took the average attention weight over all heads computed by the topmost stacked transformer block. For the VAAA module, we visualized the object-difference attention (Equation (16)), the question-aware attention (Equation (17)), and the collaborative reasoning attention (Equation (18)) computed by CRVO. For each attention, the top three ranked visual objects are displayed in the rightmost column of Figure 4.
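The head averaging and top-three selection described above can be sketched as follows. This is an illustration under assumptions: the per-head weights are assumed to have already been extracted from the topmost transformer block as a (heads × items) nested list, and the object labels are hypothetical placeholders.

```python
from typing import List, Sequence, Tuple


def average_over_heads(head_weights: Sequence[Sequence[float]]) -> List[float]:
    """Average a (num_heads x num_items) attention matrix over its heads."""
    num_heads = len(head_weights)
    if num_heads == 0:
        raise ValueError("need at least one attention head")
    num_items = len(head_weights[0])
    return [sum(head[j] for head in head_weights) / num_heads
            for j in range(num_items)]


def top_k_items(weights: Sequence[float], labels: Sequence[str],
                k: int = 3) -> List[Tuple[str, float]]:
    """Return the k highest-weighted items as (label, weight) pairs, best first."""
    order = sorted(range(len(weights)), key=lambda j: weights[j], reverse=True)
    return [(labels[j], weights[j]) for j in order[:k]]
```

With two toy heads `[[0.1, 0.5, 0.2, 0.2], [0.3, 0.3, 0.1, 0.3]]`, the averaged weights are approximately `[0.2, 0.4, 0.15, 0.25]`, so the top three items are `obj1`, `obj3`, and `obj0` (for labels `obj0` to `obj3`).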

Figure 4.

Example results on the VisDial v1.0 validation set. In the question-aware attention refer (QAAR) module, two types of attention weights are displayed: one over the rounds of dialog history, and the other over all tokens in the selected round of dialog history, which may depend on a given token in the question. A darker blue square indicates a higher attention weight, and a lighter blue square indicates a lower one. In the visual-aware attention alignment (VAAA) module, three types of attention are visualized: object-difference attention (OdA), question-aware attention (QaA), and collaborative reasoning visual object (CRVO) attention, with the top three visual objects displayed for each. Visual objects in the red dotted box are the target objects for answering the question.

Two QAAR visualization examples are presented, in which our model accurately captured question-aware historical information at the sentence and token granularities. In the top example, to determine what “he” refers to when answering the current question, “Is he wearing shorts?”, the model assigned the maximum weight to the “H1” round of dialog history (i.e., “Is the young boy a toddler? No.”). Visualizing the weight of each token in “H1” with respect to the token “he” in the question further shows that the token “boy” received a relatively large weight. This observation indicates that our model accurately obtains clues from the dialog history, thus clarifying the semantics of the question.

In the visualization of the VAAA module, we observed that the question-related visual objects were located more accurately, benefiting from collaborative reasoning over the distribution of all of the visual objects using multi-granularity semantic representations of the question. For instance, when answering “Is there anything around the laptop?”, the object-difference attention of the VAAA module grounded “cat”, “screen”, and “pens” as the top three target objects. However, “cat” and “screen” should not have been selected given the complete semantics of the question. In contrast, VAAA’s question-aware attention located all of the visual objects around the laptop, including “table lamp”, “keyboard”, and “pens”. Although OdA did not produce an accurate distribution over the visual objects, CRVO, which combines OdA and QaA, produced a suitable distribution that still ranked “table lamp”, “keyboard”, and “pens” as the top three target objects. A similar phenomenon can be observed in the top example.
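The combination step is defined by Equation (18), which is not reproduced in this section. Purely as a hypothetical illustration of how combining two attention distributions can correct a misranking by one of them, the sketch below mixes OdA and QaA with a convex weight `lam` and renormalizes. This mixing rule, the parameter `lam`, and the toy weights are assumptions, not the CRVO formula.

```python
from typing import List, Sequence


def mix_attention(p_oda: Sequence[float], p_qaa: Sequence[float],
                  lam: float = 0.5) -> List[float]:
    """Hypothetical combination: convex mixture of two distributions, renormalized."""
    if len(p_oda) != len(p_qaa):
        raise ValueError("both distributions must cover the same objects")
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    mixed = [lam * a + (1.0 - lam) * b for a, b in zip(p_oda, p_qaa)]
    total = sum(mixed)
    return [m / total for m in mixed]


def top3(weights: Sequence[float], labels: Sequence[str]) -> List[str]:
    """Labels of the three highest-weighted objects, best first."""
    order = sorted(range(len(weights)), key=lambda j: weights[j], reverse=True)
    return [labels[j] for j in order[:3]]
```

In a toy case with labels `obj_a` to `obj_e`, OdA weights `[0.35, 0.25, 0.12, 0.08, 0.20]`, and QaA weights `[0.00, 0.02, 0.40, 0.28, 0.30]`, OdA alone ranks `obj_a`, `obj_b`, `obj_e` highest, while the equal-weight mixture ranks `obj_c`, `obj_e`, `obj_d` highest.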

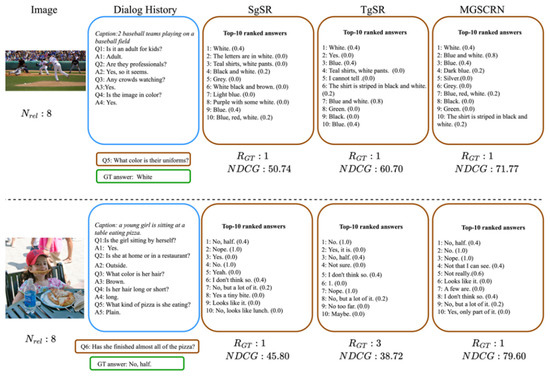

To verify the effectiveness of the proposed model compared with the single-granularity models, Figure 5 shows two example results for three models: SgSR, TgSR, and MGSCRN. As observed in Figure 5, the top 10 ranking lists of the MGSCRN model contained more relevant answers than those of the SgSR model. The SgSR model had four and five relevant answers in the two cases, respectively, whereas our approach increased these numbers to six and seven, respectively, which resulted in higher NDCG scores. Note that our model also outperformed the TgSR model in terms of NDCG. Additionally, in both cases, the ground-truth (GT) answer was ranked first by our proposed model. This analysis suggests that, compared with the single-granularity semantic representation models (SgSR and TgSR), our model performs better on the visual dialog task because it collaboratively reasons over two different granularities of semantic representation.

Figure 5.

Example results from the validation set of VisDial v1.0 using the SgSR, TgSR, and MGSCRN models. The numbers in brackets next to the top 10 ranked answers indicate the degree of relevance of each candidate answer option to the question (out of 100). The rank of the GT answer, the NDCG score of the ranking of all relevant answers for the question, and the number of candidate answer options with non-zero relevance are also reported.

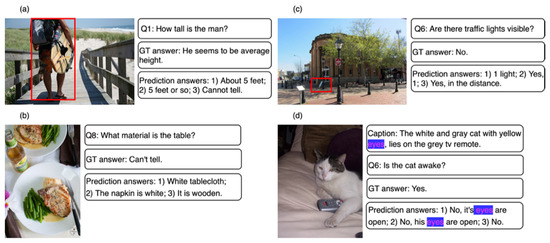
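For reference, the NDCG variant used on VisDial v1.0 truncates the ranking at K, the number of candidate answers with non-zero relevance, and uses the relevance score as the gain with a 1/log2(i + 1) discount at position i. A minimal Python sketch of that definition follows; the function names are illustrative. Relevance values may be on any scale (e.g., 0 to 1, or out of 100), because NDCG is a ratio and is unaffected by rescaling.

```python
import math
from typing import Sequence


def dcg(gains: Sequence[float]) -> float:
    """Discounted cumulative gain: position i (1-based) is discounted by log2(i + 1)."""
    return sum(g / math.log2(i + 2) for i, g in enumerate(gains))


def visdial_ndcg(ranking: Sequence[int], relevance: Sequence[float]) -> float:
    """NDCG@K with K = number of candidates whose relevance is non-zero.

    ranking:   candidate indices in predicted order (best first).
    relevance: relevance score of every candidate, indexed by candidate id.
    """
    k = sum(1 for r in relevance if r > 0)
    if k == 0:
        return 0.0
    predicted_gains = [relevance[idx] for idx in ranking[:k]]
    ideal_gains = sorted(relevance, reverse=True)[:k]
    return dcg(predicted_gains) / dcg(ideal_gains)
```

With relevance `[1.0, 0.5, 0.0, 0.0]` (so K = 2), the ranking `[0, 1, 2, 3]` scores 1.0, whereas `[2, 0, 1, 3]` scores about 0.48 because an irrelevant answer occupies the first position. This is why placing more relevant answers near the top of the list raises NDCG.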

5.4. Error Case Analysis

In this section, we illustrate some examples that scored zero on the R@10 metric, i.e., examples for which the ground-truth answer was not ranked in the top 10. These can be categorized into three groups based on the causes of the errors. The first is common-sense reasoning: questions such as “What material is the table?” or “How tall is the man?” (Figure 6a,b) require common-sense reasoning, and our model was confused even after accurately locating the target visual objects. The second is that the image does not contain the target visual objects. Because no “traffic lights” appear in the corresponding image (Figure 6c), our model picked the best available candidate, which led to an incorrect answer. The third is keyword matching. When modeling the interactions between the question and dialog history, our model memorized keywords from the dialog history (e.g., “eyes”) and therefore ranked answers containing these keywords at the top, such as “No, his eyes are open” and “No, it’s eyes are open” (Figure 6d). However, such answers are not always accurate.

Figure 6.

Examples of error cases from the VisDial v1.0 validation set. The visual objects in the red box are selected by the proposed model. The errors can be categorized into three groups based on their causes: (1) common-sense reasoning, (2) the image not containing the target visual objects, and (3) keyword matching. (a,b) belong to the first group, (c) to the second, and (d) to the third.
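The rank-based metrics behind these error cases can be summarized in a few lines. The sketch below, with illustrative function names, computes the rank of the ground-truth answer, R@k (the fraction of questions whose ground-truth answer appears in the top k), the mean reciprocal rank (MRR), and the mean rank. An example that scores zero on R@10 is one whose ground-truth rank exceeds 10.

```python
from typing import Dict, Sequence


def gt_rank(ranking: Sequence[int], gt_index: int) -> int:
    """1-based position of the ground-truth candidate in a predicted ranking."""
    return list(ranking).index(gt_index) + 1


def retrieval_metrics(gt_ranks: Sequence[int],
                      ks: Sequence[int] = (1, 5, 10)) -> Dict[str, float]:
    """Aggregate per-question ground-truth ranks into R@k, MRR, and mean rank."""
    n = len(gt_ranks)
    if n == 0:
        raise ValueError("need at least one question")
    metrics: Dict[str, float] = {}
    for k in ks:
        # Each question contributes 1 if its GT answer is in the top k, else 0.
        metrics[f"R@{k}"] = sum(1 for r in gt_ranks if r <= k) / n
    metrics["MRR"] = sum(1.0 / r for r in gt_ranks) / n
    metrics["Mean"] = sum(gt_ranks) / n
    return metrics
```

For ground-truth ranks `[1, 12, 3]`, the second question is a zero-scoring R@10 case, so R@10 is 2/3 and MRR is (1 + 1/12 + 1/3)/3.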

6. Conclusions

In this paper, we proposed the MGSCRN visual dialog system. The QAAR module performs collaborative reasoning by leveraging semantic representations of different granularities of a new question and an image’s dialog history to capture and analyze historical information. The VAAA module performs collaborative reasoning to facilitate the grounding of visual objects. We empirically validated our method on the VisDial v1.0, VisPro, and VisDialConv datasets, and the results demonstrate its effectiveness.

In terms of applications, the proposed model can be viewed as an implementation of AI-supported knowledge management and is relevant to human–machine interaction, such as robotics applications and AI assistants. Regarding limitations, our method feeds the entire dialog history into the model when modeling the interactions between the current question and dialog history, disregarding the temporal relationships within the dialog history and changes in the discussed topic. By tracking topic changes, the model may capture the historical information more accurately as the conversation moves forward. Note that some questions can be answered simply by looking at the image, without access to the dialog history. The proposed model may be unable to answer these questions correctly because it relies excessively on irrelevant history information. In addition, the accuracy of locating target objects may decrease because our model does not consider the positional relationships between visual objects.

In the future, we will consider the temporal relationships between rounds of the dialog history to determine how the discussed topic changes, so that the model can more precisely capture the background knowledge relevant to the current question. Another challenge is how to use the dialog history in a balanced manner, so that questions unrelated to the dialog history can still be answered correctly. Additionally, we expect to incorporate spatial relationships between visual objects by understanding the prior dialog history.

Author Contributions

Conceptualization, H.Z. and X.W.; methodology, H.Z.; software, H.Z. and S.J.; validation, H.Z. and S.J.; formal analysis, H.Z. and X.L.; writing—original draft preparation, H.Z.; writing—review and editing, H.Z., X.W., X.L. and S.J. All authors have read and agreed to the published version of the manuscript.

Funding

This paper is supported by the National Natural Science Foundation of China (No. 62076032).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Publicly available datasets were analyzed in this study. The data can be found here: https://visualdialog.org/data (accessed on 3 August 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Guo, L.; Liu, J.; Tang, J.; Li, J.; Luo, W.; Lu, H. Aligning Linguistic Words and Visual Semantic Units for Image Captioning. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 765–773.

- Zhan, H.; Xiong, P.; Wang, X.; Wang, X.; Yang, L. Visual Question Answering by Pattern Matching and Reasoning. Neurocomputing 2022, 467, 323–336.

- Liu, J.; Wang, W.; Wang, L.; Yang, M.-H. Attribute-Guided Attention for Referring Expression Generation and Comprehension. IEEE Trans. Image Process. 2020, 29, 5244–5258.

- Das, A.; Kottur, S.; Gupta, K.; Singh, A.; Yadav, D.; Lee, S.; Moura, J.M.F.; Parikh, D.; Batra, D. Visual Dialog. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1242–1256.

- Kang, G.-C.; Lim, J.; Zhang, B.-T. Dual Attention Networks for Visual Reference Resolution in Visual Dialog. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 2024–2033.

- Gan, Z.; Cheng, Y.; Kholy, A.; Li, L.; Liu, J.; Gao, J. Multi-Step Reasoning via Recurrent Dual Attention for Visual Dialog. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 6463–6474.

- Chen, F.; Meng, F.; Xu, J.; Li, P.; Xu, B.; Zhou, J. DMRM: A Dual-Channel Multi-Hop Reasoning Model for Visual Dialog. AAAI 2020, 34, 7504–7511.

- Nguyen, V.-Q.; Suganuma, M.; Okatani, T. Efficient Attention Mechanism for Visual Dialog That Can Handle All the Interactions between Multiple Inputs. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020.

- Agarwal, S.; Bui, T.; Lee, J.-Y.; Konstas, I.; Rieser, V. History for Visual Dialog: Do We Really Need It? In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 8182–8197.

- Park, S.; Whang, T.; Yoon, Y.; Lim, H. Multi-View Attention Network for Visual Dialog. Appl. Sci. 2021, 11, 3009.

- Punia, S.K.; Kumar, M.; Stephan, T.; Deverajan, G.G.; Patan, R. Performance analysis of machine learning algorithms for big data classification: Ml and ai-based algorithms for big data analysis. Int. J. E-Health Med. Commun. (IJEHMC) 2021, 12, 60–75.

- Rao, D.; Huang, S.; Jiang, Z.; Deverajan, G.G.; Patan, R. A dual deep neural network with phrase structure and attention mechanism for sentiment analysis. Neural Comput. Appl. 2021, 33, 11297–11308.

- Niu, Y.; Zhang, H.; Gopal, G. Emerging Trends and the Importance of Network Evolution in Big Data. Recent Adv. Comput. Sci. Commun. (Former. Recent Patents Comput. Sci.) 2020, 13, 158.

- De Vries, H.; Strub, F.; Chandar, S.; Pietquin, O.; Larochelle, H.; Courville, A. GuessWhat?! Visual Object Discovery through Multi-Modal Dialogue. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 4466–4475.

- Lin, B.; Zhu, Y.; Liang, X. Heterogeneous Excitation-and-Squeeze Network for Visual Dialog. Neurocomputing 2021, 449, 399–410.

- Niu, Y.; Zhang, H.; Zhang, M.; Zhang, J.; Lu, Z.; Wen, J.-R. Recursive Visual Attention in Visual Dialog. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 6672–6681.

- Zhang, H.; Wang, X.; Jiang, S. Reciprocal Question Representation Learning Network for Visual Dialog. Appl. Intell. 2022.

- Jiang, T.; Shao, H.; Tian, X.; Ji, Y.; Liu, C. Aligning Vision-Language for Graph Inference in Visual Dialog. Image Vis. Comput. 2021, 116, 104316.

- Guo, D.; Wang, H.; Wang, M. Context-Aware Graph Inference with Knowledge Distillation for Visual Dialog. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 1.

- Wang, Y.; Joty, S.; Lyu, M.; King, I.; Xiong, C.; Hoi, S.C.H. VD-BERT: A Unified Vision and Dialog Transformer with BERT. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 3325–3338.

- Murahari, V.; Batra, D.; Parikh, D.; Das, A. Large-Scale Pretraining for Visual Dialog: A Simple State-of-the-Art Baseline. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Long Beach, CA, USA, 2017; pp. 6000–6010.

- Pennington, J.; Socher, R.; Manning, C. Glove: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 26–28 October 2014; Association for Computational Linguistics: Stroudsburg, PA, USA, 2014; pp. 1532–1543.

- Choi, H.; Kim, J.; Joe, S.; Gwon, Y. Evaluation of BERT and ALBERT Sentence Embedding Performance on Downstream NLP Tasks. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 5482–5487.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Krishna, R.; Zhu, Y.; Groth, O.; Johnson, J.; Hata, K.; Kravitz, J.; Chen, S.; Kalantidis, Y.; Li, L.-J.; Shamma, D.A.; et al. Visual Genome: Connecting Language and Vision Using Crowdsourced Dense Image Annotations. Int. J. Comput. Vis. 2017, 123, 32–73.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision—ECCV 2014, Proceedings of the 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2014; Volume 8693, pp. 740–755. ISBN 978-3-319-10601-4.

- Kottur, S.; Moura, J.M.F.; Parikh, D.; Batra, D.; Rohrbach, M. Visual Coreference Resolution in Visual Dialog Using Neural Module Networks. In Computer Vision—ECCV 2018, Proceedings of the 15th European Conference, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2018; Volume 11219, pp. 160–178. ISBN 978-3-030-01266-3.

- Zheng, Z.; Wang, W.; Qi, S.; Zhu, S.-C. Reasoning Visual Dialogs with Structural and Partial Observations. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 6662–6671.

- Schwartz, I.; Yu, S.; Hazan, T.; Schwing, A.G. Factor Graph Attention. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 2039–2048.

- Guo, D.; Wang, H.; Wang, M. Dual Visual Attention Network for Visual Dialog. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; International Joint Conferences on Artificial Intelligence Organization: Brussels, Belgium, 2019; pp. 4989–4995.

- Jiang, X.; Yu, J.; Qin, Z.; Zhuang, Y.; Zhang, X.; Hu, Y.; Wu, Q. DualVD: An Adaptive Dual Encoding Model for Deep Visual Understanding in Visual Dialogue. AAAI 2020, 34, 11125–11132.

- Yang, T.; Zha, Z.-J.; Zhang, H. Making History Matter: History-Advantage Sequence Training for Visual Dialog. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 2561–2569.

- Guo, D.; Xu, C.; Tao, D. Image-Question-Answer Synergistic Network for Visual Dialog. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 10426–10435.

- Guo, D.; Wang, H.; Wang, S.; Wang, M. Textual-Visual Reference-Aware Attention Network for Visual Dialog. IEEE Trans. Image Process. 2020, 29, 6655–6666.

- Chen, F.; Chen, X.; Xu, C.; Jiang, D. Learning to Ground Visual Objects for Visual Dialog. arXiv 2021, arXiv:2109.06013.

- Kim, H.; Tan, H.; Bansal, M. Modality-Balanced Models for Visual Dialogue. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).