An Efficient AdaBoost Algorithm with the Multiple Thresholds Classification

Abstract

Featured Application


1. Introduction

2. Background

2.1. AdaBoost

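Algorithm 1 AdaBoost

1. Input: training dataset $S = \{(x_1, y_1), \ldots, (x_m, y_m)\}$ with $y_i \in \{-1, +1\}$; weak learning algorithm WeakLearn; ensemble size $T$.
2. Initialization: give the training set a uniform weight distribution, $D_1(i) = 1/m$ for $i = 1, \ldots, m$.
3. For $t = 1, \ldots, T$:
   - (3.1) generate a weak classifier $h_t$ with WeakLearn under the current weight distribution $D_t$;
   - (3.2) calculate the weighted training error $\varepsilon_t = \sum_{i=1}^{m} D_t(i)\, \mathbb{I}[h_t(x_i) \neq y_i]$;
   - (3.3) assign $h_t$ the weight $\alpha_t = \frac{1}{2} \ln \frac{1 - \varepsilon_t}{\varepsilon_t}$;
   - (3.4) update the weights of the training examples, $D_{t+1}(i) = \frac{D_t(i) \exp(-\alpha_t y_i h_t(x_i))}{Z_t}$, where $Z_t$ is the normalization factor that makes $D_{t+1}$ a distribution.
4. Output: the ensemble classifier $H(x) = \operatorname{sign}\left(\sum_{t=1}^{T} \alpha_t h_t(x)\right)$.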
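The loop in Algorithm 1 can be sketched in Python as follows. This is a minimal illustration, not the authors' code: it assumes labels in $\{-1, +1\}$, uses the common weight $\alpha_t = \frac{1}{2}\ln\frac{1-\varepsilon_t}{\varepsilon_t}$, clips $\varepsilon_t$ away from 0 and 1 so the logarithm stays finite, and plugs in a hypothetical one-attribute threshold learner (`stump_1d`) only so that the example runs.

```python
import math
from typing import Callable, List, Sequence, Tuple

Classifier = Callable[[Sequence[float]], int]


def adaboost(X: List[Sequence[float]], y: List[int], weak_learn, T: int) -> Tuple[List[float], List[Classifier]]:
    """Algorithm 1 sketch: returns the classifier weights alpha_t and the weak classifiers h_t."""
    m = len(X)
    D = [1.0 / m] * m                                   # step 2: uniform weights D_1(i) = 1/m
    alphas: List[float] = []
    hs: List[Classifier] = []
    for _ in range(T):                                  # step 3
        h = weak_learn(X, y, D)                         # (3.1) weak classifier under D_t
        eps = sum(d for d, x, yi in zip(D, X, y) if h(x) != yi)  # (3.2) weighted error
        eps = min(max(eps, 1e-10), 1.0 - 1e-10)         # safeguard added for this sketch
        alpha = 0.5 * math.log((1.0 - eps) / eps)       # (3.3) classifier weight
        D = [d * math.exp(-alpha * yi * h(x)) for d, x, yi in zip(D, X, y)]  # (3.4)
        Z = sum(D)                                      # normalization Z_t
        D = [d / Z for d in D]
        alphas.append(alpha)
        hs.append(h)
    return alphas, hs


def predict(alphas: List[float], hs: List[Classifier], x: Sequence[float]) -> int:
    """Step 4: sign of the alpha-weighted vote (ties go to +1)."""
    score = sum(a * h(x) for a, h in zip(alphas, hs))
    return 1 if score >= 0 else -1


def stump_1d(X, y, D) -> Classifier:
    """Hypothetical weak learner for the demo: best weighted threshold on attribute 0."""
    values = sorted({x[0] for x in X})
    thetas = [values[0] - 1.0] + [(a + b) / 2 for a, b in zip(values, values[1:])]
    best = None
    for theta in thetas:
        for p in (1, -1):                               # polarity of the stump
            err = sum(d for d, x, yi in zip(D, X, y)
                      if (p if x[0] > theta else -p) != yi)
            if best is None or err < best[0]:
                best = (err, theta, p)
    _, theta, p = best
    return lambda x: p if x[0] > theta else -p


X = [[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]]
y = [1, 1, -1, -1, 1, 1]        # no single threshold separates this "interval" pattern
alphas, hs = adaboost(X, y, stump_1d, T=3)
print([predict(alphas, hs, x) for x in X])  # [1, 1, -1, -1, 1, 1]
```

No single stump fits these labels, but after three rounds the weighted vote of three stumps (a constant rule and one threshold at each edge of the interval) classifies all six points correctly.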

2.2. Threshold Classification

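Algorithm 2 Threshold Classification

1. Input: training dataset $S = \{(x_1, y_1), \ldots, (x_m, y_m)\}$, where each $x_i$ has $n$ attributes; weight distribution $D$.
2. For $j = 1, \ldots, n$:
   - (2.1) sort the $m$ training examples by the value of their $j$th attribute;
   - (2.2) find the threshold $\theta_j$ on the $j$th attribute that gives the threshold classifier $h_j$ with the lowest weighted error $\varepsilon_j$.
3. Output: compare the weighted errors $\varepsilon_1, \ldots, \varepsilon_n$ and find the minimum $\varepsilon_k = \min_j \varepsilon_j$; the threshold classifier is $h_k$, with error $\varepsilon_k$.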
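Algorithm 2 is a weighted decision-stump search. The sketch below is one possible Python rendering under assumptions the text does not spell out: a stump on attribute $j$ predicts $p$ when $x_j > \theta$ and $-p$ otherwise (polarity $p \in \{+1, -1\}$), thresholds sit midway between consecutive distinct sorted values, and after the sort in step (2.1) the weighted error is updated incrementally as $\theta$ sweeps past each example, so each attribute costs one sort plus one linear pass. Function names are illustrative.

```python
from typing import List, Sequence, Tuple

# (attribute j, threshold theta, polarity p, weighted error)
Stump = Tuple[int, float, int, float]


def best_threshold(X: List[Sequence[float]], y: List[int], D: List[float], j: int) -> Stump:
    """Steps (2.1)-(2.2) for attribute j."""
    order = sorted(range(len(X)), key=lambda i: X[i][j])     # (2.1) sort by attribute j
    total = sum(D)

    def with_best_polarity(theta: float, err_plus: float) -> Stump:
        # err_plus is the error of polarity +1; polarity -1 has error total - err_plus
        if err_plus <= total - err_plus:
            return (j, theta, 1, err_plus)
        return (j, theta, -1, total - err_plus)

    # Threshold below every value: polarity +1 predicts +1 everywhere.
    err_plus = sum(D[i] for i in order if y[i] == -1)
    best = with_best_polarity(X[order[0]][j] - 1.0, err_plus)
    for k, i in enumerate(order):
        # Moving theta past x_i flips its prediction from +1 to -1 (polarity +1).
        err_plus += D[i] if y[i] == 1 else -D[i]
        if k + 1 < len(order) and X[order[k + 1]][j] == X[i][j]:
            continue                                          # no threshold between equal values
        upper = X[order[k + 1]][j] if k + 1 < len(order) else X[i][j] + 2.0
        cand = with_best_polarity((X[i][j] + upper) / 2, err_plus)
        if cand[3] < best[3]:
            best = cand
    return best


def threshold_classification(X, y, D) -> Stump:
    """Step 3: the attribute whose best threshold has the minimum weighted error."""
    stumps = [best_threshold(X, y, D, j) for j in range(len(X[0]))]
    return min(stumps, key=lambda s: s[3])


def stump_predict(stump: Stump, x: Sequence[float]) -> int:
    j, theta, p, _ = stump
    return p if x[j] > theta else -p


X = [[1.0, 5.0], [2.0, 3.0], [3.0, 4.0], [4.0, 1.0]]
y = [-1, -1, 1, 1]
D = [0.25] * 4
best = threshold_classification(X, y, D)
print(best)   # (0, 2.5, 1, 0.0)
```

Attribute 0 separates the two classes at $\theta = 2.5$, while the best threshold on attribute 1 still errs on a quarter of the weight, so step 3 returns the attribute-0 stump.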

3. AdaBoost with Multiple Thresholds Classification

3.1. Multiple Thresholds Classification

Algorithm 3 Multiple Thresholds Classification

1. Input: training dataset $S$; weight distribution $D$.
2. For $j = 1, \ldots, n$:
   - (2.1) sort the $m$ training examples by the value of their $j$th attribute;
   - (2.2) as in step (2.2) of Algorithm 2, find the threshold $\theta_j$ on the $j$th attribute that gives the classifier $h_j$ with the lowest weighted error $\varepsilon_j$.
3. Output: compare the weighted errors of these classifiers and keep the three with the lowest errors, relabelled $h_1, h_2, h_3$ so that $\varepsilon_1 \le \varepsilon_2 \le \varepsilon_3$. The prediction $h_{\mathrm{MT}}(x)$ is the weighted majority vote of these three rules, and its weighted error is $\varepsilon_{\mathrm{MT}}$.
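A minimal sketch of Algorithm 3, for illustration only. The exact voting formula is not reproduced here, so the vote below is an assumption: each of the three rules votes with its weighted accuracy $1 - \varepsilon_k$ (the quantity compared in cases 4 and 5 below), ties go to $+1$, the weights $D$ sum to 1, and the data have at least three attributes. The brute-force `best_stump` helper is a hypothetical stand-in for step (2.2).

```python
from typing import Callable, List, Sequence, Tuple

Stump = Tuple[int, float, int, float]   # (attribute j, threshold, polarity, weighted error)


def best_stump(X: List[Sequence[float]], y: List[int], D: List[float], j: int) -> Stump:
    """Step (2.2), brute force: lowest weighted error over midpoints and both polarities."""
    values = sorted({x[j] for x in X})
    thetas = [values[0] - 1.0] + [(a + b) / 2 for a, b in zip(values, values[1:])]
    best = None
    for theta in thetas:
        for p in (1, -1):
            err = sum(d for d, x, yi in zip(D, X, y)
                      if (p if x[j] > theta else -p) != yi)
            if best is None or err < best[3]:
                best = (j, theta, p, err)
    return best


def stump_predict(s: Stump, x: Sequence[float]) -> int:
    j, theta, p, _ = s
    return p if x[j] > theta else -p


def multiple_thresholds(X, y, D) -> Tuple[List[Stump], Callable[[Sequence[float]], int], float]:
    """Step 3: the three lowest-error stumps, their weighted vote h_MT, and its error."""
    stumps = sorted((best_stump(X, y, D, j) for j in range(len(X[0]))),
                    key=lambda s: s[3])
    top3 = stumps[:3]                                   # eps_1 <= eps_2 <= eps_3

    def h_mt(x: Sequence[float]) -> int:
        # Assumed form of the vote: weight each rule by its accuracy 1 - eps_k.
        score = sum((1.0 - s[3]) * stump_predict(s, x) for s in top3)
        return 1 if score >= 0 else -1

    eps_mt = sum(d for d, x, yi in zip(D, X, y) if h_mt(x) != yi)
    return top3, h_mt, eps_mt


# Each attribute is a noisy copy of the label that errs on a different example.
X = [[0, 1, 1], [1, 0, 1], [1, 1, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0]]
y = [1, 1, 1, -1, -1, -1]
D = [1.0 / 6] * 6
top3, h_mt, eps_mt = multiple_thresholds(X, y, D)
print([round(s[3], 4) for s in top3], eps_mt)   # [0.1667, 0.1667, 0.1667] 0
```

Each single stump misclassifies one example (error 1/6), but their mistakes fall on different examples, so the two correct rules always outvote the wrong one and the combined error is 0.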

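1. All three classifiers classify $x$ correctly, $h_1(x) = h_2(x) = h_3(x) = y$; according to Equation (1), $h_{\mathrm{MT}}(x) = y$, and the probability is:

2. $h_1$ and $h_2$ classify $x$ correctly but $h_3$ does not, $h_1(x) = h_2(x) = y \neq h_3(x)$; then $h_{\mathrm{MT}}(x) = y$, since $(1 - \varepsilon_1) + (1 - \varepsilon_2) > 1 - \varepsilon_3$, and the probability is:

3. $h_1$ and $h_3$ classify $x$ correctly but $h_2$ does not. The probability is:

4. $h_2$ and $h_3$ classify $x$ correctly but $h_1$ does not, and the sum of the accuracies of $h_2$ and $h_3$ is larger than that of $h_1$; in other words, $(1 - \varepsilon_2) + (1 - \varepsilon_3) > 1 - \varepsilon_1$. The probability is:

5. $h_1$ classifies $x$ correctly but $h_2$ and $h_3$ do not, and the sum of the accuracies of $h_2$ and $h_3$ is smaller than that of $h_1$; in other words, $(1 - \varepsilon_2) + (1 - \varepsilon_3) < 1 - \varepsilon_1$. The probability is: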
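The five cases can be checked numerically. The sketch below rests on an assumption not stated in this section: the three classifiers err independently, so a joint outcome has probability $\prod_k (1 - \varepsilon_k)$ or $\varepsilon_k$ per classifier, depending on whether classifier $k$ is correct. Under that assumption it compares the five-case sum with a brute-force enumeration of all $2^3$ correct/incorrect patterns of an accuracy-weighted vote. Function names are illustrative.

```python
from itertools import product
from typing import Sequence


def brute_force(eps: Sequence[float]) -> float:
    """P(accuracy-weighted vote is correct), enumerating all 8 outcomes."""
    acc = [1.0 - e for e in eps]
    total = 0.0
    for outcome in product((True, False), repeat=3):      # True: classifier k is correct
        right = sum(a for a, ok in zip(acc, outcome) if ok)
        wrong = sum(a for a, ok in zip(acc, outcome) if not ok)
        if right > wrong:
            prob = 1.0
            for e, ok in zip(eps, outcome):
                prob *= (1.0 - e) if ok else e
            total += prob
    return total


def five_cases(eps: Sequence[float]) -> float:
    """Sum of the five cases, for eps_1 <= eps_2 <= eps_3 < 1."""
    e1, e2, e3 = eps
    a1, a2, a3 = 1.0 - e1, 1.0 - e2, 1.0 - e3
    p = a1 * a2 * a3                 # case 1: all three correct
    p += a1 * a2 * e3                # case 2: h1, h2 correct, h3 wrong
    p += a1 * e2 * a3                # case 3: h1, h3 correct, h2 wrong
    if a2 + a3 > a1:
        p += e1 * a2 * a3            # case 4: h2, h3 outvote h1
    if a1 > a2 + a3:
        p += a1 * e2 * e3            # case 5: h1 outvotes h2 and h3
    return p


for eps in [(0.1, 0.2, 0.3), (0.05, 0.6, 0.7)]:
    print(eps, round(five_cases(eps), 4), round(brute_force(eps), 4))
```

Both functions give 0.902 for errors (0.1, 0.2, 0.3), slightly above the best single accuracy of 0.9, and 0.95 for (0.05, 0.6, 0.7), where case 5 rather than case 4 adds to cases 1 to 3. If every $\varepsilon_k < 1/2$, then $(1 - \varepsilon_2) + (1 - \varepsilon_3) > 1 \ge 1 - \varepsilon_1$, so case 4 always applies and case 5 cannot occur.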

Algorithm 4 AdaBoost with Multiple Thresholds Classification

1. Input: training dataset $S$ and ensemble size $T$, as in Algorithm 1; Multiple Thresholds Classification (Algorithm 3) as the weak learning algorithm.
2. Initialization: the same as in Algorithm 1.
3. For $t = 1, \ldots, T$:
   - (3.1) generate a weak classifier $h_t$ as shown in Algorithm 3;
   - (3.2) the greater the error of a learner, the smaller its weight, so we prefer to assign $h_t$ a severe weight by taking $\varepsilon_t$ to be the greater of the two errors obtained in Algorithm 3. The weight of $h_t$ is $\alpha_t = \frac{1}{2} \ln \frac{1 - \varepsilon_t}{\varepsilon_t}$;
   - (3.3) reassign the weights of the training examples, $D_{t+1}(i) = \frac{D_t(i) \exp(-\alpha_t y_i h_t(x_i))}{Z_t}$, where $Z_t$ is the normalization factor.
4. Output: the ensemble classifier $H(x) = \operatorname{sign}\left(\sum_{t=1}^{T} \alpha_t h_t(x)\right)$.
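Putting Algorithms 3 and 4 together gives the loop below, a sketch under stated assumptions rather than the authors' implementation. Which two errors step (3.2) compares is an assumption here: the code hypothetically takes the larger of the best single-stump error $\varepsilon_1$ and the vote's error $\varepsilon_{\mathrm{MT}}$. The vote weights each of the three stumps by its accuracy $1 - \varepsilon_k$ (also an assumption), the brute-force `best_stump` stands in for step (2.2), the data need at least three attributes, and the weight and update rules are the standard AdaBoost ones.

```python
import math
from typing import Callable, List, Sequence, Tuple

Stump = Tuple[int, float, int, float]          # (attribute j, threshold, polarity, weighted error)
Classifier = Callable[[Sequence[float]], int]


def best_stump(X, y, D, j) -> Stump:
    """Brute-force stand-in for step (2.2) of Algorithm 3 on attribute j."""
    values = sorted({x[j] for x in X})
    thetas = [values[0] - 1.0] + [(a + b) / 2 for a, b in zip(values, values[1:])]
    best = None
    for theta in thetas:
        for p in (1, -1):
            err = sum(d for d, x, yi in zip(D, X, y)
                      if (p if x[j] > theta else -p) != yi)
            if best is None or err < best[3]:
                best = (j, theta, p, err)
    return best


def stump_predict(s: Stump, x: Sequence[float]) -> int:
    j, theta, p, _ = s
    return p if x[j] > theta else -p


def multiple_thresholds(X, y, D) -> Tuple[Classifier, float, float]:
    """Algorithm 3 sketch: returns the vote h_MT, eps_1, and the vote's error eps_MT."""
    stumps = sorted((best_stump(X, y, D, j) for j in range(len(X[0]))),
                    key=lambda s: s[3])
    top3 = stumps[:3]

    def h_mt(x: Sequence[float]) -> int:
        # Assumption: each rule votes with its accuracy 1 - eps_k; ties go to +1.
        score = sum((1.0 - s[3]) * stump_predict(s, x) for s in top3)
        return 1 if score >= 0 else -1

    eps_mt = sum(d for d, x, yi in zip(D, X, y) if h_mt(x) != yi)
    return h_mt, top3[0][3], eps_mt


def adaboost_mt(X, y, T: int) -> Tuple[List[float], List[Classifier]]:
    m = len(X)
    D = [1.0 / m] * m                                  # initialization as in Algorithm 1
    alphas: List[float] = []
    hs: List[Classifier] = []
    for _ in range(T):
        h, eps_1, eps_mt = multiple_thresholds(X, y, D)        # (3.1)
        eps = max(eps_1, eps_mt)       # (3.2) HYPOTHETICAL: the larger of these two errors
        eps = min(max(eps, 1e-10), 1.0 - 1e-10)               # keep the log finite
        alpha = 0.5 * math.log((1.0 - eps) / eps)
        D = [d * math.exp(-alpha * yi * h(x)) for d, x, yi in zip(D, X, y)]   # (3.3)
        Z = sum(D)                                             # normalization
        D = [d / Z for d in D]
        alphas.append(alpha)
        hs.append(h)
    return alphas, hs


def predict(alphas, hs, x) -> int:
    """Step 4: sign of the alpha-weighted vote (ties go to +1)."""
    score = sum(a * h(x) for a, h in zip(alphas, hs))
    return 1 if score >= 0 else -1


X = [[0, 1, 1], [1, 0, 1], [1, 1, 0], [0, 0, 0], [0, 0, 0], [0, 0, 0]]
y = [1, 1, 1, -1, -1, -1]
alphas, hs = adaboost_mt(X, y, T=2)
print([predict(alphas, hs, x) for x in X])
```

On this toy set the vote is perfect ($\varepsilon_{\mathrm{MT}} = 0$) while each stump has error 1/6, so taking the larger error gives $\alpha_t = \frac{1}{2}\ln 5 \approx 0.805$ instead of the very large weight the clipped zero error would produce; this is the kind of severe weighting step (3.2) describes.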

3.2. Multiple Thresholds Classification as the Weak Learn Algorithm

4. Experiment

4.1. Data Set Information and Parameter Setting

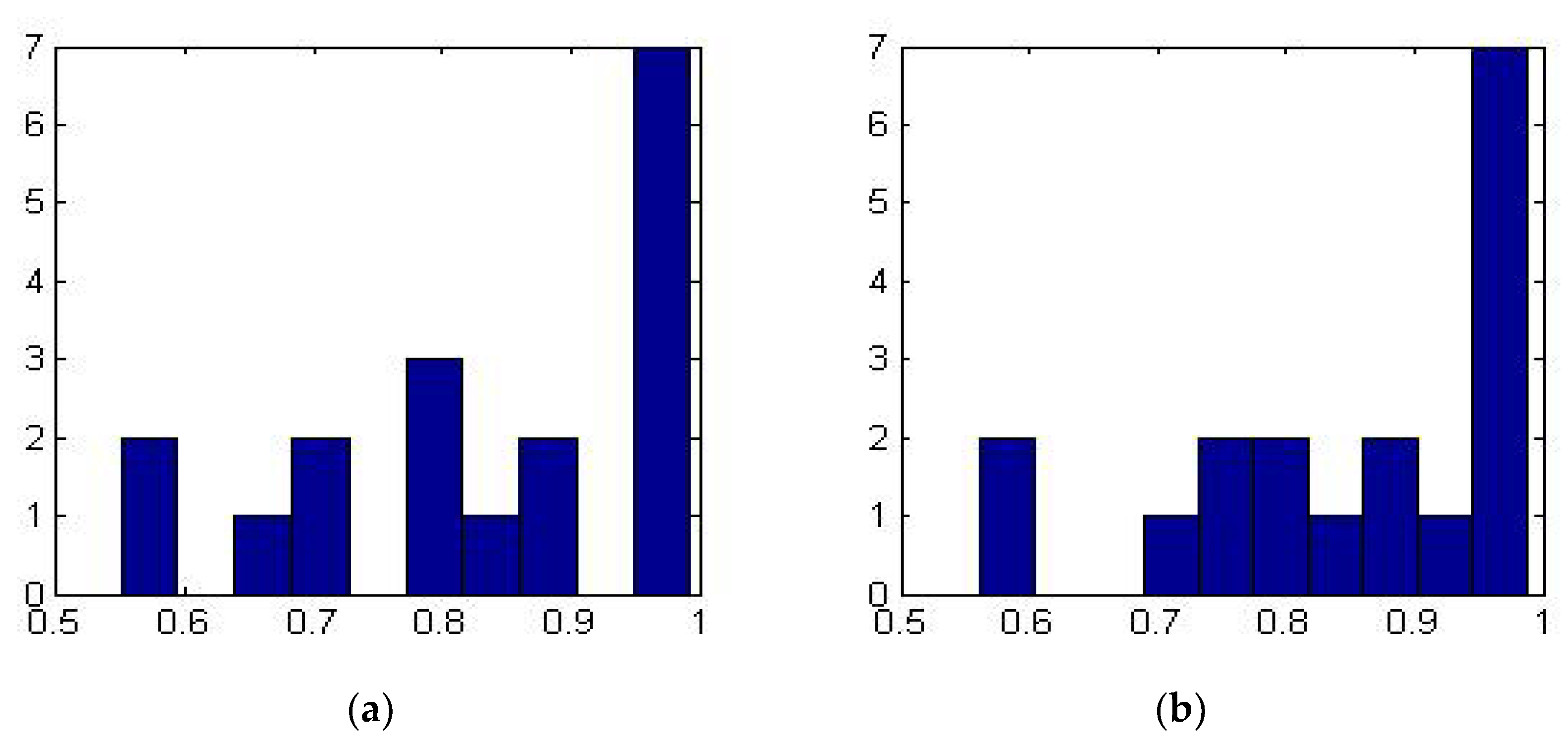

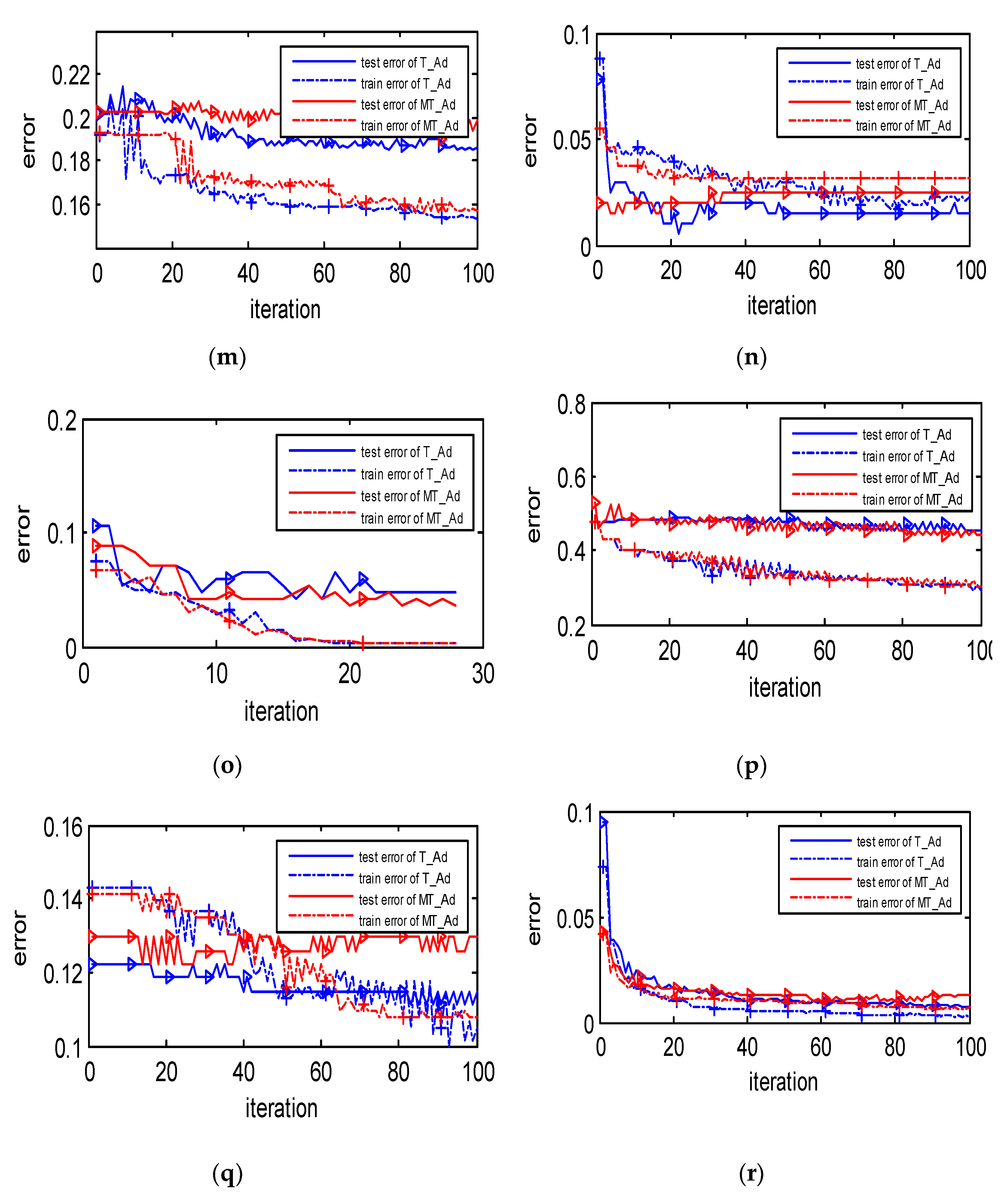

4.2. Wilcoxon Rank-Sum Test of These Two Algorithms and Analysis of Experimental Results

4.3. Analysis of Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| No. | Dataset | Number of Web Hits | Number of Examples | Number of Attributes |
|---|---|---|---|---|
| 1 | Abalone | 1,268,791 | 4177 | 8 |
| 2 | Breast Cancer Wisconsin (Diagnostic bcw) | 1,742,253 | 683 | 10 |
| 3 | Breast Cancer Wisconsin (Diagnostic wdbc) | 1,742,253 | 569 | 32 |
| 4 | Connectionist Bench (Sonar) | 237,567 | 208 | 60 |
| 5 | Cryotherapy | 62,013 | 90 | 7 |
| 6 | EEG Eye State | 154,643 | 14,980 | 15 |
| 7 | Hill-Valley | 79,654 | 1212 | 101 |
| 8 | HTRU2 | 87,855 | 17,898 | 9 |
| 9 | Immunotherapy | 69,214 | 90 | 8 |
| 10 | Ionosphere | 286,248 | 351 | 34 |
| 11 | Liver Disorders | 216,408 | 345 | 7 |
| 12 | Molecular Biology (Splice) | 116,402 | 3190 | 61 |
| 13 | Raisin | 1,305,031 | 900 | 8 |
| 14 | seismic-bumps | 82,013 | 2584 | 19 |
| 15 | SPECTF Heart | 111,087 | 267 | 44 |
| 16 | Statlog (Heart) | 277,569 | 270 | 13 |
| 17 | Wholesale Customers | 435,201 | 440 | 8 |
| 18 | Wine Quality | 1,875,937 | 6497 | 12 |

| No. | Dataset | T_Ad | MT_Ad |
|---|---|---|---|
| 1 | Abalone | 0.8138 | 0.8013 |
| 2 | Breast Cancer Wisconsin (Diagnostic bcw) | 0.9854 | 0.9756 |
| 3 | Breast Cancer Wisconsin (Diagnostic wdbc) | 0.9532 | 0.9649 |
| 4 | Connectionist Bench (Sonar) | 0.6774 | 0.7097 |
| 5 | Cryotherapy | 0.8148 | 0.8889 |
| 6 | EEG Eye State | 0.5642 | 0.5735 |
| 7 | Hill-Valley | 0.5495 | 0.5611 |
| 8 | HTRU2 | 0.9868 | 0.9883 |
| 9 | Immunotherapy | 0.7778 | 0.7778 |
| 10 | Ionosphere | 0.9524 | 0.9524 |
| 11 | Liver Disorders | 0.7212 | 0.7404 |
| 12 | Molecular Biology (Splice) | 0.9587 | 0.9630 |
| 13 | Raisin | 0.8852 | 0.8704 |
| 14 | Seismic-bumps | 0.9665 | 0.9665 |
| 15 | SPECTF Heart | 0.7059 | 0.7540 |
| 16 | Statlog (Heart) | 0.8395 | 0.8519 |
| 17 | Wholesale Customers | 0.8864 | 0.9167 |
| 18 | Wine Quality | 0.9932 | 0.9875 |
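The head-to-head counts and column means of the accuracy table can be reproduced with a few lines of Python. The values are copied from the T_Ad column (presumably AdaBoost with Threshold Classification) and the MT_Ad column (presumably AdaBoost with Multiple Thresholds Classification); counting differences within 1e-9 as ties is a choice made for this sketch.

```python
# Accuracies copied from the T_Ad and MT_Ad columns of the table (datasets 1-18).
T_AD = [0.8138, 0.9854, 0.9532, 0.6774, 0.8148, 0.5642, 0.5495, 0.9868, 0.7778,
        0.9524, 0.7212, 0.9587, 0.8852, 0.9665, 0.7059, 0.8395, 0.8864, 0.9932]
MT_AD = [0.8013, 0.9756, 0.9649, 0.7097, 0.8889, 0.5735, 0.5611, 0.9883, 0.7778,
         0.9524, 0.7404, 0.9630, 0.8704, 0.9665, 0.7540, 0.8519, 0.9167, 0.9875]

diffs = [mt - t for t, mt in zip(T_AD, MT_AD)]      # positive: MT_Ad is more accurate
wins = sum(1 for d in diffs if d > 1e-9)
ties = sum(1 for d in diffs if abs(d) <= 1e-9)
losses = sum(1 for d in diffs if d < -1e-9)
mean_t = sum(T_AD) / len(T_AD)
mean_mt = sum(MT_AD) / len(MT_AD)
print(f"MT_Ad vs T_Ad: {wins} wins, {ties} ties, {losses} losses")
print(f"mean accuracy: T_Ad {mean_t:.4f}, MT_Ad {mean_mt:.4f}")
```

Over the 18 datasets MT_Ad is more accurate on 11, ties on 3 (Immunotherapy, Ionosphere, Seismic-bumps) and is less accurate on 4 (Abalone, Breast Cancer Wisconsin bcw, Raisin, Wine Quality); the mean accuracy rises from about 0.8351 to about 0.8469.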

| No. | Dataset | Ranking | Label |
|---|---|---|---|
| 1 | Hill-Valley | 1 | 1 |
| 2 | EEG Eye State | 2 | 1 |
| 3 | Connectionist Bench (Sonar) | 3 | 1 |
| 4 | SPECTF Heart | 5 | 1 |
| 5 | Liver Disorders | 4 | 1 |
| 6 | Immunotherapy | 6 | 1 |
| 7 | Abalone | 7 | −1 |
| 8 | Cryotherapy | 8 | 1 |
| 9 | Statlog (Heart) | 9 | 1 |
| 10 | Raisin | 10 | −1 |
| 11 | Wholesale Customers | 11 | 1 |
| 12 | Ionosphere | 12 | 1 |
| 13 | Breast Cancer Wisconsin (Diagnostic wdbc) | 13 | 1 |
| 14 | Molecular Biology (Splice) | 14 | 1 |
| 15 | Seismic-bumps | 15 | −1 |
| 16 | Breast Cancer Wisconsin (Diagnostic bcw) | 16 | −1 |
| 17 | HTRU2 | 17 | 1 |
| 18 | Wine Quality | 18 | −1 |
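For reference, a textbook two-sample Wilcoxon rank-sum statistic on the two accuracy columns can be computed as below. This is a sketch, not the authors' procedure: the ranking in the table above may be defined differently, tied values receive mid-ranks, and the two-sided p-value uses the normal approximation without a tie correction.

```python
import math
from typing import List, Sequence, Tuple

T_AD = [0.8138, 0.9854, 0.9532, 0.6774, 0.8148, 0.5642, 0.5495, 0.9868, 0.7778,
        0.9524, 0.7212, 0.9587, 0.8852, 0.9665, 0.7059, 0.8395, 0.8864, 0.9932]
MT_AD = [0.8013, 0.9756, 0.9649, 0.7097, 0.8889, 0.5735, 0.5611, 0.9883, 0.7778,
         0.9524, 0.7404, 0.9630, 0.8704, 0.9665, 0.7540, 0.8519, 0.9167, 0.9875]


def midranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for idx in order[start:end + 1]:
            ranks[idx] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def rank_sum_test(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float, float]:
    """Rank sum W of sample a, its z-score, and a two-sided normal-approximation p-value."""
    n1, n2 = len(a), len(b)
    ranks = midranks(list(a) + list(b))
    w = sum(ranks[:n1])
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))   # equals 2 * (1 - Phi(|z|))
    return w, z, p


w, z, p = rank_sum_test(MT_AD, T_AD)
print(f"W = {w}, z = {z:.3f}, p = {p:.3f}")
```

Because both columns are measured on the same 18 datasets, a paired test such as the Wilcoxon signed-rank test is a common alternative; the sketch follows the rank-sum test named in the heading of Section 4.2.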


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ding, Y.; Zhu, H.; Chen, R.; Li, R. An Efficient AdaBoost Algorithm with the Multiple Thresholds Classification. Appl. Sci. 2022, 12, 5872. https://doi.org/10.3390/app12125872
