Abstract

Generating natural language descriptions for structured representations (e.g., a graph) is an important yet challenging task. In this work, we focus on SQL-to-text, a task that maps a SQL query into the corresponding natural language question. Previous work represents SQL as a sparse graph and utilizes a graph-to-sequence model to generate questions, where each node can only communicate with its k-hop neighbors. Such a model degrades when adapted to more complex SQL queries because it cannot capture long-term dependencies and lacks SQL-specific relations. To tackle this problem, we propose a relation-aware graph transformer (RGT) that considers both the SQL structure and various relations simultaneously. Specifically, an abstract SQL syntax tree is constructed for each SQL query to provide the underlying relations. We also customize self-attention and cross-attention strategies to encode the relations in the SQL tree. Experiments on the benchmarks WikiSQL and Spider demonstrate that our approach yields improvements over strong baselines.

1. Introduction

SQL (Structured Query Language) is a vital tool to access databases. However, SQL is not easy to understand for the average person. SQL-to-text aims to convert a structured SQL program into a natural language description. It can help automatic SQL comment generation as well as build an interactive question answering system [1,2] for natural language interface to a relational database [3,4,5]. Besides, SQL-to-text is useful for searching SQL programs available on the Internet. Guo et al. [6] and Wu et al. [7] also demonstrated that SQL-to-text can assist the text-to-SQL task [8,9,10,11] by using SQL-to-text as data augmentation. In the real world, it can help people understand complex SQLs quickly by reading corresponding texts.

A naive idea is to cast SQL-to-text as a Seq2Seq problem [12,13]. Taking the SQL sequence as input, a Seq2Seq model translates it into natural language. The main limitation is that when the SQL sequence becomes longer, the Seq2Seq model may fail to capture the dependency between complex conditions and operations. SQL is structural and can be converted into an abstract syntax tree, as Figure 1 illustrates. Generally, a tree is a special graph, so SQL-to-text can be modeled as a Graph-to-Sequence [14] task. Xu et al. [15] consider the intrinsic graph structure of a SQL query. They construct the SQL graph by representing each token in the SQL as a node in the graph, and connecting different units (e.g., column names, operators, values) through SQL keyword nodes (e.g., SELECT, AND). By aggregating information from the K-hop neighbors through a graph neural network (GNN, Scarselli et al. [16], 2008), each node obtains its contextualized embedding, which is accessed in the natural language decoding phase. Though simple and effective, this approach suffers from two main drawbacks: (1) poor generalization capability due to the sparsity of the constructed SQL graph, and (2) ignorance of relations between different node pairs, especially the relevance among column nodes.

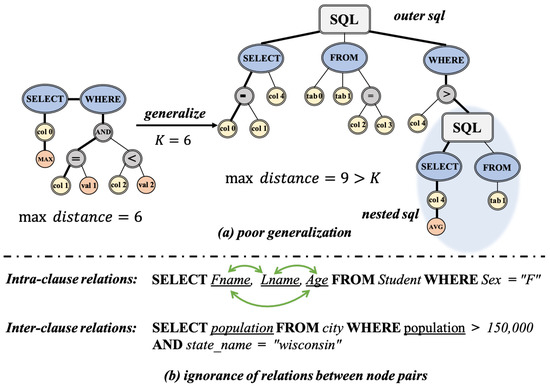

Figure 1.

Two major problems incurred by the previous sparse Graph2Seq model. Examples are selected from dataset Spider. (a) Poor generalization problem. K is the iteration number. Bold edges indicate the maximum distance between node pairs in the entire graph. (b) Ignorance of relations between node pairs.

In particular, Xu et al. [15] only deal with the simple SQL sketch SELECT $AGG $COLUMN WHERE $COLUMN $OP $VALUE (AND $COLUMN $OP $VALUE)*. Only one column unit and one single table are mentioned in the sketch, and all constraints are organized via intersections of conditions in the WHERE clause. The model updates the contextualized embedding of each node by a K-step iteration. Each node only communicates with its 1-hop neighbors in one iteration, so each node can only “see” nodes within a distance of K at the end of the iterations. The performance easily deteriorates when we transfer to more complicated SQL sketches composed of multiple tables, GroupBy/HAVING/OrderBy/LIMIT clauses and nested SQLs.
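The K-hop limitation described above can be illustrated with a small sketch. It is not the Graph2Seq implementation of [15]; it only computes, by breadth-first search, which nodes can influence a given node after K rounds of 1-hop aggregation. The chain graph and the function names are hypothetical.

```python
from collections import deque


def hop_distances(adjacency, source):
    """Breadth-first search: number of hops from `source` to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist


def visible_after(adjacency, source, k):
    """Nodes whose information can reach `source` after k rounds of 1-hop aggregation."""
    return {n for n, d in hop_distances(adjacency, source).items() if d <= k}


# Hypothetical undirected chain graph: a - b - c - d - e
CHAIN = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c", "e"], "e": ["d"]}
```

With this chain, node `a` only sees node `e` once K reaches 4, which is why a fixed K tuned on short queries can miss dependencies in longer ones.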

As the example in Figure 1 shows, a Graph2Seq model with a fixed K may work well on the simple SQL (shown on the left) while generalizing poorly on the complex SQL with a longer dependency distance (shown on the right). We find that two nodes may be highly correlated even though they are far apart in both the serialized SQL query and the parsed abstract syntax tree. For instance, the columns mentioned in the same clause (intra-clause) are tightly related. See the example in Figure 1b. Users often request not only the last name, but also the first name of specific candidates. Similarly, there is a high probability that a column serving as a condition in the WHERE clause will also be requested in the SELECT clause (inter-clause). Previous work pays more attention to the syntactic structure of SQL, but neglects these potential relations at the semantic level.

To this end, we propose a Relation-aware Graph Transformer (RGT) to take into account both the abstract syntax tree of the query and the correlations between different node pairs. The entire node set is split into two parts: intermediate nodes and leaf nodes. Leaf nodes are usually raw table names or column words, plus some unary modifiers such as DISTINCT and MAX. Typically, these leaf nodes convey significant semantic information in the query. Intermediate nodes such as SELECT and AND inherently capture the tree structure of the underlying SQL query and connect the scattered leaf nodes. An example of constructed SQL tree is shown in Figure 2.

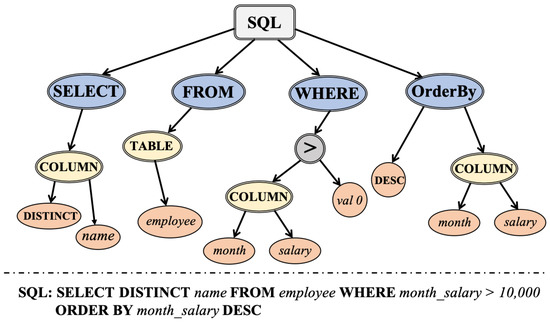

Figure 2.

An example of the constructed SQL tree.

We introduce four types of relations into the SQL tree and propose two variants of cross-attention to capture the structural information. All relations are encoded by our proposed RGT model. As a SQL query may involve multiple tables, we first consider the relations among the abstract concepts TABLE and COLUMN, called database schema (DBS). Given two nodes representing TABLE or COLUMN, they might be two columns in the same table or two tables connected by a foreign key. We define 11 different types of DBS to describe such relations. Besides, the depth of a node reflects the amount of information: deeper nodes contain more semantic information while shallower nodes carry more syntactic information. We introduce directional relative depth (DRD) to capture the relative depth between intermediate nodes. As for leaf nodes, the most important relation is affiliation. For example, in Figure 2, the leaf nodes month and salary are connected to the COLUMN node, and the COLUMN node and another leaf node val0 belong to the intermediate node >. These three leaf nodes are highly related. We use the lowest common ancestor (LCA) to measure the closeness of two leaf nodes. As we can see, the LCA of the nodes month and val0 is the node > in Figure 2. Furthermore, to leverage the tree structure of SQL, we use two cross-attention strategies, namely attention over ancestors (AOA) and attention over descendants (AOD). Attention over ancestors only allows leaf nodes to attend to their ancestors, and attention over descendants forces intermediate nodes to attend only to their descendants.

We conduct extensive experiments on the benchmarks WikiSQL [17] and Spider [18] with various baseline models. For simple SQL sketches on WikiSQL, our RGT model outperforms the previous best Graph2Seq model [15] in BLEU. To the best of our knowledge, we are the first to perform the SQL-to-text task on SQL sketches that involve multiple tables and complex conditions. The BLEU results on Spider demonstrate that our model generalizes well compared with other alternatives.

Our main contributions are summarized as follows:

- We propose a relation-aware graph transformer to consider various relations between node pairs in the SQL graph.

- We are the first to perform the SQL-to-text task with much more complicated SQL sketches on the dataset Spider.

- Extensive experiments show that our model is superior to various Seq2Seq and Graph2Seq models. Data and code for our models and baselines will be made public.

This paper is organized as follows: In Section 1, we introduce the task of SQL-to-text, analyze the problems of previous work and present an overview of our work. Then, we summarize related work in Section 2. After that, we describe our method in detail in Section 3, including how to build the SQL tree and the architecture of our model. In Section 4, we conduct our experiments on two public datasets and report all the results. Finally, we conclude and outline future work in Section 5.

2. Related Work

Data-to-text Data-to-text intends to transform non-linguistic input data into the meaningful and coherent natural language text [19]. There are several types of the non-linguistic input data, such as a set of triples (the WebNLG challenge [20]) and some kinds of meaning representations (e.g., several slot-value pairs of the E2E dataset [21], the Abstract Meaning Representation (AMR) graph [22]). The key problem of this task is how to obtain a good representation of the input data. At first, researchers [23,24] cast the structured input data to sequence and adopt the sequence-to-sequence model, e.g., LSTM. However, this method neglects the intrinsic structure of the input data. To this end, a lot of graph-to-sequence models are proposed. In particular, refs. [25,26] encoded the input data based on a graph convolutional network (GCN [27]) encoder. Ref. [28] extended transformer to the graph input and proposed the graph transformer encoder. In this work, our model is based on the graph transformer encoder.

SQL-to-text This technique can leverage automatically generated SQL programs [17] to create additional (question, SQL) pairs, alleviating the annotation scarcity problem of the complicated text-to-SQL [29] task with data augmentation [6]. Earlier rule-based methods [30,31] heavily rely on researchers to design generic templates, which will inevitably produce rigid and canonical questions. Seq2Seq [13], Tree2Seq [32] and Graph2Seq [15] models have demonstrated their superiority over the traditional rule-based system. In this work, we propose a relation-aware graph transformer to take into account both the graph structure and various relations embedded in different node pairs.

Tree-to-sequence Tree-to-sequence model [32] aims to map a tree structure into a sequence. Each node gathers information from its children nodes when encoding. They apply this technique to neural machine translation. Specifically, they reorganize the input sequence in the source language as a tree according to its constituency structure. In our work, we construct a SQL tree and utilize the Tree LSTM [33] as a baseline.

Graph-to-sequence Graph convolution network (GCN, Kipf and Welling [27], 2016) and graph attention network (GAT, Veličković et al. [34], 2017) have been successfully applied in various tasks to obtain node embeddings. Every node updates its node embedding by aggregating information from its neighbors. There may be labeled relations or features on edges of the graph. Relations or edge features can be incorporated when aggregating information from neighbors [2,35,36] or calculating relevance weights between node pairs [37,38,39,40]. We adopt both strategies with our tailored relations for different node pairs.

3. Model

3.1. SQL Tree Construction

The entire node set $V$ of the constructed SQL tree is split into two categories: intermediate nodes $V^I$ and leaf nodes $V^L$. Intermediate nodes include three abstract concepts (SQL, TABLE and COLUMN), seven SQL-clause keywords (SELECT, WHERE, etc.) and binary operators (>, <, =, etc.), while leaf nodes contain unary operators, raw table names and column words, and placeholders for entity values (entity mentions such as “new york” are replaced with one special token during preprocessing, called delexicalization). With this partition, the node embeddings of these two types can be updated using different relational information.

Starting from the root node SQL, we firstly append the clause-level nodes as its children (see Figure 2). Then concept abstraction nodes, TABLE and COLUMN, and relevant operator nodes are accordingly attached to their parents. Next, for node COLUMN and TABLE, we append all the raw words, aggregators, and distinct flags as leaf nodes. Our SQL Tree consists of three levels (see Figure 3): clause level, schema level, and token level. Table 1 shows all types of nodes.

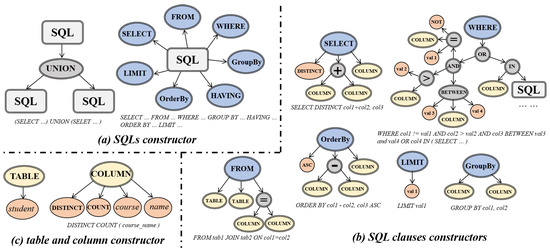

Figure 3.

SQL Tree construction procedure. (a) is clause level; (b) is schema level; (c) is token level.

Table 1.

Enumeration or examples of all types of nodes.

- First, SQL is divided into some clauses such as SELECT clause, WHERE clause, nested SQL clause and so on (see Figure 3a).

- Then, each clause is composed of several tables, columns, and some other binary operators. Considering that some table and column names have multiple tokens, we design two abstract nodes (TABLE and COLUMN) to address this problem (see Figure 3c). With these two abstract nodes, the clause nodes can be represented as shown in Figure 3b. Noticing that binary operators can be regarded as a relation between several nodes, we set them as intermediate nodes (parents of some children nodes).

- For other unary operators and tokens (table and column), we put them on leaves.
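The three construction steps above can be sketched as follows. This is a minimal illustration, not the authors' preprocessing code: the `Node` class, the helper names and the example query are hypothetical, and only a SELECT/FROM/WHERE query is handled.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    label: str
    children: List["Node"] = field(default_factory=list)

    def add(self, *kids: "Node") -> "Node":
        self.children.extend(kids)
        return self

    @property
    def is_leaf(self) -> bool:
        return not self.children


def column(*words: str) -> Node:
    """Schema level: abstract COLUMN node; token level: one leaf per raw word."""
    return Node("COLUMN").add(*(Node(w) for w in words))


def table(*words: str) -> Node:
    """Schema level: abstract TABLE node; token level: one leaf per raw word."""
    return Node("TABLE").add(*(Node(w) for w in words))


def build_example_tree() -> Node:
    # Hypothetical query: SELECT name FROM employee WHERE salary > val0
    select = Node("SELECT").add(column("name"))                 # clause level
    from_ = Node("FROM").add(table("employee"))                 # clause level
    where = Node("WHERE").add(Node(">").add(column("salary"), Node("val0")))
    return Node("SQL").add(select, from_, where)


def split_nodes(root: Node):
    """Pre-order traversal that separates intermediate nodes from leaf nodes."""
    intermediate, leaves = [], []
    stack = [root]
    while stack:
        node = stack.pop()
        (leaves if node.is_leaf else intermediate).append(node.label)
        stack.extend(reversed(node.children))
    return intermediate, leaves
```

The binary operator `>` is an intermediate node whose children are the compared column and the value placeholder, while the raw words and the placeholder end up as leaves.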

3.2. Encoder Overview

The input features include trainable embeddings for all nodes and relations. We use $X^L$ and $R^L$ to denote the set of leaf node embeddings and the relation matrix among leaf nodes. Symmetrically, $X^I$ and $R^I$ are defined for intermediate nodes.

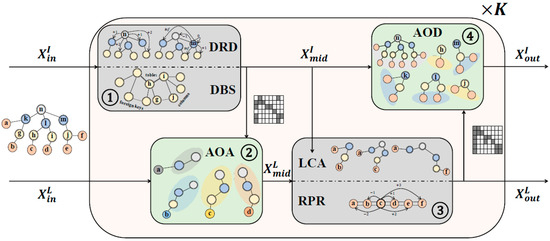

The encoder is composed of K stacked blocks, as illustrated in Figure 4. The main component is the relation-aware graph transformer (RGT), which takes as input the node embedding matrix $X$, the relation matrix $R$ and a relation function $E$ that extracts relation embeddings from $R$, and outputs the updated node matrix. Each block contains four modules: one RGT for intermediate nodes, one RGT for leaf nodes, and two cross-attention modules. In each block, the node embeddings $X^I$ (intermediate nodes) and $X^L$ (leaf nodes) are updated sequentially via self-attention and cross-attention. According to the dataflow in Figure 4, intermediate nodes are first updated by

$$X^I_{mid} = \mathrm{RGT}^I(X^I_{in}, R^I, E^I).$$

Figure 4.

Architecture of the encoder. DRD: directional relative depth. DBS: database schema. LCA: lowest common ancestor. RPR: relative position relation. AOD: attention over descendants. AOA: attention over ancestors. The dataflow is ordered by the number.

Then, leaf nodes attend to intermediate nodes and are updated with RGT,

$$X^L_{mid} = \mathrm{CrossAttn}^{I \to L}(X^L_{in}, X^I_{mid}), \qquad X^L_{out} = \mathrm{RGT}^L(X^L_{mid}, R^L, E^L).$$

Finally, intermediate nodes attend to leaf nodes as well,

$$X^I_{out} = \mathrm{CrossAttn}^{L \to I}(X^I_{mid}, X^L_{out}).$$

The subscripts in, mid and out are used to differentiate the inputs and outputs. Definitions of the relation embedding functions $E^I$ and $E^L$, the relation matrices $R^I$ and $R^L$, and the modules $\mathrm{CrossAttn}^{I \to L}$ and $\mathrm{CrossAttn}^{L \to I}$ will be elaborated later.
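The dataflow of one encoder block, as described in the text and the caption of Figure 4, can be sketched with placeholder modules. The function and argument names below are assumptions made for illustration; the four callables stand in for the real RGT and cross-attention modules and here do nothing but record the order in which they fire.

```python
def encoder_block(x_inter, x_leaf, rgt_inter, attend_ancestors, rgt_leaf, attend_descendants):
    """One encoder block; the four callables are placeholders for the real modules."""
    x_inter = rgt_inter(x_inter)                   # (1) RGT over intermediate nodes
    x_leaf = attend_ancestors(x_leaf, x_inter)     # (2) leaf nodes attend to intermediate nodes
    x_leaf = rgt_leaf(x_leaf, x_inter)             # (3) RGT over leaf nodes (LCA relations read x_inter)
    x_inter = attend_descendants(x_inter, x_leaf)  # (4) intermediate nodes attend to leaf nodes
    return x_inter, x_leaf


def encode(x_inter, x_leaf, blocks):
    """Apply the stacked blocks; `blocks` is a list of 4-tuples of callables."""
    for modules in blocks:
        x_inter, x_leaf = encoder_block(x_inter, x_leaf, *modules)
    return x_inter, x_leaf


def traced_run(num_blocks):
    """Run the encoder with identity stubs and return the order in which modules fire."""
    trace = []

    def stub(name):
        def module(x, *context):
            trace.append(name)
            return x
        return module

    block = (stub("RGT-I"), stub("AOA"), stub("RGT-L"), stub("AOD"))
    encode("X_I", "X_L", [block] * num_blocks)
    return trace
```

Calling `traced_run(1)` lists the four modules in the order in which one block applies them.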

3.3. Relation-Aware Graph Transformer

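We utilize Transformer [41] as the backbone of our model, which can be viewed as an instance of graph attention network (GAT, Veličković et al. [34], 2017) where the receptive field for each node is the entire node set. We view the SQL tree as a special graph. Assume the input graph is $G = (V, R)$, $V = \{v_i\}_{i=1}^{|V|}$, $R = \{r_{ij}\}_{i,j=1}^{|V|}$, where $V$ is the vertex set and $R$ is the relation matrix. Each node $v_i \in V$ has a randomly initialized embedding $x_i \in \mathbb{R}^{d}$. Shaw et al. [37] propose to incorporate the relative position between nodes $v_i$ and $v_j$ into the relevance score calculation and the context aggregation step. Similarly, we adapt this technique to our framework by introducing additional relational vectors. Mathematically, given the relation matrix $R$, we construct a relation embedding function $E$ to retrieve the feature vector $z_{ij} = E(r_{ij}) \in \mathbb{R}^{d/H}$ for relation $r_{ij}$. Then, the output embedding $y_i$ of node $v_i$ after one iteration layer is calculated via

$$
\begin{aligned}
e_{ij}^{h} &= \frac{(x_i W_Q^{h})(x_j W_K^{h} + z_{ij})^{\top}}{\sqrt{d/H}}, \\
\alpha_{ij}^{h} &= \mathrm{softmax}_{j}\big(e_{ij}^{h}\big), \\
\tilde{x}_i &= \big\Vert_{h=1}^{H} \sum_{j} \alpha_{ij}^{h}\,(x_j W_V^{h} + z_{ij}), \\
\tilde{y}_i &= \mathrm{LayerNorm}(x_i + \tilde{x}_i W_O), \\
y_i &= \mathrm{LayerNorm}\big(\tilde{y}_i + \mathrm{FFN}(\tilde{y}_i)\big),
\end{aligned}
$$

where $\mathrm{FFN}(\cdot)$ denotes a fully-connected layer, $\mathrm{LayerNorm}(\cdot)$ is the layer normalization trick [42], $\Vert$ represents vector concatenation, parameters $W_Q^{h}, W_K^{h}, W_V^{h} \in \mathbb{R}^{d \times d/H}$, $W_O \in \mathbb{R}^{d \times d}$, and $h$ is the multi-head index with $H$ heads in total. The relation embedding function $E$ is shared across different heads and multiple layers unless otherwise specified. For the convenience of discussion, we simplify the notation of our RGT encoding module into

$$Y = \mathrm{RGT}(X, R, E),$$

where $X = [x_1; \dots; x_{|V|}]$ represents the matrix of input embeddings for all nodes and $Y$ the matrix of output embeddings.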
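To make the role of the relation vectors concrete, the following is a minimal single-head sketch of relation-aware attention in the style of Shaw et al. [37], written in plain Python. It is a simplification under stated assumptions: the query, key and value projections are omitted (identity), and the residual connection, layer normalization and feed-forward layer are left out. All function names are hypothetical.

```python
import math


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def relation_aware_attention(x, z):
    """x: list of n node vectors (dim d); z[i][j]: relation vector (dim d) for the pair (i, j).

    Assumption: single head, identity projections. The relation vector is added
    to the key when scoring and to the value when aggregating.
    """
    n, d = len(x), len(x[0])
    outputs, weights = [], []
    for i in range(n):
        scores = [dot(x[i], add(x[j], z[i][j])) / math.sqrt(d) for j in range(n)]
        alpha = softmax(scores)
        out = [0.0] * d
        for j in range(n):
            value = add(x[j], z[i][j])
            out = [o + alpha[j] * v for o, v in zip(out, value)]
        outputs.append(out)
        weights.append(alpha)
    return outputs, weights
```

With all relation vectors set to zero this reduces to ordinary dot-product attention; a non-zero `z[i][j]` shifts how strongly node `i` attends to node `j`.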

3.4. Relations among Intermediate Nodes

As for intermediate nodes, we consider two types of relations: database schema (DBS) and directional relative depth (DRD). DBS considers the relations among the abstract concepts TABLE and COLUMN. In total, we define 11 relations, which are a subset of the relations proposed in Wang et al. [39]. For example, if nodes $v_i$ and $v_j$ are of type COLUMN and they belong to the same table according to the database schema, the relation $r^{dbs}_{ij}$ is Same-Table. Table 2 shows the complete list of DBS relations. Mathematically,

$$z^{dbs}_{ij} = E^{dbs}(r^{dbs}_{ij}),$$

where the relation embedding function $E^{dbs}$ maps the relation category $r^{dbs}_{ij}$ into a trainable vector $z^{dbs}_{ij}$.

Table 2.

Database schema relation (refer to Wang et al. [39]).

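With the assistance of the underlying directed SQL tree, we can build another relation matrix $R^{drd}$ to indicate the accessibility and relative depth difference between two intermediate nodes $v_i$ and $v_j$. Let $d(v_i)$ indicate the depth of node $v_i$, e.g., the depth of the root SQL node is 1 (see Figure 4). Given the maximum depth difference D,

$$
r^{drd}_{ij} =
\begin{cases}
\mathrm{clamp}\big(d(v_i) - d(v_j), -D, D\big) & \text{if } v_i = v_j \text{ or one node is an ancestor of the other}, \\
\mathrm{inf} & \text{otherwise},
\end{cases}
\qquad z^{drd}_{ij} = E^{drd}(r^{drd}_{ij}),
$$

where $E^{drd}$ is the relation embedding module with $2D + 2$ entries. One special entry represents the inaccessibility inf.

The complete relation embedding function $E^I$ and relation matrix $R^I$ for intermediate nodes merge database schema and directional relative depth together,

$$
r^{I}_{ij} = r^{dbs}_{ij} \oplus r^{drd}_{ij}, \qquad E^{I}(r^{I}_{ij}) = \mathrm{Affine}\big([\,E^{dbs}(r^{dbs}_{ij});\, E^{drd}(r^{drd}_{ij})\,]\big),
$$

where the affine transformation $\mathrm{Affine}(\cdot)$ is used to fuse relation features from the two perspectives and $\oplus$ means the combination of relations.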
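The directional relative depth can be computed from parent pointers alone, as the sketch below shows. It rests on assumptions not fixed by the text: the sign convention (depth of the first node minus depth of the second), the treatment of a node paired with itself as accessible, and the row layout of the embedding table. The toy tree and all names are hypothetical.

```python
INF = "inf"  # marker for node pairs that are not connected by an ancestor path


def depth(parent, node):
    """Depth of `node`; the root has depth 1, as in the text."""
    d = 1
    while parent[node] is not None:
        node = parent[node]
        d += 1
    return d


def is_ancestor_or_self(parent, a, b):
    """True if `a` equals `b` or lies on the path from `b` to the root."""
    while b is not None:
        if b == a:
            return True
        b = parent[b]
    return False


def directional_relative_depth(parent, vi, vj, max_diff):
    if not (is_ancestor_or_self(parent, vi, vj) or is_ancestor_or_self(parent, vj, vi)):
        return INF
    diff = depth(parent, vi) - depth(parent, vj)   # assumed sign convention
    return max(-max_diff, min(max_diff, diff))     # clip to [-D, D]


def relation_index(relation, max_diff):
    """Row in an embedding table with 2D + 2 entries; the last row is reserved for INF."""
    return 2 * max_diff + 1 if relation == INF else relation + max_diff


# Hypothetical intermediate nodes of a small SQL tree, stored as child -> parent.
PARENT = {"SQL": None, "SELECT": "SQL", "WHERE": "SQL", ">": "WHERE", "COLUMN": ">"}
```

Sibling clauses such as SELECT and WHERE are mutually inaccessible, so their pair maps to the special entry rather than to a depth difference of zero.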

3.5. Relations among Leaf Nodes

Leaf nodes mainly consist of raw words, plus a few unary operators as modifiers. Gathering all these nodes into a sequence $S$ following their original order in the SQL query, we can obtain the relative position relation (RPR) among these leaf nodes. Assume the position of node $v_i$ in $S$ is indexed by $p_i$ and D is the pre-defined maximum distance; the relation feature for nodes $v_i$ and $v_j$ is defined as

$$r^{rpr}_{ij} = \mathrm{clamp}(p_i - p_j, -D, D), \qquad z^{rpr}_{ij} = E^{rpr}(r^{rpr}_{ij}).$$

Actually, $E^{rpr}$ stores a parameter matrix with $2D + 1$ rows, one per clipped offset, for retrieval. Tokens in the same clause will cluster together in the sequence $S$. Intuitively, $r^{rpr}_{ij}$ with a smaller absolute value will capture the previously mentioned intra-clause relations.
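The clipped relative position is straightforward to compute. The sketch below assumes the offset is taken as the position of the first node minus that of the second, and that the embedding table is indexed by shifting the clipped offset into a non-negative range; both are illustrative choices, and the function names are hypothetical.

```python
def relative_position(p_i, p_j, max_dist):
    """Clipped offset between two leaf positions (assumed convention: p_i - p_j)."""
    return max(-max_dist, min(max_dist, p_i - p_j))


def rpr_matrix(num_leaves, max_dist):
    """Relation matrix over leaf nodes laid out in their original SQL order."""
    return [
        [relative_position(i, j, max_dist) for j in range(num_leaves)]
        for i in range(num_leaves)
    ]


def embedding_row(relation, max_dist):
    """Shift a relation in [-D, D] to a row index in [0, 2D] of the embedding table."""
    return relation + max_dist
```

For four leaves and a maximum distance of 2, the first row of the matrix is `[0, -1, -2, -2]`: the last two leaves fall into the same clipped bucket.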

Furthermore, we take into account the structure of the SQL tree. Let $\mathrm{lca}(v_i, v_j)$ denote the lowest common ancestor of leaf nodes $v_i$ and $v_j$ in the SQL tree. The relation feature is computed via

$$z^{lca}_{ij} = E^{lca}\big(\mathrm{lca}(v_i, v_j)\big).$$

The relation embedding function $E^{lca}$ simply extracts the current node embedding of the intermediate node $\mathrm{lca}(v_i, v_j)$ from the intermediate node embedding matrix $X^I$ and transforms it into the relation feature dimension through a trainable linear layer. The relation between remote leaf nodes is reflected by the common ancestor node.

The complete relation embedding function $E^L$ for leaf nodes is constructed by combining both the flattened (RPR) and tree-structured (LCA) relations.
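Finding the lowest common ancestor of two leaves only requires walking up the parent pointers. The sketch below uses a hypothetical fragment modelled on the example discussed for Figure 2 (month and salary under one COLUMN node, val0 under the operator >); node identifiers such as `COLUMN#1` are made up to keep keys unique.

```python
def ancestors(parent, node):
    """Ancestors of `node`, nearest first (the node itself is excluded)."""
    chain = []
    node = parent[node]
    while node is not None:
        chain.append(node)
        node = parent[node]
    return chain


def lowest_common_ancestor(parent, a, b):
    """First ancestor of `b` (walking upwards) that is also an ancestor of `a`."""
    seen = set(ancestors(parent, a))
    for candidate in ancestors(parent, b):
        if candidate in seen:
            return candidate
    return None


# Hypothetical tree fragment, stored as child -> parent.
PARENT = {
    "SQL": None,
    "WHERE": "SQL",
    ">": "WHERE",
    "COLUMN#1": ">",
    "month": "COLUMN#1",
    "salary": "COLUMN#1",
    "val0": ">",
}
```

In this fragment the two words of the same column meet at their COLUMN node, whereas month and val0 meet one level higher at the operator node, which matches the example given earlier in the text.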

3.6. Cross-Attention between Leaf and Intermediate Nodes

Module $\mathrm{CrossAttn}^{L \to I}$ collects features from leaf nodes $X^L$ to intermediate nodes $X^I$, such that semantic information can flow into the structural node representations of $X^I$. For each intermediate node $v_i$, it calculates the attention vector over leaf nodes. Rather than attending to all the leaf nodes (attention over full nodes, AOF), $v_i$ only cares about its descendants determined by the SQL tree. We call this strategy attention over descendants (AOD). Let $\mathcal{D}(v_i)$ be the set of leaf nodes that are descendants of intermediate node $v_i$, the update equation for intermediate node $v_i$ is

where is trainable parameters.

Similarly, module $\mathrm{CrossAttn}^{I \to L}$ collects features from intermediate nodes $X^I$ to leaf nodes $X^L$, such that the semantic information can be organized with reference to the structural information. Rather than attending to all the intermediate nodes, each leaf node only cares about its ancestors in the SQL tree. We call this strategy attention over ancestors (AOA), similar to AOD.
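Both strategies amount to restricting which nodes may receive attention weight. The following sketch derives the allowed sets from parent pointers and applies them in a masked softmax; it does not reproduce the paper's update equation, and the tree fragment, raw scores and function names are hypothetical.

```python
import math


def ancestors(parent, node):
    """Ancestors of `node`, nearest first."""
    chain = []
    node = parent[node]
    while node is not None:
        chain.append(node)
        node = parent[node]
    return chain


def aoa_sets(parent, leaves):
    """Attention over ancestors: intermediate nodes each leaf may attend to."""
    return {leaf: set(ancestors(parent, leaf)) for leaf in leaves}


def aod_sets(parent, leaves):
    """Attention over descendants: leaf nodes each intermediate node may attend to."""
    descendants = {}
    for leaf in leaves:
        for anc in ancestors(parent, leaf):
            descendants.setdefault(anc, set()).add(leaf)
    return descendants


def masked_softmax(scores, allowed):
    """scores: dict node -> raw score. Nodes outside `allowed` receive weight 0."""
    kept = {n: s for n, s in scores.items() if n in allowed}
    top = max(kept.values())
    exps = {n: math.exp(s - top) for n, s in kept.items()}
    total = sum(exps.values())
    return {n: exps.get(n, 0.0) / total for n in scores}


# Hypothetical tree fragment, stored as child -> parent.
PARENT = {
    "SQL": None, "WHERE": "SQL", ">": "WHERE", "COLUMN#1": ">",
    "month": "COLUMN#1", "salary": "COLUMN#1", "val0": ">",
}
LEAVES = ["month", "salary", "val0"]
```

Under AOD the COLUMN node ignores val0 however large its raw score is, whereas attention over full nodes (AOF) would let that score dominate.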

3.7. Decoder

After obtaining the final node embeddings of intermediate and leaf nodes, we apply an LSTM-based [43] sequential decoder with a copy mechanism [44] to generate the natural language sentence. Representations of the raw table and column words in leaf nodes are extracted for a direct copy before decoding. Placeholders for entities such as val0 are replaced with the corresponding nouns (called lexicalization) during post-processing. Specifically, we distinguish intermediate nodes from leaf nodes to capture the semantic and structural information differently. Given the final node embeddings $X^I$, $X^L$, the initial hidden state is

where is a function of transforming into . In particular,

For each time step t, we get the context vectors and , respectively.

where is the same as the cross attention mentioned in Section 3.6.

Afterward, the concatenation of the context vectors and previous hidden state is fed into the next step.

Considering there are many low-frequency words, we incorporate copy mechanism into the decoder. We use and to denote the generation probability and copy probability of , respectively. Let denote the probability of generating a word at time t. is the final output probability of . Then,

where , , and are trainable parameters.
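As an illustration of how a generation distribution and a copy distribution can be combined, the sketch below uses the common pointer-generator style mixture: a gate weighs the vocabulary distribution against the attention-based copy distribution over source tokens. This is one standard formulation and an assumption here; the decoder's exact equations and parameterization are not reproduced. All names and numbers are hypothetical.

```python
def mix_generate_and_copy(p_gen, vocab_probs, copy_probs, source_tokens):
    """Final word distribution as a gated mixture of generating and copying.

    p_gen:         probability of generating from the vocabulary at this step
    vocab_probs:   dict word -> probability under the generation distribution
    copy_probs:    copy probability for each source position (sums to 1)
    source_tokens: token at each source position (e.g., table and column words)
    """
    final = {word: p_gen * p for word, p in vocab_probs.items()}
    for token, p in zip(source_tokens, copy_probs):
        # A token that occurs at several positions accumulates its copy mass.
        final[token] = final.get(token, 0.0) + (1.0 - p_gen) * p
    return final


# Hypothetical step: "stadium" is absent from the vocabulary but present in the SQL.
EXAMPLE = mix_generate_and_copy(
    p_gen=0.6,
    vocab_probs={"the": 0.5, "name": 0.5},
    copy_probs=[0.75, 0.25],
    source_tokens=["stadium", "name"],
)
```

The low-frequency word receives probability only through the copy path, which is the motivation for the mechanism stated in the text.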

4. Experiments

4.1. Dataset

WikiSQL We conduct experiments on WikiSQL with the latest version (The size of the latest version is 7019 less than used by [15]). SQLs in WikiSQL only contain SELECT and WHERE clauses with a short length. We utilize the official train/dev/test splits, ensuring each table only appears in a single split. This setup requires the model to generalize to unseen tables during inference.

Spider We also use Spider, a much more complex dataset. SQLs in Spider are much longer and the data size is much smaller compared to WikiSQL. Furthermore, some other complex grammars such as JOIN, HAVING and nested SQLs are also involved in Spider. Thus, the task on Spider is much more difficult. Considering the test split is not public, we only use the train and dev splits.

The statistics of the two datasets are illustrated in Table 3.

Table 3.

Statistics for WikiSQL and Spider.

4.2. Experiment Setup

Hyper parameters All our code is implemented in PyTorch [45]. We utilize the Adam [46] optimizer to train our models with a learning rate of . The batch size is 32 for WikiSQL and 16 for Spider. Other hyperparameters can be found in Table 4. Note that the numbers of RGT layers for leaf nodes and intermediate nodes may not be identical. The update cycle (K) is equal to the minimum layer number. For example, if the RGT for intermediate nodes has 6 layers and the RGT for leaf nodes has 3 layers, K is 3: two RGT layers encode the intermediate nodes, and a single RGT layer encodes the leaf nodes in each cycle. The motivation is that the structure of SQLs in Spider is complex and vital. Thus, the intermediate nodes (the structural part of SQL) require more layers to encode. To ensure fairness, all our embeddings (nodes and relations) are initialized randomly (the same as all baselines). We can also initialize all token embeddings (leaf nodes) with pre-trained vectors (e.g., GloVe [47] and BERT [48]) to further boost the performance.
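The relation between the layer counts and the update cycle can be written down in a few lines. The sketch assumes that both layer counts are divisible by K, which holds for the 6 and 3 layer example in the text but is not stated as a general requirement; the function name is hypothetical.

```python
def layers_per_cycle(inter_layers, leaf_layers):
    """Return (K, intermediate layers per cycle, leaf layers per cycle)."""
    k = min(inter_layers, leaf_layers)
    if inter_layers % k or leaf_layers % k:
        # Assumption of this sketch: layers are spread evenly over the K cycles.
        raise ValueError("layer counts must be divisible by the update cycle K")
    return k, inter_layers // k, leaf_layers // k
```

For 6 intermediate layers and 3 leaf layers this gives K = 3 with two intermediate layers and one leaf layer per cycle.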

Table 4.

Hyper parameters for our model on WikiSQL and Spider.

Metric We use BLEU-4 [49] and NIST [50] as automatic metrics. Each SQL has a single reference in WikiSQL. In Spider, most SQLs have two references because many SQLs correspond to two different natural language expressions. However, these metrics have two limitations: (1) the results may fluctuate considerably, and (2) BLEU-4 cannot fully evaluate the quality of the generated text. To alleviate the fluctuation of results, we run all our experiments 5 times with different random seeds. All results are obtained from the mteval-v14.pl (https://github.com/moses-smt/mosesdecoder/blob/master/scripts/generic/mteval-v14.pl, accessed on 9 November 2021) script. Furthermore, we conduct a human evaluation on Spider to compare our model with the strongest baseline.

Data preprocessing For WikiSQL, we omit the FROM clause since all SQLs are only related to a single table. For Spider, we replace the table alias with its original name and remove the AS grammar. Additionally, the questions are delexicalized as mentioned before.
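Delexicalization and its inverse can be sketched as a pair of string substitutions. This is a simplified illustration that assumes the entity mentions are already known and appear verbatim in the text; the placeholder naming follows the val0 token used in the paper's examples, while the function names and the sample question are hypothetical.

```python
def delexicalize(text, mentions):
    """Replace each entity mention with a placeholder val0, val1, ..."""
    mapping = {}
    for index, mention in enumerate(mentions):
        placeholder = f"val{index}"
        text = text.replace(mention, placeholder)
        mapping[placeholder] = mention
    return text, mapping


def lexicalize(text, mapping):
    """Restore the original mentions during post-processing."""
    # Longer placeholders first, so that val10 is not mistaken for val1 followed by 0.
    for placeholder in sorted(mapping, key=len, reverse=True):
        text = text.replace(placeholder, mapping[placeholder])
    return text
```

For instance, `delexicalize("which flights leave from new york ?", ["new york"])` yields the text `"which flights leave from val0 ?"` together with the mapping needed to restore it.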

4.3. Baselines

For all baselines, the same attention-based [51] LSTM decoder with a copy mechanism is utilized, where only the schema-dependent items (table and column tokens) will be copied.

BiLSTM The encoder is a BiLSTM encoder with SQL sequences as input. We report both results with and without a copy mechanism for this baseline.

TreeLSTM The encoder is a Child-Sum TreeLSTM encoder [33] with our SQL Tree as input.

Transformer We investigate the effect of position embedding on the transformer. Specifically, we consider transformer encoder without position embedding, with absolute position embedding [41] and relative position embedding [37].

GCN/GAT Regarding the SQL Tree as a graph, we can employ Graph Neural Networks (GNN), such as Graph Convolutional Network (GCN) and Graph Attention Network (GAT). Additionally, we rerun the code of Xu et al. [15] (https://github.com/IBM/SQL-to-Text, accessed on 10 November 2020).

4.4. Main Results

Table 5 shows the main results, including Seq2Seq baselines, Graph2Seq baselines and our model. Our relation-aware graph transformer (RGT) outperforms all baseline models on both WikiSQL and Spider in both BLEU and NIST. In particular, RGT outperforms the strongest baseline, the transformer with relative position, in BLEU and NIST on both Spider and WikiSQL, indicating the effectiveness of our model.

Table 5.

Main results for all models. Bold means the best result.

We observe that the GCN does not perform well compared to other baselines. GCN only considers the structure of the graph without any special relations between nodes. We also notice that the transformer with relative position works well even though it only considers the relative position relation. This finding encourages us to consider more relations beyond the structure.

4.5. Ablation Study

To investigate the influence of relations and cross attention, we conduct two ablation studies, respectively. All our ablation studies are conducted on Spider.

Relation ablation In Table 6, pruning any relation leads to lower performance, indicating that all relations introduced to RGT are reasonable. Specifically, relations among leaf nodes seem more important, verifying the motivation of strengthening relations among semantic SQL tokens (column, table, and so on). We explain the effects of four relations as follows:

Table 6.

Relation ablation. The upper part is to investigate relations among intermediate nodes and the lower is among leaf nodes. Bold means the best result.

- structural relations: Both DBS (DataBase Schema) and DRD (Directional Relative Depth) strengthen the structural representation, but they work differently. DBS captures relations in the database schema, such as relations between two tables, between a table and a column, and so on. DRD captures the hierarchical structure of the SQL. For example (see Figure 2), both the DESC node and the COLUMN node (the two rightmost abstract nodes) are descendants of the OrderBy node. To express the hierarchy, we incorporate direction into DRD.

- semantic relations: Both LCA (Lowest Common Ancestor) and RPR (Relative Position Relation) enhance the semantic representation. For instance (see Figure 2), with RPR the model can recognize that month and salary are close and may belong to the same column or table. With LCA, the model can then confirm that they belong to the same column.

Cross attention ablation To investigate how the cross attention mechanism affects the performance, we apply different combinations of attention strategies in cross attention, namely attention over descendants (AOD), attention over ancestors (AOA), attention over full nodes (AOF) and no attention (None). Table 7 shows the experimental results.

Table 7.

Cross attention ablation. The combination is in the format of intermediate to leaf + leaf to intermediate such as AOD + AOA. None symbol means no attention. For leaf nodes, AOF means attending all intermediate nodes. Similarly, AOF means attending all leaf nodes for intermediate nodes. Bold means the best result.

AOD + AOA works best, consistent with our expectations. We regard cross attention as a balancing problem. AOF can capture all kinds of relations but may introduce more noise (information from less related nodes), while None loses some vital information. For example, AOD + None performs better than AOD + AOF, which means that in this case AOF introduces more noise. Besides, AOD + None outperforms None + None, indicating that ignoring all relations leads to poorer performance. In this task, we choose AOD + AOA as our attention strategy, which can capture relations among different types of nodes without introducing too much noise.

4.6. Human Evaluation

We randomly select 100 samples (∼20%) from the dev set of Spider to conduct the human evaluation. For the SQL-to-text task, we should evaluate the correctness and fluency of the generation. To assess the correctness, we recruited two CS students familiar with SQL to score generations. They were first asked to select the better one for correctness from two generations. Furthermore, we asked them to objectively count the number of correct generation for aggregator (MIN, MAX and so on), column (column in SQL) and operator (+, -, DESC, IN and so on). Then, we calculated the metrics (precision, recall, and f1), respectively. Additionally, we asked three native English speakers to evaluate the fluency and grammar correctness. Our model is evaluated against the strongest baseline (transformer with relative position). The results are illustrated in Table 8. The lower part of Table 8 shows the percentage of choosing the generation as more correct (line correctness) or fluent (line fluency), and the percentage of a generation being chosen both correct and fluent (line both). From the evaluation result, we can conclude that our model can generate more correct sentences with a comparable fluency.

Table 8.

Human evaluation for our model (RGT) and transformer with relative position (REL). Bold means the best result.

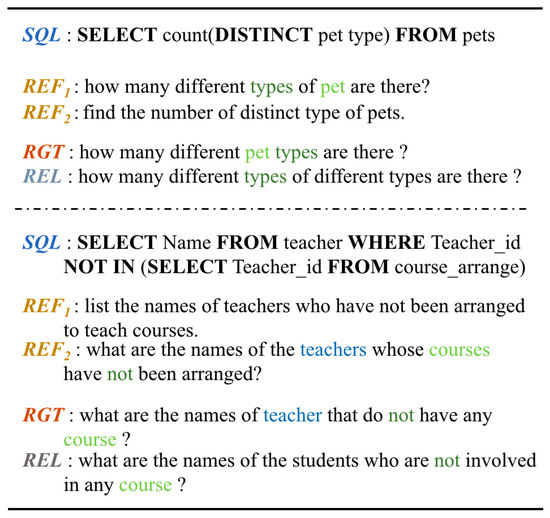

4.7. Case Study

We show two examples generated by our model RGT and the transformer with relative position (Figure 5). For the first example, both models generate the type correctly, but the baseline fails to generate the pet. Our model strengthens relations among tokens in one column, so the pet in the SQL is a strong signal. For the second example, the baseline generates a more fluent sentence. There is a grammatical error in the generation of our model (teacher is not correct), but teacher matches the teacher token in the SQL. This phenomenon indicates that our model is more concerned with the relations among nodes. These two cases are consistent with the conclusion of our human evaluation.

Figure 5.

Case study: REF is the reference; REL is transformer with relative position; RGT is our model.

5. Conclusions

In this paper, we propose a relation-aware graph transformer (RGT) for complex SQL-to-Text generation. When learning the representation of each token in a SQL, multiple relations are considered in our model. Extensive experiments on two datasets WikiSQL and Spider show that our proposed model outperforms strong baselines including Seq2Seq models and Graph2Seq models.

There are two lines of work we can pursue in the future. First, we can apply our SQL-to-text model to augment text and SQL pairs and thereby boost the performance of text-to-SQL models. In detail, we can handcraft rules to produce SQL templates. Based on these templates, a large number of SQL queries can be generated automatically, and our SQL-to-text model then transforms them into texts. These augmented text and SQL pairs can help train the text-to-SQL model. Second, we can extend our method to a more general task, e.g., code-to-text. Our model is suited to encoding the abstract syntax tree of a programming language.

Author Contributions

Conceptualization, D.M. and L.C.; data curation, D.M.; formal analysis, Z.C.; supervision, Z.C., L.C. and K.Y.; writing—original draft, D.M., R.C. and X.C.; writing—review and editing, L.C. and K.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data supporting the conclusions of this article are available at https://github.com/salesforce/WikiSQL and https://yale-lily.github.io/spider (accessed on 10 November 2020).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Liang, P. Learning executable semantic parsers for natural language understanding. Commun. ACM 2016, 59, 68–76.

- Sorokin, D.; Gurevych, I. Modeling semantics with gated graph neural networks for knowledge base question answering. arXiv 2018, arXiv:1808.04126.

- Livowsky, J.M. Natural Language Interface for Searching Database. U.S. Patent 6,598,039, 22 July 2003.

- Shwartz, S.; Fratarcangeli, C.; Cullingford, R.E.; Aimi, G.S.; Strasburger, D.P. Database Retrieval System Having a Natural Language Interface. U.S. Patent 5,197,005, 23 March 1993.

- Nihalani, N.; Silakari, S.; Motwani, M. Natural language interface for database: A brief review. Int. J. Comput. Sci. Issues (IJCSI) 2011, 8, 600.

- Guo, D.; Sun, Y.; Tang, D.; Duan, N.; Yin, J.; Chi, H.; Cao, J.; Chen, P.; Zhou, M. Question Generation from SQL Queries Improves Neural Semantic Parsing. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 1597–1607.

- Wu, K.; Wang, L.; Li, Z.; Zhang, A.; Xiao, X.; Wu, H.; Zhang, M.; Wang, H. Data Augmentation with Hierarchical SQL-to-Question Generation for Cross-domain Text-to-SQL Parsing. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Online and Punta Cana, Dominican Republic, 7–11 November 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 8974–8983.

- Cao, R.; Chen, L.; Chen, Z.; Zhao, Y.; Zhu, S.; Yu, K. LGESQL: Line Graph Enhanced Text-to-SQL Model with Mixed Local and Non-Local Relations. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, Online, 2–4 August 2021; pp. 2541–2555.

- Chen, Z.; Chen, L.; Li, H.; Cao, R.; Ma, D.; Wu, M.; Yu, K. Decoupled Dialogue Modeling and Semantic Parsing for Multi-Turn Text-to-SQL. arXiv 2021, arXiv:2106.02282.

- Cao, R.; Zhu, S.; Liu, C.; Li, J.; Yu, K. Semantic Parsing with Dual Learning. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 51–64.

- Chen, Z.; Chen, L.; Zhao, Y.; Cao, R.; Xu, Z.; Zhu, S.; Yu, K. ShadowGNN: Graph Projection Neural Network for Text-to-SQL Parser. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; Association for Computational Linguistics: Stroudsburg, PA, USA, 2021; pp. 5567–5577.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2014; pp. 3104–3112.

- Iyer, S.; Konstas, I.; Cheung, A.; Zettlemoyer, L. Summarizing source code using a neural attention model. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; pp. 2073–2083.

- Xu, K.; Wu, L.; Wang, Z.; Feng, Y.; Witbrock, M.; Sheinin, V. Graph2seq: Graph to sequence learning with attention-based neural networks. arXiv 2018, arXiv:1804.00823.

- Xu, K.; Wu, L.; Wang, Z.; Feng, Y.; Sheinin, V. SQL-to-Text Generation with Graph-to-Sequence Model. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 2–4 November 2018; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 931–936.

- Scarselli, F.; Gori, M.; Tsoi, A.C.; Hagenbuchner, M.; Monfardini, G. The graph neural network model. IEEE Trans. Neural Netw. 2008, 20, 61–80.

- Zhong, V.; Xiong, C.; Socher, R. Seq2sql: Generating structured queries from natural language using reinforcement learning. arXiv 2017, arXiv:1709.00103.

- Yu, T.; Zhang, R.; Yang, K.; Yasunaga, M.; Wang, D.; Li, Z.; Ma, J.; Li, I.; Yao, Q.; Roman, S.; et al. Spider: A large-scale human-labeled dataset for complex and cross-domain semantic parsing and text-to-sql task. arXiv 2018, arXiv:1809.08887.

- Gatt, A.; Krahmer, E. Survey of the State of the Art in Natural Language Generation: Core tasks, applications and evaluation. J. Artif. Intell. Res. 2018, 61, 65–170.

- Gardent, C.; Shimorina, A.; Narayan, S.; Perez-Beltrachini, L. The WebNLG Challenge: Generating Text from RDF Data. In Proceedings of the 10th International Conference on Natural Language Generation, Santiago de Compostela, Spain, 4–7 September 2017; Association for Computational Linguistics: Stroudsburg, PA, USA, 2017; pp. 124–133.

- Novikova, J.; Dušek, O.; Rieser, V. The E2E Dataset: New Challenges For End-to-End Generation. In Proceedings of the 18th Annual SIGdial Meeting on Discourse and Dialogue, Saarbrücken, Germany, 15–17 August 2017; Association for Computational Linguistics: Stroudsburg, PA, USA, 2017; pp. 201–206.

- Banarescu, L.; Bonial, C.; Cai, S.; Georgescu, M.; Griffitt, K.; Hermjakob, U.; Knight, K.; Koehn, P.; Palmer, M.; Schneider, N. Abstract Meaning Representation for Sembanking. In Proceedings of the 7th Linguistic Annotation Workshop and Interoperability with Discourse, Sofia, Bulgaria, 8–9 August 2013; Association for Computational Linguistics: Stroudsburg, PA, USA, 2013; pp. 178–186.

- Konstas, I.; Iyer, S.; Yatskar, M.; Choi, Y.; Zettlemoyer, L. Neural AMR: Sequence-to-Sequence Models for Parsing and Generation. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vancouver, BC, Canada, 30 July–4 August 2017; Association for Computational Linguistics: Stroudsburg, PA, USA, 2017; pp. 146–157.

- Castro Ferreira, T.; Calixto, I.; Wubben, S.; Krahmer, E. Linguistic realisation as machine translation: Comparing different MT models for AMR-to-text generation. In Proceedings of the 10th International Conference on Natural Language Generation, Santiago de Compostela, Spain, 4–7 September 2017; Association for Computational Linguistics: Stroudsburg, PA, USA, 2017; pp. 1–10.

- Marcheggiani, D.; Perez-Beltrachini, L. Deep Graph Convolutional Encoders for Structured Data to Text Generation. In Proceedings of the 11th International Conference on Natural Language Generation, Tilburg, The Netherlands, 5–8 November 2018; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 1–9.

- Beck, D.; Haffari, G.; Cohn, T. Graph-to-Sequence Learning using Gated Graph Neural Networks. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 16–18 July 2018; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 273–283.

- Kipf, T.N.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. In Proceedings of the 5th International Conference on Learning Representations, ICLR ’17, Toulon, France, 24–26 April 2017.

- Koncel-Kedziorski, R.; Bekal, D.; Luan, Y.; Lapata, M.; Hajishirzi, H. Text Generation from Knowledge Graphs with Graph Transformers. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 3–5 June 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 2284–2293.

- Xu, X.; Liu, C.; Song, D. Sqlnet: Generating structured queries from natural language without reinforcement learning. arXiv 2017, arXiv:1711.04436.

- Koutrika, G.; Simitsis, A.; Ioannidis, Y.E. Explaining structured queries in natural language. In Proceedings of the 2010 IEEE 26th International Conference on Data Engineering (ICDE 2010), Long Beach, CA, USA, 1–6 March 2010; pp. 333–344.

- Ngonga Ngomo, A.C.; Bühmann, L.; Unger, C.; Lehmann, J.; Gerber, D. Sorry, i don’t speak SPARQL: Translating SPARQL queries into natural language. In Proceedings of the 22nd International Conference on World Wide Web, Rio de Janeiro, Brazil, 13–17 May 2013; pp. 977–988.

- Eriguchi, A.; Hashimoto, K.; Tsuruoka, Y. Tree-to-sequence attentional neural machine translation. arXiv 2016, arXiv:1603.06075.

- Tai, K.S.; Socher, R.; Manning, C.D. Improved semantic representations from tree-structured long short-term memory networks. arXiv 2015, arXiv:1503.00075.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- Schlichtkrull, M.; Kipf, T.N.; Bloem, P.; Van Den Berg, R.; Titov, I.; Welling, M. Modeling relational data with graph convolutional networks. In European Semantic Web Conference; Springer: Heraklion, Crete, Greece, 2018; pp. 593–607.

- De Cao, N.; Aziz, W.; Titov, I. Question answering by reasoning across documents with graph convolutional networks. arXiv 2018, arXiv:1808.09920.

- Shaw, P.; Uszkoreit, J.; Vaswani, A. Self-attention with relative position representations. arXiv 2018, arXiv:1803.02155.

- Xiao, F.; Li, J.; Zhao, H.; Wang, R.; Chen, K. Lattice-based transformer encoder for neural machine translation. arXiv 2019, arXiv:1906.01282.

- Wang, B.; Shin, R.; Liu, X.; Polozov, O.; Richardson, M. Rat-sql: Relation-aware schema encoding and linking for text-to-sql parsers. arXiv 2019, arXiv:1911.04942.

- Li, X.; Yan, H.; Qiu, X.; Huang, X. FLAT: Chinese NER Using Flat-Lattice Transformer. arXiv 2020, arXiv:2004.11795.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; MIT Press: Long Beach, CA, USA, 2017; pp. 5998–6008.

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- See, A.; Liu, P.J.; Manning, C.D. Get to the point: Summarization with pointer-generator networks. arXiv 2017, arXiv:1704.04368.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems; Vancouver Convention Center: Vancouver, BC, Canada, 2019; pp. 8026–8037.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Pennington, J.; Socher, R.; Manning, C. GloVe: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 26–28 October 2014; Association for Computational Linguistics: Stroudsburg, PA, USA, 2014; pp. 1532–1543.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 29–31 July 2019; Association for Computational Linguistics: Stroudsburg, PA, USA, 2019; pp. 4171–4186.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 8–10 July 2002; pp. 311–318.

- Wołk, K.; Koržinek, D. Comparison and adaptation of automatic evaluation metrics for quality assessment of re-speaking. arXiv 2016, arXiv:1601.02789.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).