Real-Time Physical Prototyping Tool Design Based on Shape-Changing Display

Abstract

1. Introduction

2. Related Work

2.1. Advancements in Prototyping Tools

2.2. Advancements in SCD

3. Shape-Changing Display for Real-Time Prototyping

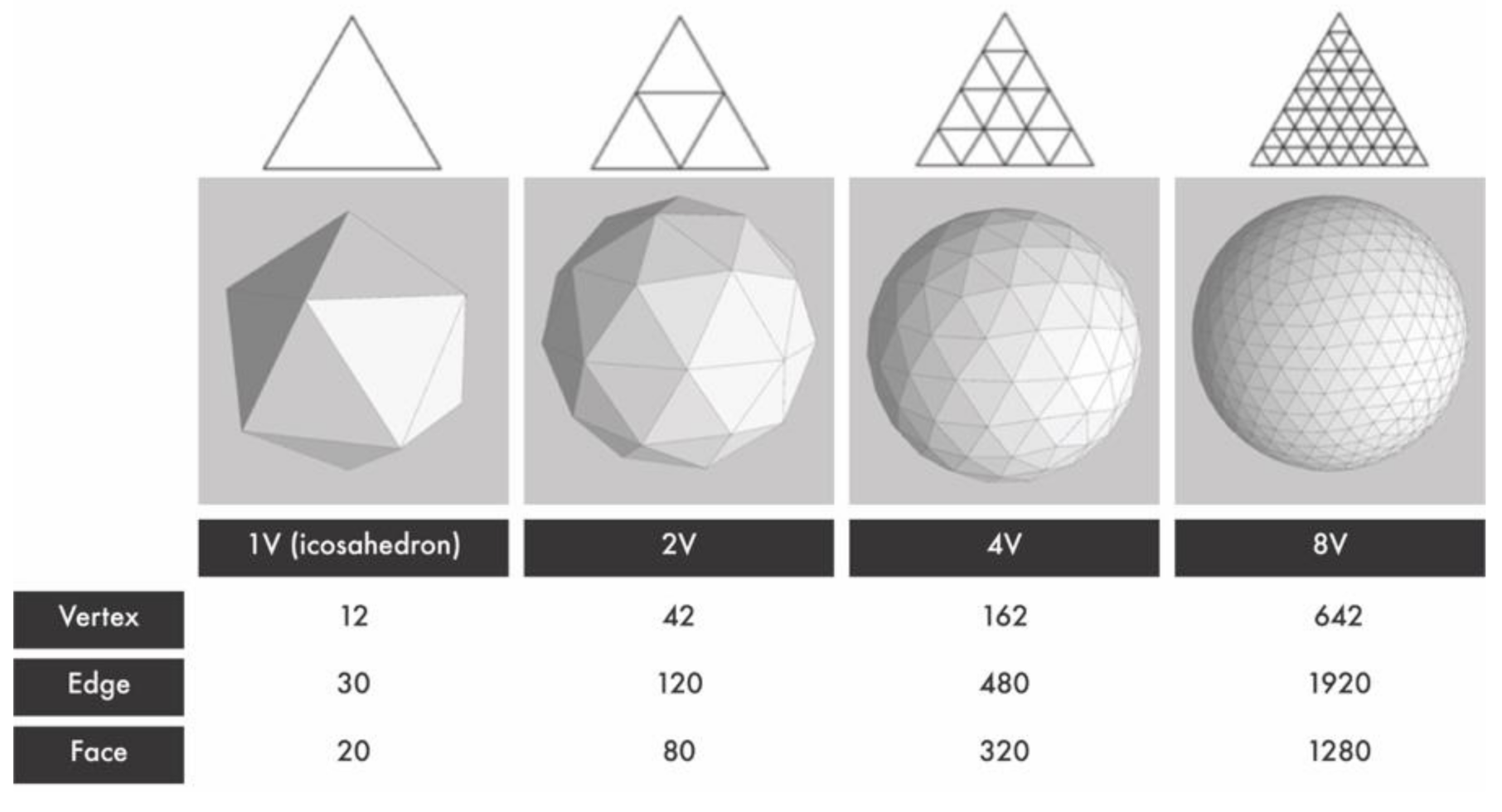

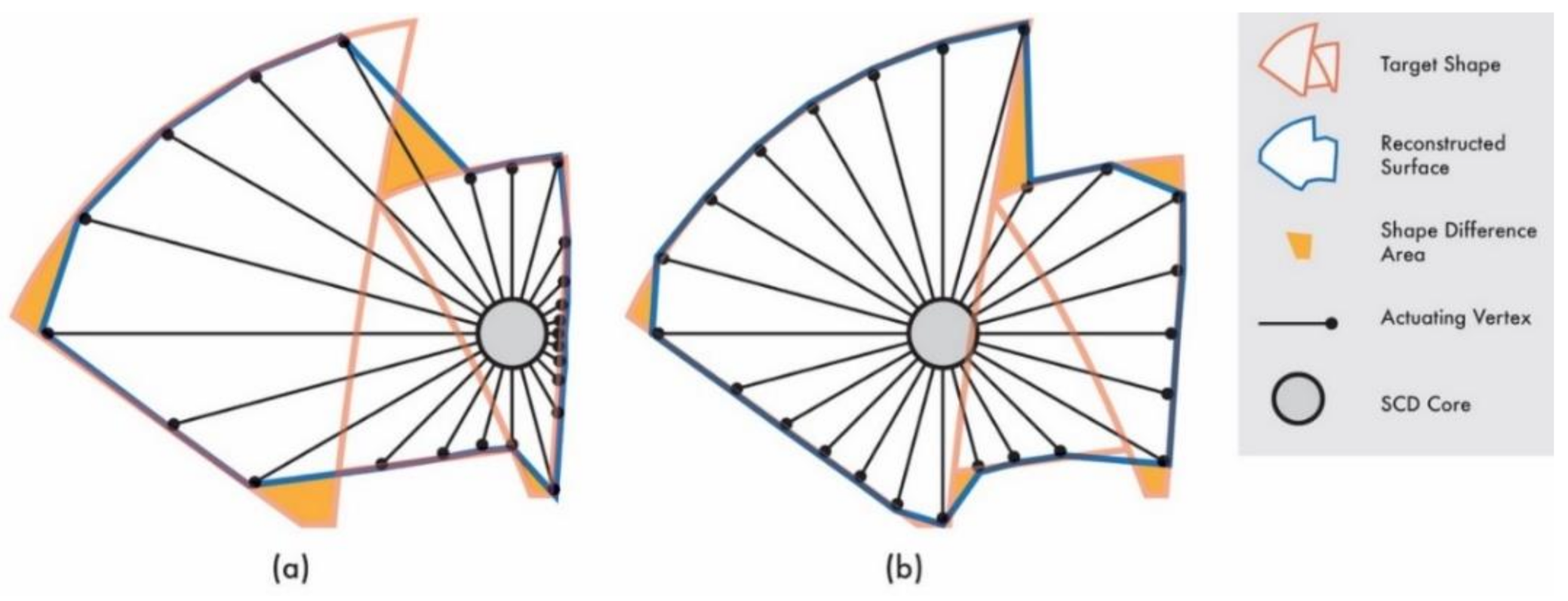

3.1. Tessellation

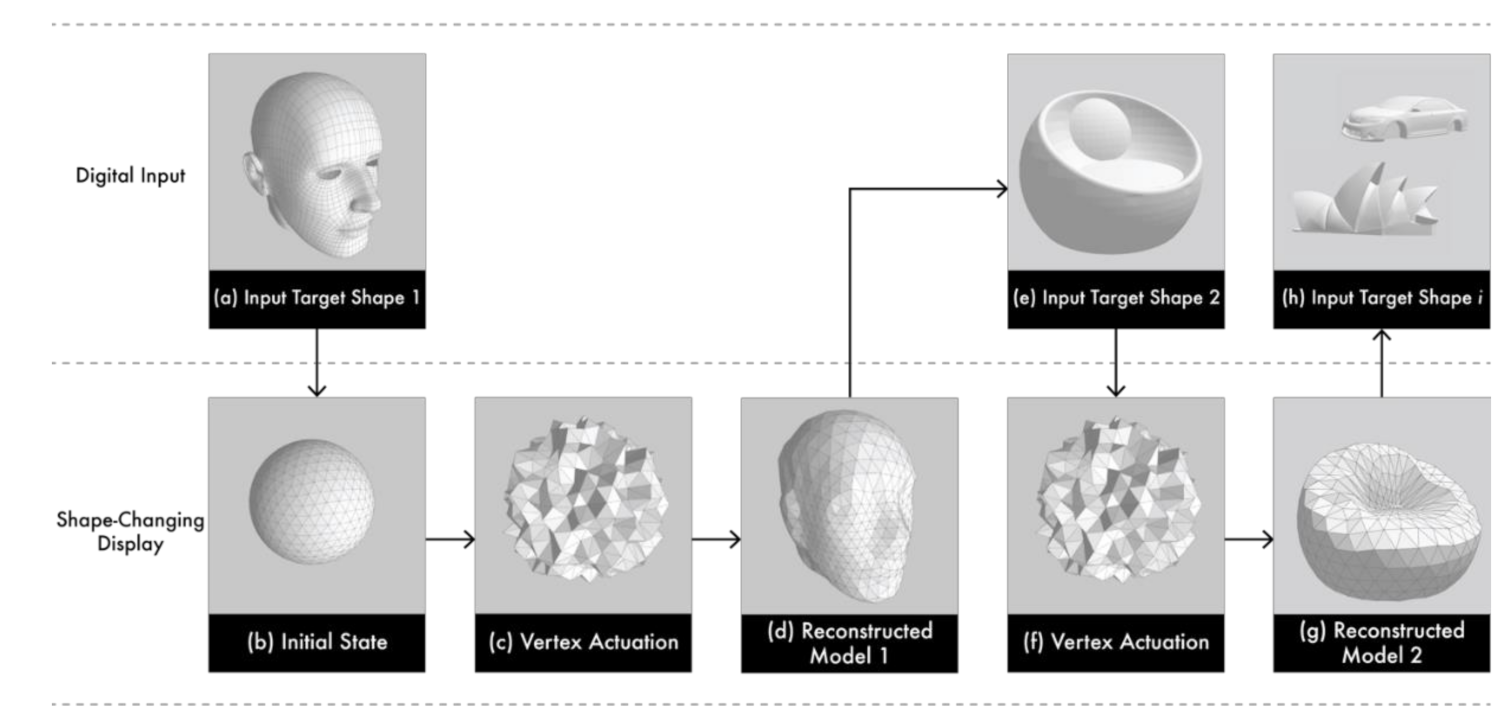

3.2. Shape Reconstruction and Optimization

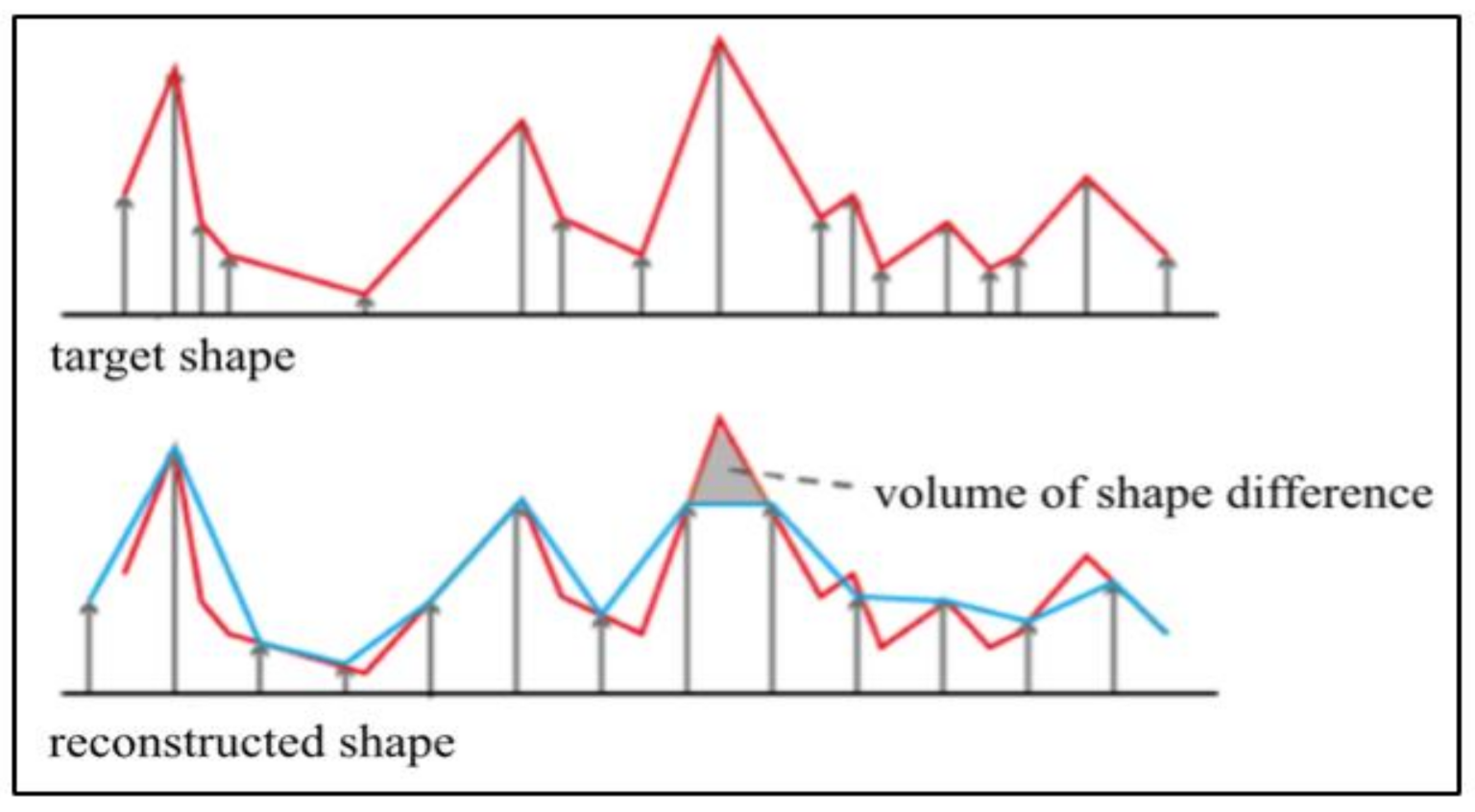

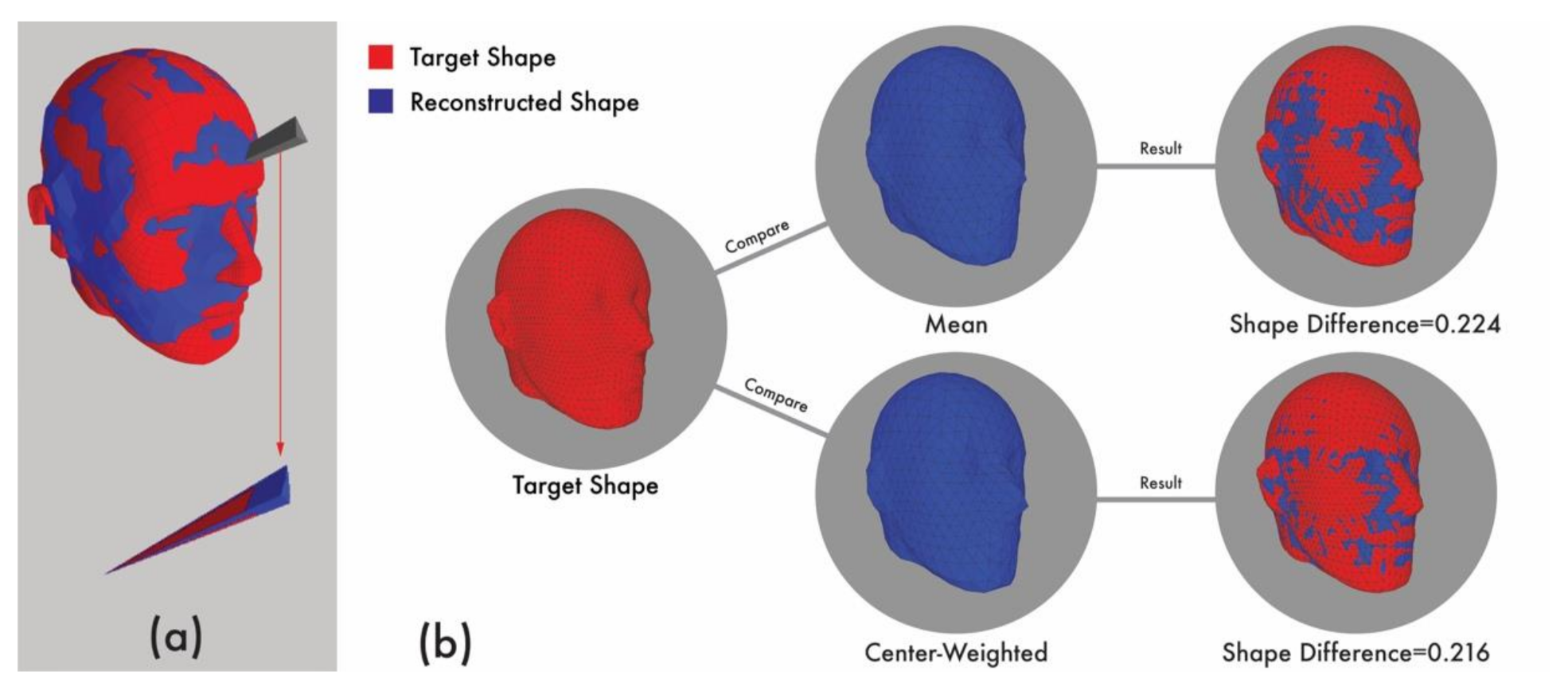

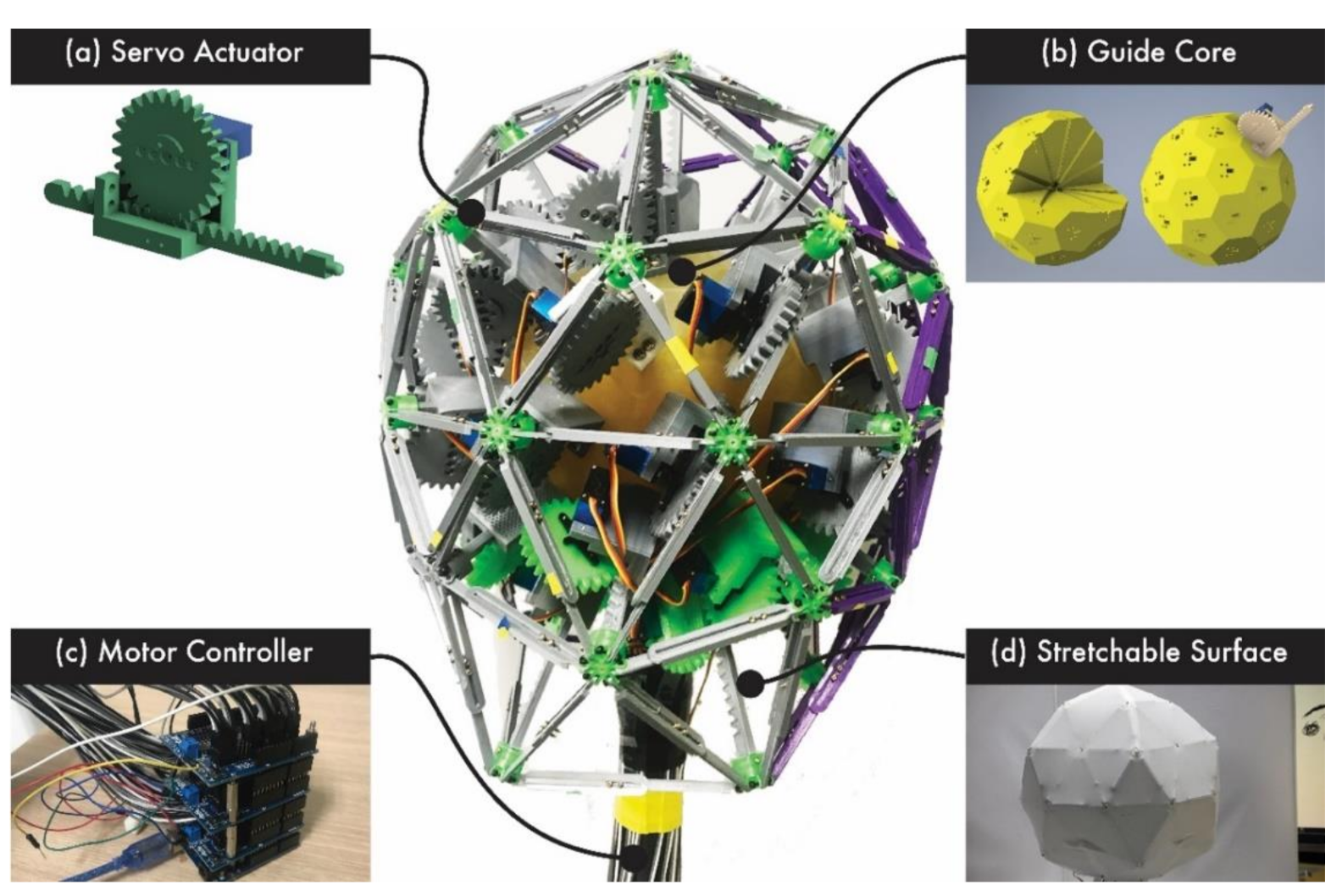

3.2.1. Shape Reconstruction and Evaluation

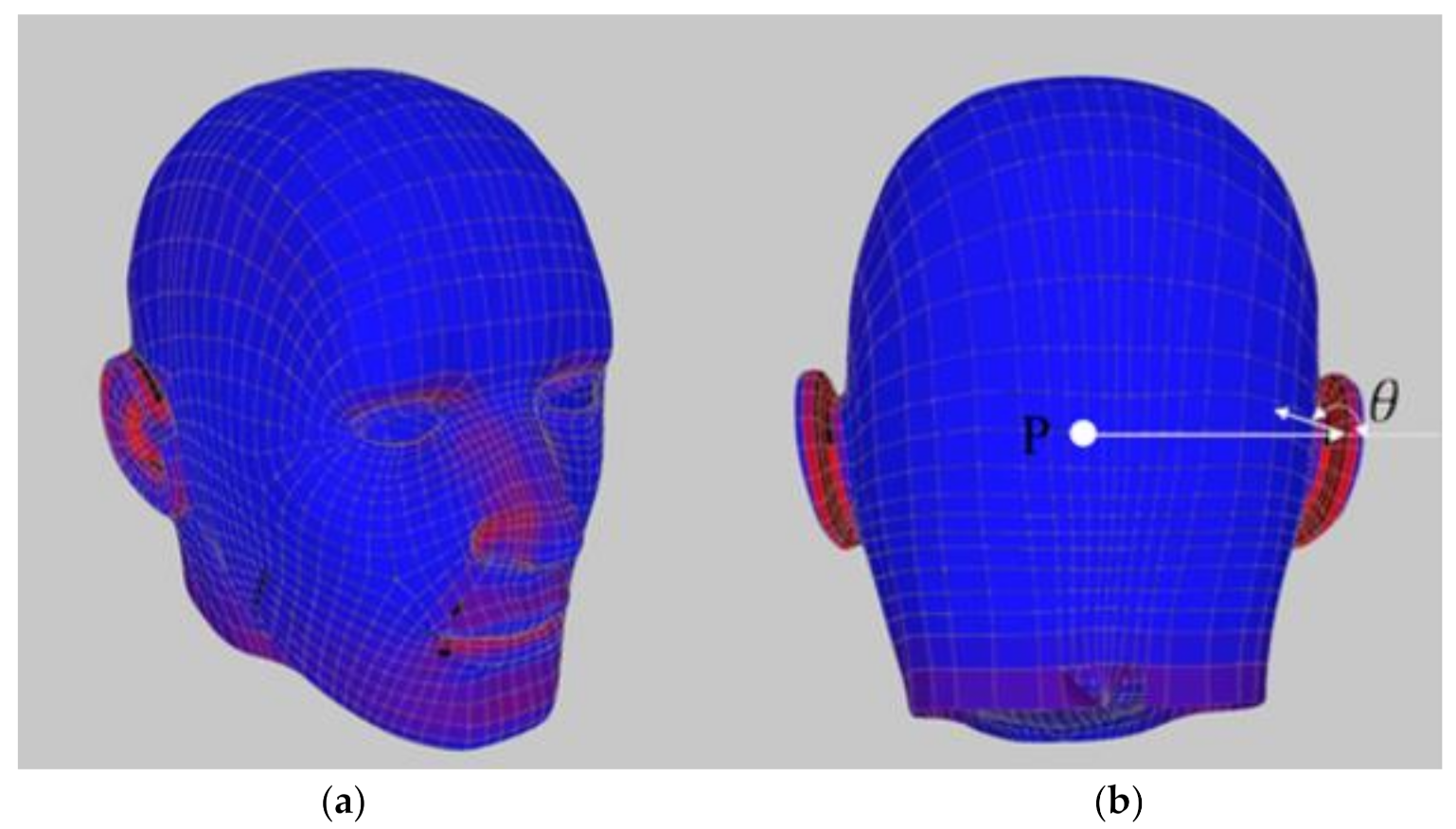

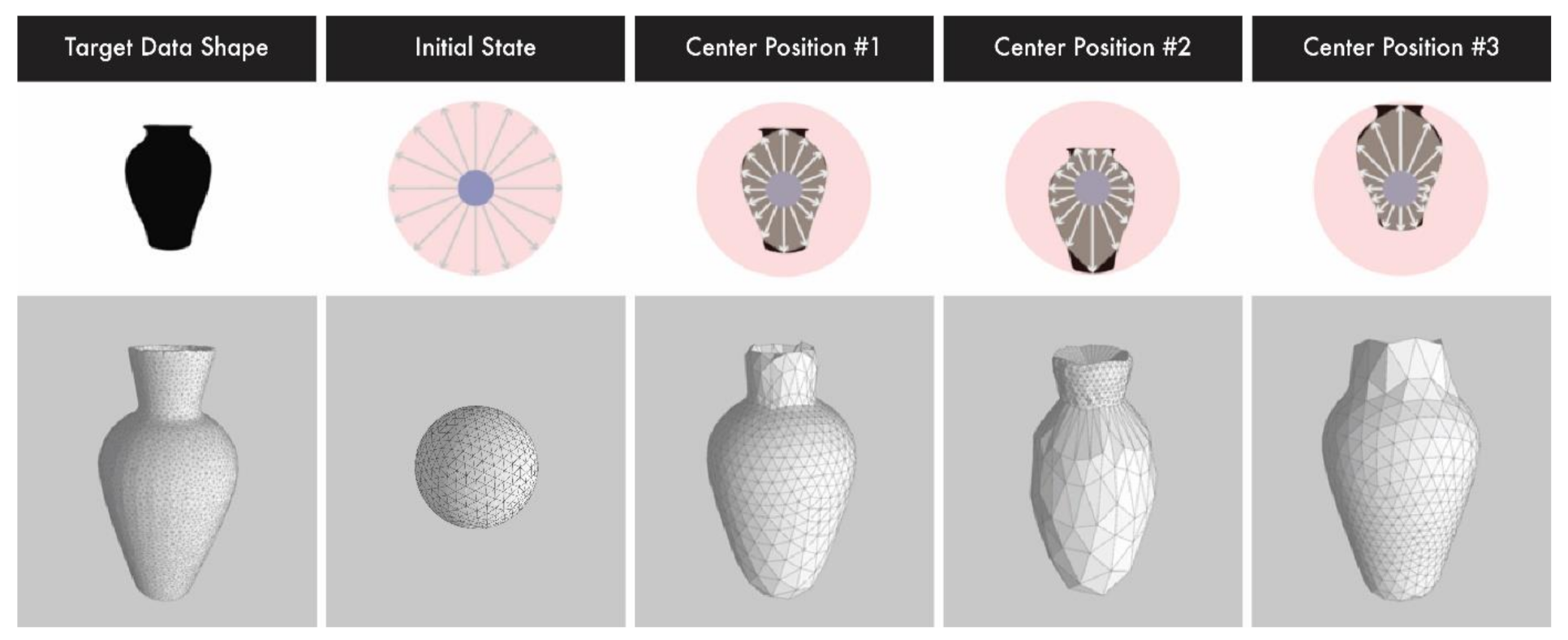

3.2.2. Actuator Center-Positioning Algorithm and Additional Weight

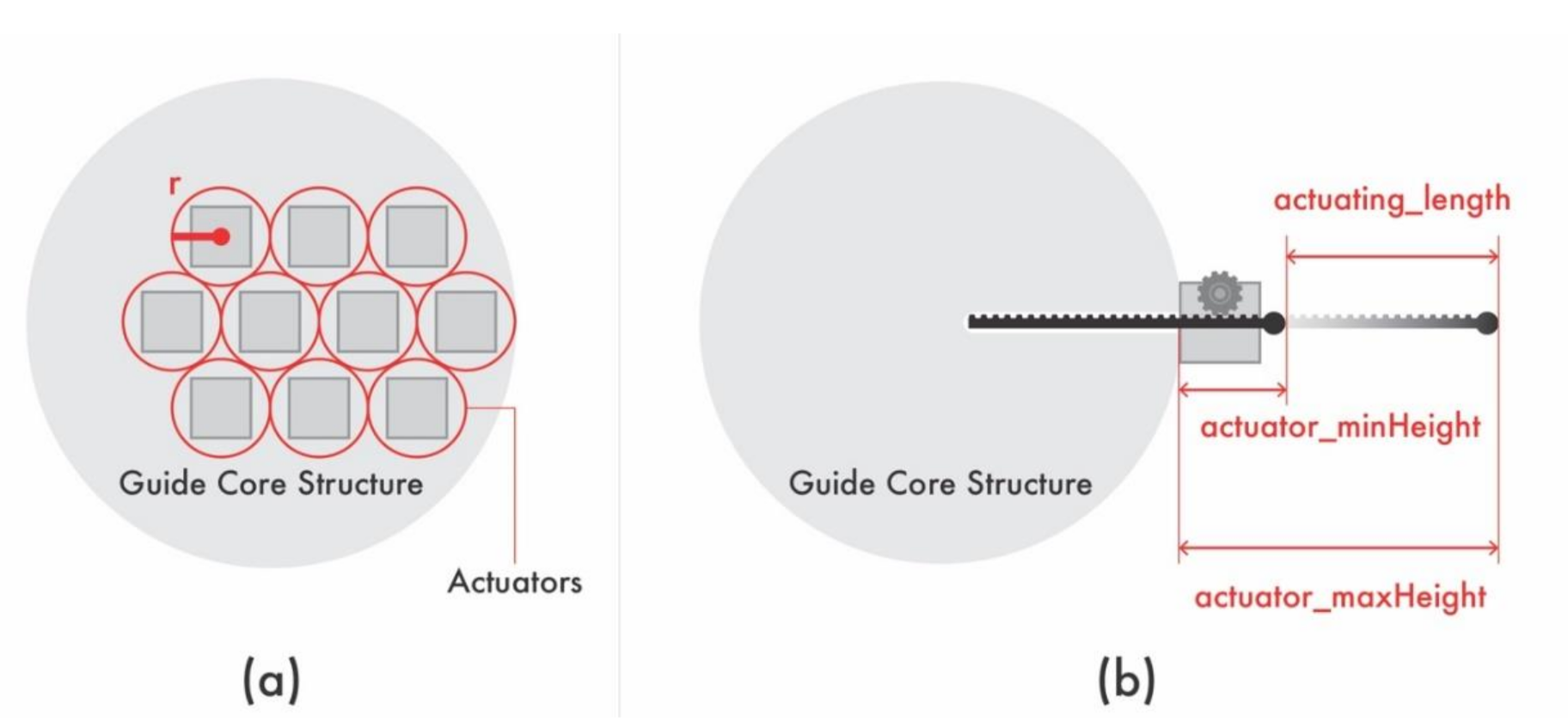

3.3. Shape-Changing Display Hardware Design

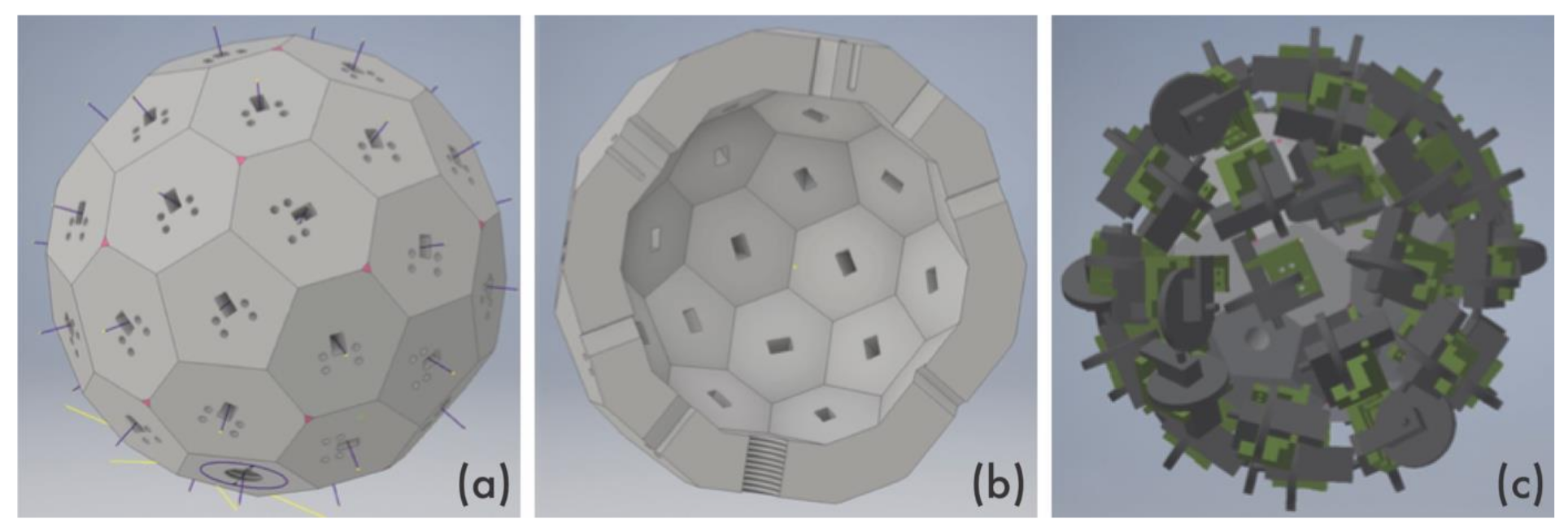

3.3.1. Guide Core Design

3.3.2. Surface Design

4. Results and Discussion

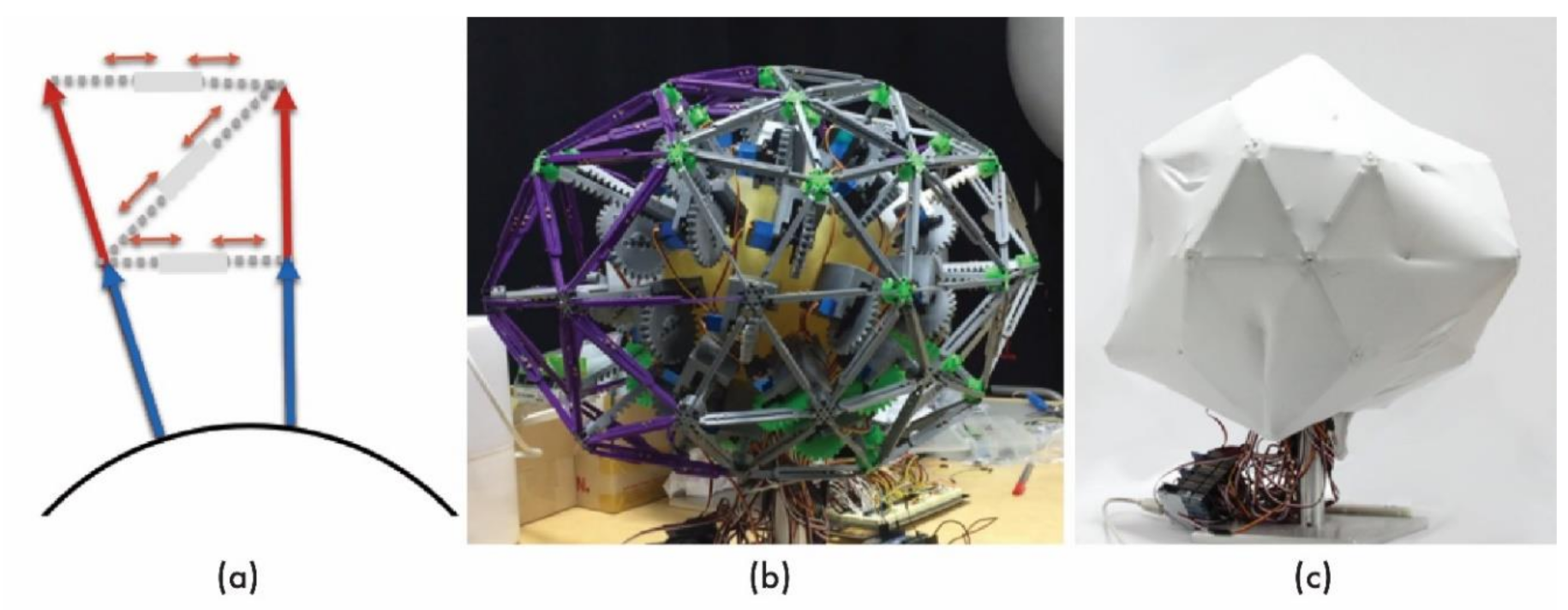

4.1. Shape-Changing Display Blueprint

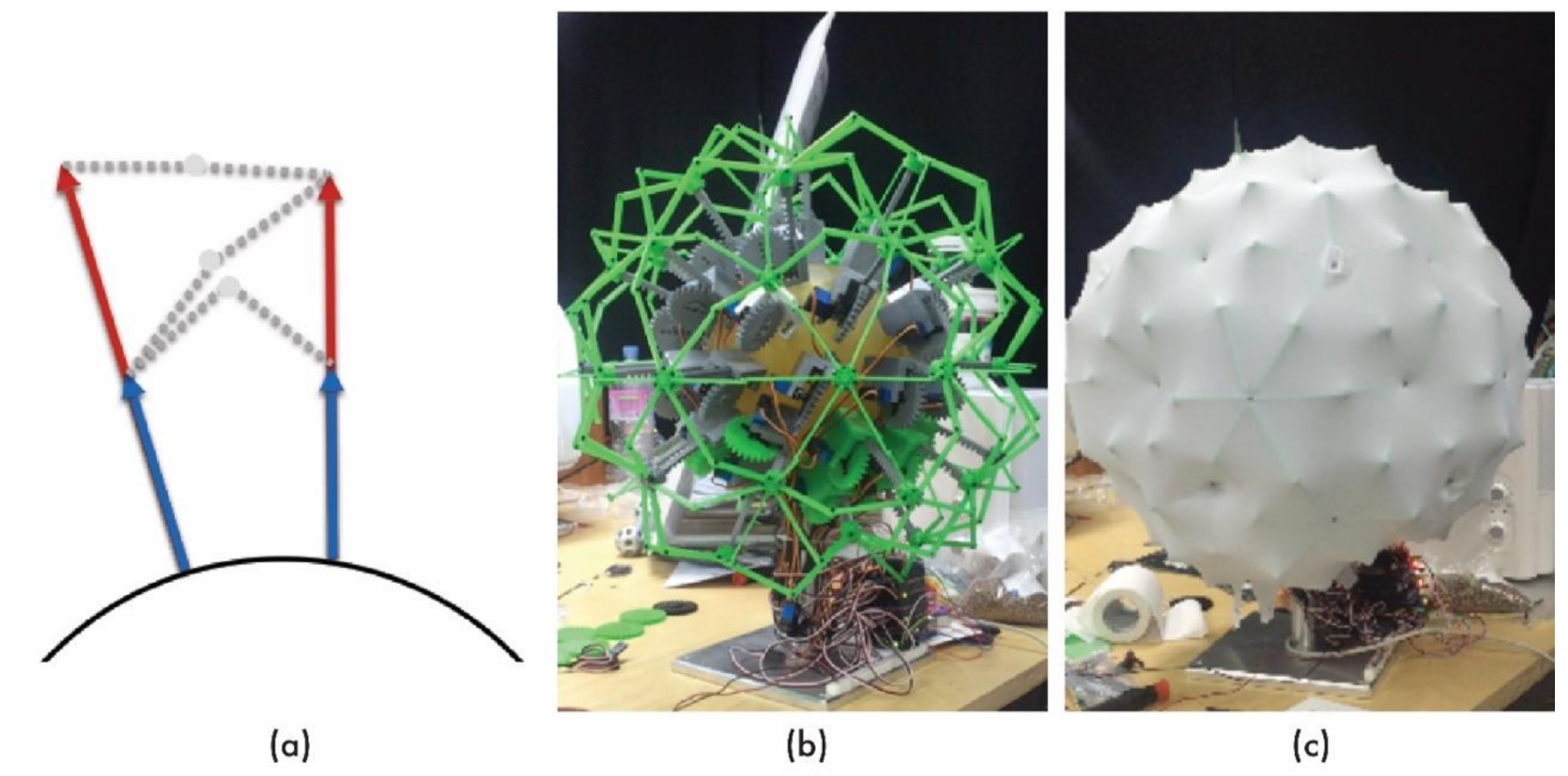

4.1.1. Linear Actuating Pins

4.1.2. Guide Core

4.1.3. Motor Controller

4.1.4. Stretchable Surface

4.1.5. Discussion

4.2. Validation Experiments

4.2.1. Experiment 1: SCD Shape Difference Measurements

4.2.2. Experiment 2: Shape Resolution Robustness

4.2.3. Experiment 3: Shape Reconstruction Expressiveness

4.2.4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| | (A) | (B) | (C) |
|---|---|---|---|
| Target Shapes |  |  |  |
| 3D-Scanned SCD Constructing the Target Shape |  |  |  |
| Shape Difference Value | 0.060 | 0.035 | 0.144 |

| | Right-Quarter View | Front View | Left-Quarter View |
|---|---|---|---|
| Ground Truth |  |  |  |
| 2V Icosphere (Vertices: 42, Edges: 120, Faces: 80), Shape Difference = 0.592 |  |  |  |
| 4V Icosphere (Vertices: 162, Edges: 480, Faces: 320), Shape Difference = 0.302 |  |  |  |
| 8V Icosphere (Vertices: 642, Edges: 1920, Faces: 1280), Shape Difference = 0.224 |  |  |  |
| 16V Icosphere (Vertices: 2562, Edges: 7680, Faces: 5120), Shape Difference = 0.173 |  |  |  |
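The vertex, edge, and face counts listed for each icosphere agree with the standard closed-form counts for an icosahedron whose 20 triangular faces are each split into n² smaller triangles: V = 10n² + 2, E = 30n², F = 20n². The sketch below reproduces the tabulated counts under the assumption that "nV" denotes subdivision frequency n. The function name `icosphere_counts` is illustrative and does not come from the paper.

```python
from typing import Tuple


def icosphere_counts(frequency: int) -> Tuple[int, int, int]:
    """Return (vertices, edges, faces) for a frequency-n icosphere.

    Assumes each of the 20 icosahedron faces is divided into
    frequency**2 triangles (hypothetical reading of the "nV" label).
    """
    if frequency < 1:
        raise ValueError("frequency must be a positive integer")
    t = frequency * frequency
    vertices = 10 * t + 2
    edges = 30 * t
    faces = 20 * t
    return vertices, edges, faces


if __name__ == "__main__":
    for n in (2, 4, 8, 16):
        v, e, f = icosphere_counts(n)
        # Euler's formula for a closed, sphere-like mesh: V - E + F = 2.
        assert v - e + f == 2
        print(f"{n}V icosphere: vertices={v}, edges={e}, faces={f}")
```

Each doubling of the frequency roughly quadruples the number of vertices, while the reported shape difference falls more slowly (0.592, 0.302, 0.224, 0.173), which is worth keeping in mind when reading the resolution comparison.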

| Ground Truth | Mean | Center-Weight | Center Positioning (Fewer Iterations) | Center Positioning (More Iterations) | Additional Weight |
|---|---|---|---|---|---|
| Bumpy Cube (Shape Difference, 1/original volume) | 0.065 | 0.035 |  |  |  |
| Rabbit | 0.150 | 0.105 | 0.111 | 0.118 | 0.107 |
| Cow | 0.219 | 0.147 | 0.148 | 0.158 | 0.151 |
| Face | 0.057 | 0.038 | 0.036 | 0.036 | 0.052 |
| Chair | 0.083 | 0.057 | 0.078 | 0.076 | 0.054 |
| Car | 0.140 | 0.092 | 0.091 | 0.091 | 0.091 |
| Building | 0.161 | 0.110 | 0.110 | 0.110 | 0.110 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ban, S.; Hyun, K.H. Real-Time Physical Prototyping Tool Design Based on Shape-Changing Display. Appl. Sci. 2021, 11, 4181. https://doi.org/10.3390/app11094181
