Virtual Reality as a Reflection Technique for Public Speaking Training

Abstract

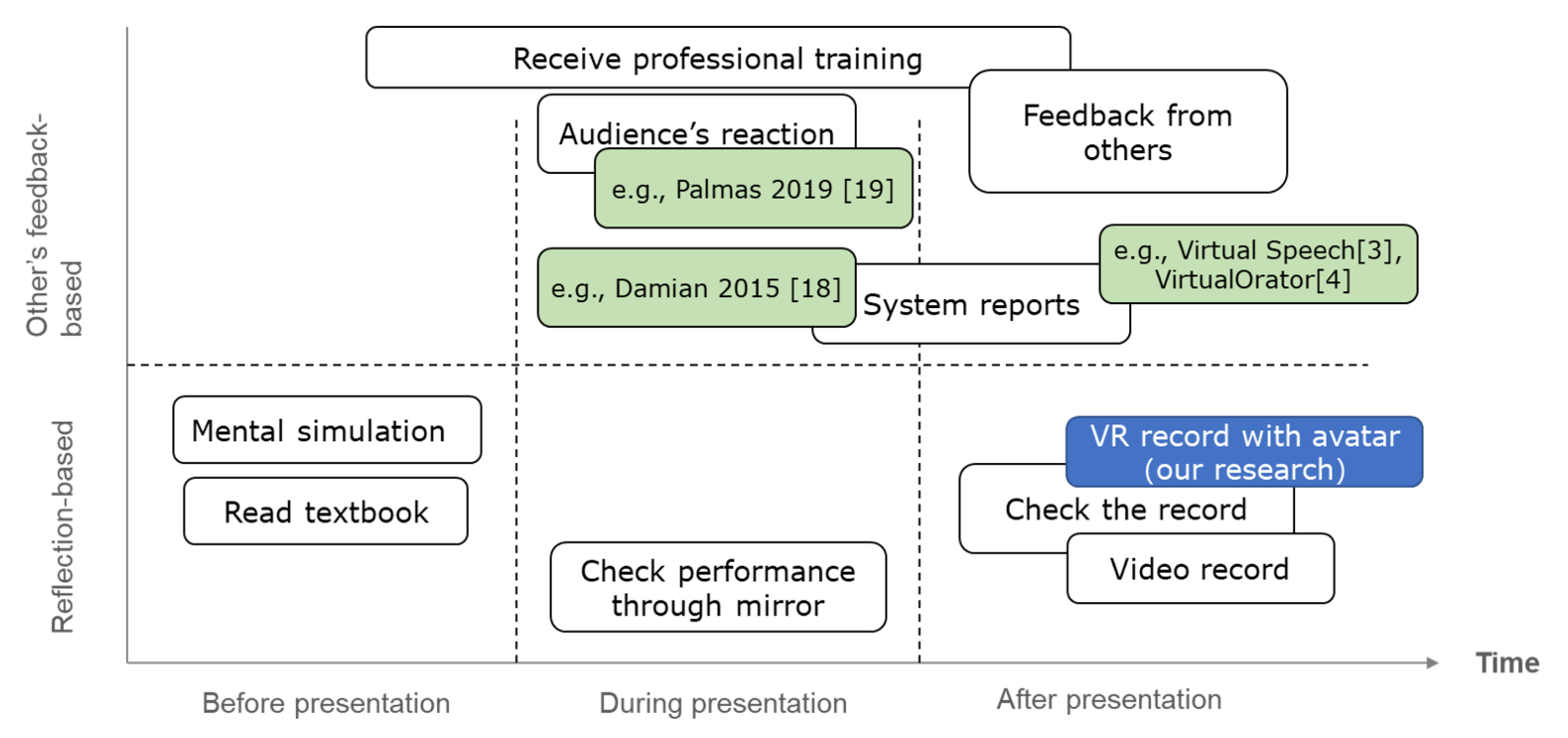

1. Introduction

2. Related Work

3. Main Idea

- R1: Be aware of one’s problems when delivering a presentation.

- R2: Grasp one’s visibility to others without a cognitive bias.

- R3: Avoid excessive self-focused attention and negative emotions.

3.1. Reflections from a Third-Person Perspective

3.2. Appearance Factor

3.2.1. Position and Body Posture

3.2.2. Face Direction

3.2.3. Hand/Arm Movement

3.2.4. Voice

3.2.5. Eye Gaze

3.2.6. Personal Face

3.2.7. Clothing

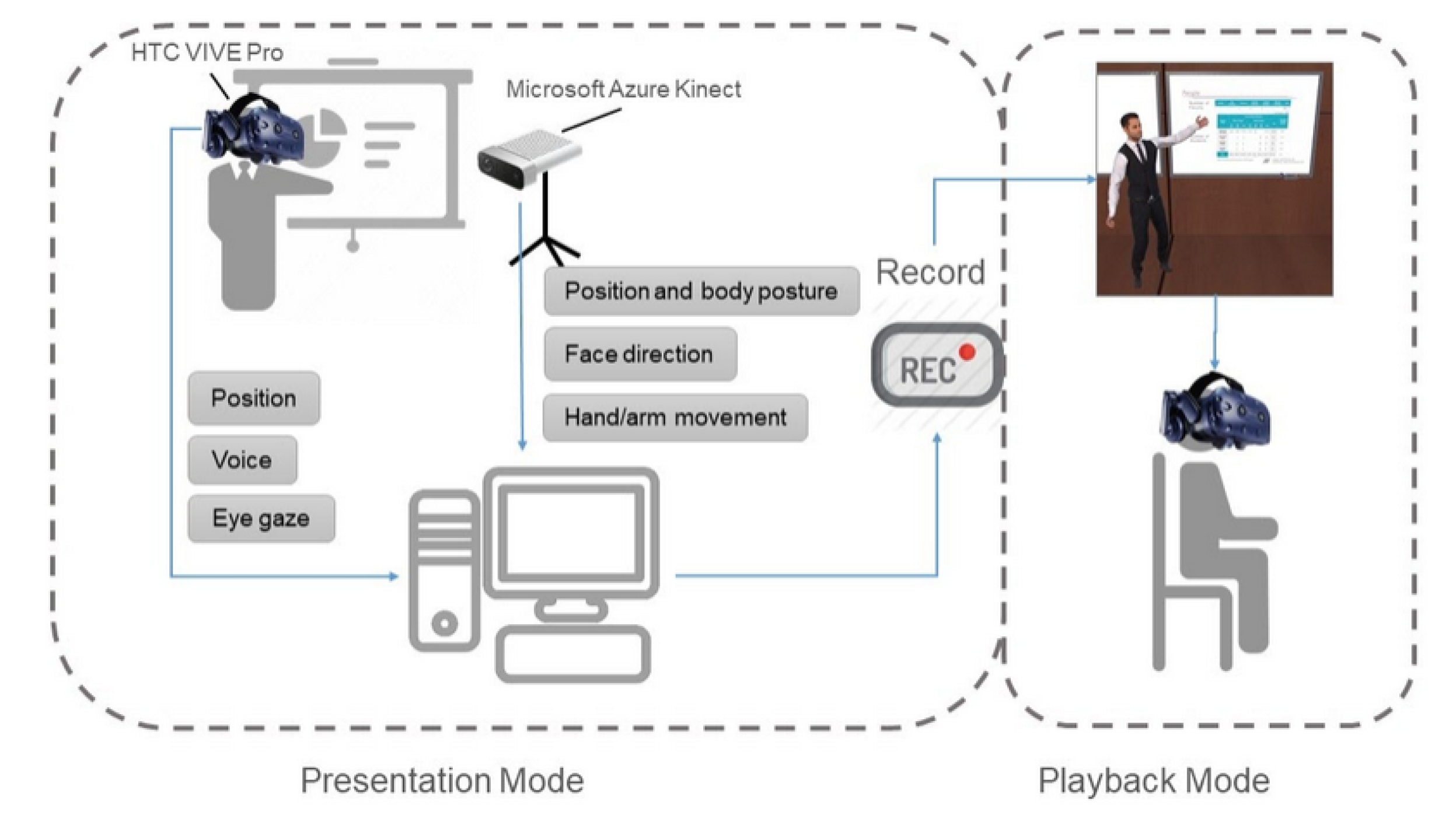

4. System Implementation

4.1. Hardware

4.2. Presentation Mode

4.3. Playback Mode

4.3.1. Position and Posture

4.3.2. Hand/Arm Movement

4.3.3. Voice

4.3.4. Slide Switching Timing

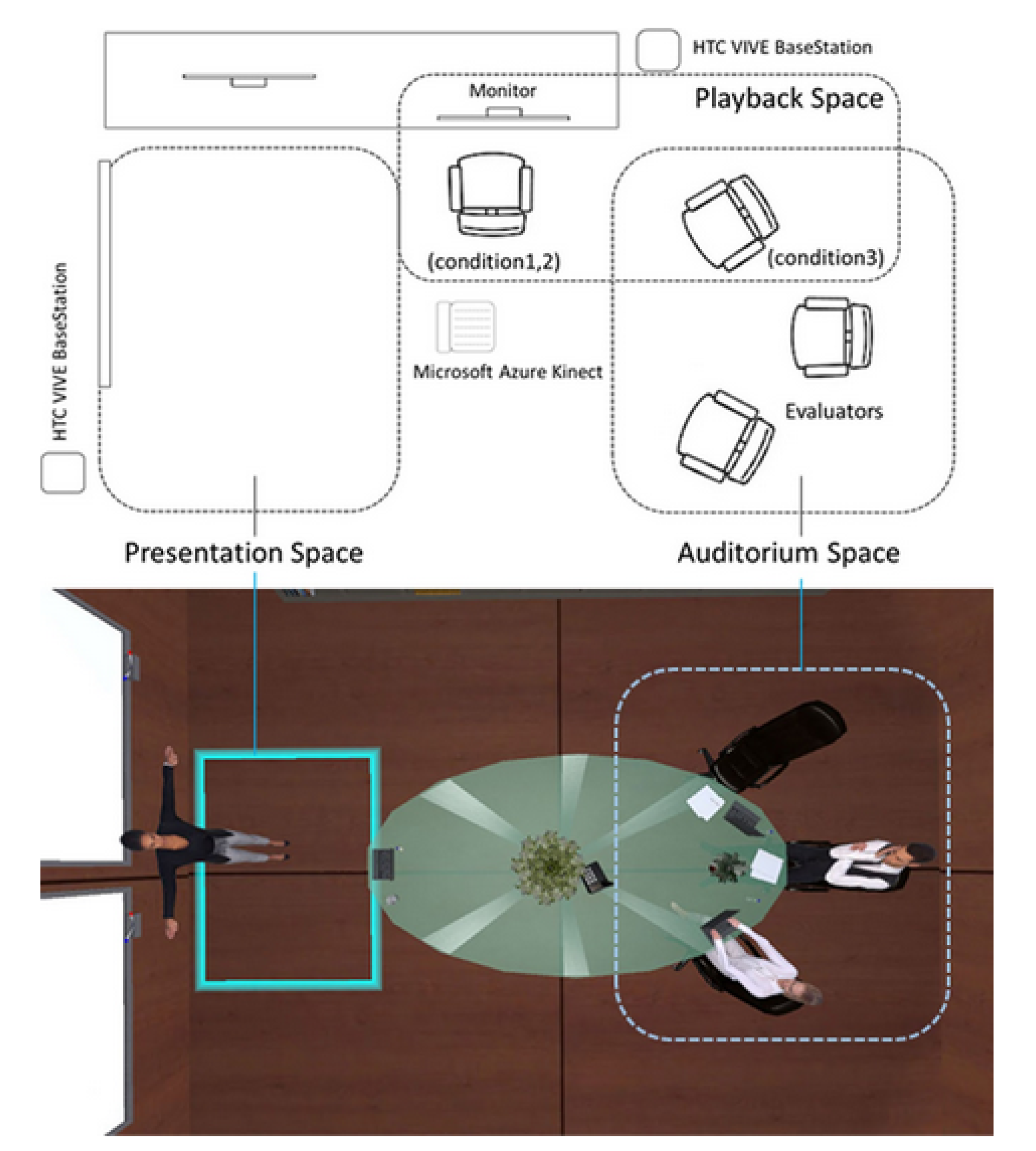

5. Evaluation

5.1. Method

5.2. Participants

5.3. Experimental Condition

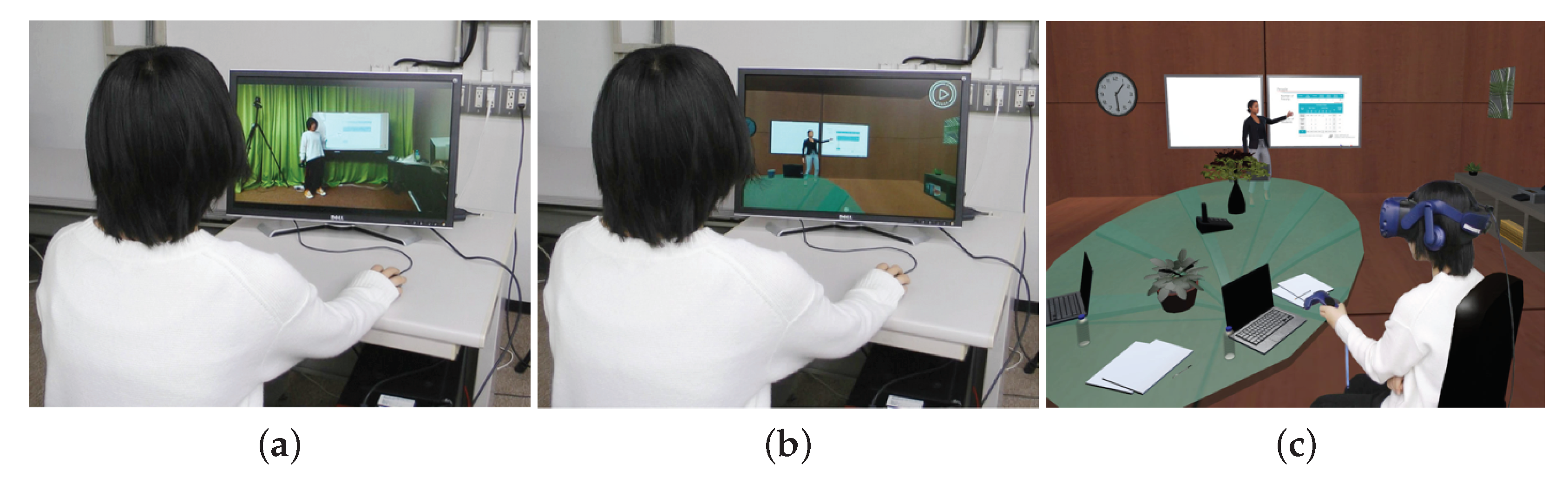

5.3.1. Condition 1: Video + PC Monitor

5.3.2. Condition 2: 3D Avatar + PC Monitor

5.3.3. Condition 3: 3D Avatar + HMD

5.4. Outcome Measurements

- Presentation quality by self (SQu): To measure changes in the presenter’s subjective impression of their own performance, the PSCR score introduced in [16] was used for self-assessment of presentation quality. The PSCR comprises 11 questions that focus on content, voice, and body movement. Four of the questions relate to the preparation and organization of the presentation material; these were not used because the material was prepared in advance. In addition to the PSCR score, a 100-point scale was added to capture the overall impression of the presentation.

- Presentation quality by others (OQu): The improvement in public speaking skills was also assessed by evaluators, with the PSCR score again used as the index. Two of the three evaluators, randomly assigned, rated each presentation immediately after listening to it. Although the evaluators were not experts on the presentation content, the PSCR is designed so that even non-experts can make objective, quantitative assessments. As with SQu, a 100-point scale captured the overall impression of the presentation. The average of the evaluators’ scores was used as the indicator.

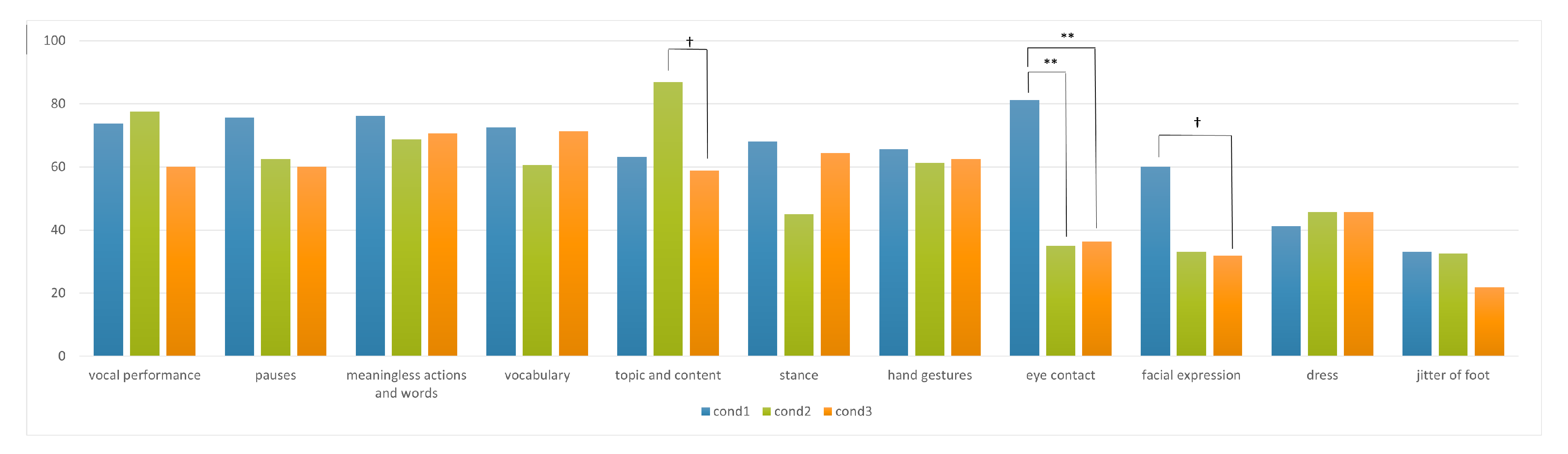

- Degree of concern (DoC) for each part in the playback: A mark sheet with 11 items was used to identify which aspects received attention during playback. The items were: (1) vocal performance (i.e., pitch, loudness), (2) pauses, (3) meaningless actions and words, (4) vocabulary, (5) topic and content, (6) stance, (7) hand gestures, (8) eye contact, (9) facial expression, (10) dress, and (11) foot jittering. Participants scored each item on a 20-point scale to indicate the DoC.

- Willingness of playback (Wil): Participants rated their willingness to use the playback method in each condition on a 5-point scale (1: strongly disagree, 5: strongly agree).
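As a rough illustration of how the SQu and OQu scores described above might be aggregated, the Python sketch below sums one rater's PSCR item ratings and averages the totals over the evaluators. The helper names (`pscr_total`, `oqu`), the choice to sum the items, and the example ratings are assumptions made for illustration. The text only states that four of the 11 PSCR questions were dropped and that the evaluators' scores were averaged.

```python
from statistics import mean

# Assumption: seven PSCR items remain after dropping the four
# preparation/organization items (11 - 4 = 7).
N_PSCR_ITEMS = 7


def pscr_total(item_scores):
    """Sum the per-item PSCR ratings given by one rater (hypothetical helper)."""
    if len(item_scores) != N_PSCR_ITEMS:
        raise ValueError(
            f"expected {N_PSCR_ITEMS} item scores, got {len(item_scores)}"
        )
    return sum(item_scores)


def oqu(evaluator_item_scores):
    """Average the PSCR totals of the evaluators who rated one presentation."""
    return mean(pscr_total(scores) for scores in evaluator_item_scores)


# Made-up ratings, for illustration only.
self_rating = [3, 2, 3, 2, 3, 3, 2]
evaluator_ratings = [
    [3, 3, 2, 3, 3, 2, 3],
    [2, 3, 3, 2, 3, 3, 3],
]

squ_score = pscr_total(self_rating)   # presenter's self-assessment (SQu)
oqu_score = oqu(evaluator_ratings)    # mean of the evaluators' totals (OQu)
```

The same averaging would apply to the 100-point overall impression, with a single number per rater instead of a list of item ratings.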

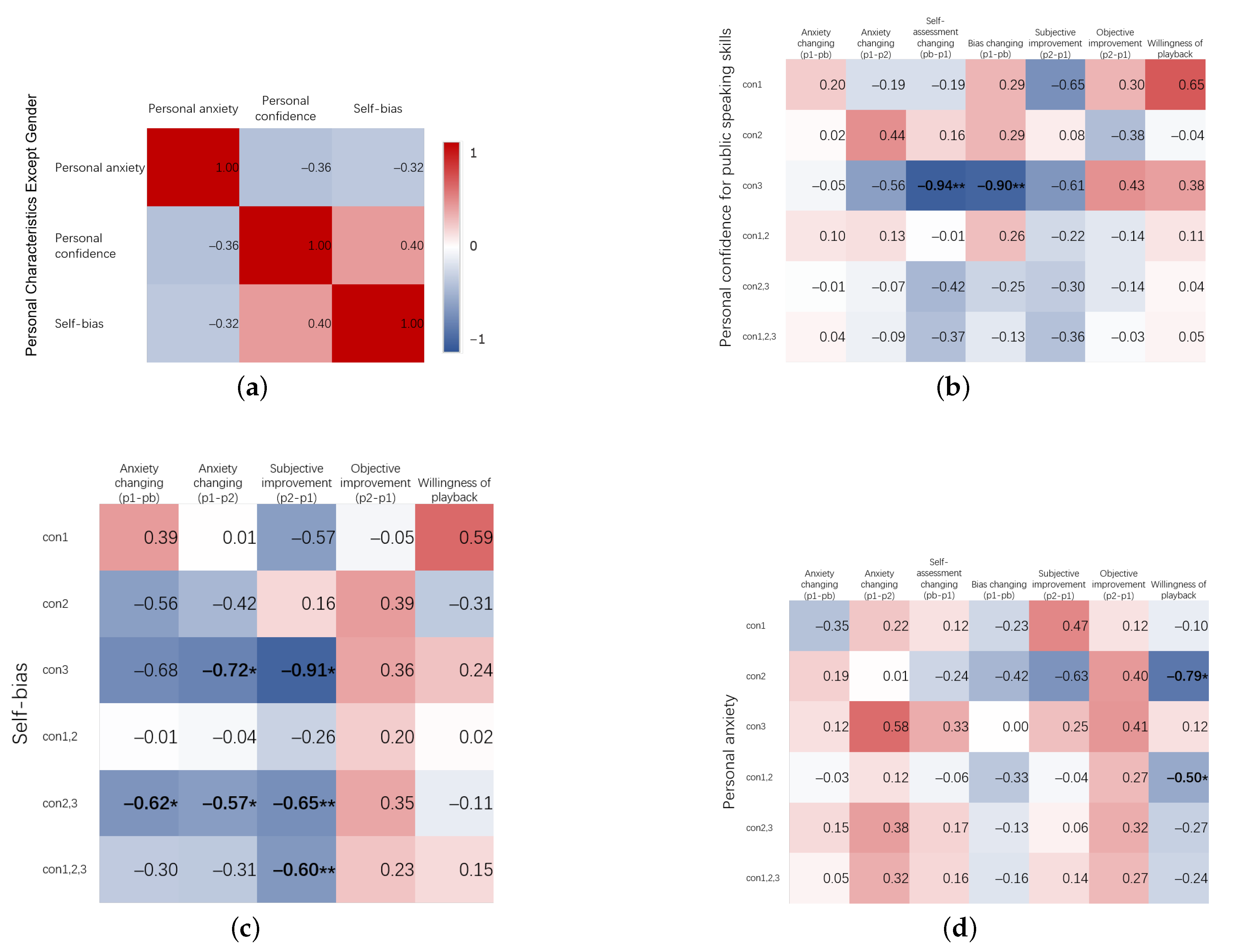

5.4.1. Presenter Characteristics

- Personal confidence in public speaking skills: Confidence encourages people to accept a challenge and to reflect on their approach [36]. However, it remained unclear whether the observer’s perspective provided by our VR reflection method would help people with lower confidence focus more on their approach to the presentation. Confidence in public speaking skills was surveyed on a 5-point scale (1: very poor, 5: excellent) before the experiment.

- Self-bias: The gap between SQu and OQu indicates biased self-assessment. As mentioned previously, biased cognition makes fair self-assessment hard for some people, which leads to ineffective reflection. This paper also discusses how such people are affected by the use of the avatar and VR during playback. Because the PSCR score focuses on specific aspects of a presentation, the 100-point scale was used in this study to measure the overall impression for this characteristic.

- Personal anxiety for public speaking: Public speaking anxiety has been discussed in many works on public speaking training [22,23,37]. According to the cognitive model, personal anxiety around public speaking is normally related to negative self-assessment, but some reports show that people with social anxiety did not underestimate their performance [38]. Personal anxiety and self-bias were therefore treated as two separate characteristics. The value was measured before the experiment from the participant’s personal report using the PRCS and indicates general fear.
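The self-bias characteristic above is a gap between two ratings, so a small sketch may make it concrete. The class name `OverallRatings`, its fields, the sign convention (self minus others), and the example numbers are assumptions for illustration; the text only says that the gap between SQu and OQu on the 100-point scale indicates bias.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OverallRatings:
    """Overall impression of one presentation on the 100-point scale."""

    self_score: float          # presenter's own rating (SQu)
    evaluator_scores: tuple    # ratings from the assigned evaluators

    @property
    def others_score(self):
        # OQu: average of the evaluators' ratings.
        return sum(self.evaluator_scores) / len(self.evaluator_scores)

    @property
    def self_bias(self):
        # Assumed sign convention: positive means the presenter rated
        # themselves higher than the evaluators did, negative means lower.
        return self.self_score - self.others_score


# Made-up ratings, for illustration only.
ratings = OverallRatings(self_score=60, evaluator_scores=(70, 80))
print(ratings.others_score)  # 75.0
print(ratings.self_bias)     # -15.0
```

Under this convention a negative value corresponds to a presenter who underestimates their own performance relative to the evaluators.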

5.4.2. Training Indicators

- Anxiety change (p1-pb): The change in Anx for Presentation 1 between the personal reports before and after playback.

- Anxiety change (p1-p2): The change in Anx between the two presentations, indicating the anxiety reduction from the training.

- Self-assessment change (pb-p1): The change in self-assessment after playback was measured by the PSCR score in SQu. Because the self-bias from the presenter characteristics also uses the PSCR score in SQu before playback, the relationship between self-bias and the self-assessment change is not analyzed.

- Bias change (p1-pb): The change in self-bias due to reflection was measured as the change in the absolute value of the gap between SQu and OQu before and after playback. As with the self-assessment change, the self-bias from the presenter characteristics also uses SQu before playback, so the relationship between them is not analyzed.

- Subjective improvement (p2-p1): The presenter’s own sense of improvement in public speaking skills was measured by the change in the PSCR score in SQu between the two presentations.

- Objective improvement (p2-p1): A more objective measure of the presenter’s improvement in public speaking skills was the change in the PSCR score in OQu between the two presentations.
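The six training indicators above are all differences between measurements taken at three points: Presentation 1 (p1), after playback (pb), and Presentation 2 (p2). The sketch below is one possible reading of them. The function name, argument names, and example values are hypothetical, and the subtraction order is inferred from the labels (for example, "p1-pb" is taken as the p1 value minus the pb value). It also assumes that the bias after playback compares the post-playback self-assessment with the evaluators' score for Presentation 1, which the text does not state explicitly.

```python
def training_indicators(anx_p1, anx_pb, anx_p2,
                        squ_p1, squ_pb, squ_p2,
                        oqu_p1, oqu_p2):
    """Compute the training indicators as simple differences.

    anx_* : anxiety reports at p1, after playback, and at p2
    squ_* : self-assessed PSCR scores at p1, after playback, and at p2
    oqu_* : evaluator PSCR scores for Presentation 1 and Presentation 2
    """
    return {
        # Positive values mean anxiety went down.
        "anxiety_change_p1_pb": anx_p1 - anx_pb,
        "anxiety_change_p1_p2": anx_p1 - anx_p2,
        # Positive value means the self-assessment rose after playback.
        "self_assessment_change_pb_p1": squ_pb - squ_p1,
        # Positive value means the absolute SQu/OQu gap shrank after playback.
        "bias_change_p1_pb": abs(squ_p1 - oqu_p1) - abs(squ_pb - oqu_p1),
        # Positive values mean the second presentation was rated higher.
        "subjective_improvement_p2_p1": squ_p2 - squ_p1,
        "objective_improvement_p2_p1": oqu_p2 - oqu_p1,
    }


# Made-up scores, for illustration only.
result = training_indicators(
    anx_p1=6, anx_pb=4, anx_p2=3,
    squ_p1=15, squ_pb=18, squ_p2=20,
    oqu_p1=19, oqu_p2=21,
)
for name, value in result.items():
    print(f"{name}: {value}")
```

With these example numbers the presenter initially underrated themselves by 4 points and by only 1 point after playback, so the bias change is 3.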

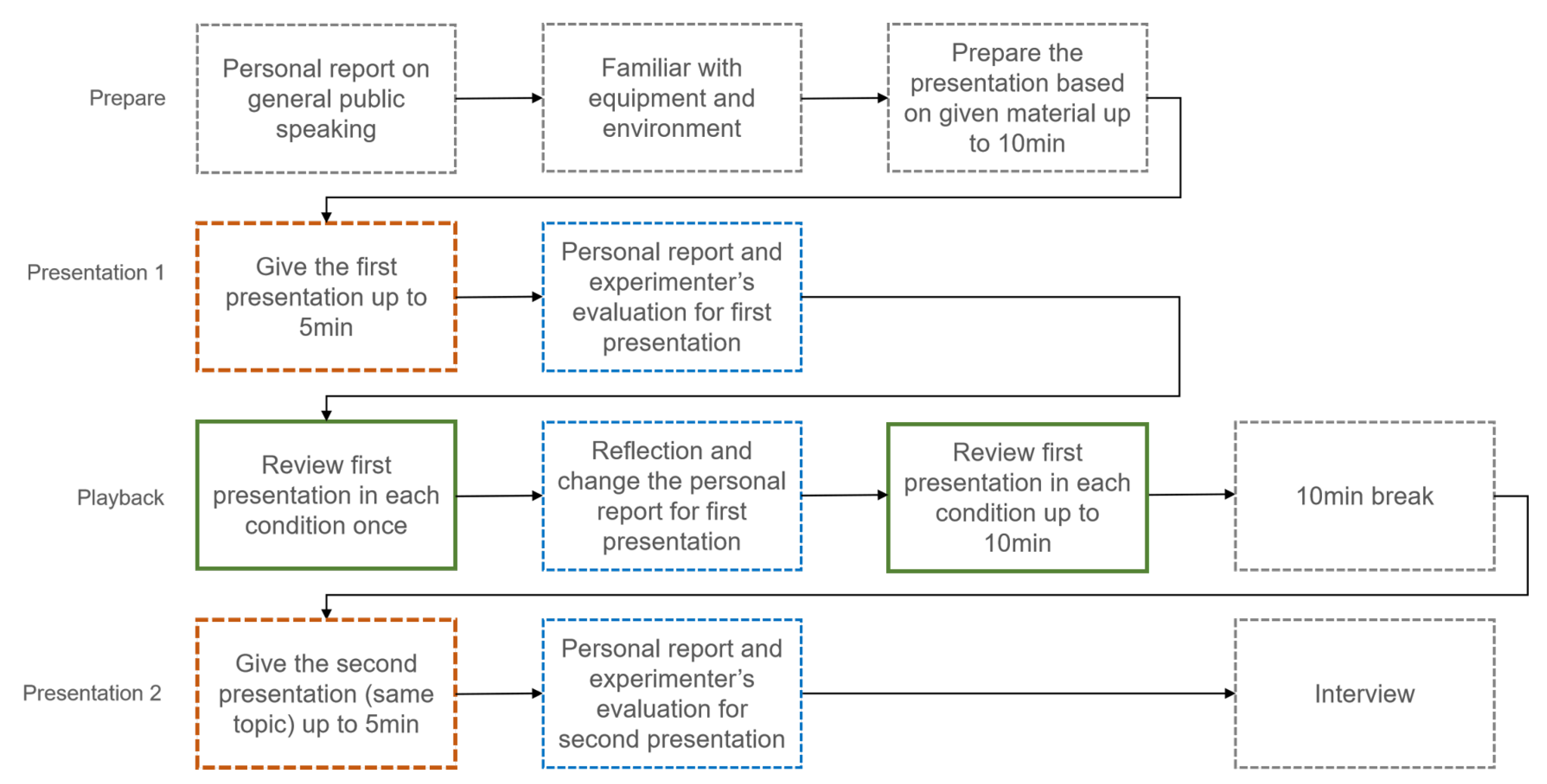

5.5. Procedures

6. Results

6.1. Attention Distribution in Each Condition

6.2. Personal Characteristics Differences

7. Discussion

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Furmark, T.; Tilfors, M.; Everz, P.O. Social phobia in the general population: Prevalence and sociodemographic profile. Soc. Psychiatry Psychiatr. Epidemiol. 1999, 34, 416–424.

- Tejwani, V.; Ha, D.; Isada, C. Observations: Public Speaking Anxiety in Graduate Medical Education-A Matter of Interpersonal and Communication Skills? J. Grad. Med Educ. 2016, 8, 111.

- VirtualSpeech Ltd. Virtual Speech. Available online: https://virtualspeech.com/ (accessed on 27 April 2021).

- Virtual Human Technologies Virtual Orator; Virtual Human Technologies: Prague, Czech Republic, 2015.

- Hoyt, C.L.; Blascovich, J.; Swinth, K.R. Social inhibition in immersive virtual environments. Presence Teleoper. Virtual Environ. 2003, 12, 183–195.

- Kampmann, I.L.; Emmelkamp, P.M.; Hartanto, D.; Brinkman, W.P.; Zijlstra, B.J.; Morina, N. Exposure to virtual social interactions in the treatment of social anxiety disorder: A randomized controlled trial. Behav. Res. Ther. 2016, 77, 147–156.

- Bourhis, J.; Allen, M. The role of videotaped feedback in the instruction of public speaking: A quantitative synthesis of published empirical research. Commun. Res. Rep. 1998, 15, 256–261.

- Laposa, J.M.; Rector, N.A. Effects of videotaped feedback in group cognitive behavioral therapy for social anxiety disorder. Int. J. Cogn. Ther. 2014, 7, 360–372.

- Arakawa, R.; Yakura, H. INWARD: A Computer-Supported Tool for Video-Reflection Improves Efficiency and Effectiveness in Executive Coaching. In Proceedings of the International Conference on Human Factors in Computing Systems, ACM, 2020, CHI ’20, Honolulu, HI, USA, 25–30 April 2020; pp. 1–13.

- Karl, K.A.; Kopf, J.M. Will individuals who need to improve their performance the most, volunteer to receive videotaped feedback? J. Bus. Commun. 1973, 31, 213–223.

- Iriye, H.; Jacques, P.L.S. Memories for third-person experiences in immersive virtual reality. Sci. Rep. 2021, 11, 1–14.

- Slater, M.; Pertaub, D.P.; Steed, A. Public speaking in virtual reality: Facing an audience of avatars. IEEE Comput. Graph. Appl. 1999, 19, 6–9.

- Fung, M.; Jin, Y.; Zhao, R.; Hoque, M.E. ROC Speak: Semi-Automated Personalized Feedback on Nonverbal Behavior from Recorded Videos. In Proceedings of the International Joint Conference on Pervasive and Ubiquitous Computing, ACM, 2015, UbiComp ’15, Umeda, Osaka, Japan, 9–11 September 2015; pp. 1167–1178.

- Schneider, J.; Börner, D.; Van Rosmalen, P.; Specht, M. Presentation Trainer: What experts and computers can tell about your nonverbal communication. J. Comput. Assist. Learn. 2017, 33, 164–177.

- Schneider, J.; Romano, G.; Drachsler, H. Beyond reality—Extending a presentation trainer with an immersive VR module. Sensors 2019, 19, 3457.

- Schreiber, L.M.; Paul, G.D.; Shibley, L.R. The development and test of the public speaking competence rubric. Commun. Educ. 2012, 61, 205–233.

- Chen, L.; Leong, C.W.; Feng, G.; Lee, C.M.; Somasundaran, S. Utilizing Multimodal Cues to Automatically Evaluate Public Speaking Performance. In Proceedings of the International Conference on Affective Computing and Intelligent Interaction, 2015, ACII ’15, Xi’an, China, 21–24 September 2015; pp. 394–400.

- Damian, I.; Tanz, C.S.S.; Baur, T.; Schöning, J.; Luyten, K.; André, E. Augmenting Social Interactions: Realtime Behavioural Feedback using Social Signal Processing Techniques. In Proceedings of the International Conference on Human Factors in Computing Systems, ACM, 2015, CHI ’15, Seoul, Korea, 18–23 April 2015; pp. 565–574.

- Palmas, F.; Cichor, J.; Plecher, D.A.; Klinker, G. Acceptance and Effectiveness of a Virtual Reality Public Speaking Training. In Proceedings of the International Symposium on Mixed and Augmented Reality, 2019, ISMAR ’19, Beijing, China, 10–18 October 2019; pp. 503–511.

- Dewey, J. How we think: A restatement of the relation of reflective thinking to the educative process. Soc. Psychiatry Psychiatr. Epidemiol. 1999, 34, 416–424.

- Baumer, E.P.S. Reflective Informatics: Conceptual Dimensions for Designing Technologies of Reflection. In Proceedings of the International Conference on Human Factors in Computing Systems, ACM, 2015, CHI ’15, Seoul, Korea, 18–23 April 2015; pp. 585–594.

- Stupar-Rutenfrans, S.; Ketelaars, L.E.; van Gisbergen, M.S. Beat the fear of public speaking: Mobile 360 video virtual reality exposure training in home environment reduces public speaking anxiety. Cyberpsychol. Behav. Soc. Netw. 2017, 20, 624–633.

- Chollet, M.; Ghate, P.; Neubauer, C.; Scherer, S. Influence of individual differences when training public speaking with virtual audiences. In Proceedings of the 18th International Conference on Intelligent Virtual Agents, Sydney, NSW, Australia, 5–8 November 2018; pp. 1–7.

- Aymerich-Franch, L.; Bailenson, J. The use of doppelgangers in virtual reality to treat public speaking anxiety: A gender comparison. In Proceedings of the International Society for Presence Research Annual Conference, Vienna, Austria, 17–19 March 2014; pp. 173–186.

- Kilteni, K.; Groten, R.; Slater, M. VR Facial Animation via Multiview Image Translation. Presence Teleoper. Virtual Environ. 2012, 21.

- Aronson, E.; Akert, R.M.; Wilson, T.D. Social Psychology; Pearson Prentice Hall: Hoboken, NJ, USA, 2007.

- Gorisse, G.; Christmann, O.; Amato, E.A.; Richir, S. First-and third-person perspectives in immersive virtual environments: Presence and performance analysis of embodied users. Front. Robot. AI 2017, 4, 33.

- Batrinca, L.; Stratou, G.; Shapiro, A.; Morency, L.P.; Scherer, S. Cicero-towards a multimodal virtual audience platform for public speaking training. In International Workshop on Intelligent Virtual Agents; Springer: Berlin/Heidelberg, Germany, 2013; pp. 116–128.

- Chan, J.C.; Ho, E.S. Emotion Transfer for 3D Hand and Full Body Motion Using StarGAN. Computers 2021, 10, 38.

- Gunes, H.; Piccardi, M. Bi-modal emotion recognition from expressive face and body gestures. J. Netw. Comput. Appl. 2007, 30, 1334–1345.

- Hangyu, Z.; Fujimoto, Y.; Kanbara, M.; Kato, H. Effectiveness of Virtual Reality Playback in Public Speaking Training. In Proceedings of the Workshop on Social Affective Multimodal Interaction for Health in 22nd ACM International Conference on Multimodal Interaction (ICMI2020), ACM, 2020, SAMIH ’20, Utrecht, The Netherlands, 25–29 October 2020.

- Gilkinson, H. Social fears reported by students in college speech classes. Speech Monogr. 1942, 9, 131–160.

- Hook, J.N.; Smith, C.A.; Valentiner, D.P. A short-form of the Personal Report of Confidence as a Speaker. Personal. Individ. Differ. 2008, 44, 1306–1313.

- Torres-Guijarro, S.; Bengoechea, M. Gender differential in self-assessment: A fact neglected in higher education peer and self-assessment techniques. High. Educ. Res. Dev. 2017, 36, 1072–1084.

- Gaibani, A.; Elmenfi, F. The role of gender in influencing public speaking anxiety. Br. J. Engl. Linguist. 2014, 2, 7–13.

- Walker, S.; Weidner, T.; Armstrong, K.J. Standardized patient encounters and individual case-based simulations improve students’ confidence and promote reflection: A preliminary study. Athl. Train. Educ. J. 2015, 10, 130–137.

- Mostajeran, F.; Balci, M.B.; Steinicke, F.; Kuhn, S.; Gallinat, J. The effects of virtual audience size on social anxiety during public speaking. In Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces, VR ’20, Atlanta, GA, USA, 22–26 March 2020; pp. 303–312.

- Levitan, M.N.; Falcone, E.M.; Placido, M.; Krieger, S.; Pinheiro, L.; Crippa, J.A.; Bruno, L.M.; Pastore, D.; Hallak, J.; Nardi, A.E. Public speaking in social phobia: A pilot study of self-ratings and observers’ ratings of social skills. J. Clin. Psychol. 2012, 68, 397–402.

- Makkar, S.R.; Grisham, J.R. Social anxiety and the effects of negative self-imagery on emotion, cognition, and post-event processing. Behav. Res. Ther. 2011, 49, 654–664.

- Spurr, J.M.; Stopa, L. The observer perspective: Effects on social anxiety and performance. Behav. Res. Ther. 2003, 41, 1009–1028.

- Cody, M.W.; Teachman, B.A. Global and local evaluations of public speaking performance in social anxiety. Behav. Ther. 2011, 42, 601–611.

- Vasalou, A.; Joinson, A.N.; Pitt, J. Constructing my online self: Avatars that increase self-focused attention. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 28 April–3 May 2007; pp. 445–448.

- Gorisse, G.; Christmann, O.; Houzangbe, S.; Richir, S. From robot to virtual doppelganger: Impact of visual fidelity of avatars controlled in third-person perspective on embodiment and behavior in immersive virtual environments. Front. Robot. AI 2019, 6, 8.

- Daly, J.A.; Vangelisti, A.L.; Neel, H.L.; Cavanaugh, P.D. Pre-performance concerns associated with public speaking anxiety. Commun. Q. 1989, 37, 39–53.

- LeFebvre, L.; LeFebvre, L.E.; Allen, M. Training the butterflies to fly in formation: Cataloguing student fears about public speaking. Commun. Educ. 2018, 67, 348–362.

- Prasko, J.; Mozny, P.; Novotny, M.; Slepecky, M.; Vyskocilova, J. Self-reflection in cognitive behavioural therapy and supervision. Biomed. Pap. Med. Fac. Palacky Univ. Olomouc 2012, 156, 377–384.

- Gruber, A.; Kaplan-Rakowski, R. User experience of public speaking practice in virtual reality. In Cognitive and Affective Perspectives on Immersive Technology in Education; IGI Global: Hershey, PA, USA, 2020; pp. 235–249.

- Hinojo-Lucena, F.J.; Aznar-Díaz, I.; Cáceres-Reche, M.P.; Trujillo-Torres, J.M.; Romero-Rodríguez, J.M. Virtual reality treatment for public speaking anxiety in students. Advancements and results in personalized medicine. J. Pers. Med. 2020, 10, 14.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, H.; Fujimoto, Y.; Kanbara, M.; Kato, H. Virtual Reality as a Reflection Technique for Public Speaking Training. Appl. Sci. 2021, 11, 3988. https://doi.org/10.3390/app11093988
