Robot-Touch Promotes Memory Sensitization

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Robot-Arm

2.3. Experimental Setup

2.3.1. Personality Traits

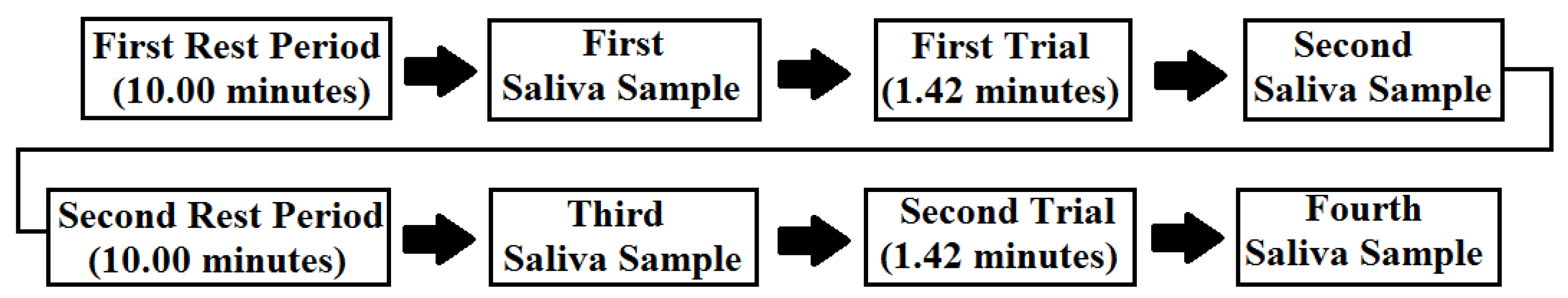

2.3.2. Saliva Samples

2.3.3. Questionnaire

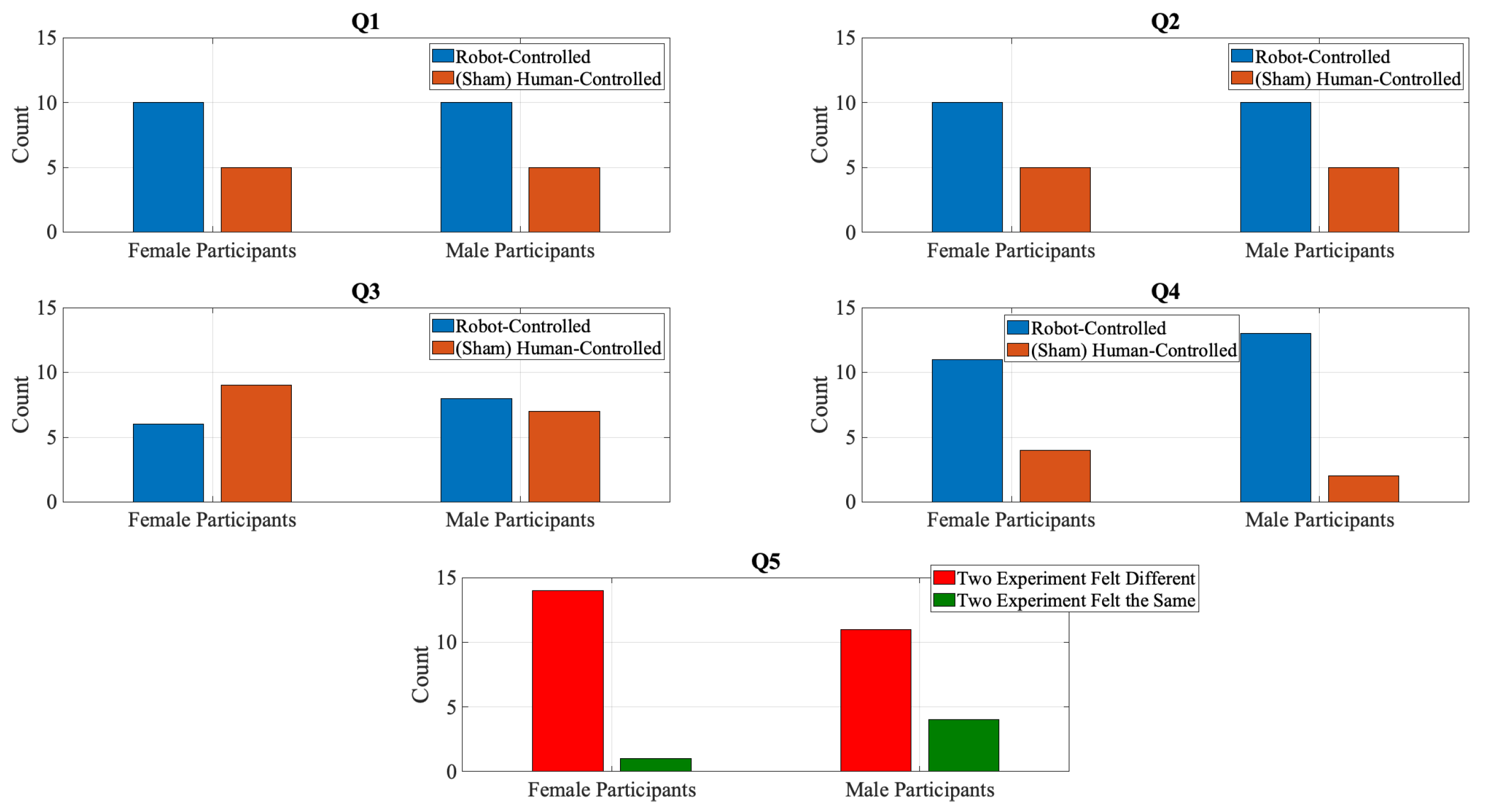

- Four-Item Written Questionnaire: After collecting each participant's fourth saliva sample at the end of their second trial, we asked participants to complete a written questionnaire (in Japanese). It comprised four items, each with a binary response:
  - (a) The robot-arm moved more naturally (Q1)
  - (b) I felt safer (Q2)
  - (c) I felt more comfortable with the robot-arm touching me (Q3)
  - (d) I can trust a touch by a robot (Q4)

  For each of Q1 through Q4, participants chose one of the same two responses:
  - (a) when it was controlled by the robot
  - (b) when it was controlled by a human

- Fifth-Item Verbal Questionnaire: This item was posed verbally to participants as they were leaving the facility. Specifically, while guiding them to the elevator, the experiment assistant asked each participant whether they felt that their two trials (i.e., the robot-controlled and the (sham) human-controlled trials) were the same. We recorded these responses as the fifth questionnaire item (Q5).
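To make the binary-response protocol concrete, the following is a minimal sketch of how one item's responses could be tallied and, as one possible check of whether the split departs from 50:50, submitted to an exact two-sided binomial test. It is not the analysis reported in this paper: the helper names `tally` and `binomial_two_sided_p`, the response labels, and the example data are hypothetical, and the binomial test is a swapped-in technique chosen only because it needs no third-party library.

```python
from collections import Counter
from math import comb


def tally(responses, labels=("robot", "human")):
    """Count how many participants chose each label for one item.

    Hypothetical helper: the default labels stand for the two Q1-Q4
    options (robot-controlled vs. (sham) human-controlled trial); for Q5
    one might pass labels=("same", "different").
    """
    counts = Counter(responses)
    unexpected = set(counts) - set(labels)
    if unexpected:
        raise ValueError(f"unexpected response labels: {sorted(unexpected)}")
    return tuple(counts[label] for label in labels)


def binomial_two_sided_p(k, n, p=0.5):
    """Exact two-sided binomial p-value for k successes out of n trials.

    Sums the probabilities of every outcome that is no more likely than
    the observed one (the 'minimum likelihood' definition).
    """
    if not 0 <= k <= n:
        raise ValueError("k must lie in [0, n]")
    probs = [comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(n + 1)]
    observed = probs[k]
    # A small relative tolerance guards against floating-point near-ties.
    return min(1.0, sum(q for q in probs if q <= observed * (1 + 1e-7)))


if __name__ == "__main__":
    # Hypothetical responses to one item; NOT data from this study.
    q1 = ["human"] * 7 + ["robot"] * 3
    n_robot, n_human = tally(q1)
    n = n_robot + n_human
    print(f"robot: {n_robot}, human: {n_human}, "
          f"proportion human: {n_human / n:.2f}, "
          f"p = {binomial_two_sided_p(n_human, n):.3f}")
```

Passing the label set explicitly lets the same helper handle the yes/no style of Q5 without a separate function.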

2.4. Procedure

2.5. Analysis

2.5.1. Main Analyses

2.5.2. Supplementary Analyses

2.6. Reported Effect-Sizes

3. Results

3.1. Consistency of the Participants’ Responses to Q1 through Q5

3.2. Effect of Experiment’s Design Factors on Participants’ Responses to Q1 through Q5

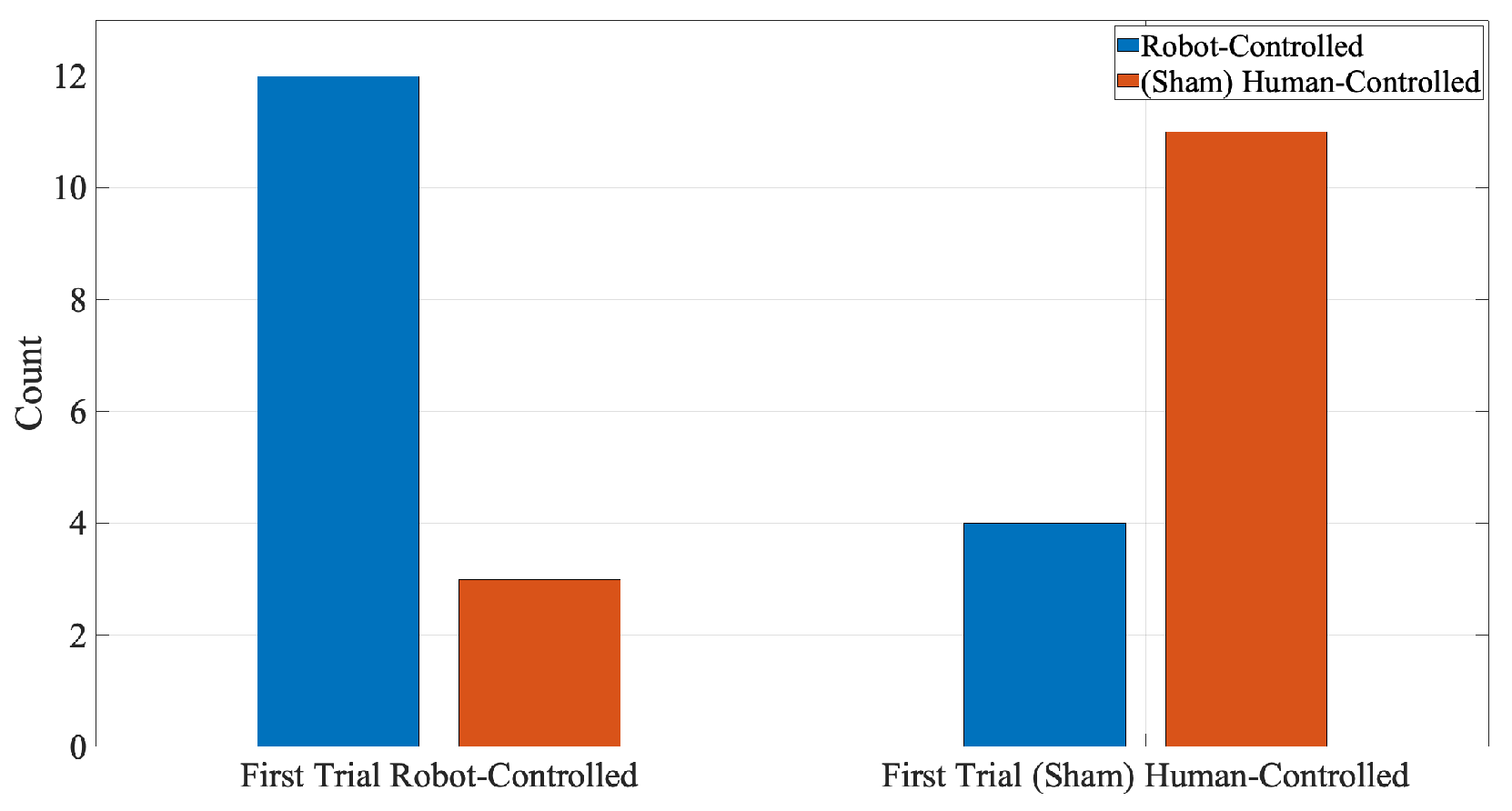

3.3. Participants’ Overall Preference between (Sham) Human- and Robot-Controlled Trials

3.3.1. Q1 through Q4

3.3.2. Q5

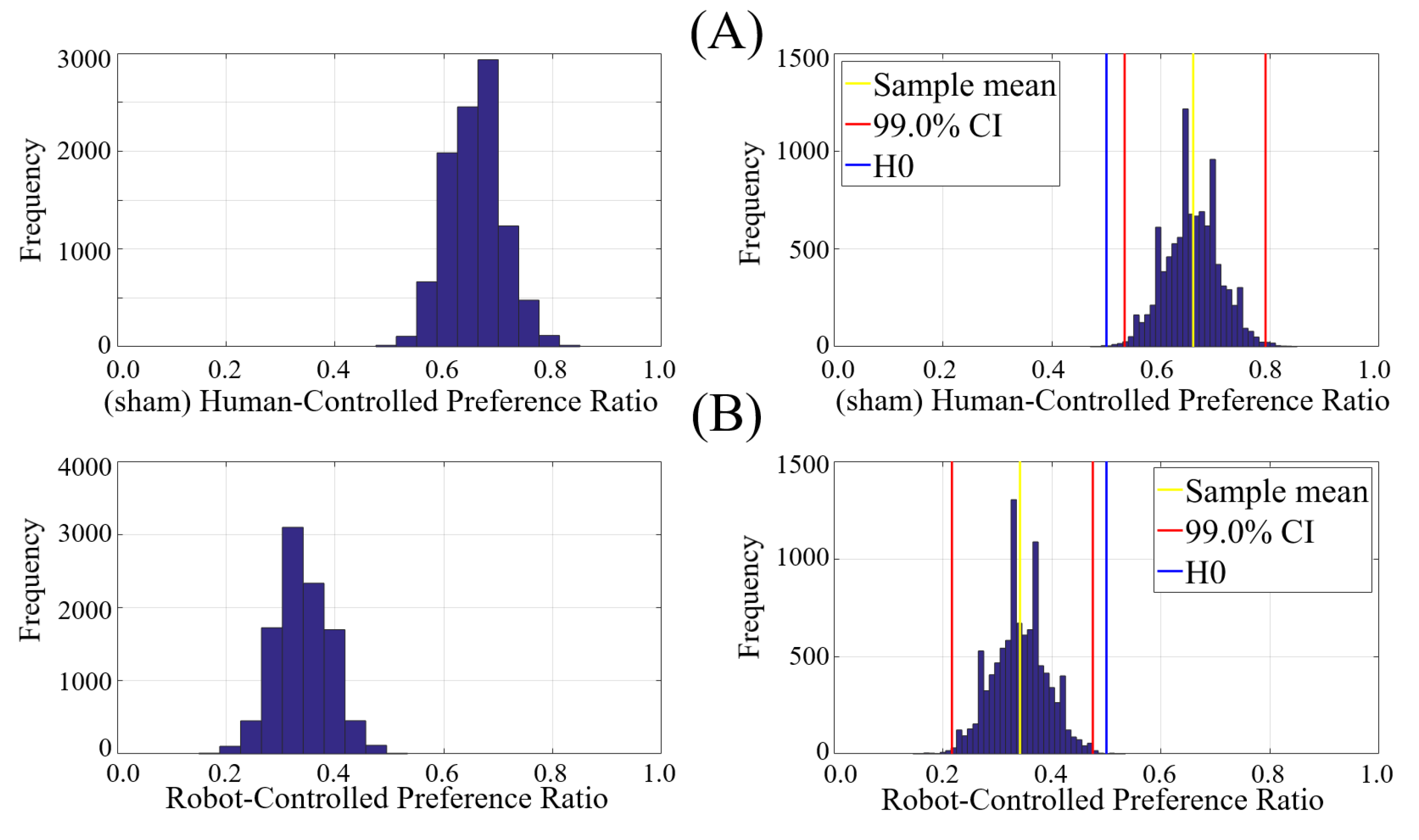

3.4. Ratio of Preferences between (Sham) Human- Versus Robot-Controlled Trials

3.5. Change in Cortisol

3.5.1. Three-Factor ANOVA

3.5.2. Two-Sample T-Test: Robot- versus (Sham) Human-Controlled Trials

4. Discussion

5. Concluding Remarks

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Appendix A. Effect of the Participants’ Personality Traits on Their Responses to Questionnaire’s Items Q1 through Q5

| Question | Factor | F | p | Effect size |
|---|---|---|---|---|
| Q1 | participant gender | 0.004 | 0.950 | 0.0002 |
| Q1 | extraversion | 0.62 | 0.439 | 0.03 |
| Q1 | agreeableness | 0.18 | 0.674 | 0.007 |
| Q1 | conscientiousness | 1.03 | 0.322 | 0.04 |
| Q1 | neuroticism | 0.64 | 0.432 | 0.03 |
| Q1 | openness | 1.29 | 0.271 | 0.05 |
| Q1 | participant gender × extraversion | 0.001 | 0.980 | 0.00 |
| Q1 | participant gender × agreeableness | 0.05 | 0.827 | 0.002 |
| Q1 | participant gender × conscientiousness | 0.22 | 0.646 | 0.009 |
| Q1 | participant gender × neuroticism | 1.61 | 0.220 | 0.06 |
| Q1 | participant gender × openness | 0.30 | 0.588 | 0.01 |

| Question | Factor | F | p | Effect size |
|---|---|---|---|---|
| Q2 | participant gender | 0.84 | 0.371 | 0.03 |
| Q2 | extraversion | 1.11 | 0.304 | 0.04 |
| Q2 | agreeableness | 1.92 | 0.182 | 0.07 |
| Q2 | conscientiousness | 0.07 | 0.800 | 0.003 |
| Q2 | neuroticism | 1.18 | 0.29 | 0.05 |
| Q2 | openness | 0.63 | 0.437 | 0.02 |
| Q2 | participant gender × extraversion | 0.02 | 0.889 | 0.001 |
| Q2 | participant gender × agreeableness | 0.28 | 0.605 | 0.0 |
| Q2 | participant gender × conscientiousness | 0.04 | 0.836 | 0.002 |
| Q2 | participant gender × neuroticism | 0.530 | 0.475 | 0.02 |
| Q2 | participant gender × openness | 0.60 | 0.448 | 0.02 |

| Question | Factor | F | p | Effect size |
|---|---|---|---|---|
| Q3 | participant gender | 4.24 | 0.053 | 0.14 |
| Q3 | extraversion | 0.31 | 0.586 | 0.01 |
| Q3 | agreeableness | 0.16 | 0.690 | 0.01 |
| Q3 | conscientiousness | 0.33 | 0.574 | 0.01 |
| Q3 | neuroticism | 1.10 | 0.307 | 0.04 |
| Q3 | openness | 0.16 | 0.692 | 0.01 |
| Q3 | participant gender × extraversion | 3.26 | 0.087 | 0.11 |
| Q3 | participant gender × agreeableness | 0.06 | 0.812 | 0.002 |
| Q3 | participant gender × conscientiousness | 0.06 | 0.816 | 0.002 |
| Q3 | participant gender × neuroticism | 0.77 | 0.392 | 0.03 |
| Q3 | participant gender × openness | 0.16 | 0.696 | 0.01 |

| Question | Factor | F | p | Effect size |
|---|---|---|---|---|
| Q4 | participant gender | 0.02 | 0.880 | 0.001 |
| Q4 | extraversion | 0.68 | 0.421 | 0.03 |
| Q4 | agreeableness | 0.270 | 0.609 | 0.01 |
| Q4 | conscientiousness | 1.76 | 0.120 | 0.07 |
| Q4 | neuroticism | 0.66 | 0.427 | 0.03 |
| Q4 | openness | 0.49 | 0.491 | 0.02 |
| Q4 | participant gender × extraversion | 0.05 | 0.830 | 0.002 |
| Q4 | participant gender × agreeableness | 0.77 | 0.392 | 0.03 |
| Q4 | participant gender × conscientiousness | 0.05 | 0.832 | 0.002 |
| Q4 | participant gender × neuroticism | 0.12 | 0.737 | 0.005 |
| Q4 | participant gender × openness | 0.01 | 0.760 | 0.004 |

| Question | Factor | F | p | Effect size |
|---|---|---|---|---|
| Q5 | participant gender | 0.23 | 0.639 | 0.01 |
| Q5 | extraversion | 0.474 | 0.450 | 0.02 |
| Q5 | agreeableness | 0.41 | 0.532 | 0.02 |
| Q5 | conscientiousness | 1.188 | 0.290 | 0.05 |
| Q5 | neuroticism | 0.21 | 0.652 | 0.01 |
| Q5 | openness | 0.52 | 0.480 | 0.02 |
| Q5 | participant gender × extraversion | 1.38 | 0.254 | 0.06 |
| Q5 | participant gender × agreeableness | 0.74 | 0.340 | 0.03 |
| Q5 | participant gender × conscientiousness | 0.30 | 0.590 | 0.01 |
| Q5 | participant gender × neuroticism | 0.09 | 0.763 | 0.004 |
| Q5 | participant gender × openness | 0.13 | 0.728 | 0.01 |
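The measure in the last column of these tables is not named here, and the degrees of freedom are not reported. Assuming it is partial η² from a fixed-effects ANOVA, each value would follow from its F statistic as $\eta_p^2 = \frac{F \cdot df_{\text{effect}}}{F \cdot df_{\text{effect}} + df_{\text{error}}}$. The sketch below (hypothetical function `partial_eta_squared`) only illustrates that conversion; it is not used to recompute the tabulated values.

```python
def partial_eta_squared(f_stat: float, df_effect: int, df_error: int) -> float:
    """Convert an ANOVA F statistic to partial eta squared (an assumed measure).

    Since F = (SS_effect / df_effect) / (SS_error / df_error),
    eta_p^2 = SS_effect / (SS_effect + SS_error)
            = F * df_effect / (F * df_effect + df_error).
    """
    if f_stat < 0:
        raise ValueError("F must be non-negative")
    if df_effect <= 0 or df_error <= 0:
        raise ValueError("degrees of freedom must be positive")
    numerator = f_stat * df_effect
    return numerator / (numerator + df_error)


if __name__ == "__main__":
    # Hypothetical inputs for illustration only.
    print(round(partial_eta_squared(2.0, 1, 20), 3))
```

Partial η² is only one candidate: η² and ω² use different denominators and would give different values for the same F, so the formula applies only if the assumption above holds.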

Appendix B. Spearman Correlations: Participants’ Personality Traits

Appendix C. Kendall Correlations: Participants’ Personality Traits and Their Responses to Q1 through Q5

| Questions | Extraversion | Agreeableness | Conscientiousness | Neuroticism | Openness |
|---|---|---|---|---|---|
| Q1 | τ = −0.20, p = 0.224 | τ = −0.14, p = 0.418 | τ = −0.21, p = 0.201 | τ = −0.07, p = 0.699 | τ = −0.07, p = 0.680 |
| Q2 | τ = −0.01, p = 0.982 | τ = −0.23, p = 0.177 | τ = 0.09, p = 0.590 | τ = 0.21, p = 0.228 | τ = 0.14, p = 0.410 |
| Q3 | τ = 0.01, p = 0.983 | τ = −0.10, p = 0.538 | τ = −0.07, p = 0.703 | τ = 0.29, p = 0.086 | τ = −0.09, p = 0.620 |
| Q4 | τ = −0.16, p = 0.340 | τ = −0.15, p = 0.381 | τ = 0.20, p = 0.223 | τ = −0.10, p = 0.573 | τ = 0.36, p = 0.038 |
| Q5 | τ = 0.04, p = 0.842 | τ = 0.21, p = 0.221 | τ = 0.22, p = 0.191 | τ = −0.04, p = 0.818 | τ = −0.24, p = 0.165 |
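The coefficients above are Kendall correlations between personality-trait scores and binary questionnaire responses. Because a binary item produces many tied pairs, the tie-corrected variant τ_b is the usual choice; which variant was used is not stated here, so treat τ_b as an assumption. The pure-Python sketch below (hypothetical function `kendall_tau_b`) computes the coefficient only, without a p-value; `scipy.stats.kendalltau`, whose default variant is τ_b, also returns a p-value. The coding of responses as 1 = human and 0 = robot in the example is likewise hypothetical, and flipping it flips the sign of τ.

```python
from itertools import combinations
from math import sqrt


def kendall_tau_b(x, y):
    """Kendall's tau-b between two equal-length sequences (ties corrected).

    tau_b = (C - D) / sqrt((C + D + T_y) * (C + D + T_x)), where C and D count
    concordant and discordant pairs, T_x counts pairs tied only in x, and
    T_y counts pairs tied only in y. Pairs tied in both are ignored.
    Returns NaN when either variable is constant.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    concordant = discordant = ties_x_only = ties_y_only = 0
    for (xi, yi), (xj, yj) in combinations(zip(x, y), 2):
        dx, dy = xi - xj, yi - yj
        if dx == 0 and dy == 0:
            continue  # tied in both variables: contributes to neither term
        if dx == 0:
            ties_x_only += 1
        elif dy == 0:
            ties_y_only += 1
        elif dx * dy > 0:
            concordant += 1
        else:
            discordant += 1
    pairs = concordant + discordant
    denom = sqrt((pairs + ties_x_only) * (pairs + ties_y_only))
    if denom == 0:
        return float("nan")
    return (concordant - discordant) / denom


if __name__ == "__main__":
    # Hypothetical trait scores and binary responses (1 = human, 0 = robot);
    # NOT data from this study.
    openness = [4, 7, 9, 10, 12, 13]
    q4 = [0, 0, 1, 0, 1, 1]
    print(round(kendall_tau_b(openness, q4), 3))
```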


| Responses | Factor | F | p | Effect size |
|---|---|---|---|---|
| Q1 | Operator’s Gender | 4.60 | 0.041 | 0.15 |
| Q1 | Trial-Order | 0.0 | 1.0 | 0.0 |
| Q1 | Operator’s Gender × Trial-Order | 0.14 | 0.708 | 0.005 |
| Q2 | Operator’s Gender | 1.84 | 0.186 | 0.06 |
| Q2 | Trial-Order | 0.07 | 0.788 | 0.002 |
| Q2 | Operator’s Gender × Trial-Order | 3.68 | 0.065 | 0.11 |
| Q3 | Operator’s Gender | 0.08 | 0.773 | 0.002 |
| Q3 | Trial-Order | 10.24 | 0.003 | 0.27 |
| Q3 | Operator’s Gender × Trial-Order | 0.17 | 0.684 | 0.005 |
| Q4 | Operator’s Gender | 0.55 | 0.463 | 0.02 |
| Q4 | Trial-Order | 0.06 | 0.806 | 0.002 |
| Q4 | Operator’s Gender × Trial-Order | 0.12 | 0.728 | 0.004 |
| Q5 | Operator’s Gender | 0.25 | 0.623 | 0.009 |
| Q5 | Trial-Order | 0.25 | 0.622 | 0.009 |
| Q5 | Operator’s Gender × Trial-Order | 0.50 | 0.488 | 0.02 |


© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Keshmiri, S. Robot-Touch Promotes Memory Sensitization. Appl. Sci. 2021, 11, 2271. https://doi.org/10.3390/app11052271