An Efficient Multi-Scale Focusing Attention Network for Person Re-Identification

Abstract

1. Introduction

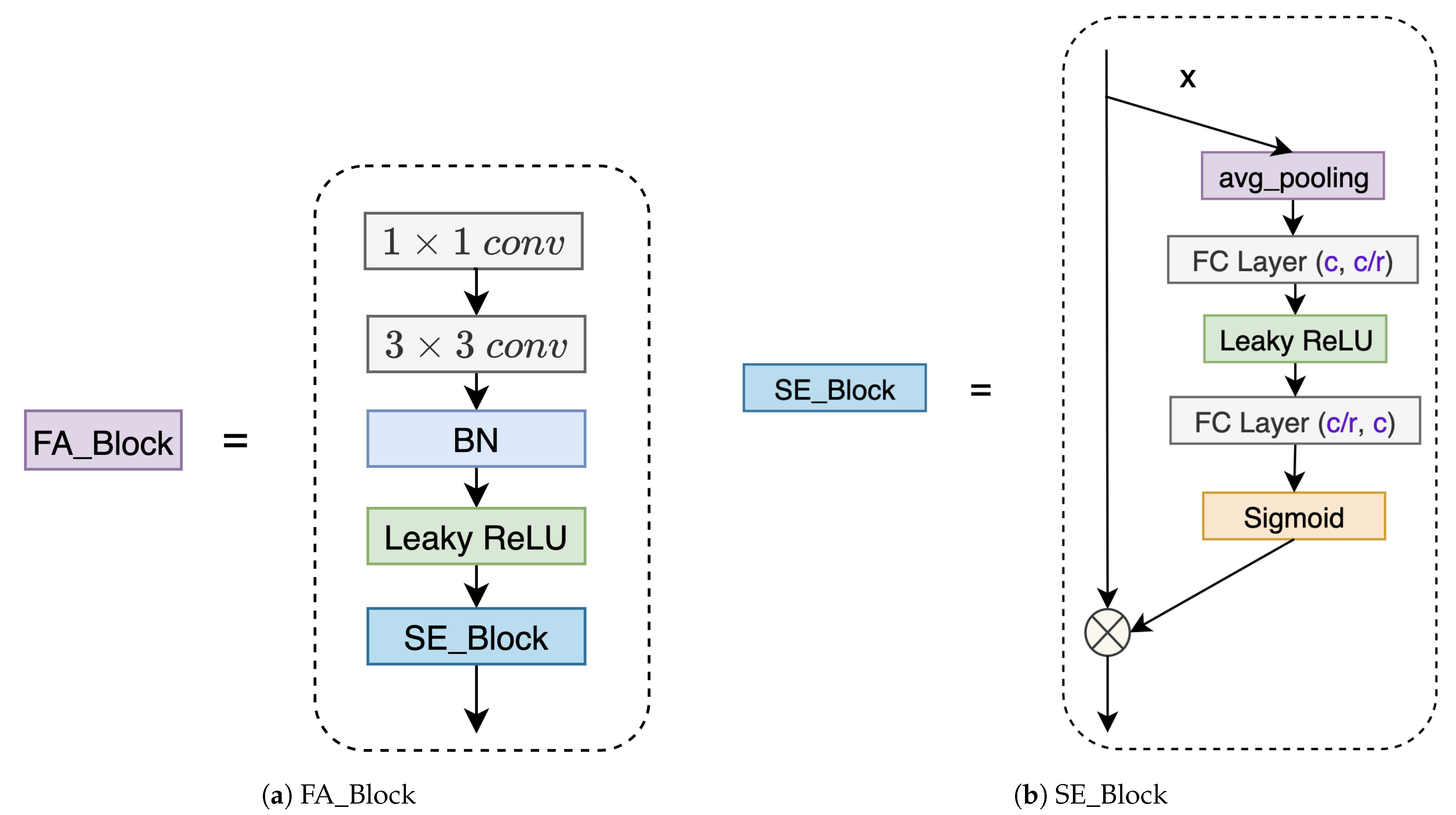

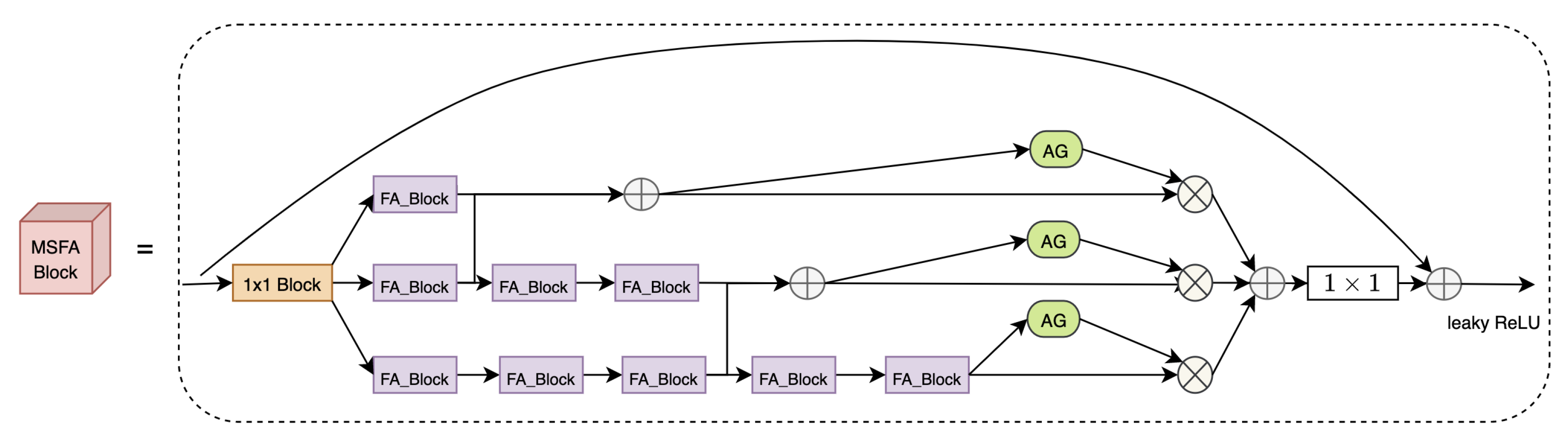

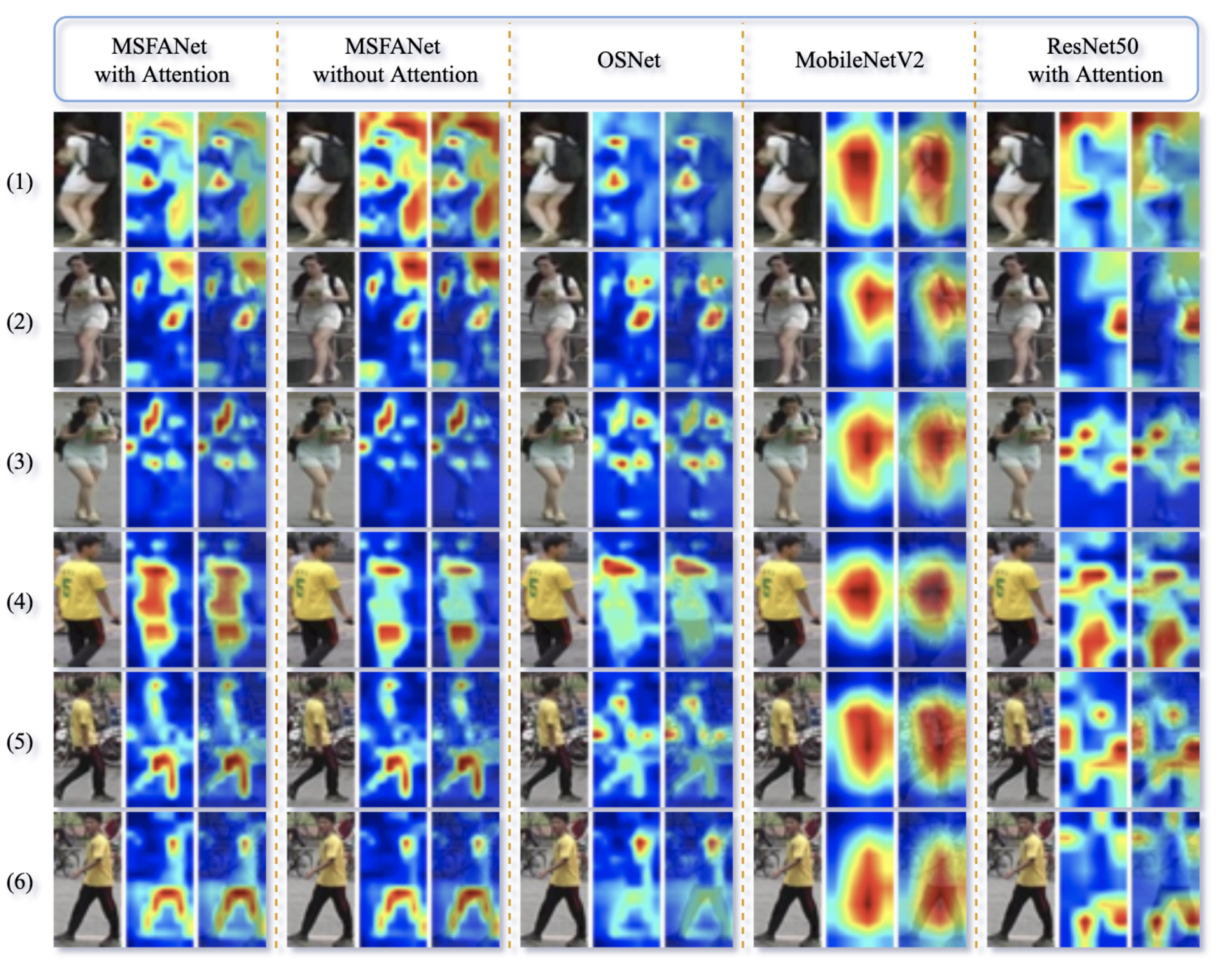

- We propose the Multi-Scale Focusing Attention Network (MSFANet), whose focusing attention (FA) blocks combine receptive fields of different sizes in each stage to capture multi-scale, highly focused attention features for ReID. It is a novel, efficient network module with low computational complexity that can be flexibly embedded in a person ReID framework.

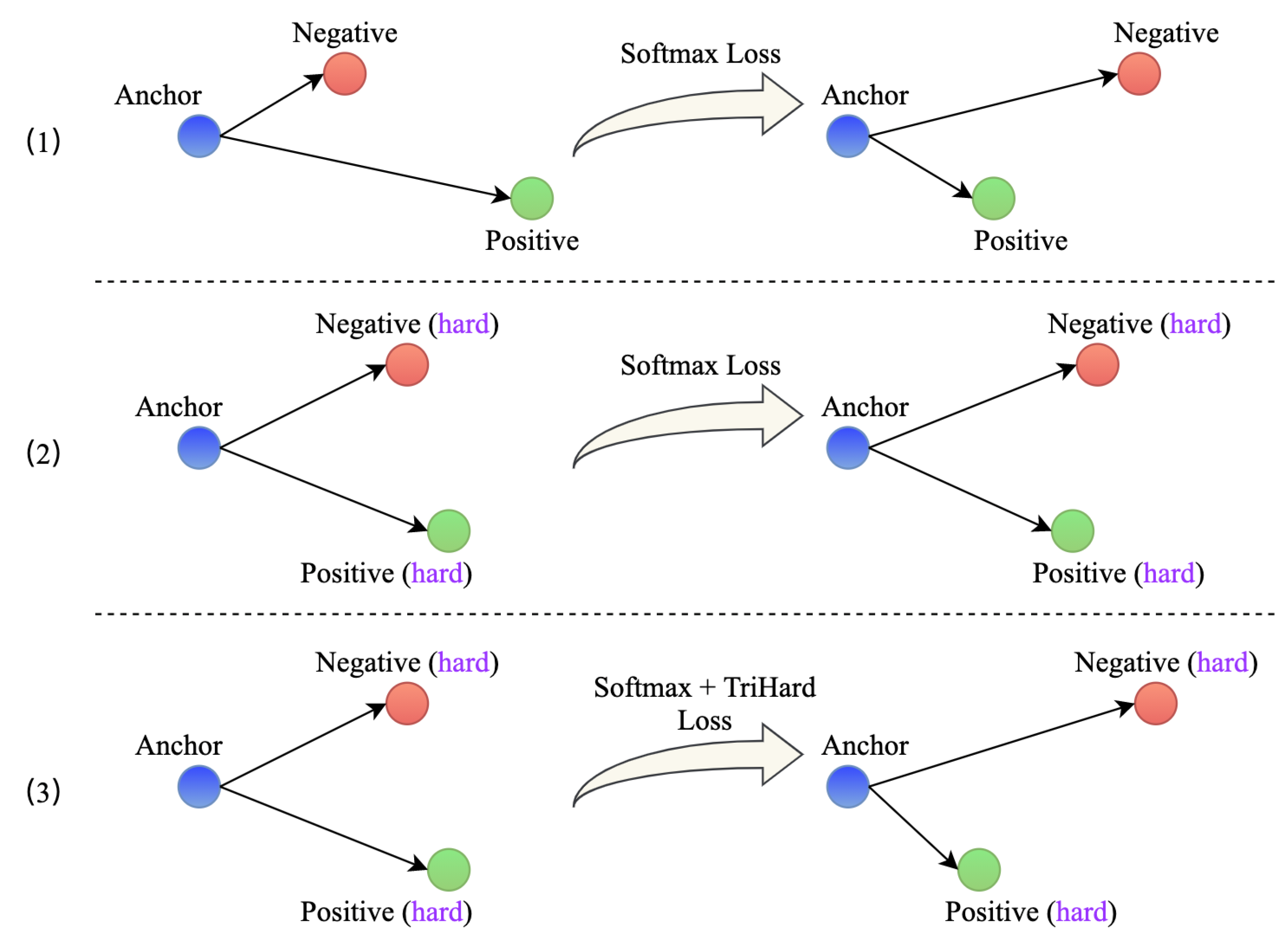

- We design a new fusion loss function, a combination of softmax loss and hard triplet loss, to address the problem of discriminating hard samples. It gives the proposed MSFANet faster convergence and better generalization ability.
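The fusion loss described in the last contribution can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes batch-hard mining in the style of the triplet loss of [30], a triplet weight of 0.5 as listed in the loss comparison table, and a margin of 0.3 chosen purely for illustration. All function names are hypothetical, and plain Python lists stand in for tensors.

```python
import math

def softmax_cross_entropy(logits, label):
    """Cross-entropy of one sample's identity logits against its label."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def batch_hard_triplet(features, labels, margin=0.3):
    """For each anchor, penalise its farthest positive against its closest negative."""
    losses = []
    for i, (f_a, y_a) in enumerate(zip(features, labels)):
        pos = [euclidean(f_a, f)
               for j, (f, y) in enumerate(zip(features, labels))
               if y == y_a and j != i]
        neg = [euclidean(f_a, f) for f, y in zip(features, labels) if y != y_a]
        if pos and neg:
            losses.append(max(0.0, max(pos) - min(neg) + margin))
    return sum(losses) / len(losses) if losses else 0.0

def fusion_loss(logits, features, labels, triplet_weight=0.5, margin=0.3):
    """Softmax loss plus weighted hard triplet loss (weight 0.5 is an assumption)."""
    ce = sum(softmax_cross_entropy(l, y) for l, y in zip(logits, labels)) / len(labels)
    return ce + triplet_weight * batch_hard_triplet(features, labels, margin)

# Toy batch: two identities with two samples each (hypothetical values).
features = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [3.0, 0.0]]
labels = [0, 0, 1, 1]
logits = [[0.0, 0.0]] * 4  # uninformative classifier output
loss = fusion_loss(logits, features, labels)
```

In this toy batch the two samples of identity 1 lie far apart and close to identity 0, so only they contribute to the triplet term. This is the kind of hard sample the triplet component is meant to emphasise.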

2. Related Work

2.1. Person ReID and Attention Mechanism

2.2. Lightweight Network Designs

2.3. Multi-Scale Feature Fusion Learning

3. Methods

3.1. Pipeline

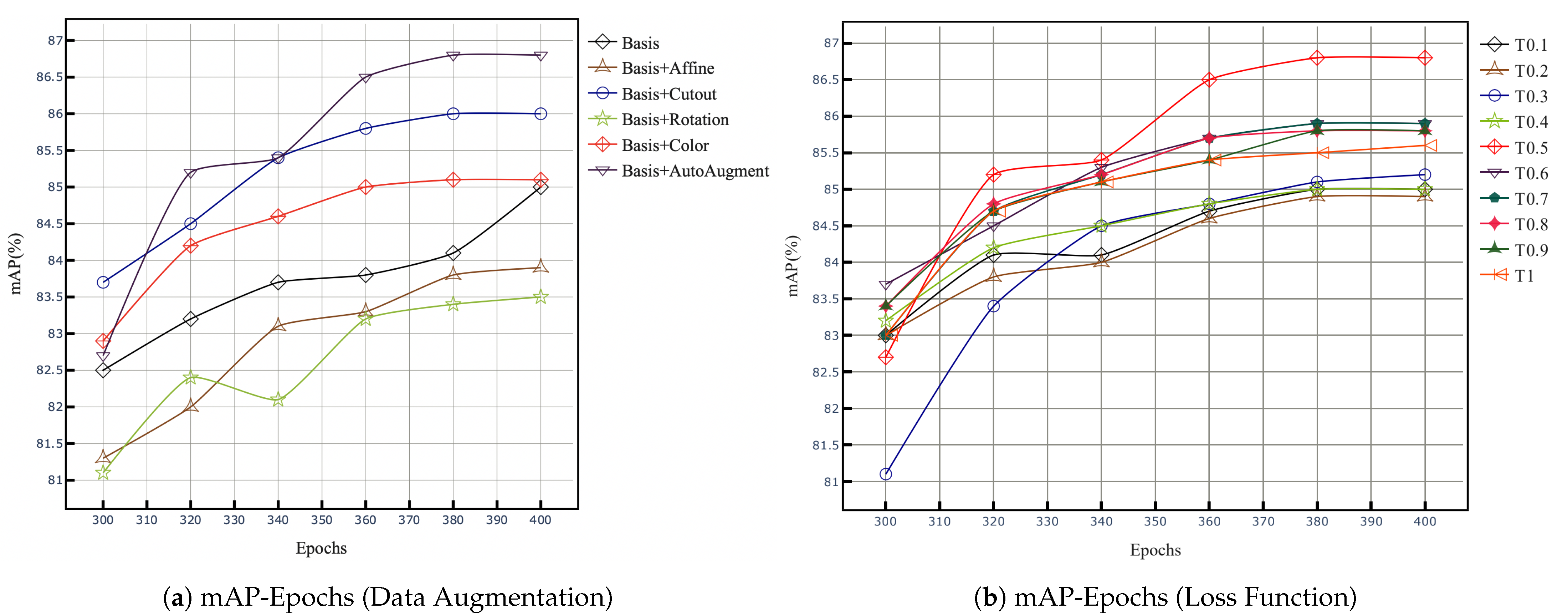

3.2. Data Augmentation

3.3. Multi-Scale Focusing Attention Network

3.4. Loss Function

4. Experiments

4.1. Implementation Details

4.2. Results

- Model complexity analysis. The complexity of a ReID model determines its training and computational cost and therefore plays a vital role in edge deployment. The last two columns of Table 4 show that MSFANet is less complex than most published methods by a clear margin. The parameters and GFLOPs of MSFANet are calculated on input images resized to 256 × 128. With the '1-2-3' backbone, MSFANet needs only 0.82 GFLOPs, about 16% fewer than the 0.98 GFLOPs of OSNet [27]. This supports the use of depthwise separable convolutions in the multi-scale focusing attention network. With the '2-2-2' backbone, however, the cost rises to 0.94 GFLOPs, almost equal to OSNet's, so the structural design of the backbone also plays an important role in the model's computational complexity. MSFANet is a lightweight model, with far fewer parameters and floating-point operations than heavyweight models such as those with a ResNet backbone. In GFLOPs it also improves on OSNet, regarded as the state-of-the-art lightweight ReID model so far.

- Model accuracy analysis. Table 4 reports the Rank-1 and mAP of MSFANet and other state-of-the-art methods on the Market1501 and DukeMTMC datasets. In the top half of the table (models trained from scratch), the Market1501 mAP of MSFANet exceeds OSNet's by 1.0 and 1.3 percentage points with the '1-2-3' and '2-2-2' backbones, respectively. In the bottom half (models trained with ImageNet pre-training), the Market1501 mAP of MSFANet with the two backbones is 86.8% and 86.9%, respectively, compared with 84.5% for OSNet. MSFANet combines multi-scale receptive fields, omni-scale feature learning, and a channel-level attention mechanism, which together capture more robust and fine-grained ReID features. It also uses fewer branches and basic modules while integrating the attention module, which helps speed up ReID inference.
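The complexity analysis attributes the low GFLOPs to depthwise separable convolutions. The sketch below shows why such a factorisation is cheaper, by counting weights and multiply-accumulate operations (MACs) for one layer. It ignores biases and normalisation layers, and the layer sizes are hypothetical, not the actual MSFANet configuration.

```python
def standard_conv_cost(c_in, c_out, k, h, w):
    """Weights and MACs of a k x k convolution producing an h x w feature map."""
    params = c_in * c_out * k * k
    macs = params * h * w  # every weight is applied at every output position
    return params, macs

def depthwise_separable_cost(c_in, c_out, k, h, w):
    """Depthwise k x k convolution followed by a pointwise 1 x 1 convolution."""
    depthwise = c_in * k * k   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    params = depthwise + pointwise
    return params, params * h * w

# Hypothetical layer: 64 -> 64 channels, 3 x 3 kernel, 64 x 32 feature map.
std_params, std_macs = standard_conv_cost(64, 64, 3, 64, 32)
ds_params, ds_macs = depthwise_separable_cost(64, 64, 3, 64, 32)
ratio = ds_params / std_params  # equals 1/c_out + 1/k**2
print(f"standard: {std_params} weights, separable: {ds_params} weights, ratio {ratio:.3f}")
```

For this layer the separable form uses roughly one eighth of the weights and MACs. The saving for a whole network is smaller, because stems, attention modules, and classifier layers are not factorised in this way.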

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable person re-identification: A benchmark. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1116–1124.

- Li, S.; Xiao, T.; Li, H.; Yang, W.; Wang, X. Identity-aware textual-visual matching with latent co-attention. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1890–1899.

- Karanam, S.; Li, Y.; Radke, R.J. Person re-identification with discriminatively trained viewpoint invariant dictionaries. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4516–4524.

- Wang, Y.; Wang, L.; You, Y.; Zou, X.; Chen, V.; Li, S.; Huang, G.; Hariharan, B.; Weinberger, K.Q. Resource aware person re-identification across multiple resolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8042–8051.

- Huang, Y.; Zha, Z.J.; Fu, X.; Zhang, W. Illumination-invariant person re-identification. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 365–373.

- Saquib Sarfraz, M.; Schumann, A.; Eberle, A.; Stiefelhagen, R. A pose-sensitive embedding for person re-identification with expanded cross neighborhood re-ranking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 420–429.

- Ye, M.; Shen, J.; Lin, G.; Xiang, T.; Shao, L.; Hoi, S.C. Deep learning for person re-identification: A survey and outlook. arXiv 2020, arXiv:2001.04193.

- Wei, L.; Zhang, S.; Gao, W.; Tian, Q. Person transfer GAN to bridge domain gap for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 79–88.

- Xiao, T.; Li, S.; Wang, B.; Lin, L.; Wang, X. Joint detection and identification feature learning for person search. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3415–3424.

- Zhu, X.; Wu, B.; Huang, D.; Zheng, W.S. Fast open-world person re-identification. IEEE Trans. Image Process. 2017, 27, 2286–2300.

- Liu, Y.; Kong, L.; Chen, G.; Xu, F.; Wang, Z. Light-weight AI and IoT collaboration for surveillance video pre-processing. J. Syst. Archit. 2020, 101934.

- Mittal, S. A Survey on optimized implementation of deep learning models on the NVIDIA Jetson platform. J. Syst. Archit. 2019, 97, 428–442.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.

- Wang, Y.; Chen, Z.; Wu, F.; Wang, G. Person re-identification with cascaded pairwise convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1470–1478.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Chen, T.; Ding, S.; Xie, J.; Yuan, Y.; Chen, W.; Yang, Y.; Ren, Z.; Wang, Z. ABD-Net: Attentive but diverse person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 8351–8361.

- Xu, S.; Cheng, Y.; Gu, K.; Yang, Y.; Chang, S.; Zhou, P. Jointly attentive spatial-temporal pooling networks for video-based person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4733–4742.

- Chen, D.; Li, H.; Xiao, T.; Yi, S.; Wang, X. Video person re-identification with competitive snippet-similarity aggregation and co-attentive snippet embedding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1169–1178.

- Fu, Y.; Wang, X.; Wei, Y.; Huang, T. STA: Spatial-temporal attention for large-scale video-based person re-identification. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8287–8294.

- Zhang, Z.; Lan, C.; Zeng, W.; Chen, Z. Multi-Granularity Reference-Aided Attentive Feature Aggregation for Video-based Person Re-identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 14–19 June 2020; pp. 10407–10416.

- Sun, Y.; Zheng, L.; Yang, Y.; Tian, Q.; Wang, S. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline). In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 480–496.

- Li, W.; Zhu, X.; Gong, S. Harmonious attention network for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2285–2294.

- Chang, X.; Hospedales, T.M.; Xiang, T. Multi-level factorisation net for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2109–2118.

- Zhou, K.; Yang, Y.; Cavallaro, A.; Xiang, T. Omni-scale feature learning for person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 3702–3712.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Cubuk, E.D.; Zoph, B.; Mane, D.; Vasudevan, V.; Le, Q.V. AutoAugment: Learning augmentation policies from data. arXiv 2018, arXiv:1805.09501.

- Hermans, A.; Beyer, L.; Leibe, B. In defense of the triplet loss for person re-identification. arXiv 2017, arXiv:1703.07737.

- Ristani, E.; Solera, F.; Zou, R.; Cucchiara, R.; Tomasi, C. Performance measures and a data set for multi-target, multi-camera tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 17–35.

- Gray, D.; Tao, H. Viewpoint invariant pedestrian recognition with an ensemble of localized features. In Proceedings of the European Conference on Computer Vision, Marseille, France, 12–18 October 2008; pp. 262–275.

- Loy, C.C.; Liu, C.; Gong, S. Person re-identification by manifold ranking. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, Australia, 15–20 September 2013; pp. 3567–3571.

- Yi, D.; Lei, Z.; Liao, S.; Li, S.Z. Deep metric learning for person re-identification. In Proceedings of the 2014 IEEE 22nd International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 34–39.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Lin, Y.; Zheng, L.; Zheng, Z.; Wu, Y.; Hu, Z.; Yan, C.; Yang, Y. Improving person re-identification by attribute and identity learning. Pattern Recognit. 2019, 95, 151–161.

- Bai, S.; Tang, P.; Torr, P.H.; Latecki, L.J. Re-ranking via metric fusion for object retrieval and person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 740–749.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Chen, H.; Wang, Y.; Xu, C.; Shi, B.; Xu, C.; Tian, Q.; Xu, C. AdderNet: Do we really need multiplications in deep learning? In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 14–19 June 2020; pp. 1468–1477.

- Ghiasi, G.; Lin, T.Y.; Le, Q.V. NAS-FPN: Learning scalable feature pyramid architecture for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7036–7045.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. arXiv 2019, arXiv:1905.11946.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 14–19 June 2020; pp. 10781–10790.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

- Chen, L.C.; Yang, Y.; Wang, J.; Xu, W.; Yuille, A.L. Attention to scale: Scale-aware semantic image segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 3640–3649.

- Yang, S.; Peng, G. Attention to Refine Through Multi Scales for Semantic Segmentation. In Proceedings of the Pacific Rim Conference on Multimedia, Hefei, China, 21–22 September 2018; pp. 232–241.

- Tao, A.; Sapra, K.; Catanzaro, B. Hierarchical Multi-Scale Attention for Semantic Segmentation. arXiv 2020, arXiv:2005.10821.

- Qian, X.; Fu, Y.; Jiang, Y.G.; Xiang, T.; Xue, X. Multi-scale deep learning architectures for person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5399–5408.

- Zhou, K.; Xiang, T. Torchreid: A library for deep learning person re-identification in PyTorch. arXiv 2019, arXiv:1910.10093.

- Suh, Y.; Wang, J.; Tang, S.; Mei, T.; Mu Lee, K. Part-aligned bilinear representations for person re-identification. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 402–419.

- Shen, Y.; Li, H.; Xiao, T.; Yi, S.; Chen, D.; Wang, X. Deep group-shuffling random walk for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2265–2274.

- Chen, D.; Xu, D.; Li, H.; Sebe, N.; Wang, X. Group consistent similarity learning via deep CRF for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8649–8658.

- Hou, R.; Ma, B.; Chang, H.; Gu, X.; Shan, S.; Chen, X. Interaction-and-aggregation network for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 9317–9326.

- Zheng, Z.; Yang, X.; Yu, Z.; Zheng, L.; Yang, Y.; Kautz, J. Joint discriminative and generative learning for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 2138–2147.

- Li, W.; Zhu, X.; Gong, S. Person re-identification by deep joint learning of multi-loss classification. arXiv 2017, arXiv:1705.04724.

- Liu, X.; Zhao, H.; Tian, M.; Sheng, L.; Shao, J.; Yi, S.; Yan, J.; Wang, X. HydraPlus-Net: Attentive deep features for pedestrian analysis. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 350–359.

| Dataset | Year | Cameras | Resolution | IDs (T-Q-G) | Images (T-Q-G) |
|---|---|---|---|---|---|
| Market1501 [1] | 2015 | 6 | fixed | 751-750-751 | 12936-3368-15913 |
| DukeMTMC [31] | 2017 | 8 | fixed | 702-702-1110 | 16522-2228-17661 |
| VIPeR [32] | 2007 | 2 | fixed | 316-316-316 | 632-632-632 |
| GRID [33] | 2009 | 8 | vary | 125-125-900 | 250-125-900 |

MSFANet Data Augmentation on Market1501

Augmentations considered: horizontal flip, crop, erase, patch, color jitter, affine, rotation, cutout, AutoAugment.

| Augmentations enabled (count) | mAP (%) | Rank-1 (%) |
|---|---|---|
| 3 | 81.3 | 91.0 |
| 3 | 81.8 | 91.1 |
| 1 | 82.2 | 92.4 |
| 2 | 83.1 | 92.5 |
| 5 | 83.5 | 92.6 |
| 5 | 83.9 | 92.8 |
| 4 | 84.6 | 93.3 |
| 4 | 85.0 | 93.9 |
| 5 | 85.1 | 93.9 |
| 5 | 86.0 | 94.5 |
| 5 | 86.8 | 94.6 |
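Two of the augmentations listed in the table above can be sketched in a few lines. This is only an illustration under stated assumptions: "Erase" is taken to mean random erasing of a rectangular region, images are plain nested lists of pixel values, and the function names and the `area_frac` parameter are hypothetical rather than taken from the paper.

```python
import random

def horizontal_flip(image):
    """Mirror an image (list of pixel rows) left to right."""
    return [row[::-1] for row in image]

def random_erase(image, rng, area_frac=0.25, fill=0):
    """Overwrite a randomly placed rectangle covering about area_frac of the image."""
    h, w = len(image), len(image[0])
    eh = max(1, round(h * area_frac ** 0.5))  # erased height
    ew = max(1, round(w * area_frac ** 0.5))  # erased width
    top = rng.randint(0, h - eh)              # randint is inclusive at both ends
    left = rng.randint(0, w - ew)
    out = [row[:] for row in image]           # copy so the input stays untouched
    for r in range(top, top + eh):
        for c in range(left, left + ew):
            out[r][c] = fill
    return out

# Hypothetical 8 x 4 single-channel image filled with ones.
rng = random.Random(0)
image = [[1] * 4 for _ in range(8)]
augmented = random_erase(horizontal_flip(image), rng)
erased_pixels = sum(row.count(0) for row in augmented)
```

Passing an explicit `random.Random` instance keeps the augmentation reproducible, which helps when comparing augmentation combinations as the table does.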

| Loss | Dataset | mAP (%) | Rank-1 (%) |
|---|---|---|---|
| Softmax | Market1501 | 86.2 | 94.3 |
| 0.5 × Triplet + Softmax | Market1501 | 86.8 | 94.6 |
| Softmax | DukeMTMC | 74.4 | 85.9 |
| 0.5 × Triplet + Softmax | DukeMTMC | 76.6 | 86.8 |

| Method | Publication | Backbone | Market1501 Rank-1 (%) | Market1501 mAP (%) | DukeMTMC Rank-1 (%) | DukeMTMC mAP (%) | Params (M) | GFLOPs |
|---|---|---|---|---|---|---|---|---|
| Without ImageNet Pre-training | | | | | | | | |
| † ShuffleNet [15] | CVPR’18 | ShuffleNet | 84.8 | 65 | 71.6 | 49.9 | 5.34 | 0.38 |
| † MobileNetV2 [14] | CVPR’18 | MobileNetV2 | 87 | 69.5 | 75.2 | 55.8 | 4.32 | 0.44 |
| † BraidNet [16] | CVPR’18 | BraidNet | 83.7 | 69.5 | 76.4 | 59.5 | - | - |
| †‡ HAN [25] | CVPR’18 | Inception | 91.2 | 75.7 | 80.5 | 63.8 | 4.52 | 0.53 |
| † PCB [24] | ECCV’18 | ResNet | 92.4 | 77.3 | 81.9 | 65.3 | 23.53 | 2.74 |
| † OSNet [27] | ICCV’19 | OSNet | 93.6 | 81 | 84.7 | 68.6 | 2.23 | 0.98 |
| †‡ MSFANet + '1-2-3' (ours) | - | MSFANet | 92.5 | 82 | 82.6 | 69.4 | 2.52 | 0.82 |
| †‡ MSFANet + '2-2-2' (ours) | - | MSFANet | 92.9 | 82.3 | 82.8 | 69.5 | 2.25 | 0.94 |
| With ImageNet Pre-training | | | | | | | | |
| DaRe [4] | CVPR’18 | DenseNet | 89 | 76 | 80.2 | 64.5 | 6.95 | 1.85 |
| MLFN [26] | CVPR’18 | ResNeXt | 90 | 74.3 | 81 | 62.8 | 22.98 | 2.76 |
| ‡ Bilinear [51] | ECCV’18 | Inception | 91.7 | 79.6 | 84.4 | 69.3 | 4.5 | 0.5 |
| G2G [52] | CVPR’18 | ResNet | 92.7 | 82.5 | 80.7 | 66.4 | 23.5 | 2.7 |
| DeepCRF [53] | CVPR’18 | ResNet | 93.5 | 81.6 | 84.9 | 69.5 | 23.5 | 2.7 |
| IANet [54] | CVPR’19 | ResNet | 94.4 | 84.5 | 87.1 | 73.4 | 23.5 | 2.7 |
| DGNet [55] | CVPR’19 | ResNet | 94.8 | 84.5 | 86.6 | 74.8 | 23.5 | 2.7 |
| OSNet [27] | ICCV’19 | OSNet | 94.8 | 84.5 | 88.6 | 73.5 | 2.2 | 0.98 |
| ‡ MSFANet + '1-2-3' (ours) | - | MSFANet | 94.3 | 86.8 | 86.8 | 76.6 | 2.52 | 0.82 |
| ‡ MSFANet + '2-2-2' (ours) | - | MSFANet | 94.5 | 86.9 | 87.0 | 76.3 | 2.25 | 0.94 |
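The comparison above is reported in terms of Rank-1 and mAP. As a rough sketch of how these two retrieval metrics are usually computed, the code below takes, for each query, the gallery sorted by ascending distance and marked with whether each item shares the query identity. It is a simplification: the same-camera filtering used by standard ReID evaluation protocols is omitted, and the function names are hypothetical.

```python
def average_precision(ranked_matches):
    """AP for one query; ranked_matches[i] is True if the i-th ranked gallery item matches."""
    hits, precision_sum = 0, 0.0
    for rank, is_match in enumerate(ranked_matches, start=1):
        if is_match:
            hits += 1
            precision_sum += hits / rank  # precision at each correct retrieval
    return precision_sum / hits if hits else 0.0

def evaluate(all_ranked_matches):
    """Return (Rank-1, mAP) over queries that have at least one gallery match."""
    valid = [m for m in all_ranked_matches if any(m)]
    rank1 = sum(1 for m in valid if m[0]) / len(valid)
    mean_ap = sum(average_precision(m) for m in valid) / len(valid)
    return rank1, mean_ap

# Two toy queries (hypothetical rankings).
rank1, mean_ap = evaluate([
    [True, False, True],   # correct at ranks 1 and 3: AP = (1/1 + 2/3) / 2
    [False, True, False],  # correct at rank 2 only:   AP = 1/2
])
```

Rank-1 only checks the top result, while mAP rewards placing every correct gallery image early. This is why a method can trail another on Rank-1 and still lead on mAP, as several rows of the table show.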

Comparison to State-of-the-Art Methods on Small ReID Datasets

| Method | Publication | Backbone | VIPeR Rank-1 (%) | VIPeR mAP (%) | GRID Rank-1 (%) | GRID mAP (%) |
|---|---|---|---|---|---|---|
| JLML [56] | CVPR’18 | ResNet | 50.2 | - | 37.5 | - |
| HydraPlus-Net [57] | CVPR’18 | Inception | 56.6 | - | - | - |
| OSNet [27] | ICCV’19 | OSNet | 41.1 | 54.5 | 38.2 | 40.5 |
| †‡ MSFANet - data_aug (ours) | - | MSFANet | 48.9 | 60.3 | 28.9 | 40.8 |
| †‡ MSFANet + data_aug (ours) | - | MSFANet | 52.2 | 64.5 | 30.4 | 41.3 |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Huang, W.; Li, Y.; Zhang, K.; Hou, X.; Xu, J.; Su, R.; Xu, H. An Efficient Multi-Scale Focusing Attention Network for Person Re-Identification. Appl. Sci. 2021, 11, 2010. https://doi.org/10.3390/app11052010
