Constrained Backtracking Matching Pursuit Algorithm for Image Reconstruction in Compressed Sensing

Abstract

1. Introduction

- (1)

- The restricted isometry property (RIP) is analyzed, and the relationship between observed values and signals is derived and demonstrated.

- (2)

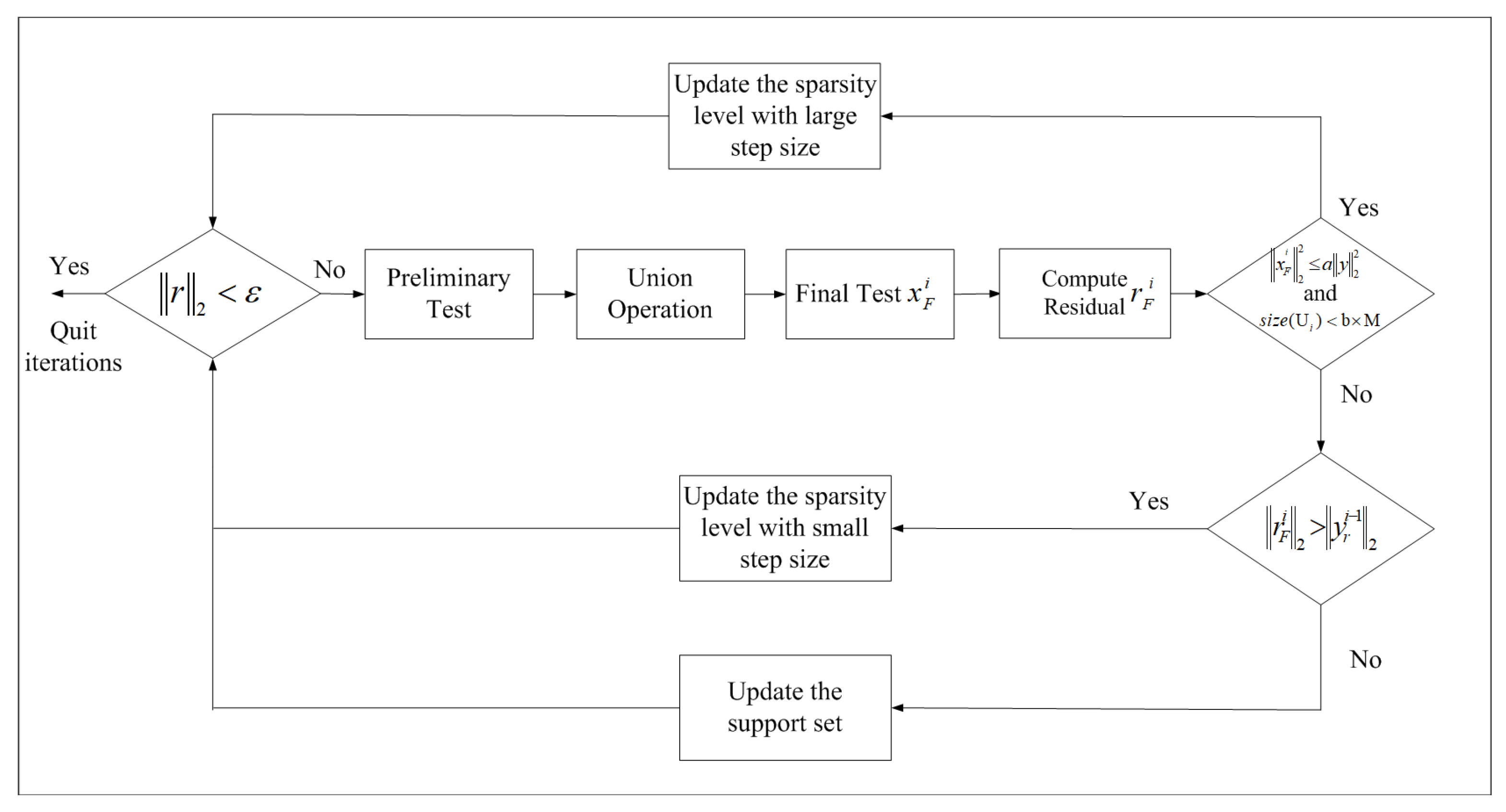

- The reconstruction process is divided into three stages, including the large step size stage, small step size stage, and support set update stage. Different step sizes are used in these stages.

- (3)

- A backtracking threshold operation is proposed, which adopts a composite strategy and uses dedicated parameters to control the different step sizes in the reconstruction process.

- (4)

- The proposed algorithm can achieve satisfactory reconstruction performance and overcome the sensitivity to step size.
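Contribution (1) rests on the RIP. As a standard reminder (not the paper's own derivation), a matrix Φ satisfies the RIP of order K with constant δ_K in (0, 1) if (1 − δ_K)‖x‖₂² ≤ ‖Φx‖₂² ≤ (1 + δ_K)‖x‖₂² for every K-sparse x. The minimal NumPy sketch below assumes a Gaussian measurement matrix with i.i.d. N(0, 1/m) entries (the matrix used in the paper is not specified in this section) and samples the ratio ‖Φx‖₂²/‖x‖₂² over random K-sparse vectors. The helper name `rip_ratio_range` is hypothetical. Because it only samples random supports, it gives an optimistic estimate of δ_K and cannot certify the RIP, which is a worst case over all supports.

```python
import numpy as np

def rip_ratio_range(m, n, k, trials=2000, seed=0):
    """Sample ||Phi x||^2 / ||x||^2 over random k-sparse x (hypothetical helper).

    Phi has i.i.d. N(0, 1/m) entries, so E||Phi x||^2 = ||x||^2.
    Returns the smallest and largest observed ratio; this is an empirical
    probe only and does not certify the RIP constant.
    """
    rng = np.random.default_rng(seed)
    phi = rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n))
    ratios = np.empty(trials)
    for t in range(trials):
        x = np.zeros(n)
        support = rng.choice(n, size=k, replace=False)  # random k-sparse support
        x[support] = rng.normal(size=k)
        ratios[t] = np.sum((phi @ x) ** 2) / np.sum(x ** 2)
    return ratios.min(), ratios.max()

lo, hi = rip_ratio_range(m=128, n=512, k=10)
print(f"observed ratio range: [{lo:.3f}, {hi:.3f}]")
```

The observed range clusters around 1, which is the near-isometry on sparse vectors that the RIP formalizes; shrinking m relative to k widens it.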

2. Preliminaries

2.1. A Review of Compressed Sensing

2.2. A Review of the Greedy Pursuit Algorithms

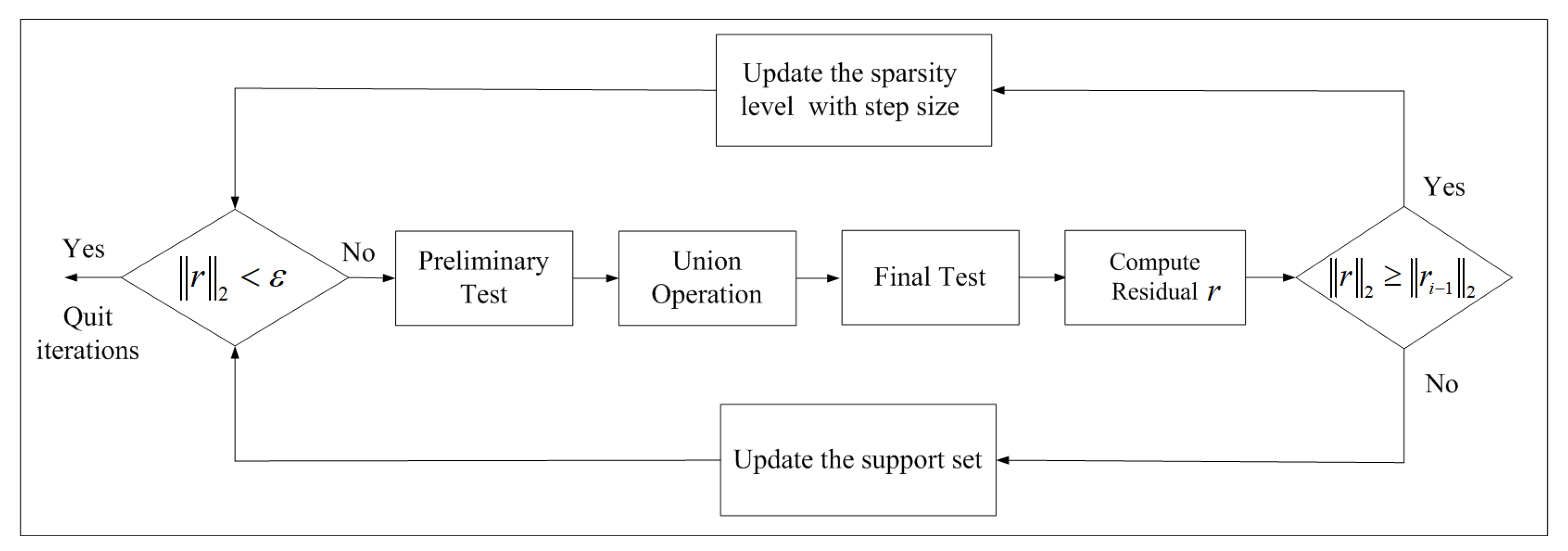

Algorithm 1. Sparsity adaptive matching pursuit (SAMP) algorithm.
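For orientation, the classic SAMP loop of Do et al. (2008) named in Algorithm 1 can be sketched in NumPy as follows. This is a minimal sketch of the commonly published structure (preliminary test, backtracking least squares, stage switch when the residual stops shrinking), not a verbatim reproduction of the paper's Algorithm 1. The function name `samp` and the parameters `step`, `tol`, and `max_iter` are illustrative choices; sorting the trial support and capping the iterations are numerical conveniences added here.

```python
import numpy as np

def samp(phi, y, step=1, tol=1e-6, max_iter=500):
    """Minimal sketch of sparsity adaptive matching pursuit (SAMP).

    phi: (m, n) measurement matrix; y: (m,) measurement vector.
    step: step size s by which the support-size estimate L grows per stage.
    """
    m, n = phi.shape
    size = step                        # current support-size estimate L
    support = np.array([], dtype=int)  # accepted support F
    residual = y.astype(float)
    y_norm = np.linalg.norm(y)
    for _ in range(max_iter):
        # Preliminary test: the L columns most correlated with the residual.
        corr = np.abs(phi.T @ residual)
        candidate = np.argsort(corr)[::-1][:size]
        merged = np.union1d(support, candidate)
        # Backtracking: least squares on the merged set, keep the L largest entries.
        coef, *_ = np.linalg.lstsq(phi[:, merged], y, rcond=None)
        trial = np.sort(merged[np.argsort(np.abs(coef))[::-1][:size]])
        # Residual after projecting y onto the trial support.
        coef_t, *_ = np.linalg.lstsq(phi[:, trial], y, rcond=None)
        new_residual = y - phi[:, trial] @ coef_t
        if np.linalg.norm(new_residual) <= tol * y_norm:
            support = trial                  # converged
            break
        if np.linalg.norm(new_residual) >= np.linalg.norm(residual):
            size = min(size + step, m)       # no progress: next stage, larger L
        else:
            support, residual = trial, new_residual
    # Final least-squares estimate on the accepted support.
    x_hat = np.zeros(n)
    if support.size:
        coef_f, *_ = np.linalg.lstsq(phi[:, support], y, rcond=None)
        x_hat[support] = coef_f
    return x_hat

# Usage: noiseless recovery of a 6-sparse vector from 80 Gaussian measurements.
rng = np.random.default_rng(1)
m, n, k = 80, 256, 6
phi = rng.normal(size=(m, n)) / np.sqrt(m)
x = np.zeros(n)
x[rng.choice(n, size=k, replace=False)] = rng.choice([-1.0, 1.0], size=k) * (1.0 + rng.random(k))
x_hat = samp(phi, phi @ x, step=2)
print("max abs error:", np.max(np.abs(x_hat - x)))
```

In this sketch the support-size estimate can only grow in multiples of `step`, so the outcome depends on that choice; this is the step-size sensitivity that the PSNR tables in Section 4 quantify for different initial step sizes.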

3. The Constrained Backtracking Matching Pursuit Algorithm for Image Reconstruction

Algorithm 2. The proposed constrained backtracking matching pursuit (CBMP) algorithm.

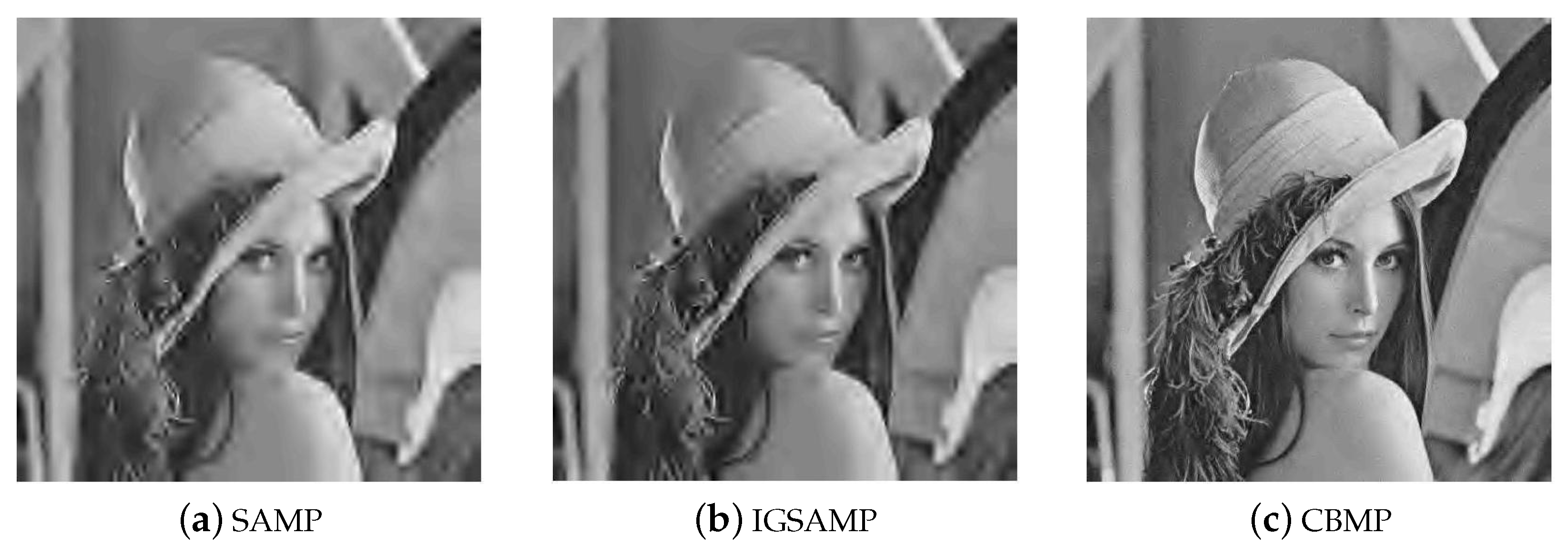

4. Experimental Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A


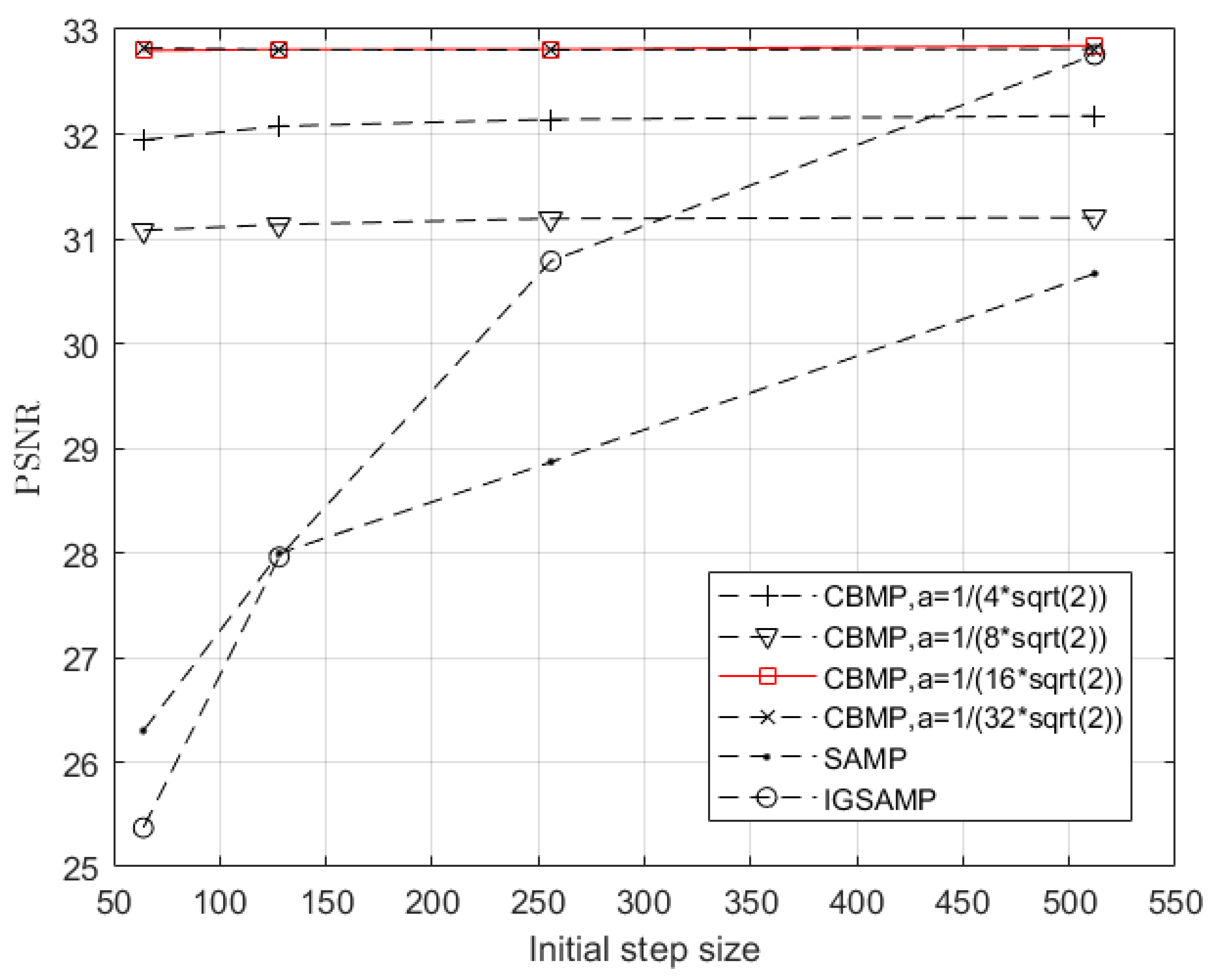

| Initial Step Size | PSNR of SAMP (dB) | PSNR of IGSAMP (dB) | PSNR of CBMP (dB) |
|---|---|---|---|
| 64 | 25.45 | 26.23 | 32.13 |
| 128 | 26.41 | 28.45 | 31.91 |
| 256 | 27.62 | 29.89 | 31.87 |
| 512 | 28.98 | 31.76 | 31.83 |

| Initial Step Size | PSNR of SAMP (dB) | PSNR of IGSAMP (dB) | PSNR of CBMP (dB) |
|---|---|---|---|
| 64 | 26.99 | 27.32 | 33.85 |
| 128 | 28.59 | 28.86 | 34.01 |
| 256 | 30.31 | 31.07 | 33.99 |
| 512 | 31.60 | 32.46 | 34.02 |

| Initial Step Size | PSNR of SAMP (dB) | PSNR of IGSAMP (dB) | PSNR of CBMP (dB) |
|---|---|---|---|
| 64 | 24.04 | 25.33 | 31.46 |
| 128 | 25.19 | 27.85 | 31.42 |
| 256 | 26.87 | 30.54 | 31.40 |
| 512 | 28.44 | 31.10 | 31.38 |

| Initial Step Size | PSNR of SAMP (dB) | PSNR of IGSAMP (dB) | PSNR of CBMP (dB) |
|---|---|---|---|
| 64 | 26.30 | 25.37 | 32.80 |
| 128 | 28.00 | 27.96 | 32.81 |
| 256 | 28.87 | 30.79 | 32.81 |
| 512 | 30.67 | 32.75 | 32.84 |
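The tables above report reconstruction quality as peak signal-to-noise ratio, PSNR = 10·log10(peak² / MSE). A minimal sketch of that standard computation follows, assuming 8-bit grayscale images with peak value 255 (the bit depth is not stated in this excerpt); the function name `psnr` is our own.

```python
import numpy as np

def psnr(reference, reconstructed, peak=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak**2 / MSE)."""
    ref = np.asarray(reference, dtype=np.float64)
    rec = np.asarray(reconstructed, dtype=np.float64)
    if ref.shape != rec.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((ref - rec) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))

# Usage: a uniform error of one grey level gives MSE = 1, so PSNR = 20*log10(255).
original = np.full((8, 8), 100, dtype=np.uint8)
noisy = original.astype(np.int16) + 1
print(round(psnr(original, noisy), 2))  # 48.13
```

Because PSNR is logarithmic in the MSE, a gain of about 3 dB corresponds to roughly halving the mean squared error. For example, across initial step sizes in the first table, SAMP spans 25.45 to 28.98 dB while CBMP stays between 31.83 and 32.13 dB.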

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Bi, X.; Leng, L.; Kim, C.; Liu, X.; Du, Y.; Liu, F. Constrained Backtracking Matching Pursuit Algorithm for Image Reconstruction in Compressed Sensing. Appl. Sci. 2021, 11, 1435. https://doi.org/10.3390/app11041435
