Evaluation of Methodologies and Measures on the Usability of Social Robots: A Systematic Review

Abstract

1. Introduction

2. Research Method

2.1. Research Objectives

- RQ1. What kind of usability evaluation methodologies are deployed in social robot studies?

- To provide a structured taxonomy of the available evaluation methods

- To serve as a guideline that helps practitioners identify an appropriate UX evaluation method

- RQ2. What are the main evaluation dimensions in social robot studies?

- To classify usability evaluation measures addressed in recent studies related to social robot evaluation

2.2. Search Methods and Selection Criteria

2.3. Data Extraction and Synthesis

- Target audience of the robot: Elderly, Children, People who have a specific disease, Ordinary people

- Type of stimuli for evaluation: Text, Image, Video, Live interaction

- Evaluation technique: Questionnaire, Biometrics, Video analysis, Interview

- Main modality of robot interaction: Vision, Audition, Tactition, Thermoception
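The four extraction categories above can be read as a coding scheme applied to each reviewed study. A minimal sketch in Python, assuming one record per study and that a study may take several values per category; the names `StudyRecord` and `study_id` and the example record are hypothetical and do not come from the review.

```python
from dataclasses import dataclass
from enum import Enum


class Audience(Enum):
    ELDERLY = "Elderly"
    CHILDREN = "Children"
    SPECIFIC_DISEASE = "People who have a specific disease"
    ORDINARY = "Ordinary people"


class Stimulus(Enum):
    TEXT = "Text"
    IMAGE = "Image"
    VIDEO = "Video"
    LIVE_INTERACTION = "Live interaction"


class Technique(Enum):
    QUESTIONNAIRE = "Questionnaire"
    BIOMETRICS = "Biometrics"
    VIDEO_ANALYSIS = "Video analysis"
    INTERVIEW = "Interview"


class Modality(Enum):
    VISION = "Vision"
    AUDITION = "Audition"
    TACTITION = "Tactition"
    THERMOCEPTION = "Thermoception"


@dataclass(frozen=True)
class StudyRecord:
    """One coded study; sets allow several values per category (an assumption)."""
    study_id: str  # hypothetical identifier, not used in the review
    audiences: frozenset
    stimuli: frozenset
    techniques: frozenset
    modalities: frozenset


# Made-up record for illustration only.
example = StudyRecord(
    study_id="S01",
    audiences=frozenset({Audience.CHILDREN}),
    stimuli=frozenset({Stimulus.LIVE_INTERACTION}),
    techniques=frozenset({Technique.QUESTIONNAIRE, Technique.VIDEO_ANALYSIS}),
    modalities=frozenset({Modality.VISION, Modality.AUDITION}),
)
```

Using sets rather than single values is a design choice for the sketch: it lets one study contribute to more than one row when the categories are tallied.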

3. Results

3.1. Search Outcome

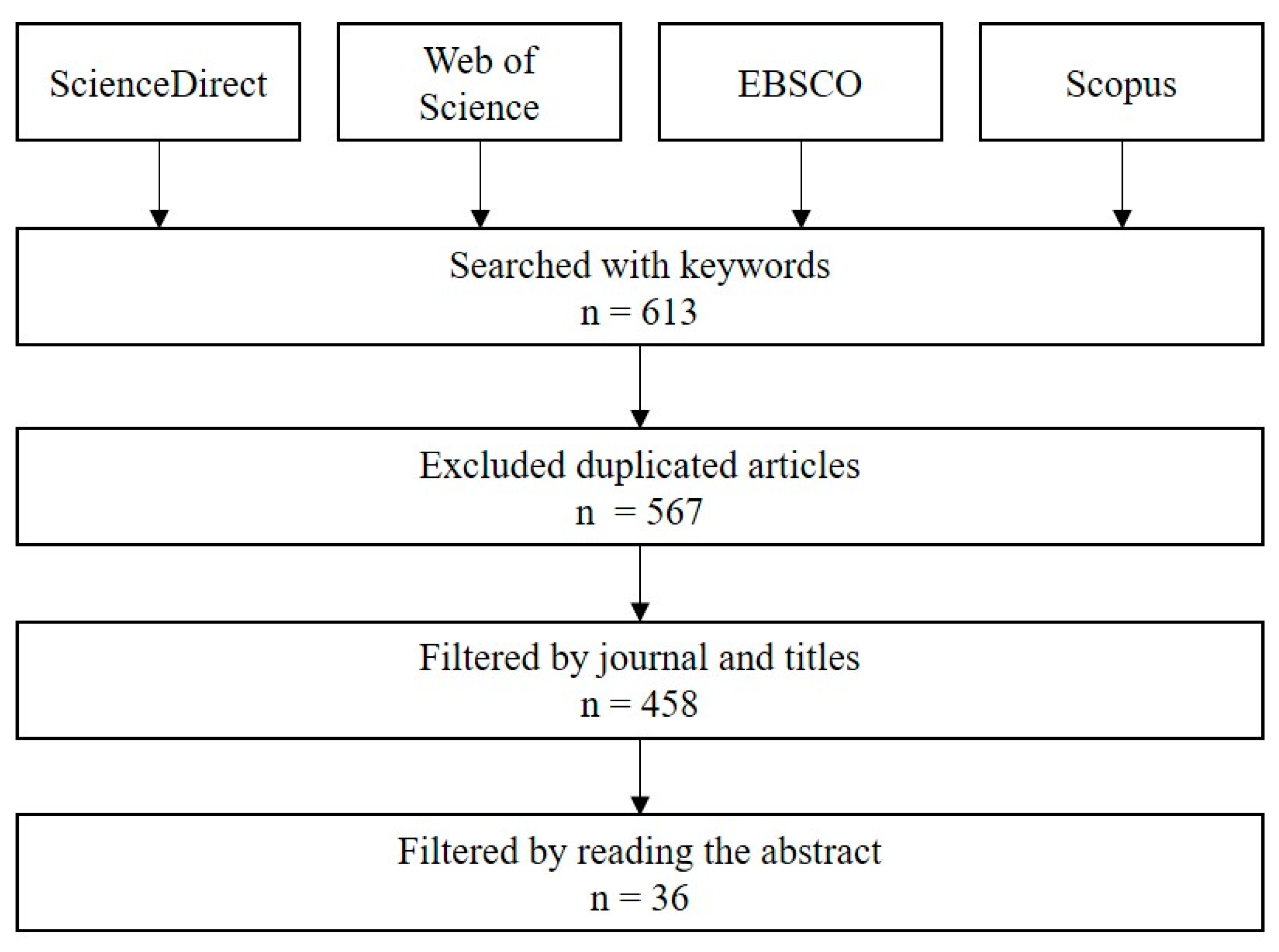

3.1.1. Search Process

3.1.2. Overview of Included Studies

3.2. Evaluation Methods

3.2.1. Type of Stimuli

3.2.2. Evaluation Technique

3.2.3. Criteria for Selecting Participants

3.3. Evaluation Dimensions

3.3.1. Consumer Acceptance of New Technology Models

3.3.2. Referred Evaluation Tools of Subjective Measures

3.3.3. Most Frequently Used Measures from Recent Studies

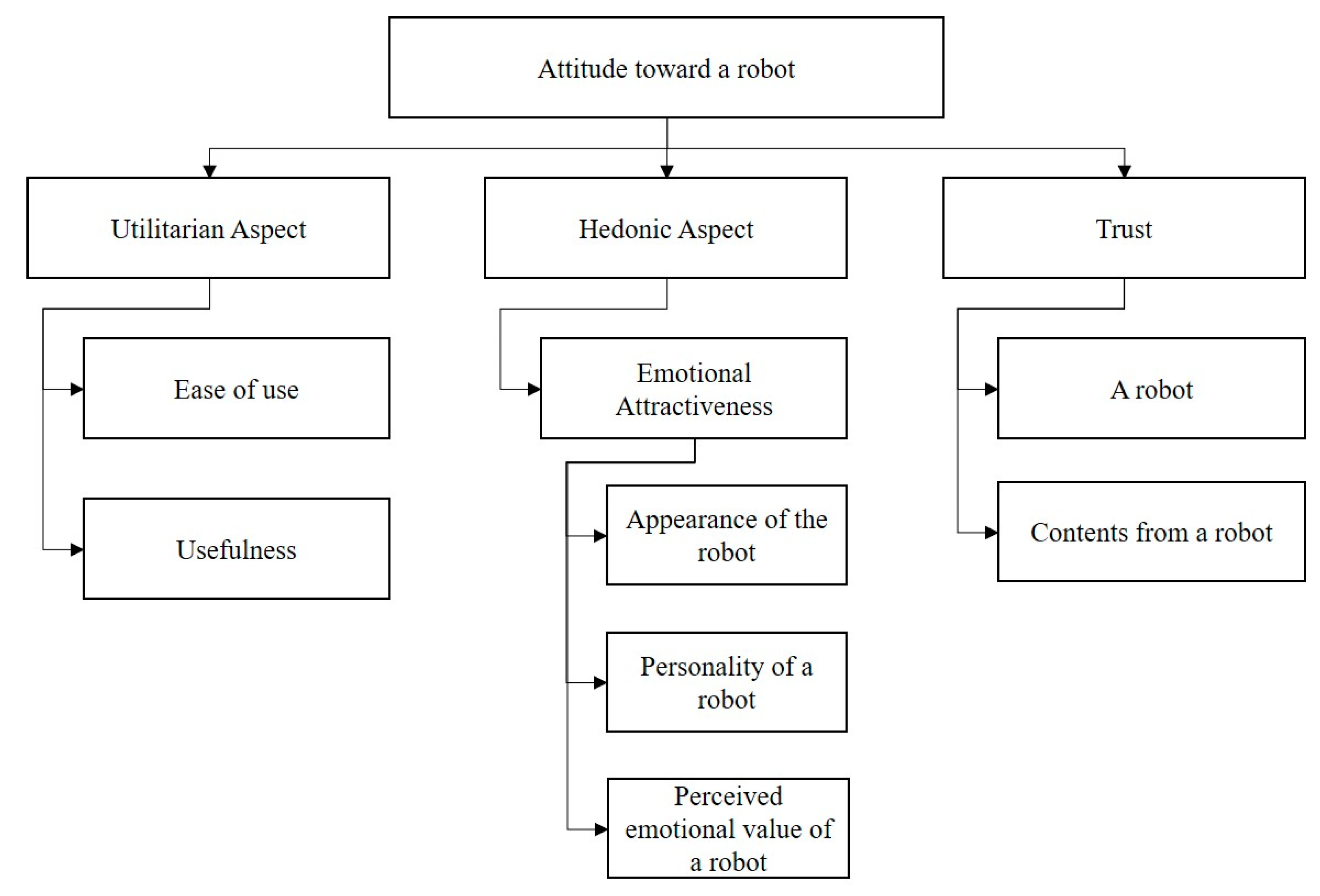

3.3.4. Categorization of Social Robot’s Evaluation Measures

4. Discussion

4.1. Proper Combination of Stimuli and Evaluation Techniques

4.2. Suggestion of Stimuli and Evaluation Technique by Assessment Conditions

5. Conclusions

- For planning and designing new social robots

- An opportunity to consider the significant influencing factors in social robot design from the planning phase of development

- For evaluating new social robots

- Makes it easier to design survey questionnaires by drawing on the full set of previously used evaluation measures

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- KPMG. Social Robots. 2016. Available online: https://assets.kpmg/content/dam/kpmg/pdf/2016/06/social-robots.pdf (accessed on 1 February 2021).

- Lee, K.M.; Park, N.; Song, H. Can a Robot be Perceived as a Developing Creature? Effects of Artificial Developments on Social Presence and Social Responses toward Robots in Human-Robot Interaction. In Proceedings of the International Communication Association Conference, New Orleans, LA, USA, 27–31 May 2004. [Google Scholar]

- Jung, Y.; Lee, K.M. Effects of physical embodiment on social presence of social robots. In Proceedings of PRESENCE; Temple University: Philadelphia, PA, USA, 2004; pp. 80–87. [Google Scholar]

- Breazeal, C. Designing Sociable Machines. In Methodologies and Software Engineering for Agent Systems; Springer: Berlin/Heidelberg, Germany, 2002; pp. 149–156. [Google Scholar]

- Fong, T.; Nourbakhsh, I.; Dautenhahn, K. A survey of socially interactive robots. Robot. Auton. Syst. 2003, 42, 143–166. [Google Scholar] [CrossRef]

- Weber, J. Ontological and Anthropological Dimensions of Social Robotics. In Proceedings of the Hard Problems and Open Challenges in Robot-Human Interaction, Hatfield, UK, 12–15 April 2005; p. 121. [Google Scholar]

- Agrawal, S.; Williams, M.-A. Robot Authority and Human Obedience. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 57–58. [Google Scholar]

- Lopez, A.; Ccasane, B.; Paredes, R.; Cuellar, F. Effects of Using Indirect Language by a Robot to Change Human Attitudes. In Proceedings of the Companion of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 193–194. [Google Scholar]

- Xu, Q.; Ng, J.S.L.; Cheong, Y.L.; Tan, O.Y.; Bin Wong, J.; Tay, B.T.C.; Park, T. Effect of scenario media on human-robot interaction evaluation. In Proceedings of the 7th annual ACM/IEEE international conference on Human-Robot Interaction–HRI’12, Boston, MA, USA, 5–8 March 2012; pp. 275–276. [Google Scholar]

- Deutsch, I.; Erel, H.; Paz, M.; Hoffman, G.; Zuckerman, O. Home robotic devices for older adults: Opportunities and concerns. Comput. Hum. Behav. 2019, 98, 122–133. [Google Scholar] [CrossRef]

- Hirokawa, E.; Suzuki, K. Design of a Huggable Social Robot with Affective Expressions Using Projected Images. Appl. Sci. 2018, 8, 2298. [Google Scholar] [CrossRef]

- Thimmesch-Gill, Z.; Harder, K.A.; Koutstaal, W. Perceiving emotions in robot body language: Acute stress heightens sensitivity to negativity while attenuating sensitivity to arousal. Comput. Hum. Behav. 2017, 76, 59–67. [Google Scholar] [CrossRef]

- Woods, S. Exploring the design space of robots: Children’s perspectives. Interact. Comput. 2006, 18, 1390–1418. [Google Scholar] [CrossRef]

- Schilbach, L.; Timmermans, B.; Reddy, V.; Costall, A.; Bente, G.; Schlicht, T.; Vogeley, K. Toward a second-person neuroscience. Behav. Brain Sci. 2013, 36, 393–414. [Google Scholar] [CrossRef]

- Bente, G.; Feist, A.; Elder, S. Person perception effects of computer-simulated male and female head movement. J. Nonverbal Behav. 1996, 20, 213–228. [Google Scholar] [CrossRef]

- Evers, V.; Winterboer, A.K.; Pavlin, G.; Groen, F.C.A. The Evaluation of Empathy, Autonomy and Touch to Inform the Design of an Environmental Monitoring Robot. In Proceedings of the Computer Vision; Springer: Berlin/Heidelberg, Germany, 2010; Volume 6414, pp. 285–294. [Google Scholar]

- Di Nuovo, A.; Varrasi, S.; Conti, D.; Bamsforth, J.; Lucas, A.; Soranzo, A.; McNamara, J. Usability Evaluation of a Robotic System for Cognitive Testing. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Daegu, Korea, 11–14 March 2019; pp. 588–589. [Google Scholar]

- Rossi, S.; Santangelo, G.; Staffa, M.; Varrasi, S.; Conti, D.; Di Nuovo, A. Psychometric Evaluation Supported by a Social Robot: Personality Factors and Technology Acceptance. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; pp. 802–807. [Google Scholar]

- Pan, M.K.; Croft, E.A.; Niemeyer, G. Evaluating Social Perception of Human-to-Robot Handovers Using the Robot Social Attributes Scale (RoSAS). In Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, 5–8 March 2018; pp. 443–451. [Google Scholar]

- Rouaix, N.; Retru-Chavastel, L.; Rigaud, A.-S.; Monnet, C.; Lenoir, H.; Pino, M. Affective and Engagement Issues in the Conception and Assessment of a Robot-Assisted Psychomotor Therapy for Persons with Dementia. Front. Psychol. 2017, 8, 950. [Google Scholar] [CrossRef] [PubMed]

- Shahid, S.; Krahmer, E.; Swerts, M. Child–robot interaction across cultures: How does playing a game with a social robot compare to playing a game alone or with a friend? Comput. Hum. Behav. 2014, 40, 86–100. [Google Scholar] [CrossRef]

- Park, E.; Lee, J. I am a warm robot: The effects of temperature in physical human–robot interaction. Robot 2014, 32, 133–142. [Google Scholar] [CrossRef]

- De Graaf, M.M.; Allouch, S.B.; van Dijk, J.A. Long-term evaluation of a social robot in real homes. Interact. Stud. 2016, 17, 462–491. [Google Scholar] [CrossRef]

- Jeong, S. Designing facial expressions of an educational assistant robot by contextual methods. Arch. Des. Res. 2013, 26, 409–435. [Google Scholar]

- Martínez-Miranda, J.; Pérez-Espinosa, H.; Espinosa-Curiel, I.; Avila-George, H.; Rodríguez-Jacobo, J. Age-based differences in preferences and affective reactions towards a robot’s personality during interaction. Comput. Hum. Behav. 2018, 84, 245–257. [Google Scholar] [CrossRef]

- Henkemans, O.A.B.; Bierman, B.P.; Janssen, J.; Looije, R.; Neerincx, M.A.; Van Dooren, M.M.; De Vries, J.L.; Van Der Burg, G.J.; Huisman, S.D. Design and evaluation of a personal robot playing a self-management education game with children with diabetes type 1. Int. J. Hum.-Comput. Stud. 2017, 106, 63–76. [Google Scholar] [CrossRef]

- Kim, M.-G.; Suzuki, K. A card-playing humanoid playmate for human behavioral analysis. Entertain. Comput. 2012, 3, 103–109. [Google Scholar] [CrossRef]

- Niculescu, A.; Van Dijk, E.M.; Nijholt, A.; See, S.L. The influence of voice pitch on the evaluation of a social robot receptionist. In Proceedings of the 2011 International Conference on User Science and Engineering (i-USEr), Shah Alam, Selangor, Malaysia, 29 November–1 December 2011; pp. 18–23. [Google Scholar]

- Kolanowski, A.; Litaker, M.; Buettner, L.; Moeller, J.; Costa, P.T., Jr. A Randomized Clinical Trial of Theory-Based Activities for the Behavioral Symptoms of Dementia in Nursing Home Residents. J. Am. Geriatr. Soc. 2011, 59, 1032–1041. [Google Scholar] [CrossRef] [PubMed]

- Hammar, L.M.; Emami, A.; Götell, E.; Engström, G. The impact of caregivers’ singing on expressions of emotion and resistance during morning care situations in persons with dementia: An intervention in dementia care. J. Clin. Nurs. 2011, 20, 969–978. [Google Scholar] [CrossRef]

- Götell, E.; Brown, S.; Ekman, S.-L. The influence of caregiver singing and background music on vocally expressed emotions and moods in dementia care. Int. J. Nurs. Stud. 2009, 46, 422–430. [Google Scholar] [CrossRef] [PubMed]

- Gamberini, L.; Spagnolli, A.; Prontu, L.; Furlan, S.; Martino, F.; Solaz, B.R.; Alcañiz, M.; Lozano, J.A. How natural is a natural interface? An evaluation procedure based on action breakdowns. Pers. Ubiquitous Comput. 2011, 17, 69–79. [Google Scholar] [CrossRef]

- Cockton, G.; Lavery, D. A framework for usability problem extraction. In INTERACT’99; IOS Press: London, UK, August 1999; pp. 344–352. [Google Scholar]

- Sefidgar, Y.S.; MacLean, K.E.; Yohanan, S.; Van Der Loos, H.M.; Croft, E.A.; Garland, E.J. Design and Evaluation of a Touch-Centered Calming Interaction with a Social Robot. IEEE Trans. Affect. Comput. 2016, 7, 108–121. [Google Scholar] [CrossRef]

- Mazzei, D.; Greco, A.; Lazzeri, N.; Zaraki, A.; Lanata, A.; Igliozzi, R.; Mancini, A.; Stoppa, F.; Scilingo, E.P.; Muratori, F.; et al. Robotic Social Therapy on Children with Autism: Preliminary Evaluation through Multi-parametric Analysis. In Proceedings of the 2012 International Conference on Privacy, Security, Risk and Trust and 2012 International Conference on Social Computing, Liverpool, UK, 25–27 June 2012; pp. 766–771. [Google Scholar]

- Mirza-Babaei, P.; Long, S.; Foley, E.; McAllister, G. Understanding the Contribution of Biometrics to Games User Research. In Proceedings of the DiGRA’11—Proceedings of the 2011 DiGRA International Conference: Think Design Play, Hilversum, The Netherlands, 14–17 September 2011. [Google Scholar]

- Aziz, A.A.; Moganan, F.F.M.; Ismail, A.; Lokman, A.M. Autistic Children’s Kansei Responses Towards Humanoid-Robot as Teaching Mediator. Procedia Comput. Sci. 2015, 76, 488–493. [Google Scholar] [CrossRef]

- Anzalone, S.M.; Boucenna, S.; Ivaldi, S.; Chetouani, M. Evaluating the Engagement with Social Robots. Int. J. Soc. Robot. 2015, 7, 465–478. [Google Scholar] [CrossRef]

- Davis, F. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340. [Google Scholar] [CrossRef]

- Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User acceptance of computer technology: A comparison of two. Manag. Sci. 1989, 35, 982. [Google Scholar] [CrossRef]

- Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. Extrinsic and Intrinsic Motivation to Use Computers in the Workplace. J. Appl. Soc. Psychol. 1992, 22, 1111–1132. [Google Scholar] [CrossRef]

- Atkinson, M.; Kydd, C. Individual characteristics associated with World Wide Web use: An empirical study of playfulness and motivation. ACM SIGMIS Database 1997, 28, 53–62. [Google Scholar] [CrossRef]

- Moon, J.-W.; Kim, Y.-G. Extending the TAM for a World-Wide-Web context. Inf. Manag. 2001, 38, 217–230. [Google Scholar] [CrossRef]

- Mun, Y.Y.; Hwang, Y. Predicting the use of web-based information systems: Self-efficacy, enjoyment, learning goal orientation, and the technology acceptance model. Int. J. Hum.-Comput. Stud. 2003, 59, 431–449. [Google Scholar]

- Van der Heijden, H. User acceptance of hedonic information systems. MIS Q. 2004, 14, 695–704. [Google Scholar] [CrossRef]

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425. [Google Scholar] [CrossRef]

- Venkatesh, V.; Thong, J.Y.L.; Xu, X. Consumer Acceptance and Use of Information Technology: Extending the Unified Theory of Acceptance and Use of Technology. MIS Q. 2012, 36, 157–178. [Google Scholar] [CrossRef]

- Heerink, M.; Kröse, B.; Evers, V.; Wielinga, B. Assessing Acceptance of Assistive Social Agent Technology by Older Adults: The Almere Model. Int. J. Soc. Robot. 2010, 2, 361–375. [Google Scholar] [CrossRef]

- Moore, G.C.; Benbasat, I. Development of an Instrument to Measure the Perceptions of Adopting an Information Technology Innovation. Inf. Syst. Res. 1991, 2, 192–222. [Google Scholar] [CrossRef]

- Wetzlinger, W.; Auinger, A.; Dörflinger, M. Comparing Effectiveness, Efficiency, Ease of Use, Usability and User Experience When Using Tablets and Laptops. In Proceedings of the Mining Data for Financial Applications; Springer: Berlin/Heidelberg, Germany, 2014; pp. 402–412. [Google Scholar]

- Rau, P.-L.P.; Li, Y.; Li, D. Effects of communication style and culture on ability to accept recommendations from robots. Comput. Hum. Behav. 2009, 25, 587–595. [Google Scholar] [CrossRef]

- Wagner, A.R.; Robinette, P. Towards robots that trust: Human subject validation of the situational conditions for trust. Interact. Stud. 2015, 16, 89–117. [Google Scholar] [CrossRef]

- Hancock, P.A.; Billings, D.R.; Schaefer, K.E.; Chen, J.Y.C.; De Visser, E.J.; Parasuraman, R. A Meta-Analysis of Factors Affecting Trust in Human-Robot Interaction. Hum. Factors: J. Hum. Factors Ergon. Soc. 2011, 53, 517–527. [Google Scholar] [CrossRef]

- Alenljung, B.; Lindblom, J.; Andreasson, R.; Ziemke, T. User Experience in Social Human-Robot Interaction. In Rapid Automation; IGI Global: Hershey, PA, USA, 2019; pp. 1468–1490. [Google Scholar]

- Bickmore, T.; Schulman, D. The comforting presence of relational agents. In CHI ’06 Extended Abstracts on Human Factors in Computing Systems; ACM: New York, NY, USA, 2006; pp. 550–555. [Google Scholar] [CrossRef]

- Nakanishi, J.; Sumioka, H.; Ishiguro, H. A huggable communication medium can provide sustained listening support for special needs students in a classroom. Comput. Hum. Behav. 2019, 93, 106–113. [Google Scholar] [CrossRef]

- Niculescu, A.; Van Dijk, E.M.; Nijholt, A.; Li, H.; See, S.L. Making Social Robots More Attractive: The Effects of Voice Pitch, Humor and Empathy. Int. J. Soc. Robot. 2013, 5, 171–191. [Google Scholar] [CrossRef]

- Sinoo, C.; Van Der Pal, S.M.; Henkemans, O.B.; Keizer, A.; Bierman, B.P.; Looije, R.; Neerincx, M.A. Friendship with a robot: Children’s perception of similarity between a robot’s physical and virtual embodiment that supports diabetes self-management. Patient Educ. Couns. 2018, 101, 1248–1255. [Google Scholar] [CrossRef] [PubMed]

- Leite, I.; Martinho, C.; Pereira, A.T.; Paiva, A. As Time goes by: Long-term evaluation of social presence in robotic companions. In Proceedings of the RO-MAN 2009—The 18th IEEE International Symposium on Robot and Human Interactive Communication, Toyama, Japan, 27 September–2 October 2009; pp. 669–674. [Google Scholar]

- Salem, M.; Kopp, S.; Wachsmuth, I.; Rohlfing, K.; Joublin, F. Generation and Evaluation of Communicative Robot Gesture. Int. J. Soc. Robot. 2012, 4, 201–217. [Google Scholar] [CrossRef]

- Lee, K.M.; Jung, Y.; Kim, J.; Kim, S.R. Are physically embodied social agents better than disembodied social agents?: The effects of physical embodiment, tactile interaction, and people’s loneliness in human–robot interaction. Int. J. Hum. Comput. Stud. 2006, 64, 962–973. [Google Scholar] [CrossRef]

- Edwards, C.; Edwards, A.; Stoll, B.; Lin, X.; Massey, N. Evaluations of an artificial intelligence instructor’s voice: Social Identity Theory in human-robot interactions. Comput. Hum. Behav. 2019, 90, 357–362. [Google Scholar] [CrossRef]

- Tanaka, F.; Movellan, J.R.; Fortenberry, B.; Aisaka, K. Daily HRI evaluation at a classroom environment: Reports from dance interaction experiments. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, Salt Lake City, UT, USA, 2–4 March 2006; pp. 3–9. [Google Scholar]

- Bickmore, T.; Caruso, L.; Clough-Gorr, K. Acceptance and usability of a relational agent interface by urban older adults. In Proceedings of the Systems, Portland, OR, USA, 7 April 2005; p. 1212. [Google Scholar] [CrossRef]

- Lindblom, J.; Alenljung, B. The ANEMONE: Theoretical Foundations for UX Evaluation of Action and Intention Recognition in Human-Robot Interaction. Sensors 2020, 20, 4284. [Google Scholar] [CrossRef] [PubMed]

| Type of Stimuli | Description | Count | Ratio |
|---|---|---|---|
| Text | A text stimulus can be a short description summarizing the product concept. | 1 | 3% |
| Image | An image stimulus is used to show the appearance of the product in perspective. | 4 | 10% |
| Video | A video stimulus is a more realistic and dynamic version of a storyboard that communicates a possible use case clearly. | 4 | 10% |
| Live Interaction | A working prototype or a finished product is given to users to interact with freely. | 31 | 77% |

| Evaluation Technique | Description | Count | Ratio |
|---|---|---|---|
| Questionnaires | Easy to gather a large amount of data and to analyze it statistically. Cannot interact with respondents; hard to interpret respondents’ answers fully. | 29 | 62% |
| Video analysis | Good for observing and understanding users’ behavior in a natural setting. Requires much time and effort to analyze the video. | 10 | 21% |
| Interview | Can ask follow-up questions after users’ answers to understand their intentions more clearly. Depends on the interviewer’s ability. | 6 | 13% |
| Biometrics | Can obtain objective data directly from users. Hard to control unintended fluctuations in users’ data. | 2 | 4% |
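The Ratio columns in the two tables above are rounded shares of each table's total count. A minimal sketch that recomputes the shares to one decimal place from the counts; the function name `shares` is hypothetical, and the published whole-number percentages may have been rounded by a different rule than the one used here.

```python
def shares(counts: dict[str, int]) -> dict[str, float]:
    """Return each count as a percentage of the total, to one decimal place."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


# Counts copied from the two tables above.
stimuli = {"Text": 1, "Image": 4, "Video": 4, "Live Interaction": 31}
techniques = {
    "Questionnaires": 29,
    "Video analysis": 10,
    "Interview": 6,
    "Biometrics": 2,
}

print(shares(stimuli))
print(shares(techniques))
```

The stimulus counts sum to 40 and the technique counts to 47, so the two Ratio columns are computed against different totals.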

| Evaluation Tool | Main Measures |
|---|---|
| SASSI (Subjective Assessment of Speech System Interfaces) [2] | system response accuracy, likeability, cognitive demand, annoyance, habitability, speed |
| AttrakDiff/AttrakDiff 2 [3] | pragmatic quality, hedonic quality identification, hedonic quality stimulation, attraction |
| NARS (Negative Attitude toward Robots Scale) [4] | negative attitude toward situations of interaction with robots, negative attitude toward social influence of robots, negative attitude toward emotions in interaction with robots |
| Godspeed scale [5] | anthropomorphism, animacy, likeability, perceived intelligence, perceived safety |
| RoSAS (Robot Social Attributes Scale) [19] | competence, warmth, discomfort |
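Each tool above groups questionnaire items under the listed dimensions. A minimal sketch of turning item ratings into one score per dimension, assuming the score is the mean of that dimension's items (a common convention, not something this section states); the function name `dimension_scores`, the 1 to 7 rating scale, and all ratings are made up for illustration.

```python
from statistics import mean


def dimension_scores(ratings: dict[str, list[int]]) -> dict[str, float]:
    """Average the item ratings within each dimension."""
    return {dim: float(mean(items)) for dim, items in ratings.items()}


# Made-up ratings on a hypothetical 1-7 scale, keyed by the three
# RoSAS dimensions named in the table above.
ratings = {
    "competence": [5, 6, 5],
    "warmth": [4, 4, 5],
    "discomfort": [2, 1, 2],
}

print(dimension_scores(ratings))
```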

| Measures | Count | Ratio (%) | Reference |
|---|---|---|---|
| Easy to use | 8 | 22% | [9,17,18,23,28,49,56,57] |
| Simple (Complicated) | 4 | 11% | [17,28,57,58] |
| Easy to understand how to use | 3 | 8% | [37,56,59] |
| Undemanding (Demanding, Challenging, Cumbersome) | 3 | 8% | [17,28,52] |
| In control (Out of control) | 3 | 8% | [10,28,52] |
| Need help to use | 2 | 6% | [17,58] |
| Clear to understand | 2 | 6% | [28,52] |
| Easy to learn (Hard to learn) | 1 | 3% | [17] |
| Consistent (Inconsistent) | 1 | 3% | [17] |
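The table above gives ratios without stating the total they are taken from. A minimal sketch that searches for totals consistent with the reported figures, assuming each ratio is `count / total` rounded to a whole percent with Python's built-in `round`; the function name `consistent_totals` and the search limit of 100 are hypothetical choices.

```python
def consistent_totals(rows, max_total=100):
    """Totals N for which round(100 * count / N) matches every reported ratio."""
    return [
        n
        for n in range(1, max_total + 1)
        if all(round(100 * count / n) == ratio for count, ratio in rows)
    ]


# (count, reported ratio in %) pairs copied from the table above.
rows = [(8, 22), (4, 11), (3, 8), (2, 6), (1, 3)]

print(consistent_totals(rows))
```

Under these assumptions, 36 is the only total between 1 and 100 that reproduces all five distinct ratios, which suggests the ratios are shares of 36 items, although the section does not say what that denominator represents.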

| Measures | Count | Ratio (%) | Reference |
|---|---|---|---|
| Usefulness | 4 | 11% | [9,18,23,52] |
| Competent (Incompetent) | 3 | 8% | [10,51,60] |
| Responsive | 3 | 8% | [10,21,37] |
| Helpful (Unhelpful) | 2 | 6% | [28,57] |
| New (Common): Task | 2 | 6% | [28,57] |
| Knowledgeable | 2 | 6% | [10,51] |
| Informative: Contents | 2 | 6% | [28,57] |
| Related: Contents | 2 | 6% | [28,57] |
| Flexible: Contents | 2 | 6% | [28,57] |
| Functional | 1 | 3% | [17] |
| Capable | 1 | 3% | [10] |
| Expert (Inexpert) | 1 | 3% | [51] |
| Bright (Stupid) | 1 | 3% | [51] |
| Trained (Untrained) | 1 | 3% | [51] |
| Informed (Uninformed) | 1 | 3% | [51] |

| Measures | Count | Ratio (%) | Reference |
|---|---|---|---|
| Attractive/Appealing/Desirable | 4 | 11% | [23,37,51,61] |
| Presentable (Unpresentable) | 2 | 6% | [28,57] |
| Inviting (Rejecting) | 2 | 6% | [28,57] |

| Measures | Count | Ratio (%) | Reference |
|---|---|---|---|
| Anthropomorphism: Lifelike/Humanlike/Natural | 4 | 11% | [11,22,23,61] |
| Scary/Fright | 3 | 8% | [8,19,24] |
| Sad | 3 | 8% | [13,24,25] |
| Angry | 3 | 8% | [13,24,25] |
| Worried/Depressing | 2 | 6% | [34,37] |
| Lively | 2 | 6% | [37,60] |
| Organic | 1 | 3% | [19] |
| Strange | 1 | 3% | [19] |
| Dangerous | 1 | 3% | [19] |
| Upset | 1 | 3% | [38] |
| Amusing | 1 | 3% | [37] |
| Alive | 1 | 3% | [27] |
| Elegant (Rough) | 1 | 3% | [57] |
| Strong (Weak) | 1 | 3% | [57] |
| Tense | 1 | 3% | [61] |

| Measures | Count | Ratio (%) | Reference |
|---|---|---|---|
| Actively engaged | 7 | 19% | [20,28,57,58,60,61,62] |
| Nice/Kind/Good (Awful/Unkind/Bad) | 6 | 17% | [11,19,28,57,61,63] |
| Confident (Insecure) | 4 | 11% | [10,17,28,57] |
| At ease/Relaxed/Calm | 4 | 11% | [20,28,34,57] |
| Sociable (Unsociable) | 3 | 8% | [18,23,61] |
| Aggressive/Offensive | 3 | 8% | [13,19,37] |
| Interactive | 3 | 8% | [13,19,51] |
| Companionship/As a co-worker (Bossy) | 3 | 8% | [13,23,51] |
| Perceived emotional stability/Insensitive (Sensitive) | 2 | 6% | [22,61] |
| Independent (Dependent) | 2 | 6% | [10,16] |
| Exciting (Lame) | 2 | 6% | [57,61] |
| Sympathetic (Unsympathetic) | 2 | 6% | [51,60] |
| Receptive | 1 | 3% | [28] |
| Conscious (Unconscious) | 1 | 3% | [11] |
| Perceived pet likeness | 1 | 3% | [22] |
| Extrovert (Introvert) | 1 | 3% | [57] |
| Rational (Emotional) | 1 | 3% | [57] |
| Familiarity | 1 | 3% | [27] |
| Sincere | 1 | 3% | [51] |
| Shy | 1 | 3% | [13] |

| Measures | Count | Ratio (%) | Reference |
|---|---|---|---|
| Pleasant (Unpleasant) | 9 | 25% | [11,19,20,25,26,27,28,57,61] |
| Friendly/Could be a friend (Unfriendly) | 8 | 22% | [11,13,22,28,37,51,57,61] |
| Anxiety towards a robot | 4 | 11% | [9,18,20,23] |
| Happy | 4 | 11% | [13,19,24,25] |
| Satisfied (Frustrated) | 4 | 11% | [28,34,57,61] |
| Close/Connected (Distant) | 3 | 8% | [16,21,61] |
| Empathetic (Not empathetic) | 2 | 6% | [16,57] |
| Friendly communicative | 1 | 3% | [60] |
| Compassionate | 1 | 3% | [19] |
| Stimulating | 1 | 3% | [37] |
| Surprise | 1 | 3% | [24] |
| Can spend a good time with | 1 | 3% | [61] |
| Entertaining | 1 | 3% | [61] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jung, M.; Lazaro, M.J.S.; Yun, M.H. Evaluation of Methodologies and Measures on the Usability of Social Robots: A Systematic Review. Appl. Sci. 2021, 11, 1388. https://doi.org/10.3390/app11041388
