Hierarchical Concept Learning by Fuzzy Semantic Cells

Abstract

1. Introduction

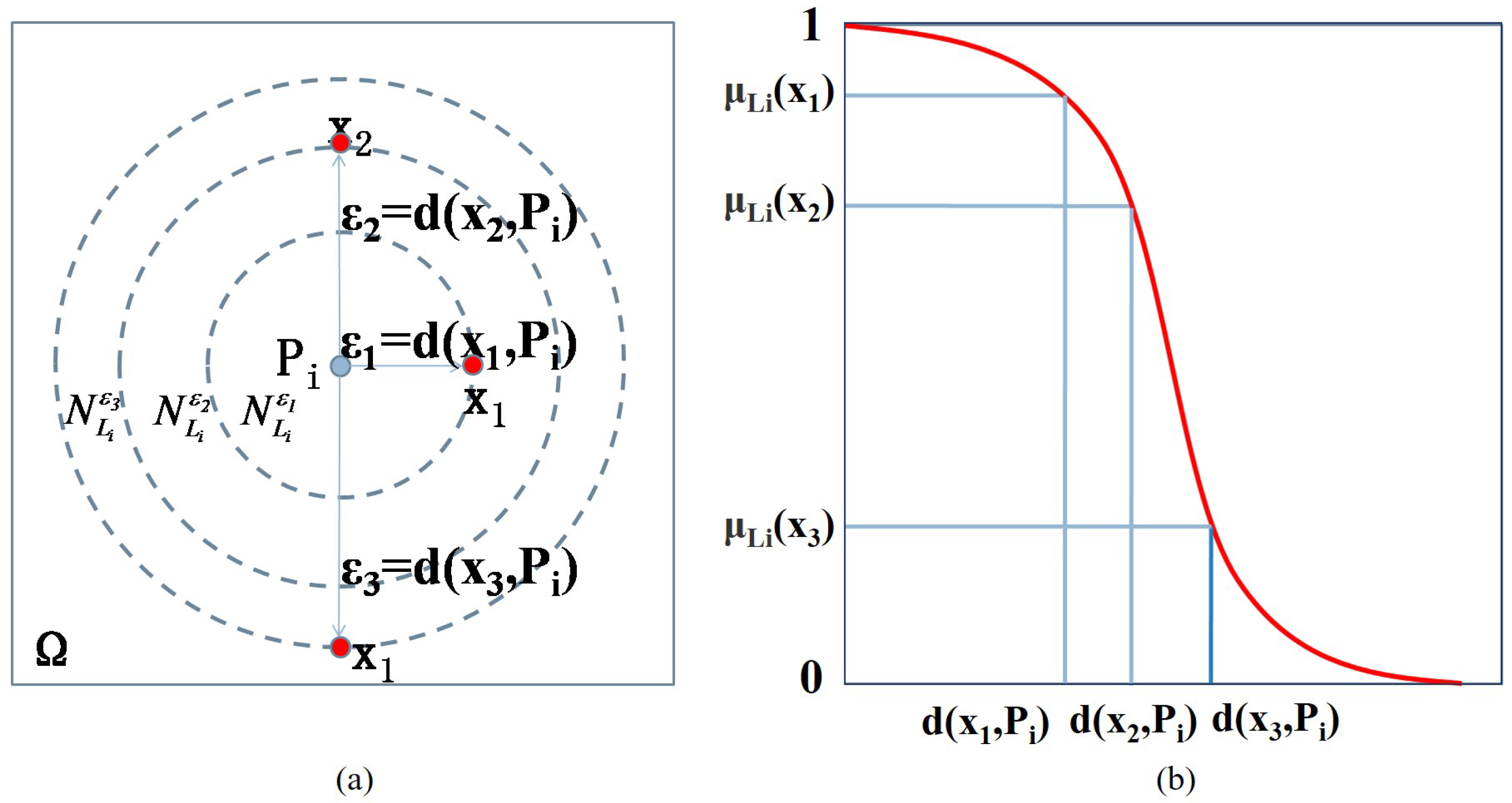

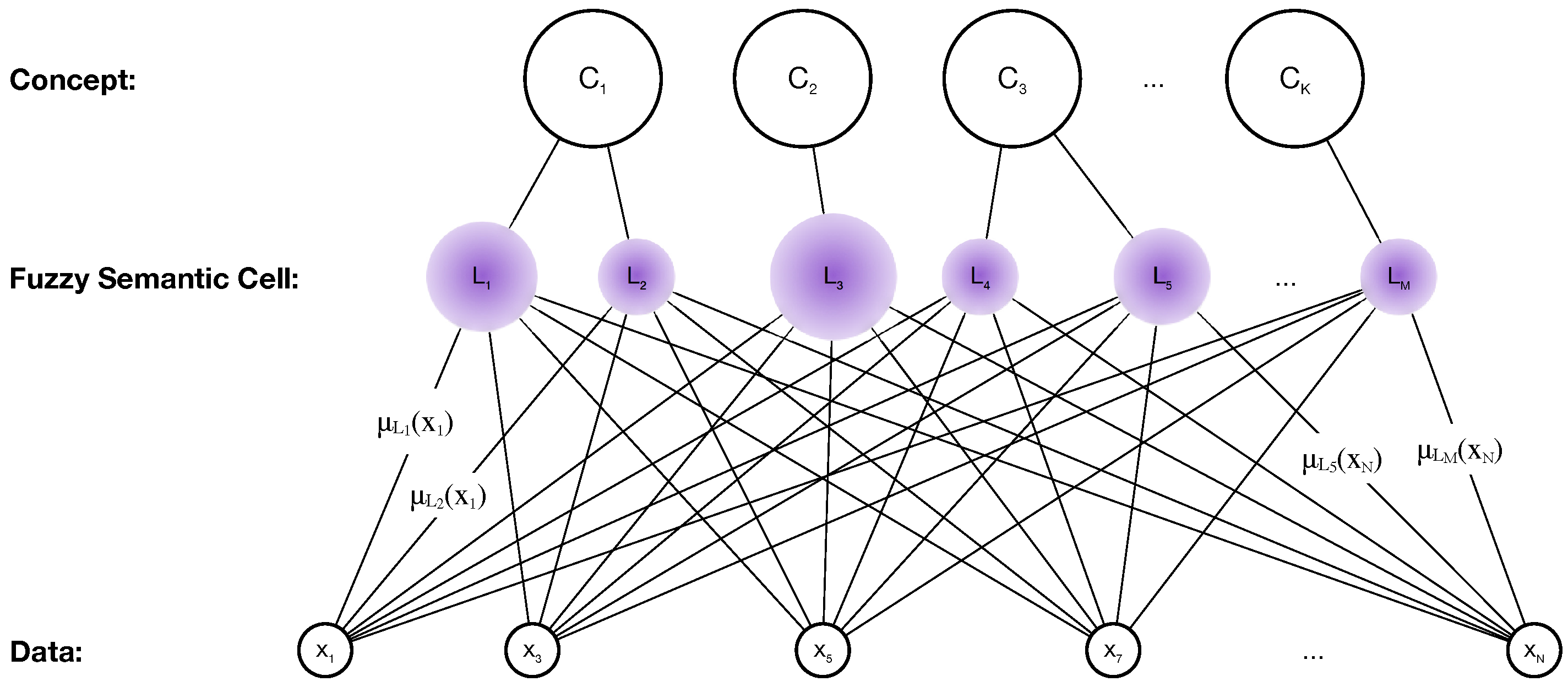

2. Fuzzy Semantic Cell

3. Method

3.1. Hierarchical Structure of Concept Learning

3.2. Decision Rule of Classification

- For new data x, compute the membership degree of x for all fuzzy semantic cells.

- Take the fuzzy semantic cell corresponding to the maximum.

- Choose the concept that this cell belongs to as the abstract concept of x.
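
The three steps above can be sketched as a small function. This is a minimal illustration, not the authors' implementation: the Gaussian-type `membership` function, the `sigma` width parameter, and the `(prototype, sigma, concept)` tuple layout are all assumptions introduced here, since the exact membership formula is not given in this section.

```python
import math

def membership(x, prototype, sigma):
    """Assumed Gaussian-type membership: 1 at the prototype, decaying with distance."""
    dist_sq = sum((a - b) ** 2 for a, b in zip(x, prototype))
    return math.exp(-dist_sq / (2.0 * sigma ** 2))

def classify(x, cells):
    """cells: list of (prototype, sigma, concept) tuples (hypothetical layout)."""
    # Step 1: score x against every fuzzy semantic cell.
    scores = [membership(x, proto, sigma) for proto, sigma, _ in cells]
    # Step 2: find the cell with the maximum score.
    best = max(range(len(cells)), key=scores.__getitem__)
    # Step 3: return the concept that this cell belongs to.
    return cells[best][2]

# Two cells describe concept "A", one cell describes concept "B".
cells = [
    ((0.0, 0.0), 1.0, "A"),
    ((1.0, 0.0), 1.0, "A"),
    ((5.0, 5.0), 1.0, "B"),
]
print(classify((0.8, 0.1), cells))
print(classify((4.0, 4.5), cells))
```

Because several cells can map to the same concept, the decision depends only on the single best-matching cell, not on any sum over the cells of a concept.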

3.3. Optimization of Fuzzy Semantic Cells

| Algorithm 1 Hierarchical Concept Learning by Fuzzy Semantic Cells |
|---|
| Require: Training set of K categories with concept information where |
| Ensure: Fuzzy semantic cell |
| 1: function (), where the inputs are the dataset with concept information and M, the number of fuzzy semantic cells |
| 2: Begin |
| 3: Partition: |
| 4: Initialize: |
| 5: for each data do |
| 6: Compute: |
| 7: Compute: |
| 8: end for |
| 9: Compute: minimize the following by Adam: |
| 10: return |
| 11: end function |
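
Step 9 of Algorithm 1 delegates the minimization to Adam [17]. The sketch below shows only the standard Adam update rule in plain Python. The objective is a stand-in quadratic chosen for illustration, not the paper's loss, and the names `adam_minimize` and `toy_grad` as well as the hyperparameter values are assumptions.

```python
import math

def adam_minimize(grad, theta, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimize a function given its gradient, using the standard Adam update."""
    theta = list(theta)
    m = [0.0] * len(theta)  # first-moment (mean) estimate of the gradient
    v = [0.0] * len(theta)  # second-moment (uncentered variance) estimate
    for t in range(1, steps + 1):
        g = grad(theta)
        for i in range(len(theta)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            m_hat = m[i] / (1 - beta1 ** t)  # bias correction
            v_hat = v[i] / (1 - beta2 ** t)
            theta[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta

# Stand-in objective (assumption): f(theta) = (theta0 - 3)^2 + (theta1 + 1)^2.
def toy_grad(theta):
    return [2.0 * (theta[0] - 3.0), 2.0 * (theta[1] + 1.0)]

result = adam_minimize(toy_grad, [0.0, 0.0])
print(result)  # expected to end close to [3, -1]
```

In practice the parameters being updated would be those of the fuzzy semantic cells, and the gradient would come from automatic differentiation, for example in PyTorch [23], rather than from a hand-written function.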

3.4. Discussion

4. Experiments

4.1. Datasets

4.2. Initialization Method

- The first is to run K-means on each category of the training set and use the cluster centers as the initial prototypes.

- The second is to run K-means on the entire training set and use the resulting cluster centers as the initial prototypes.

- The third is to randomly select samples from each category as prototypes.

- The last is to use the mean of each category's data as the initial prototype.
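
The four strategies listed above can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions: the function names, the small Lloyd-style `kmeans` helper, and the toy data are hypothetical, and the text does not say how cluster centers from the global K-means variant are assigned to categories, so that function simply returns the centers.

```python
import random

def mean(points):
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's algorithm; returns k cluster centers."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: sq_dist(p, centers[j]))
            clusters[nearest].append(p)
        # Keep the old center if a cluster ends up empty.
        centers = [mean(c) if c else centers[j] for j, c in enumerate(clusters)]
    return centers

def by_class(X, y):
    groups = {}
    for x, label in zip(X, y):
        groups.setdefault(label, []).append(x)
    return groups

def init_kmeans_per_class(X, y, k):
    """Strategy 1: K-means inside each category."""
    return {c: kmeans(pts, k) for c, pts in by_class(X, y).items()}

def init_kmeans_global(X, m):
    """Strategy 2: K-means on the entire training set."""
    return kmeans(X, m)

def init_random_per_class(X, y, k, seed=0):
    """Strategy 3: random samples from each category."""
    rng = random.Random(seed)
    return {c: rng.sample(pts, k) for c, pts in by_class(X, y).items()}

def init_class_mean(X, y):
    """Strategy 4: the mean of each category."""
    return {c: [mean(pts)] for c, pts in by_class(X, y).items()}

# Toy data (assumption): two categories in two dimensions.
X = [(0.0, 0.0), (0.2, 0.1), (1.0, 1.0), (5.0, 5.0), (5.2, 4.9), (6.0, 6.0)]
y = [0, 0, 0, 1, 1, 1]
print(init_class_mean(X, y))
print(init_kmeans_per_class(X, y, 2))
```

The per-category variants return a mapping from category to prototypes, which keeps each prototype tied to a concept from the start; the global variant leaves that association to a later step.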

4.3. Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| FSC | Fuzzy Semantic Cell |
| KNN | K-Nearest Neighbor |
| DT | Decision Tree |
| SVM | Support Vector Machine |

References

1. Shanks, D.R. Concept learning and representation. In Handbook of Categorization in Cognitive Science, 2nd ed.; Elsevier: Amsterdam, The Netherlands, 2001.

2. Wang, Y. On concept algebra: A denotational mathematical structure for knowledge and software modeling. Int. J. Cogn. Informatics Nat. Intell. 2008, 2, 1–19.

3. Yao, Y. Interpreting Concept Learning in Cognitive Informatics and Granular Computing. IEEE Trans. Syst. Man Cybern. Part B 2009, 39, 855–866.

4. Li, J.; Mei, C.; Xu, W.; Qian, Y. Concept learning via granular computing: A cognitive viewpoint. Inf. Sci. 2015, 298, 447–467.

5. Rosch, E.H. Natural categories. Cogn. Psychol. 1973, 4, 328–350.

6. Rosch, E. Cognitive representations of semantic categories. J. Exp. Psychol. Gen. 1975, 104, 192–233.

7. Zadeh, L.A. Fuzzy sets. Inf. Control 1965, 8, 338–353.

8. Zadeh, L.A. Probability measures of fuzzy events. J. Math. Anal. Appl. 1968, 23, 421–427.

9. Goodman, I.R.; Nguyen, H.T. Uncertainty Models for Knowledge-Based Systems; Technical Report; Naval Ocean Systems Center: San Diego, CA, USA, 1991.

10. Lawry, J.; Tang, Y. Relating prototype theory and label semantics. In Soft Methods for Handling Variability and Imprecision; Springer: Berlin/Heidelberg, Germany, 2008; pp. 35–42.

11. Lawry, J.; Tang, Y. Uncertainty modelling for vague concepts: A prototype theory approach. Artif. Intell. 2009, 173, 1539–1558.

12. Tang, Y.; Lawry, J. Linguistic modelling and information coarsening based on prototype theory and label semantics. Int. J. Approx. Reason. 2009, 50, 1177–1198.

13. Tang, Y.; Lawry, J. Information cells and information cell mixture models for concept modelling. Ann. Oper. Res. 2012, 195, 311–323.

14. Tang, Y.; Lawry, J. A bipolar model of vague concepts based on random set and prototype theory. Int. J. Approx. Reason. 2012, 53, 867–879.

15. Tang, Y.; Xiao, Y. Learning fuzzy semantic cell by principles of maximum coverage, maximum specificity, and maximum fuzzy entropy of vague concept. Knowl. Based Syst. 2017, 133, 122–140.

16. Śmieja, M.; Geiger, B.C. Semi-supervised cross-entropy clustering with information bottleneck constraint. Inf. Sci. 2017, 421, 254–271.

17. Kingma, D.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

18. Zhang, H. The optimality of naive Bayes. In Proceedings of the Seventeenth International Florida Artificial Intelligence Research Society Conference, Miami Beach, FL, USA, 12–14 May 2004; Volume 1, pp. 562–567.

19. Altman, N.S. An introduction to kernel and nearest-neighbor nonparametric regression. Am. Stat. 1992, 46, 175–185.

20. Quinlan, J.R. C4.5: Programs for Machine Learning; Elsevier: Amsterdam, The Netherlands, 2014.

21. Vapnik, V. The Nature of Statistical Learning Theory; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013.

22. Freund, Y.; Schapire, R.; Abe, N. A short introduction to boosting. J. Jpn. Soc. Artif. Intell. 1999, 14, 1612.

23. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 2019; pp. 8024–8035.

24. Tang, Y.; Xiao, Y. Learning hierarchical concepts based on higher-order fuzzy semantic cell models through the feed-upward mechanism and the self-organizing strategy. Knowl. Based Syst. 2020, 194, 105506.

25. Lewis, M.; Lawry, J. Hierarchical conceptual spaces for concept combination. Artif. Intell. 2016, 237, 204–227.

26. Mls, K.; Cimler, R.; Vaščák, J.; Puheim, M. Interactive evolutionary optimization of fuzzy cognitive maps. Neurocomputing 2017, 232, 58–68.

27. Salmeron, J.L.; Froelich, W. Dynamic optimization of fuzzy cognitive maps for time series forecasting. Knowl. Based Syst. 2016, 105, 29–37.

28. Graf, A.B.A.; Bousquet, O.; Rätsch, G.; Schölkopf, B. Prototype Classification: Insights from Machine Learning. Neural Comput. 2009, 21, 272–300.

29. Abe, S. Pattern Classification; Springer: London, UK, 2001.

30. Mika, S.; Rätsch, G.; Weston, J.; Schölkopf, B.; Smola, A.; Müller, K. Constructing Descriptive and Discriminative Nonlinear Features: Rayleigh Coefficients in Kernel Feature Spaces. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 623–633.

31. Hecht, T.; Gepperth, A.R.T. Computational advantages of deep prototype-based learning. In Proceedings of the International Conference on Artificial Neural Networks (ICANN), Barcelona, Spain, 6–9 September 2016.

32. Kasnesis, P.; Heartfield, R.; Toumanidis, L.; Liang, X.; Loukas, G.; Patrikakis, C.Z. A prototype deep learning paraphrase identification service for discovering information cascades in social networks. In Proceedings of the 2020 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), London, UK, 6–10 July 2020; pp. 1–4.

33. Hastie, T.J.; Tibshirani, R. Discriminant Analysis by Gaussian Mixtures. J. R. Stat. Soc. Ser. B-Methodol. 1996, 58, 155–176.

34. Fraley, C.; Raftery, A.E. Model-Based Clustering, Discriminant Analysis, and Density Estimation. J. Am. Stat. Assoc. 2002, 97, 611–631.

35. Lake, B.M.; Salakhutdinov, R.; Tenenbaum, J.B. Human-level concept learning through probabilistic program induction. Science 2015, 350, 1332–1338.

36. Li, J.; Huang, C.; Qi, J.; Qian, Y.; Liu, W. Three-way cognitive concept learning via multi-granularity. Inf. Sci. 2017, 378, 244–263.

37. Andriyanov, N.A.; Andriyanov, D.A. The using of data augmentation in machine learning in image processing tasks in the face of data scarcity. J. Phys. Conf. Ser. 2020, 1661, 012018.

38. Iwana, B.K.; Uchida, S. An empirical survey of data augmentation for time series classification with neural networks. PLoS ONE 2021, 16, e0254841.

39. Andriyanov, N.; Dementev, V.; Tashlinskiy, A.; Vasiliev, K. The study of improving the accuracy of convolutional neural networks in face recognition tasks. In Pattern Recognition. ICPR International Workshops and Challenges; Del Bimbo, A., Cucchiara, R., Sclaroff, S., Farinella, G.M., Mei, T., Bertini, M., Escalante, H.J., Vezzani, R., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 5–14.

40. Andriyanov, N. Methods for Preventing Visual Attacks in Convolutional Neural Networks Based on Data Discard and Dimensionality Reduction. Appl. Sci. 2021, 11, 5235.

41. Rodriguez, A.; Laio, A. Clustering by fast search and find of density peaks. Science 2014, 344, 1492–1496.

42. Frey, B.; Dueck, D. Clustering by Passing Messages Between Data Points. Science 2007, 315, 972–976.

43. Andriyanov, N.; Tashlinsky, A.; Dementiev, V. Detailed clustering based on gaussian mixture models. In Intelligent Systems and Applications; Arai, K., Kapoor, S., Bhatia, R., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 437–448.

44. Tang, Y.; Xiao, Y. Learning disjunctive concepts based on fuzzy semantic cell models through principles of justifiable granularity and maximum fuzzy entropy. Knowl. Based Syst. 2018, 161, 268–293.

45. Caron, M.; Bojanowski, P.; Joulin, A.; Douze, M. Deep clustering for unsupervised learning of visual features. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

46. Johnson, B.; Tateishi, R.; Xie, Z. Using geographically weighted variables for image classification. Remote Sens. Lett. 2012, 3, 491–499.

47. Dua, D.; Graff, C. UCI Machine Learning Repository; University of California, School of Information and Computer Science: Irvine, CA, USA, 2019; Available online: http://archive.ics.uci.edu/ml (accessed on 9 November 2021).

48. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

49. Weyrauch, B.; Heisele, B.; Huang, J.; Blanz, V. Component-based face recognition with 3D morphable models. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshop, Washington, DC, USA, 27 June–2 July 2004; pp. 85–89.

50. Hinton, G.; Srivastava, N.; Swersky, K. Overview of mini-batch gradient descent. In Neural Networks for Machine Learning Lecture; Coursera: Online, 2012; p. 14.

51. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

| Datasets | Instances | Attributes | Classes |
|---|---|---|---|
| Synthetic dataset | 750 | 2 | 3 |
| Forest | 523 | 9 | 4 |
| Pendigits | 10,992 | 16 | 10 |
| MNIST | 70,000 | 784 | 10 |
| MIT face | 3240 | 4096 | 10 |

| Ratio as Training Set | Train | Test | Accuracy on Training Sets (%) | Accuracy on Test Sets (%) |
|---|---|---|---|---|
| 10% | 52 | 471 | 86.46 ± 1.58 | 82.64 ± 1.43 |
| 20% | 104 | 419 | 89.91 ± 0.85 | 81.42 ± 1.19 |
| 30% | 156 | 367 | 89.53 ± 1.26 | 79.69 ± 1.58 |
| 40% | 208 | 315 | 90.57 ± 0.93 | 80.97 ± 1.54 |
| 50% | 260 | 263 | 89.10 ± 1.25 | 76.81 ± 2.03 |
| 60% | 312 | 211 | 87.08 ± 1.15 | 79.11 ± 3.79 |

| Prototypes | Accuracy on Training Sets (%) | Accuracy on Test Sets (%) |
|---|---|---|
| 10 | 86.64 ± 0.05 | 83.78 ± 0.05 |
| 20 | 92.52 ± 0.11 | 91.46 ± 0.07 |
| 30 | 94.49 ± 0.30 | 92.27 ± 0.49 |
| 40 | 95.47 ± 0.20 | 94.03 ± 0.10 |

| Ratio as Training Set | 0.1 | 0.3 | 0.5 | 0.7 |
|---|---|---|---|---|
| Prototype Initialization | Train Acc/Test Acc | Train Acc/Test Acc | Train Acc/Test Acc | Train Acc/Test Acc |
| K-means in categories | / | / | / | / |
| K-means on the training set | / | / | / | / |
| Random selection | / | / | / | / |
| Average in categories | / | / | / | / |

| Optimizer | 0.1 | 0.3 | 0.5 | 0.7 |
|---|---|---|---|---|
| Adam [17] | 82.37 ± 0.64 | 83.10 ± 0.44 | 83.07 ± 0.60 | 82.71 ± 0.66 |
| SGD | 78.72 ± 1.07 | 79.71 ± 0.61 | 79.54 ± 0.39 | 79.36 ± 0.73 |
| RMSprop [50] | 82.32 ± 0.71 | 82.27 ± 0.50 | 82.56 ± 0.27 | 82.25 ± 0.41 |
| L-BFGS | 44.89 ± 37.8 | 80.68 ± 0.01 | 80.45 ± 0.01 | 81.17 ± 0.01 |

| Method | Synthetic Dataset | Forest | Pendigits | MNIST | MIT Face |
|---|---|---|---|---|---|
| DecisionTree | 96.07 ± 1.21 | 79.45 ± 2.09 | 88.00 ± 0.31 | 76.24 ± 0.16 | 53.02 ± 8.44 |
| NaiveBayes | 98.81 ± 0.00 | 82.59 ± 0.00 | 85.41 ± 0.00 | 78.65 ± 0.00 | 57.53 ± 0.00 |
| KNN | 97.93 ± 0.00 | 83.86 ± 0.00 | 95.58 ± 0.00 | 90.63 ± 0.00 | 90.34 ± 0.00 |
| SVM | 97.93 ± 0.00 | 82.80 ± 0.00 | 95.35 ± 0.71 | 90.78 ± 0.00 | 71.76 ± 0.02 |
| AdaBoost | 96.74 ± 0.00 | 77.49 ± 1.73 | 69.01 ± 0.00 | 72.12 ± 0.00 | 60.96 ± 0.74 |
| Neural Network | 96.89 ± 0.31 | 31.66 ± 14.94 | 98.29 ± 0.00 | 92.37 ± 0.11 | 75.42 ± 4.95 |
| FSC (ours) | 98.50 ± 0.04 | 82.93 ± 1.66 | 96.27 ± 0.14 | 90.88 ± 0.43 | 91.32 ± 0.59 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, L.; Li, W.; Tang, Y. Hierarchical Concept Learning by Fuzzy Semantic Cells. Appl. Sci. 2021, 11, 10723. https://doi.org/10.3390/app112210723
