Automatic Asbestos Control Using Deep Learning Based Computer Vision System

Abstract

Featured Application

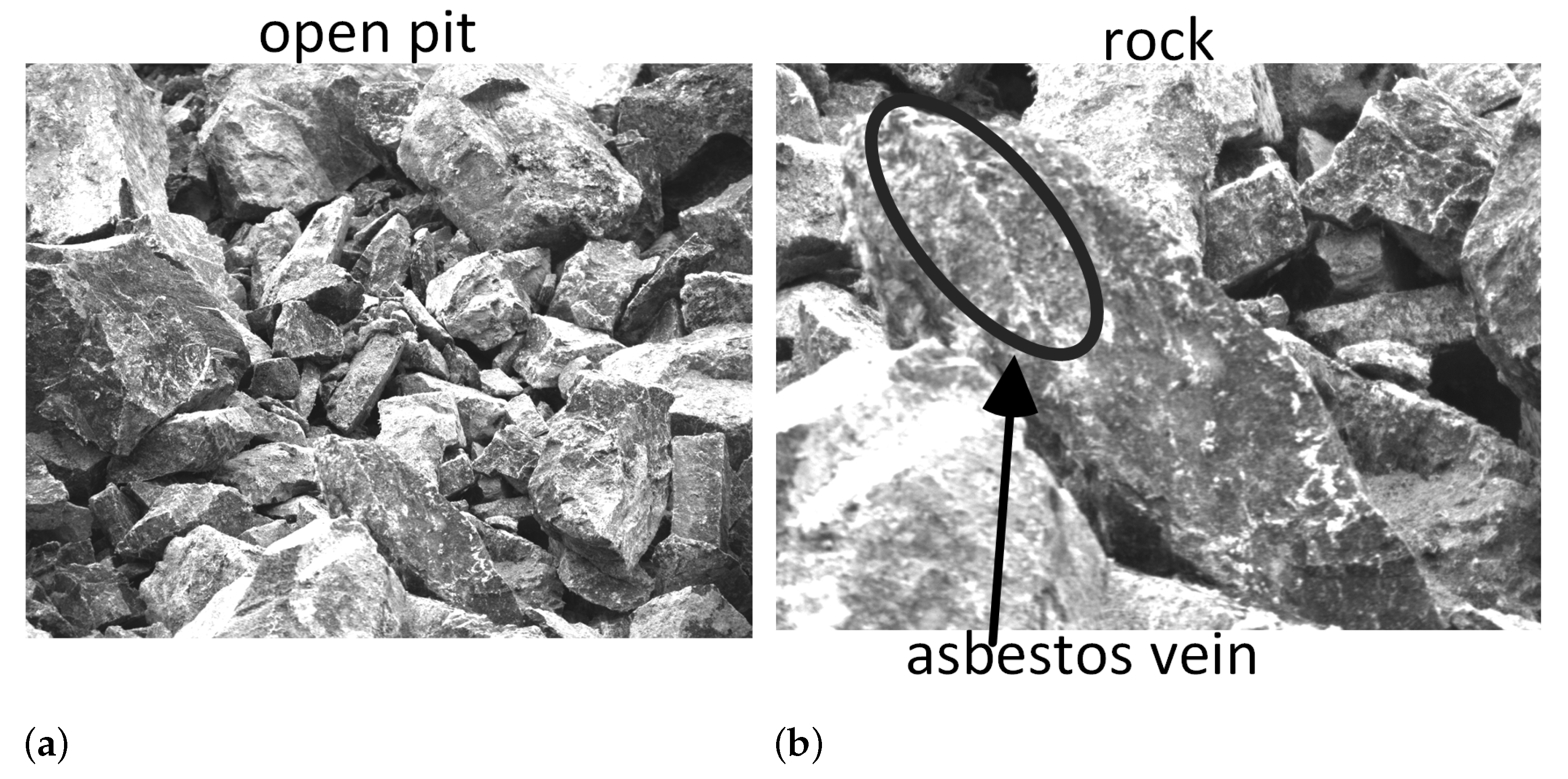

1. Introduction

2. Description of the Experimental System and Algorithm of Its Work

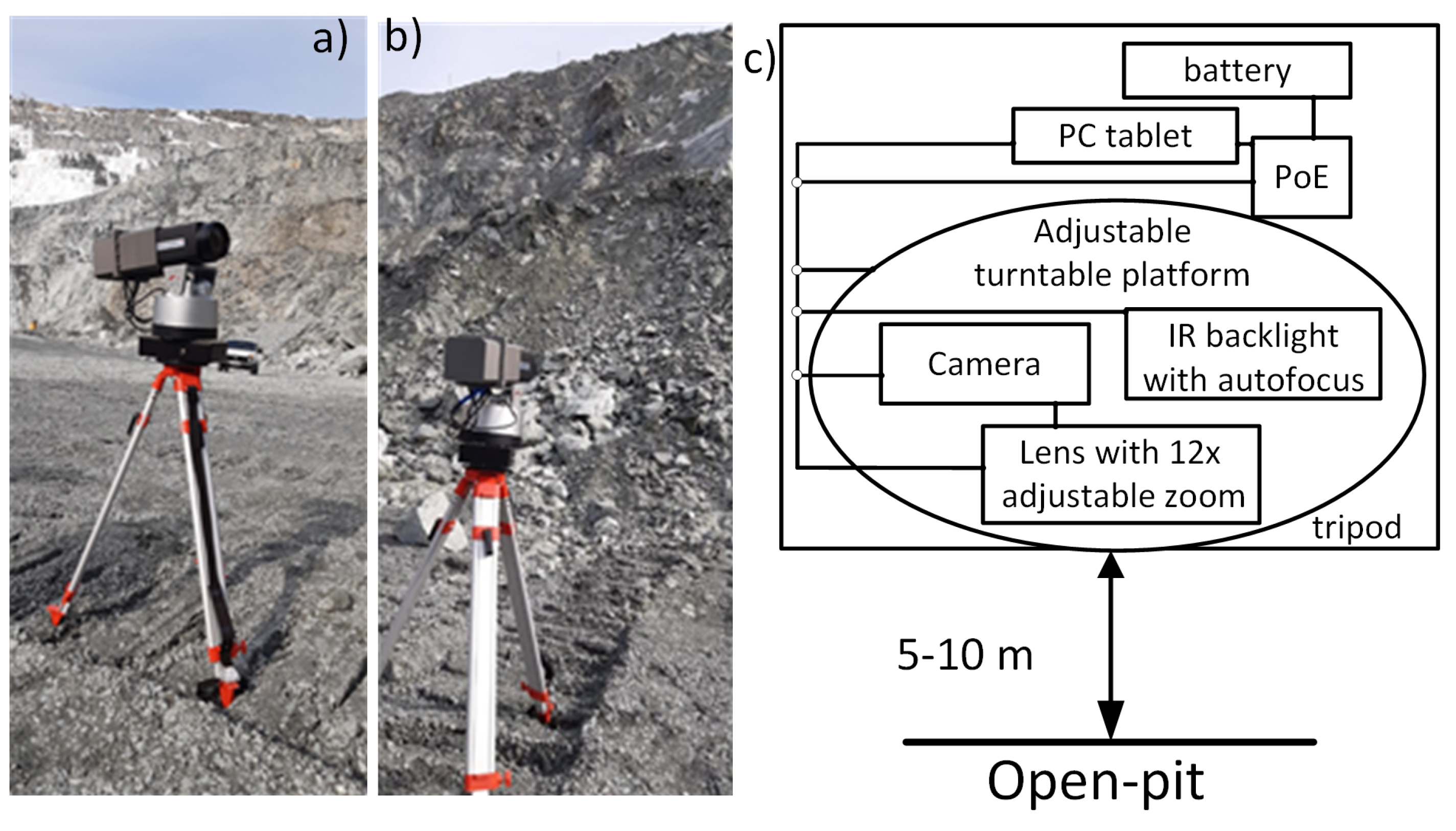

2.1. Description of the Designed Experimental System

- tripod as a base;

- electrically adjustable turntable platform mounted on the tripod;

- machine-vision camera “Dalsa Genie Nano M2590NIR” with a 1-inch grayscale sensor, a resolution of 2590 × 2048 pixels (5 MP), and enhanced sensitivity in the near-infrared range [20];

- lens “LMZ25300M3P-IR” with electrically adjustable 12× zoom and enhanced sensitivity in the near-infrared range [21];

- tablet PC for control and image processing (Intel Core i5 3427U, Intel HD Graphics 4000, 4 GB RAM);

- infrared backlight with a wavelength of 850 nm and manually adjustable zoom, set for a working distance of 5–10 m with a lighting angle of 30 degrees;

- battery for autonomous operation and a PoE (Power over Ethernet) system to power the camera.
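The figures in the list above can be turned into a quick geometric check. The following is a minimal sketch, assuming the 30-degree lighting angle is the full apex angle of a conical beam (the list does not say whether it is the full or the half angle); the function name `spot_diameter` is hypothetical and introduced only for illustration.

```python
import math


def spot_diameter(distance_m: float, full_angle_deg: float = 30.0) -> float:
    """Diameter of the illuminated spot at a given distance.

    Assumption: the beam is a cone whose FULL apex angle is
    `full_angle_deg`, so the spot radius is d * tan(angle / 2).
    """
    return 2.0 * distance_m * math.tan(math.radians(full_angle_deg / 2.0))


# Sensor resolution as stated in the equipment list.
SENSOR_WIDTH_PX = 2590
SENSOR_HEIGHT_PX = 2048
megapixels = SENSOR_WIDTH_PX * SENSOR_HEIGHT_PX / 1e6

# Working distances of the infrared backlight from the equipment list.
for d in (5.0, 10.0):
    print(f"{d:4.1f} m -> spot diameter {spot_diameter(d):.2f} m")
print(f"sensor: {megapixels:.2f} MP")
```

Under this assumption the backlight covers a spot of roughly 2.7 m at 5 m and 5.4 m at 10 m, and the sensor has about 5.3 million pixels, consistent with the "5 MP" label.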

2.2. Algorithm of the System Work

Algorithm 1: General algorithm of the system work

Input:

Output:

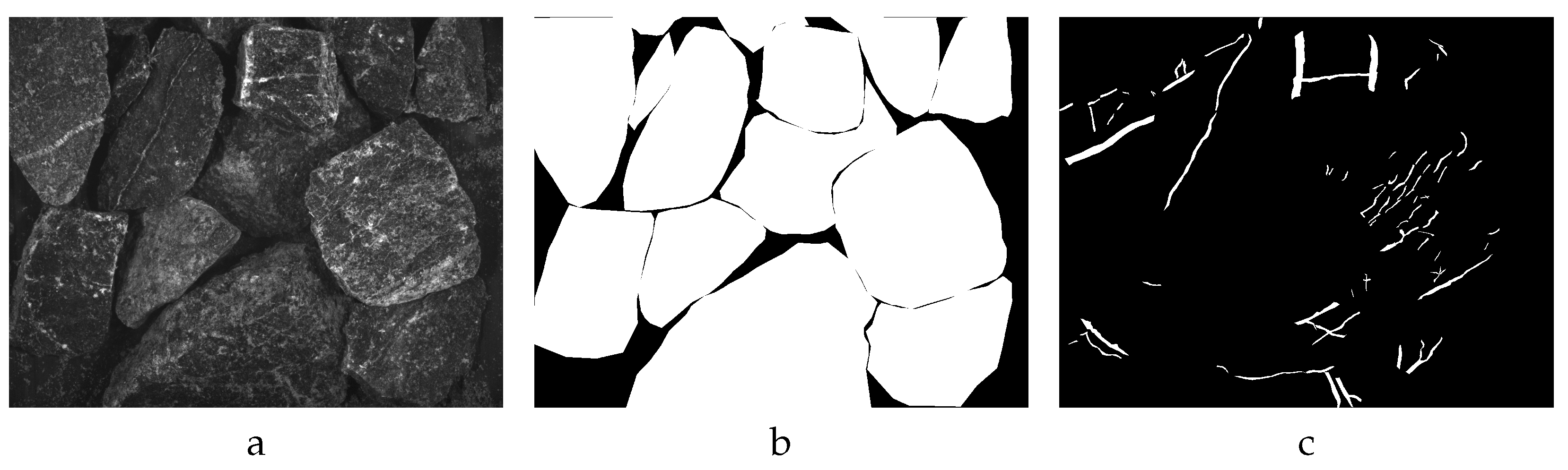

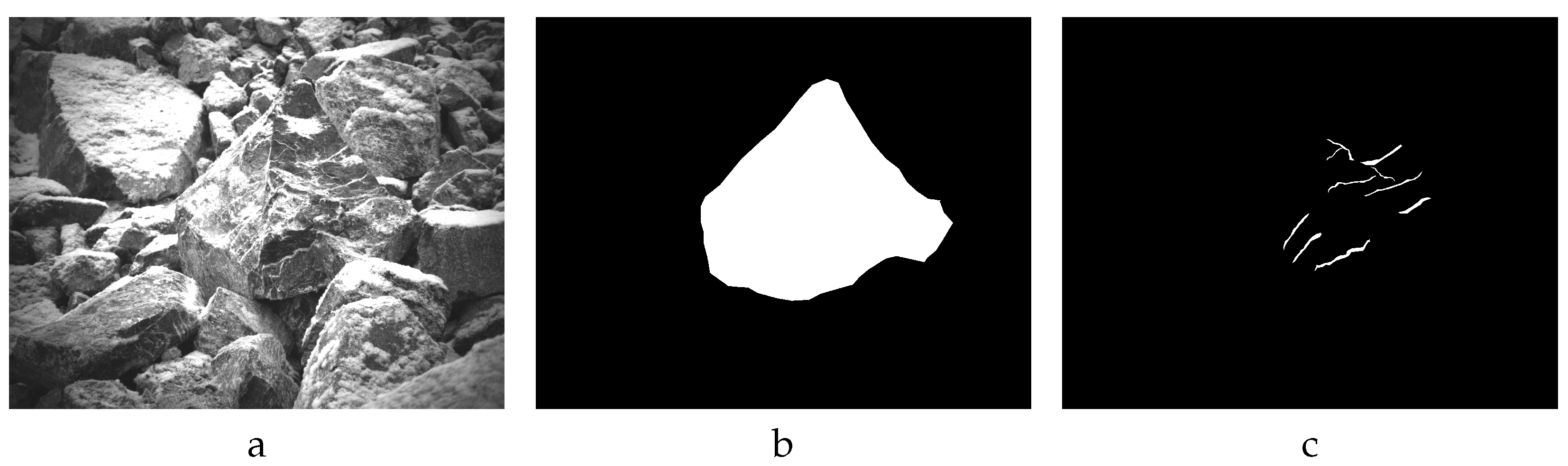

3. The Collected Datasets Description

4. CNN Architectures and Training Details

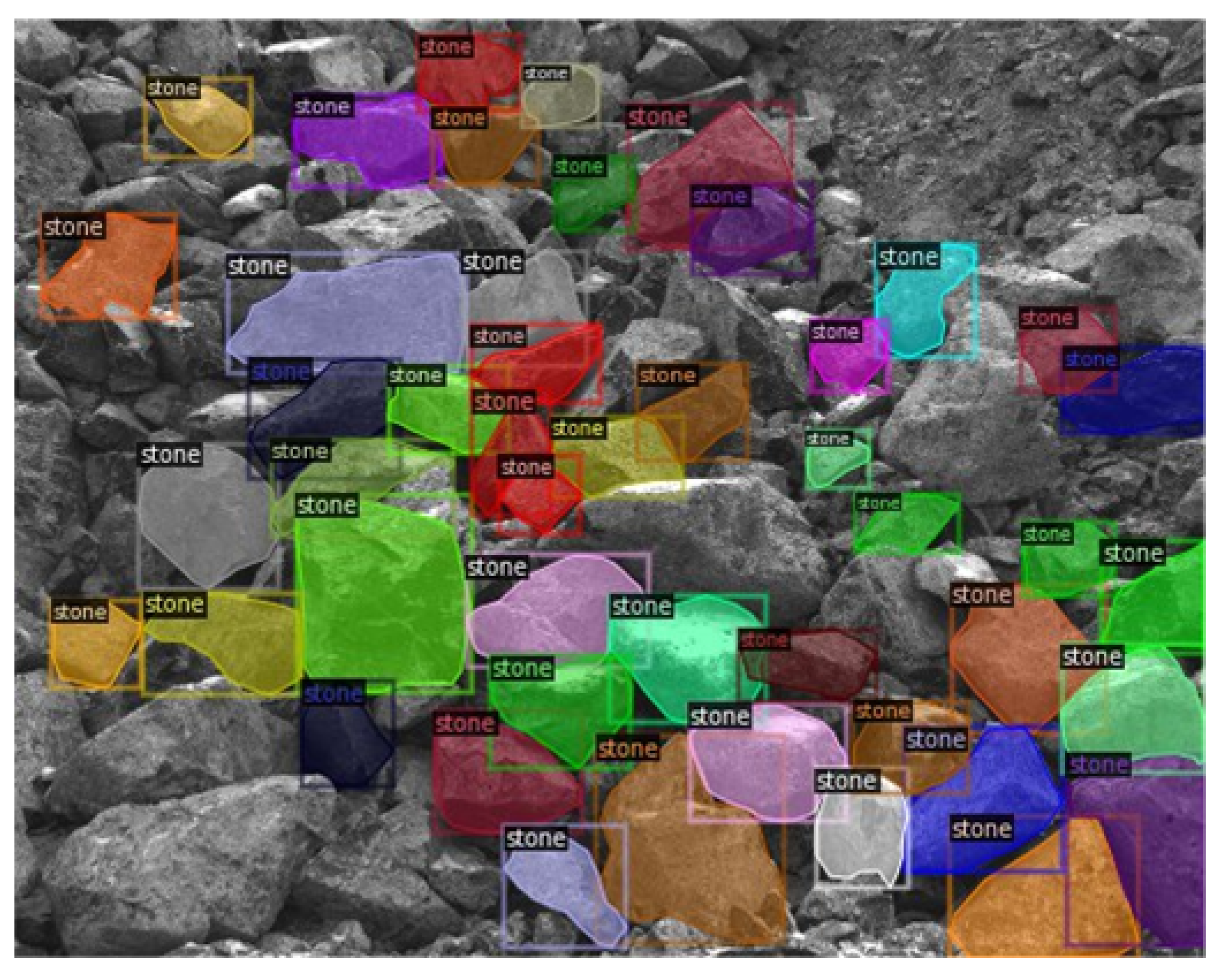

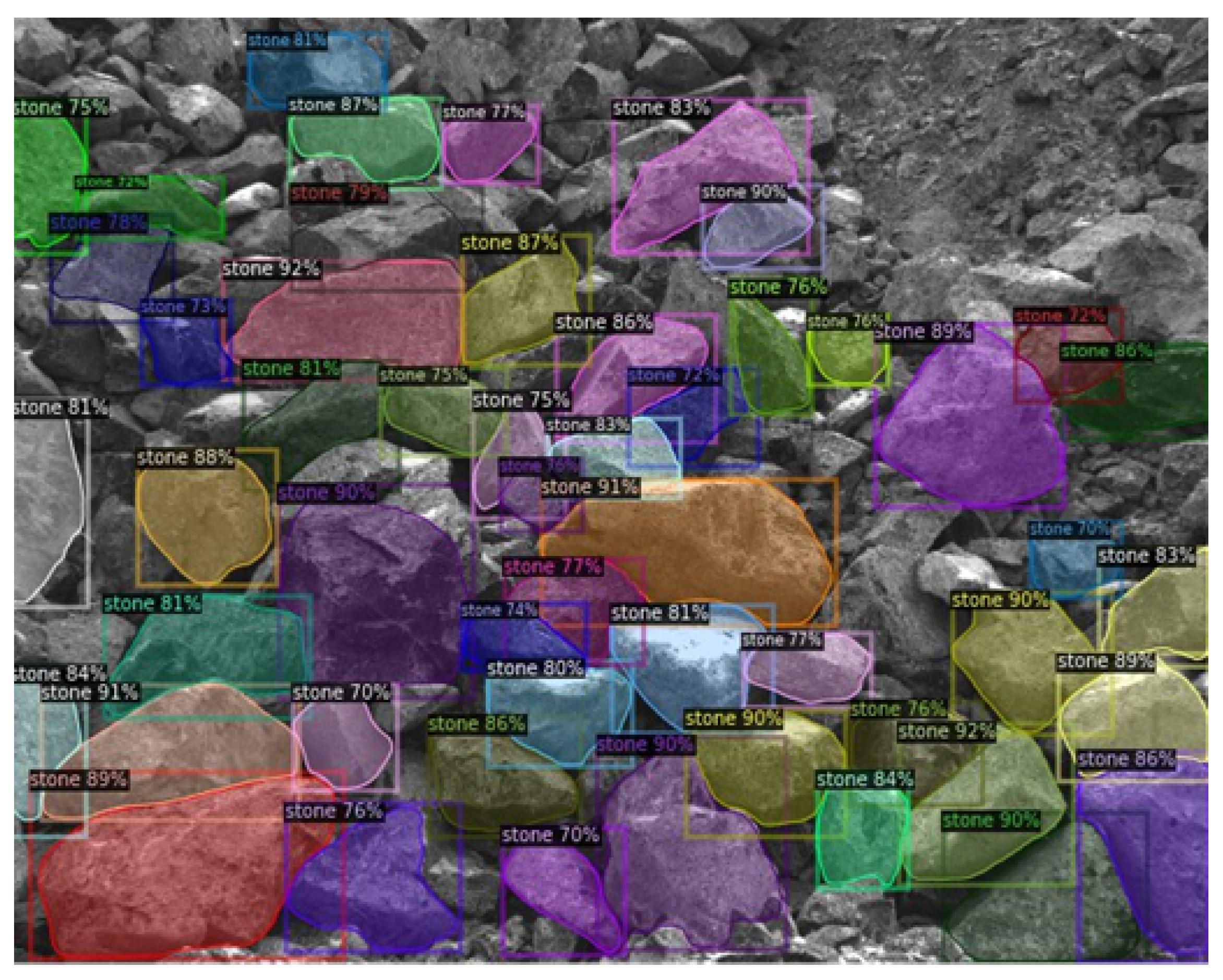

4.1. Rock Chunks Detection

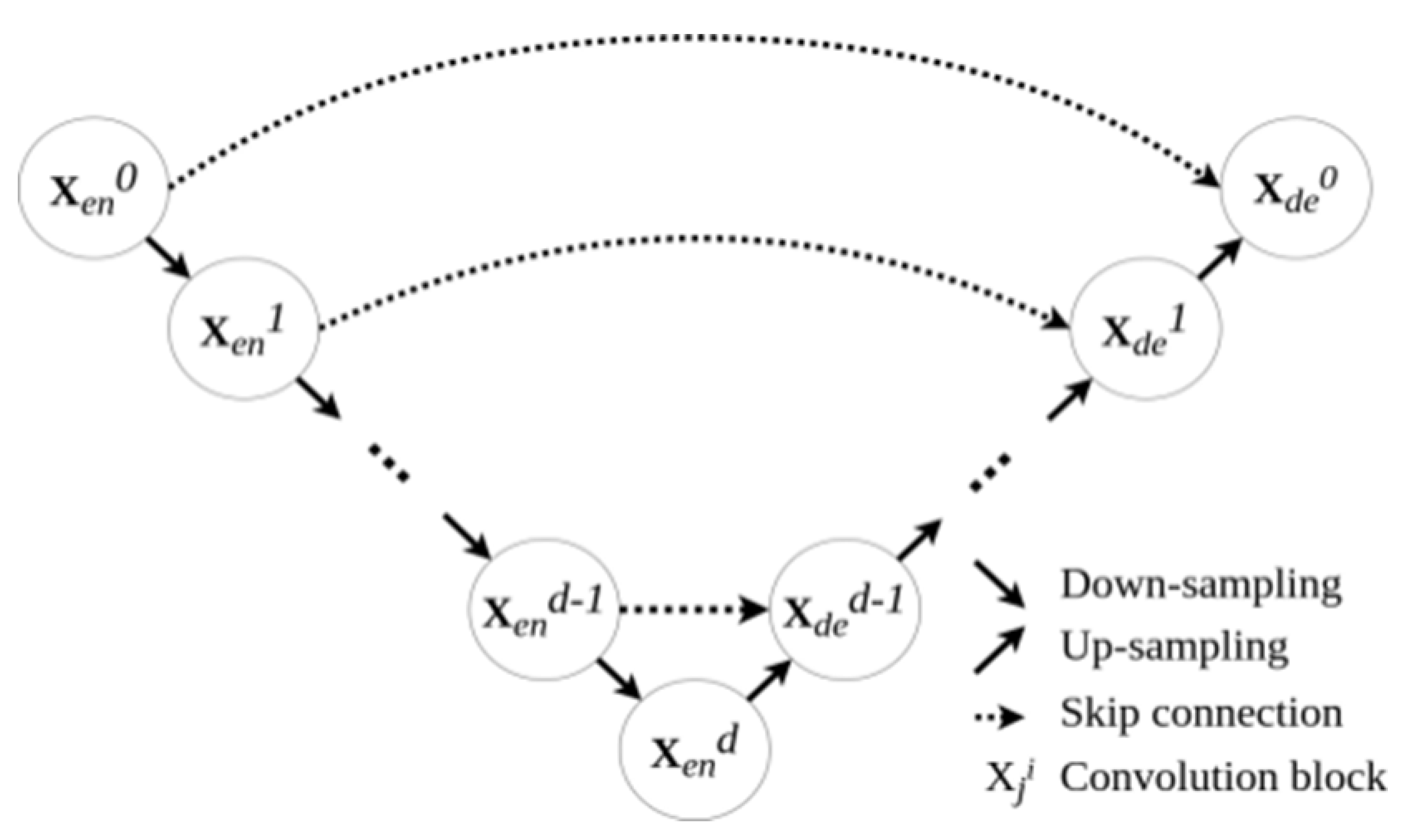

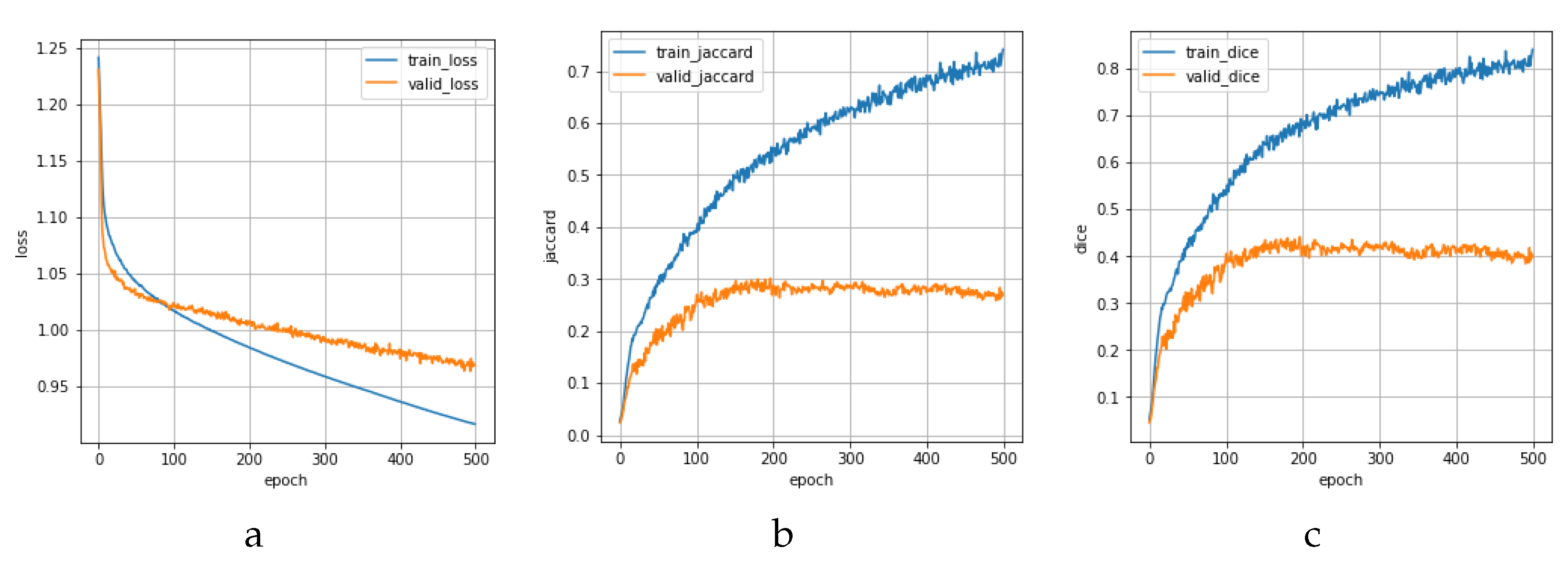

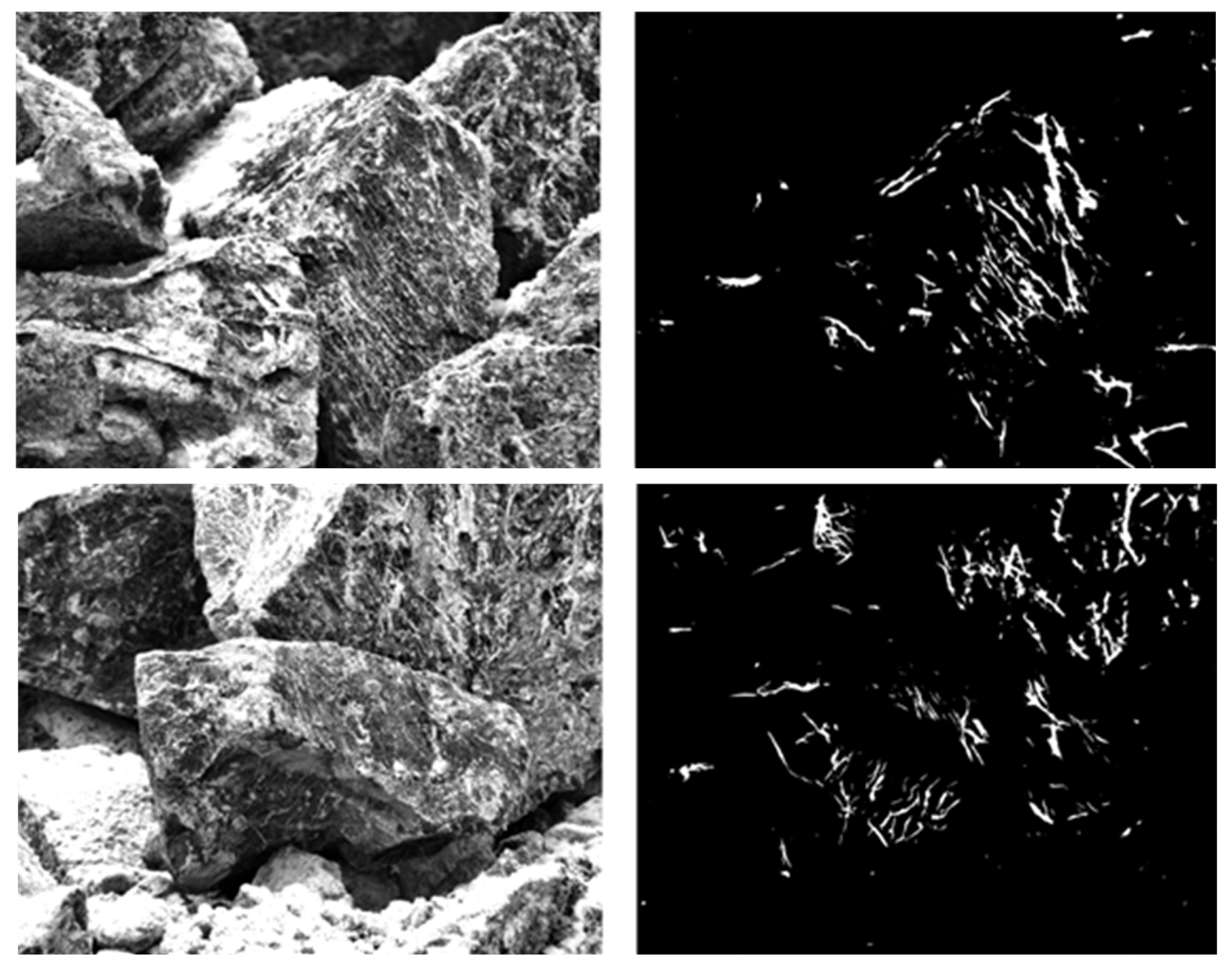

4.2. Asbestos Veins Segmentation

5. Asbestos Content Estimation

5.1. Method of Asbestos Productivity (Content) Estimation

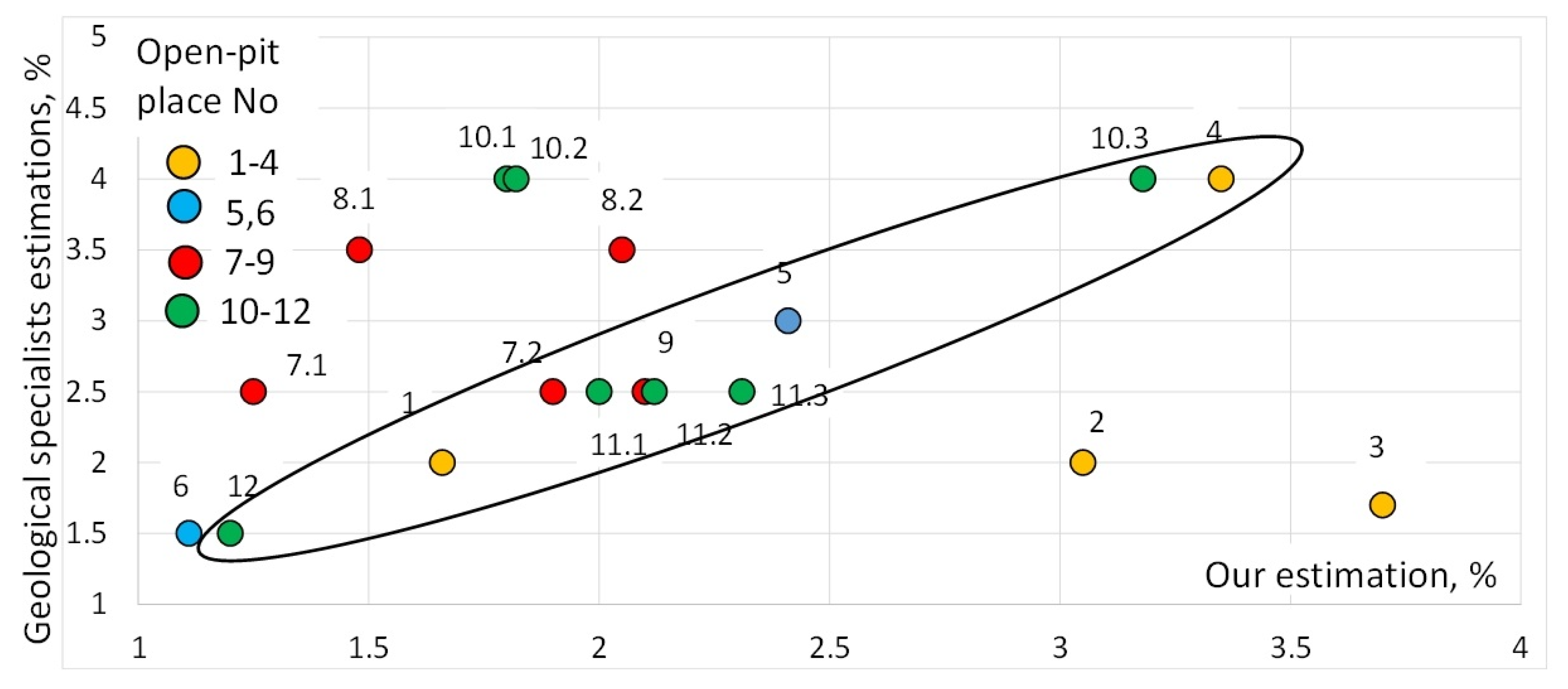

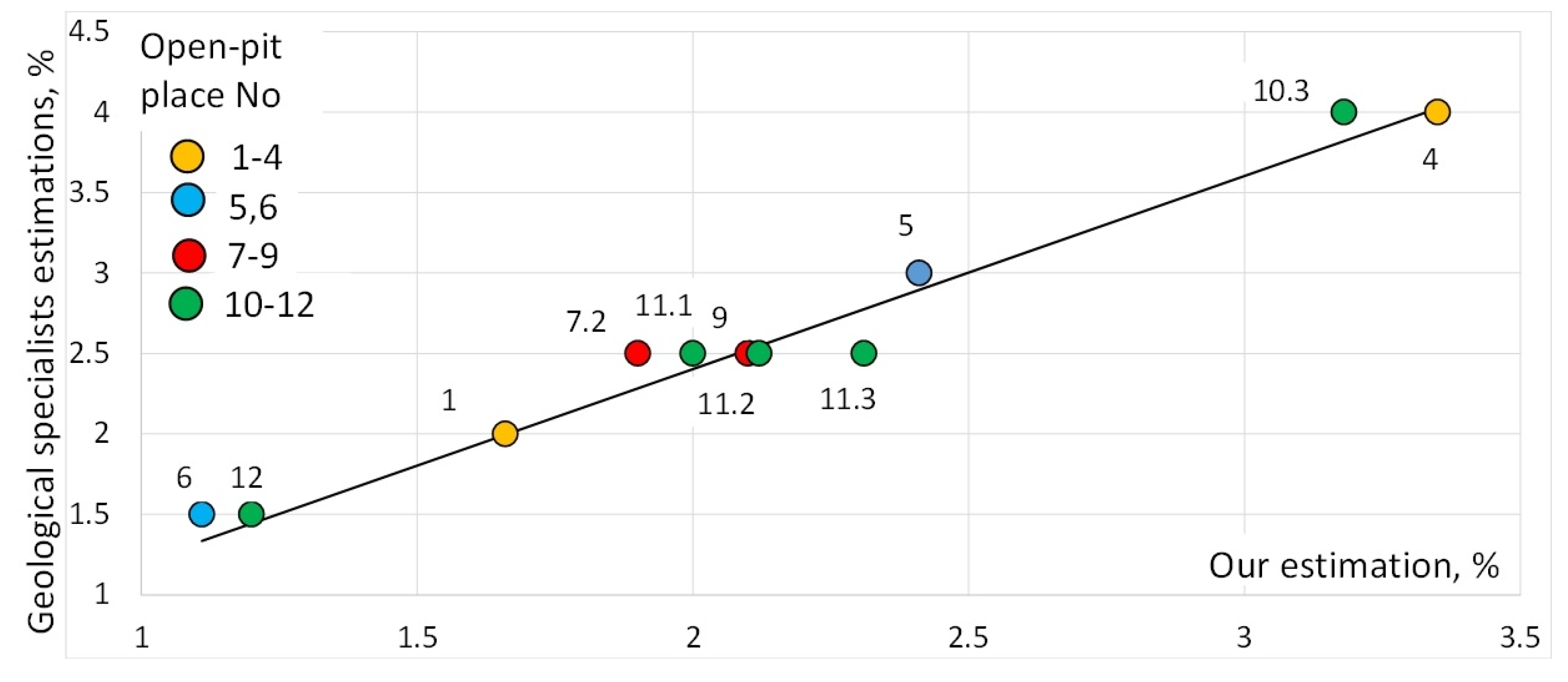

5.2. Results Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Gao, R.; Sun, Z.; Li, W.; Pei, L.; Hu, Y.; Xiao, L. Automatic coal and gangue segmentation using u-net based fully convolutional networks. Energies 2020, 13, 829.

2. Si, L.; Xiong, X.; Wang, Z.; Tan, C. A deep convolutional neural network model for intelligent discrimination between coal and rocks in coal mining face. Math. Probl. Eng. 2020, 12, 2616510.

3. Su, C.; Xu, S.J.; Zhu, K.Y.; Zhang, X.C. Rock classification in petrographic thin section images based on concatenated convolutional neural networks. Earth Sci. Inform. 2020, 13, 1477–1484.

4. Liu, X.; Zhang, Y.; Jing, H.; Wang, L.; Zhao, S. Ore image segmentation method using U-Net and Res_Unet convolutional networks. RSC Adv. 2020, 10, 9396–9406.

5. Vu, T.; Bao, T.; Hoang, Q.V.; Drebenstedt, C.; Hoa, P.V.; Thang, H.H. Measuring blast fragmentation at Nui Phao open-pit mine, Vietnam using the Mask R-CNN deep learning model. Min. Technol. 2021, 1–12.

6. Zyuzin, V.; Ronkin, M.; Porshnev, S.; Kalmykov, A. Computer vision system for the automatic asbestos content control in stones. J. Phys. Conf. Ser. 2021, 1727, 012014.

7. Luzin, V.P. Complex Investigation of the Longitudinal fiber Chrisolit-Asbestos Field (In Russian) [Kompleksnye Issledovaniya Prodol’novoloknistogo Hrizotilasbesta Bazhenovskogo Mestorozhdeniya]. Available online: http://resources.krc.karelia.ru/krc/doc/publ2011/miner_tech_ocenka_118-126.pdf (accessed on 8 October 2021).

8. Rehn, E.; Rehn, A.; Possemiers, A. Fossil charcoal particle identification and classification by two convolutional neural networks. Quat. Sci. Rev. 2019, 226, 106038.

9. Cai, C.; Nishimura, T.; Hwang, J.; Hu, X.M.; Kuroda, A. Asbestos Detection with Fluorescence Microscopy Images and Deep Learning. Sensors 2021, 21, 4582.

10. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning, 2nd ed.; MIT Press: Cambridge, MA, USA, 2016.

11. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

12. Guan, S.; Khan, A.A.; Sikdar, S.; Chitnis, P.V. Fully dense UNet for 2-D sparse photoacoustic tomography artifact removal. IEEE J. Biomed. Health Inform. 2019, 24, 568–576.

13. Du, G.; Cao, X.; Liang, J.; Chen, X.; Zhan, Y. Medical image segmentation based on u-net: A review. J. Imaging Sci. Technol. 2020, 64, 20508–20510.

14. Smith, A.G.; Petersen, J.; Selvan, R.; Rasmussen, C.R. Segmentation of roots in soil with U-Net. Plant Methods 2020, 16, 1–15.

15. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

16. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

17. Khan, W.; Ansell, D.; Kuru, K.; Bilal, M. Flight guardian: autonomous flight safety improvement by monitoring aircraft cockpit instruments. J. Aerosp. Inf. Syst. 2018, 15, 203–214.

18. Khan, W.; Crockett, K.; O’Shea, J.; Hussain, A.; Khan, B.M. Deception in the eyes of deceiver: A computer vision and machine learning based automated deception detection. Expert Syst. Appl. 2021, 169, 114341.

19. Khan, W.; Hussain, A.; Kuru, K.; Al-Askar, H. Pupil localisation and eye centre estimation using machine learning and computer vision. Sensors 2020, 20, 3785.

20. DALSA Genie Nano Series Manual. Available online: https://www.stemmer-imaging.com/media/uploads/cameras/dalsa/12/122239-Teledyne-DALSA-Genie-Nano-Series-Manual.pdf (accessed on 24 August 2021).

21. Kowa LMZ25300M3P-IR. Available online: https://www.rmaelectronics.com/kowa-lmz25300m3p-ir/ (accessed on 24 August 2021).

22. Raspution, N.V. Primenenie Opticheskikh Metodov dlia Otcenki Kachestva Asbestovykh Rud. Ph.D. Thesis, VNII Proekt Asbest, Yekaterinburg, Russia, 1984.

23. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

24. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

25. Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. Int. Conf. Mach. Learn. 2019, 6105–6114. Available online: https://proceedings.mlr.press/v97/tan19a/tan19a.pdf (accessed on 24 August 2021).

26. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

27. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

28. Shields, M. Research Methodology and Statistical Methods; Scientific e-Resources: New Delhi, India, 2019.

| Dataset Part | Samples | Specifics | Location and Weather Conditions | Aim |
|---|---|---|---|---|
| 1 | 46 | Single stones. | Laboratory conditions. | Pretraining, semantic segmentation of veins. |
| 2 | 134 | 10–20 rock chunks. | Conveyor belt of the processing factory. | Pretraining, semantic segmentation of veins. |
| 3 | 69 | Rock chunks in the open pit with manual aiming. | 6 open pits, sunny weather in autumn. | Test, semantic segmentation of veins. |
| 4 | 984 | Rock chunks in the open pit with auto aiming. | 12 open pits (22 parts); sunny, cloudy, rainy, and snowy weather in autumn and winter. | Training and test, semantic segmentation of veins. |
| 5 | 56 | Open-pit locations. | Sunny, cloudy, rainy, and snowy weather in autumn and winter. | Training and test, instance segmentation of rock chunks. |

| Statistic | Dice (Train) | Dice (Validation) | IoU (Train) | IoU (Validation) |
|---|---|---|---|---|
| Mean | 0.54 | 0.43 | 0.42 | 0.27 |
| Std | 0.29 | 0.17 | 0.25 | 0.13 |
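For readers less familiar with the two metrics reported above, the following is a minimal sketch of how Dice and IoU are commonly computed for a pair of binary masks. It uses the standard textbook definitions, not necessarily the exact implementation behind the reported numbers; the function name `dice_and_iou`, the smoothing term `eps`, and the toy masks are assumptions made for illustration.

```python
import numpy as np


def dice_and_iou(pred, target, eps=1e-7):
    """Return (Dice, IoU) for two binary masks of the same shape.

    `eps` is a small smoothing term (an assumption of this sketch) that
    avoids division by zero; two empty masks score 1.0 on both metrics.
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    total = pred.sum() + target.sum()
    dice = (2.0 * intersection + eps) / (total + eps)
    iou = (intersection + eps) / (union + eps)
    return float(dice), float(iou)


# Toy masks: 4 predicted pixels, 4 ground-truth pixels, 3 in common.
pred = np.array([[1, 1, 0, 0],
                 [1, 1, 0, 0]])
target = np.array([[1, 0, 0, 0],
                   [1, 1, 1, 0]])

dice, iou = dice_and_iou(pred, target)
print(f"Dice = {dice:.3f}, IoU = {iou:.3f}")
```

For a single pair of masks the two metrics are tied by Dice = 2·IoU / (1 + IoU). The identity holds per image, not for averages over a dataset, so the mean values in the table need not satisfy it exactly.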

| № | Expert Estimations | Our Estimations | Variance | Number of Images |
|---|---|---|---|---|
| 1 | 2.0% | 1.51% | 0.05% | 8 |
| 2 | 2.0% | 2.80% | 1.15% | 10 |
| 3 | 1.7% | 3.71% | 1.50% | 5 |
| 4 | 4.0% | 3.19% | 0.82% | 12 |
| 5 | 3.0% | 1.9% | 1.01% | 26 |
| 6 | 1.5% | 1.17% | 0.26% | 8 |
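One way to summarise the agreement between the expert and system estimates in the table above is the mean absolute error together with the mean signed error (bias). The sketch below only re-uses the six rows of the table; the choice of these two summary statistics is an assumption of this example and is not taken from the source.

```python
# Expert and system estimates of asbestos content (percent), rows 1-6.
expert = [2.0, 2.0, 1.7, 4.0, 3.0, 1.5]
system = [1.51, 2.80, 3.71, 3.19, 1.9, 1.17]

# Signed error per sample: positive means the system overestimates.
errors = [s - e for s, e in zip(system, expert)]

mae = sum(abs(err) for err in errors) / len(errors)
bias = sum(errors) / len(errors)

for idx, err in enumerate(errors, start=1):
    print(f"sample {idx}: error {err:+.2f} pp")
print(f"mean absolute error: {mae:.2f} pp")
print(f"mean signed error:   {bias:+.2f} pp")
```

On these six samples the mean absolute error is about 0.92 percentage points while the signed error is close to zero, which suggests scatter rather than a systematic offset; with only six samples this reading is tentative.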

| № | Expert Estimations | Our Estimations | Variance | Number of Images |
|---|---|---|---|---|
| Test part, 542 images | | | | |
| 7 | 2.5% | 0.69% | 0.16% | 48 |
| | | 0.65% | 0.88% | 49 |
| 8 | 3.5% | 0.70% | 0.09% | 35 |
| | | 0.84% | 0.17% | 45 |
| 9 | 2.5% | 0.98% | 0.31% | 49 |
| 10 | 4.0% | 0.61% | 0.68% | 45 |
| | | 0.73% | 0.18% | 39 |
| | | 0.49% | 0.14% | 58 |
| 11 | 2.5% | 1.31% | 1.25% | 28 |
| | | 1.94% | 2.13% | 39 |
| | | 2.35% | 3.46% | 44 |
| 12 | 1.5% | 0.63% | 0.16% | 63 |
| Train and validation part, 442 images | | | | |
| 13 | 1.5% | 0.69% | 0.14% | 55 |
| | | 0.74% | 0.11% | 49 |
| 14 | 2.5% | 1.16% | 0.36% | 45 |
| | | 1.11% | 0.37% | 50 |
| | | 0.72% | 0.21% | 45 |
| 15 | 4.0% | 0.91% | 0.25% | 47 |
| | | 0.97% | 0.18% | 43 |
| | | 0.82% | 0.39% | 41 |
| 16 | 2.5% | 1.33% | 0.46% | 35 |
| | | 1.25% | 0.56% | 32 |
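The image counts in the table above can be cross-checked against the stated part sizes and against the 984 samples listed for dataset part 4. The short sketch below does only that bookkeeping; the variable names are illustrative.

```python
# "Number of Images" column, in table order.
test_counts = [48, 49, 35, 45, 49, 45, 39, 58, 28, 39, 44, 63]
train_val_counts = [55, 49, 45, 50, 45, 47, 43, 41, 35, 32]

test_total = sum(test_counts)
train_val_total = sum(train_val_counts)
grand_total = test_total + train_val_total

print(f"test part:             {test_total} images")
print(f"train/validation part: {train_val_total} images")
print(f"total:                 {grand_total} images")

# Totals stated in the table section rows and in the dataset description.
assert test_total == 542
assert train_val_total == 442
assert grand_total == 984
```

All three assertions hold, so the per-run image counts are consistent with the section totals and with the size of dataset part 4.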

| № | Expert Estimations | Our Estimations After Threshold | Effective Number of Chunks | Number of Images |
|---|---|---|---|---|
| 1 | 2.0% | 1.66% | 7 | 8 |
| 2 | 2.0% | 3.05% | 10 | 10 |
| 3 | 1.7% | 3.7% | 5 | 5 |
| 4 | 4.0% | 3.35% | 11 | 12 |
| 5 | 3.0% | 2.41% | 18 | 26 |
| 6 | 1.5% | 1.11% | 8 | 8 |
| 7 | 2.5% | 1.9% | 1 | 48 |
| | | 1.25% | 1 | 49 |
| 8 | 3.5% | 1.48% | 1 | 35 |
| | | 2.05% | 1 | 45 |
| 9 | 2.5% | 2.0% | 10 | 49 |
| 10 | 4.0% | 1.8% | 3 | 45 |
| | | 1.82% | 1 | 39 |
| | | 3.18% | 1 | 58 |
| 11 | 2.5% | 2.0% | 23 | 28 |
| | | 2.12% | 39 | 39 |
| | | 2.31% | 44 | 44 |
| 12 | 1.5% | 1.2% | 18 | 63 |
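To illustrate the effect of the threshold on samples 7–12, the sketch below compares the mean absolute error against the expert values before and after thresholding. The values are transcribed from the two tables above. It assumes that the measurement runs are listed in the same order in both tables, which the matching image counts suggest but the source does not state explicitly.

```python
# One entry per measurement run of samples 7-12 (12 runs in total).
# Expert value is repeated for every run of the same sample.
expert = [2.5, 2.5, 3.5, 3.5, 2.5, 4.0, 4.0, 4.0, 2.5, 2.5, 2.5, 1.5]
before = [0.69, 0.65, 0.70, 0.84, 0.98, 0.61, 0.73, 0.49,
          1.31, 1.94, 2.35, 0.63]
after = [1.9, 1.25, 1.48, 2.05, 2.0, 1.8, 1.82, 3.18,
         2.0, 2.12, 2.31, 1.2]


def mean_abs_error(estimates, reference):
    """Mean absolute difference between paired estimates, in percent points."""
    return sum(abs(e - r) for e, r in zip(estimates, reference)) / len(reference)


mae_before = mean_abs_error(before, expert)
mae_after = mean_abs_error(after, expert)

print(f"MAE before threshold: {mae_before:.2f} pp")
print(f"MAE after threshold:  {mae_after:.2f} pp")
```

Under that pairing assumption the mean absolute error falls from roughly 1.97 to roughly 1.03 percentage points, although the thresholded estimates for samples 7–12 still lie mostly below the expert values.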

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zyuzin, V.; Ronkin, M.; Porshnev, S.; Kalmykov, A. Automatic Asbestos Control Using Deep Learning Based Computer Vision System. Appl. Sci. 2021, 11, 10532. https://doi.org/10.3390/app112210532
