Reduced Reference Quality Assessment for Image Retargeting by Earth Mover’s Distance

Abstract

1. Introduction

2. EMD between Two Histograms

2.1. Basic EMD
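
As a minimal sketch of an EMD between two histograms, the Python below covers only the simplest case. It assumes one-dimensional histograms whose bins lie on a line with unit spacing, so that the ground distance between bins i and j is |i − j|, and it normalizes both histograms to unit mass. Under those assumptions the EMD reduces to the sum of absolute differences between the two cumulative histograms. The function name `emd_1d` and this 1-D specialization are illustrative choices, not necessarily the formulation used in this paper.

```python
from itertools import accumulate


def emd_1d(p, q):
    """EMD between two 1-D histograms, assuming unit spacing between bins.

    Both histograms are normalized to total mass 1 before comparison.
    """
    if len(p) != len(q):
        raise ValueError("histograms must have the same number of bins")
    total_p, total_q = sum(p), sum(q)
    if total_p <= 0 or total_q <= 0:
        raise ValueError("histograms must have positive total mass")

    # Normalize so that each histogram carries one unit of mass.
    p = [x / total_p for x in p]
    q = [x / total_q for x in q]

    # In 1-D, the mass that must cross the boundary after bin k equals the
    # difference of the cumulative histograms at k; summing the absolute
    # values gives the minimal transport cost.
    cdf_diff = accumulate(a - b for a, b in zip(p, q))
    return sum(abs(d) for d in cdf_diff)


if __name__ == "__main__":
    # All mass moves two bins to the right, so the cost is 2.
    print(emd_1d([1, 0, 0], [0, 0, 1]))
```

For multi-dimensional features or a non-uniform ground distance, this shortcut no longer applies and the general transportation problem has to be solved instead.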

2.2. Weighted EMD

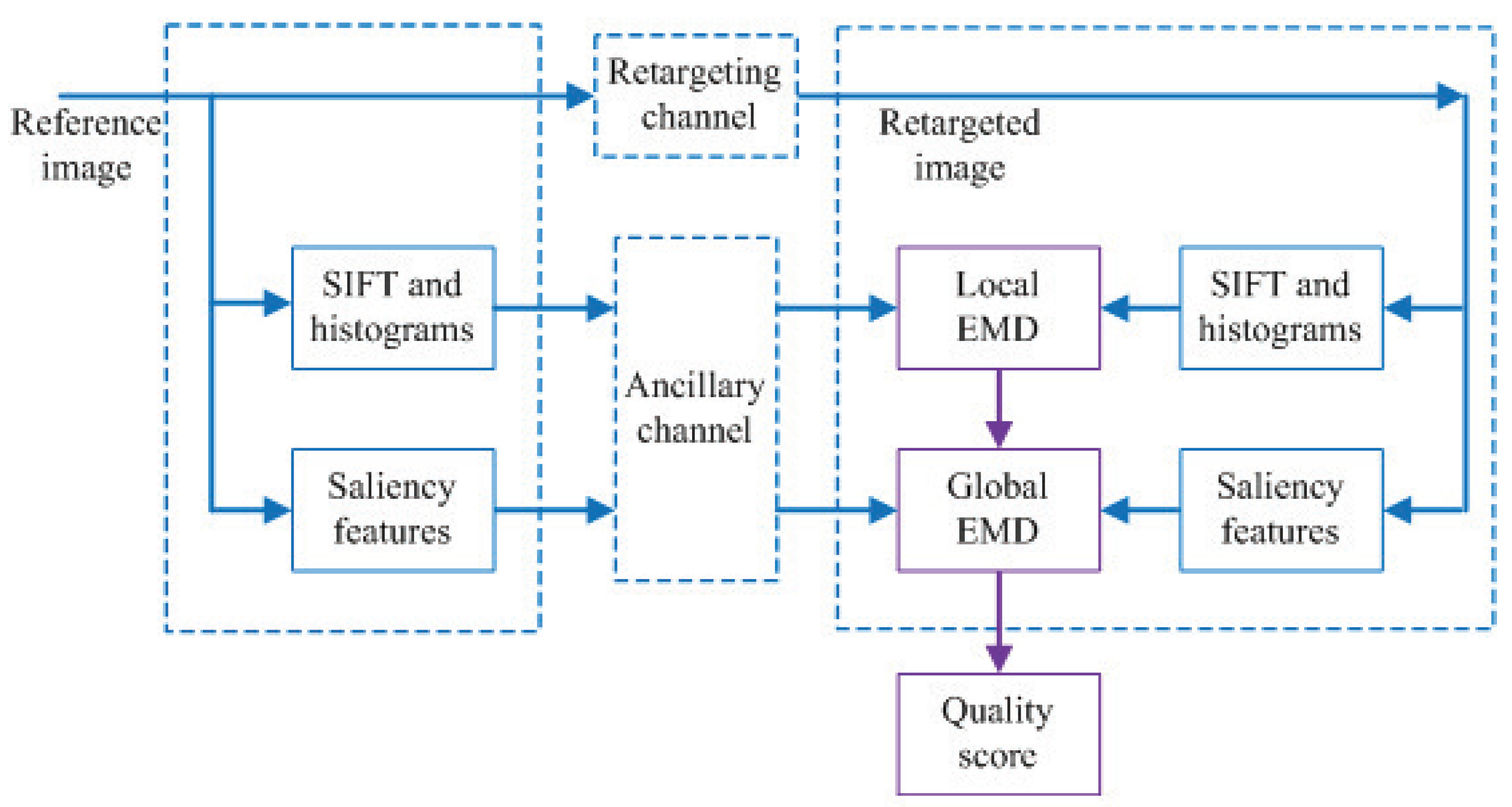

3. Quality Assessment Using EMD

3.1. Local EMD Based on SIFT Features

3.2. Global EMD Based on Saliency Features

3.3. The Overall Quality Score Based on Local and Global EMD

4. Experimental Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Full Name |
|---|---|
| RR | Reduced Reference |
| EMD | Earth Mover’s Distance |
| SIFT | Scale-Invariant Feature Transform |
| IQA | Image Quality Assessment |
| FR | Full Reference |
| NR | No Reference |
| MO | Multi-operator |
| CR | Cropping |
| SV | Streaming Video |
| SM | Shift-map |
| SC | Seam Carving |
| SCL | Scaling |
| SNS | Scale-and-stretch |
| WARP | Warping |
| PSNR | Peak Signal-to-noise Ratio |
| SSIM | Structural Similarity |
| CL | Color Layout Descriptor |
| EH | Edge Histogram |
| BDW | Bidirectional Warping |
| BDS | Bidirectional Similarity |
| VIF | Visual Information Fidelity |
| DNT | Divisive Normalization Transformation |
| RRED | Reduced Reference Entropic Differencing |
| RRSSIM | Reduced-Reference SSIM |
| LEMD | Local Image Quality Score using EMD |
| GEMD | Global Image Quality Score using EMD |
| QS | Quality Score |
| PLCC | Pearson Linear Correlation Coefficient |
| SRCC | Spearman’s Rank Correlation Coefficient |
| KRCC | Kendall’s Rank Correlation Coefficient |
| RMS | Root Mean Squared |


| Category | Algorithm | Lines/Edges | Faces/People | Texture | Foreground Objects | Geometric Structures | Symmetry |
|---|---|---|---|---|---|---|---|
| FR IQA for retargeted images | EL | 0.043 | −0.076 | −0.060 | −0.079 | 0.103 | 0.298 |
| FR IQA for retargeted images | CL | −0.023 | −0.181 | −0.071 | −0.183 | −0.009 | 0.214 |
| FR IQA for retargeted images | BDW | 0.031 | 0.048 | −0.048 | 0.060 | 0.004 | 0.119 |
| FR IQA for retargeted images | BDS | 0.040 | 0.190 | 0.060 | 0.167 | −0.004 | −0.012 |
| FR IQA for retargeted images | SIFT Flow | 0.097 | 0.252 | 0.119 | 0.218 | 0.085 | 0.071 |
| RR IQA for nonretargeted images | DCT | 0.124 | −0.002 | 0.051 | 0.201 | 0.035 | 0.103 |
| RR IQA for nonretargeted images | VIF | 0.210 | 0.233 | 0.078 | 0.230 | 0.101 | −0.004 |
| RR IQA for nonretargeted images | DNT | 0.142 | 0.248 | −0.025 | 0.185 | 0.046 | 0.151 |
| RR IQA for nonretargeted images | RRED | −0.031 | 0.341 | 0.075 | 0.284 | −0.003 | 0.293 |
| RR IQA for nonretargeted images | RRSSIM | 0.179 | 0.328 | 0.020 | 0.257 | 0.237 | 0.226 |
| RR IQA for retargeted images | LEMD | 0.236 | 0.432 | 0.068 | 0.379 | 0.026 | 0.332 |
| RR IQA for retargeted images | GEMD | 0.125 | 0.452 | 0.025 | 0.420 | 0.012 | 0.150 |
| RR IQA for retargeted images | QS | 0.262 | 0.461 | 0.073 | 0.438 | 0.034 | 0.357 |

| Category | Algorithm | PLCC | SRCC | KRCC | RMS |
|---|---|---|---|---|---|
| FR IQA for retargeted images | EL | 0.048 | 0.025 | 0.004 | 0.324 |
| FR IQA for retargeted images | CL | 0.019 | 0.013 | −0.068 | 0.457 |
| FR IQA for retargeted images | BDW | 0.062 | 0.050 | 0.046 | 0.274 |
| FR IQA for retargeted images | BDS | 0.091 | 0.097 | 0.083 | 0.262 |
| FR IQA for retargeted images | SIFT Flow | 0.149 | 0.151 | 0.145 | 0.238 |
| RR IQA for nonretargeted images | DCT | 0.073 | 0.069 | 0.056 | 0.266 |
| RR IQA for nonretargeted images | VIF | 0.185 | 0.187 | 0.168 | 0.232 |
| RR IQA for nonretargeted images | DNT | 0.161 | 0.153 | 0.137 | 0.241 |
| RR IQA for nonretargeted images | RRED | 0.253 | 0.247 | 0.234 | 0.225 |
| RR IQA for nonretargeted images | RRSSIM | 0.260 | 0.266 | 0.259 | 0.221 |
| RR IQA for retargeted images | LEMD | 0.325 | 0.322 | 0.294 | 0.172 |
| RR IQA for retargeted images | GEMD | 0.129 | 0.126 | 0.120 | 0.236 |
| RR IQA for retargeted images | QS | 0.370 | 0.365 | 0.331 | 0.164 |
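
The PLCC, SRCC, KRCC, and RMS columns above compare objective quality scores with subjective ones. The sketch below shows one way such figures could be computed from two equal-length score lists. It is an illustration under stated assumptions, not the evaluation code used for this table: RMS is taken to mean the root-mean-squared error between the two score lists, KRCC is computed as Kendall's tau-a, no nonlinear fitting is applied before PLCC, and all function names are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson linear correlation coefficient (PLCC)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(v):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(v)), key=lambda i: v[i])
    r = [0.0] * len(v)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and v[order[j + 1]] == v[order[i]]:
            j += 1
        for k in range(i, j + 1):
            r[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return r


def spearman(x, y):
    """Spearman rank correlation (SRCC): Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def kendall(x, y):
    """Kendall tau-a (KRCC): (concordant - discordant) / number of pairs."""
    n = len(x)
    s = 0
    for i in range(n):
        for j in range(i + 1, n):
            prod = (x[i] - x[j]) * (y[i] - y[j])
            s += (prod > 0) - (prod < 0)
    return s / (n * (n - 1) / 2)


def rms_error(x, y):
    """Root-mean-squared difference between two score lists."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)) / len(x))


if __name__ == "__main__":
    objective = [0.1, 0.4, 0.35, 0.8]   # made-up scores for illustration
    subjective = [0.2, 0.5, 0.3, 0.9]   # made-up scores for illustration
    print(pearson(objective, subjective), spearman(objective, subjective),
          kendall(objective, subjective), rms_error(objective, subjective))
```

Where SciPy is available, `scipy.stats.pearsonr`, `spearmanr`, and `kendalltau` provide the three correlations directly. `kendalltau` defaults to the tau-b variant, which coincides with tau-a when there are no ties.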

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wei, L.; Zhao, L.; Peng, J. Reduced Reference Quality Assessment for Image Retargeting by Earth Mover’s Distance. Appl. Sci. 2021, 11, 9776. https://doi.org/10.3390/app11209776
