Ontology-Based Regression Testing: A Systematic Literature Review

Abstract

1. Introduction

2. Literature Review

3. Research Method

3.1. Research Questions

3.2. Search Keywords

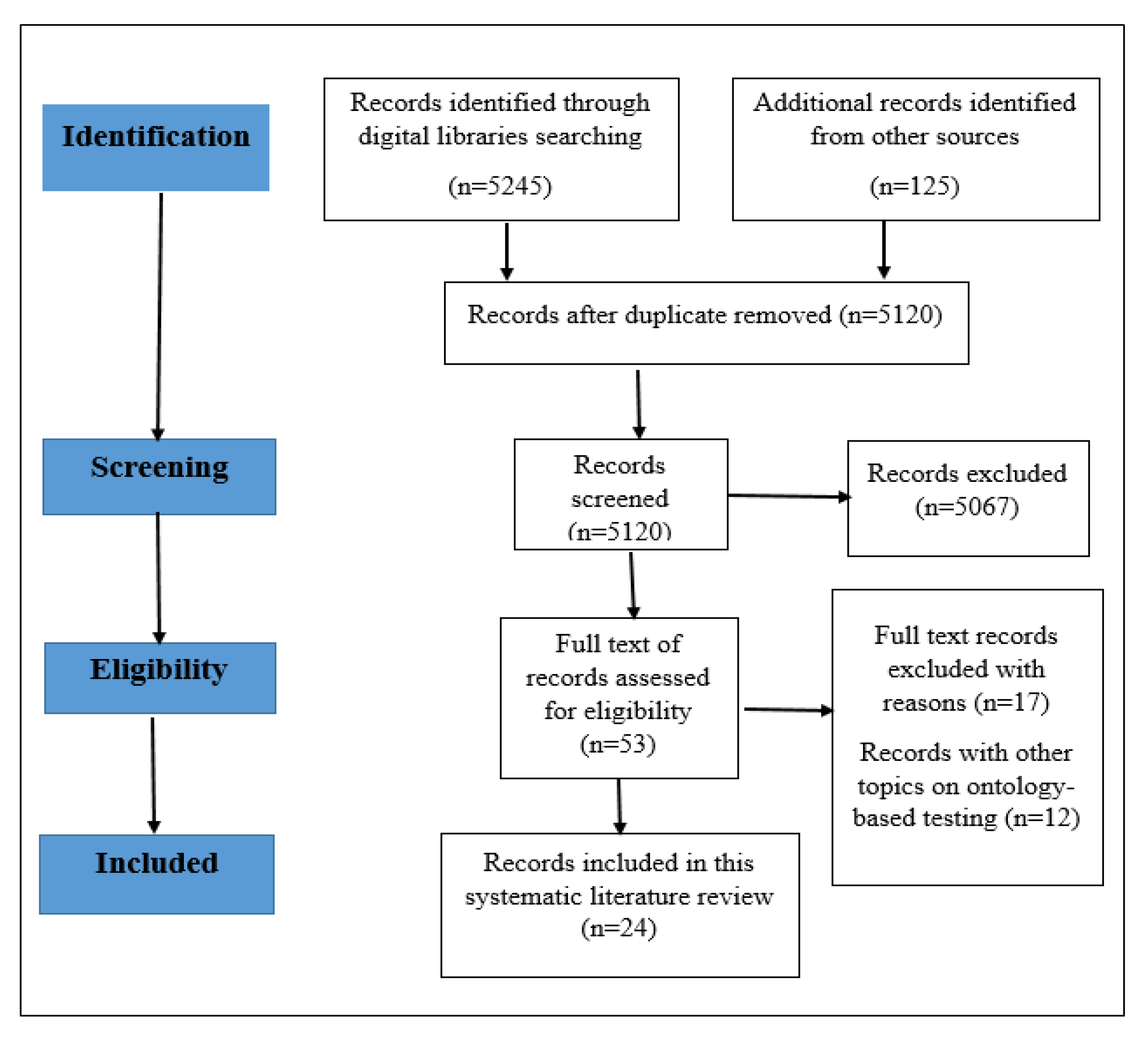

3.3. Selection of Research Publications

3.4. Inclusion and Exclusion Criteria

- Publications that discuss ontology-based testing of web services were included.

- Publications that discuss ontology-based regression testing of web services were included.

- Publications that discuss ontology-based test case prioritization techniques were included.

- Publications that discuss issues/challenges regarding ontology-based regression testing were included.

- Duplicate papers on the research topic were excluded.

- Publications that did not discuss ontology-based regression testing of web services were excluded.

- Publications that did not provide a technical discussion on the research topic of this SLR were excluded.

- Publications written in a language other than English were excluded.
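
The screening criteria above can be read as a simple filter over candidate publications. The following is a minimal sketch under stated assumptions, not the procedure the authors actually ran: the `Publication` record, the topic tag names, and the `screen` function are hypothetical, and the sketch treats duplicates, non-English papers, and papers without a technical discussion as exclusions before checking for at least one inclusion topic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Publication:
    """Hypothetical record for one candidate paper (not from the source)."""
    title: str
    language: str
    topics: frozenset  # topic tags assigned by a reviewer (assumed)
    has_technical_discussion: bool


# Tag names are assumptions that mirror the four inclusion criteria.
INCLUSION_TOPICS = frozenset({
    "ontology_testing_web_services",
    "ontology_regression_testing_web_services",
    "ontology_test_case_prioritization",
    "ontology_regression_testing_challenges",
})


def screen(publications):
    """Return the publications that survive the exclusion checks
    and match at least one inclusion topic."""
    seen_titles = set()
    selected = []
    for pub in publications:
        key = pub.title.strip().lower()
        if key in seen_titles:
            continue  # duplicate paper
        seen_titles.add(key)
        if pub.language.strip().lower() != "english":
            continue  # written in a language other than English
        if not pub.has_technical_discussion:
            continue  # no technical discussion of the topic
        if not (pub.topics & INCLUSION_TOPICS):
            continue  # matches none of the inclusion criteria
        selected.append(pub)
    return selected
```

In practice the topic tags would come from a reviewer reading titles and abstracts, so the filter only records decisions and does not make them.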

3.5. Quality Assessment

4. Results and Discussion

4.1. RQ1: What Is the Roadmap of Regression Testing?

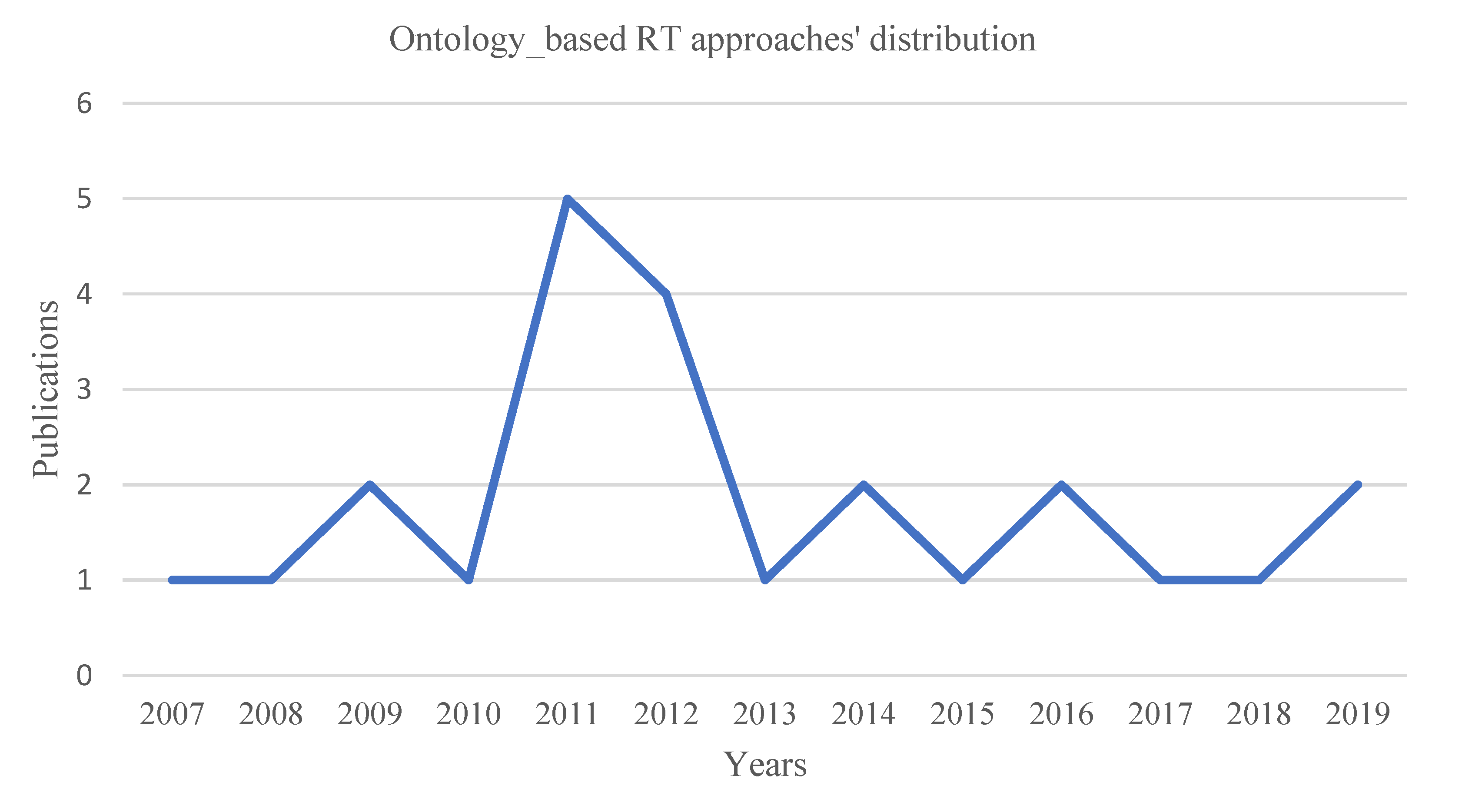

4.2. RQ2: What Are the State-of-the-Art Techniques of Ontology-Based Regression Testing?

4.3. RQ3: What Are the Challenges and Limitations of Current Approaches?

- Validation of approaches. Although the test case grouping presented in [39] helps remove failed services, it is limited in prioritizing test cases, because low-priority test cases might be grouped with high-priority ones [43]. Grouping high- and low-priority test cases together is justified only if it is supported by previously evaluated criteria [22,42].

- Risk assessment. Application output behavior and risky scenarios are not addressed in these studies [22,39]. Study [39] presented a model that uses the semantics of the web service workflow, ontology usage, and ontology dependency to identify the risks that arise during the execution of web services. However, it did not take into account the risk of errors introduced when web systems are updated while tasks are executing. How to prevent or reduce such errors remains an open question for researchers [22].

- Reliability. Existing studies do not address the reliability of web systems in the context of regression testing.
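
The grouping limitation noted under "Validation of approaches" can be illustrated with a small sketch. It is not the technique of [39]: the rule of ranking each group by its highest-priority member, the function names, and the numeric priorities are all assumptions chosen only to show how a low-priority test case can run ahead of higher-priority ones when groups are scheduled as units.

```python
def order_by_group(groups):
    """Schedule whole groups, ranked by their highest member priority.

    `groups` is a list of groups; each group is a list of
    (test_name, priority) pairs. The ranking rule is an assumption.
    """
    ranked = sorted(groups, key=lambda g: max(p for _, p in g), reverse=True)
    order = []
    for group in ranked:
        # Inside a group, run higher-priority tests first.
        for name, _ in sorted(group, key=lambda t: t[1], reverse=True):
            order.append(name)
    return order


def priority_inversions(order, priority):
    """Count pairs in which a lower-priority test runs before a
    higher-priority one."""
    count = 0
    for i, earlier in enumerate(order):
        for later in order[i + 1:]:
            if priority[earlier] < priority[later]:
                count += 1
    return count


# Hypothetical data: t2 has low priority but shares a group with t1.
groups = [[("t1", 9), ("t2", 2)], [("t3", 7), ("t4", 6)]]
priority = {name: p for group in groups for name, p in group}
order = order_by_group(groups)
inversions = priority_inversions(order, priority)
```

With this made-up data, `t2` is scheduled before `t3` and `t4` even though both have higher priority, which is the kind of mixing the bullet describes.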

4.4. RQ4: What Are the Possible Future Research Directions?

4.5. RQ5: What Are the Unique Issues of Ontology-Based Regression Testing Compared to Other Regression Testing Approaches?

5. Limitations of the SLR

6. Conclusions and Future Implications

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Chen, J.; Zhu, L.; Chen, T.Y.; Towey, D.; Kuo, F.C.; Huang, R.; Guo, Y. Test case prioritization for object-oriented software: An adaptive random sequence approach based on clustering. J. Syst. Softw. 2018, 135, 107–125.

- Spieker, H.; Gotlieb, A.; Marijan, D.; Mossige, M. Reinforcement learning for automatic test case prioritization and selection in continuous integration. In Proceedings of the ISSTA 2017: 26th ACM SIGSOFT International Symposium on Software Testing and Analysis, Santa Barbara, CA, USA, 10–14 July 2017; pp. 12–22.

- Kazmi, R.; Jawawi, D.N.; Mohamad, R.; Ghani, I. Effective Regression Test Case Selection: A Systematic Literature Review. ACM Comput. Surv. 2017, 50, 1–32.

- de Oliveira Neto, F.G.; Torkar, R.; Machado, P.D. Full modification coverage through automatic similarity-based test case selection. Inf. Softw. Technol. 2016, 80, 124–137.

- Krishnamoorthi, R.; Mary, S.S.A. Factor oriented requirement coverage based system test case prioritization of new and regression test cases. Inf. Softw. Technol. 2009, 51, 799–808.

- Koutsomitropoulos, D.A.; Kalou, A.K. A standards-based ontology and support for Big Data Analytics in the insurance industry. ICT Express 2017, 3, 57–61.

- García-Peñalvo, F.J.; Colomo-Palacios, R.; García, J.; Theron, R. Towards an ontology modeling tool. A validation in software engineering scenarios. Expert Syst. Appl. 2012, 39, 11468–11478.

- García, J.; García-Peñalvo, F.J.; Therón, R. A Survey on Ontology Metrics. In World Summit on Knowledge Society; Springer: Berlin/Heidelberg, Germany, 2010; pp. 22–27.

- Yoo, S.; Harman, M. Regression testing minimization, selection and prioritization: A survey. Softw. Test. Verif. Reliab. 2010, 22, 67–120.

- Singh, Y.; Kaur, A.; Suri, B.; Singhal, S. Systematic literature review on regression test prioritization techniques. Informatica 2012, 36, 308–379.

- Catal, C.; Mishra, D. Test case prioritization: A systematic mapping study. Softw. Qual. J. 2013, 21, 445–478.

- Qiu, D.; Li, B.; Ji, S.; Leung, H.K.N. Regression Testing of Web Service: A Systematic Mapping Study. ACM Comput. Surv. 2015, 47, 1–46.

- Khatibsyarbini, M.; Isa, M.A.; Jawawi, D.N.; Tumeng, R. Test case prioritization approaches in regression testing: A systematic literature review. Inf. Softw. Technol. 2018, 93, 74–93.

- Mukherjee, R.; Patnaik, K.S. A survey on different approaches for software test case prioritization. J. King Saud Univ. Comput. Inf. Sci. 2018, 33, 1041–1054.

- de Souza Neto, J.B.; Moreira, A.M.; Musicante, M.A. Semantic Web Services testing: A Systematic Mapping study. Comput. Sci. Rev. 2018, 28, 140–156.

- Arora, P.K.; Bhatia, R. A Systematic Review of Agent-Based Test Case Generation for Regression Testing. Arab. J. Sci. Eng. 2017, 43, 447–470.

- Akbari, Z.; Khoshnevis, S.; Mohsenzadeh, M. A Method for Prioritizing Integration Testing in Software Product Lines Based on Feature Model. Int. J. Softw. Eng. Knowl. Eng. 2017, 27, 575–600.

- Hemmati, H. Advances in Techniques for Test Prioritization. In Advances in Computers; Elsevier Inc.: Amsterdam, The Netherlands, 2018; Volume 112, pp. 185–221.

- Hillah, L.M.; Maesano, A.-P.; De Rosa, F.; Kordon, F.; Wuillemin, P.-H.; Fontanelli, R.; Di Bona, S.; Guerri, D.; Maesano, L. Automation and intelligent scheduling of distributed system functional testing. Int. J. Softw. Tools Technol. Transf. 2017, 19, 281–308.

- Schwartz, A.; Do, H. Cost-effective regression testing through Adaptive Test Prioritization strategies. J. Syst. Softw. 2016, 115, 61–81.

- Miranda, B.; Bertolino, A. Scope-aided test prioritization, selection and minimization for software reuse. J. Syst. Softw. 2017, 131, 528–549.

- Zhu, H.; Zhang, Y. Collaborative Testing of Web Services. IEEE Trans. Serv. Comput. 2010, 5, 116–130.

- Wang, W.; Huang, Z.; Wang, L. ISAT: An intelligent Web service selection approach for improving reliability via two-phase decisions. Inf. Sci. 2018, 433, 255–273.

- De Souza, E.F.; de Almeida Falbo, R.; Vijaykumar, N.L. Knowledge management initiatives in software testing: A mapping study. Inf. Softw. Technol. 2015, 57, 378–391.

- Serna, E.; Serna, A. Ontology for knowledge management in software maintenance. Int. J. Inf. Manag. 2014, 34, 704–710.

- Bai, X.; Kenett, R.S. Risk-Based Adaptive Group Testing of Semantic Web Services. In Proceedings of the 2009 33rd Annual IEEE International Computer Software and Applications Conference, Seattle, WA, USA, 20–24 July 2009; Volume 2, pp. 485–490.

- Moher, D.; Shamseer, L.; Clarke, M.; Ghersi, D.; Liberati, A.; Petticrew, M.; Shekelle, P.; Stewart, L.A. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst. Rev. 2015, 4, 1–9.

- Kitchenham, B.A.; Brereton, O.P.; Budgen, D. Protocol for Extending an Existing Tertiary Study of Systematic Literature Reviews in Software Engineering; EPIC Technical Report. EBSE-2008-006 June 2008; University of Durham: Durham, UK, 2017.

- Kitchenham, B.; Brereton, O.P.; Budgen, D.; Turner, M.; Bailey, J.; Linkman, S. Systematic literature reviews in software engineering—A systematic literature review. Inf. Softw. Technol. 2009, 51, 7–15.

- Nidhra, S.; Yanamadala, M.; Afzal, W.; Torkar, R. Knowledge transfer challenges and mitigation strategies in global software development—A systematic literature review and industrial validation. Int. J. Inf. Manag. 2013, 33, 333–355.

- Panda, S.; Munjal, D.; Mohapatra, D.P. A Slice-Based Change Impact Analysis for Regression Test Case Prioritization of Object-Oriented Programs. Adv. Softw. Eng. 2016, 1–20.

- Ma, T.; Zeng, H.; Wang, X. Test case prioritization based on requirement correlations. In Proceedings of the 2016 17th IEEE/ACIS International Conference on Software Engineering, Artificial Intelligence, Networking and Parallel/Distributed Computing (SNPD), Shanghai, China, 30 May–1 June 2016; pp. 419–424.

- Noor, T.B.; Hemmati, H. A similarity-based approach for test case prioritization using historical failure data. In Proceedings of the 2015 IEEE 26th International Symposium on Software Reliability Engineering (ISSRE), Gaithersburg, MD, USA, 2–5 November 2015.

- Sampath, S.; Bryce, R.C. Improving the effectiveness of test suite reduction for user-session-based testing of web applications. Inf. Softw. Technol. 2012, 54, 724–738.

- Tarasov, V.; Tan, H.; Ismail, M.; Adlemo, A.; Johansson, M. Application of inference rules to a software requirements ontology to generate software test cases. In OWL: Experiences and Directions—Reasoner Evaluation; Springer: Cham, Switzerland, 2016; pp. 82–94.

- Ryschka, S.; Murawski, M.; Bick, M. Location-Based Services. Bus. Inf. Syst. Eng. 2016, 58, 233–237.

- Askarunisa, A.; Punitha, K.A.J.; Ramaraj, N. Test case generation and prioritization for composite web service based on owl-s. Neural Netw. World 2011, 21, 519–538.

- Askarunisa, A.; Punitha, K.A.J.; Abirami, A.M. Black box test case prioritization techniques for semantic based composite web services using OWL-S. In Proceedings of the 2011 International Conference on Recent Trends in Information Technology (ICRTIT), Chennai, India, 3–5 June 2011; pp. 1215–1220.

- Bai, X.; Kenett, R.S. Risk-Based Testing of Web Services. Oper. Risk Manag. 2010, 99–123.

- Bai, X.; Kenett, R.S.; Yu, W. Risk assessment and adaptive group testing of semantic web services. Int. J. Softw. Eng. Knowl. Eng. 2012, 22, 595–620.

- Kim, M.; Cobb, J.; Harrold, M.J.; Kurc, T.; Orso, A.; Saltz, J.; Post, A.; Malhotra, K.; Navathe, S.B. Efficient regression testing of ontology-driven systems. In Proceedings of the ISSTA 2012 International Symposium on Software Testing and Analysis, Minneapolis, MN, USA, 15–20 July 2012; pp. 320–330.

- Do, H. Recent advances in regression testing techniques. In Advances in Computers; Elsevier: Amsterdam, The Netherlands, 2016; Volume 103, pp. 53–77.

- Fan, C.-F.; Wang, W.-S. Validation test case generation based on safety analysis ontology. Ann. Nucl. Energy 2012, 45, 46–58.

- Tseng, W.-H.; Fan, C.-F. Systematic scenario test case generation for nuclear safety systems. Inf. Softw. Technol. 2013, 55, 344–356.

- Nguyen, C.D.; Perini, A.; Tonella, P. Experimental Evaluation of Ontology-Based Test Generation for Multi-agent Systems. In AOSE 2008: Agent-Oriented Software Engineering, IX International Workshop, Estoril, Portugal, 12–13 May 2008; Springer: Berlin/Heidelberg, Germany, 2009; pp. 187–198.

- Moser, T.; Dürr, G.; Biffl, S. Ontology-Based Test Case Generation for Simulating Complex Production Automation Systems. In SEKE; Knowledge Systems Institute: Skokie, IL, USA, 2010; pp. 478–482.

- Haq, S.U.; Qamar, U. Ontology Based Test Case Generation for Black Box Testing. In Proceedings of the 2019 8th International Conference on Educational and Information Technology, Cambridge, UK, 2–4 March 2019; pp. 236–241.

- Ali, S.; Yue, T.; Hoffmann, A.; Wendland, M.-F.; Bagnato, A.; Brosse, E.; Schacher, M.; Dai, Z.R. How Does the UML Testing Profile Support Risk-Based Testing. In Proceedings of the 2014 IEEE International Symposium on Software Reliability Engineering Workshops, Naples, Italy, 3–6 November 2014; pp. 311–316.

- Li, Y.; Tao, J.; Wotawa, F. Ontology-based test generation for automated and autonomous driving functions. Inf. Softw. Technol. 2020, 117, 106200.

- Mei, L.; Chan, W.K.; Tse, T.H.; Jiang, B.; Zhai, K. Preemptive regression testing of workflow-based web services. IEEE Trans. Serv. Comput. 2014, 8, 740–754.

- Mani, P.; Prasanna, M. Validation of automated test cases with specification path. J. Stat. Manag. Syst. 2017, 20, 535–542.

- Jokhio, M.S.; Sun, J.; Dobbie, G.; Hu, T. Goal-based testing of semantic web services. Inf. Softw. Technol. 2017, 83, 1–13.

- Abadeh, M.N.; Ajoudanian, S. A model-driven framework to enhance the consistency of logical integrity constraints: Introducing integrity regression testing. Softw. Pr. Exp. 2019, 49, 274–300.

| Sr. No. | Research Focus | Ontology-Based Regression Testing | Number of Scholarly Studies | Reference |
|---|---|---|---|---|
| 1. | Regression testing | x | 159 | [9] |
| 2. | Regression test case prioritization | x | 65 | [10] |
| 3. | Test case prioritization | x | 120 | [11] |
| 4. | Regression testing of web services | √ | 30 | [12] |
| 5. | Regression test case selection | x | 47 | [3] |
| 6. | Test case prioritization | x | 69 | [13] |
| 7. | Test case prioritization of systems | x | 90 | [14] |
| 8. | Testing of semantic web services | √ | 43 | [15] |
| 9. | Agent-based test generation | x | 115 | [16] |

| RQ ID | Description |
|---|---|
| RQ1 | What is the roadmap of regression testing? |
| RQ2 | What are the state-of-the-art techniques of ontology-based regression testing? |
| RQ3 | What are the challenges and limitations of current approaches? |
| RQ4 | What are the possible future research directions? |
| RQ5 | What are the unique issues of ontology-based regression testing compared to other regression testing approaches? |

| Data Repository Name | Search String |
|---|---|
| IEEE Xplore | (((((“All Metadata”: Ontology) AND “All Metadata”: Regression Testing) OR “All Metadata”: Test Case Prioritization) AND “All Metadata”: Test Case Selection) AND “All Metadata”: Test Case Generation) |
| ACM Digital Library | (+Ontology + Regression + Testing + Test + Case + Prioritization + Test + Case + Selection + Test + Case + Generation) |
| ScienceDirect | “Ontology, regression testing, test case prioritization, Test Case Selection, Test Case Generation.” |
| SpringerLink | ‘Ontology AND “Regression Testing” AND (Test OR Case OR Prioritization, OR Test OR Case OR Selection, OR Test OR Case OR Generation)’ |
| Web of Science | (Ontology) AND TOPIC: (Regression Testing) OR TOPIC: (test case prioritization) OR TOPIC: (Test Case Selection) OR TOPIC: (Test Case Generation) |
| Wiley & Sons | “Ontology” anywhere and “Regression Testing” anywhere and “Test Case Prioritization” anywhere and “Test Case Selection” anywhere and “Test Case Generation”. |

| QAC Question No. | Description |
|---|---|
| Q1 | Is the research topic relevant to ontology-based regression testing? |
| Q2 | Does the research study have a clear context regarding the research topic? |
| Q3 | Does the research sufficiently define its research methodology? |
| Q4 | Is the data collection process effectively revealed? |
| Q5 | Is the data analysis method appropriately explained? |
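
A checklist like the one above is often turned into a numeric score per study. The sketch below shows one way to do that; the answer weights (yes = 1, partial = 0.5, no = 0), the cut-off of 3.0, and the function names are assumptions for illustration only, since the scoring scheme is not given here.

```python
# Hypothetical scoring scheme; the weights and threshold are assumptions.
ANSWER_WEIGHTS = {"yes": 1.0, "partial": 0.5, "no": 0.0}
QUESTIONS = ("Q1", "Q2", "Q3", "Q4", "Q5")


def quality_score(answers):
    """Sum the weights of a study's answers to Q1..Q5.

    `answers` maps each question ID to "yes", "partial", or "no".
    """
    missing = [q for q in QUESTIONS if q not in answers]
    if missing:
        raise ValueError(f"missing answers for: {', '.join(missing)}")
    return sum(ANSWER_WEIGHTS[answers[q]] for q in QUESTIONS)


def passes_quality_check(answers, threshold=3.0):
    """Return True if the study's score reaches the assumed threshold."""
    return quality_score(answers) >= threshold


# Example assessment of one hypothetical study.
example = {"Q1": "yes", "Q2": "partial", "Q3": "no", "Q4": "yes", "Q5": "partial"}
example_score = quality_score(example)
```

Keeping the weights and threshold as named values makes it easy to substitute whatever scheme a given review protocol prescribes.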

| Authors | Problem | Proposed Technique | Advantage | Limitation |
|---|---|---|---|---|
| Askarunisa et al. [37] | High cost of web services testing | An automated testing framework | Effective prioritization of web services | No cost resources were used in the proposed approach |
| Askarunisa et al. [38] | An increase in cost due to source code unavailability | Semantic-based Protégé tool | Fault detection is better than with traditional approaches | Coverage criteria are not clearly mentioned |
| Bai et al. [40] | Web services testing in an open platform | A risk-based approach using semantics | Detection of high-impact faults | The reliability of web services is not mentioned |
| Kim et al. [41] | Handling changes in an evolving system is a difficult task | Regression test selection | Reduces the overall time to rerun test cases | This approach might miss some necessary test cases |
| Fan et al. [43] | Validation of tests in an ad hoc fashion | Domain-specific ontology-based approach | Systematic test case generation (TCG) from the safety analysis report | The cost to ensure the safety of a web system is not mentioned |
| Tseng et al. [44] | Uncertain test coverage from random testing | Domain-specific ontology using requirements | Ensures users’ safety, more effective than random testing | Validation on large-scale web systems is not done |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hasnain, M.; Ghani, I.; Pasha, M.F.; Jeong, S.-R. Ontology-Based Regression Testing: A Systematic Literature Review. Appl. Sci. 2021, 11, 9709. https://doi.org/10.3390/app11209709