An Improved Collaborative Filtering Recommendation Algorithm Based on Retroactive Inhibition Theory

Abstract

1. Introduction

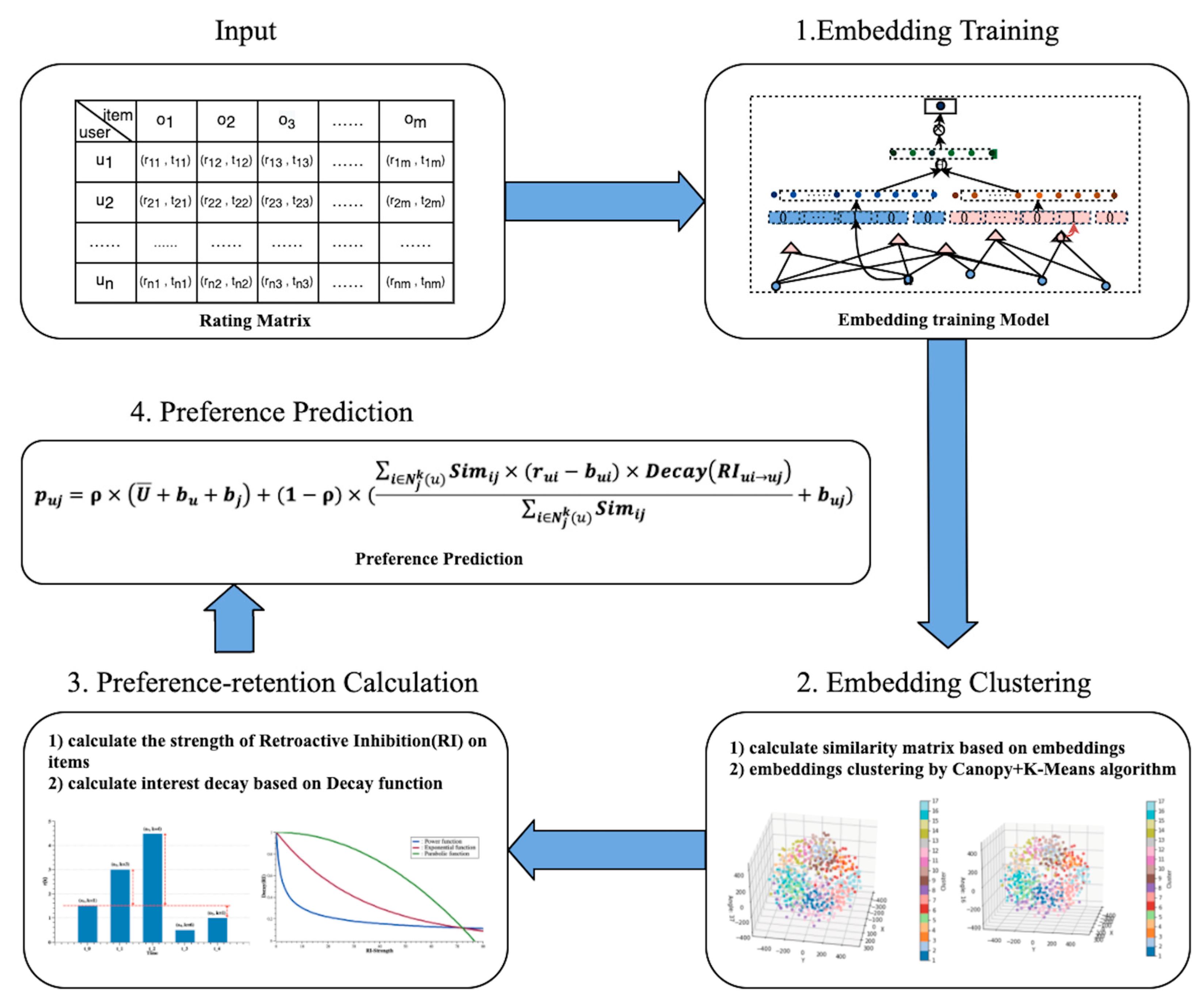

- This paper introduces the theory of retroactive inhibition (the interference of newly learned material with the retention of earlier material) into recommendation systems, to measure the decay of a user’s preference over time in a more interpretable way. Specifically, rather than applying the forgetting mechanism of human memory as a direct function of elapsed time, we model forgetting through retroactive inhibition, in order to calculate changes in a user’s preference more accurately.

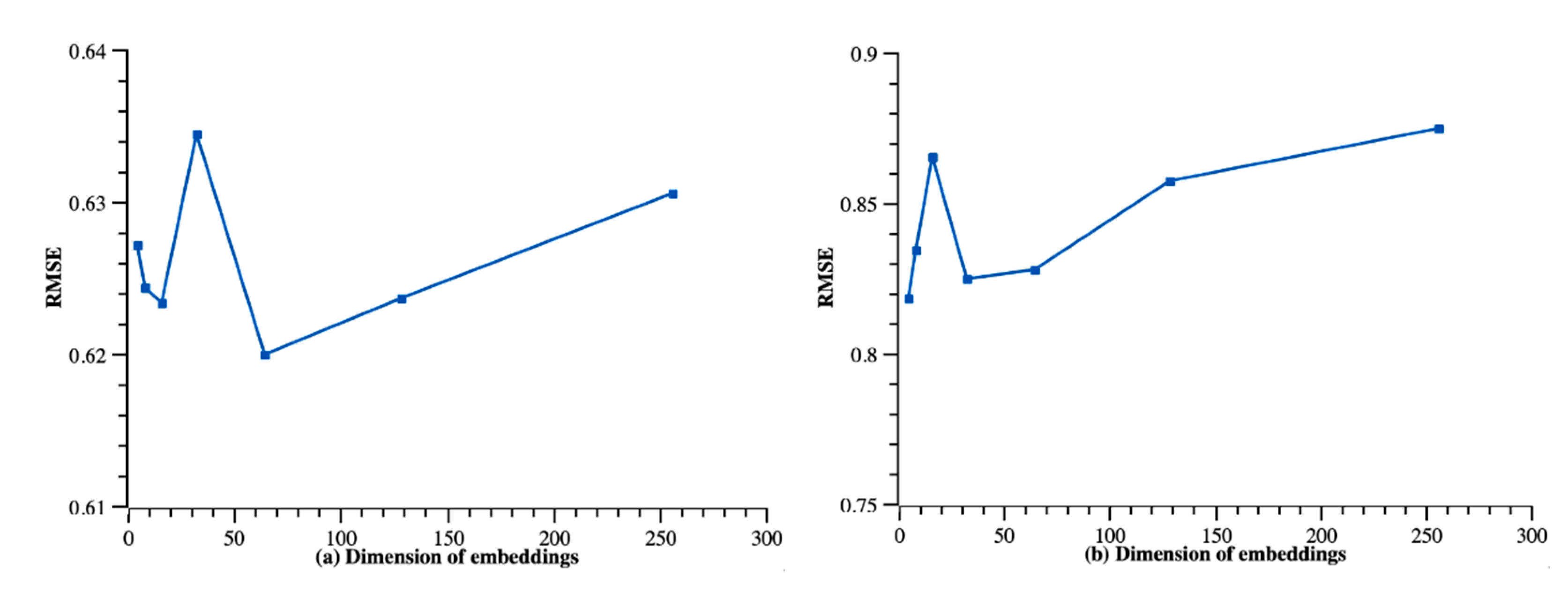

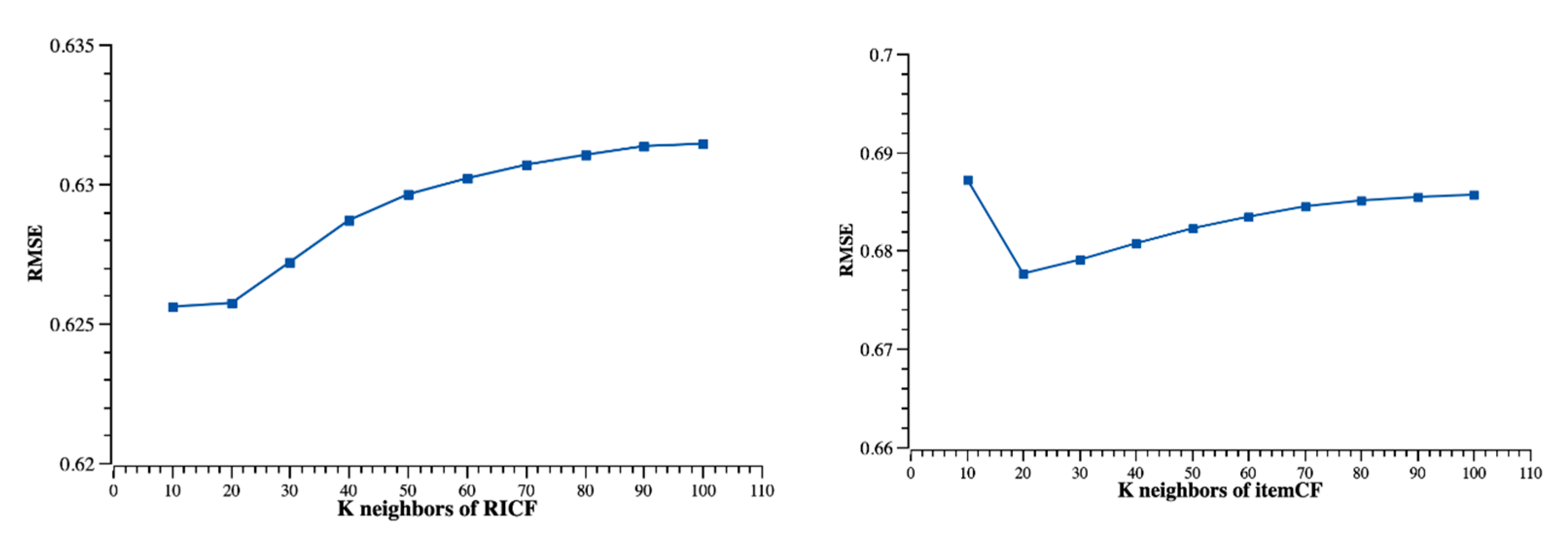

- The proposed RICF algorithm not only accounts for the evolution of user preferences but also learns more powerful item embeddings by fusing user, item, and rating information, which alleviates data sparsity and improves the accuracy of rating prediction. In addition, the embeddings trained by the model (with rating prediction as the optimization goal) help to reduce the number of similar neighbors needed in the collaborative filtering process.

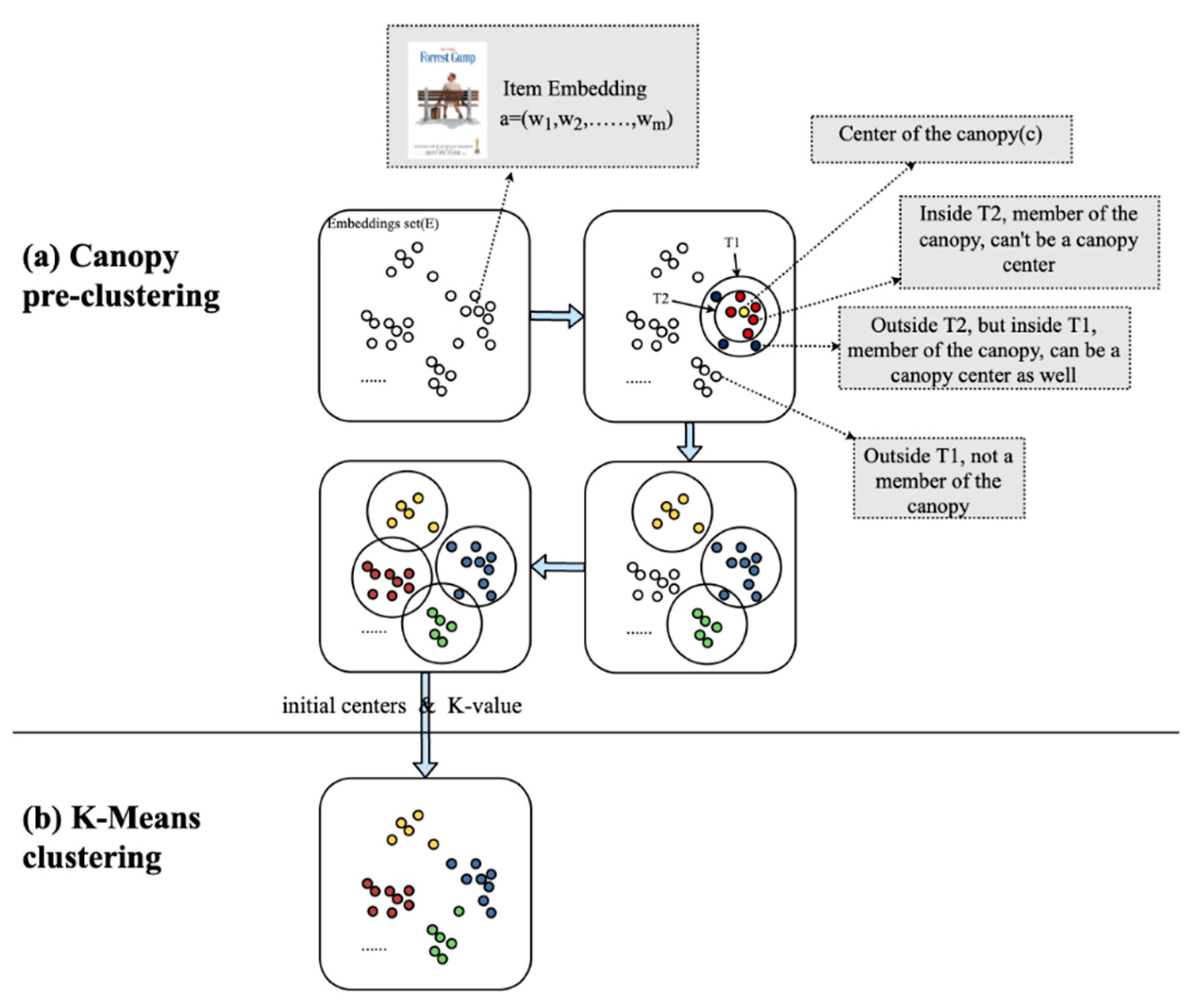

- Unlike previous related studies, this paper clusters the embeddings to obtain a model of preference points. RICF combines the Canopy and K-Means algorithms to counter the loss of clustering efficiency as the dataset size and the feature dimension grow.

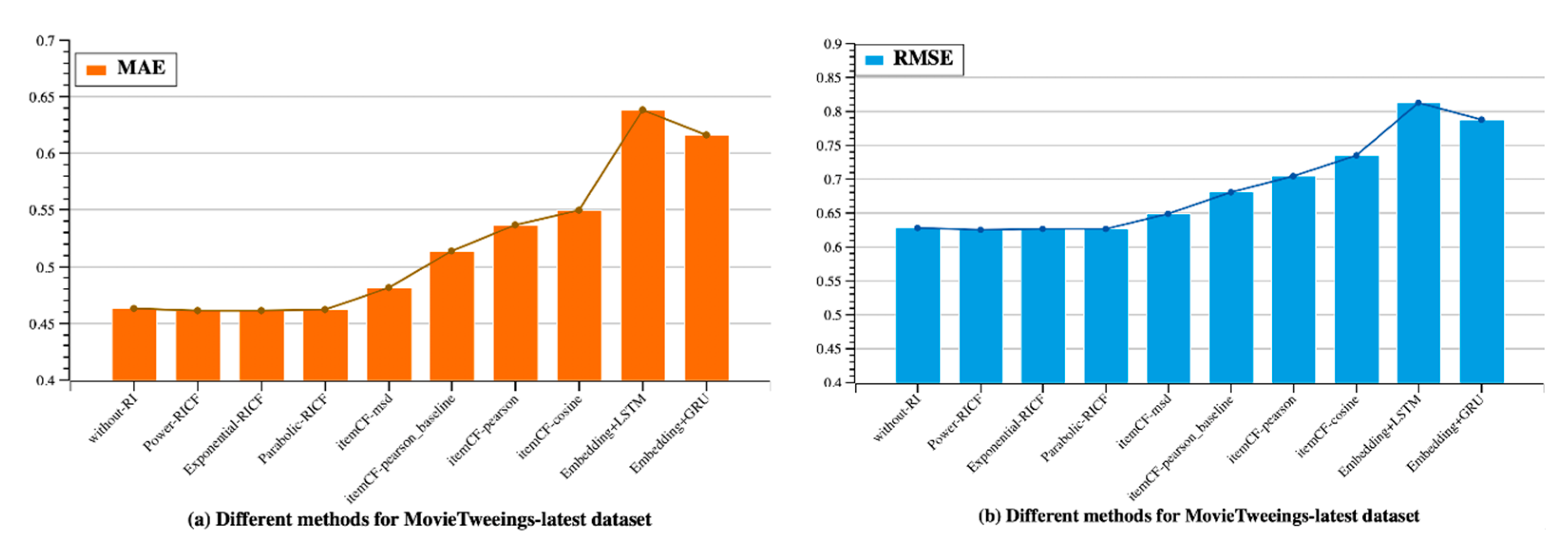

- To show the practicality of the proposed algorithm, this paper uses two real-world datasets with real timestamps: the live movie rating dataset collected from Twitter [13] and the digital music dataset collected from Amazon [14]. The experimental results show that RICF performs better and is more interpretable than both the traditional ItemCF and state-of-the-art sequential algorithms that focus on preference decay.

2. Related Work

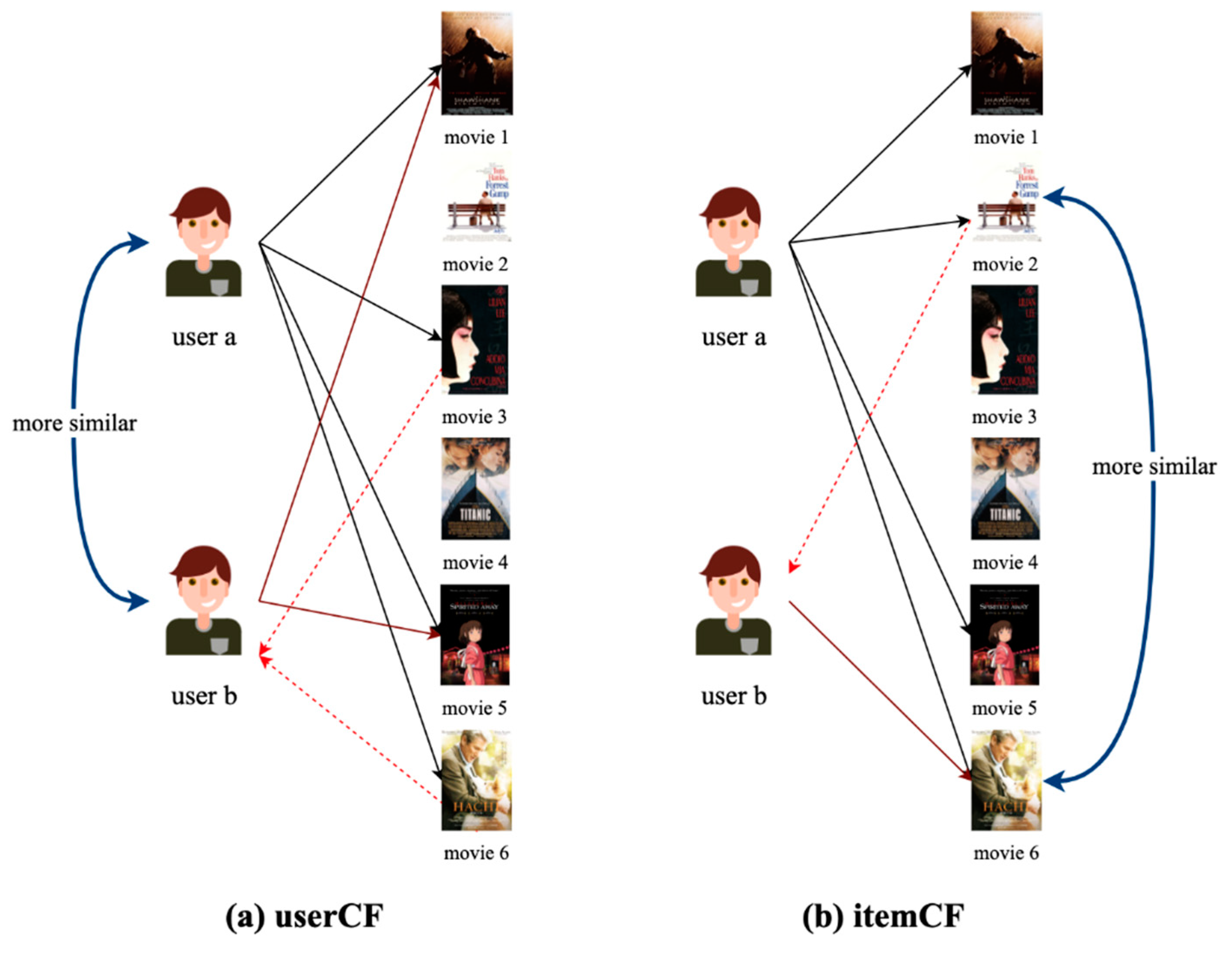

2.1. Collaborative Filtering Recommendation

2.2. Retroactive Inhibition Theory

3. Proposed Model: RICF

3.1. Preliminary

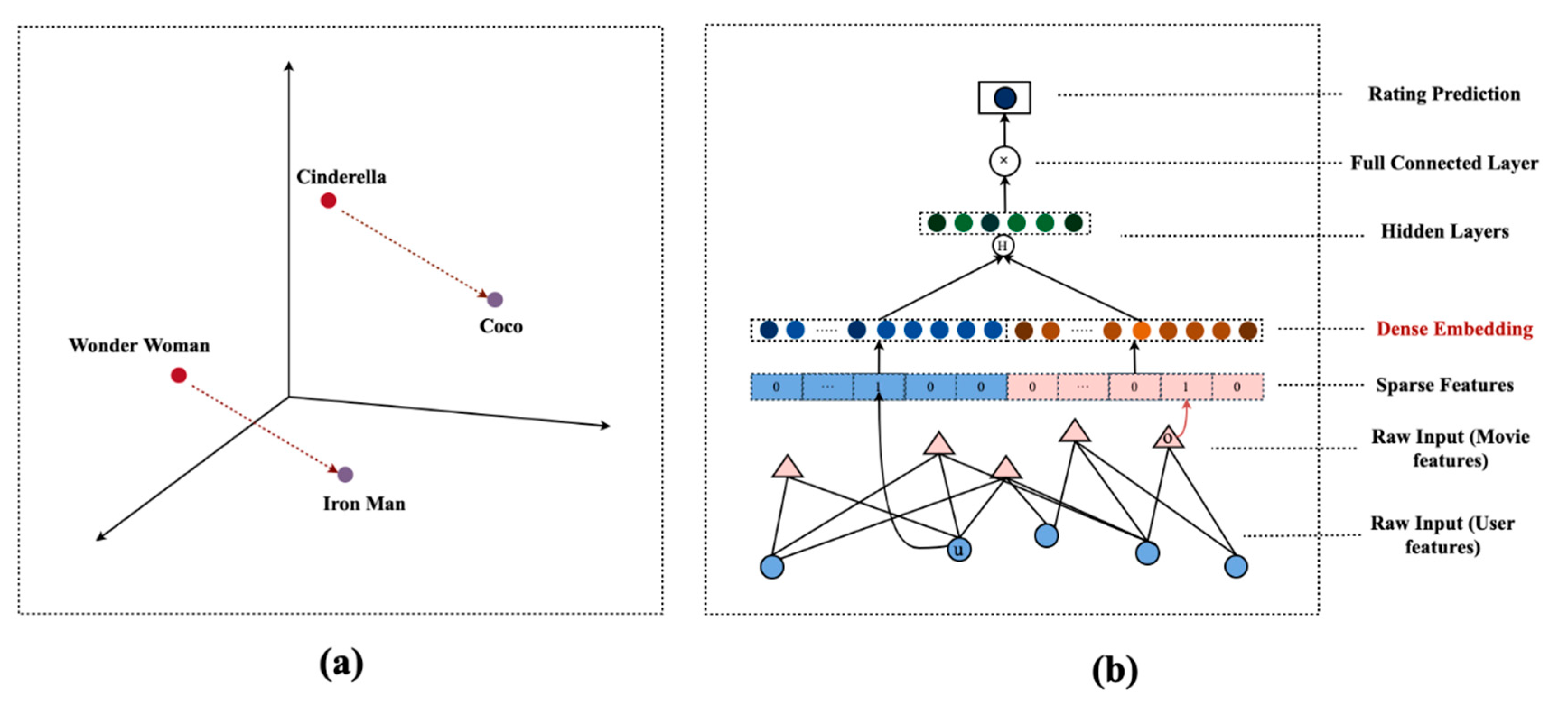

3.2. Embedding Training

3.3. Embedding Clustering

- (1) Canopy coarse clustering

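| Algorithm 1. Pseudocode for Canopy clustering algorithm for embeddings. | |
|---|---|
| 1: | Input: the set of item embeddings $E = \{e_1, e_2, \ldots, e_n\}$. |
| 2: | Output: the K-value and initial centroids $C = \{c_1, c_2, \ldots, c_K\}$ of cluster. |
| 3: | Initialize thresholds $T_1 > T_2$ |
| 4: | while $E \neq \emptyset$ do |
| 5: | Select sample $e_i$ from $E$ randomly |
| 6: | Initialize Canopy $\leftarrow \{e_i\}$ |
| 7: | Remove $e_i$ from $E$ |
| 8: | for remaining sample $e_j \in E$ do |
| 9: | compute $d = \mathrm{dist}(e_i, e_j)$ |
| 10: | if $d < T_1$ then |
| 11: | Canopy $\leftarrow$ Canopy $\cup \{e_j\}$ |
| 12: | end if |
| 13: | if $d < T_2$ then |
| 14: | remove $e_j$ from $E$ |
| 15: | end if |
| 16: | end for |
| 17: | end while |
| 18: | return K-value, initial centroids $C$ |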
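The Canopy pass in Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function name `canopy_centers`, the Euclidean distance, the loose and tight thresholds `t1 > t2`, and the choice of each canopy's mean as its centroid are all assumptions made for illustration.

```python
import math
import random


def canopy_centers(points, t1, t2, seed=0):
    """Coarse Canopy clustering (illustrative sketch, not the paper's code).

    points: list of equal-length tuples (e.g. item embeddings).
    t1, t2: loose and tight distance thresholds, with t1 > t2 > 0 (assumed).
    Returns one centroid per canopy; the number of centroids is the K-value.
    """
    if not t1 > t2 > 0:
        raise ValueError("expected t1 > t2 > 0")
    rng = random.Random(seed)
    remaining = list(points)
    centers = []
    while remaining:
        # Pick a random sample as the canopy seed and remove it from the pool.
        seed_point = remaining.pop(rng.randrange(len(remaining)))
        members = [seed_point]
        kept = []
        for p in remaining:
            d = math.dist(seed_point, p)
            if d < t1:
                members.append(p)   # loosely close: joins this canopy
            if d >= t2:
                kept.append(p)      # not tightly close: may seed or join others
        remaining = kept
        # Assumption: the canopy centroid is the mean of its members.
        centers.append(tuple(sum(col) / len(members) for col in zip(*members)))
    return centers


pts = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0), (5.1, 5.0), (5.0, 5.1)]
centers = canopy_centers(pts, t1=2.0, t2=1.0)
print(len(centers), sorted(centers))
```

On these two well-separated groups the sketch yields two canopies whatever the random seed, so the K-value would be 2. On real embeddings the result depends strongly on the thresholds, which would need tuning.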

- (2) K-Means clustering

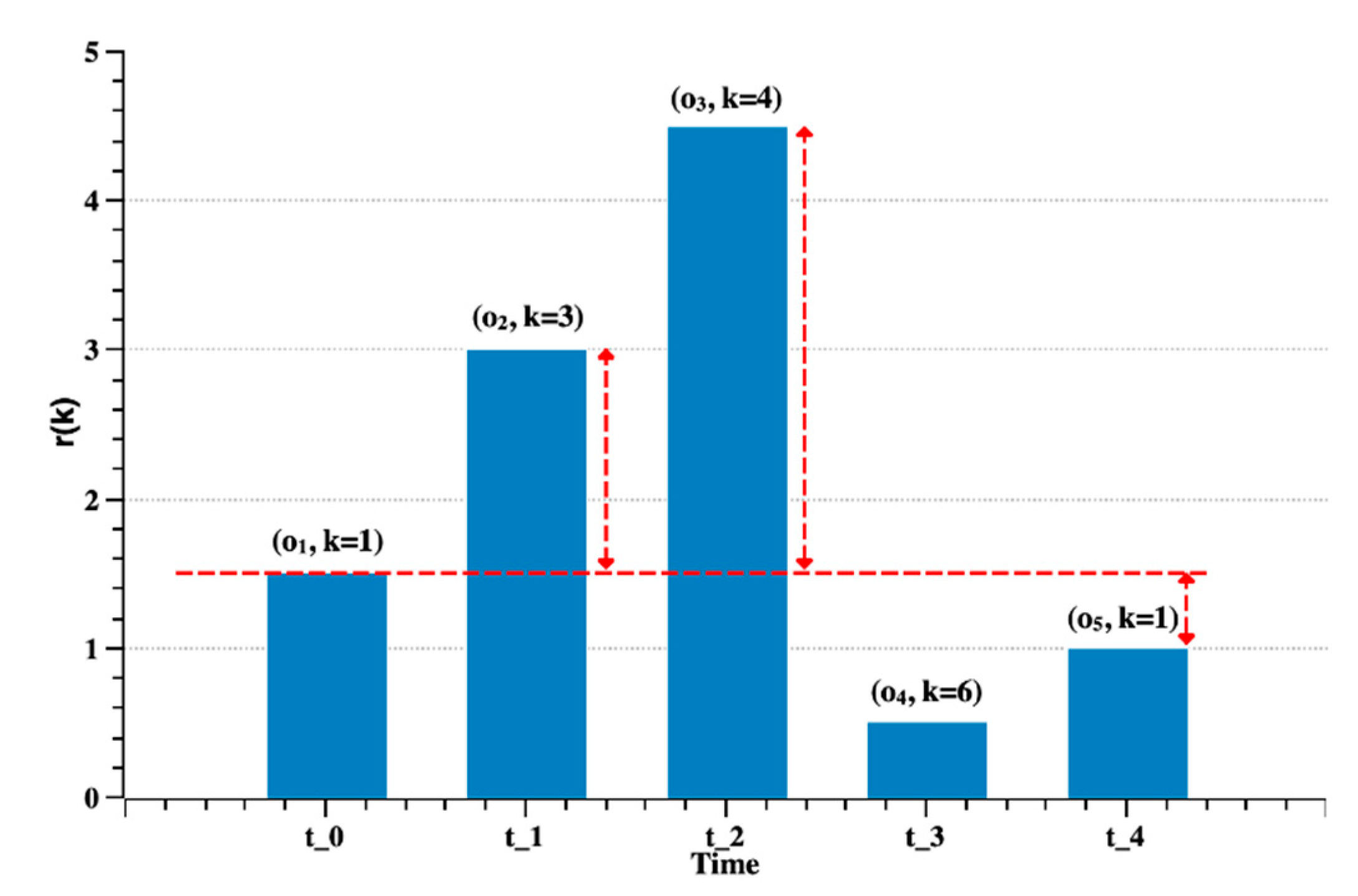
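A minimal sketch of the K-Means refinement step, started from given initial centroids (for example, those returned by a Canopy pass), might look as follows. The function name `kmeans`, the Euclidean distance, and the stopping rule are assumptions; this is plain Lloyd iteration, not necessarily the variant used in RICF.

```python
import math


def kmeans(points, init_centroids, max_iter=100):
    """Lloyd's K-Means from given initial centroids (illustrative sketch)."""
    centroids = [tuple(c) for c in init_centroids]
    labels = []
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its assigned points.
        updated = []
        for k, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                updated.append(tuple(sum(col) / len(members) for col in zip(*members)))
            else:
                updated.append(old)  # keep an empty cluster's centroid unchanged
        if updated == centroids:
            break  # converged: no centroid moved
        centroids = updated
    return centroids, labels


pts = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0), (5.1, 5.0), (5.0, 5.1)]
final_centroids, labels = kmeans(pts, init_centroids=[(0.0, 0.0), (5.0, 5.0)])
print(labels)
```

Seeding K-Means this way fixes K in advance and avoids random restarts, which is the usual motivation for pairing it with a cheap pre-clustering pass.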

3.4. Preference Retention Calculation

3.5. Preference Prediction

Definition 2 Embedding Similarity

4. Results and Discussion

4.1. Dataset and Experimental Setup

4.2. Evaluation Metrics
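The result tables report RMSE and MAE. Assuming the standard definitions of these two metrics (the root of the mean squared error and the mean of the absolute error between predicted and observed ratings), they can be computed as in the sketch below. The function names are illustrative.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error between two equal-length rating sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mae(actual, predicted):
    """Mean absolute error between two equal-length rating sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


observed = [3.0, 4.0]
estimated = [1.0, 4.0]
print(rmse(observed, estimated), mae(observed, estimated))
```

Because RMSE squares each error before averaging, it penalizes large individual errors more heavily than MAE does. Lower is better for both.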

4.3. Results

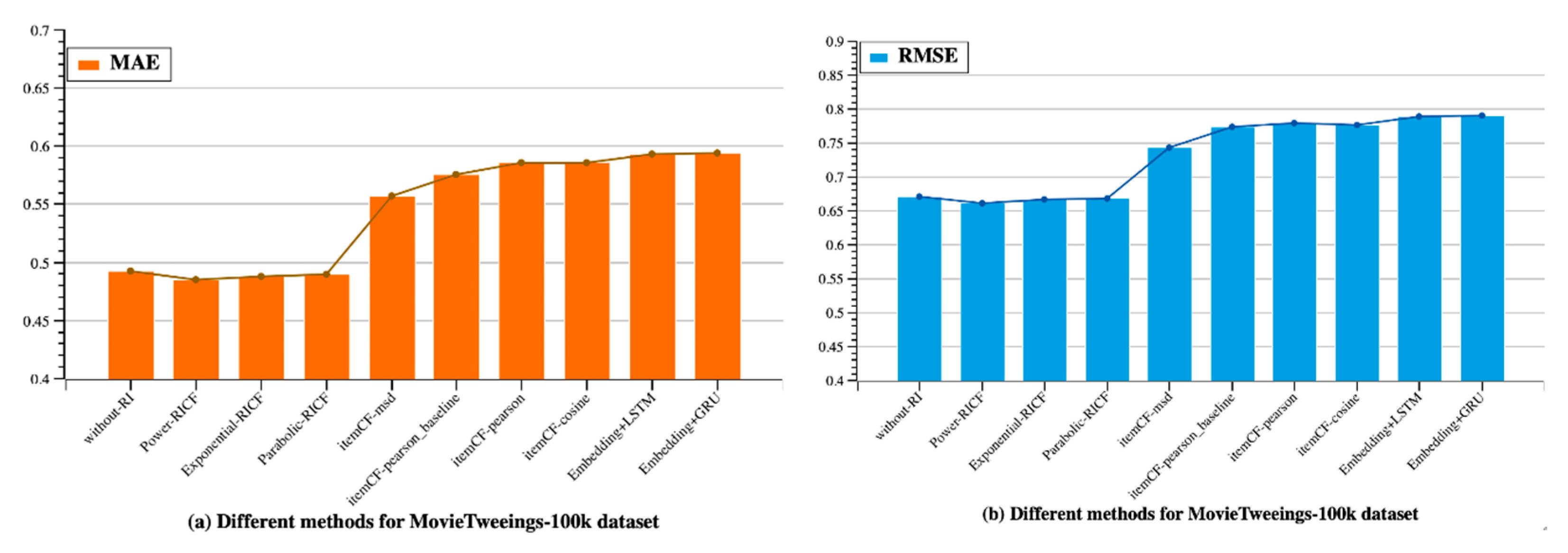

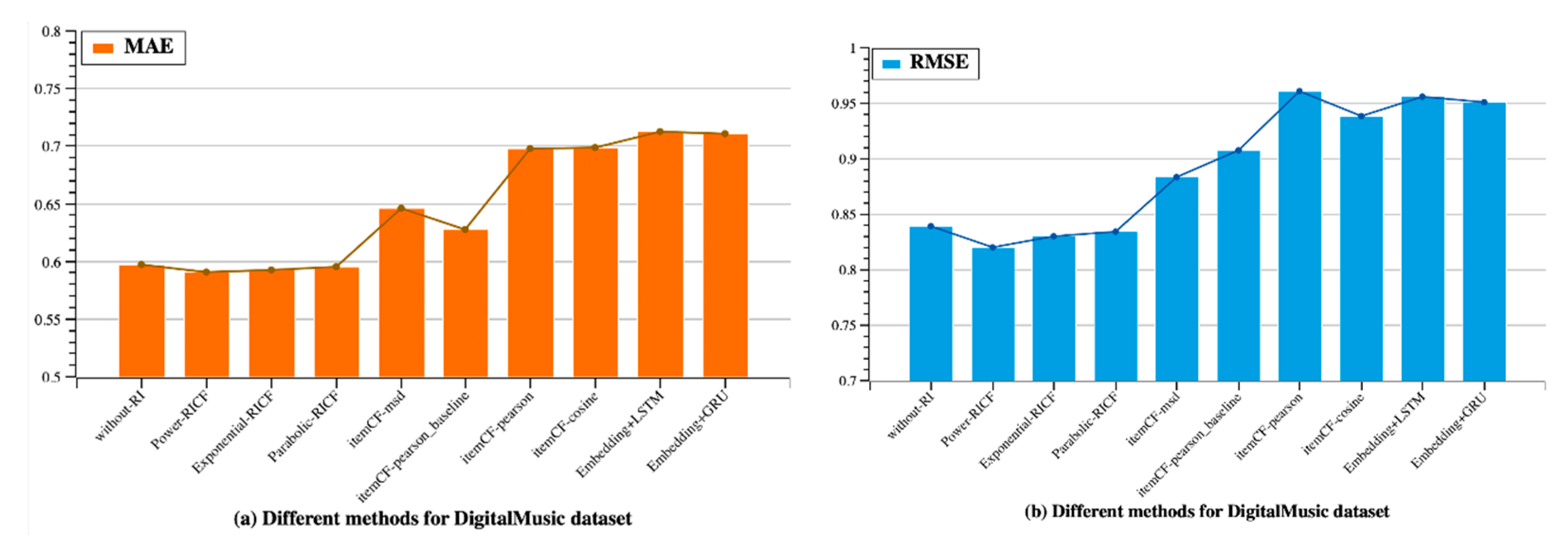

4.3.1. Recommendation Quality

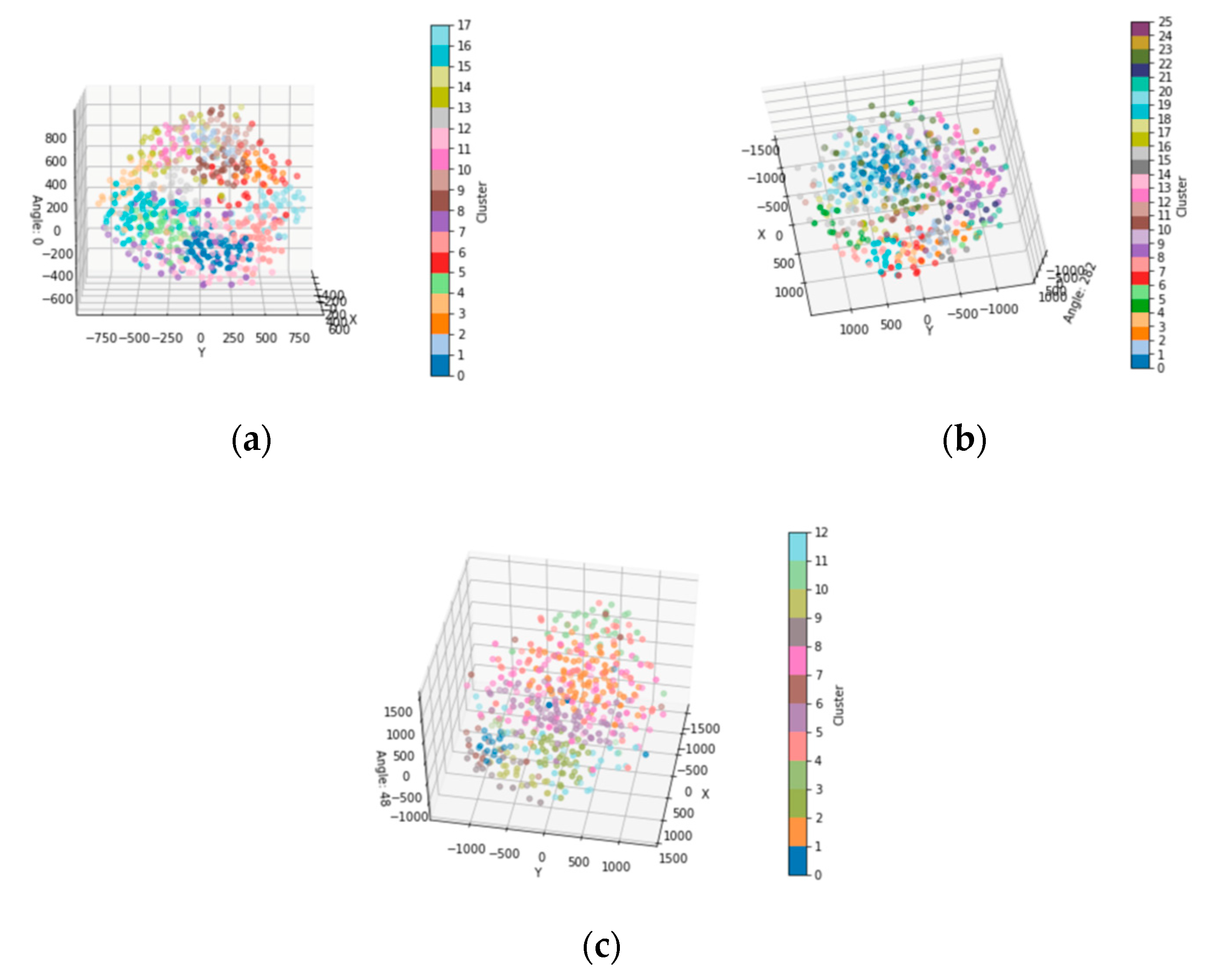

4.3.2. Embedding Clustering Visualization

4.3.3. The Stability of RICF

4.4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Ma, X.; Lu, H.; Gan, Z.; Zeng, J. An Explicit Trust and Distrust Clustering Based Collaborative Filtering Recommendation Approach. Electron. Commer. Res. Appl. 2017, 25, 29–39.

2. Wang, J.; de Vries, A.P.; Reinders, M.J.T. Unifying User-Based and Item-Based Collaborative Filtering Approaches by Similarity Fusion. In Proceedings of the 29th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval—SIGIR’06, Seattle, WA, USA, 6–11 August 2006; ACM Press: Seattle, WA, USA, 2006; p. 501.

3. Masicampo, E.J.; Ambady, N. Predicting Fluctuations in Widespread Interest: Memory Decay and Goal-Related Memory Accessibility in Internet Search Trends. J. Exp. Psychol. Gener. 2014, 143, 205.

4. Yin, F.; Su, P.; Li, S.; Ye, L. Step-Enhancement of Memory Retention for User Interest Prediction. IEEE Access 2020, 8, 110203–110213.

5. Jia, D.; Qu, Z.; Wang, X.; Li, F.; Zhang, L.; Yang, K. Interest Mining Model of Micro-Blog Users by Using Multi-Modal Semantics and Interest Decay Model. In Proceedings of the International Conference on Artificial Intelligence and Security, Hohhot, China, 17–20 July 2020; Springer: Singapore, 2020; pp. 478–489.

6. Lalwani, A.; Agrawal, S. What Does Time Tell? Tracing the Forgetting Curve Using Deep Knowledge Tracing. In Proceedings of the International Conference on Artificial Intelligence in Education, Chicago, IL, USA, 25–29 June 2019; Springer: Cham, Switzerland, 2019; pp. 158–162.

7. Chen, J.; Wang, C.; Wang, J. Modeling the Interest-Forgetting Curve for Music Recommendation. In Proceedings of the ACM International Conference on Multimedia—MM’14, Orlando, FL, USA, 3–7 November 2014; ACM Press: Orlando, FL, USA, 2014; pp. 921–924.

8. Li, T.; Jin, L.; Wu, Z.; Chen, Y. Combined Recommendation Algorithm Based on Improved Similarity and Forgetting Curve. Information 2019, 10, 130.

9. Yu, H.; Li, Z. A Collaborative Filtering Method Based on the Forgetting Curve. In Proceedings of the 2010 International Conference on Web Information Systems and Mining, Sanya, China, 23–24 October 2010; IEEE: Sanya, China, 2010; pp. 183–187.

10. Walker, M.P.; Brakefield, T.; Hobson, J.A.; Stickgold, R. Dissociable Stages of Human Memory Consolidation and Reconsolidation. Nature 2003, 425, 616–620.

11. Tempel, T.; Niederée, C.; Jilek, C.; Ceroni, A.; Maus, H.; Runge, Y.; Frings, C. Temporarily Unavailable: Memory Inhibition in Cognitive and Computer Science. Interact. Comput. 2019, 31, 231–249.

12. Raaijmakers, J.G.W. Inhibition in Memory. In Stevens’ Handbook of Experimental Psychology and Cognitive Neuroscience; Wixted, J.T., Ed.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2018; pp. 1–34. ISBN 978-1-119-17016-7.

13. Dooms, S.; De Pessemier, T.; Martens, L. Movietweetings: A Movie Rating Dataset Collected from Twitter. In Proceedings of the Workshop on Crowdsourcing and Human Computation for Recommender Systems, Hong Kong, China, 12–16 October 2013; Volume 2013, p. 43.

14. McAuley, J.; Targett, C.; Shi, Q.; van den Hengel, A. Image-Based Recommendations on Styles and Substitutes. In Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval—SIGIR’15, Santiago, Chile, 9–13 August 2015; ACM Press: Santiago, Chile, 2015; pp. 43–52.

15. Rich, E. User Modeling via Stereotypes. Cognit. Sci. 1979, 3, 329–354.

16. Salton, G. Automatic Text Processing: The Transformation, Analysis, and Retrieval of Information by Computer; Addison-Wesley: Boston, MA, USA, 1989; Volume 169.

17. Murthi, B.P.S.; Sarkar, S. The Role of the Management Sciences in Research on Personalization. Manag. Sci. 2003, 49, 1344–1362.

18. Lilien, G.L.; Kotler, P.; Moorthy, K.S. Marketing Models; Prentice Hall: Upper Saddle River, NJ, USA, 1995.

19. Adomavicius, G.; Tuzhilin, A. Toward the next Generation of Recommender Systems: A Survey of the State-of-the-Art and Possible Extensions. IEEE Trans. Knowl. Data Eng. 2005, 17, 734–749.

20. Breese, J.S.; Heckerman, D.; Kadie, C. Empirical Analysis of Predictive Algorithms for Collaborative Filtering. arXiv 2013, arXiv:1301.7363.

21. Tay, Y.; Anh Tuan, L.; Hui, S.C. Latent Relational Metric Learning via Memory-Based Attention for Collaborative Ranking. In Proceedings of the 2018 World Wide Web Conference, Lyon, France, 23–27 April 2018; pp. 729–739.

22. Yu, K.; Schwaighofer, A.; Tresp, V.; Xu, X.; Kriegel, H.-P. Probabilistic Memory-Based Collaborative Filtering. IEEE Trans. Knowl. Data Eng. 2004, 16, 56–69.

23. Deshpande, M.; Karypis, G. Item-Based Top-n Recommendation Algorithms. ACM Trans. Inf. Syst. (TOIS) 2004, 22, 143–177.

24. Sarwar, B.; Karypis, G.; Konstan, J.; Riedl, J. Item-Based Collaborative Filtering Recommendation Algorithms. In Proceedings of the Tenth International Conference on World Wide Web—WWW’01, Hong Kong, China, 1–5 May 2001; ACM Press: Hong Kong, China, 2001; pp. 285–295.

25. Aggarwal, C.C. Model-Based Collaborative Filtering. In Recommender Systems; Springer: Cham, Switzerland, 2016; pp. 71–138.

26. Shi, Y.; Larson, M.; Hanjalic, A. List-Wise Learning to Rank with Matrix Factorization for Collaborative Filtering. In Proceedings of the Fourth ACM Conference on Recommender Systems, Barcelona, Spain, 26–30 September 2010; pp. 269–272.

27. Lian, J.; Zhang, F.; Xie, X.; Sun, G. CCCFNet: A Content-Boosted Collaborative Filtering Neural Network for Cross Domain Recommender Systems. In Proceedings of the 26th International Conference on World Wide Web Companion, Perth, Australia, 3–7 April 2017; pp. 817–818.

28. Shi, C.; Hu, B.; Zhao, W.X.; Yu, P.S. Heterogeneous Information Network Embedding for Recommendation. IEEE Trans. Knowl. Data Eng. 2018, 31, 357–370.

29. Cai, X.; Han, J.; Yang, L. Generative Adversarial Network Based Heterogeneous Bibliographic Network Representation for Personalized Citation Recommendation. In Proceedings of the AAAI, New Orleans, LA, USA, 2–7 February 2018.

30. Wang, X.; He, X.; Cao, Y.; Liu, M.; Chua, T.-S. KGAT: Knowledge Graph Attention Network for Recommendation. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 3–7 August 2019; pp. 950–958.

31. Khoshneshin, M.; Street, W.N. Collaborative Filtering via Euclidean Embedding. In Proceedings of the Fourth ACM Conference on Recommender Systems—RecSys’10, Barcelona, Spain, 26–30 September 2010; ACM Press: Barcelona, Spain, 2010; p. 87.

32. Aggarwal, C.C. Recommender Systems; Springer: Cham, Switzerland, 2016; Volume 1.

33. Da Costa, A.F.; Manzato, M.G.; Campello, R.J. Group-Based Collaborative Filtering Supported by Multiple Users’ Feedback to Improve Personalized Ranking. In Proceedings of the 22nd Brazilian Symposium on Multimedia and the Web, Teresina, Brazil, 8–11 November 2016; pp. 279–286.

34. Najafabadi, M.K.; Mahrin, M.N.; Chuprat, S.; Sarkan, H.M. Improving the Accuracy of Collaborative Filtering Recommendations Using Clustering and Association Rules Mining on Implicit Data. Comput. Hum. Behav. 2017, 67, 113–128.

35. Li, Q.; Kim, B.M. Clustering Approach for Hybrid Recommender System. In Proceedings of the IEEE/WIC International Conference on Web Intelligence (WI 2003), Halifax, NS, Canada, 13–17 October 2003; pp. 33–38.

36. Zhang, W.; Du, Y.; Yoshida, T.; Yang, Y. DeepRec: A Deep Neural Network Approach to Recommendation with Item Embedding and Weighted Loss Function. Inf. Sci. 2019, 470, 121–140.

37. Barkan, O.; Koenigstein, N. ITEM2VEC: Neural Item Embedding for Collaborative Filtering. In Proceedings of the 2016 IEEE 26th International Workshop on Machine Learning for Signal Processing (MLSP), Vietri sul Mare, Italy, 13–16 September 2016; IEEE: Vietri sul Mare, Italy, 2016; pp. 1–6.

38. Chen, Y.-C.; Hui, L.; Thaipisutikul, T. A Collaborative Filtering Recommendation System with Dynamic Time Decay. J. Supercomput. 2020.

39. Gui, Y.; Tian, X. A Personalized Recommendation Algorithm Considering Recent Changes in Users’ Interests. In Proceedings of the 2nd International Conference on Big Data Research—ICBDR 2018, Weihai, China, 27–29 October 2018; ACM Press: Weihai, China, 2018; pp. 127–132.

40. Wang, S.; Hu, L.; Wang, Y.; Cao, L.; Sheng, Q.Z.; Orgun, M. Sequential Recommender Systems: Challenges, Progress and Prospects. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; International Joint Conferences on Artificial Intelligence Organization: Macao, China, 2019; pp. 6332–6338.

41. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.-Y.; Wong, W.-K.; Woo, W. Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 802–810.

42. Wu, C.-Y.; Ahmed, A.; Beutel, A.; Smola, A.J.; Jing, H. Recurrent Recommender Networks. In Proceedings of the Tenth ACM International Conference on Web Search and Data Mining, Cambridge, UK, 6–10 February 2017; pp. 495–503.

43. Zhang, Z.; Robinson, D.; Tepper, J. Detecting Hate Speech on Twitter Using a Convolution-GRU Based Deep Neural Network. In Proceedings of the European Semantic Web Conference, Anissaras, Greece, 3–7 June 2018; Springer: Anissaras, Greece, 2018; pp. 745–760.

44. Underwood, B.J. Interference and Forgetting. Psychol. Rev. 1957, 64, 49.

45. Alves, M.V.C.; Bueno, O.F.A. Retroactive Interference: Forgetting as an Interruption of Memory Consolidation. Temas Psicol. 2017, 25, 1055–1067.

46. Melton, A.W.; von Lackum, W.J. Retroactive and Proactive Inhibition in Retention: Evidence for a Two-Factor Theory of Retroactive Inhibition. Am. J. Psychol. 1941, 54, 157.

47. Řehůřek, R.; Sojka, P. Gensim—Statistical Semantics in Python. 2011. Available online: https://radimrehurek.com/gensim/ (accessed on 19 June 2020).

48. McCallum, A.; Nigam, K.; Ungar, L.H. Efficient Clustering of High-Dimensional Data Sets with Application to Reference Matching. In Proceedings of the Sixth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Boston, MA, USA, 20–23 August 2000; pp. 169–178.

49. Zhang, G.; Zhang, C.; Zhang, H. Improved K-Means Algorithm Based on Density Canopy. Knowl. Based Syst. 2018, 145, 289–297.

50. Kumar, A.; Ingle, Y.S.; Pande, A. Canopy Clustering: A Review on Pre-Clustering Approach to K-Means Clustering. Int. J. Innov. Adv. Comput. Sci. 2014, 3, 22–29.

51. Jain, A.K. Data Clustering: 50 Years beyond K-Means. Pattern Recognit. Lett. 2010, 31, 651–666.

52. Alsabti, K.; Ranka, S.; Singh, V. An Efficient K-Means Clustering Algorithm. Electr. Eng. Comput. Sci. 1997, 43, 1–7.

53. Harper, F.M.; Konstan, J.A. The Movielens Datasets: History and Context. ACM Trans. Interac. Intell. Syst. 2015, 5, 1–19.

54. van der Maaten, L.; Hinton, G. Visualizing Data Using T-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

55. Candia, C.; Jara-Figueroa, C.; Rodriguez-Sickert, C.; Barabási, A.-L.; Hidalgo, C.A. The Universal Decay of Collective Memory and Attention. Nat. Hum. Behav. 2019, 3, 82–91.

| User | Movie | Rating | Time | Preference Cluster | Similarity to Movie 4 |
|---|---|---|---|---|---|
| | 1 | 2.5 | 05 April 2018 | C1 | 0.9 |
| | 3 | 4.0 | 11 May 2018 | C2 | 0.8 |
| | 4 | 3.5 | 05 April 2018 | C3 | 1.0 |
| | 1 | 2.0 | 01 June 2018 | C1 | 0.9 |
| | 2 | 2.5 | 10 October 2018 | C4 | 0.7 |
| | 3 | 3.0 | 15 October 2018 | C2 | 0.8 |
| | 4 | ? | 11 December 2018 | C3 | 1.0 |
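To make the example table concrete, the sketch below estimates the missing rating for Movie 4 as a similarity-weighted average of the user's earlier ratings, with each rating down-weighted by how many later ratings fell in a different preference cluster. This is only an illustration of the retroactive-inhibition idea, not the paper's formula: the interference count, the power-law retention `(1 + n) ** (-b)`, the exponent `b`, and the function names are all assumptions.

```python
from datetime import date

# (movie, rating, date, preference cluster, similarity to Movie 4), copied from the table.
HISTORY = [
    (1, 2.5, date(2018, 4, 5), "C1", 0.9),
    (3, 4.0, date(2018, 5, 11), "C2", 0.8),
    (4, 3.5, date(2018, 4, 5), "C3", 1.0),
    (1, 2.0, date(2018, 6, 1), "C1", 0.9),
    (2, 2.5, date(2018, 10, 10), "C4", 0.7),
    (3, 3.0, date(2018, 10, 15), "C2", 0.8),
]


def interference_counts(history):
    """For each rating, count strictly later ratings in a different cluster."""
    counts = []
    for _, _, when, cluster, _ in history:
        counts.append(
            sum(1 for _, _, later, other, _ in history
                if later > when and other != cluster)
        )
    return counts


def predict(history, b=0.5):
    """Similarity-weighted average with a hypothetical power-law retention."""
    numerator = denominator = 0.0
    for (_, rating, _, _, sim), n in zip(history, interference_counts(history)):
        weight = sim * (1 + n) ** (-b)  # assumed retention; b = 0 disables decay
        numerator += weight * rating
        denominator += weight
    return numerator / denominator


print(interference_counts(HISTORY))
print(round(predict(HISTORY, b=0.0), 4), round(predict(HISTORY), 4))
```

With `b = 0` the estimate reduces to the plain similarity-weighted average of the six ratings. A positive `b` shifts weight toward ratings that were followed by fewer interfering ratings in other clusters.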

| Statistic | MovieTweetings-100k | MovieTweetings-Latest | DigitalMusic |
|---|---|---|---|
| Number of users | 16,554 | 68,332 | 478,235 |
| Number of items | 10,506 | 35,931 | 266,414 |
| Number of ratings | 100,000 | 876,673 | 836,006 |
| Rating range | 1–10 | 1–10 | 1–5 |
| Time range | 28/02/2013 to 01/09/2013 | 28/02/2013 to 10/07/2020 | 20/01/1998 to 23/07/2014 |

| Method | RMSE | MAE |
|---|---|---|
| without-RI | 0.6272 | 0.4624 |
| Power-RICF | 0.6256 | 0.4609 |
| Exponential-RICF | 0.6260 | 0.4614 |
| Parabolic-RICF | 0.6261 | 0.4616 |
| ItemCF | 0.6809 | 0.5134 |
| Embedding+LSTM | 0.8121 | 0.6385 |
| Embedding+GRU | 0.7870 | 0.6158 |

| Method | RMSE | MAE |
|---|---|---|
| without-RI | 0.6711 | 0.4919 |
| Power-RICF | 0.6606 | 0.4850 |
| Exponential-RICF | 0.6663 | 0.4877 |
| Parabolic-RICF | 0.6680 | 0.4895 |
| ItemCF | 0.7741 | 0.5752 |
| Embedding+LSTM | 0.7892 | 0.5933 |
| Embedding+GRU | 0.7908 | 0.5935 |

| Method | RMSE | MAE |
|---|---|---|
| without-RI | 0.8389 | 0.5971 |
| Power-RICF | 0.8201 | 0.5908 |
| Exponential-RICF | 0.8298 | 0.5923 |
| Parabolic-RICF | 0.8339 | 0.5949 |
| ItemCF | 0.9079 | 0.6277 |
| Embedding+LSTM | 0.9557 | 0.7126 |
| Embedding+GRU | 0.9508 | 0.7106 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, N.; Chen, L.; Yuan, Y. An Improved Collaborative Filtering Recommendation Algorithm Based on Retroactive Inhibition Theory. Appl. Sci. 2021, 11, 843. https://doi.org/10.3390/app11020843
