A Multi-Resolution Approach to GAN-Based Speech Enhancement

Abstract

1. Introduction

- We propose a progressive generator to reflect the multi-resolution characteristics of speech.

- We propose a multi-scale discriminator to stabilize the GAN training without additional complex training techniques.

- Experimental results show that the multi-scale structure is effective for both deterministic and GAN-based models, and that it outperforms conventional GAN-based speech enhancement techniques.
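To make the multi-resolution idea in the contributions above concrete, the following sketch builds progressively lower-rate views of a waveform, which is one plausible way to present a signal at several sampling rates to a multi-scale discriminator or to supervise a progressive generator at intermediate rates. The function names, the use of pairwise average pooling, and the number of scales are assumptions made for illustration; they are not taken from the authors' implementation.

```python
from typing import List


def downsample_by_two(signal: List[float]) -> List[float]:
    """Halve the sampling rate by averaging adjacent sample pairs.

    Average pooling is an assumed stand-in for the model's actual
    down-sampling; a trailing unpaired sample is dropped.
    """
    return [(signal[i] + signal[i + 1]) / 2.0 for i in range(0, len(signal) - 1, 2)]


def multi_resolution_views(signal: List[float], num_scales: int) -> List[List[float]]:
    """Return [full-rate, 1/2-rate, 1/4-rate, ...] views of the signal."""
    if num_scales < 1:
        raise ValueError("num_scales must be at least 1")
    views = [list(signal)]
    for _ in range(num_scales - 1):
        views.append(downsample_by_two(views[-1]))
    return views


# Hypothetical usage: three views of a short toy waveform.
toy_wave = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0, 12.0, 14.0]
for view in multi_resolution_views(toy_wave, num_scales=3):
    print(len(view), view)
```

Each view has half the length of the previous one, so a discriminator attached to a coarser view sees a longer time span per sample at a lower bandwidth.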

2. GAN-Based Speech Enhancement

3. Multi-Resolution Approach for Speech Enhancement

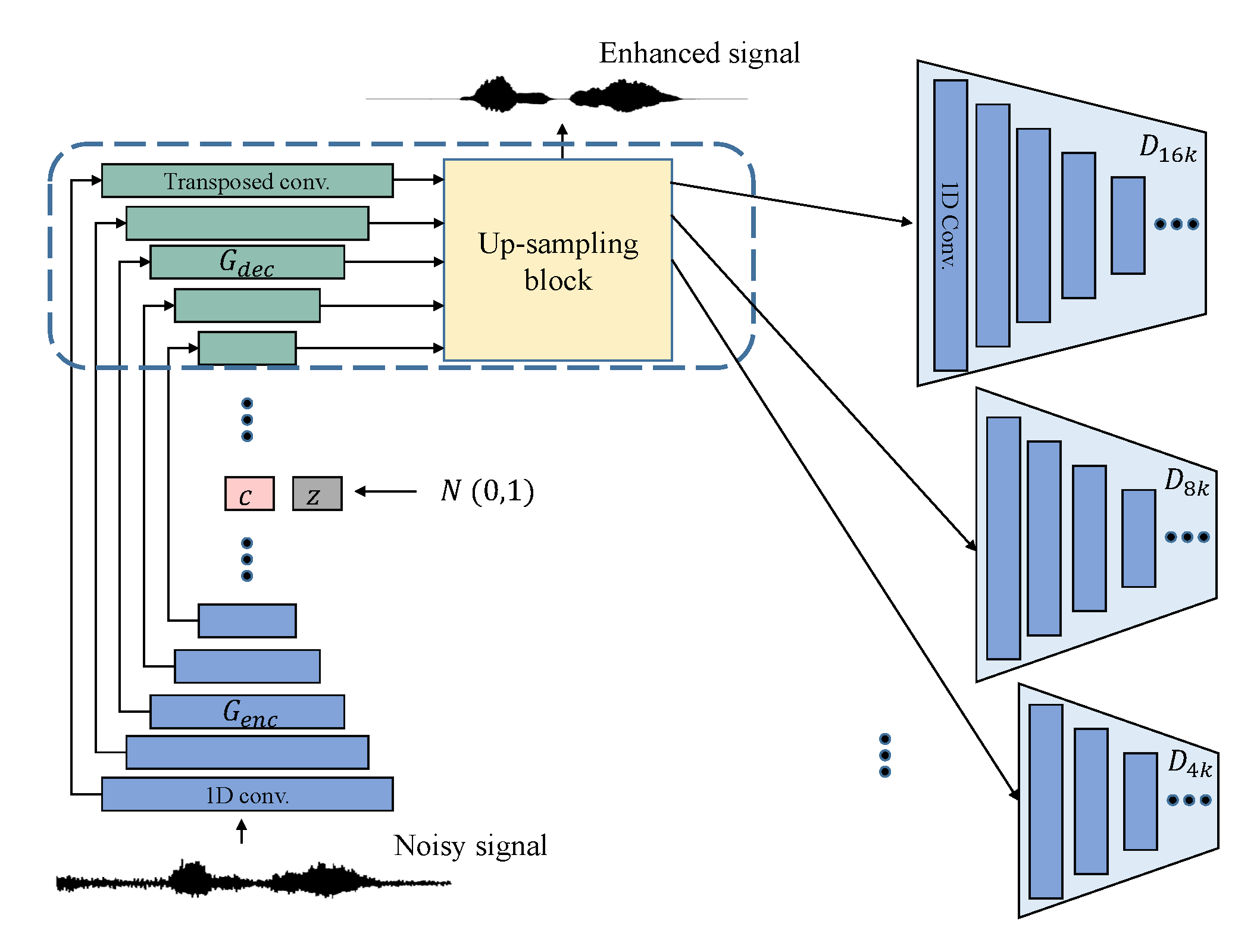

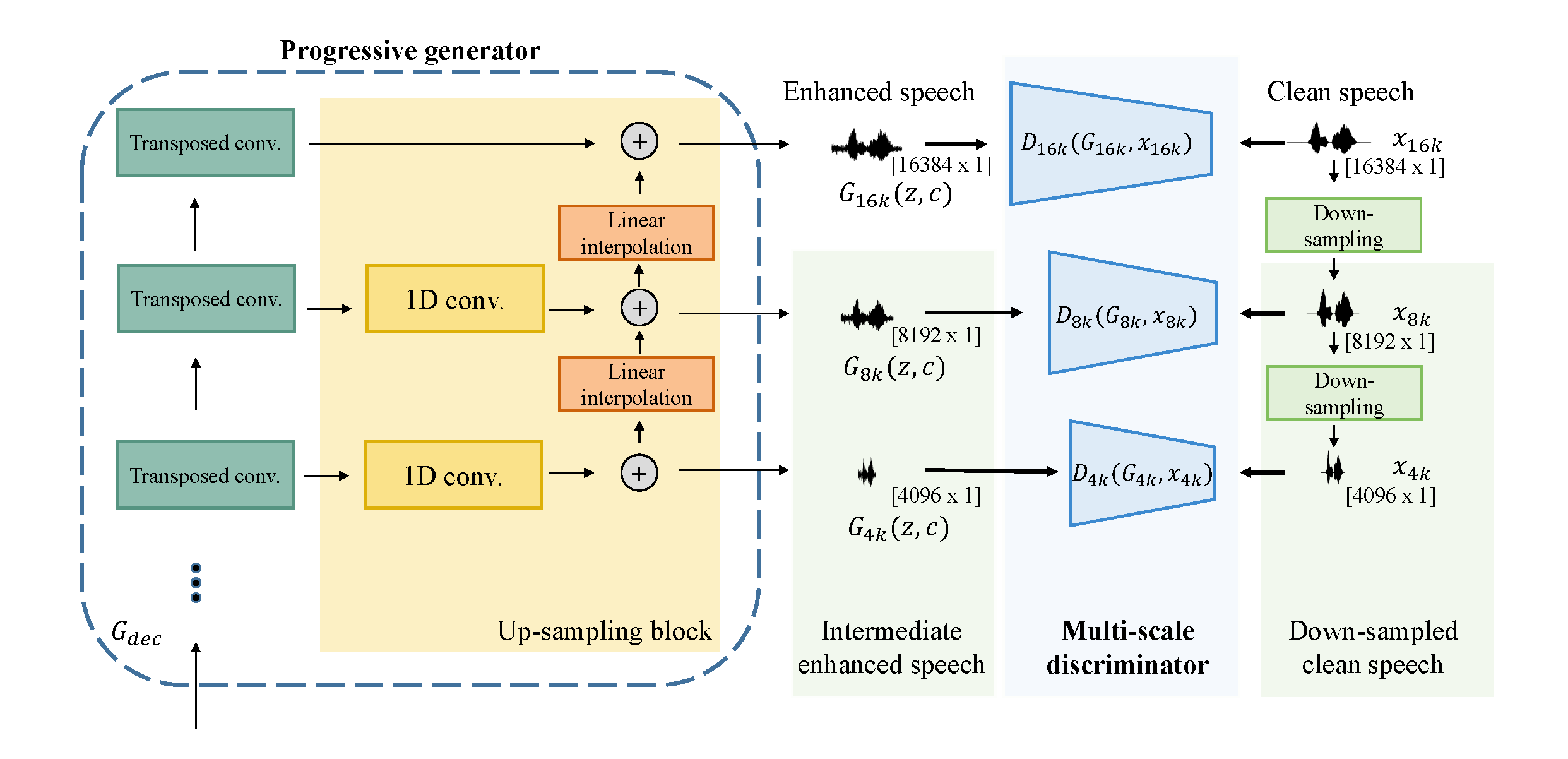

3.1. Progressive Generator

3.2. Multi-Scale Discriminator

4. Experimental Settings

4.1. Dataset

4.2. Network Structure

4.3. Evaluation Methods

4.3.1. Objective Evaluation

- PESQ: Perceptual evaluation of speech quality defined in the ITU-T P.862 standard [19] (from −0.5 to 4.5),

- STOI: Short-time objective intelligibility [20] (from 0 to 1),

- CSIG: Mean opinion score (MOS) prediction of the signal distortion attending only to the speech signal [39] (from 1 to 5),

- CBAK: MOS prediction of the intrusiveness of background noise [39] (from 1 to 5),

- COVL: MOS prediction of the overall effect [39] (from 1 to 5).
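The score ranges listed above can double as a cheap sanity check when collecting evaluation results. The sketch below stores the ranges in a dictionary and reports any score that falls outside the range of its metric. `METRIC_RANGES` and `out_of_range` are hypothetical helper names, and the scores are assumed to come from an external implementation of each metric.

```python
from typing import Dict, List, Tuple

# Inclusive score ranges as stated in the metric list.
METRIC_RANGES: Dict[str, Tuple[float, float]] = {
    "PESQ": (-0.5, 4.5),
    "STOI": (0.0, 1.0),
    "CSIG": (1.0, 5.0),
    "CBAK": (1.0, 5.0),
    "COVL": (1.0, 5.0),
}


def out_of_range(scores: Dict[str, float]) -> List[str]:
    """Return the sorted names of metrics whose score violates its range.

    Raises KeyError for a metric name that is not in METRIC_RANGES.
    """
    violations = []
    for name, value in scores.items():
        low, high = METRIC_RANGES[name]
        if not (low <= value <= high):
            violations.append(name)
    return sorted(violations)


# Hypothetical usage with one plausible and one implausible result set.
print(out_of_range({"PESQ": 2.71, "STOI": 0.94}))
print(out_of_range({"PESQ": 4.8, "CSIG": 0.5, "COVL": 3.3}))
```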

4.3.2. Subjective Evaluation

5. Experiments and Results

5.1. Performance of Progressive Generator

5.1.1. Objective Results

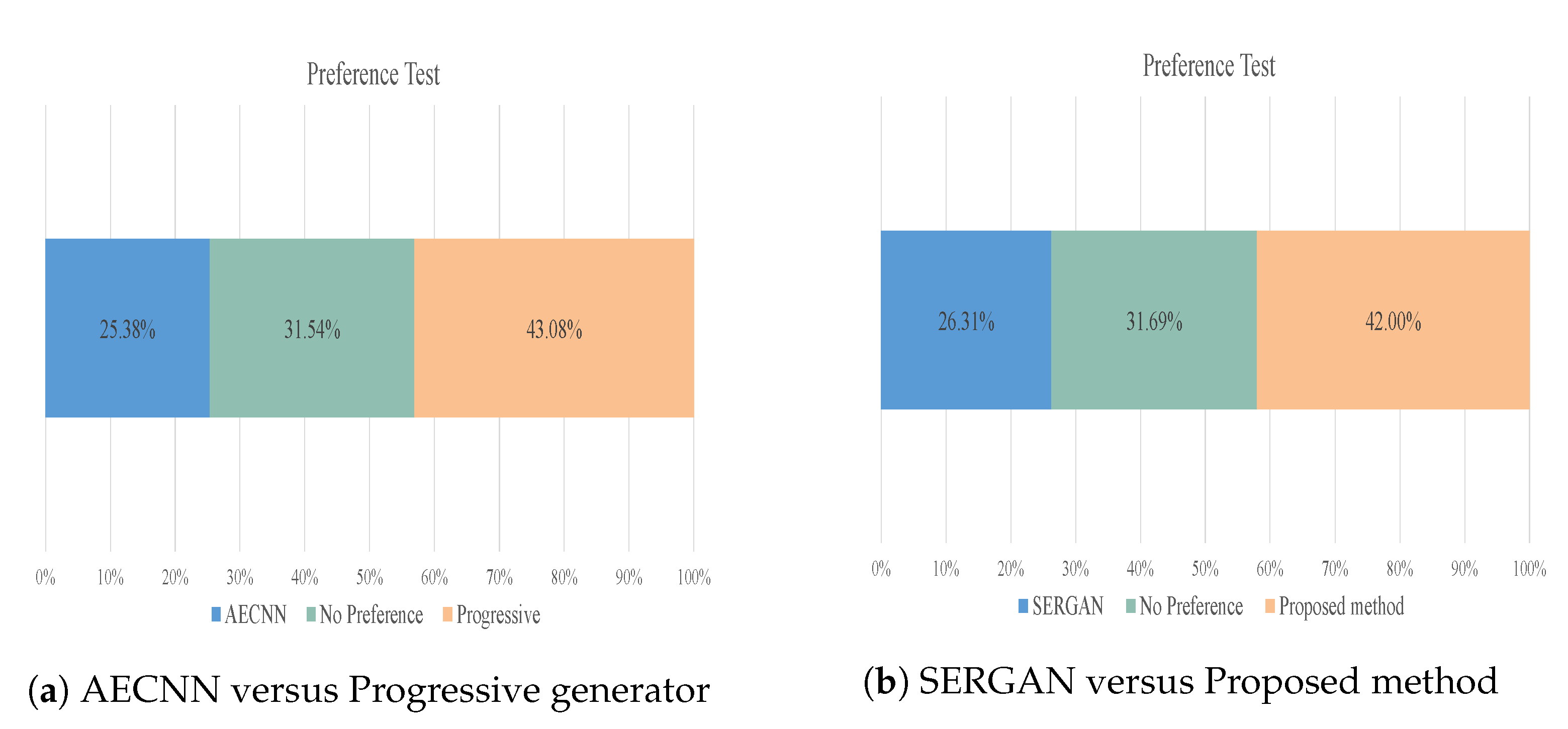

5.1.2. Subjective Results

5.2. Performance of Multi-Scale Discriminator

5.2.1. Objective Results

5.2.2. Subjective Results

5.2.3. Real-Time Feasibility

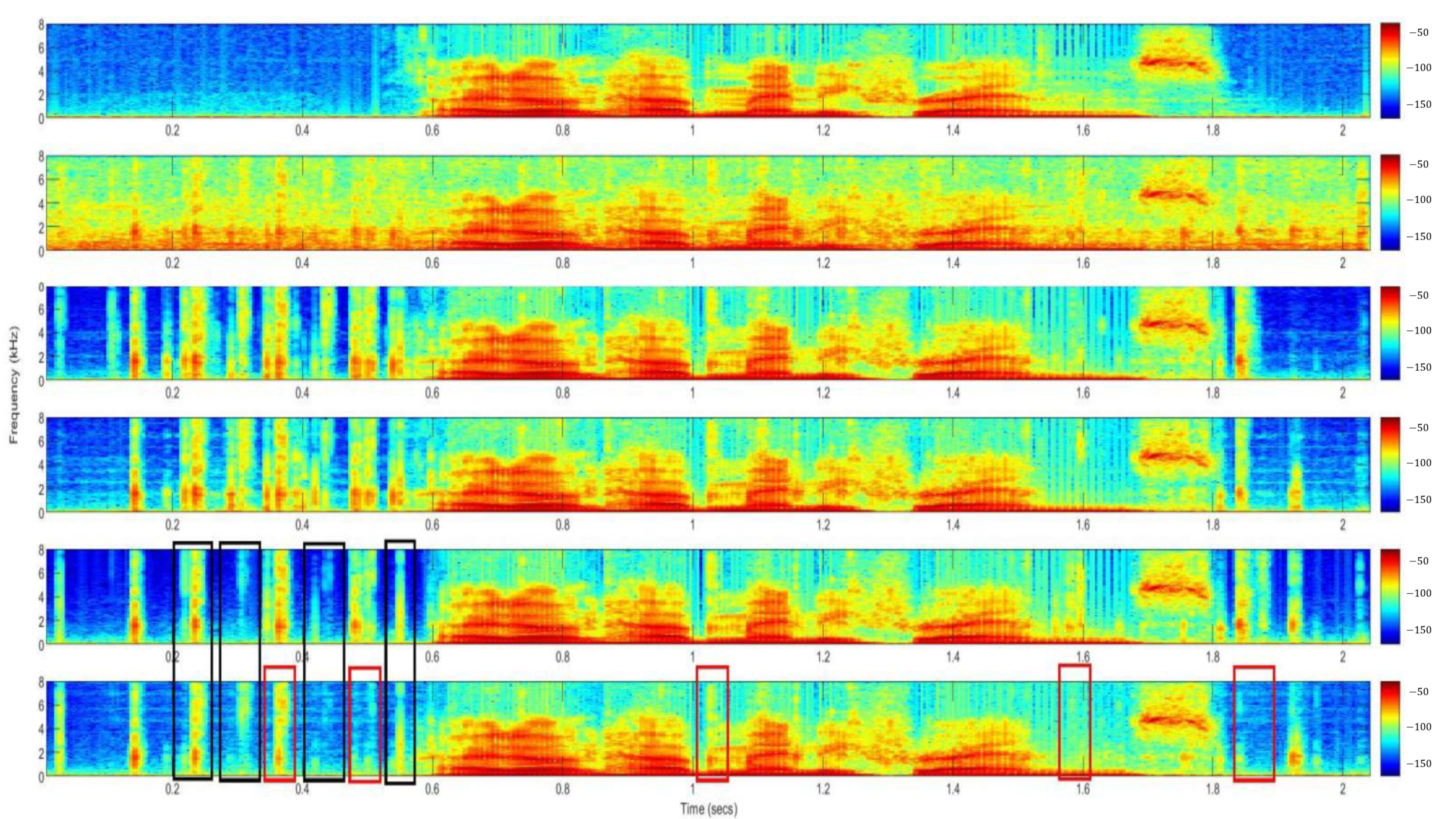

5.3. Analysis and Comparison of Spectrograms

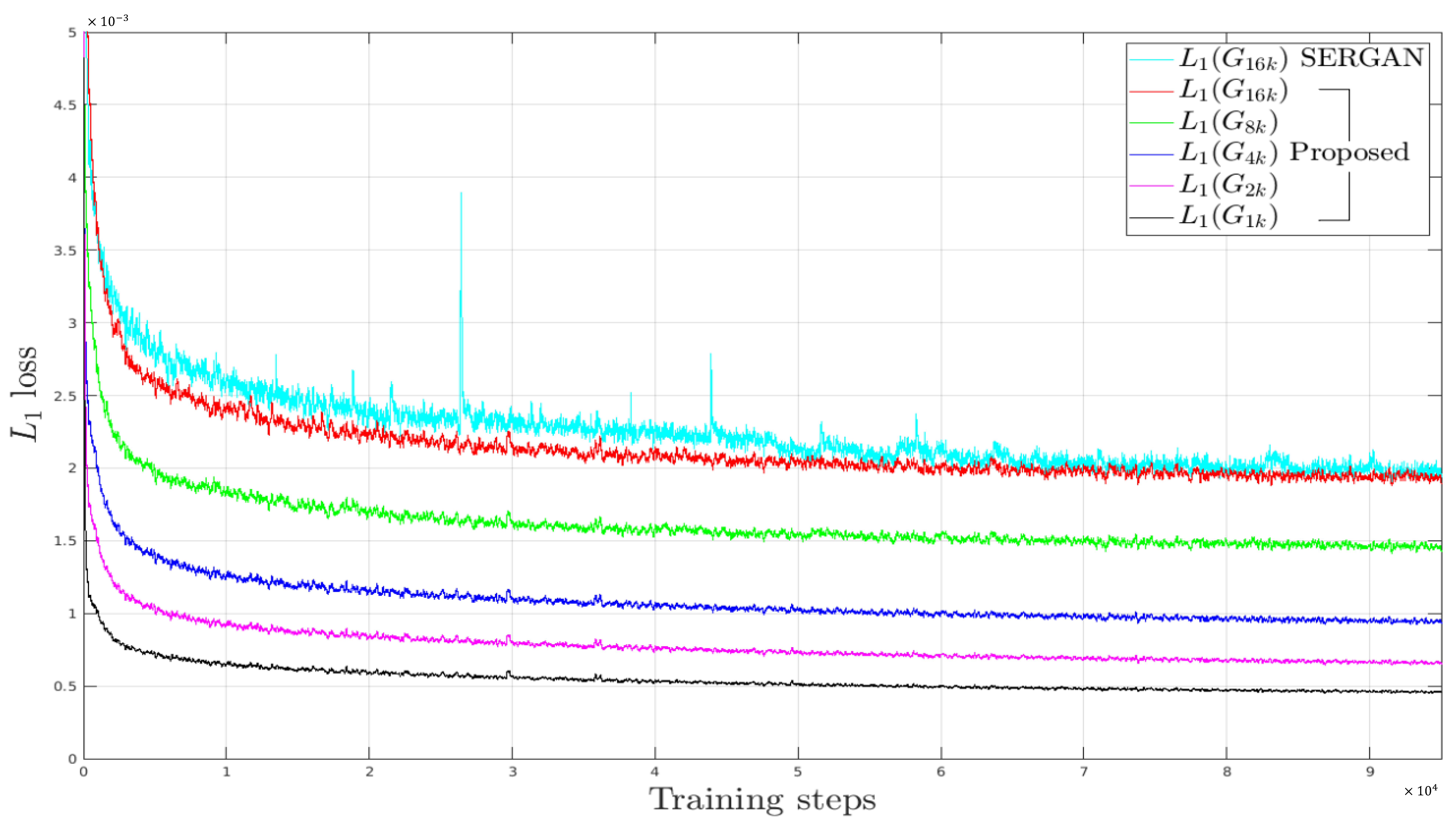

5.4. Fast and Stable Training of Proposed Model

5.5. Comparison with Conventional GAN-Based Speech Enhancement Techniques

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Benesty, J.; Makino, S.; Chen, J.D. Speech Enhancement; Springer: New York, NY, USA, 2007.

- Boll, S.F. Suppression of acoustic noise in speech using spectral subtraction. IEEE Trans. Acoust. Speech Signal Process. 1979, 27, 113–120.

- Lim, J.S.; Oppenheim, A.V. Enhancement and bandwidth compression of noisy speech. Proc. IEEE 1979, 67, 1586–1604.

- Scalart, P. Speech enhancement based on a priori signal to noise estimation. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Atlanta, GA, USA, 7–10 May 1996; pp. 629–632.

- Ephraim, Y.; Malah, D. Speech enhancement using a minimum-mean square error short-time spectral amplitude estimator. IEEE Trans. Acoust. Speech Signal Process. 1984, 32, 1109–1121.

- Kim, N.S.; Chang, J.H. Spectral enhancement based on global soft decision. IEEE Signal Process. Lett. 2000, 7, 108–110.

- Kwon, K.; Shin, J.W.; Kim, N.S. NMF-based speech enhancement using bases update. IEEE Signal Process. Lett. 2015, 22, 450–454.

- Wilson, K.; Raj, B.; Smaragdis, P.; Divakaran, A. Speech denoising using nonnegative matrix factorization with priors. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Las Vegas, NV, USA, 30 March–4 April 2008; pp. 4029–4032.

- Xu, Y.; Du, J.; Dai, L.R.; Lee, C.H. A regression approach to speech enhancement based on deep neural networks. IEEE Trans. Audio Speech Lang. Process. 2015, 23, 7–19.

- Grais, E.M.; Sen, M.U.; Erdogan, H. Deep neural networks for single channel source separation. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 3734–3738.

- Zhao, H.; Zarar, S.; Tashev, I.; Lee, C.H. Convolutional-recurrent neural networks for speech enhancement. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 2401–2405.

- Huang, P.S.; Kim, M.; Hasegawa, J.M.; Smaragdis, P. Joint optimization of masks and deep recurrent neural networks for monaural source separation. IEEE Trans. Audio Speech Lang. Process. 2015, 23, 2136–2147.

- Roux, J.L.; Wichern, G.; Watanabe, S.; Sarroff, A.; Hershey, J. The Phasebook: Building complex masks via discrete representations for source separation. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 66–70.

- Wang, Z.; Tan, K.; Wang, D. Deep Learning based phase reconstruction for speaker separation: A trigonometric perspective. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 71–75.

- Wang, Z.; Roux, J.L.; Wang, D.; Hershey, J. End-to-End Speech Separation with Unfolded Iterative Phase Reconstruction. arXiv 2018, arXiv:1804.10204.

- Pandey, A.; Wang, D. A new framework for supervised speech enhancement in the time domain. In Proceedings of the INTERSPEECH, Hyderabad, India, 2–6 September 2018; pp. 1136–1140.

- Stoller, D.; Ewert, S.; Dixon, S. Wave-U-net: A multi-scale neural network for end-to-end audio source separation. In Proceedings of the International Society for Music Information Retrieval Conference (ISMIR), Paris, France, 23–27 September 2018; pp. 334–340.

- Rethage, D.; Pons, J.; Xavier, S. A wavenet for speech denoising. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5069–5073.

- ITU-T. Perceptual Evaluation of Speech Quality (PESQ): An Objective Method for End-to-End Speech Quality Assessment of Narrow-Band Telephone Networks and Speech Codecs. Rec. ITU-T P. 862; 2000. Available online: https://www.itu.int/rec/T-REC-P.862 (accessed on 18 February 2019).

- Jensen, J.; Taal, C.H. An algorithm for predicting the intelligibility of speech masked by modulated noise maskers. IEEE Trans. Audio Speech Lang. Process. 2016, 24, 2009–2022.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Pascual, S.; Bonafonte, A.; Serrà, J. SEGAN: Speech enhancement generative adversarial network. In Proceedings of the INTERSPEECH, Stockholm, Sweden, 20–24 August 2017; pp. 3642–3646.

- Soni, M.H.; Shah, N.; Patil, H.A. Time-frequency masking-based speech enhancement using generative adversarial network. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5039–5043.

- Pandey, A.; Wang, D. On adversarial training and loss functions for speech enhancement. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5414–5418.

- Fu, S.-W.; Liao, C.-F.; Yu, T.; Lin, S.-D. MetricGAN: Generative adversarial networks based black-box metric scores optimization for speech enhancement. In Proceedings of the International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 September 2019.

- Baby, D.; Verhulst, S. Sergan: Speech enhancement using relativistic generative adversarial networks with gradient penalty. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 106–110.

- Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1125–1134.

- Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A.C. Improved training of wasserstein gans. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 5769–5779.

- Jolicoeur-Martineau, A. The Relativistic Discriminator: A Key Element Missing from Standard GAN. arXiv 2018, arXiv:1807.00734.

- Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive Growing of GANs for Improved Quality, Stability, and Variation. arXiv 2018, arXiv:1710.10196.

- Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and improving the image quality of styleGAN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 8107–8116.

- Alexandre, D.; Gabriel, S.; Yossi, A. Real time speech enhancement in the Waveform domain. In Proceedings of the INTERSPEECH, Shanghai, China, 25–29 October 2020; pp. 3291–3295.

- Yamamoto, R.; Song, E.; Kim, J.-M. Parallel wavegan: A fast waveform generation model based on generative adversarial networks with multi-resolution spectrogram. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 6199–6203.

- Valentini-Botinhao, C.; Wang, X.; Takaki, S.; Yamagishi, J. Investigating rnn-based speech enhancement methods for noise robust text-to-speech. In Proceedings of the International Symposium on Computer Architecture, Seoul, Korea, 18–22 June 2016; pp. 146–152.

- Veaux, C.; Yamagishi, J.; King, S. The voice bank corpus: Design, collection and data analysis of a large regional accent speech database. In Proceedings of the International Conference Oriental COCOSDA held jointly with 2013 Conference on Asian Spoken Language Research and Evaluation (O-COCOSDA/CASLRE), Gurgaon, India, 25–27 November 2013; pp. 1–4.

- Thiemann, J.; Ito, N.; Vincent, E. The diverse environments multi-channel acoustic noise database (DEMAND): A database of multichannel environmental noise recordings. In Proceedings of the Meetings on Acoustics (ICA2013), Montreal, QC, Canada, 2–7 June 2013; Volume 19, p. 035081.

- Kingma, D.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. Tensorflow: Large-Scale Machine Learning on Heterogeneous Distributed Systems. arXiv 2016, arXiv:1603.04467.

- Hu, Y.; Loizou, P.C. Evaluation of objective quality measures for speech enhancement. IEEE Trans. Audio Speech Lang. Process. 2008, 16, 229–238.

- Liu, G.; Gong, K.; Liang, X.; Chen, Z. CP-GAN: Context pyramid generative adversarial network for speech enhancement. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 6624–6628.

| Block | Operation | Output Shape |
|---|---|---|
| Input | | |
| Encoder | Conv1D (filter length = 31, stride = 2) | |
| Latent vector | | |
| Decoder | Trconv (filter length = 31, stride = 2) | |
| Trconv (filter length = 31, stride = 2) | Conv1D (filter length = 17, stride = 1); element-wise addition; linear interpolation layer | |
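
The table above specifies stride-2 convolutions in the encoder and stride-2 transposed convolutions in the decoder. Assuming "same"-style padding, each encoder layer roughly halves the temporal length and each decoder layer doubles it, so the temporal dimension can be traced with simple arithmetic. The sketch below does this. The input length of 16,384 samples and the depth of 4 layers are illustrative assumptions, since the table does not give the output shapes.

```python
import math
from typing import List


def encoder_lengths(input_length: int, num_layers: int, stride: int = 2) -> List[int]:
    """Temporal length after each strided convolution, assuming 'same'
    padding so that the output length is ceil(input_length / stride)."""
    lengths = [input_length]
    for _ in range(num_layers):
        lengths.append(math.ceil(lengths[-1] / stride))
    return lengths


def decoder_lengths(latent_length: int, num_layers: int, stride: int = 2) -> List[int]:
    """Temporal length after each transposed convolution, assuming 'same'
    padding so that the output length is input_length * stride."""
    lengths = [latent_length]
    for _ in range(num_layers):
        lengths.append(lengths[-1] * stride)
    return lengths


# Hypothetical configuration: 16,384 input samples and 4 layers per side.
down = encoder_lengths(16384, num_layers=4)
up = decoder_lengths(down[-1], num_layers=4)
print(down)
print(up)
```

Under these assumptions the decoder lengths mirror the encoder lengths, which is what allows each decoder stage to be paired with an encoder stage of the same temporal size.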

| Model | PESQ |
|---|---|
| AECNN [26] | 2.5873 |
| Proposed | 2.6257 |
| | 2.6335 |
| | 2.6407 |
| | 2.6516 |

| Model | Generator | Discriminator | PESQ | RTF |
|---|---|---|---|---|
| SERGAN [26] | U-net | Single | 2.5898 | 0.008 |
| Proposed | Progressive | Single | 2.6514 | 0.010 |
| | Progressive | Multi-scale | 2.6541 | |
| | | | 2.7077 | |
| | | | 2.6664 | |
| | | | 2.6700 | |
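
The RTF column in the table above reports the real-time factor, which is conventionally the processing time divided by the duration of the audio processed, so values below 1 indicate faster-than-real-time operation. The sketch below shows one way to measure it. The `enhance` callable and the 16 kHz sampling rate in the usage lines are placeholders chosen for illustration, not details taken from the table.

```python
import time
from typing import Callable, Sequence


def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """Ratio of processing time to audio duration (lower is faster)."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return processing_seconds / audio_seconds


def measure_rtf(
    enhance: Callable[[Sequence[float]], Sequence[float]],
    samples: Sequence[float],
    sample_rate: int,
) -> float:
    """Time one call of `enhance` on `samples` and return its RTF."""
    start = time.perf_counter()
    enhance(samples)
    elapsed = time.perf_counter() - start
    return real_time_factor(elapsed, len(samples) / sample_rate)


# Hypothetical usage: an identity function stands in for a real enhancer.
one_second = [0.0] * 16000
print(measure_rtf(lambda x: x, one_second, sample_rate=16000))
```

In practice the measurement would be averaged over many utterances, because a single timing is sensitive to warm-up and system load.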

| Model | PESQ | CSIG | CBAK | COVL | STOI |
|---|---|---|---|---|---|
| Noisy | 1.97 | 3.35 | 2.44 | 2.63 | 0.91 |
| SEGAN [24] | 2.16 | 3.48 | 2.68 | 2.67 | 0.93 |
| AECNN [26] | 2.59 | 3.82 | 3.30 | 3.20 | 0.94 |
| SERGAN [26] | 2.59 | 3.82 | 3.28 | 3.20 | 0.94 |
| CP-GAN [38] | 2.64 | 3.93 | 3.29 | 3.28 | 0.94 |
| The progressive generator without adversarial training | 2.65 | 3.90 | 3.30 | 3.27 | 0.94 |
| The progressive generator with the multi-scale discriminator | 2.71 | 3.97 | 3.26 | 3.33 | 0.94 |
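
The comparison in the table above can be summarized programmatically. The sketch below copies the reported scores into a dictionary, computes the gain of one system over another for a chosen metric, and lists the best-scoring systems per metric, including ties. The shortened model labels and the helper names `gain_over` and `best_models` are hypothetical; the numbers are taken directly from the table.

```python
from typing import Dict, List

# Scores copied from the comparison table (higher is better for all metrics).
SCORES: Dict[str, Dict[str, float]] = {
    "Noisy": {"PESQ": 1.97, "CSIG": 3.35, "CBAK": 2.44, "COVL": 2.63, "STOI": 0.91},
    "SEGAN": {"PESQ": 2.16, "CSIG": 3.48, "CBAK": 2.68, "COVL": 2.67, "STOI": 0.93},
    "AECNN": {"PESQ": 2.59, "CSIG": 3.82, "CBAK": 3.30, "COVL": 3.20, "STOI": 0.94},
    "SERGAN": {"PESQ": 2.59, "CSIG": 3.82, "CBAK": 3.28, "COVL": 3.20, "STOI": 0.94},
    "CP-GAN": {"PESQ": 2.64, "CSIG": 3.93, "CBAK": 3.29, "COVL": 3.28, "STOI": 0.94},
    "Progressive G (no adversarial training)": {
        "PESQ": 2.65, "CSIG": 3.90, "CBAK": 3.30, "COVL": 3.27, "STOI": 0.94},
    "Progressive G + multi-scale D": {
        "PESQ": 2.71, "CSIG": 3.97, "CBAK": 3.26, "COVL": 3.33, "STOI": 0.94},
}


def gain_over(baseline: str, model: str, metric: str) -> float:
    """Absolute score difference (model minus baseline), rounded to 2 decimals."""
    return round(SCORES[model][metric] - SCORES[baseline][metric], 2)


def best_models(metric: str) -> List[str]:
    """All models that reach the highest score for the metric, in table order."""
    top = max(row[metric] for row in SCORES.values())
    return [name for name, row in SCORES.items() if row[metric] == top]


# Hypothetical usage: PESQ gain over SERGAN and the best system per metric.
print(gain_over("SERGAN", "Progressive G + multi-scale D", "PESQ"))
for metric in ("PESQ", "CSIG", "CBAK", "COVL", "STOI"):
    print(metric, best_models(metric))
```

Listing ties explicitly matters here because several systems share the same CBAK and STOI scores at the reported precision.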

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, H.Y.; Yoon, J.W.; Cheon, S.J.; Kang, W.H.; Kim, N.S. A Multi-Resolution Approach to GAN-Based Speech Enhancement. Appl. Sci. 2021, 11, 721. https://doi.org/10.3390/app11020721
