A Training Method for Low Rank Convolutional Neural Networks Based on Alternating Tensor Compose-Decompose Method

Abstract

1. Introduction

2. Related Works

2.1. Works on Compressing the Parameters of Pre-Trained CNNs

2.2. Works on Designing a Compressed Model

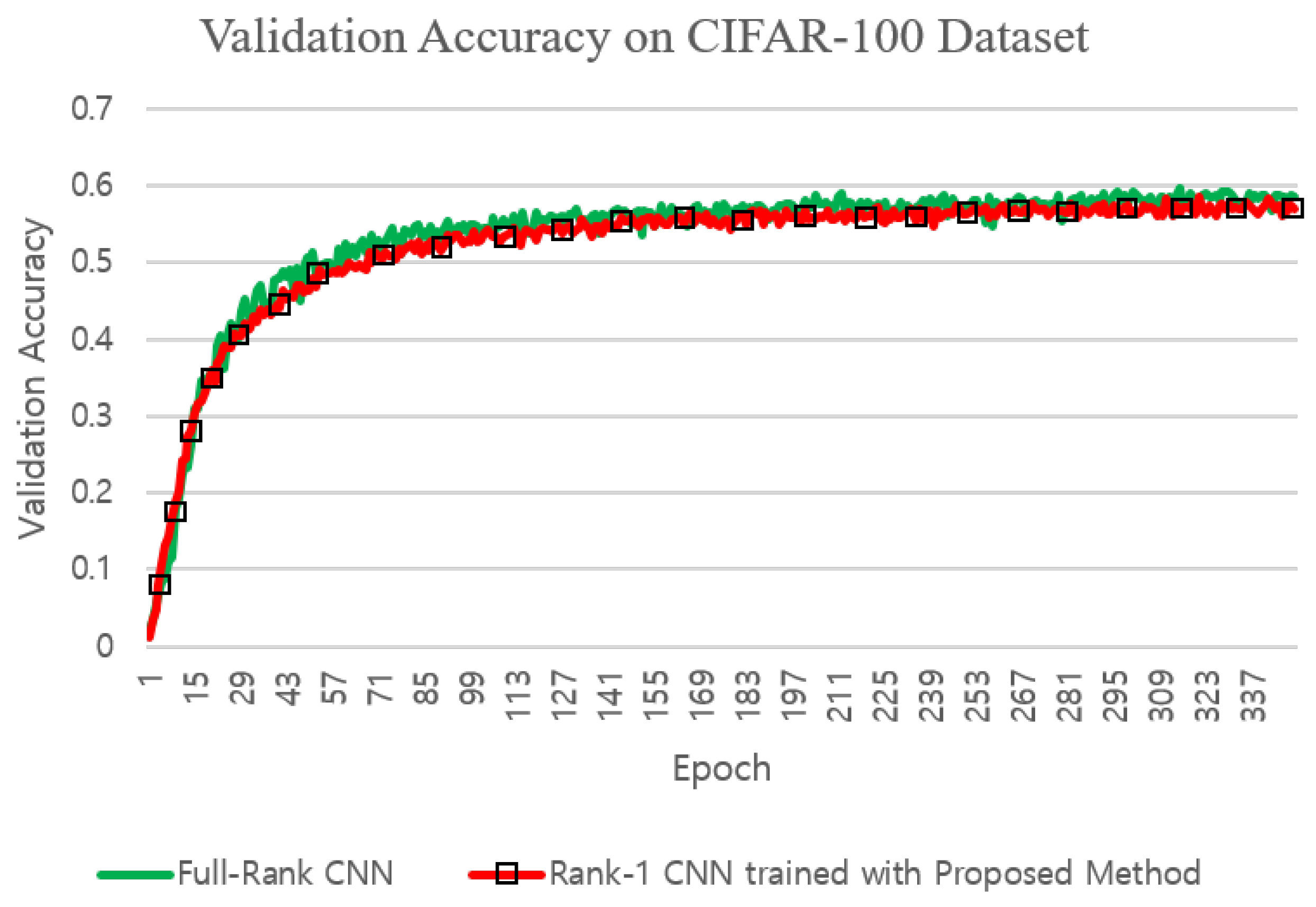

2.3. Works on Edge AI

3. Preliminaries for the Proposed Method

3.1. Bilateral-Projection Based 2DPCA
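
Bilateral-projection based 2DPCA (B2DPCA) works directly on sample matrices A_i of size m×n instead of on vectorised samples. It looks for a left projection U (m×l) and a right projection V (n×r), both with orthonormal columns, so that U U^T (A_i − Ā) V V^T reconstructs the centred samples as closely as possible. There is no closed form for both projections at once, so the usual solution alternates. With V fixed, U is taken as the top-l eigenvectors of Σ_i (A_i − Ā) V V^T (A_i − Ā)^T. With U fixed, V is taken as the top-r eigenvectors of Σ_i (A_i − Ā)^T U U^T (A_i − Ā). The NumPy sketch below illustrates this standard iteration. The function names `b2dpca` and `reconstruct`, the random initialisation, and the fixed iteration count are illustrative assumptions, not the paper's implementation.

```python
import numpy as np


def b2dpca(mats, l, r, n_iter=10, seed=0):
    """Alternating solution of bilateral-projection 2DPCA (illustrative sketch).

    mats: array of shape (N, m, n) holding N sample matrices.
    Returns U (m x l) and V (n x r), both with orthonormal columns.
    """
    mats = np.asarray(mats, dtype=float)
    centred = mats - mats.mean(axis=0)          # subtract the mean matrix
    n = centred.shape[2]
    rng = np.random.default_rng(seed)
    # Assumed initialisation: a random orthonormal right projection.
    V, _ = np.linalg.qr(rng.standard_normal((n, r)))
    for _ in range(n_iter):
        # V fixed: U = top-l eigenvectors of sum_i A_i V V^T A_i^T.
        G_u = sum(A @ V @ V.T @ A.T for A in centred)
        U = np.linalg.eigh(G_u)[1][:, -l:]      # eigh returns eigenvalues in ascending order
        # U fixed: V = top-r eigenvectors of sum_i A_i^T U U^T A_i.
        G_v = sum(A.T @ U @ U.T @ A for A in centred)
        V = np.linalg.eigh(G_v)[1][:, -r:]
    return U, V


def reconstruct(A, U, V):
    """Project a centred matrix onto the bilateral subspace: U U^T A V V^T."""
    return U @ (U.T @ A @ V) @ V.T
```

With l = r = 1, each reconstruction u u^T A v v^T is a rank-1 matrix: the outer product of u and v scaled by u^T A v.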

3.2. Flattened Convolutional Neural Networks
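
In a flattened CNN, each 3-D filter of size C×k_h×k_w is replaced by a sequence of 1-D filters applied along the channel, vertical and horizontal directions. When no bias or nonlinearity sits between the three 1-D stages, this is the same as correlating the input with a rank-1 3-D filter w[c, u, v] = a[c]·b[u]·d[v]. The parameter count per filter then drops from C·k_h·k_w to C + k_h + k_w. For example, with C = 64 and a 3×3 kernel it falls from 576 to 70. The NumPy sketch below checks that equivalence numerically for "valid" cross-correlation, which deep-learning frameworks call convolution. The function names are hypothetical, and the loops favour clarity over speed.

```python
import numpy as np


def correlate3d_valid(x, w):
    """Direct 'valid' cross-correlation of a (C, H, W) input with one (C, kh, kw) filter."""
    _, H, W = x.shape
    _, kh, kw = w.shape
    out = np.empty((H - kh + 1, W - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(x[:, i:i + kh, j:j + kw] * w)
    return out


def flattened_valid(x, a, b, d):
    """Same result for the rank-1 filter a (x) b (x) d, computed as three 1-D passes."""
    z = np.tensordot(a, x, axes=(0, 0))       # channel pass (a 1x1 conv): shape (H, W)
    kh, kw = len(b), len(d)
    # Vertical pass: 1-D correlation of every column with b.
    zv = sum(b[u] * z[u:z.shape[0] - kh + 1 + u, :] for u in range(kh))
    # Horizontal pass: 1-D correlation of every row with d.
    return sum(d[v] * zv[:, v:zv.shape[1] - kw + 1 + v] for v in range(kw))


# Usage: compare both paths on random data.
rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8, 8))            # C = 4 channels, 8x8 input
a, b, d = rng.standard_normal(4), rng.standard_normal(3), rng.standard_normal(3)
w = np.einsum("c,u,v->cuv", a, b, d)          # compose the rank-1 3-D filter
print(np.allclose(correlate3d_valid(x, w), flattened_valid(x, a, b, d)))  # True
```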

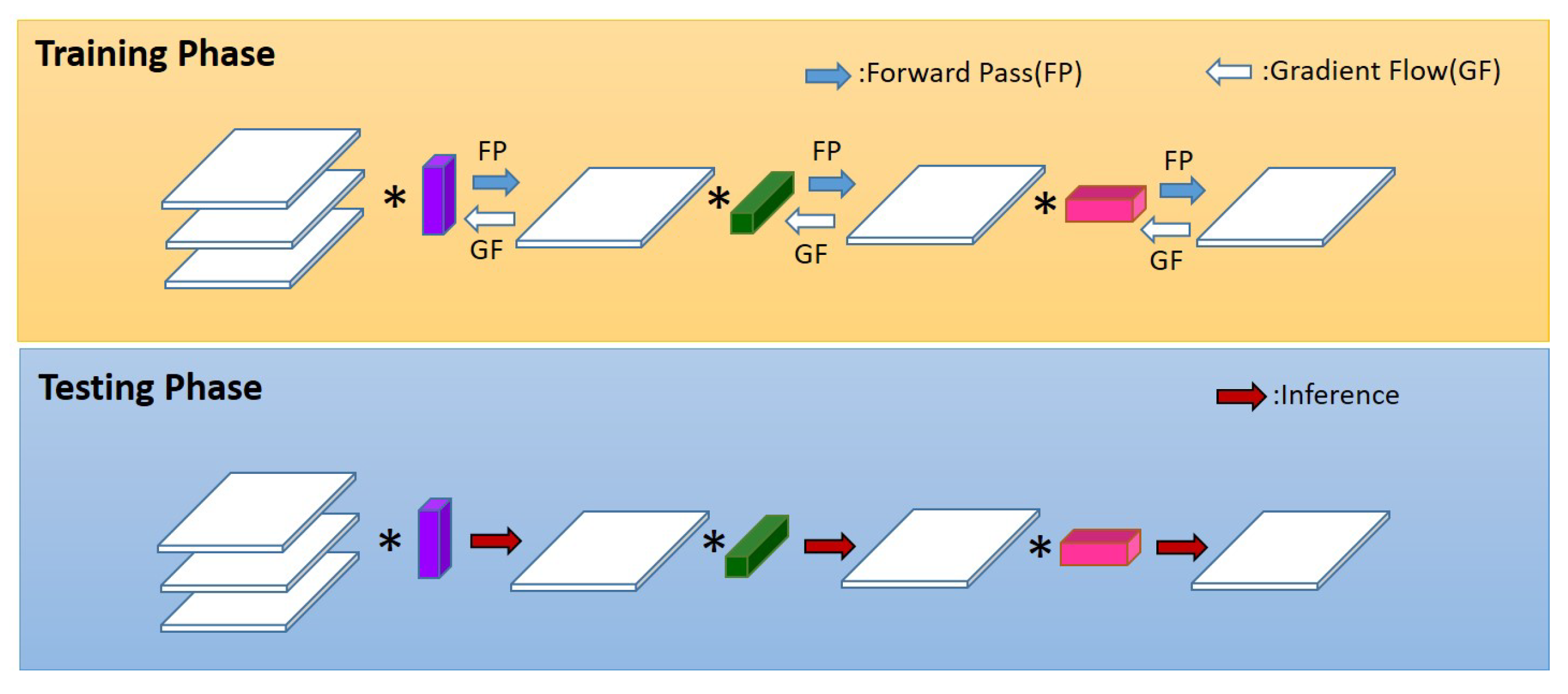

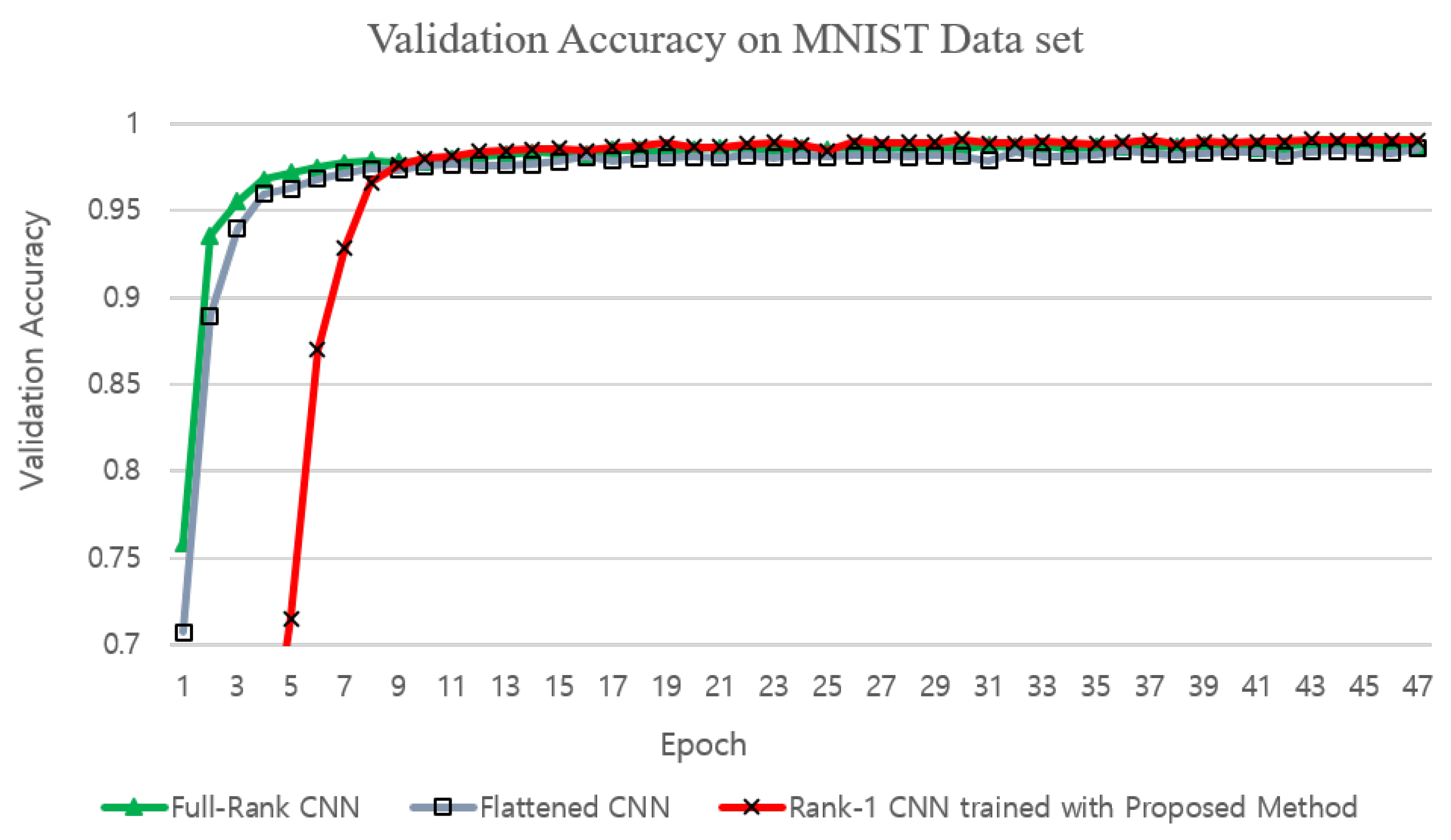

4. Application of the Proposed Training Method to the Rank-1 CNN

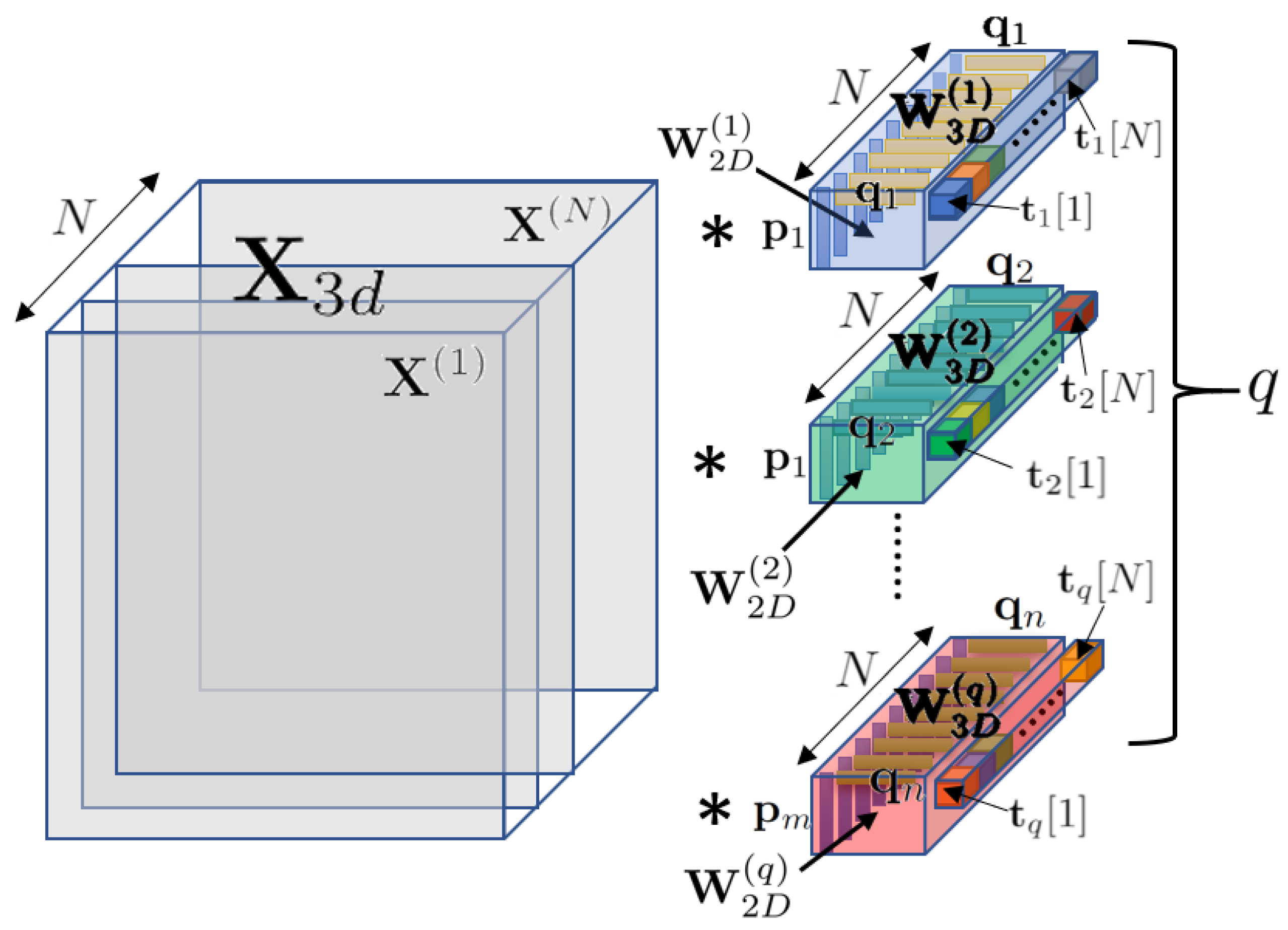

4.1. Construction of the 3-D Rank-1 Filters
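
Two operations recur when working with 3-D rank-1 filters. A full C×k_h×k_w filter is decomposed into three 1-D factors, and the factors are composed back into a 3-D filter through their outer product. The sketch below shows one standard way to do the decomposition: the alternating least-squares (higher-order power) iteration for a rank-1 approximation of a third-order tensor. Each factor is updated in closed form while the other two are held fixed. This technique is offered as an illustrative assumption; the paper's own construction of the rank-1 filters may differ. The names `decompose_rank1` and `compose_rank1` are hypothetical.

```python
import numpy as np


def decompose_rank1(w, n_iter=50, seed=0):
    """Rank-1 approximation w ~ a (x) b (x) d of a (C, kh, kw) filter by ALS.

    Each update is the exact least-squares minimiser of ||w - a(x)b(x)d||^2
    with the other two factors held fixed.
    """
    w = np.asarray(w, dtype=float)
    rng = np.random.default_rng(seed)
    b = rng.standard_normal(w.shape[1])       # random start for the vertical factor
    d = rng.standard_normal(w.shape[2])       # random start for the horizontal factor
    for _ in range(n_iter):
        a = np.einsum("cuv,u,v->c", w, b, d) / ((b @ b) * (d @ d))
        b = np.einsum("cuv,c,v->u", w, a, d) / ((a @ a) * (d @ d))
        d = np.einsum("cuv,c,u->v", w, a, b) / ((a @ a) * (b @ b))
    return a, b, d


def compose_rank1(a, b, d):
    """Compose the 3-D filter from its channel, vertical and horizontal factors."""
    return np.einsum("c,u,v->cuv", a, b, d)


# Usage: an exactly rank-1 filter is recovered, and a generic filter is approximated.
rng = np.random.default_rng(1)
w_exact = compose_rank1(rng.standard_normal(16), rng.standard_normal(3), rng.standard_normal(3))
w_full = rng.standard_normal((16, 3, 3))
err_exact = np.linalg.norm(compose_rank1(*decompose_rank1(w_exact)) - w_exact)
err_full = np.linalg.norm(compose_rank1(*decompose_rank1(w_full)) - w_full)
print(err_exact, err_full / np.linalg.norm(w_full))
```

For a generic filter, alternating least squares is only guaranteed to reach a local optimum, so different seeds can give slightly different factors.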

4.2. Training Process

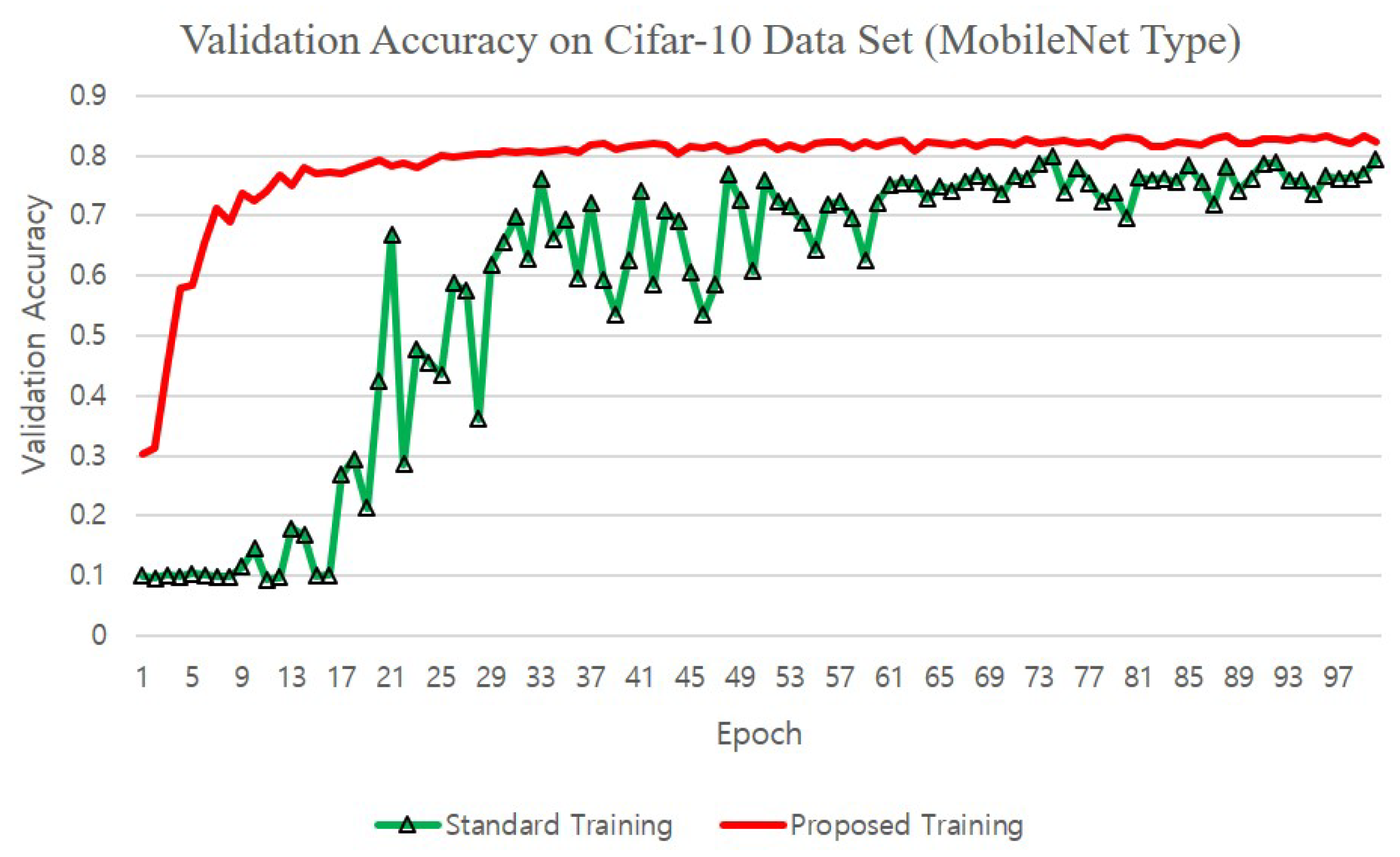

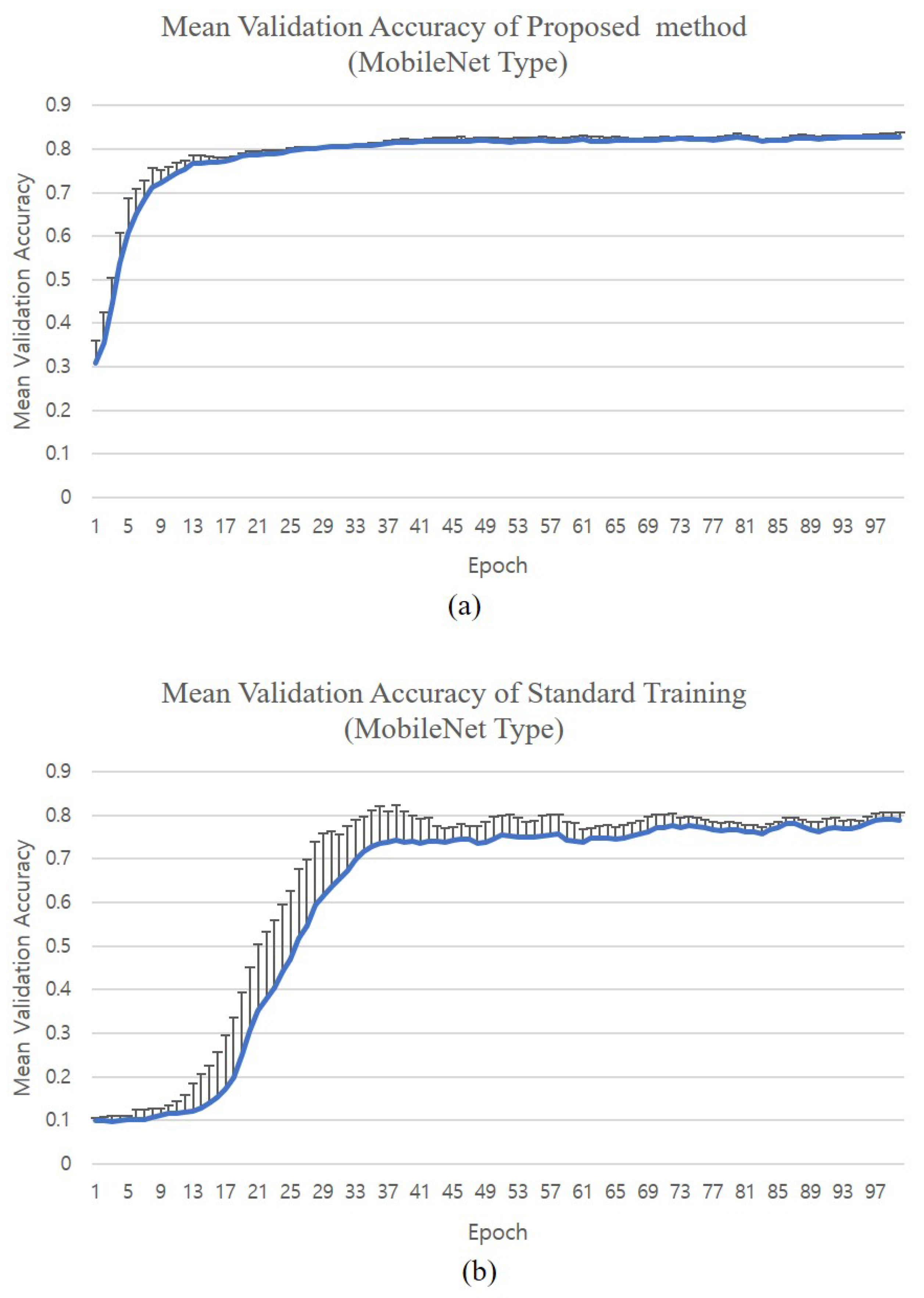

5. Application of the Proposed Training Method to the MobileNet

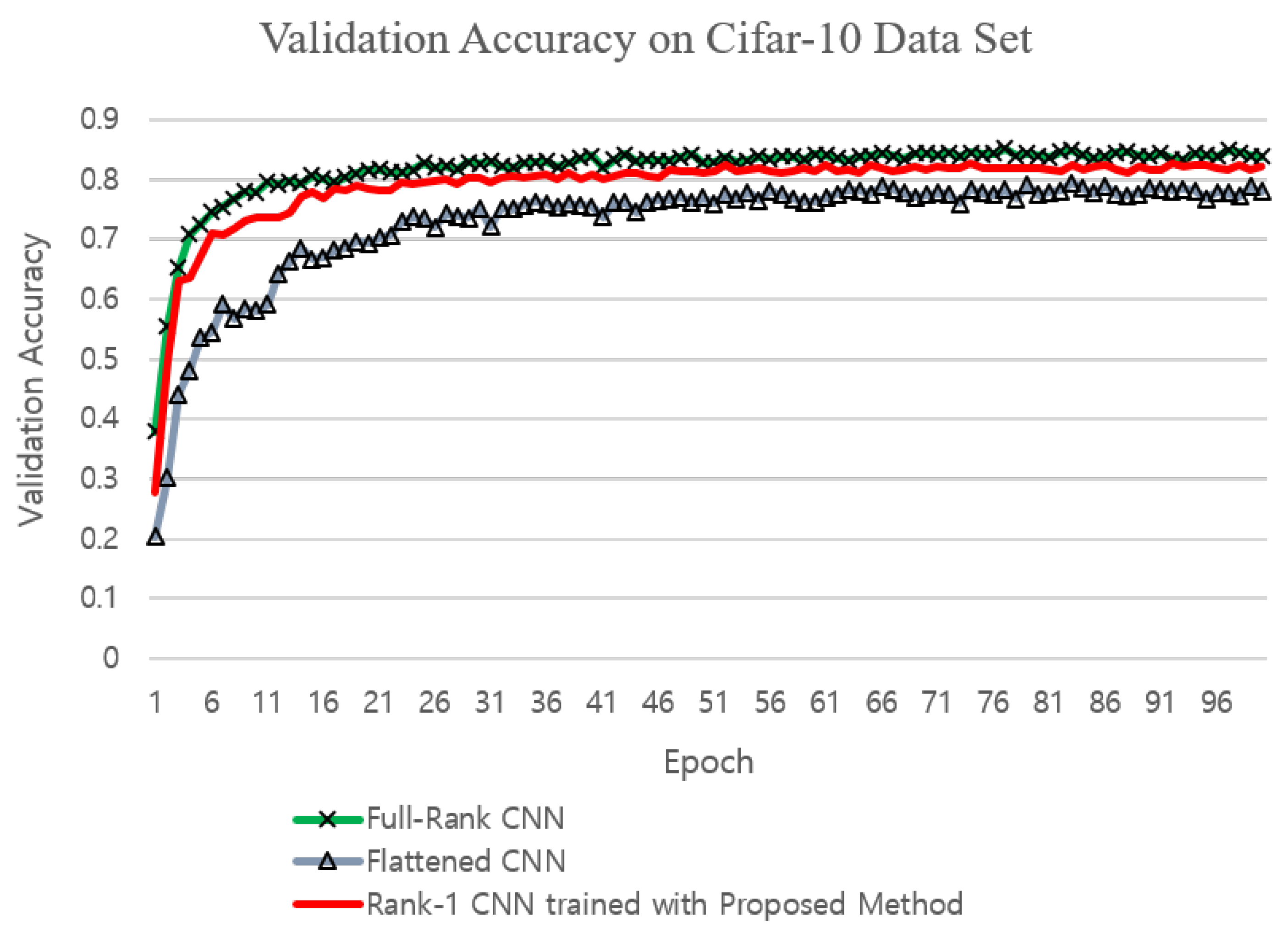

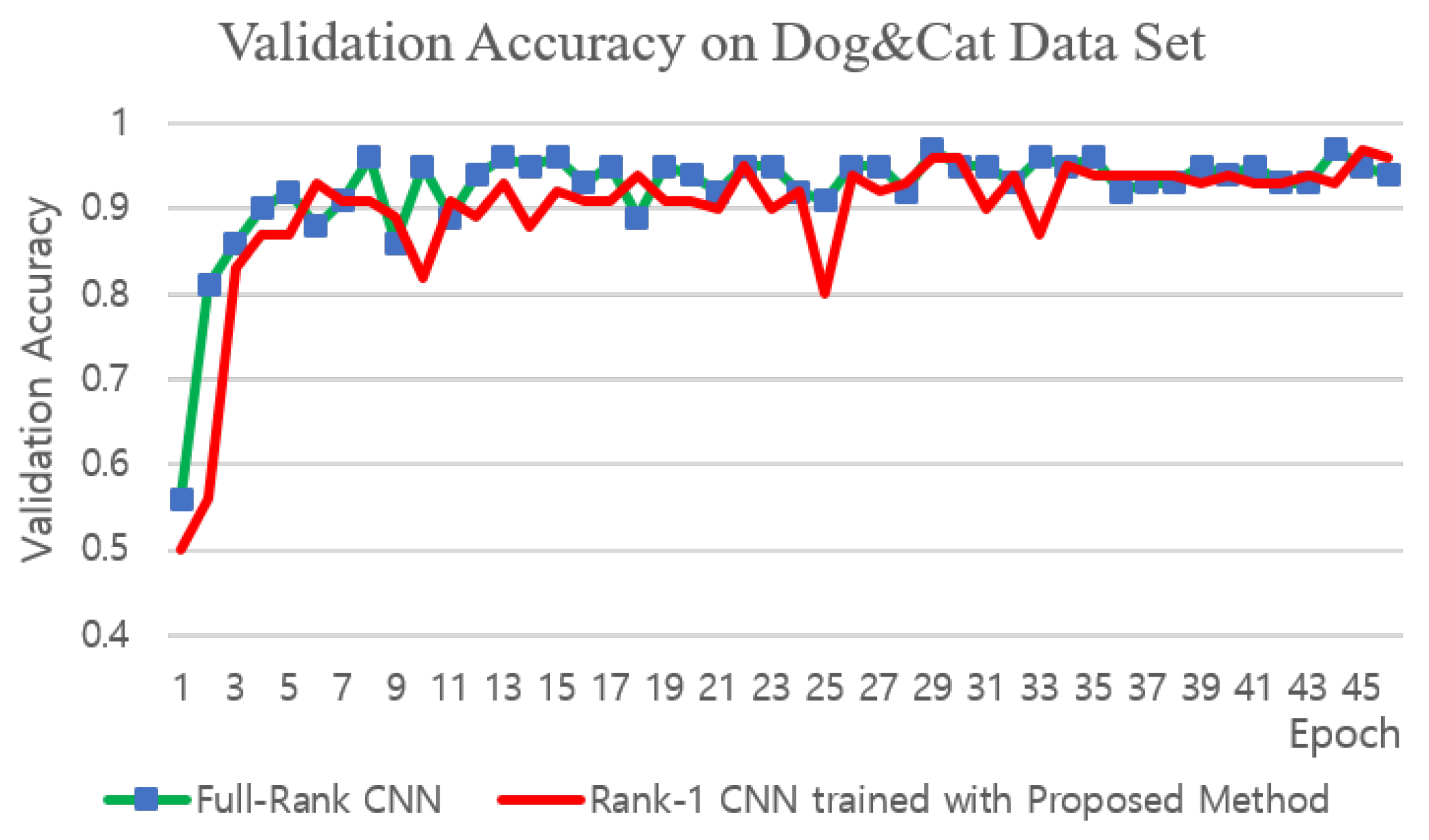

6. Experiments

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Standard CNN | Flattened CNN | Proposed CNN |
|---|---|---|
| Conv1: 64 filters, each filter constituted as: | | |
| conv | conv, conv, | |
| conv | conv | |
| Conv2: 64 filters, each filter constituted as: | | |
| conv | conv, conv, | |
| conv | conv | |
| Max Pool ( | | |
| Conv3: 144 filters, each filter constituted as: | | |
| conv | conv, conv | |
| conv | conv | |
| Conv4: 144 filters, each filter constituted as: | | |
| conv | conv, conv | |
| conv | conv | |
| Max Pool ( | | |
| Conv5: 144 filters, each filter constituted as: | | |
| conv | conv, conv | |
| conv | conv | |
| Conv6: 256 filters, each filter constituted as: | | |
| conv | conv, conv | |
| conv | conv | |
| Conv7: 256 filters, each filter constituted as: | | |
| conv | conv, conv | |
| conv | conv | |
| FC 2048 + Batch Normalization + ReLU + Drop Out (P = 0.5) | | |
| FC 1024 + Batch Normalization + ReLU + Drop Out (P = 0.5) | | |
| FC 10 + ReLU + Drop Out (P = 0.5) | | |
| Soft-Max | | |

| Standard CNN | Flattened CNN | Proposed CNN |
|---|---|---|
| Conv1: 64 filters, each filter constituted as: | | |
| conv | conv, conv | |
| conv | conv | |
| ReLU + Batch Normalization | | |
| Conv2: 64 filters, each filter constituted as: | | |
| conv | conv, conv | |
| conv | conv | |
| ReLU + Max Pool ( + Drop Out (P = 0.5) | | |
| Conv3: 144 filters, each filter constituted as: | | |
| conv | conv, conv | |
| conv | conv | |
| ReLU + Batch Normalization | | |
| Conv4: 144 filters, each filter constituted as: | | |
| conv | conv, conv | |
| conv | conv | |
| ReLU + Max Pool ( + Drop Out (P = 0.5) | | |
| Conv5: 256 filters, each filter constituted as: | | |
| conv | conv, conv | |
| conv | conv | |
| ReLU + Batch Normalization | | |
| Conv6: 256 filters, each filter constituted as: | | |
| conv | conv, conv | |
| conv | conv | |
| ReLU + Max Pool ( + Drop Out (P = 0.5) | | |
| FC 1024 + Batch Normalization + ReLU + Drop Out (P = 0.5) | | |
| FC 512 + Batch Normalization + ReLU + Drop Out (P = 0.5) | | |
| FC 10 | | |
| Soft-Max | | |

| Standard CNN | Proposed CNN |
|---|---|
| Conv1: 64 filters, each filter constituted as: | |
| conv | conv |
| Conv2: 64 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Max Pool ( | |
| Conv3: 144 filters, each filter constituted as: | |
| conv | conv |
| ReLU | |
| Conv4: 144 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Max Pool ( | |
| Conv5: 256 filters, each filter constituted as: | |
| conv | conv |
| ReLU | |
| Conv6: 256 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Max Pool ( | |
| Conv7: 256 filters, each filter constituted as: | |
| conv | conv |
| ReLU | |
| Conv8: 484 filters, each filter constituted as: | |
| conv | conv |
| ReLU | |
| Conv9: 484 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Max Pool ( | |
| Conv10: 484 filters, each filter constituted as: | |
| conv | conv |
| ReLU | |
| Conv11: 484 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Max Pool ( | |
| FC 1024 + Batch Normalization + ReLU | |
| FC 512 + Batch Normalization + ReLU | |
| FC 2 | |
| Soft-Max | |

| Standard CNN | Proposed CNN |
|---|---|
| Conv1: 64 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Drop Out (P = 0.5) | |
| Conv2: 64 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Max Pool ( | |
| Conv3: 144 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Drop Out (P = 0.4) | |
| Conv4: 144 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Max Pool ( | |
| Conv5: 256 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Drop Out (P = 0.4) | |
| Conv6: 256 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Drop Out (P = 0.4) | |
| Conv7: 256 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Max Pool ( | |
| Conv8: 484 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Drop Out (P = 0.4) | |
| Conv9: 484 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Drop Out (P = 0.4) | |
| Conv10: 484 filters, each filter constituted as: | |
| conv | conv |
| Batch Normalization + ReLU + Max Pool ( | |
| conv | conv |
| Batch Normalization + ReLU + Drop Out (P = 0.4) | |
| FC 2048 + Batch Normalization + ReLU + Drop Out (P = 0.5) | |
| FC 1024 + Batch Normalization + ReLU + Drop Out (P = 0.5) | |
| FC 512 + Batch Normalization + ReLU + Drop Out (P = 0.5) | |
| FC 100 | |
| Soft-Max | |

| Architecture | Method | Accuracy Mean | Accuracy Std. | CPU Inference Time Mean (sec) | CPU Inference Time Std. | GPU Inference Time Mean (sec) | GPU Inference Time Std. |
|---|---|---|---|---|---|---|---|
| CNN1 Type | Standard | 0.9888 | 8.01 | 0.01834 | 0.43 | 0.00242 | 1.38 |
| CNN1 Type | Flattened | 0.9850 | 9.16 | 0.00931 | 0.25 | 0.00132 | 0.76 |
| CNN1 Type | Proposed | 0.9911 | 5.08 | 0.00931 | 0.25 | 0.00132 | 0.76 |
| CNN2 Type | Standard | 0.8422 | 4.19 | 0.01337 | 0.22 | 0.00178 | 1.01 |
| CNN2 Type | Flattened | 0.7777 | 6.58 | 0.00732 | 0.18 | 0.00082 | 0.54 |
| CNN2 Type | Proposed | 0.8213 | 3.40 | 0.00732 | 0.18 | 0.00082 | 0.54 |
| CNN3 Type | Standard | 0.9450 | 1.32 | 0.04490 | 1.22 | 0.00788 | 2.46 |
| CNN3 Type | Proposed | 0.9413 | 1.55 | 0.02452 | 1.07 | 0.00374 | 2.13 |
| CNN4 Type | Standard | 0.5851 | 3.38 | 0.04383 | 1.21 | 0.00748 | 2.51 |
| CNN4 Type | Proposed | 0.5750 | 5.50 | 0.02371 | 1.15 | 0.00363 | 2.11 |
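
The timing columns can be summarised as speedups: the standard CNN's mean inference time divided by the proposed CNN's. The short computation below uses only the mean values from the table. The Flattened rows share the Proposed timings, so the same ratios apply to them. The dictionary layout is an illustrative choice. By this measure the proposed CNNs run roughly 1.8 to 2.0 times faster on the CPU and 1.8 to 2.2 times faster on the GPU.

```python
# Mean inference times in seconds, copied from the table: (standard, proposed).
cpu_times = {
    "CNN1": (0.01834, 0.00931),
    "CNN2": (0.01337, 0.00732),
    "CNN3": (0.04490, 0.02452),
    "CNN4": (0.04383, 0.02371),
}
gpu_times = {
    "CNN1": (0.00242, 0.00132),
    "CNN2": (0.00178, 0.00082),
    "CNN3": (0.00788, 0.00374),
    "CNN4": (0.00748, 0.00363),
}


def speedup(times):
    """Standard time divided by proposed time; values above 1 mean the proposed CNN is faster."""
    standard, proposed = times
    return standard / proposed


for name in cpu_times:
    print(f"{name}: CPU {speedup(cpu_times[name]):.2f}x, GPU {speedup(gpu_times[name]):.2f}x")
```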

| | CNN1 | CNN2 | CNN3 | CNN4 |
|---|---|---|---|---|
| Standard CNN | 1,415,232 | 825,408 | 9,258,480 | 9,222,768 |
| Proposed CNN | 157,752 | 137,072 | 1,029,904 | 964,236 |
| Reduction Ratio (Proposed / Standard) | 11.1% | 16.6% | 11.1% | 10.4% |
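
The Reduction Ratio row is the proposed count divided by the standard count. The computation below reproduces it from the two rows above. It gives about 11.15%, 16.61%, 11.12% and 10.45%, and the table lists the last value as 10.4%. Equivalently, the proposed CNNs are roughly 6 to 9.6 times smaller. The function name `reduction_ratio` is only illustrative.

```python
# Counts copied from the table: standard CNN vs. proposed CNN.
standard = {"CNN1": 1_415_232, "CNN2": 825_408, "CNN3": 9_258_480, "CNN4": 9_222_768}
proposed = {"CNN1": 157_752, "CNN2": 137_072, "CNN3": 1_029_904, "CNN4": 964_236}


def reduction_ratio(std_count, prop_count):
    """Fraction of the standard model's count that the proposed model retains."""
    return prop_count / std_count


ratios = {name: reduction_ratio(standard[name], proposed[name]) for name in standard}

for name, r in ratios.items():
    print(f"{name}: {r:.2%} of the standard count ({1 / r:.1f}x smaller)")
```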

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, S.; Kim, H.; Jeong, B.; Yoon, J. A Training Method for Low Rank Convolutional Neural Networks Based on Alternating Tensor Compose-Decompose Method. Appl. Sci. 2021, 11, 643. https://doi.org/10.3390/app11020643
