Strong Influence of Responses in Training Dialogue Response Generator

Abstract

1. Introduction

2. Related Work

3. Learning Response Weight

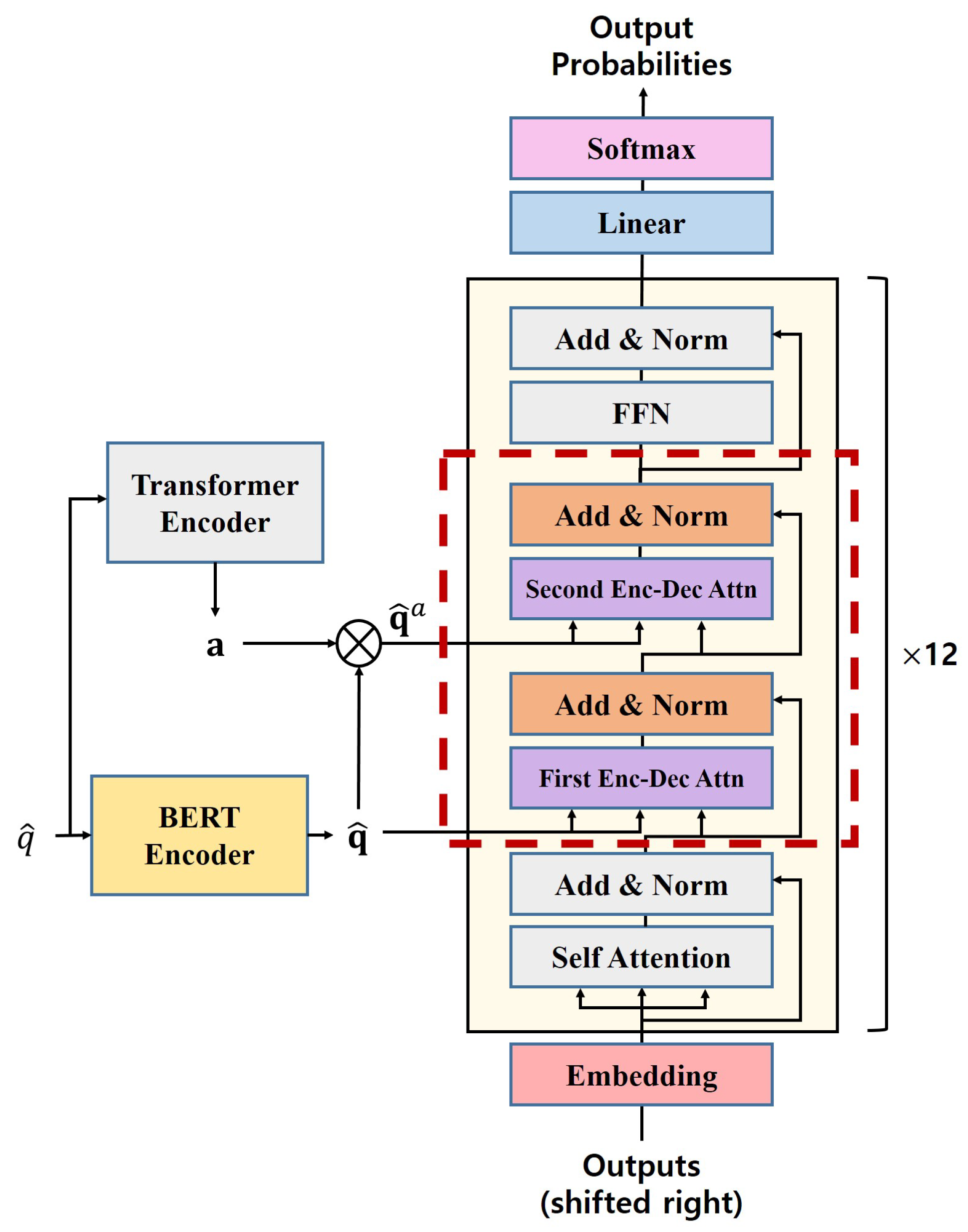

4. Response Generation with Response Weight

5. Experiments

5.1. Experimental Settings

5.2. Response Weight

5.3. Response Generator

5.4. Case Study

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

| Query (Q) | Response (R) |
|---|---|
| Orange or gold, pick one | Orange. Easy choice |
| I’d love if you gave it a shot! | All right. I’ll give it a try. Do you have it somewhere I can download it? |
| I ordered march 11th and I haven’t received anything yet. | Ordered same day, still waiting on mine as well. |

| Dataset | # Train | # Valid | # Test |
|---|---|---|---|
| DailyDialog | 45,337 | 3,851 | - |
| MAUI Twitter | - | - | 500 |
| | 1,352,961 | 40,000 | 40,000 |

| Model | P | R | F1 |
|---|---|---|---|
| MAUI-df | 53.97 | 53.15 | 53.56 |
| MAUI-wv | 55.80 | 54.45 | 55.12 |
| MAUI-br | 71.95 | 75.01 | 73.45 |
| MAUI-brwv | 72.05 | 75.16 | 73.57 |
| MAUI-out | 55.54 | 48.74 | 51.92 |
| RW | 55.75 | 55.57 | 55.66 |
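
The F1 column in the table above can be cross-checked against the P and R columns. The following is a minimal sketch, assuming F1 is the usual harmonic mean of precision and recall; the helper names `f1_score` and `check_rows` are illustrative and do not come from the article. Under that assumption, every reported F1 value agrees with the recomputed one to within rounding.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, on the same scale as the inputs."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (P, R, F1) triples copied from the table above.
reported = {
    "MAUI-df": (53.97, 53.15, 53.56),
    "MAUI-wv": (55.80, 54.45, 55.12),
    "MAUI-br": (71.95, 75.01, 73.45),
    "MAUI-brwv": (72.05, 75.16, 73.57),
    "MAUI-out": (55.54, 48.74, 51.92),
    "RW": (55.75, 55.57, 55.66),
}


def check_rows(rows: dict, tol: float = 0.01) -> dict:
    """Return, per model, whether the reported F1 matches the recomputed one."""
    return {
        name: abs(f1_score(p, r) - f1) <= tol
        for name, (p, r, f1) in rows.items()
    }


if __name__ == "__main__":
    for name, ok in check_rows(reported).items():
        print(f"{name}: {'consistent' if ok else 'mismatch'}")
```

The tolerance of 0.01 is an assumption chosen to absorb two-decimal rounding in the reported values.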

| Model | Embedding: Embavg | Embedding: Embex | Overlap: Bleu-2 | Overlap: Bleu-3 | Diversity: Dist-1 | Diversity: Dist-2 | Inform: Entropy | R |
|---|---|---|---|---|---|---|---|---|
| TS2S | 0.764 | 0.845 | 1.50 | 0.44 | 1.47 | 14.17 | 8.47 | 0.87 |
| COPY | 0.868 | 0.841 | 5.43 | 2.26 | 1.73 | 8.33 | 7.87 | 1.09 |
| GenDS | 0.876 | 0.851 | 4.68 | 1.79 | 0.74 | 3.97 | 7.73 | 0.89 |
| CCM | 0.871 | 0.841 | 5.18 | 2.01 | 1.05 | 5.29 | 7.73 | 0.96 |
| ConKADI | 0.867 | 0.852 | 3.53 | 1.27 | 2.77 | 18.78 | 8.10 | 1.19 |
| RGRW | 0.794 | 0.870 | 1.70 | 0.49 | 3.24 | 25.04 | 11.42 | 1.26 |
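
For readers unfamiliar with the Dist-1 and Dist-2 columns, the sketch below shows the commonly used distinct-n diversity measure: the number of unique n-grams divided by the total number of n-grams across all generated responses. It is an illustration under assumptions, not the article's evaluation script; whitespace-tokenized input, pooling over the whole response set, and the function names `ngrams` and `distinct_n` are all hypothetical choices made here.

```python
from typing import List, Sequence, Tuple


def ngrams(tokens: Sequence[str], n: int) -> List[Tuple[str, ...]]:
    """All contiguous n-grams of a token sequence (empty if it is too short)."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def distinct_n(responses: Sequence[Sequence[str]], n: int) -> float:
    """Ratio of unique n-grams to total n-grams, pooled over all responses."""
    pooled = [gram for tokens in responses for gram in ngrams(tokens, n)]
    if not pooled:
        return 0.0
    return len(set(pooled)) / len(pooled)


if __name__ == "__main__":
    # Two toy responses that differ only in the last word.
    toy = [
        "i think it is good".split(),
        "i think it is fine".split(),
    ]
    print(distinct_n(toy, 1))  # 6 unique unigrams out of 10
    print(distinct_n(toy, 2))  # 5 unique bigrams out of 8
```

A generator that keeps producing the same generic reply scores low on this ratio, because repeated n-grams add to the denominator but not to the numerator.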

| Model | Bleu-2 | Dist-2 | Entropy | R |
|---|---|---|---|---|
| RGRW | 1.70 | 25.04 | 11.42 | 1.26 |
| - EDA | 1.59 | 25.60 | 11.45 | 1.23 |
| - RW | 1.52 | 20.45 | 8.96 | 1.08 |

| Query & Attentions |  |
|---|---|
| Golden Response | All right. I’ll give it a try. Do you have it somewhere I can download it? |
| W/o RW | It would be nice to have them. |
| W/ RW | I’ll give it a shot and see what happen! |

| Query & Attentions |  |
|---|---|
| Golden Response | Too bad. They taste awful. |
| W/o RW | I think it’s a good idea. |
| W/ RW | Oh yeah! I’ve always wanted to try them! They taste great! |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, S.-E.; Lim, Y.-S.; Park, S.-B. Strong Influence of Responses in Training Dialogue Response Generator. Appl. Sci. 2021, 11, 7415. https://doi.org/10.3390/app11167415
