1. Introduction

Excavation is a major task in the construction sector. It covers earthmoving work such as creating building foundations, land grading, pile driving, and highway maintenance. Therefore, excavators are considered essential equipment on most construction sites. With the increasing number of fatal accidents on construction sites, researchers have conducted numerous studies to address safety concerns. Excavation sites are dynamic because they involve interactions between different objects such as machines and workers, which increases the chance of collisions due to poor visibility, blind spots, and operator carelessness. Thus, there is a need for more efficient and advanced safety management to tackle such high-risk accidents.

To fully achieve autonomous operations, advanced safety systems are required to protect the workers and construction infrastructure by gathering information from machine dynamics (proprioceptive) and the external environment (exteroceptive) [1]. Various safety management systems have been proposed for construction equipment to detect dangerous proximities, alert operators and workers about them, and identify blind spots by using sensing data obtained from RFID (radio frequency identification) techniques, GPS (global positioning system), cameras, and laser scanners [2].

During the past decade, RFID (radio frequency identification) has been the most widely used sensor for safety management, followed by wireless, camera-based, and UWB (ultra-wide-band) sensors [3]. The RTLS (real-time location system) developed by Lee et al. [4] was intended to enhance worker safety using RFID tags. This method requires tags to be attached to the target objects, so objects without tags are left out. Moreover, the study does not provide a strategy to prevent collisions. Another RFID-based method, proposed in [5], utilizes RFID tags for identifying and tracking material locations on construction sites. This approach allows a supervisor to read the tags automatically. However, the reader must move within a specific speed range to achieve high accuracy. Besides, the method requires the installation of multiple labels and receivers, which limits its practicality.

A computer vision-based methodology was proposed by Seo et al. [6] for construction safety and health monitoring. However, the study does not determine unsafe conditions and acts on construction sites. Magnetic-field proximity detection and alarming techniques were proposed by Teizer [7]. Since the experiments were carried out under limited test conditions (i.e., a flat and unobstructed outdoor surface with only one type of vehicle, a loader), further testing is required before the developed safety system can be applied in the construction sector.

Neito et al. [8] developed a safety system for preventing collisions between workers and machines based on an integration of wireless networks, GPS, and 3D graphics. However, GPS can lose its accuracy in confined and indoor spaces. Ref. [9] developed a motion capture and recognition framework for safety management, which supports behavior-based monitoring of unsafe actions. In that study, the framework collects site videos and motion templates, extracts a 3D skeleton from the videos, and detects unsafe actions using the skeleton and motion templates. 3D sensing devices such as stereo-vision and depth cameras have been widely utilized to provide distance information from the given 3D spatial information. However, these sensing devices have a limited detection range and are sensitive to lighting, and thus they are not ideally suited for construction safety management.

Kim et al. [10] considered the swing velocity and braking time of swing motion to propose collision avoidance algorithms. A wearable device using augmented reality was proposed in [11] to identify the orientation and distance of hazards relative to workers. Since the alarms are based on the worker's vision, hazards outside the field of view may not be detected.

An object detection algorithm using a VLP-16 LiDAR was developed by Alireza et al. [12], which used the voxel-based plane algorithm. A pedestrian detection approach was presented in [13] that analyzes the temporal changes of grids in an occupancy map. However, variations in the size of the detected object in the map may negatively influence the performance of the tracking filter. Although the study has this limitation and its application is not construction equipment, it provides a useful reference for tracking pedestrians, who can be regarded as equivalent to workers around construction machines.

Fuerstenberg [14] proposed a laser scanner-based method to detect and track pedestrians. The limitation of this methodology is that its classification criteria do not cover all objects and may not be suitable for tracking vehicles or cyclists. A method utilizing video frames was proposed in [15] to detect workers and mobile machines, including construction equipment, and to predict their motions by applying a Kalman filter. The limitation of this study is that the proposed tracking filter works with linear states only. A multi-object tracking and management system was proposed in [16], which integrates the unscented Kalman filter (UKF) with joint probabilistic data association (JPDA) and the interacting multiple model filter (IMM). A safety system that can generate warnings to avoid collisions with an excavator was presented in [17], using 2D laser scanners and new safety indices. However, 2D laser scanners cannot resolve the vertical position of the detected objects. Another restriction of this method is that this type of laser scanner has a 270-degree FOV (field of view), and hence two scanners are needed to achieve full 360-degree coverage. Fusing the data of two laser scanners makes the system expensive and complicated.

The majority of prior studies in the area of safety management focused on proximity detection and on the current states of the detected objects, which may not be comprehensive in capturing potential safety risks. For example, the proximity between construction equipment and workers alone may not indicate potentially unsafe conditions correctly if a manipulator or vehicle remains stationary (i.e., does not move) or moves rapidly despite sufficient safe margins. Therefore, quantitative and predictive metrics are necessary to assess unsafe conditions and potential risks in fully automated excavators.

To tackle the above issue, this study proposes 3D LiDAR-based safety algorithms for autonomous excavators that can effectively detect, track, and predict object motion around the excavator, and then evaluate the severity of collision risks using the defined risk metric.

Section 1 describes the introduction and literature review of state-of-the-art methodologies. Section 2 briefly explains a framework of the proposed methodologies that covers object tracking and safety evaluation. Section 3 presents the sensory data processing and safety index calculations. Section 4 provides experimental results and analysis, and the paper is concluded in Section 5.

3. Data Processing and Kinematic Analysis

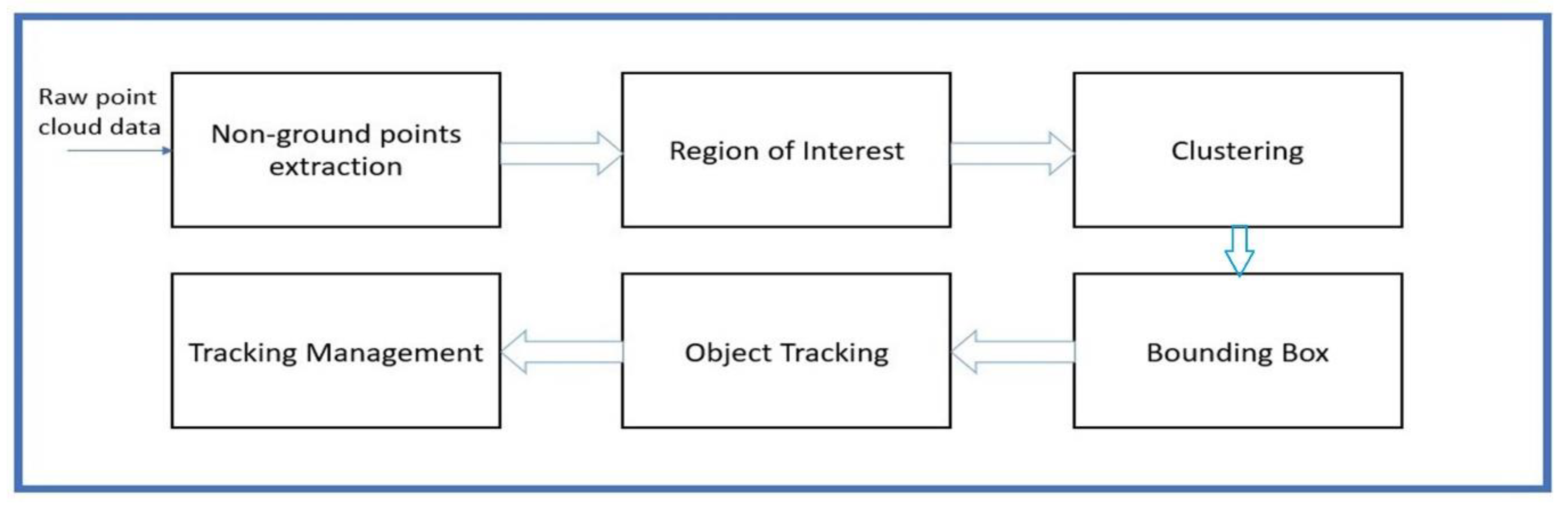

The extraction and processing of sensor data are described in this section, as shown in Figure 2. The VLP-16 LiDAR [18] (VLP-16, Velodyne Lidar, San Jose, CA, US, 2020) was selected as the sensor for this research as it provides a horizontal field of view of 360 degrees and a vertical field of view of 30 degrees. It also generates approximately 300,000 points per second, which allows for fast and high-resolution detection. In addition, each point in the LiDAR point cloud includes the x, y, and z coordinates that can be used to identify the position of the detected objects. In the study, MATLAB (R2020a, MathWorks, Natick, MA, US, 2020) software was used to store, process, and visualize the point cloud data. The data processing for object tracking and track management was carried out through the steps discussed below.

3.1. Extraction of Ground Point Cloud

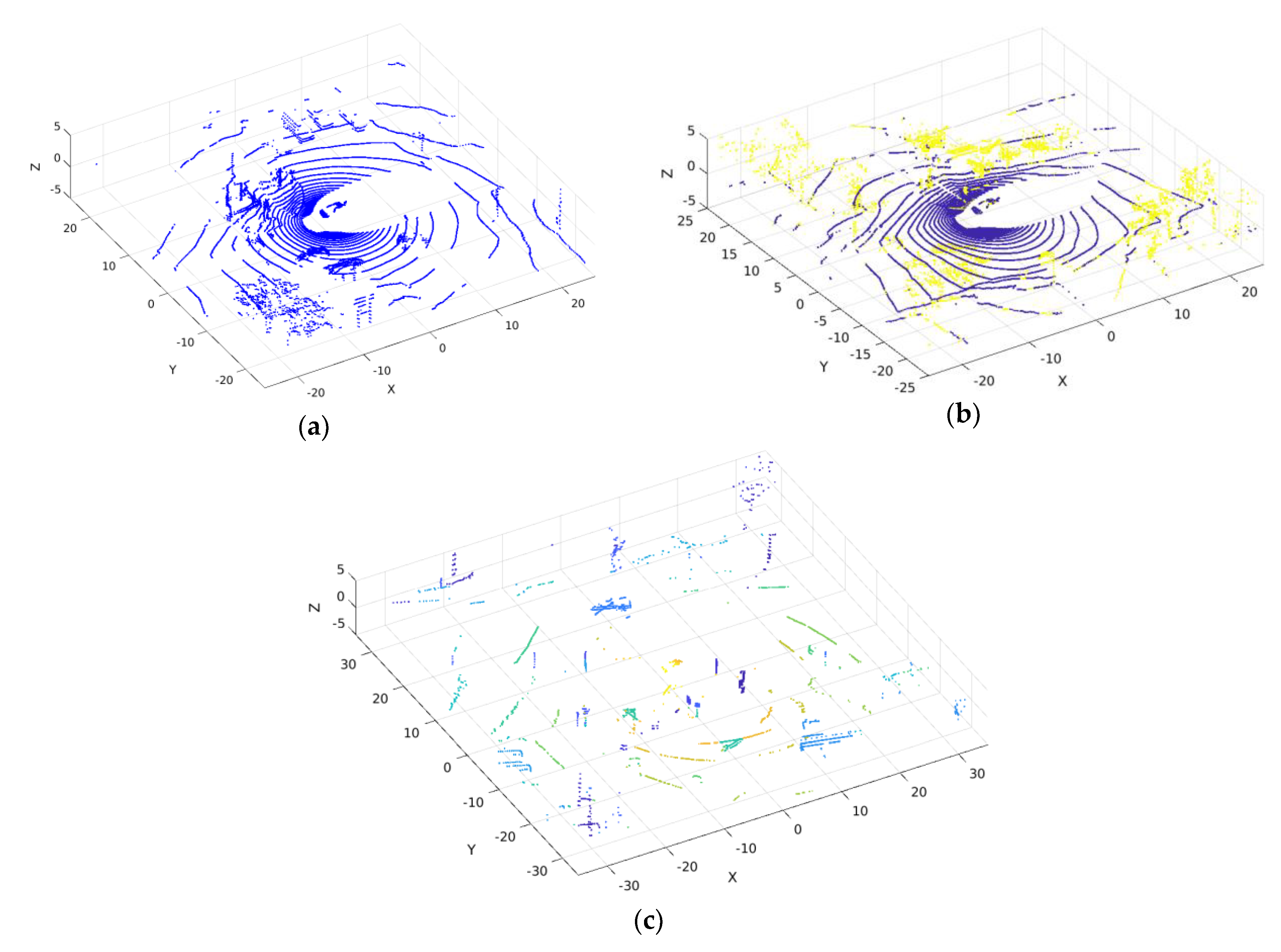

The raw point cloud data contain many points, comprising both ground and non-ground points (Figure 3a). Since our focus is on the detection of moving objects around the excavator, the points representing the ground plane (earth surface) first need to be eliminated from the point cloud. This is because the moving objects belong to the non-ground points, and the ground plane only contributes unwanted points during object detection. Therefore, the removal of these unwanted points is an essential step, and it also helps reduce the computational load by discarding them from the large quantity of unprocessed raw LiDAR measurements.

In this research, the point cloud data representing the ground plane were extracted by adopting the MSAC (M-estimator sample consensus) algorithm, a variant of RANSAC (random sample consensus) [19]. This is an iterative method to estimate a model from a given dataset containing outliers. To extract the ground points, a plane model was fitted to the raw point cloud by the MSAC algorithm. RANSAC-type algorithms repeatedly select a random subset of the data, fit a model to it, and identify the points that do not agree with the model as outliers.
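The plane-fitting idea can be illustrated with a minimal Python sketch. It is not the MATLAB implementation used in the study: the function name `fit_ground_plane_msac`, the distance threshold `max_dist`, and the iteration count are assumptions chosen for illustration. The sketch samples three points, fits a plane through them, and scores the plane with a truncated squared-distance cost, which is the feature that distinguishes MSAC from plain inlier counting.

```python
import numpy as np

def fit_ground_plane_msac(points, max_dist=0.2, n_iter=200, seed=0):
    """Fit a plane n.p + d = 0 to an (N, 3) cloud with an MSAC-style cost.

    Returns (normal, d, inlier_mask), or None if every sample was degenerate.
    max_dist and n_iter are illustrative values, not taken from the paper.
    """
    rng = np.random.default_rng(seed)
    best_cost, best = np.inf, None
    for _ in range(n_iter):
        # Minimal sample: three distinct points define a candidate plane.
        sample = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-9:  # collinear sample, no unique plane
            continue
        normal = normal / norm
        d = -normal @ sample[0]
        dist = np.abs(points @ normal + d)
        # MSAC cost: inliers pay their squared residual, outliers a fixed penalty.
        cost = np.sum(np.minimum(dist**2, max_dist**2))
        if cost < best_cost:
            best_cost, best = cost, (normal, d, dist <= max_dist)
    return best
```

Points flagged by the returned mask would be treated as ground and removed, leaving the non-ground points for the later steps.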

The point clouds representing non-ground points are shown in Figure 3b. After detecting the non-ground points, the next step was to cluster them and detect the obstacles (Figure 3c), which is discussed in the following sections.

3.2. Obstacle Points

After the removal of ground points, the remaining point clouds were formed into clusters. However, forming these clusters is still computationally expensive because the LiDAR has a long detection range. To solve this problem, a region of interest (ROI) was defined by a fixed radius. The points inside the ROI were considered obstacle points [20]. Clustering was carried out with the obstacle points.
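A radius-based ROI filter can be sketched in a few lines of Python. The function name `select_roi` is hypothetical, and the sketch assumes that the radius is measured horizontally from the sensor origin, which the text does not state explicitly.

```python
import numpy as np

def select_roi(points, radius):
    """Keep the points of an (N, 3) cloud whose horizontal distance
    from the sensor origin is at most radius (assumed ROI definition)."""
    horizontal = np.hypot(points[:, 0], points[:, 1])
    return points[horizontal <= radius]
```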

3.3. Clustering and Object Detection

The Euclidean distance used for the clustering process was set between 0.4 m (minimum) and 2 m (maximum), which correspond to the average chest width of a human (worker) and the average vehicle size, respectively. Only the objects detected within this range were considered for clustering. In this way, objects smaller than a human or bigger than a vehicle were ignored. As the next step, a bounding box was fitted to each cluster, which indicates a detected object. The bounding box provides the center point of each detected object in terms of x, y, and z coordinates. This center point was used by the tracking filter to estimate and predict the object states.
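The clustering and bounding-box step can be sketched as follows. This is an illustrative Python version under stated assumptions, not the authors' MATLAB code: the names `euclidean_clusters` and `detect_objects`, the neighbor distance of 0.3 m, the use of axis-aligned boxes, and the choice to compare the larger horizontal box dimension with the 0.4 m to 2 m range are all assumptions.

```python
import numpy as np

def euclidean_clusters(points, neighbor_dist=0.3):
    """Label points by region growing: two points share a cluster when a chain
    of neighbors closer than neighbor_dist (assumed value) links them."""
    labels = np.full(len(points), -1)
    current = 0
    for seed in range(len(points)):
        if labels[seed] != -1:
            continue
        labels[seed] = current
        stack = [seed]
        while stack:
            i = stack.pop()
            dist = np.linalg.norm(points - points[i], axis=1)
            near = np.where((labels == -1) & (dist <= neighbor_dist))[0]
            labels[near] = current
            stack.extend(near.tolist())
        current += 1
    return labels

def detect_objects(points, min_size=0.4, max_size=2.0, neighbor_dist=0.3):
    """Return axis-aligned bounding boxes of clusters whose larger horizontal
    dimension lies within [min_size, max_size] metres."""
    if len(points) == 0:
        return []
    labels = euclidean_clusters(points, neighbor_dist)
    boxes = []
    for lab in range(labels.max() + 1):
        cluster = points[labels == lab]
        lo, hi = cluster.min(axis=0), cluster.max(axis=0)
        size = hi - lo
        if min_size <= size[:2].max() <= max_size:
            boxes.append({"center": (lo + hi) / 2.0, "size": size})
    return boxes
```

The `center` entry of each box plays the role of the center point that is passed to the tracking filter.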

3.4. Object Tracking and Management

To track multiple objects around the excavator, we integrated several filters into a tracking filter composed of IMM (interacting multiple model), UKF (unscented Kalman filter), and JPDA (joint probabilistic data association) techniques. The reason for choosing the integrated filter was to handle multiple models representing different motion behaviors (IMM), estimate their non-linear states (UKF), and provide a track management system that allows for multi-target tracking in clutter at the data association stage (JPDA). The IMM-UKF-JPDA approach is computationally effective for recursive estimation of the states and mode probabilities of targets. For the implementation of this filter, we consider a model set with $r = 2$, meaning that $M_1$, the constant velocity (CV) model, and $M_2$, the constant turn (CT) model, are considered. These two models share a common state vector consisting of the bounding box center points in the x, y, and z directions, their corresponding velocities, the yaw angle, the turn rate, and the length, width, and height of the bounding box. The discrete-time state equations of the CV and CT models both involve the sampling time and the control input of the excavator, $u_k$, and both models include white Gaussian noise with zero mean and its own covariance matrix. Such models are common for maneuvering multi-object tracking and are extensions of the models found in [21]. The two models share a common measurement model, in which the measurement noise has its own covariance matrix.
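To illustrate the two motion behaviors, the following Python sketch implements textbook planar forms of the constant velocity and constant turn transitions. It is a simplification under stated assumptions: the state is reduced to `[x, y, vx, vy]`, the turn rate `omega` is passed as a parameter rather than carried in the state, and process noise, the z coordinate, the yaw angle, and the box dimensions of the full state vector are omitted. The function names are hypothetical.

```python
import numpy as np

def cv_step(state, dt):
    """Constant velocity transition for state = [x, y, vx, vy]."""
    x, y, vx, vy = state
    return np.array([x + vx * dt, y + vy * dt, vx, vy])

def ct_step(state, omega, dt):
    """Constant turn transition with turn rate omega in rad/s.

    The velocity vector rotates by omega*dt while its magnitude is preserved.
    """
    x, y, vx, vy = state
    if abs(omega) < 1e-9:  # straight-line limit avoids division by zero
        return cv_step(state, dt)
    s, c = np.sin(omega * dt), np.cos(omega * dt)
    return np.array([
        x + (s * vx - (1.0 - c) * vy) / omega,
        y + ((1.0 - c) * vx + s * vy) / omega,
        c * vx - s * vy,
        s * vx + c * vy,
    ])
```

A walking worker moving in a straight line is described well by `cv_step`, whereas a worker circling the machine is better described by `ct_step`, which is why an IMM filter carries both models.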

The next step is to estimate the probability that the measurements follow the CV or the CT model. The switching of the system between the models is governed by the Markovian model transition probability matrix in Equation (4), whose elements represent the probabilities of mode transition. Several studies have addressed the integration of the above filters, but this paper follows the five-step process introduced in [21,22]. These steps include (1) interaction, (2) state prediction and measurement validation, (3) data association and tracking management, (4) mode probability update, and (5) combination. Each step is described as follows.

3.4.1. Interaction

In the interaction step, the initial state and covariance of each model filter are obtained by probabilistically mixing the states and covariances of the previous time step.

The conditional mode probabilities describe the system's transition from mode $i$ (previous time step) to mode $j$ (current time step). They depend on the a priori mode probabilities of the current frame. As seen in Equation (6), the conditional mode probabilities of the current frame can be expressed using the mode probabilities of the previous frame and the elements of the matrix from Equation (4).
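The interaction step can be sketched with the standard IMM mixing formulas. The Python code below is a generic textbook version rather than a reproduction of the paper's equations; the function name `imm_interaction` and the array layout (`transition[i, j]` is the probability of switching from mode i to mode j) are assumptions.

```python
import numpy as np

def imm_interaction(states, covs, mode_probs, transition):
    """Standard IMM mixing.

    states: (r, n) per-model states, covs: (r, n, n) per-model covariances,
    mode_probs: (r,) previous mode probabilities, transition: (r, r) Markov matrix.
    Returns predicted mode probabilities, mixing weights, mixed states, mixed covariances.
    """
    states = np.asarray(states, dtype=float)
    covs = np.asarray(covs, dtype=float)
    mu = np.asarray(mode_probs, dtype=float)
    p = np.asarray(transition, dtype=float)
    predicted = p.T @ mu                          # a priori probability of each mode j
    mix = (p * mu[:, None]) / predicted[None, :]  # mix[i, j] = P(mode i before | mode j now)
    mixed_states = mix.T @ states
    mixed_covs = np.zeros_like(covs)
    for j in range(len(mu)):
        for i in range(len(mu)):
            d = states[i] - mixed_states[j]
            # Spread-of-means term accounts for disagreement between models.
            mixed_covs[j] += mix[i, j] * (covs[i] + np.outer(d, d))
    return predicted, mix, mixed_states, mixed_covs
```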

3.4.2. State Prediction and Measurement Validation

The next step is to predict the current states using the stochastic non-linear model. To deal with nonlinearities, the UKF is implemented to predict the states and covariances.

Sigma points are selected with a square-root decomposition of the mixed initial covariance of each filter. Each sigma point has two associated scalar weights, which are calculated from a set of scalar parameters. As seen in Equation (7), the sigma points are propagated using the transition function $f$, and the weighted sigma points are used to calculate the predicted state and covariance. Similarly, the sigma points are projected by the observation function $h$ in Equation (8), and the weighted sigma points are then used to generate the predicted measurement and the predicted measurement covariance.
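The sigma-point construction and weighting can be illustrated with the widely used scaled unscented transform. The Python sketch below is a generic version and may differ in detail from the paper's equations; the parameter names `alpha`, `beta`, and `kappa` and their default values are assumptions chosen for numerical convenience.

```python
import numpy as np

def sigma_points(mean, cov, alpha=1.0, beta=2.0, kappa=0.0):
    """Return 2n+1 sigma points with mean weights wm and covariance weights wc."""
    mean = np.asarray(mean, dtype=float)
    n = len(mean)
    lam = alpha**2 * (n + kappa) - n
    # Cholesky factor L satisfies L @ L.T = (n + lam) * cov; its columns are the offsets.
    root = np.linalg.cholesky((n + lam) * np.asarray(cov, dtype=float))
    pts = np.vstack([mean, mean + root.T, mean - root.T])
    wm = np.full(2 * n + 1, 0.5 / (n + lam))
    wc = wm.copy()
    wm[0] = lam / (n + lam)
    wc[0] = wm[0] + (1.0 - alpha**2 + beta)
    return pts, wm, wc

def unscented_transform(pts, wm, wc, func):
    """Propagate sigma points through func and recover the weighted mean and covariance."""
    prop = np.array([func(p) for p in pts])
    mean = wm @ prop
    diff = prop - mean
    cov = (wc[:, None] * diff).T @ diff
    return mean, cov
```

Calling `unscented_transform` with a motion model gives the predicted state and covariance (before process noise is added), and calling it with an observation function gives the predicted measurement and its covariance (before measurement noise is added).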

A measurement is regarded as valid if it falls within an elliptical validation gate. The validation gate of an object is the same for all the models in the model set. Its definition involves the index of the model in the model set, the gate threshold, and the resulting set of validated measurements.
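An elliptical gate of this kind is commonly implemented as a threshold on the squared Mahalanobis distance between a measurement and the predicted measurement. The Python sketch below shows that generic test; the function name `validate_measurements` is hypothetical, and the gate value used in the example is only an illustration (9.21 is the 99% quantile of the chi-square distribution with two degrees of freedom).

```python
import numpy as np

def validate_measurements(measurements, z_pred, innovation_cov, gate):
    """Return a boolean mask of measurements inside the gate and their
    squared Mahalanobis distances to the predicted measurement z_pred."""
    diffs = np.asarray(measurements, dtype=float) - np.asarray(z_pred, dtype=float)
    s_inv = np.linalg.inv(np.asarray(innovation_cov, dtype=float))
    d2 = np.einsum("ij,ij->i", diffs @ s_inv, diffs)
    return d2 <= gate, d2

# Example: three detections tested against one predicted measurement.
mask, d2 = validate_measurements([[1.0, 0.0], [0.0, 4.0], [3.0, 3.0]],
                                 [0.0, 0.0], np.diag([1.0, 4.0]), gate=9.21)
```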

3.4.3. Tracking Management and Data Association

Data association is the step of labeling each detected object with a unique ID and associating with each label the tracking information obtained from the LiDAR sensor, which includes the estimated and predicted position and velocity of the detected object. The data association in the tracking filter was handled through JPDA [23], which follows the procedure of initialization, confirmation, correction, prediction, and deletion of tracks. Among various data association algorithms, JPDA enables the calculation of the association probabilities of all possible targets for each observation (see Equations (14)–(17)).

A newly detected track was given the ‘tentative’ label through the data association process; it was relabeled as ‘confirmed’ once it had been detected often enough; and the identical ID was kept if the same track was detected again. The logic flow [24] of JPDA starts with the division of detections from multiple sensors into multiple groups (however, a single LiDAR was used in this study). For each sensor, the distances from detections to existing tracks and a validation matrix were calculated in turn. After the calculation of the validation matrix, tracks and detections were separated into clusters.

The following steps outline how to update each cluster:

S1: Generation of feasible joint events.

S2: Calculation of the posteriori probability of each joint event.

S3: Calculation of the marginal probability of each detection–track pair.

S4: Reporting of weak detections that are within the validation gate of at least one track.

S5: Unassigned and weak detections get new tracks.

S6: Tracks are deleted based on the defined number of scans without detection.

S7: All tracks are predicted to the latest time value.

To formulate JPDA, we consider the states of the objects and the measurements at a given time step. Here, the state vector contains the position and velocity of the detected object, and the measurements are the cluttered and noisy detected positions observed by a sensor.

The probability of the data association for the measurement index $i \in [M]_0 \triangleq \{0, 1, \ldots, M\}$ is defined as in [24]. It depends on the detection probability, the density of false detections, the predicted state of the object, the innovation covariance matrix of the Kalman filter, and the normal distribution. A linear Gaussian model is assumed, and the index value 0 denotes a missed or dummy detection.

The joint data association space, Θ, defined in Equation (15), comprises all possible combinations of measurement-to-object assignments such that each measurement is assigned to at most one object (A1) and each object is uniquely assigned to a measurement (A2). Each element of Θ is a binary vector that represents one possible solution to the data association problem, and the size of Θ is the total number of joint assignments.

Then, the marginalized JPDA probability over the joint data association space can be calculated. As the last step, the normalized marginal probability is used to update the state of the detected object.
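For a small number of tracks and detections, the marginal association probabilities can be computed by brute-force enumeration of the feasible joint events. The Python sketch below follows the generic structure described above and is not the paper's exact formulation: the function names, the detection probability `p_d`, and the false-detection density `clutter_density` are assumed values, and index 0 stands for a missed detection.

```python
import itertools
import numpy as np

def gaussian_pdf(z, mean, cov):
    """Multivariate normal density evaluated at z."""
    d = np.asarray(z, dtype=float) - np.asarray(mean, dtype=float)
    k = len(d)
    norm = np.sqrt((2.0 * np.pi) ** k * np.linalg.det(cov))
    return float(np.exp(-0.5 * d @ np.linalg.solve(cov, d)) / norm)

def jpda_marginals(z_preds, innovation_covs, measurements, p_d=0.9, clutter_density=0.1):
    """Return a (tracks, measurements + 1) matrix of marginal probabilities.

    Column 0 is the missed-detection hypothesis; column j > 0 is measurement j-1.
    """
    n_t, n_m = len(z_preds), len(measurements)
    like = np.zeros((n_t, n_m + 1))
    like[:, 0] = (1.0 - p_d) * clutter_density
    for t in range(n_t):
        for j in range(n_m):
            like[t, j + 1] = p_d * gaussian_pdf(measurements[j], z_preds[t], innovation_covs[t])
    marginals = np.zeros_like(like)
    # Enumerate joint events: one index per track, no measurement used twice.
    for event in itertools.product(range(n_m + 1), repeat=n_t):
        used = [j for j in event if j > 0]
        if len(used) != len(set(used)):
            continue
        weight = np.prod([like[t, j] for t, j in enumerate(event)])
        for t, j in enumerate(event):
            marginals[t, j] += weight
    return marginals / marginals.sum(axis=1, keepdims=True)
```

The enumeration grows exponentially with the number of tracks, which is one reason practical trackers first split tracks and detections into independent clusters.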

3.4.4. Mode Probability Update

The mode probabilities are updated in this step using the best-fitting measurements and the uniform mixture model likelihoods.

3.4.5. Combination

In the last step, the updated states of each filter are combined into the final states and covariances, weighted by the model probabilities of the tracks.
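The last two steps can be sketched with the standard IMM expressions. The Python code below is a generic illustration under stated assumptions: it uses plain per-model likelihoods rather than the uniform mixture likelihoods mentioned above, and the function names are hypothetical.

```python
import numpy as np

def update_mode_probs(predicted_mode_probs, likelihoods):
    """Bayes update: posterior mode probability is proportional to prior times likelihood."""
    w = np.asarray(predicted_mode_probs, dtype=float) * np.asarray(likelihoods, dtype=float)
    return w / w.sum()

def combine(states, covs, mode_probs):
    """Merge per-model estimates into a single state and covariance."""
    states = np.asarray(states, dtype=float)
    covs = np.asarray(covs, dtype=float)
    mu = np.asarray(mode_probs, dtype=float)
    x = mu @ states
    p = np.zeros_like(covs[0])
    for j in range(len(mu)):
        d = states[j] - x
        # Each model contributes its own covariance plus its offset from the merged mean.
        p += mu[j] * (covs[j] + np.outer(d, d))
    return x, p
```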

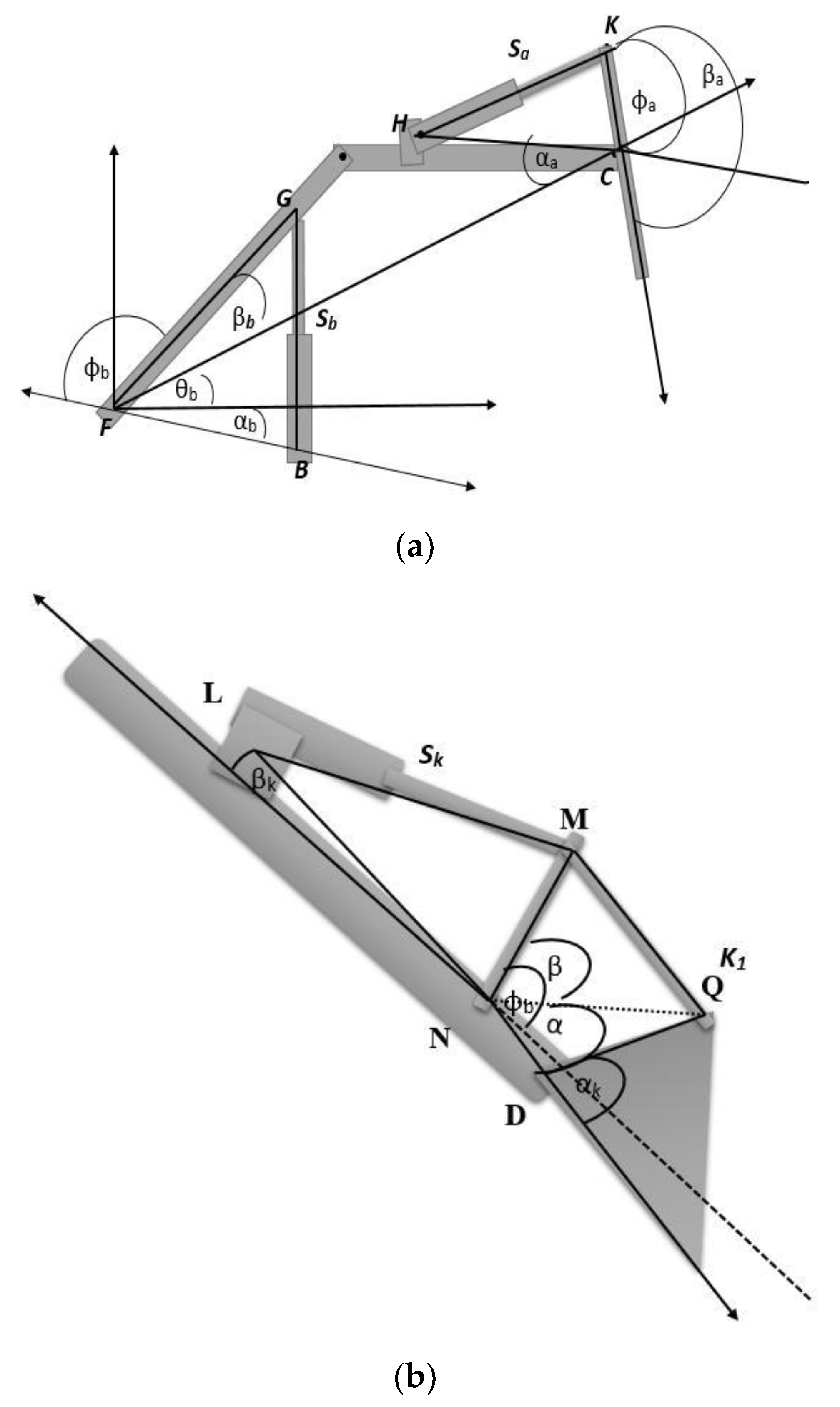

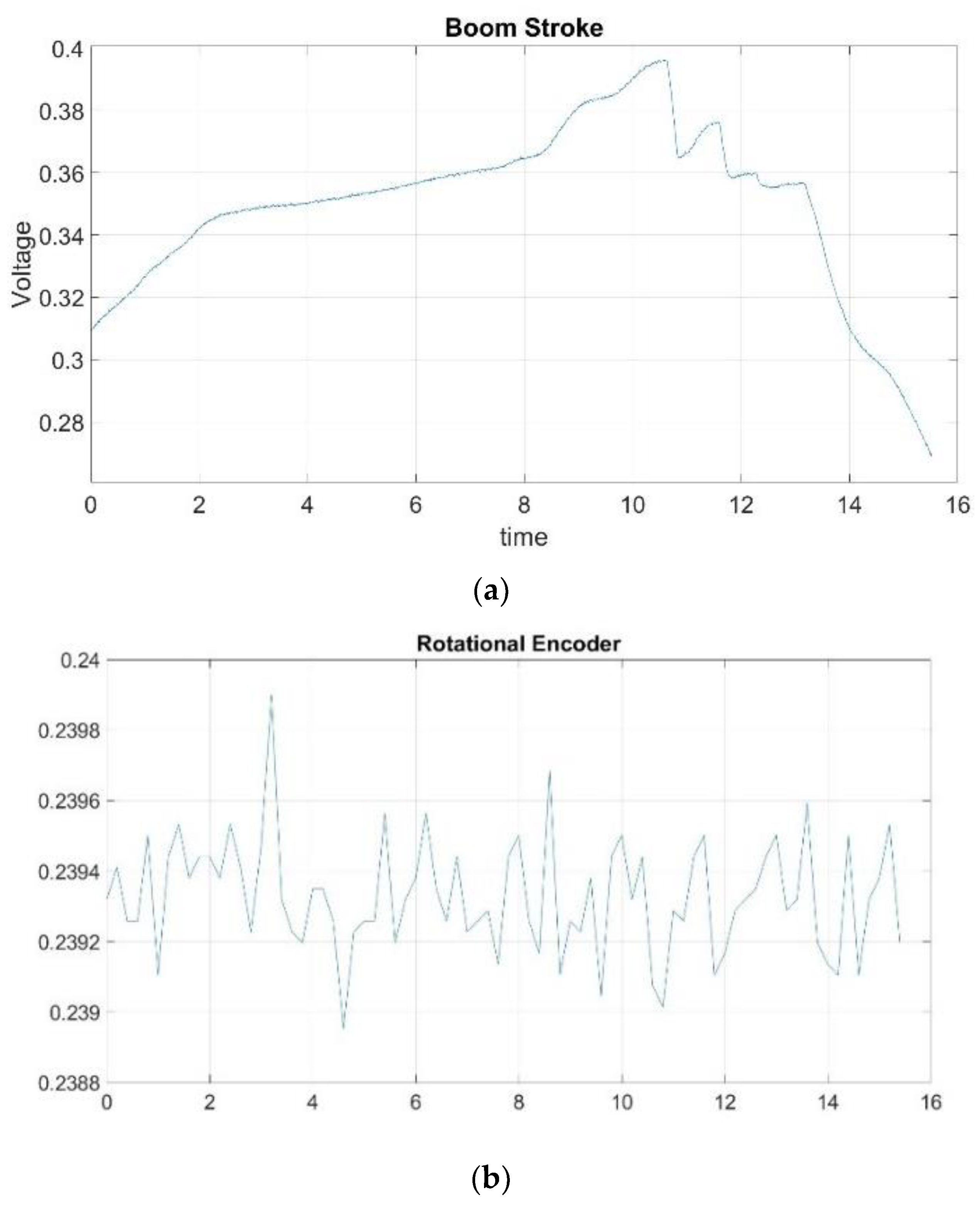

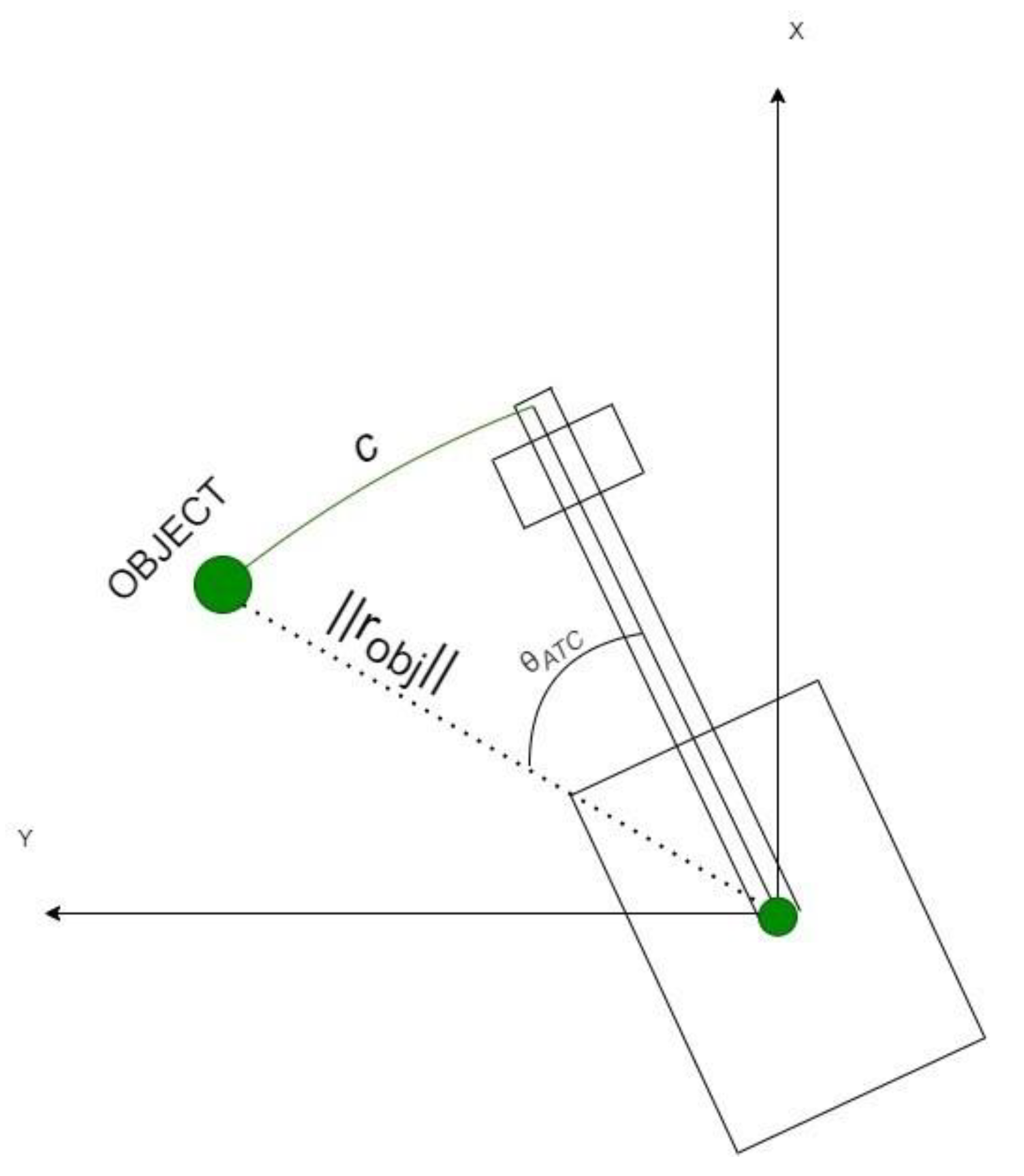

3.5. Calculation of the Excavator’s Working Area

Figure 4 illustrates the positions of the boom, arm, and bucket links. Using the measurements from the stroke sensors and the rotational encoder, the position of each link and the rotation of the main body were identified. The stroke sensors and the encoder were mounted on the links of the excavator and on its bottom, respectively, as mentioned in Section 5.

Figure 5 presents the measurement data from each sensor. Using the measured strokes and Equations (21)–(23), the angle of each link can be computed. Then, a kinematic analysis with the link angles enables the calculation of the predicted and actual working areas (see Figure 6).

In Figure 6, P1 denotes the arm joint, P2 represents the bucket joint, P3-1 is the midpoint of the bucket, and P3-2 is the bucket tip. The figure also shows the distance between the body center (origin of the defined coordinate system) and the boom link. The maximum working area is reached by the full extension of the links and is given by the bucket tip position measured from the origin.

The predicted working radius is the maximum value among the predicted longitudinal displacements, which are evaluated from the current longitudinal displacements of the main points p = 1, 2, 3-1, and 3-2 of the excavator over the prediction steps i = 1, 2, …, N. The predicted angle of each part was calculated using Equation (24), in which the noise in angular velocity follows a Gaussian distribution with zero mean and a given standard deviation, the noise is bounded by a maximum possible value, and time advances by one discrete-time increment per prediction step i.

By using Equations (24) and (25), the x (horizontal position) of the point p (P1~P3-2) is calculated as shown in Equation (26). The predicted horizontal displacements can be generated using Equation (27). The maximum radius for the predicted working area is chosen as the maximum value among the predicted displacements.

Figure 6 illustrates the horizontal position of p (left subfigure) and the predicted and maximum working areas (right subfigure). Note that the maximum working area is defined as the maximum working limit, and therefore it does not change, since it can be measured by extending the three links fully. The actual working area is determined by calculating the maximum value among all x components.
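The working-area calculation can be illustrated with a planar forward-kinematics sketch in Python. It does not reproduce Equations (21)–(27): the relative joint-angle convention, the constant angular rates over the prediction horizon, the omission of the noise term, the placement of the bucket midpoint at half the bucket length, and all names and numeric values are assumptions made for illustration.

```python
import numpy as np

def link_points_x(angles, lengths, base_offset=0.0):
    """Horizontal positions of P1 (arm joint), P2 (bucket joint),
    P3-1 (bucket midpoint), and P3-2 (bucket tip).

    angles: relative boom, arm, and bucket joint angles in rad (assumed convention).
    """
    th1, th2, th3 = angles
    l1, l2, l3 = lengths
    a1, a2, a3 = th1, th1 + th2, th1 + th2 + th3  # absolute link angles
    x1 = base_offset + l1 * np.cos(a1)
    x2 = x1 + l2 * np.cos(a2)
    x3_mid = x2 + 0.5 * l3 * np.cos(a3)
    x3_tip = x2 + l3 * np.cos(a3)
    return np.array([x1, x2, x3_mid, x3_tip])

def working_radii(angles, rates, lengths, base_offset=0.0, dt=0.1, n_steps=10):
    """Return the actual, predicted, and maximum working radii.

    The prediction assumes constant angular rates over n_steps increments of dt.
    """
    angles = np.asarray(angles, dtype=float)
    rates = np.asarray(rates, dtype=float)
    actual = link_points_x(angles, lengths, base_offset).max()
    predicted = max(
        link_points_x(angles + rates * i * dt, lengths, base_offset).max()
        for i in range(1, n_steps + 1)
    )
    maximum = base_offset + sum(lengths)  # all three links fully extended
    return actual, predicted, maximum
```

With zero angular rates the predicted radius equals the actual one, and with all joint angles at zero both coincide with the maximum working radius.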

5. Experimental Results

A LiDAR sensor (Velodyne VLP-16), shown in Figure 9a, was used to detect objects around the excavator during experimental tests. Data obtained from the LiDAR were post-processed with MATLAB, whose libraries also allow real-time processing.

The algorithms were tested on the autonomous mini excavator at AVEC lab (Ontario Tech University) shown in Figure 9b, which was modified from an existing manual-type excavator (RMD 500, Yantai Rima Machinery, Yantai, SD, China, 2020) by implementing a HW controller (dSPACE), valve blocks, and several types of sensors.

The developed test platform comprised three subsystems, i.e., hydraulic, electronic, and mechanical systems. The hydraulic subsystem consisted of electro-hydraulic proportional valves (EHPVs), directional control valves (DCVs), a hydraulic reservoir, hydraulic actuators, and a hydraulic pump. The electronic subsystem was composed of a power supply, relay box, pressure sensors, LVDT stroke sensors (Figure 9c), and a rotary encoder (Figure 9d). The mechanical subsystem was made up of three links (boom, arm, and bucket) and the main body.

5.1. Experimental Test Scenario

Experiments were carried out in the presence of multiple static objects, as well as moving ones that were walking around the excavator to represent a collision scenario.

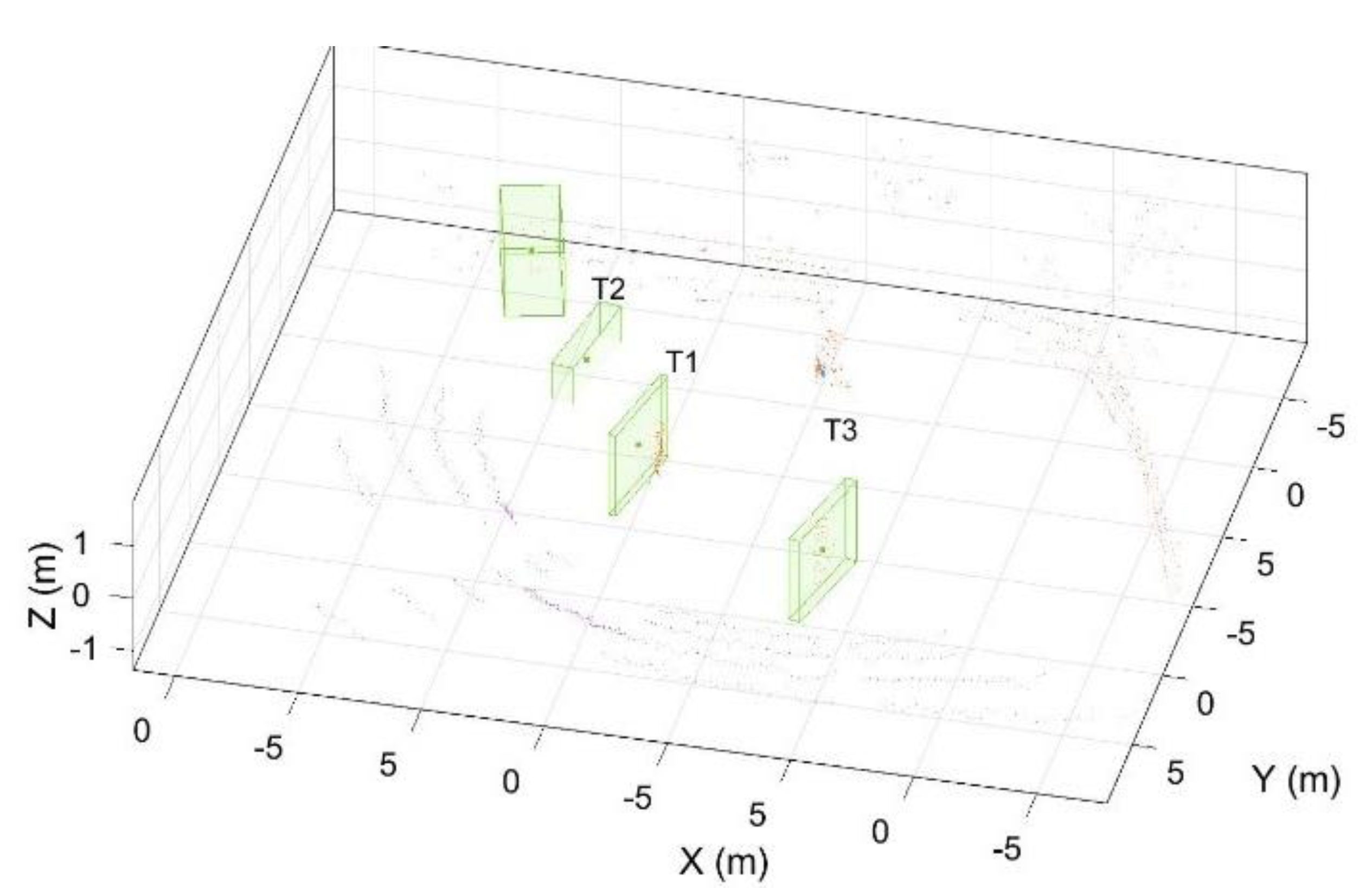

In Figure 10, one monitored static object, T2, and two moving objects, T1 and T3, are indicated. The objects present in the ROI carry their unique labels. If an object leaves the ROI, its corresponding label is deleted, and a new label is assigned when it returns to the ROI. Therefore, some labels in Figure 10 are missing because the objects that had left the ROI were no longer present in it. As an object enters the ROI and continues to move closer to the excavator, object detection, tracking, and safety evaluation are performed.

Note that some points in Figure 10 are not clustered at the current time frame because of the processing time. These points may be included in a cluster formed a few frames later.

5.2. Results

Figure 10 shows the raw point cloud data for detected objects. Note that every object has its unique label, which helps the track management to associate data with each detected object. This could also help in counting the number of objects around the excavator.

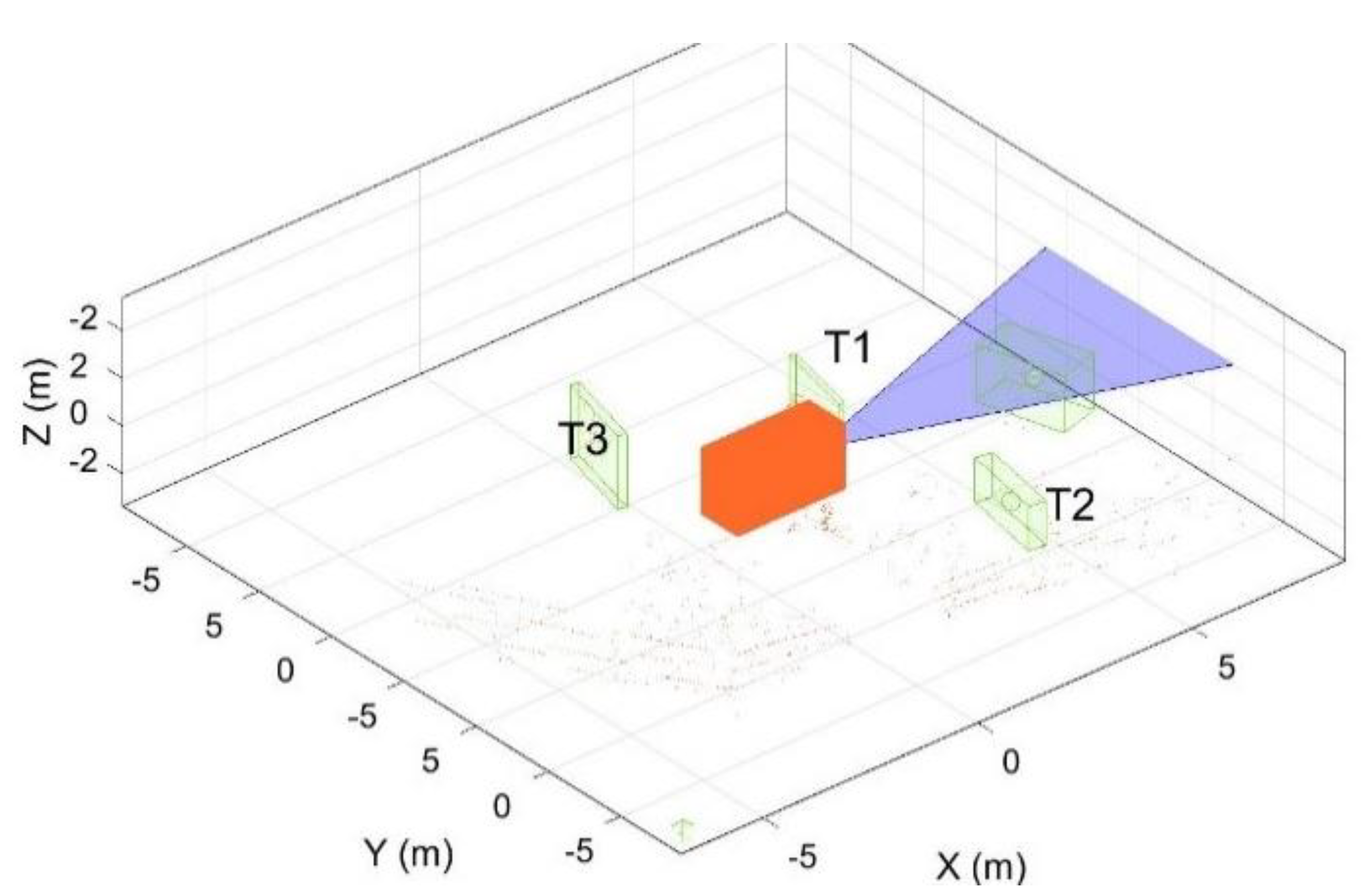

Figure 11 presents the operator's view of the excavator and the moving objects (T1 and T3) around it. The box (orange color) and fan shape (purple color) in the figure represent the excavator and the operator's visible sight, respectively. The bounding boxes denote the objects around the excavator. The track IDs in Figure 10 and Figure 11 are identical to maintain unique labels for the objects.

The detailed tracking results are presented in Figure 12, which includes the x, y, and z coordinates of each object. These coordinates indicate the current and predicted (1 s ahead) positions of the detected objects. The tracking details were utilized for object tracking and safety evaluation. In Figure 13, the maximum and the actual working areas of the excavator are shown. It also shows the rotation angle of the excavator, the heading angle of tracked moving objects, and their current and predicted states.

The current working area, represented by the inner circle, was obtained by conducting the kinematic analysis, while the maximum working area corresponds to the full extension of the three links. The safety indices were calculated based on the actual working area. Note that T2 is the static object, and thus its predicted and current positions did not change.

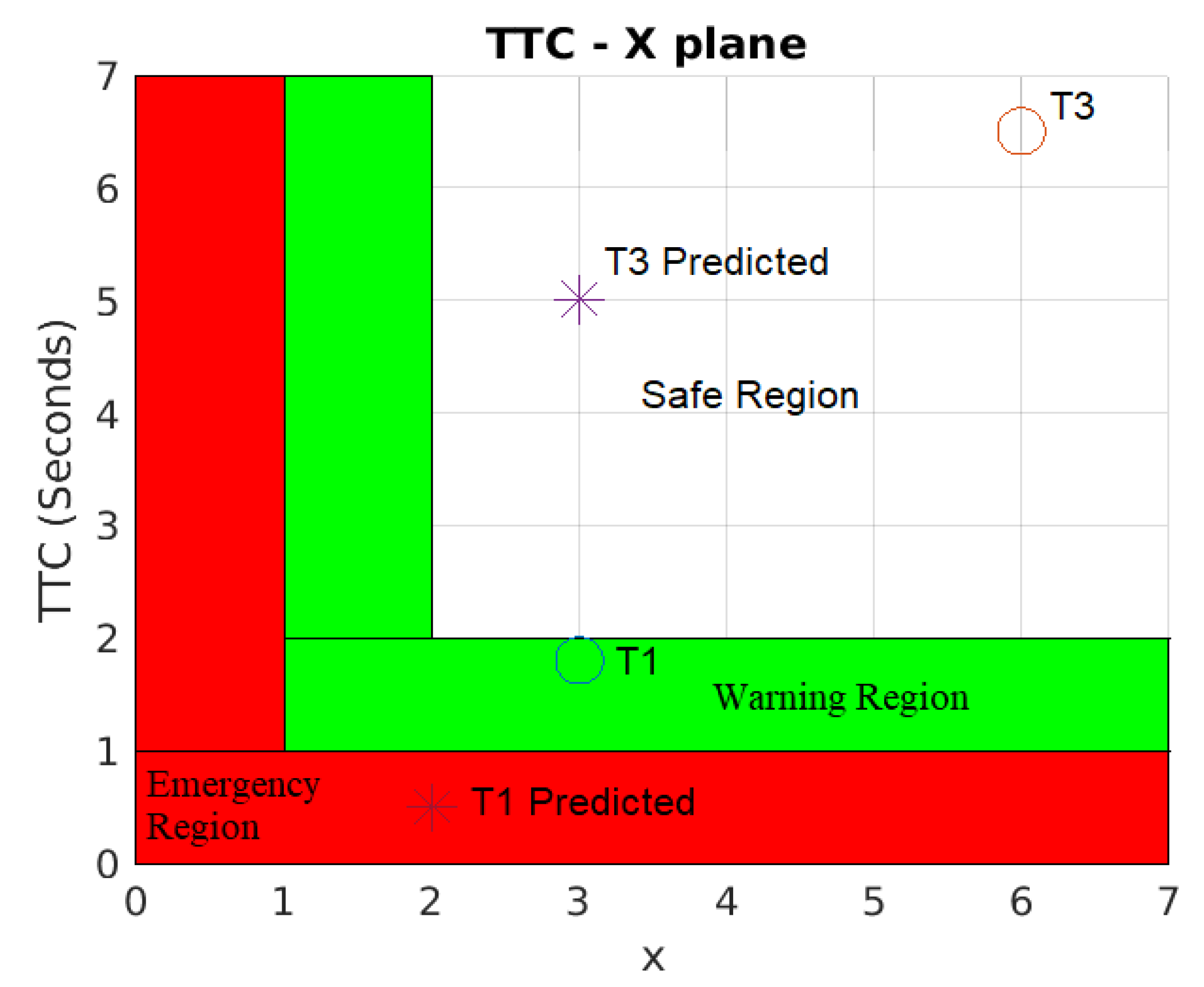

Figure 14 shows the results of the safety evaluation computed with the safety indices presented in Figure 8. The current states of T1 and T3, marked with circles, are in the warning and safe regions, respectively. However, the predicted state (1 s ahead) of T1, marked with ×, indicates that it is entering the emergency region, and thus the chance of collision has increased considerably. Therefore, braking should be applied within 1 s to prevent a collision with T1, since TTC is less than 1 s. All the detected static objects were far from the maximum working area in the test scenario (i.e., no potential hazard exists in this case), and thus only moving objects are included in the safety evaluation, as seen in Figure 14. Monitoring in Figure 13 and safety evaluation in Figure 14 can work synchronously (see the attached video clip).

Finally, the performance of the developed safety algorithms was evaluated in terms of prediction accuracy using the same experimental data from the test scenario in Figure 13 and Figure 14. For this evaluation, we compared the predicted positions of the moving objects T1 and T3 at each time frame with the actual positions 1 s after prediction. Since the minimum Euclidean distance for clustering (i.e., the minimum size of a bounding box to detect moving objects) was set to 0.4 m (average of a worker's chest size), this value was used as the threshold to judge the success or failure of the prediction. Specifically, the prediction is recognized as ‘success’ if the error between the predicted and actual positions at each time frame (0.1 s) per object is smaller than the threshold value.

Table 1 includes evaluation results showing the successful operation (prediction) rate for each object achieved by the developed algorithms. During the considered test scenario, the successful operation rate for T1 was 85.27%, meaning that the error was less than the threshold in 220 of the 258 time frames. The average error across all 258 time frames was 0.29 m for T1. The successful operation rate for T3 was slightly lower (83.59%) than that of T1, although its average error was slightly smaller, at 0.28 m. Therefore, the proposed algorithms provide satisfactory performance on position prediction in most of the given time frames.
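The success-rate evaluation described above can be sketched in Python as follows. The function name `prediction_success_rate` and the example coordinates are hypothetical; only the 0.4 m threshold and the strict "smaller than" comparison come from the text.

```python
import numpy as np

def prediction_success_rate(predicted, actual, threshold=0.4):
    """Return (success rate in percent, mean error in metres).

    A frame counts as a success when the Euclidean error between the predicted
    position and the actual position observed 1 s later is below threshold.
    """
    err = np.linalg.norm(np.asarray(predicted, dtype=float) - np.asarray(actual, dtype=float), axis=1)
    return 100.0 * np.mean(err < threshold), err.mean()

# Illustrative data: four frames with errors of 0.1, 0.3, 0.5, and 0.2 m.
rate, mean_err = prediction_success_rate(
    [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]],
    [[0.1, 0.0], [1.0, 0.3], [2.5, 0.0], [3.0, 0.2]],
)
```

As a consistency check on the reported figures, 220 successful frames out of 258 give 100 × 220/258 ≈ 85.27%, which matches the rate stated for T1.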

6. Conclusions

This study proposed sensing algorithms for object tracking and predictive safety evaluation for an autonomous excavator that effectively enables us to avoid potential collision risks between the excavator and the workers around it. Firstly, the proposed method extracted non-ground points from raw point cloud data with 3D LiDAR. The non-ground points were then clustered, and each cluster was fit into a bounding box that was considered as a detected object. The positions and velocities of the detected objects were then estimated and predicted using the integrated tracking filter where the JPDA for multi-target tracking was coupled with UKF and IMM to improve the performance of the tracking management by dealing with the non-linear state estimation and different motion behaviors (i.e., constant velocity and constant turn models), respectively.

The developed algorithm can also monitor the current working areas of the excavator and predict them to conduct safety evaluations. The defined safety indices were computed through the prediction of the motion of the detected objects and the calculation of the working areas of the excavator based on kinematic analysis. The severity of potential collision risks was defined using the safety indices.

Validations of the developed algorithms were performed with a modified mini excavator having a VLP-16 3D LiDAR, LVDT stroke sensors, and a rotational encoder. Test results indicate that the proposed predictive safety algorithms can successfully conduct tracking of objects, predict their motion, and provide safety evaluations to prevent a collision accident.

A novel feature of the developed safety strategy is the ability to predict the future states of both multiple objects and an excavator within a prediction horizon (i.e., 1 s in advance) during operations. Since previous studies of construction equipment have focused on monitoring its present state, the predictive attribute of this study, conceptualized through a risk metric with time and distance to potential collisions, is the most significant advance, and it will be used to control autonomous excavation equipment that requires more elaborate and precise safety strategies. As part of the evaluation of the developed safety algorithms, this work provides a unique methodology for defining a threshold for judging success or failure predictions and analyzing the success rates of operations with respect to time frame. Therefore, the study proposes an advanced safety management framework that can be applied to many industrial applications, such as construction equipment in transition to full automation and/or autonomy, in which predictive safety is a prerequisite component.

As the constant velocity and constant turn models were assumed for the prediction of the moving objects in the tracking filter, the application of variable velocity and random motion models will assist in improving the performance of the safety algorithms in predicting the nonlinear motions of the objects in future work. System dynamics [25,26] can also be considered for an extension of the current work to enhance the predictive capability of the developed algorithms by investigating the interrelations among the factors causing safety incidents, such as worker-machine interaction.

Excavator safety can be improved by pairing the proposed safety algorithms with the development of sophisticated sensing algorithms or platforms that can avoid the restricted FOV and occlusions caused by obstacles in excavation spaces. Finally, a further advantage of state prediction is that the actuators of an autonomous excavator can be controlled in an adaptive manner depending on the degree of potential collision risk, thereby providing maximum productivity and safety over simply stopping the excavator.