Balancer Genetic Algorithm—A Novel Task Scheduling Optimization Approach in Cloud Computing

Abstract

1. Introduction

- Devising and incorporating a novel load-balancing mechanism, alongside makespan optimization, into BGA without disturbing the underlying behavior of the Genetic Algorithm.

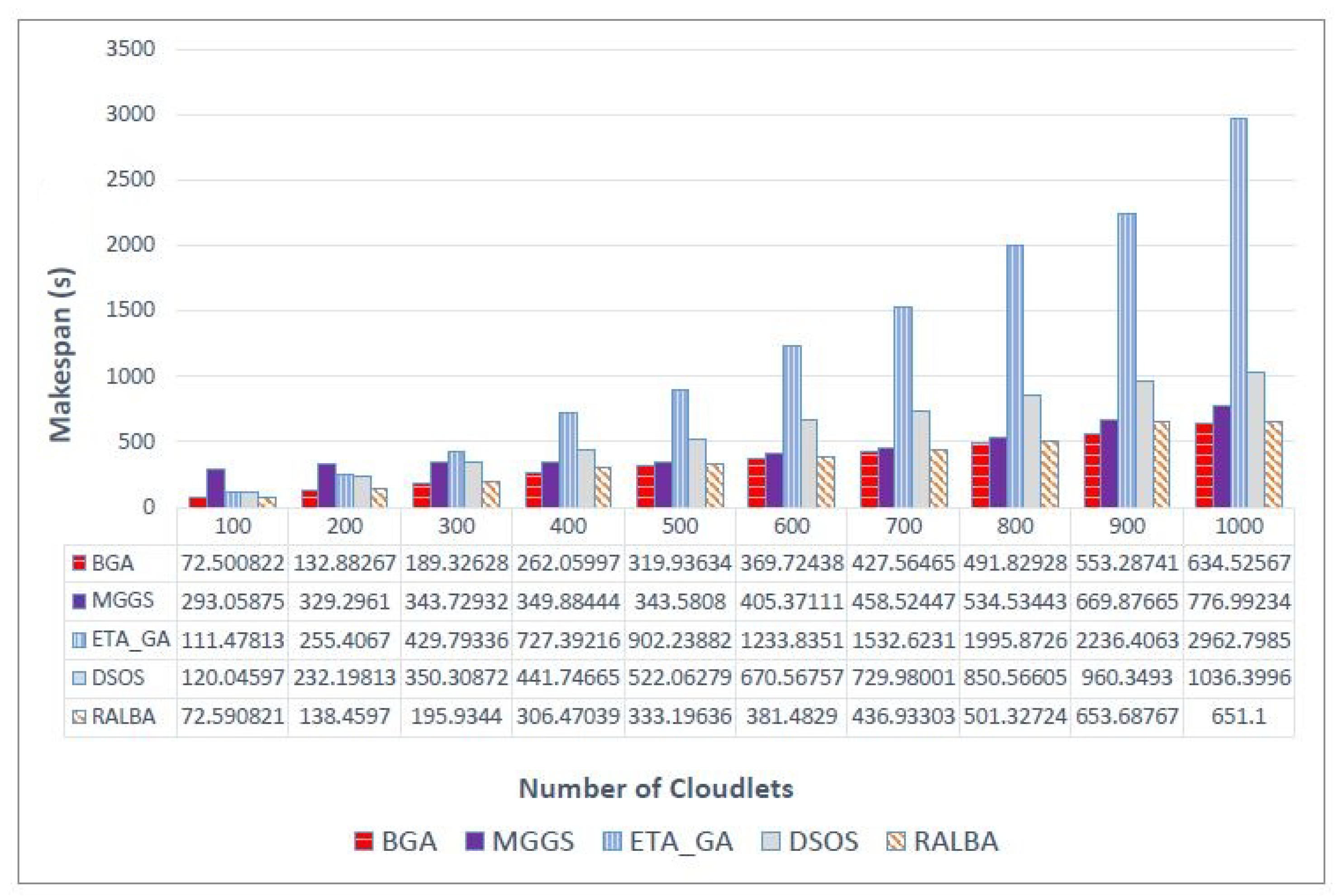

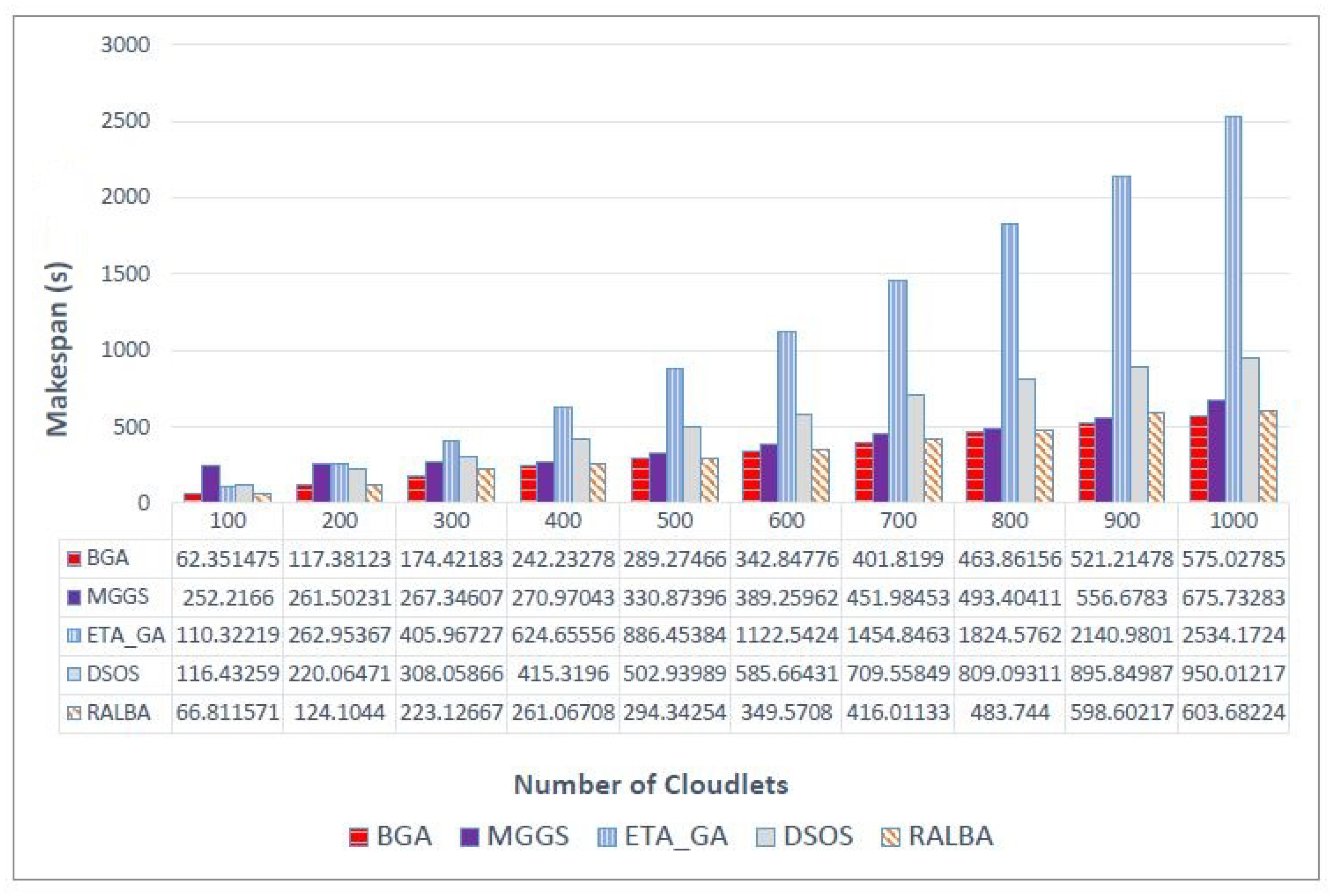

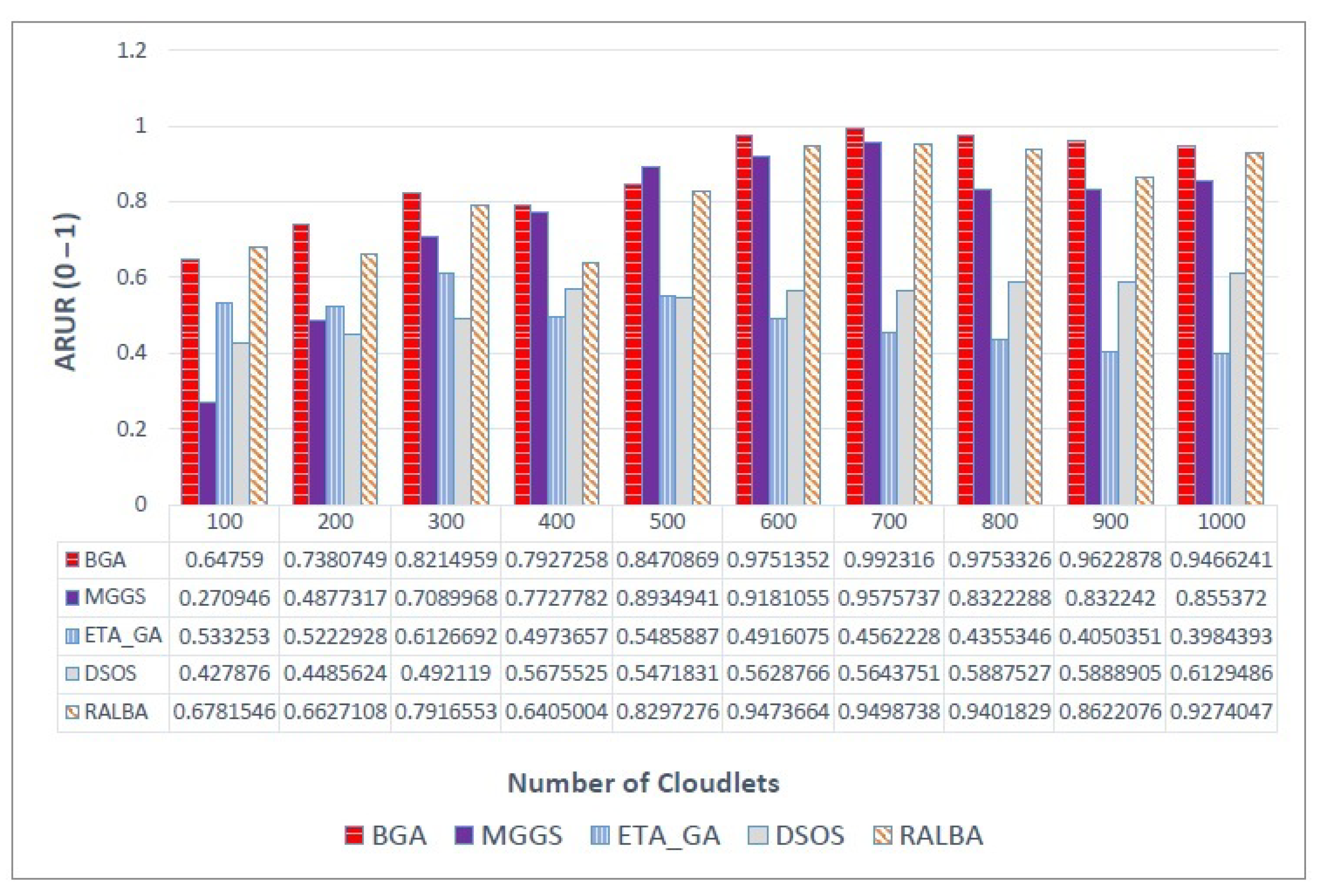

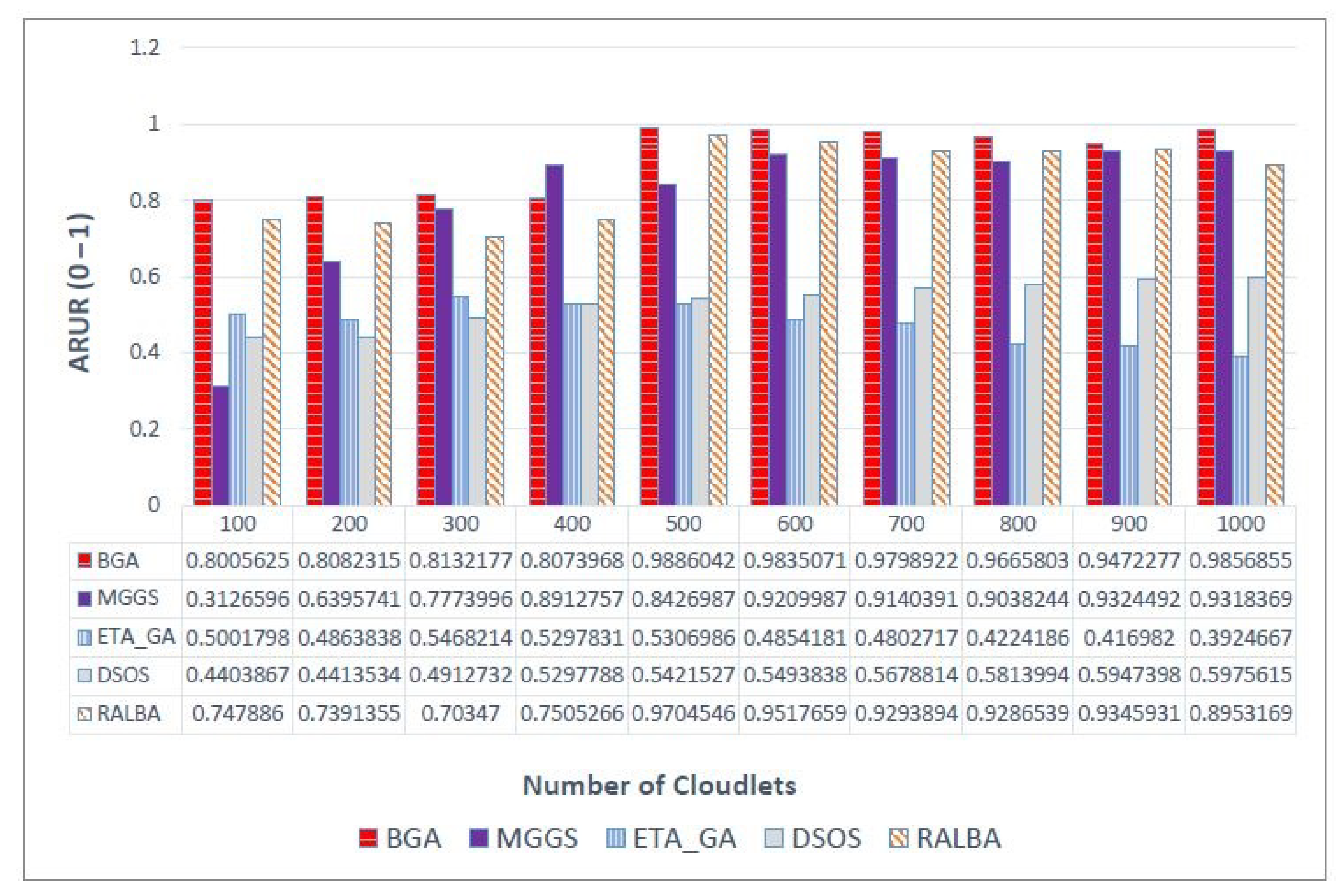

- Evaluation of BGA against other state-of-the-art heuristic and meta-heuristic schedulers on the metrics of makespan, resource utilization, and throughput.

- The rationale for opting for two conflicting objectives to achieve better task scheduling is also provided.

2. Related Work

2.1. Cloud Scheduling Heuristics

2.2. Cloud Scheduling Meta-Heuristics

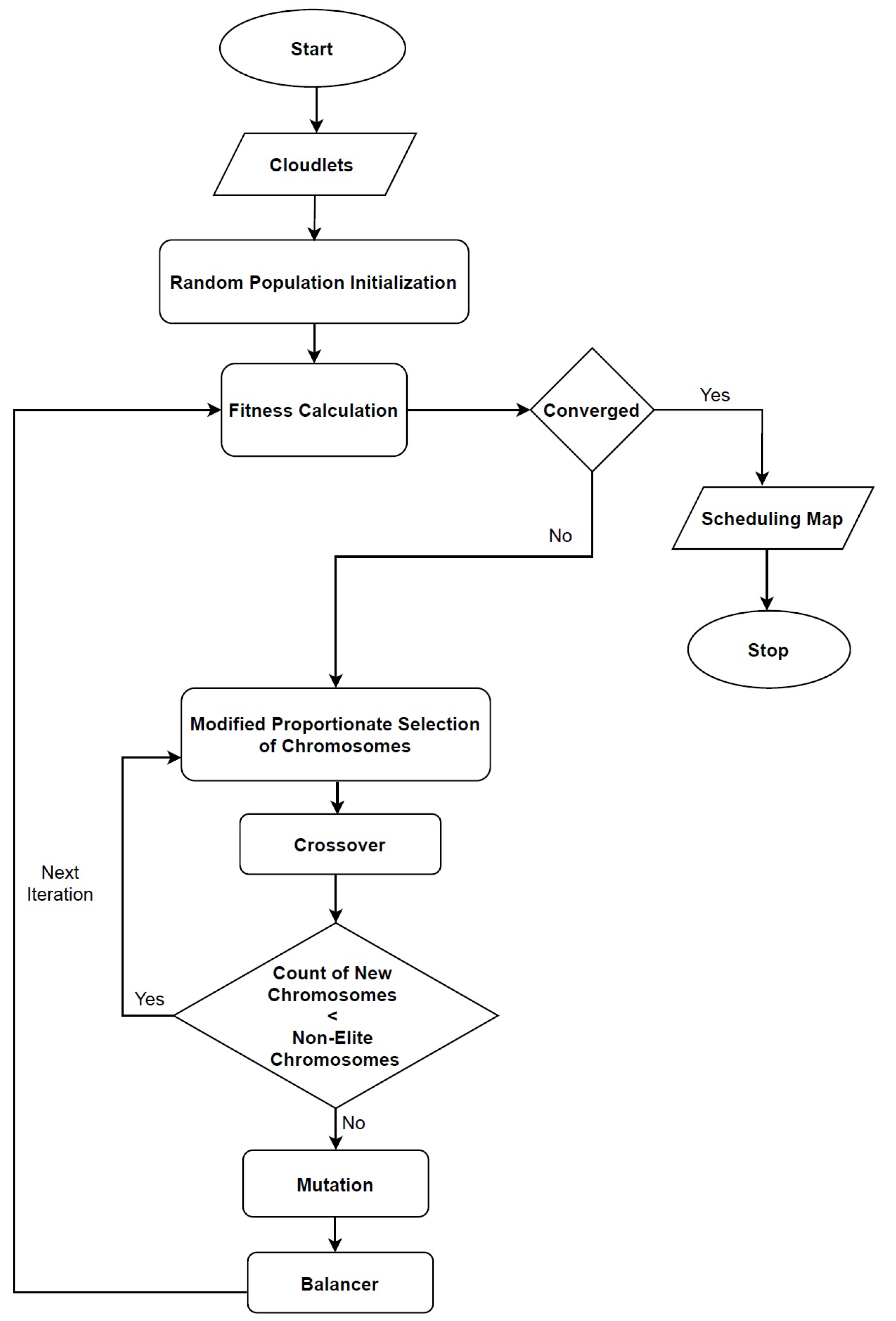

3. Balancer Genetic Algorithm (BGA)

3.1. Task Scheduling

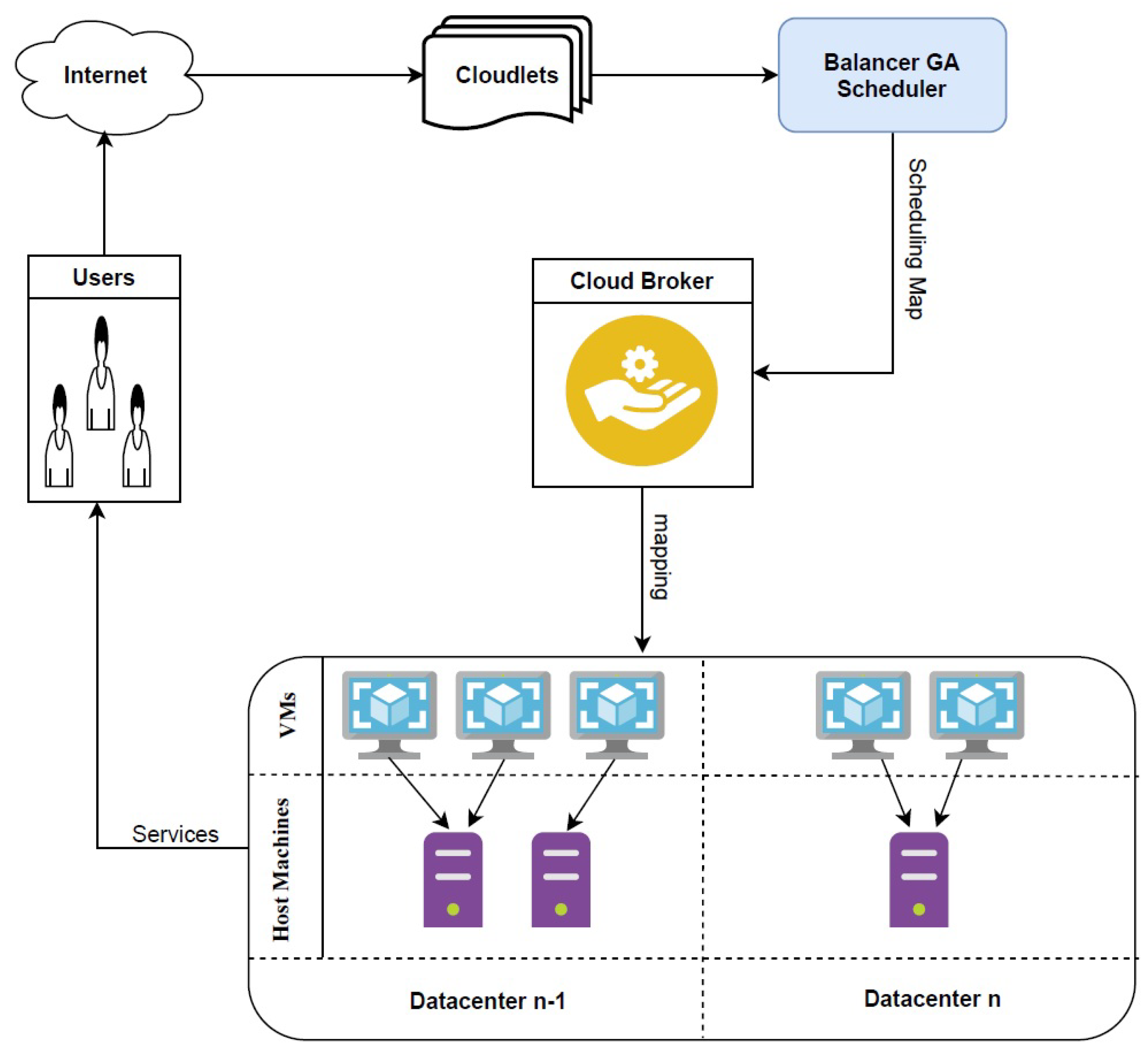

3.2. Architecture of BGA

3.2.1. Encoding

| Algorithm 1 BGA. |
|---|
| Input: Size of Jobs, Power of VMs. Output: Mapping of Jobs to VMs. |

3.2.2. Fitness Function

3.2.3. Selection Operator

- Adding LoadBalance of chromosomes to compute the sum.

- Converting LoadBalance values of chromosome to maximization.

- Sorting chromosomes in decreasing order based on scaled LoadBalance values.

- Generating a random number between zero and the computed sum.

- Adding LoadBalance values to a running total until the total reaches the random number.
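The steps above follow the shape of a roulette-wheel selection, and a minimal Python sketch may make them concrete. Several details are assumptions that the text does not confirm: `roulette_select` is a hypothetical name, LoadBalance is treated as a lower-is-better value, the conversion to maximization uses `max + min - value`, and the sum is taken over the scaled values.

```python
import random


def roulette_select(load_balance, rng=random):
    """Return the index of one selected chromosome.

    load_balance: one LoadBalance value per chromosome (assumed lower = better).
    rng: the random module or a random.Random instance.
    """
    # Assumed conversion to maximization: the best (smallest) value gets the
    # largest score, and scores stay non-negative for non-negative inputs.
    highest, lowest = max(load_balance), min(load_balance)
    scaled = [highest + lowest - value for value in load_balance]

    # Sum of the scaled values (the size of the "wheel").
    total = sum(scaled)

    # Chromosome indices sorted by decreasing scaled value.
    order = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)

    # Random point on the wheel, then accumulate until it is reached.
    threshold = rng.uniform(0, total)
    running = 0.0
    for index in order:
        running += scaled[index]
        if running >= threshold:
            return index
    return order[-1]  # guard against floating-point round-off


if __name__ == "__main__":
    picks = [roulette_select([1.0, 5.0, 9.0]) for _ in range(10)]
    print(picks)  # index 0 (the best-balanced chromosome) should dominate
```

Under this scaling, chromosomes with better balance occupy a larger share of the wheel, yet poorer ones can still be picked, which preserves diversity in the population.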

3.2.4. Crossover Operator

| Algorithm 2 LoadBalance. |
|---|
| Input: Output: |

| Algorithm 3 Selection. |
|---|
| Input: Output: |

3.2.5. Mutation Operator

3.2.6. Balancer Operator

| Algorithm 4 Crossover. |
|---|
| Input: Output: |

| Algorithm 5 Mutation. |
|---|
| Input: Output: |

| Algorithm 6 Balancer. |
|---|
| Input: Output: |

3.2.7. Fusion of Heuristic

4. Performance Metrics

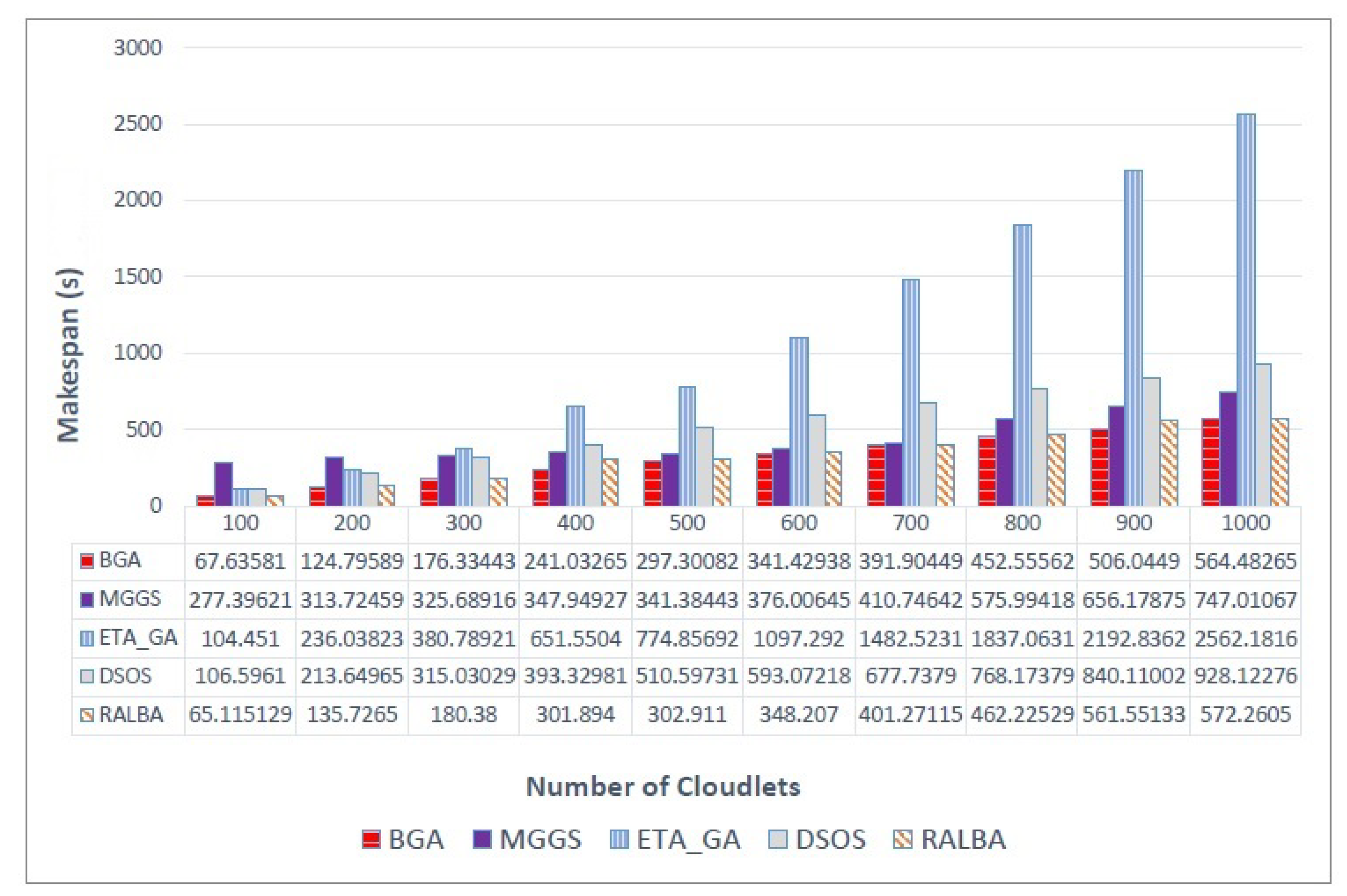

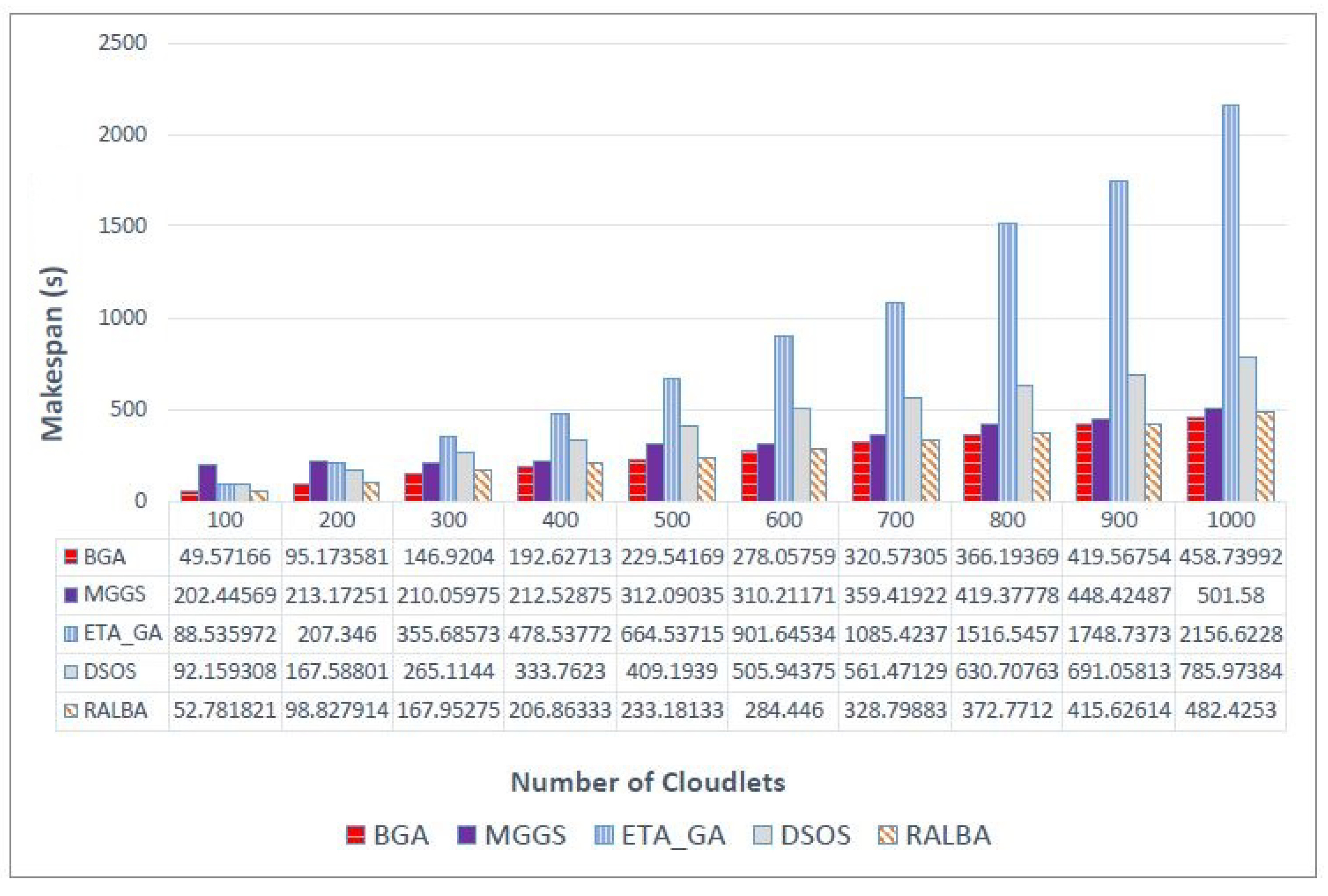

4.1. Makespan

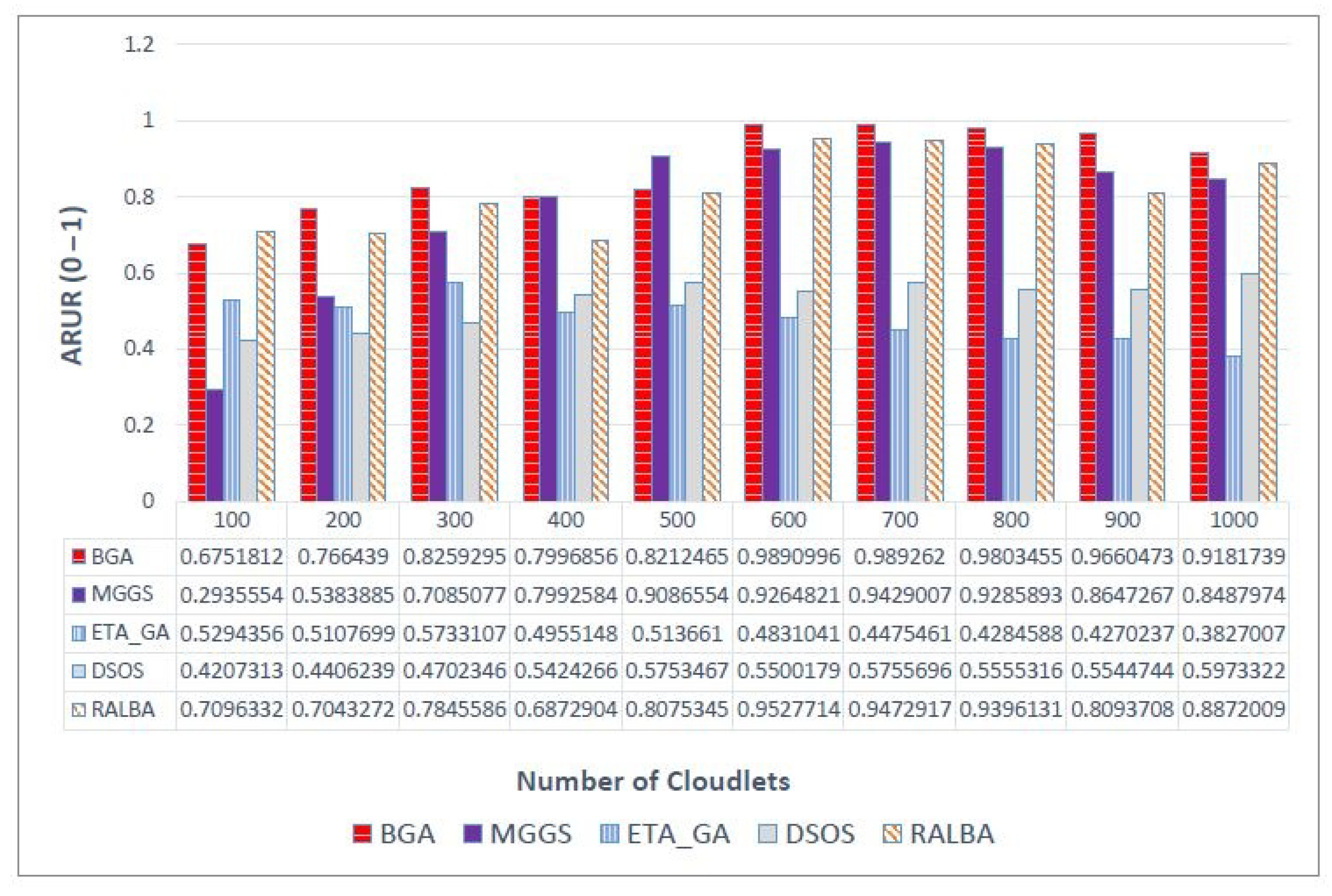

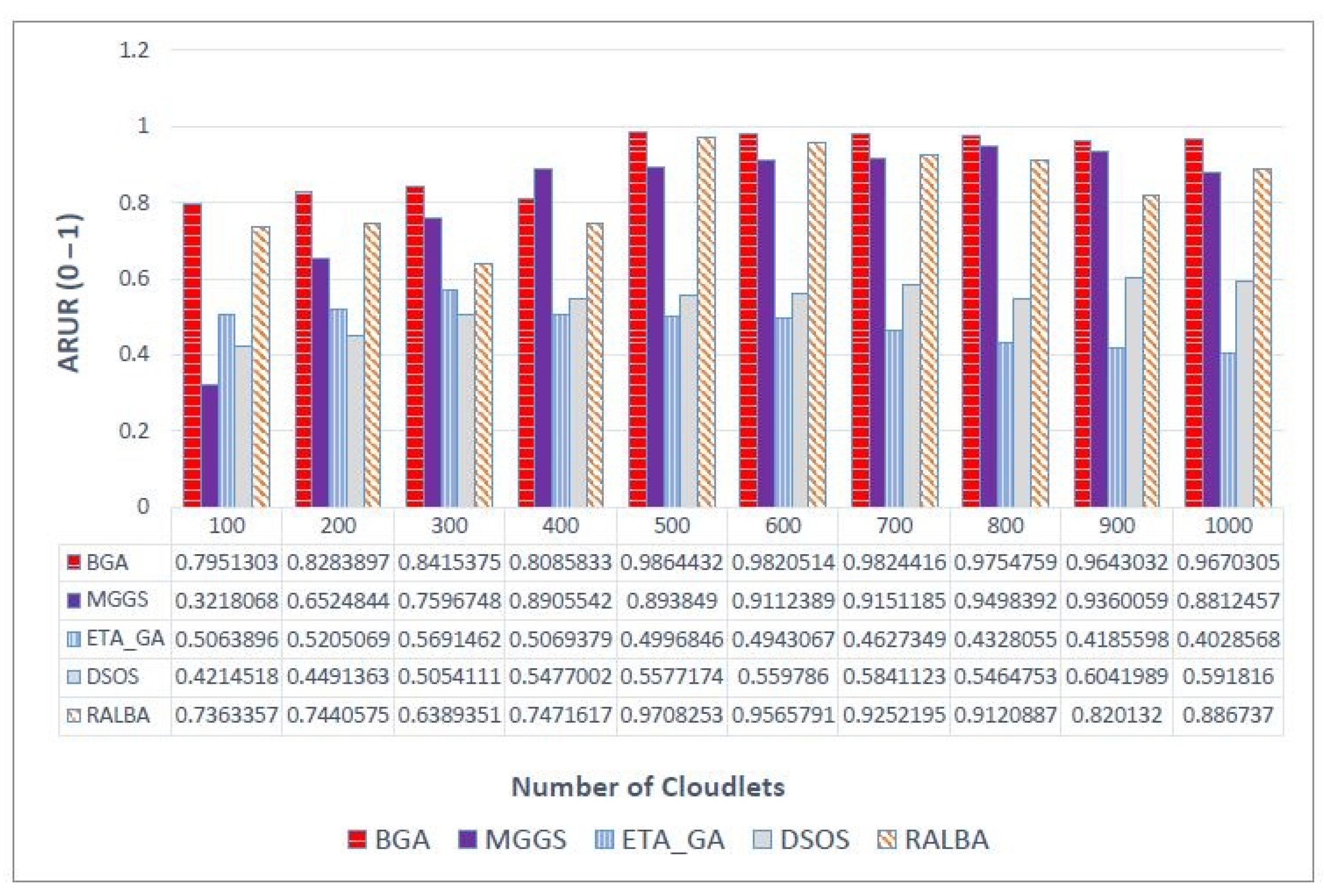

4.2. ARUR

5. Experimental Results and Discussion

5.1. Experimental Setup

5.2. Workload Generation

- Left Skewed: Large-sized tasks are numerous.

- Right Skewed: Small-sized tasks are numerous.

- Normal: Small- and large-sized tasks are almost equal in number.

- Uniform: Tasks are of almost the same size.
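The four workload shapes listed above can be imitated with a short Python sketch. It is only an illustration: `generate_workload` is a hypothetical helper, and the size bounds, the beta and Gaussian parameters, and the narrow band used for the "uniform" case are all assumptions chosen to match the verbal descriptions, not the generator used in the experiments.

```python
import random


def generate_workload(kind, n, lo=1_000, hi=100_000, seed=0):
    """Return n task sizes in [lo, hi] following the named shape.

    lo and hi are assumed size bounds; seed makes the sample repeatable.
    """
    rng = random.Random(seed)
    # Each sampler returns a fraction in [0, 1] that is mapped onto [lo, hi].
    samplers = {
        # Left skewed: large-sized tasks are numerous.
        "left_skewed": lambda: rng.betavariate(5, 2),
        # Right skewed: small-sized tasks are numerous.
        "right_skewed": lambda: rng.betavariate(2, 5),
        # Normal: small and large tasks are roughly equal in number.
        "normal": lambda: min(1.0, max(0.0, rng.gauss(0.5, 0.15))),
        # Uniform (as described above): tasks of almost the same size.
        "uniform": lambda: rng.uniform(0.45, 0.55),
    }
    if kind not in samplers:
        raise ValueError(f"unknown workload kind: {kind!r}")
    draw = samplers[kind]
    return [lo + (hi - lo) * draw() for _ in range(n)]


if __name__ == "__main__":
    for kind in ("left_skewed", "right_skewed", "normal", "uniform"):
        sizes = generate_workload(kind, 1000)
        print(kind, round(sum(sizes) / len(sizes)))
```

Fixing the seed keeps a workload identical across schedulers, so that differences in makespan can be attributed to the scheduler rather than to the sample.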

5.3. Benchmark Techniques

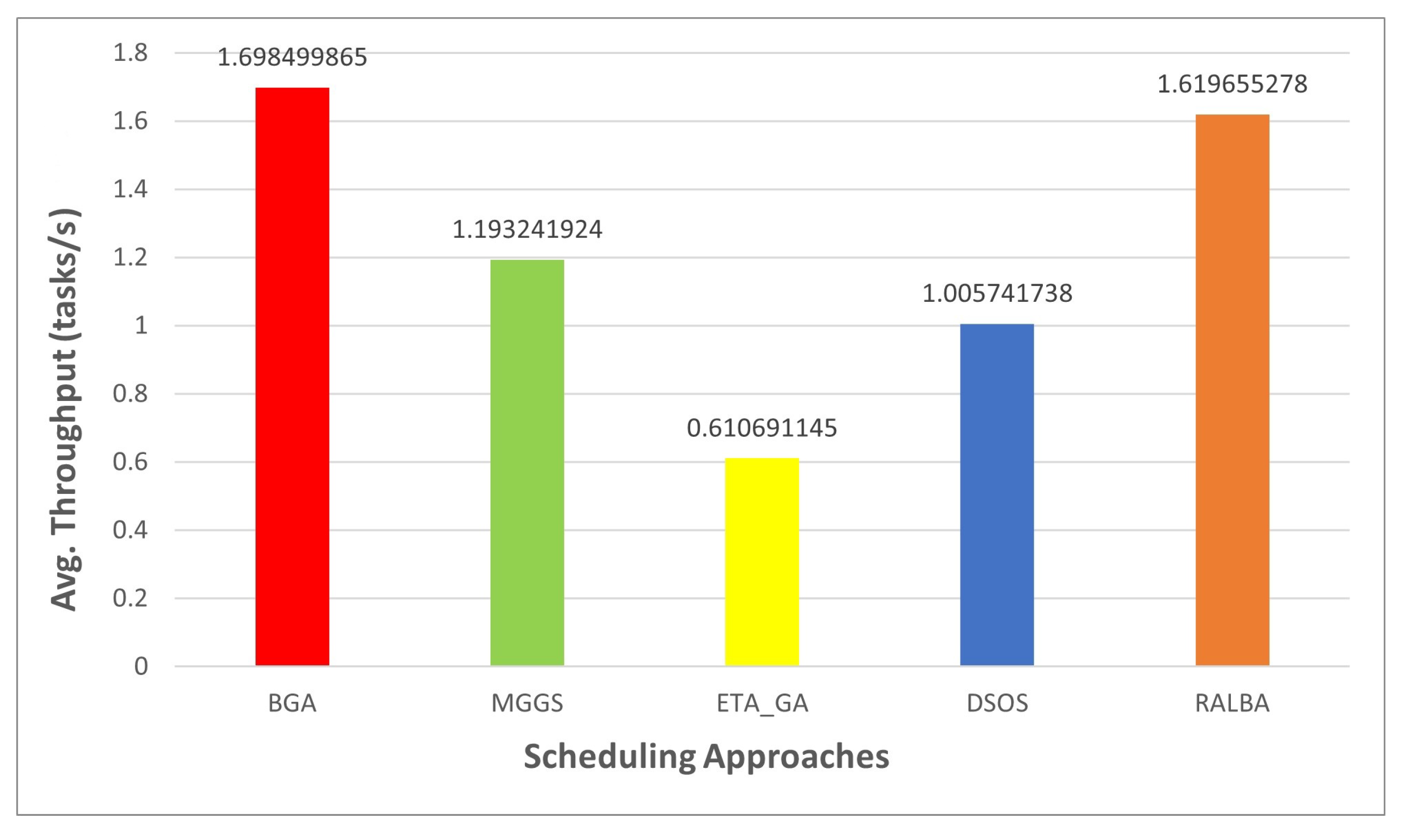

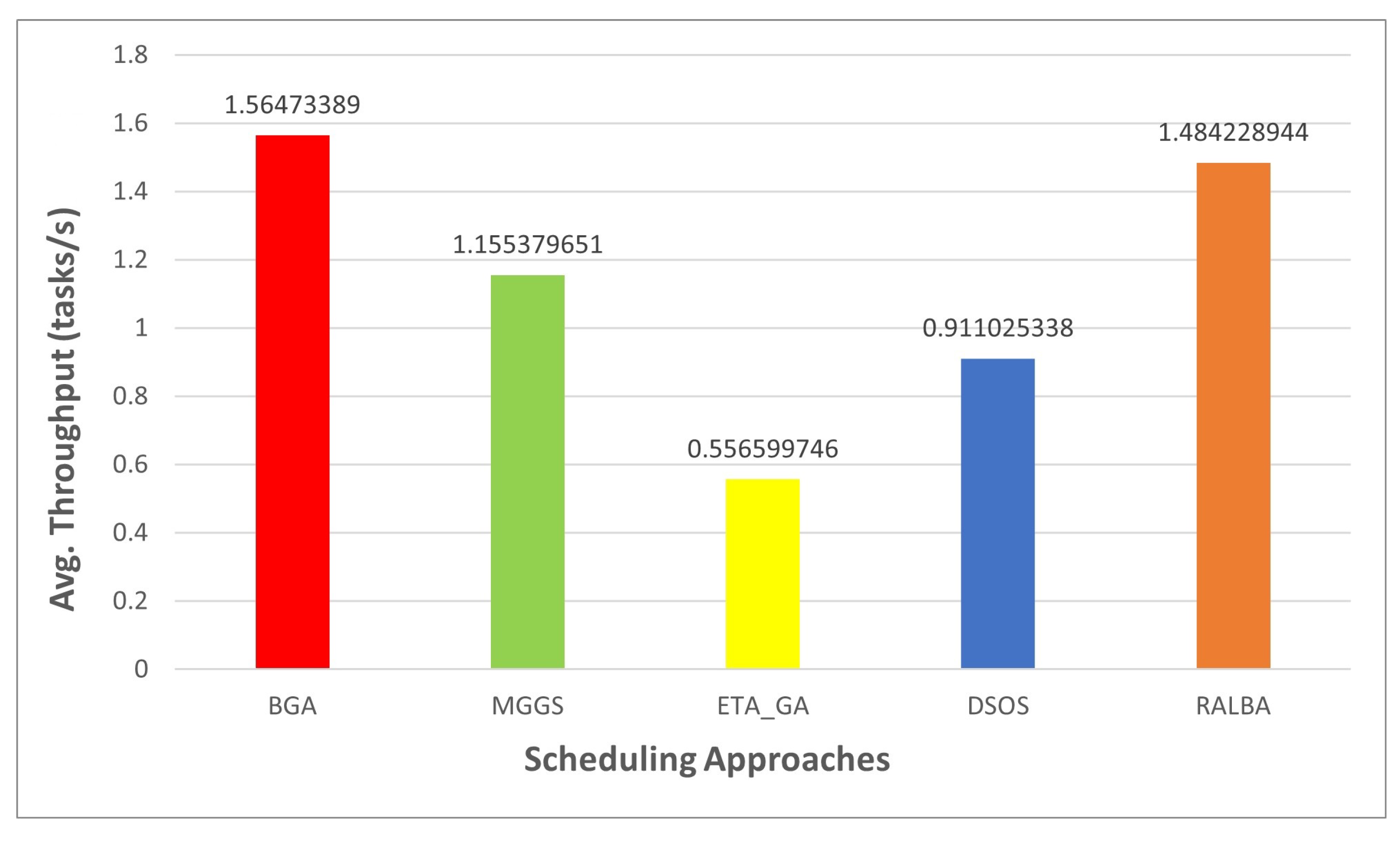

5.4. Discussion on the Comparison

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Parameters | Values |
|---|---|
| Population size | 120 |
| Iterations | 1000 |
| | 0.18–0.22 |
| | 0.75–0.85 |
| | 1.98–2.22 |
| Mutation rate | 0.0047 |

| VM No. | Power (MIPS) | VM No. | Power (MIPS) |
|---|---|---|---|
| 1–7 | 100 | 27–32 | 1250 |
| 8–14 | 500 | 33–38 | 1500 |
| 15–20 | 750 | 39–44 | 1750 |
| 21–26 | 1000 | 45–50 | 4000 |
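To show how the VM configuration above feeds the two metrics named in Section 4, the following Python sketch builds the 50-VM fleet from the table and evaluates one job-to-VM mapping. The definitions used are assumptions, not taken from the text: task sizes are treated as millions of instructions, makespan as the largest VM completion time, and ARUR as the mean VM completion time divided by the makespan. All function names are hypothetical.

```python
# VM powers transcribed from the table: (first VM No., last VM No., MIPS).
VM_RANGES = [
    (1, 7, 100), (8, 14, 500), (15, 20, 750), (21, 26, 1000),
    (27, 32, 1250), (33, 38, 1500), (39, 44, 1750), (45, 50, 4000),
]


def build_fleet(ranges=VM_RANGES):
    """Expand the ranges into a list of MIPS values (index 0 is VM No. 1)."""
    fleet = []
    for first, last, mips in ranges:
        fleet.extend([mips] * (last - first + 1))
    return fleet


def completion_times(task_sizes, mapping, fleet):
    """Total execution time per VM; mapping[i] is the 0-based VM of task i."""
    times = [0.0] * len(fleet)
    for size, vm in zip(task_sizes, mapping):
        times[vm] += size / fleet[vm]  # assumed: size in MI, power in MIPS
    return times


def makespan(times):
    """Assumed definition: finishing time of the most loaded VM."""
    return max(times)


def arur(times):
    """Assumed definition: mean VM completion time over the makespan."""
    longest = max(times)
    return (sum(times) / len(times)) / longest if longest else 0.0


if __name__ == "__main__":
    fleet = build_fleet()
    tasks = [1000] * len(fleet)          # one equal-sized task per VM
    mapping = list(range(len(fleet)))    # task i runs on VM i
    times = completion_times(tasks, mapping, fleet)
    print(makespan(times), round(arur(times), 3))
```

In this example the slowest VMs (100 MIPS) set the makespan while the fast VMs sit idle for most of it, so the ARUR is low. This is the kind of imbalance a load-aware mapping is meant to reduce.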

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gulbaz, R.; Siddiqui, A.B.; Anjum, N.; Alotaibi, A.A.; Althobaiti, T.; Ramzan, N. Balancer Genetic Algorithm—A Novel Task Scheduling Optimization Approach in Cloud Computing. Appl. Sci. 2021, 11, 6244. https://doi.org/10.3390/app11146244