Performance Evaluation of RNN with Hyperbolic Secant in Gate Structure through Application of Parkinson’s Disease Detection

Abstract

1. Introduction

2. Related Work

3. The Model Description and Data Preprocessing

3.1. RNN Models

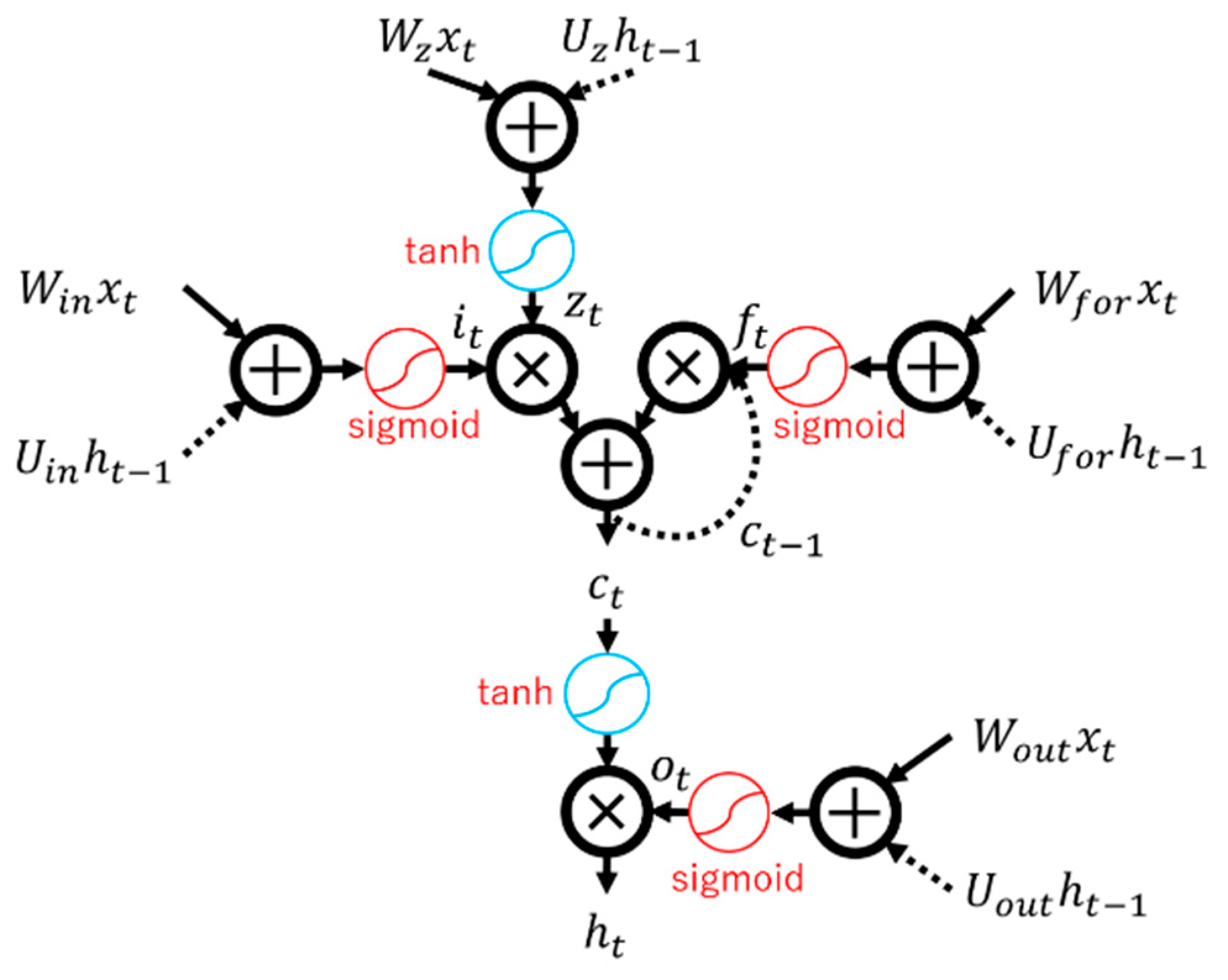

3.1.1. Long Short-Term Memory (LSTM)

3.1.2. Gated Recurrent Unit (GRU)

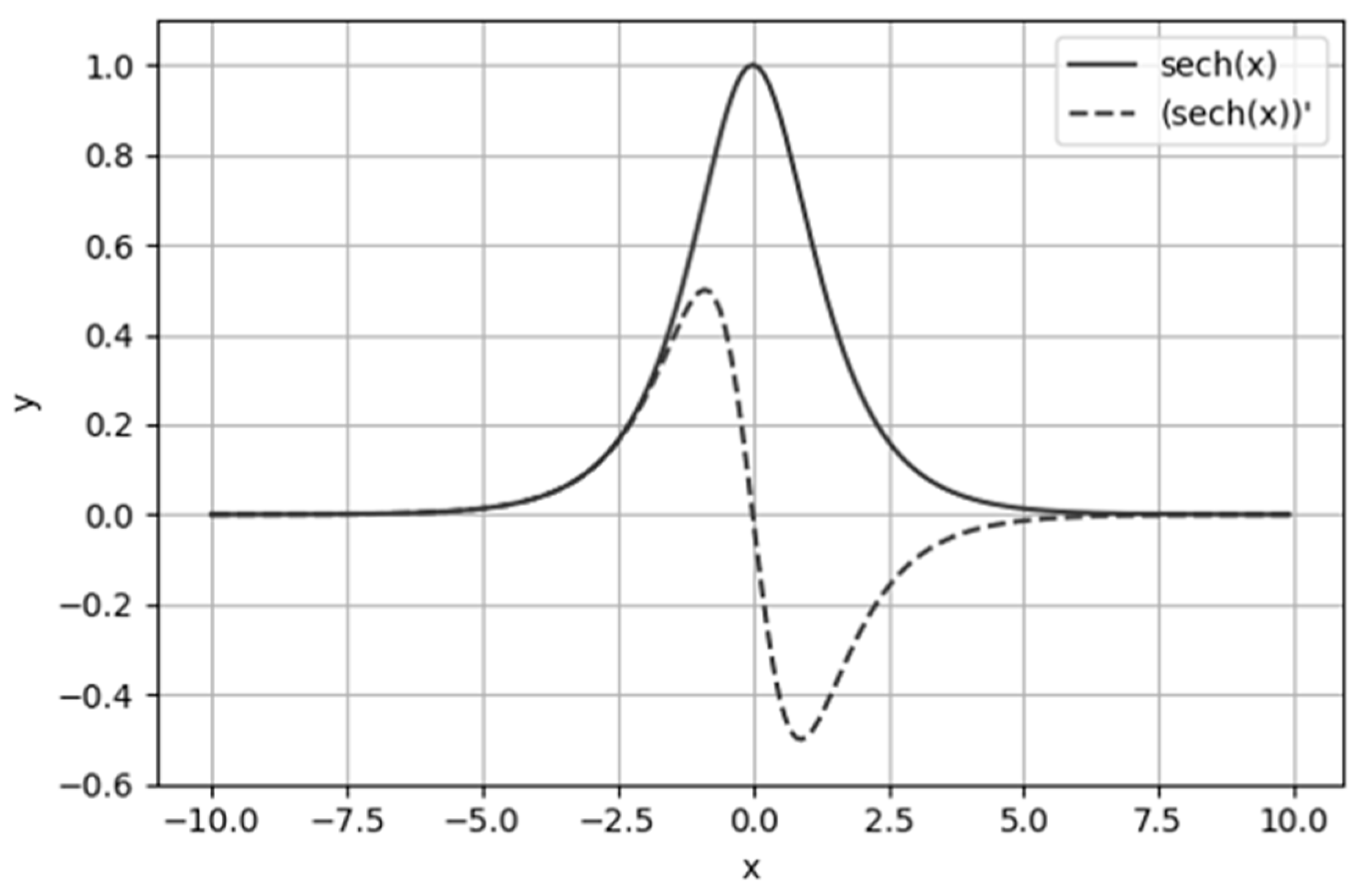

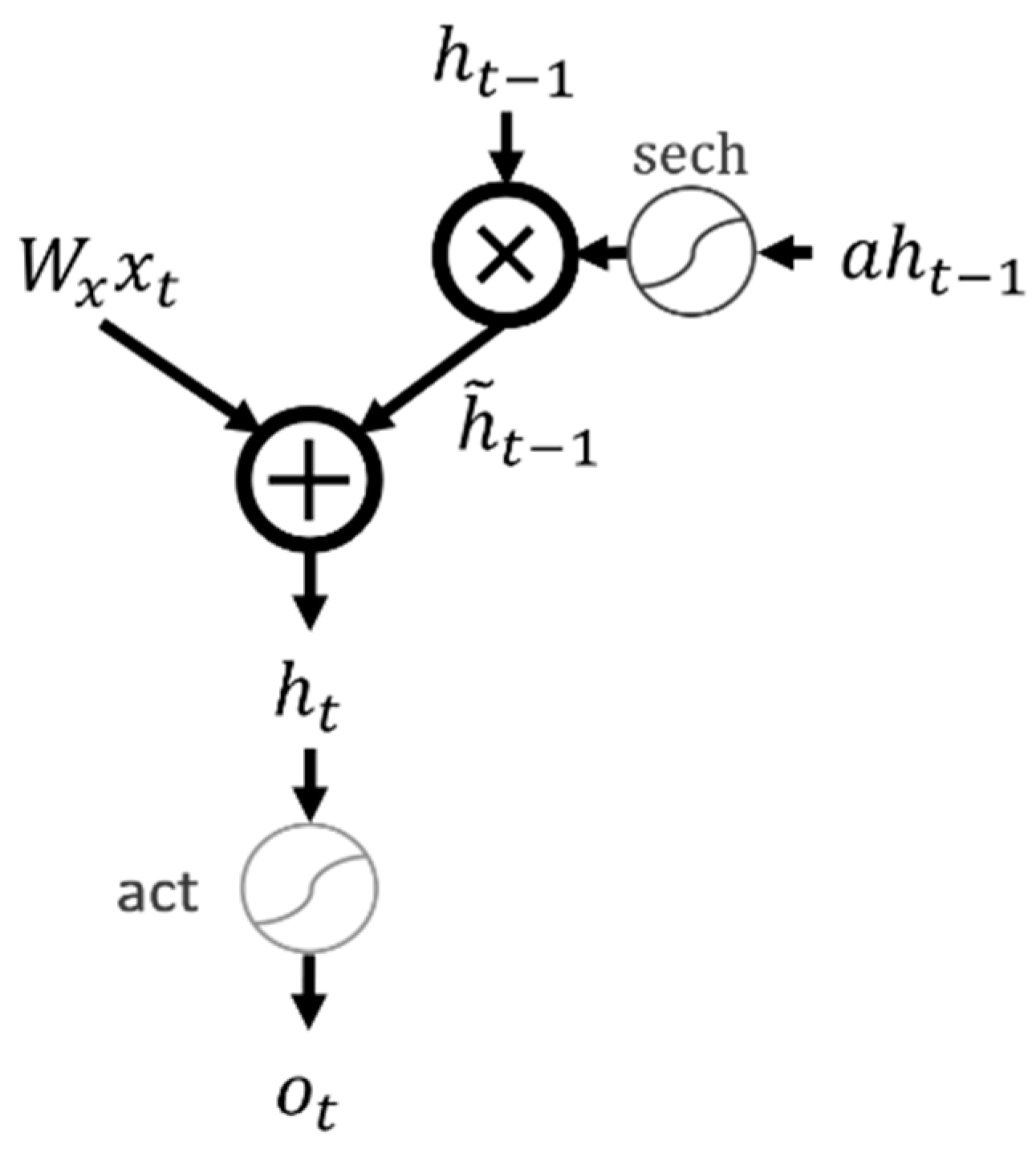

3.1.3. Our Proposed RNN Model

3.2. Parkinson’s Disease Detection

3.2.1. Voice Data Preprocessing and Recurrence Plot Creation

- Delete the silent sections before and after the voice data: To avoid the influence of voice volume, all voice data are normalized so that the maximum amplitude equals the maximum representable value, and sections at −40 dB or below are deleted only from the beginning and end of the sound.

- Furthermore, delete the first and last 0.1 s of the trimmed voice data: the first and last sounds that remain after the silent sections are deleted were often unstable.

- Divide the above preprocessed voice data into 0.01 s sections.

- Immediately before creating the recurrence plot, normalize the sound of each 0.01 s section to the range [−1, 1], and then plot the points whose distance is smaller than the 35th percentile of the distances within that section. The 35th percentile is used because it gave the highest accuracy in the experiments described later.

- Compress the generated black-and-white recurrence plot (RP) from 160 × 160 to 20 × 20 using bilinear interpolation. This may reduce accuracy, but it is done to save Video Random Access Memory (VRAM) when the images are input to the neural network.

- Repeat steps 1–3 above until every divided section of voice data has been converted to a recurrence plot. If the last section is shorter than 0.01 s, it is discarded.
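The preprocessing steps listed above can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' implementation, and it rests on several assumptions that the text does not state: a 16 kHz sampling rate (inferred from 0.01 s sections producing 160 × 160 plots), silence detected per sample relative to the peak, peak scaling for the [−1, 1] normalization, the absolute difference between scalar samples as the distance, and pixel-centre alignment for the bilinear resize. All function names are hypothetical.

```python
import numpy as np

SAMPLE_RATE = 16_000  # assumption: 0.01 s * 16 kHz = 160 samples per section
SECTION_SEC = 0.01    # section length stated in the text
PERCENTILE = 35       # threshold percentile stated in the text


def trim_silence(signal, threshold_db=-40.0):
    """Peak-normalise, then cut leading/trailing samples at or below threshold_db."""
    signal = np.asarray(signal, dtype=np.float64)
    peak = np.max(np.abs(signal))
    if peak == 0:
        return signal[:0]
    signal = signal / peak
    # Assumption: silence is judged per sample, relative to the normalised peak.
    loud = np.flatnonzero(np.abs(signal) > 10 ** (threshold_db / 20))
    return signal[loud[0] : loud[-1] + 1]


def drop_edges(signal, sample_rate=SAMPLE_RATE, edge_sec=0.1):
    """Remove the first and last edge_sec seconds of the trimmed signal."""
    n = int(round(sample_rate * edge_sec))
    return signal[n : len(signal) - n]


def split_sections(signal, sample_rate=SAMPLE_RATE, section_sec=SECTION_SEC):
    """Split into fixed-length sections, discarding a short final remainder."""
    n = int(round(sample_rate * section_sec))
    count = len(signal) // n
    return np.asarray(signal)[: count * n].reshape(count, n)


def recurrence_plot(section, percentile=PERCENTILE):
    """Binary RP: 1 where |x_i - x_j| is below the section's distance percentile."""
    section = np.asarray(section, dtype=np.float64)
    peak = np.max(np.abs(section))
    if peak > 0:
        section = section / peak  # assumption: peak scaling into [-1, 1]
    dist = np.abs(section[:, None] - section[None, :])
    threshold = np.percentile(dist, percentile)
    return (dist < threshold).astype(np.float32)


def resize_bilinear(image, out_size=20):
    """Bilinear resize of a square image (pixel-centre alignment assumed)."""
    in_size = image.shape[0]
    # Source coordinate of each output pixel centre.
    coords = (np.arange(out_size) + 0.5) * in_size / out_size - 0.5
    coords = np.clip(coords, 0, in_size - 1)
    lo = np.floor(coords).astype(int)
    hi = np.minimum(lo + 1, in_size - 1)
    frac = coords - lo
    # Interpolate along rows first, then along columns.
    rows = image[lo, :] * (1 - frac)[:, None] + image[hi, :] * frac[:, None]
    return rows[:, lo] * (1 - frac)[None, :] + rows[:, hi] * frac[None, :]
```

A recording would pass through `trim_silence`, `drop_edges`, and `split_sections` once, after which each section goes through `recurrence_plot` and `resize_bilinear` to give one 20 × 20 input frame for the network.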

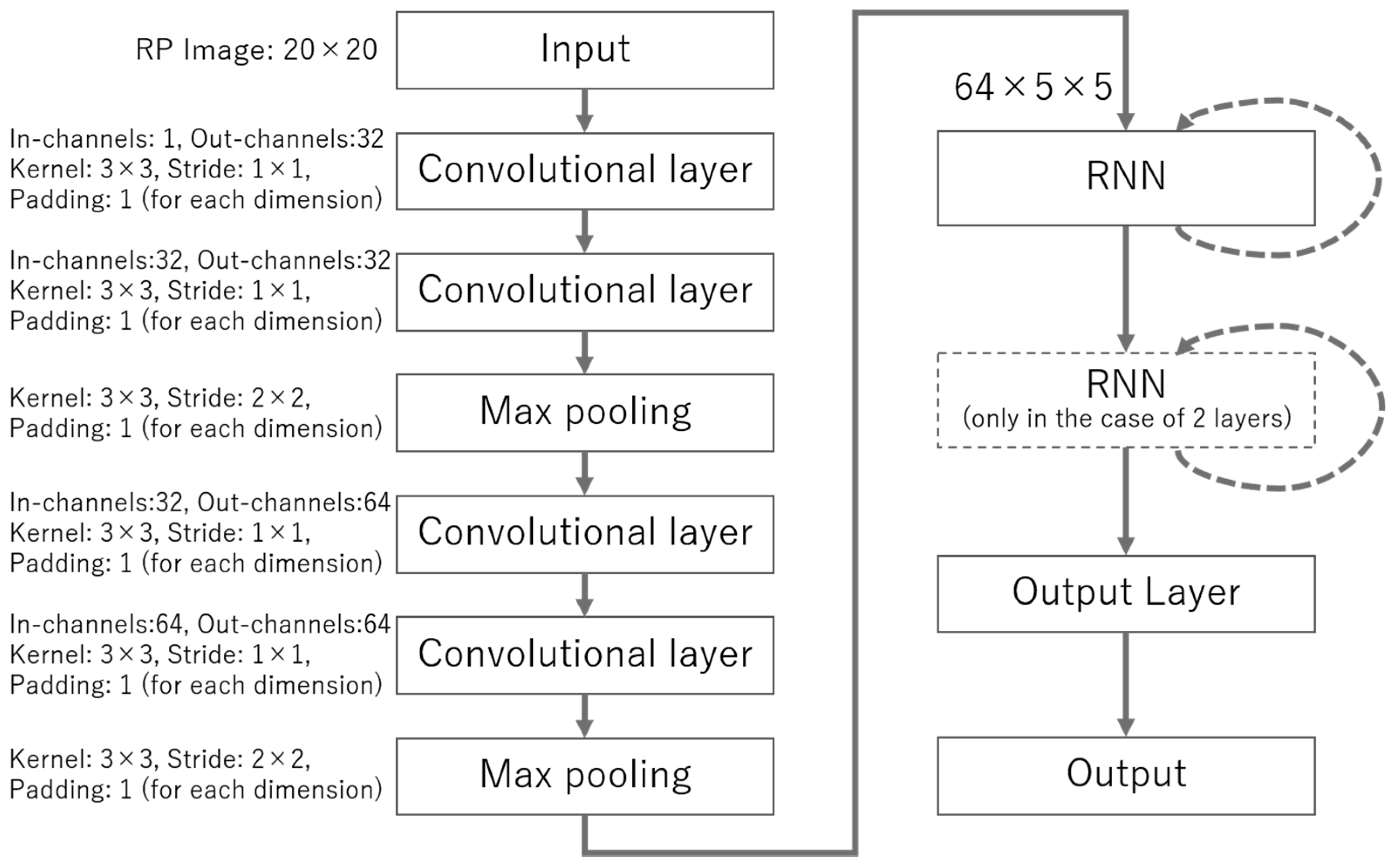

3.2.2. Parkinson’s Disease Detection Model

4. Performance Evaluation

4.1. Outline of Experiments

4.2. Input Voices and Preprocessing

4.3. RNN Model Configuration and Hyperparameters

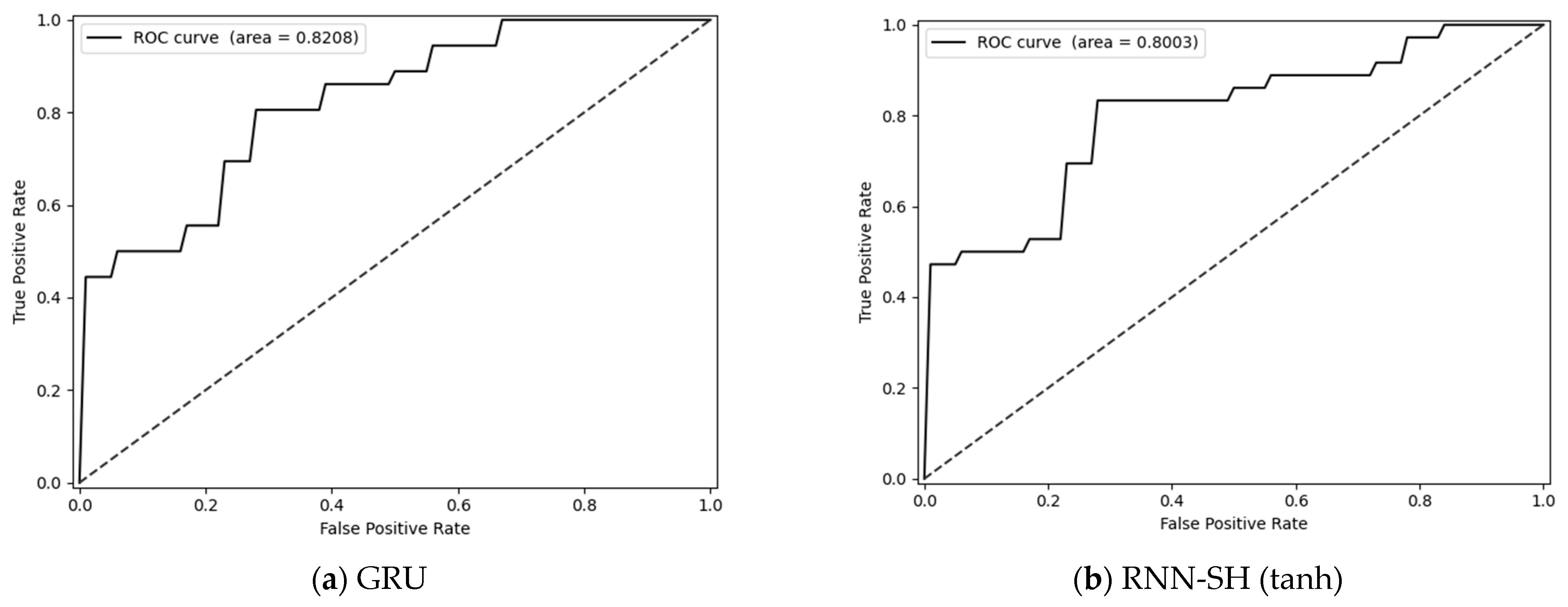

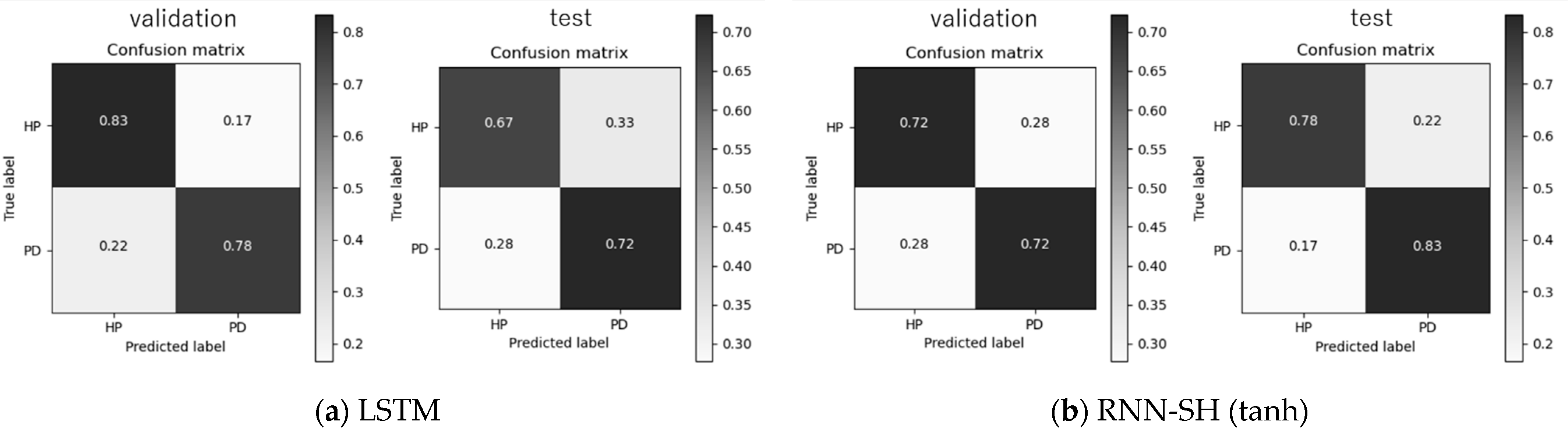

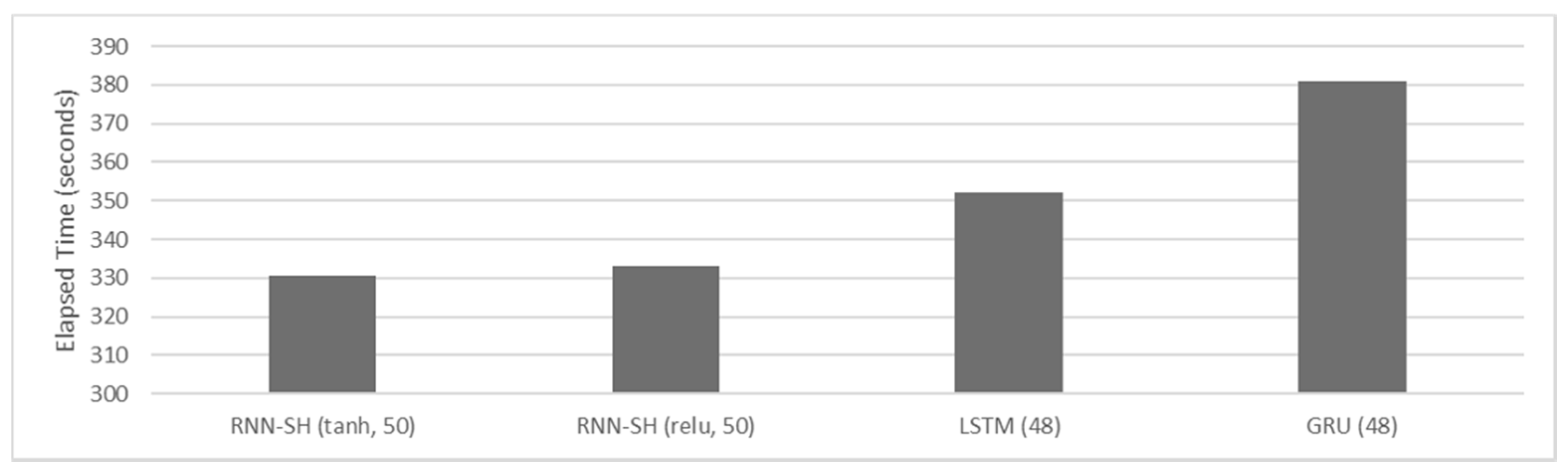

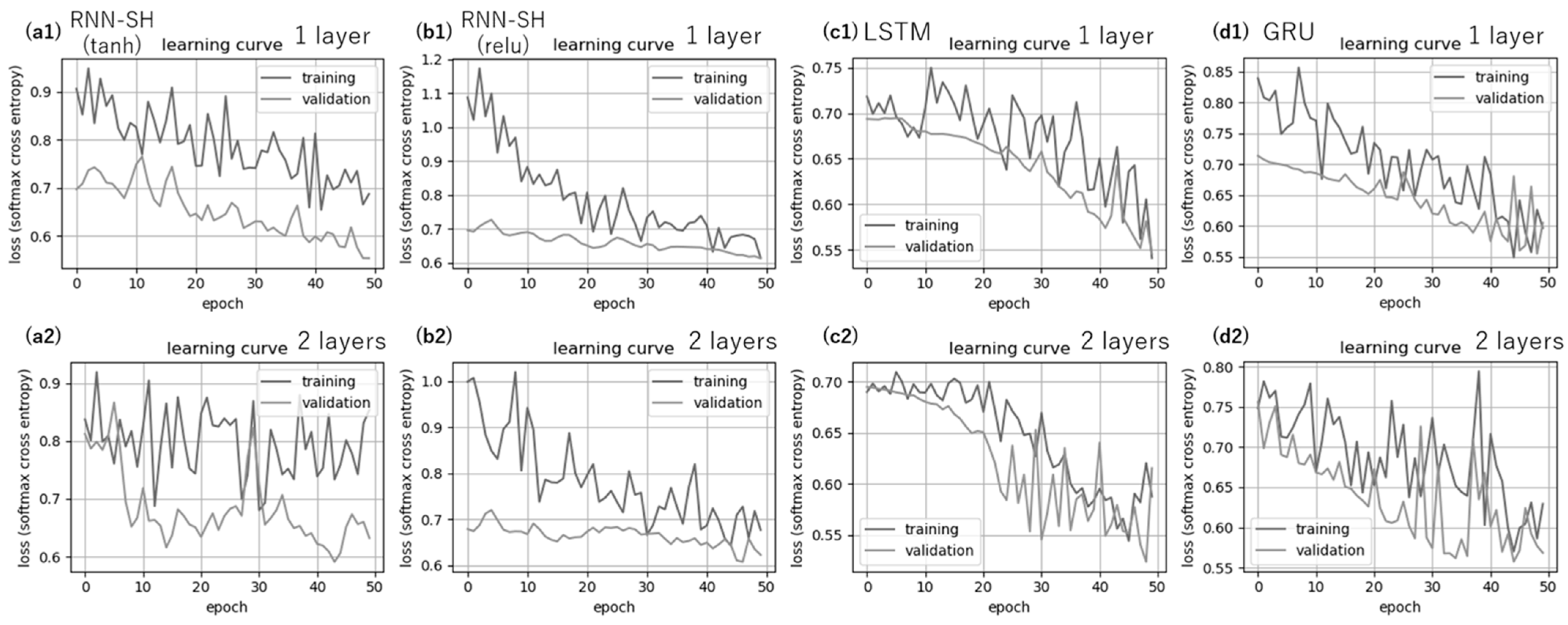

4.4. Experimental Results

4.5. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Layers | Model | Units | Indicator | Trial 1 | Trial 2 | Trial 3 | Trial 4 | Trial 5 | Average (Validation/Test) | Total Average |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | LSTM | 64 | Acc. (%) | 75.00/72.22 | 77.78/55.56 | 58.33/75.00 | 69.44/63.89 | 61.11/63.89 | 68.33 ± 7.58/66.11 ± 6.89 | 67.22 ± 7.33 |
| 1 | LSTM | 64 | F1 (%) | 74.98/72.14 | 77.50/55.42 | 58.04/74.51 | 69.42/63.18 | 60.63/62.47 | 68.11 ± 7.67/65.54 ± 6.95 | 66.83 ± 7.43 |
| 1 | LSTM | 64 | MCC | 0.501/0.447 | 0.570/0.112 | 0.169/0.521 | 0.390/0.289 | 0.228/0.302 | 0.371 ± 0.154/0.334 ± 0.141 | 0.353 ± 0.149 |
| 1 | LSTM | 128 | Acc. (%) | 69.44/69.44 | 75.00/55.56 | 66.67/69.44 | 61.11/72.22 | 44.44/27.78 | 63.33 ± 10.45/58.89 ± 16.61 | 61.11 ± 14.05 |
| 1 | LSTM | 128 | F1 (%) | 69.23/68.84 | 74.02/55.42 | 66.56/68.24 | 60.63/72.22 | 44.44/27.55 | 62.98 ± 10.23/58.46 ± 16.48 | 60.72 ± 13.90 |
| 1 | LSTM | 128 | MCC | 0.394/0.405 | 0.543/0.112 | 0.335/0.422 | 0.228/0.444 | −0.111/−0.447 | 0.278 ± 0.219/0.187 ± 0.340 | 0.233 ± 0.290 |
| 1 | LSTM | 256 | Acc. (%) | 75.00/77.78 | 80.56/61.11 | 72.22/61.11 | 61.11/72.22 | 55.56/77.78 | 68.89 ± 9.20/70.00 ± 7.54 | 69.44 ± 8.43 |
| 1 | LSTM | 256 | F1 (%) | 74.98/77.71 | 80.17/60.99 | 70.78/59.09 | 60.63/72.14 | 55.42/77.78 | 68.40 ± 9.13/69.54 ± 8.04 | 68.97 ± 8.62 |
| 1 | LSTM | 256 | MCC | 0.501/0.559 | 0.636/0.224 | 0.496/0.248 | 0.228/0.447 | 0.112/0.556 | 0.395 ± 0.194/0.407 ± 0.145 | 0.401 ± 0.171 |
| 1 | GRU | 64 | Acc. (%) | 69.44/75.00 | 80.56/58.33 | 63.89/63.89 | 63.89/55.56 | 58.33/77.78 | 67.22 ± 7.54/66.11 ± 8.85 | 66.67 ± 8.24 |
| 1 | GRU | 64 | F1 (%) | 69.42/74.98 | 80.17/58.30 | 63.18/60.17 | 63.86/55.42 | 58.04/77.78 | 66.94 ± 7.54/65.33 ± 9.19 | 66.13 ± 8.44 |
| 1 | GRU | 64 | MCC | 0.390/0.501 | 0.636/0.167 | 0.289/0.351 | 0.278/0.112 | 0.169/0.556 | 0.352 ± 0.158/0.337 ± 0.176 | 0.345 ± 0.167 |
| 1 | GRU | 128 | Acc. (%) | 69.44/77.78 | 75.00/63.89 | 69.44/66.67 | 66.67/72.22 | 47.22/52.78 | 65.56 ± 9.56/66.67 ± 8.43 | 66.11 ± 9.03 |
| 1 | GRU | 128 | F1 (%) | 69.42/77.78 | 73.33/62.47 | 69.42/62.50 | 66.56/72.22 | 46.18/49.63 | 64.98 ± 9.64/64.92 ± 9.64 | 64.95 ± 9.64 |
| 1 | GRU | 128 | MCC | 0.390/0.556 | 0.577/0.302 | 0.390/0.447 | 0.335/0.444 | −0.058/0.064 | 0.327 ± 0.209/0.363 ± 0.170 | 0.345 ± 0.191 |
| 1 | GRU | 256 | Acc. (%) | 69.44/75.00 | 83.33/58.33 | 75.00/61.11 | 72.22/69.44 | 52.78/69.44 | 70.56 ± 10.03/66.67 ± 6.09 | 68.61 ± 8.52 |
| 1 | GRU | 256 | F1 (%) | 69.42/74.98 | 83.13/58.30 | 74.83/57.86 | 72.22/68.84 | 51.85/69.23 | 70.29 ± 10.29/65.84 ± 6.70 | 68.07 ± 8.97 |
| 1 | GRU | 256 | MCC | 0.390/0.501 | 0.684/0.167 | 0.507/0.267 | 0.444/0.405 | 0.058/0.394 | 0.417 ± 0.205/0.347 ± 0.117 | 0.382 ± 0.170 |
| 1 | RNN-SH (tanh) | 64 | Acc. (%) | 72.22/69.44 | 83.33/58.33 | 63.89/69.44 | 75.00/63.89 | 61.11/55.56 | 71.11 ± 7.97/63.33 ± 5.67 | 67.22 ± 7.93 |
| 1 | RNN-SH (tanh) | 64 | F1 (%) | 72.14/68.84 | 83.13/57.51 | 61.48/69.23 | 74.98/63.18 | 59.09/50.00 | 70.16 ± 8.87/61.75 ± 7.27 | 65.96 ± 9.13 |
| 1 | RNN-SH (tanh) | 64 | MCC | 0.447/0.405 | 0.684/0.174 | 0.321/0.394 | 0.501/0.289 | 0.248/0.149 | 0.440 ± 0.151/0.282 ± 0.107 | 0.361 ± 0.153 |
| 1 | RNN-SH (tanh) | 128 | Acc. (%) | 75.00/72.22 | 77.78/63.89 | 66.67/63.89 | 69.44/61.11 | 55.56/55.56 | 68.89 ± 7.74/63.33 ± 5.39 | 66.11 ± 7.22 |
| 1 | RNN-SH (tanh) | 128 | F1 (%) | 74.98/71.88 | 77.14/63.64 | 65.71/63.18 | 69.42/60.63 | 47.64/55.00 | 66.98 ± 10.48/62.86 ± 5.45 | 64.92 ± 8.60 |
| 1 | RNN-SH (tanh) | 128 | MCC | 0.501/0.456 | 0.589/0.282 | 0.354/0.289 | 0.390/0.228 | 0.177/0.114 | 0.402 ± 0.140/0.274 ± 0.111 | 0.338 ± 0.142 |
| 1 | RNN-SH (tanh) | 256 | Acc. (%) | 72.22/80.56 | 83.33/61.11 | 72.22/66.67 | 63.89/72.22 | 61.11/69.44 | 70.56 ± 7.78/70.00 ± 6.43 | 70.28 ± 7.14 |
| 1 | RNN-SH (tanh) | 256 | F1 (%) | 72.22/80.54 | 83.13/61.11 | 72.14/63.88 | 63.64/72.22 | 59.09/68.84 | 70.04 ± 8.26/69.32 ± 6.80 | 69.68 ± 7.58 |
| 1 | RNN-SH (tanh) | 256 | MCC | 0.444/0.612 | 0.684/0.222 | 0.447/0.401 | 0.282/0.444 | 0.248/0.405 | 0.421 ± 0.155/0.417 ± 0.124 | 0.419 ± 0.140 |
| 1 | RNN-SH (relu) | 64 | Acc. (%) | 63.89/77.78 | 44.44/50.00 | 63.89/69.44 | 58.33/66.67 | 55.56/75.00 | 57.22 ± 7.16/67.78 ± 9.72 | 62.50 ± 10.04 |
| 1 | RNN-SH (relu) | 64 | F1 (%) | 63.64/77.71 | 37.50/37.69 | 58.47/69.23 | 57.51/66.56 | 55.00/74.98 | 54.42 ± 8.92/65.24 ± 14.33 | 59.83 ± 13.10 |
| 1 | RNN-SH (relu) | 64 | MCC | 0.282/0.559 | −0.149/0.000 | 0.402/0.394 | 0.174/0.335 | 0.114/0.501 | 0.164 ± 0.185/0.358 ± 0.195 | 0.261 ± 0.213 |
| 1 | RNN-SH (relu) | 128 | Acc. (%) | 75.00/66.67 | 66.67/63.89 | 66.67/66.67 | 63.89/69.44 | 55.56/52.78 | 65.56 ± 6.24/63.89 ± 5.83 | 64.72 ± 6.09 |
| 1 | RNN-SH (relu) | 128 | F1 (%) | 74.83/65.71 | 62.50/60.17 | 64.94/66.25 | 63.64/69.42 | 44.62/39.23 | 62.10 ± 9.78/60.16 ± 10.88 | 61.13 ± 10.39 |
| 1 | RNN-SH (relu) | 128 | MCC | 0.507/0.354 | 0.447/0.351 | 0.372/0.342 | 0.282/0.390 | 0.243/0.169 | 0.370 ± 0.099/0.321 ± 0.078 | 0.346 ± 0.092 |
| 1 | RNN-SH (relu) | 256 | Acc. (%) | 66.67/75.00 | 83.33/61.11 | 69.44/63.89 | 66.67/75.00 | 63.89/44.44 | 70.00 ± 6.89/63.89 ± 11.25 | 66.94 ± 9.82 |
| 1 | RNN-SH (relu) | 256 | F1 (%) | 66.25/74.83 | 83.13/61.11 | 68.24/63.64 | 66.56/74.98 | 63.64/42.86 | 69.56 ± 6.94/63.48 ± 11.76 | 66.52 ± 10.13 |
| 1 | RNN-SH (relu) | 256 | MCC | 0.342/0.507 | 0.684/0.222 | 0.422/0.282 | 0.335/0.501 | 0.282/−0.118 | 0.413 ± 0.143/0.279 ± 0.229 | 0.346 ± 0.202 |
| 2 | LSTM | 64 | Acc. (%) | 80.56/69.44 | 77.78/58.33 | 83.33/61.11 | 69.44/66.67 | 61.11/77.78 | 74.44 ± 8.13/66.67 ± 6.80 | 70.56 ± 8.44 |
| 2 | LSTM | 64 | F1 (%) | 80.54/69.42 | 77.14/58.30 | 83.33/57.86 | 69.42/66.56 | 60.99/77.78 | 74.29 ± 8.12/65.98 ± 7.43 | 70.14 ± 8.82 |
| 2 | LSTM | 64 | MCC | 0.612/0.390 | 0.589/0.167 | 0.667/0.267 | 0.390/0.335 | 0.224/0.556 | 0.496 ± 0.165/0.343 ± 0.130 | 0.420 ± 0.167 |
| 2 | LSTM | 128 | Acc. (%) | 75.00/75.00 | 75.00/58.33 | 75.00/66.67 | 69.44/66.67 | 55.56/77.78 | 70.00 ± 7.54/68.89 ± 6.89 | 69.44 ± 7.24 |
| 2 | LSTM | 128 | F1 (%) | 74.98/74.98 | 74.02/58.30 | 74.02/62.50 | 69.42/65.71 | 55.42/77.78 | 69.57 ± 7.34/67.85 ± 7.40 | 68.71 ± 7.42 |
| 2 | LSTM | 128 | MCC | 0.501/0.501 | 0.543/0.167 | 0.543/0.447 | 0.390/0.354 | 0.112/0.556 | 0.418 ± 0.163/0.405 ± 0.136 | 0.411 ± 0.150 |
| 2 | LSTM | 256 | Acc. (%) | 63.89/75.00 | 75.00/55.56 | 69.44/61.11 | 63.89/72.22 | 52.78/83.33 | 65.00 ± 7.37/69.44 ± 9.94 | 67.22 ± 9.02 |
| 2 | LSTM | 256 | F1 (%) | 62.47/74.83 | 74.02/55.42 | 68.24/59.09 | 63.64/72.14 | 49.63/83.28 | 63.60 ± 8.08/68.95 ± 10.30 | 66.27 ± 9.64 |
| 2 | LSTM | 256 | MCC | 0.302/0.507 | 0.543/0.112 | 0.422/0.248 | 0.282/0.447 | 0.064/0.671 | 0.322 ± 0.160/0.397 ± 0.197 | 0.360 ± 0.183 |
| 2 | GRU | 64 | Acc. (%) | 75.00/77.78 | 77.78/63.89 | 63.89/55.56 | 66.67/61.11 | 61.11/69.44 | 68.89 ± 6.43/65.56 ± 7.58 | 67.22 ± 7.22 |
| 2 | GRU | 64 | F1 (%) | 74.98/77.71 | 77.14/63.64 | 61.48/53.25 | 66.56/61.11 | 61.11/69.23 | 68.26 ± 6.69/64.99 ± 8.18 | 66.62 ± 7.65 |
| 2 | GRU | 64 | MCC | 0.501/0.559 | 0.589/0.282 | 0.321/0.124 | 0.335/0.222 | 0.222/0.394 | 0.394 ± 0.133/0.316 ± 0.150 | 0.355 ± 0.147 |
| 2 | GRU | 128 | Acc. (%) | 66.67/77.78 | 80.56/61.11 | 80.56/66.67 | 69.44/69.44 | 55.56/55.56 | 70.56 ± 9.40/66.11 ± 7.54 | 68.33 ± 8.80 |
| 2 | GRU | 128 | F1 (%) | 66.56/77.78 | 80.17/61.11 | 80.54/62.50 | 69.42/68.84 | 55.42/54.29 | 70.42 ± 9.36/64.90 ± 7.93 | 67.66 ± 9.10 |
| 2 | GRU | 128 | MCC | 0.335/0.556 | 0.636/0.222 | 0.612/0.447 | 0.390/0.405 | 0.112/0.118 | 0.417 ± 0.193/0.350 ± 0.158 | 0.383 ± 0.180 |
| 2 | GRU | 256 | Acc. (%) | 69.44/77.78 | 83.33/55.56 | 77.78/66.67 | 63.89/72.22 | 58.33/77.78 | 70.56 ± 9.06/70.00 ± 8.31 | 70.28 ± 8.70 |
| 2 | GRU | 256 | F1 (%) | 69.42/77.71 | 83.13/55.00 | 77.71/62.50 | 63.64/72.14 | 58.30/77.71 | 70.44 ± 9.04/69.01 ± 8.94 | 69.72 ± 9.02 |
| 2 | GRU | 256 | MCC | 0.390/0.559 | 0.684/0.114 | 0.559/0.447 | 0.282/0.447 | 0.167/0.559 | 0.416 ± 0.186/0.425 ± 0.163 | 0.421 ± 0.175 |
| 2 | RNN-SH (tanh) | 64 | Acc. (%) | 61.11/52.78 | 83.33/61.11 | 72.22/61.11 | 61.11/66.67 | 66.67/38.89 | 68.89 ± 8.31/56.11 ± 9.69 | 62.50 ± 11.06 |
| 2 | RNN-SH (tanh) | 64 | F1 (%) | 54.18/39.23 | 83.13/60.00 | 70.78/60.00 | 56.25/63.88 | 66.56/38.13 | 66.18 ± 10.50/52.25 ± 11.18 | 59.21 ± 12.89 |
| 2 | RNN-SH (tanh) | 64 | MCC | 0.354/0.169 | 0.684/0.236 | 0.496/0.236 | 0.298/0.401 | 0.335/−0.228 | 0.433 ± 0.142/0.163 ± 0.210 | 0.298 ± 0.225 |
| 2 | RNN-SH (tanh) | 128 | Acc. (%) | 72.22/69.44 | 75.00/63.89 | 72.22/58.33 | 63.89/66.67 | 61.11/55.56 | 68.89 ± 5.39/62.78 ± 5.15 | 65.83 ± 6.09 |
| 2 | RNN-SH (tanh) | 128 | F1 (%) | 70.78/69.23 | 74.02/61.48 | 71.43/55.56 | 63.64/66.56 | 54.18/44.62 | 66.81 ± 7.19/59.49 ± 8.78 | 63.15 ± 8.82 |
| 2 | RNN-SH (tanh) | 128 | MCC | 0.496/0.394 | 0.543/0.321 | 0.471/0.193 | 0.282/0.335 | 0.354/0.243 | 0.429 ± 0.097/0.297 ± 0.071 | 0.363 ± 0.108 |
| 2 | RNN-SH (tanh) | 256 | Acc. (%) | 75.00/75.00 | 80.56/63.89 | 69.44/75.00 | 58.33/58.33 | 58.33/61.11 | 68.33 ± 8.89/66.67 ± 7.03 | 67.50 ± 8.06 |
| 2 | RNN-SH (tanh) | 256 | F1 (%) | 74.98/74.51 | 80.42/63.18 | 68.24/74.98 | 57.51/56.70 | 54.04/56.25 | 67.04 ± 10.03/65.12 ± 8.23 | 66.08 ± 9.23 |
| 2 | RNN-SH (tanh) | 256 | MCC | 0.501/0.521 | 0.620/0.289 | 0.422/0.501 | 0.174/0.181 | 0.211/0.298 | 0.385 ± 0.170/0.358 ± 0.132 | 0.372 ± 0.153 |
| 2 | RNN-SH (relu) | 64 | Acc. (%) | 72.22/77.78 | 69.44/63.89 | 63.89/72.22 | 58.33/63.89 | 52.78/55.56 | 63.33 ± 7.11/66.67 ± 7.66 | 65.00 ± 7.58 |
| 2 | RNN-SH (relu) | 64 | F1 (%) | 72.14/77.71 | 66.30/60.17 | 61.48/72.22 | 57.51/63.18 | 51.85/53.25 | 61.86 ± 6.99/65.31 ± 8.69 | 63.58 ± 8.08 |
| 2 | RNN-SH (relu) | 64 | MCC | 0.447/0.559 | 0.491/0.351 | 0.321/0.444 | 0.174/0.289 | 0.058/0.124 | 0.298 ± 0.163/0.354 ± 0.146 | 0.326 ± 0.158 |
| 2 | RNN-SH (relu) | 128 | Acc. (%) | 69.44/75.00 | 80.56/58.33 | 63.89/63.89 | 63.89/63.89 | 61.11/66.67 | 67.78 ± 6.94/65.56 ± 5.44 | 66.67 ± 6.34 |
| 2 | RNN-SH (relu) | 128 | F1 (%) | 67.41/74.51 | 80.54/55.56 | 61.48/63.86 | 63.64/63.18 | 60.00/62.50 | 66.61 ± 7.40/63.92 ± 6.08 | 65.27 ± 6.90 |
| 2 | RNN-SH (relu) | 128 | MCC | 0.449/0.521 | 0.612/0.193 | 0.321/0.278 | 0.282/0.289 | 0.236/0.447 | 0.380 ± 0.136/0.346 ± 0.120 | 0.363 ± 0.129 |
| 2 | RNN-SH (relu) | 256 | Acc. (%) | 66.67/72.22 | 77.78/63.89 | 61.11/63.89 | 58.33/55.56 | 52.78/50.00 | 63.33 ± 8.50/61.11 ± 7.66 | 62.22 ± 8.16 |
| 2 | RNN-SH (relu) | 256 | F1 (%) | 66.25/71.88 | 77.71/62.47 | 57.86/63.64 | 57.51/53.25 | 42.86/37.69 | 60.44 ± 11.46/57.78 ± 11.65 | 59.11 ± 11.63 |
| 2 | RNN-SH (relu) | 256 | MCC | 0.342/0.456 | 0.559/0.302 | 0.267/0.282 | 0.174/0.124 | 0.101/0.000 | 0.288 ± 0.158/0.233 ± 0.157 | 0.261 ± 0.160 |
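
The summary columns of the table can be cross-checked against the per-trial values. The sketch below takes the accuracy row for the one-layer LSTM with 64 units and recomputes the mean and spread with NumPy. It assumes that the "±" figures are population standard deviations (`ddof=0`) and that the total average pools all ten validation and test values; these assumptions reproduce the printed numbers for this row but are not stated in the table itself. The helper name `summarize` is hypothetical.

```python
import numpy as np

# Per-trial accuracies (%) from the one-layer LSTM, 64-unit row of the table.
validation = [75.00, 77.78, 58.33, 69.44, 61.11]
test = [72.22, 55.56, 75.00, 63.89, 63.89]


def summarize(values):
    """Return (mean, population standard deviation) of a list of scores."""
    arr = np.asarray(values, dtype=np.float64)
    return arr.mean(), arr.std(ddof=0)  # assumption: ddof=0 matches the table


val_mean, val_std = summarize(validation)
test_mean, test_std = summarize(test)
# Assumption: the total average pools validation and test trials together.
total_mean, total_std = summarize(validation + test)

print(f"validation: {val_mean:.2f} ± {val_std:.2f}")
print(f"test:       {test_mean:.2f} ± {test_std:.2f}")
print(f"total:      {total_mean:.2f} ± {total_std:.2f}")
```

The same helper can be applied to any other row to check the F1 or MCC summaries.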

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fujita, T.; Luo, Z.; Quan, C.; Mori, K.; Cao, S. Performance Evaluation of RNN with Hyperbolic Secant in Gate Structure through Application of Parkinson’s Disease Detection. Appl. Sci. 2021, 11, 4361. https://doi.org/10.3390/app11104361