1. Introduction

The Internet has provided many application services for several decades by exchanging packets between two hosts, one of which consumes information while the other produces it. In other words, it is a host-centric network: a consumer generates a packet destined for a producer’s IP address, and intermediate routers forward the packet based on that destination address. However, the current Internet, with such a communication pattern, is gradually revealing its limitations in providing rapidly increasing information-oriented services. Such services are often location-independent, whereas IP addresses are tied to specific sites. Moreover, the Internet incurs the burden of translating a domain name into an IP address before a packet can be sent. Named data networking (NDN) [1,2] is one of the representative future Internet architectures. It enables a growing number of information-based services to be deployed efficiently and effectively, because packets are delivered based on the content name rather than the destination IP address. In the delivery of an NDN packet, the name of the information matters more than the location of the information provider.

A packet in NDN contains a name, or content name, indicating what information is of interest. A name has a human-friendly hierarchical structure made up of several components, each of which is a character string. An NDN router manages a forwarding information base (FIB) containing a large number of name prefixes with associated output faces. To deliver an incoming packet, the router searches the FIB for a prefix that matches the name in the packet. This process is called name lookup. In NDN, name lookup is accomplished by component-wise matching, whereas IP address lookup on the Internet is accomplished by bit-wise matching. Since multiple prefixes can match a name, the NDN router must perform longest prefix matching (LPM), which selects the longest matching prefix as the best match. Similarly, Internet routers perform IP address lookup as LPM [3].
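As an illustration of component-wise LPM, the minimal Python sketch below stores a hypothetical FIB in a hash table keyed by component tuples and probes candidate prefixes from the longest to the shortest, so the first hit is the longest matching prefix. The FIB contents, face numbers, and the names `split_name` and `lpm_lookup` are assumptions for illustration, not part of any scheme cited here.

```python
from typing import Dict, Optional, Tuple

Name = Tuple[str, ...]


def split_name(name: str) -> Name:
    """Split '/ride/wagon/kw' into ('ride', 'wagon', 'kw')."""
    return tuple(c for c in name.split("/") if c)


def lpm_lookup(fib: Dict[Name, int], name: str) -> Optional[Tuple[Name, int]]:
    """Return (longest matching prefix, output face), or None if nothing matches."""
    comps = split_name(name)
    # Probe from the full name down to its first component.
    for length in range(len(comps), 0, -1):
        prefix = comps[:length]
        if prefix in fib:
            return prefix, fib[prefix]
    return None


# Hypothetical FIB: prefix -> output face.
fib = {split_name("/ride"): 1, split_name("/ride/wagon"): 2}
print(lpm_lookup(fib, "/ride/wagon/kw"))  # (('ride', 'wagon'), 2)
print(lpm_lookup(fib, "/ride/bike/du"))   # (('ride',), 1)
print(lpm_lookup(fib, "/walk/home"))      # None
```

Each probe is an exact-match hash lookup, so in this sketch a name with c components needs at most c probes.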

An IP address has a fixed size of 32 bits for IPv4 (or 128 bits for IPv6); thus, the length of an IP prefix is also bounded. In NDN, on the other hand, the length of a component is variable and unbounded, and so is the number of components in a name. Consequently, the lengths of both names and name prefixes vary and are unbounded. The numbers of names and name prefixes are both expected to increase significantly as application services grow rapidly. Hence, compared to that of the Internet, the FIB of NDN is more complex and is expected to be much larger.

Name lookup is challenging because of the difficulty of LPM as well as the complex structure and large size of the FIB. Even though it is a complicated and time-consuming task, it must be carried out fast enough to keep up with the link speed in NDN. Recently, many researchers have focused on high-speed name lookup techniques.

Most name lookup schemes are based on tries or hashing. The trie is a hierarchical search structure widely used in IP lookup, and a name in NDN is likewise hierarchically composed of components. For this reason, several trie-based name lookup techniques have been studied [4,5,6,7,8]. Those techniques primarily focused on reducing the average number of memory accesses per lookup to speed up name lookup. Alternatively, hashing techniques have been used to achieve efficient name lookup [9,10,11,12,13,14,15], since a name consists of several components, each of which is a variable-length string, and hashing is generally used to map variable-size data to a fixed-size value. Some methods perform name lookup using tries and hashing together [16,17].
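To illustrate the trie-based approach, here is a minimal Python sketch of a component trie in which each edge is labeled with one name component; LPM walks down the trie and remembers the deepest node that is a FIB prefix. The class names and face numbers are hypothetical, and the sketch makes no attempt at the memory-access optimizations of the cited schemes.

```python
from typing import Dict, Optional


class TrieNode:
    def __init__(self) -> None:
        self.children: Dict[str, "TrieNode"] = {}
        self.face: Optional[int] = None  # set only if this node is a FIB prefix


class NameTrie:
    """Component trie: one edge per name component."""

    def __init__(self) -> None:
        self.root = TrieNode()

    def insert(self, prefix: str, face: int) -> None:
        node = self.root
        for comp in filter(None, prefix.split("/")):
            node = node.children.setdefault(comp, TrieNode())
        node.face = face

    def lookup(self, name: str) -> Optional[int]:
        """Walk down component by component, keeping the deepest prefix's face."""
        node, best = self.root, None
        for comp in filter(None, name.split("/")):
            node = node.children.get(comp)
            if node is None:
                break
            if node.face is not None:
                best = node.face
        return best


trie = NameTrie()
trie.insert("/ride", 1)
trie.insert("/ride/wagon", 2)
print(trie.lookup("/ride/wagon/kw"))  # 2
print(trie.lookup("/ride/bike/du"))   # 1
print(trie.lookup("/walk"))           # None
```

One node is visited per matched component, which is why the number of memory accesses per lookup is the quantity the trie-based schemes try to reduce.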

Routing for packet delivery is performed according to the matching prefix in the FIB; thus, the prefix is also called a route prefix. A route prefix represents a common route for multiple names. Therefore, the name lookup time can be greatly reduced if route prefixes are cached, which is called route prefix caching or prefix caching. A few prefix caching schemes have been proposed to perform name lookup efficiently [18,19].

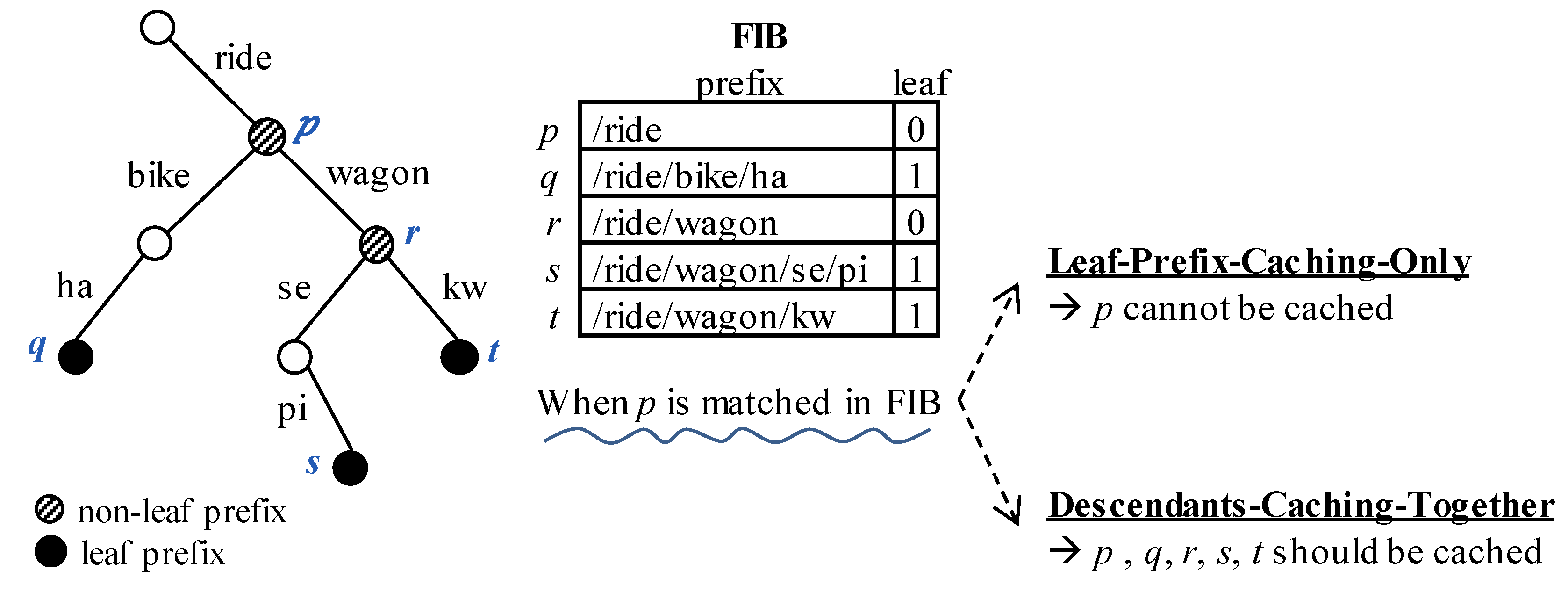

Prefix caching, however, is challenging because of a problem that must be solved. In the FIB, a leaf prefix is a prefix that has no descendant prefix, whereas a non-leaf prefix has at least one descendant. Caching a non-leaf prefix may cause a problem that does not arise when caching a leaf prefix: if a matching prefix is non-leaf, its descendants may also match the name. Thus, caching a non-leaf prefix may yield a wrong LPM result if some of its descendants are not cached yet. In this paper, we present an elaborate method to avoid this incomplete prefix caching problem, which occurs when a non-leaf prefix is cached. Our technique caches non-leaf prefixes together with the branch information of their children. The branch information is represented by a Bloom filter [20], which is used to check whether a child exists on a given branch.
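The sketch below shows one way such per-prefix branch information could be kept, assuming an m-bit Bloom filter stored as an integer and k bit positions derived by hashing the child component with SHA-256 under k different index prefixes. The hash construction and the class name `ChildBloomFilter` are our assumptions for illustration; the defaults m = 8 and k = 2 follow the configuration the evaluation finds suitable.

```python
import hashlib
from typing import Iterator


class ChildBloomFilter:
    """m-bit Bloom filter over the components that immediately follow a prefix."""

    def __init__(self, m: int = 8, k: int = 2) -> None:
        self.m, self.k = m, k
        self.bits = 0  # the whole filter fits in one small integer

    def _positions(self, component: str) -> Iterator[int]:
        # k positions from SHA-256 over "<index>/<component>"; components never
        # contain '/', so the hashed strings are unambiguous.
        for i in range(self.k):
            digest = hashlib.sha256(f"{i}/{component}".encode()).digest()
            yield int.from_bytes(digest[:4], "big") % self.m

    def add(self, component: str) -> None:
        for pos in self._positions(component):
            self.bits |= 1 << pos

    def might_contain(self, component: str) -> bool:
        # False: definitely no descendant prefix on this branch.
        # True: a descendant may exist on this branch (or it is a false positive).
        return all((self.bits >> pos) & 1 for pos in self._positions(component))


# Branch information of /ride when /ride/wagon is in the FIB.
bf = ChildBloomFilter(m=8, k=2)
bf.add("wagon")
print(bf.might_contain("wagon"))  # True: inserted components are never missed
```

Because a Bloom filter has no false negatives, a negative answer is always safe to act on; only positive answers can be wrong.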

The rest of this paper is organized as follows. Section 2 describes name lookup for packet forwarding in NDN and overviews route prefix caching and related work. Section 3 explains the proposed route prefix caching scheme using Bloom filters in NDN. In Section 4, the performance is evaluated in terms of several variables. Finally, Section 5 concludes the paper.

2. Background

2.1. Name Lookup in NDN Packet Forwarding

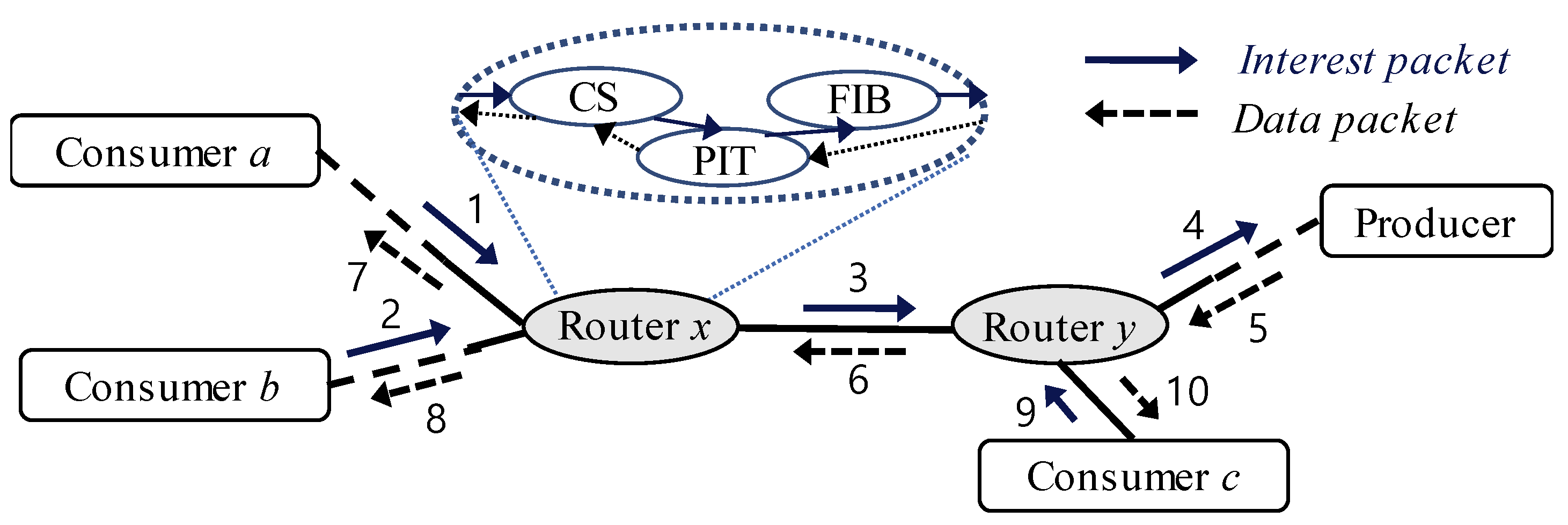

Like the Internet, NDN is composed of numerous nodes, which can be categorized into hosts and routers, and the communication links connecting them. A host can be a consumer or a producer of data. As a consumer, a host issues an interest packet containing the name of the desired data. A router forwards the packet to an output face determined by the name. The producer replies with a data packet containing the data associated with the name.

In addition to the FIB, a router has a content store (CS) and a pending interest table (PIT) to deliver packets efficiently. The CS is a cache that supports the in-network caching feature of NDN; it allows a previously cached data packet to be reused when an interest packet arrives with the same name as before. The PIT allows only one packet to be forwarded when two or more identical interest packets arrive. If the content name of an incoming interest packet is the same as a name already kept in the PIT, only its incoming interface is added to that PIT entry, and the packet is not forwarded any further.

Figure 1 shows an example of how interest packets and data packets are delivered when three consumers send the same interest in NDN. In this figure, the numbers indicate the transmission order. When router x receives two interest packets (1 and 2), it stores their incoming interfaces in the PIT and transmits only one interest packet (3). Note that a data packet travels in the direction opposite to that of the corresponding interest packet. When router y receives a data packet (5), the data are cached in the CS for future reuse. Later, when the router receives the interest packet (9), it can immediately send back the data packet (10) that is already cached in the CS.
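The interplay of the CS, PIT, and FIB described above can be sketched as follows. This is a deliberately simplified model under our own assumptions: a single router, string names, a dictionary FIB searched component-wise, and unsolicited data simply dropped. The class and method names are hypothetical, and real NDN forwarders handle much more, such as interest lifetimes.

```python
from typing import Dict, List, Optional, Set


class ToyNdnRouter:
    """Interest/data handling with a content store (CS), a PIT, and a FIB."""

    def __init__(self, fib: Dict[str, int]) -> None:
        self.fib = fib                      # name prefix -> output face
        self.cs: Dict[str, bytes] = {}      # name -> cached data
        self.pit: Dict[str, Set[int]] = {}  # name -> faces waiting for the data

    def _lpm_face(self, name: str) -> Optional[int]:
        comps = [c for c in name.split("/") if c]
        for n in range(len(comps), 0, -1):
            face = self.fib.get("/" + "/".join(comps[:n]))
            if face is not None:
                return face
        return None

    def on_interest(self, name: str, in_face: int) -> str:
        if name in self.cs:                 # exact match in the CS
            return "reply from CS"
        if name in self.pit:                # exact match in the PIT: aggregate
            self.pit[name].add(in_face)
            return "aggregated in PIT"
        out_face = self._lpm_face(name)     # LPM in the FIB
        if out_face is None:
            return "dropped (no route)"
        self.pit[name] = {in_face}
        return f"forwarded to face {out_face}"

    def on_data(self, name: str, data: bytes) -> List[int]:
        """Return the faces the data goes back to, caching it in the CS."""
        faces = self.pit.pop(name, None)
        if faces is None:                   # unsolicited data: drop it
            return []
        self.cs[name] = data
        return sorted(faces)


router = ToyNdnRouter({"/ride": 3})
print(router.on_interest("/ride/wagon/kw", in_face=1))  # forwarded to face 3
print(router.on_interest("/ride/wagon/kw", in_face=2))  # aggregated in PIT
print(router.on_data("/ride/wagon/kw", b"payload"))     # [1, 2]
print(router.on_interest("/ride/wagon/kw", in_face=4))  # reply from CS
```

The CS and PIT are searched by exact match, while only the FIB needs LPM, which is the distinction drawn in the following paragraphs.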

A content name comprises a series of components separated by slashes, each of which is a variable-length character string. For example, the name /ride/wagon/horse is composed of three components: ride, wagon, and horse. A name prefix is also represented as a sequence of components, like a name. Therefore, unlike IP address lookup, name lookup must match a name against a name prefix on a component basis rather than a bit basis, which makes name lookup more complicated than IP address lookup.
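The difference between component-wise and character-wise matching can be shown with a few lines of Python. The helper names are hypothetical; the point is that /ride/wa is a character-string prefix of /ride/wagon/horse but not a name prefix, because wa and wagon are different components.

```python
from typing import List


def components(name: str) -> List[str]:
    """'/ride/wagon/horse' -> ['ride', 'wagon', 'horse']"""
    return [c for c in name.split("/") if c]


def is_name_prefix(prefix: str, name: str) -> bool:
    """True if the components of `prefix` equal the leading components of `name`."""
    p, n = components(prefix), components(name)
    return len(p) <= len(n) and n[:len(p)] == p


print(components("/ride/wagon/horse"))                     # ['ride', 'wagon', 'horse']
print(is_name_prefix("/ride/wagon", "/ride/wagon/horse"))  # True
print(is_name_prefix("/ride/wa", "/ride/wagon/horse"))     # False
print("/ride/wagon/horse".startswith("/ride/wa"))          # True (character-wise)
```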

Since the CS and the PIT store names, exact matching (EM) is performed on them to find the exact name. The FIB, on the other hand, stores prefixes; hence, name lookup must perform LPM, which selects the longest of the prefixes that match a name. For example, if the prefixes /ride and /ride/wagon are stored in the FIB, both match the content name /ride/wagon/kw of an interest. LPM then selects /ride/wagon, the longer of the two, as the final lookup result. LPM is a more difficult and time-consuming process than EM. The performance of packet forwarding depends heavily on the speed of name lookup, which is determined by the efficiency of LPM. For these reasons, many previous studies on packet forwarding in NDN focused on speeding up name lookup by improving the efficiency of LPM [4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19].

2.2. Route Prefix Caching

One way to speed up name lookup is route caching, which stores previous lookup results in a local cache associated with the FIB and reuses them. Name lookup finds the entry whose prefix is the longest match for a content name, where an entry is a pair of a prefix and its output face. Route caching can be classified into content name caching and name prefix caching according to the information stored in the cache. Content name caching stores a content name with the corresponding output face. It exploits temporal locality, but its effectiveness is limited because NDN already provides in-network caching. Name prefix caching stores a name prefix with the corresponding output face. In this scheme, a single name prefix in the cache can serve as the LPM result for more than one content name; in other words, it also takes advantage of spatial locality. Therefore, name prefix caching is more effective than content name caching.

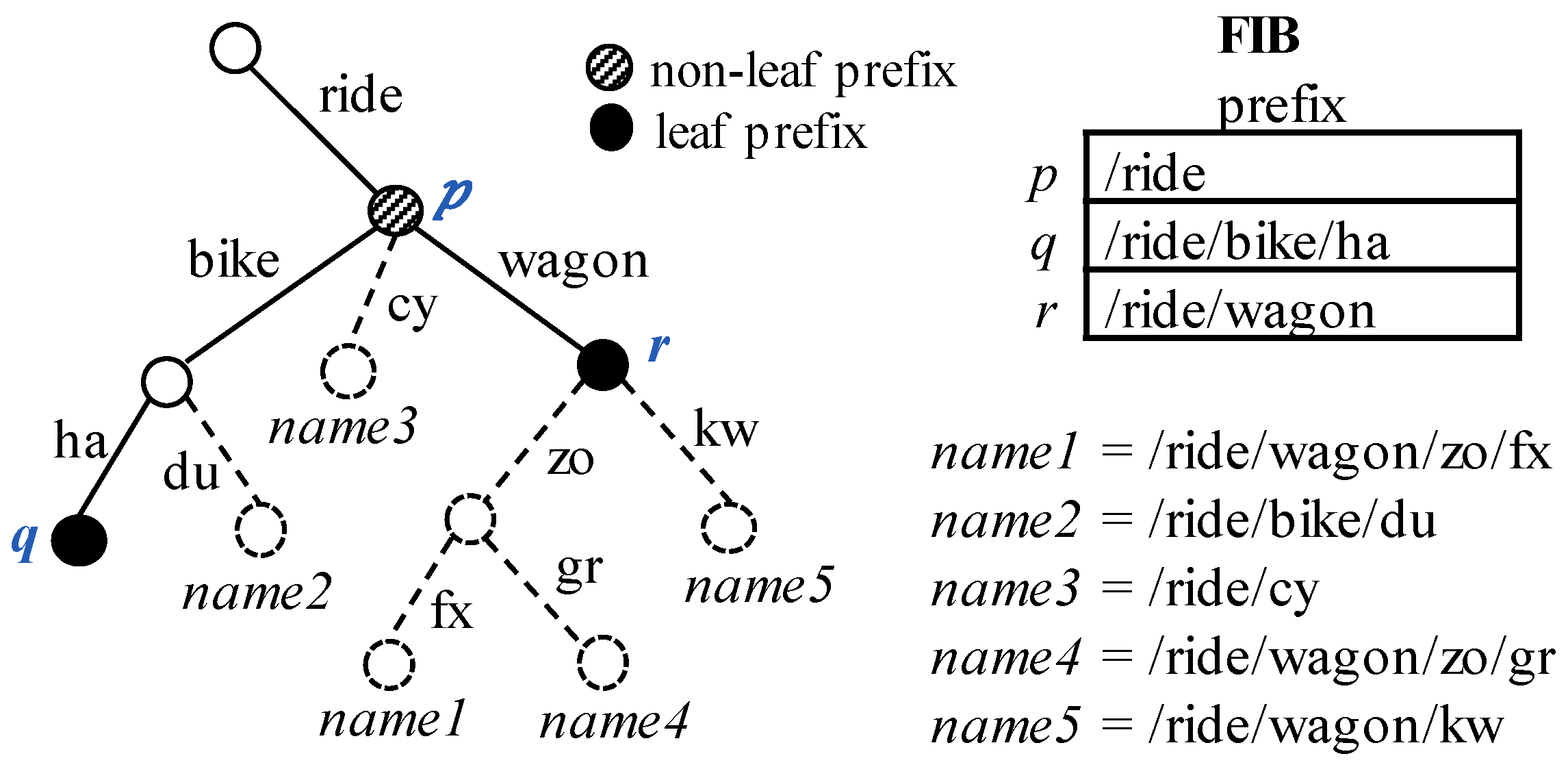

Figure 2 shows examples of name prefix caching, assuming that the FIB contains three prefixes, p, q, and r. Assume that the name of the first packet is name1 (=/ride/wagon/zo/fx) and that its longest matching prefix, r (=/ride/wagon), is cached. Then, the cached lookup result can be reused for fast forwarding when a subsequent content name is any of name1, name4 (=/ride/wagon/zo/gr), or name5 (=/ride/wagon/kw). Now, assume instead that the first content name is name2 (=/ride/bike/du) and that its lookup result, p (=/ride), is cached. If the next content name is name4 (=/ride/wagon/zo/gr), the prefix p is mistakenly regarded as its longest matching prefix because of the cache hit. In this case, the correct result for name4 is the prefix r, not p. The problem arises because a non-leaf prefix is cached while not all of its descendants are cached. This is called incomplete prefix caching. Note that caching a non-leaf prefix does not always cause the problem: if the second content name were name3 (=/ride/cy) in the example above, the resulting prefix p would be correct.
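The scenario of Figure 2 can be reproduced with a naive prefix cache that trusts any cached matching prefix. In this minimal sketch, only p and r are modeled because the text does not give the name of q, and the face labels and function names are hypothetical.

```python
from typing import Dict, Optional


def longest_match(table: Dict[str, str], name: str) -> Optional[str]:
    """Longest prefix in `table` that matches `name` component-wise."""
    parts = [c for c in name.split("/") if c]
    for n in range(len(parts), 0, -1):
        candidate = "/" + "/".join(parts[:n])
        if candidate in table:
            return candidate
    return None


fib = {"/ride": "face-p", "/ride/wagon": "face-r"}  # p and r of Figure 2
cache: Dict[str, str] = {}


def naive_cached_lookup(name: str) -> str:
    """Naive prefix cache: any cached matching prefix is trusted (unsafe)."""
    hit = longest_match(cache, name)
    if hit is not None:
        return hit
    result = longest_match(fib, name)
    if result is None:
        raise KeyError(f"no route for {name}")
    cache[result] = fib[result]  # cache the FIB result, leaf or not
    return result


print(naive_cached_lookup("/ride/bike/du"))      # /ride  (name2; p is cached)
print(naive_cached_lookup("/ride/wagon/zo/gr"))  # /ride  (name4; wrong)
print(longest_match(fib, "/ride/wagon/zo/gr"))   # /ride/wagon (correct LPM, r)
```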

2.3. Related Work

Several prefix caching techniques have been studied for fast IP address lookup [21,22,23]. Like prefix caching in name lookup, prefix caching in IP address lookup suffers from the incomplete prefix caching problem. Prefix expansion is one of the most efficient ways of resolving it. It is applied whenever the longest matching prefix for a given IP address is a non-leaf prefix, and it creates virtual prefixes by expanding that prefix. The expanded prefixes have no dependency on the other descendant prefixes of the longest matching prefix.

Complete prefix tree expansion (CPTE) and partial prefix tree expansion (PPTE) [21] were proposed to solve the incomplete prefix caching problem in IP address lookup. In these techniques, all non-leaf prefixes must be expanded in the forwarding table, which enlarges the original forwarding table. Moreover, it is impractical to apply these techniques to name lookup because the degree of a prefix node is unbounded. The multi-zone pipelined cache (MPC) [22] performs either prefix caching with prefix expansion for short prefixes or IP address caching for long prefixes. The reverse route cache with minimal expansion (RRC-ME) [23] was also presented for prefix caching. It expands non-leaf prefixes dynamically without modifying the original forwarding table. However, additional memory accesses may be required, up to the expanded length. Moreover, it is applicable only to trie-based IP lookup engines.

Recently, a few works have addressed prefix caching for name lookup. These schemes also use prefix expansion to resolve the incomplete prefix caching problem. On-the-fly caching [18] caches the most specific non-overlapping prefix in name lookup. It dynamically expands the longest matching prefix so that the expanded prefixes do not overlap any other prefix in the FIB, and it caches the expanded prefixes as RRC-ME does in IP address lookup. Thus, it shares the pros and cons of RRC-ME. Furthermore, on-the-fly caching is more complex and difficult to implement in name lookup because, unlike in IP address lookup, the branches of a trie node are not easily represented in a fixed size. Another prefix caching scheme for name lookup uses prefix expansion based on the critical distance [19]. The critical distance is the maximum distance between a prefix and its descendant prefixes. This prefix expansion ensures independence between the expanded prefix and the other descendant prefixes.
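The general idea shared by these expansion schemes can be sketched, as we read it, in the name space: expand the longest matching prefix along the name's own components until the expanded prefix has no descendant in the FIB. This is our simplified illustration, not the exact algorithm of [18] or [19]; the linear descendant scan, the FIB contents, and the function names are assumptions.

```python
from typing import Dict, Optional, Tuple

Name = Tuple[str, ...]


def comps(name: str) -> Name:
    return tuple(c for c in name.split("/") if c)


def has_descendant(fib: Dict[Name, int], prefix: Name) -> bool:
    """True if some FIB prefix is strictly longer than `prefix` and extends it."""
    return any(len(q) > len(prefix) and q[:len(prefix)] == prefix for q in fib)


def expand_for_cache(fib: Dict[Name, int], name: str) -> Optional[Tuple[Name, int]]:
    """Return (prefix safe to cache, output face), or None.

    Starting from the longest matching prefix, append the name's own components
    until the resulting prefix has no descendant in the FIB.
    """
    parts = comps(name)
    lpm = next((parts[:n] for n in range(len(parts), 0, -1) if parts[:n] in fib), None)
    if lpm is None:
        return None
    for n in range(len(lpm), len(parts) + 1):
        if not has_descendant(fib, parts[:n]):
            return parts[:n], fib[lpm]
    return None  # even the full name overlaps a longer FIB prefix


fib = {comps("/ride"): 1, comps("/ride/wagon"): 2}
print(expand_for_cache(fib, "/ride/bike/du"))   # (('ride', 'bike'), 1)
print(expand_for_cache(fib, "/ride/wagon/kw"))  # (('ride', 'wagon'), 2)
```

For a FIB holding only /ride and /ride/wagon, the expanded prefix /ride/bike is safe to cache for /ride/bike/du, but it matches fewer names than /ride itself, which is the drawback discussed below.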

The incomplete prefix caching caused by caching a non-leaf prefix is a significant problem for both name lookup and IP address lookup. Most studies proposed prefix expansion techniques of various kinds. However, since an expanded prefix is always longer than the original prefix, it matches fewer names than the original. This paper proposes a technique that solves incomplete prefix caching without any prefix expansion by using a Bloom filter.

4. Evaluation

For the experiment, we used route prefixes derived from the dmoz name set [24], because there is currently no real route prefix table for NDN. Since the name set originated from Internet URLs, some conversions were made for the experiment; for instance, some intermediate nodes that were not prefixes were randomly changed into prefixes.

Table 1 shows the FIB table containing 3,644,942 prefixes in total. The FIB table consists of leaf prefixes and non-leaf prefixes, which account for 77.6% and 22.4% of the total, respectively.

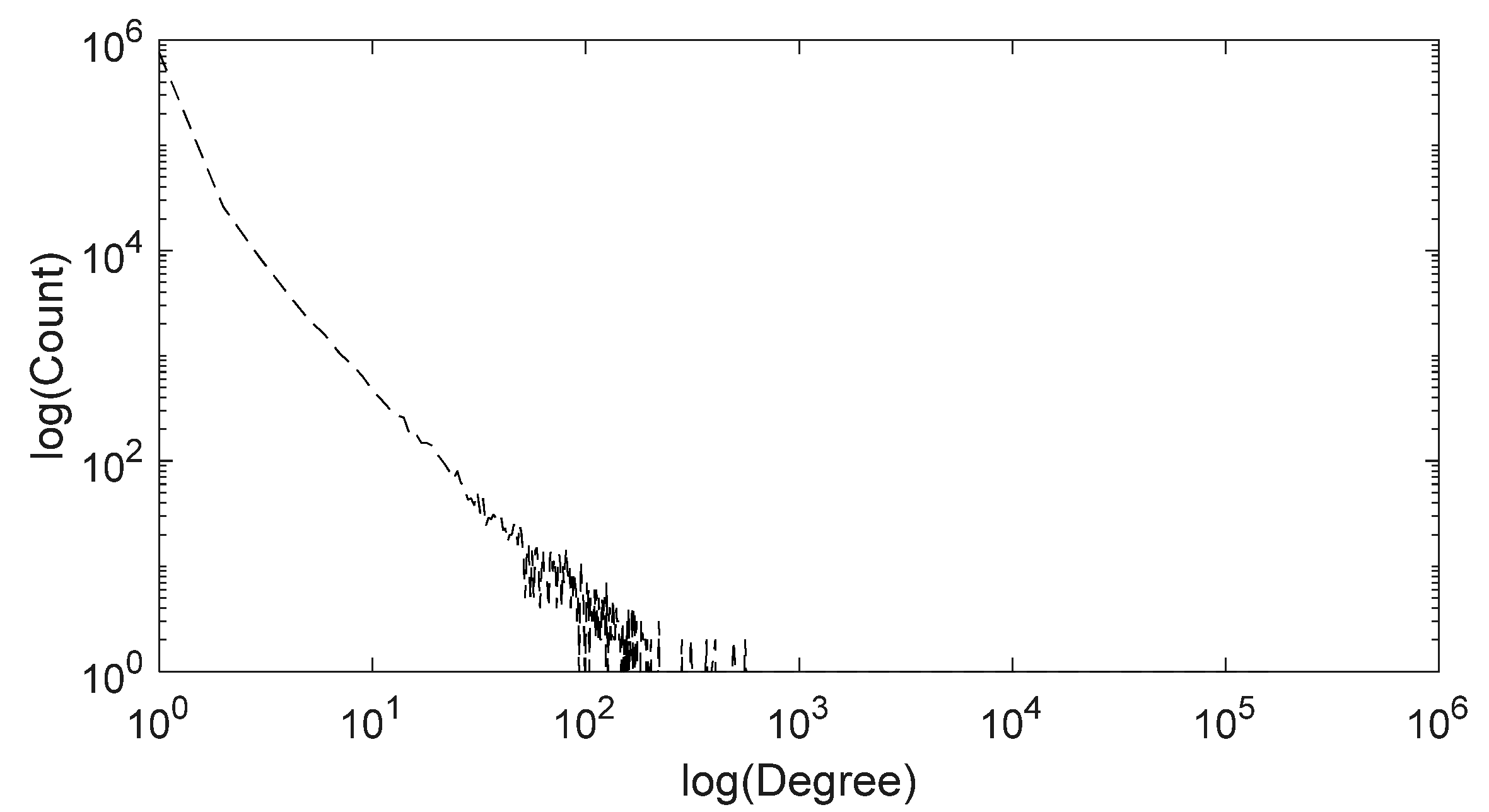

Figure 8 shows the frequency of prefixes according to their degrees. The degree of a prefix is the number of distinct components that immediately follow the prefix in its descendant prefixes. It determines the number of elements stored in the prefix's Bloom filter and is therefore related to the filter's false positive ratio. All leaf prefixes have a degree of 0 by definition, and most non-leaf prefixes (94.0% of them) have a degree of 1. The mean degree of non-leaf prefixes is 2.023; if an outlier is excluded, it becomes 1.814.
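Under our reading that the degree counts the distinct components that immediately follow a prefix in any of its descendant prefixes (the same components that would be inserted into the prefix's Bloom filter), the degrees and their non-leaf mean can be computed as in the sketch below. The prefix list is a toy example, not the dmoz-derived FIB, and the function names are hypothetical.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Iterable, Set, Tuple

Name = Tuple[str, ...]


def prefix_degrees(prefixes: Iterable[str]) -> Dict[Name, int]:
    """Degree of each FIB prefix: distinct components right after it in its descendants."""
    fib = {tuple(c for c in p.split("/") if c) for p in prefixes}
    next_comps: DefaultDict[Name, Set[str]] = defaultdict(set)
    for q in fib:
        # q contributes its (n+1)-th component to each FIB ancestor of length n.
        for n in range(1, len(q)):
            if q[:n] in fib:
                next_comps[q[:n]].add(q[n])
    return {p: len(next_comps.get(p, set())) for p in fib}


def mean_nonleaf_degree(degrees: Dict[Name, int]) -> float:
    nonleaf = [d for d in degrees.values() if d > 0]
    return sum(nonleaf) / len(nonleaf) if nonleaf else 0.0


deg = prefix_degrees(["/ride", "/ride/wagon", "/ride/wagon/zo", "/ride/bike/du"])
print(deg[("ride",)], deg[("ride", "wagon")], deg[("ride", "bike", "du")])  # 2 1 0
print(mean_nonleaf_degree(deg))  # 1.5
```

Note that /ride/bike/du contributes bike to /ride even though /ride/bike is not itself a prefix, since a filter for /ride must still report that branch.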

Six traces were used in our experiment, each generated stochastically using a Zipf distribution, since Internet traces are known to follow Zipf-like behavior [25]:

$$ P(r) \propto \frac{1}{r^{\alpha}}, $$

where P(r) is the relative frequency of the packet of rank r, r is the rank in the frequency of packets, and α is the skew parameter. Table 2 shows the cache hit ratio of our scheme for the traces generated with α = {0.6, 0.7, 0.8, 0.9, 1.0, 1.1}. As α increases, high-ranking packets occur more frequently in a trace. In general, a high cache hit ratio is achieved with a large cache and with a trace of high α. When the cache size is greater than 160 KB, the cache hit ratio is not much affected by α.
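A trace of this kind can be generated as in the following sketch. The number of distinct names, the trace length, the seed, and the function names are hypothetical; the exact generator used for the six traces is not described here.

```python
import random
from typing import List, Sequence


def zipf_probabilities(num_items: int, alpha: float) -> List[float]:
    """P(r) proportional to 1 / r**alpha for ranks r = 1..num_items, normalized."""
    weights = [1.0 / r ** alpha for r in range(1, num_items + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def zipf_trace(names: Sequence[str], alpha: float, length: int, seed: int = 0) -> List[str]:
    """Draw `length` packet names; names[0] has rank 1 (the most popular)."""
    rng = random.Random(seed)
    return rng.choices(names, weights=zipf_probabilities(len(names), alpha), k=length)


print([round(p, 4) for p in zipf_probabilities(3, 1.0)])  # [0.5455, 0.2727, 0.1818]
for alpha in (0.6, 1.1):
    top10 = sum(zipf_probabilities(1000, alpha)[:10])
    print(f"alpha={alpha}: top-10 share {top10:.3f}")
```

The share of the ten most popular names grows with α, which reflects why a trace with high α is easier to cache.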

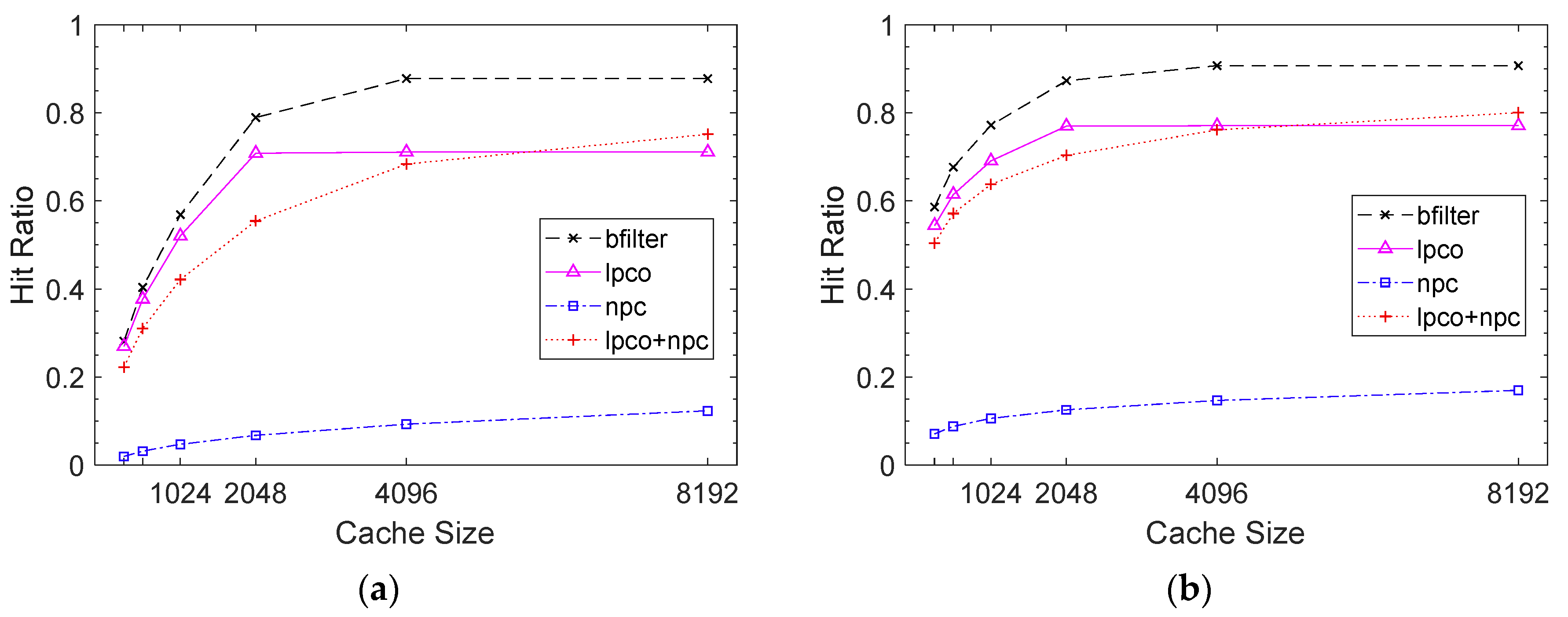

Non-leaf prefix caching without any supplementary method causes the incomplete prefix caching problem. Figure 9 compares several caching schemes that avoid the problem. Our Bloom filter-based scheme is denoted by “bfilter”, and the Leaf-Prefix-Caching-Only scheme, which allows only leaf prefixes to be cached, is denoted by “lpco”. The No-Prefix-Caching scheme, which caches a content name itself instead of its matching prefix, is denoted by “npc”. “lpco+npc” denotes a scheme that caches the matching prefix if it is a leaf prefix, or the content name if the matching prefix is a non-leaf prefix. The Bloom filter-based prefix cache is far superior to the other schemes in terms of cache hit ratio. As expected, “npc” shows the worst cache hit ratio because it does not cache prefixes and thus does not exploit any spatial locality. The cache hit ratio of “lpco+npc” is worse than that of “lpco” up to a cache size of 4096 entries, because content name caching requires more cache entries to exploit a similar level of locality; therefore, “lpco+npc” incurs more cache misses than “lpco” in a small cache. However, if the cache is sufficiently large, “lpco+npc” can use the extra space to reduce cache misses even when the matching prefix is a non-leaf prefix.

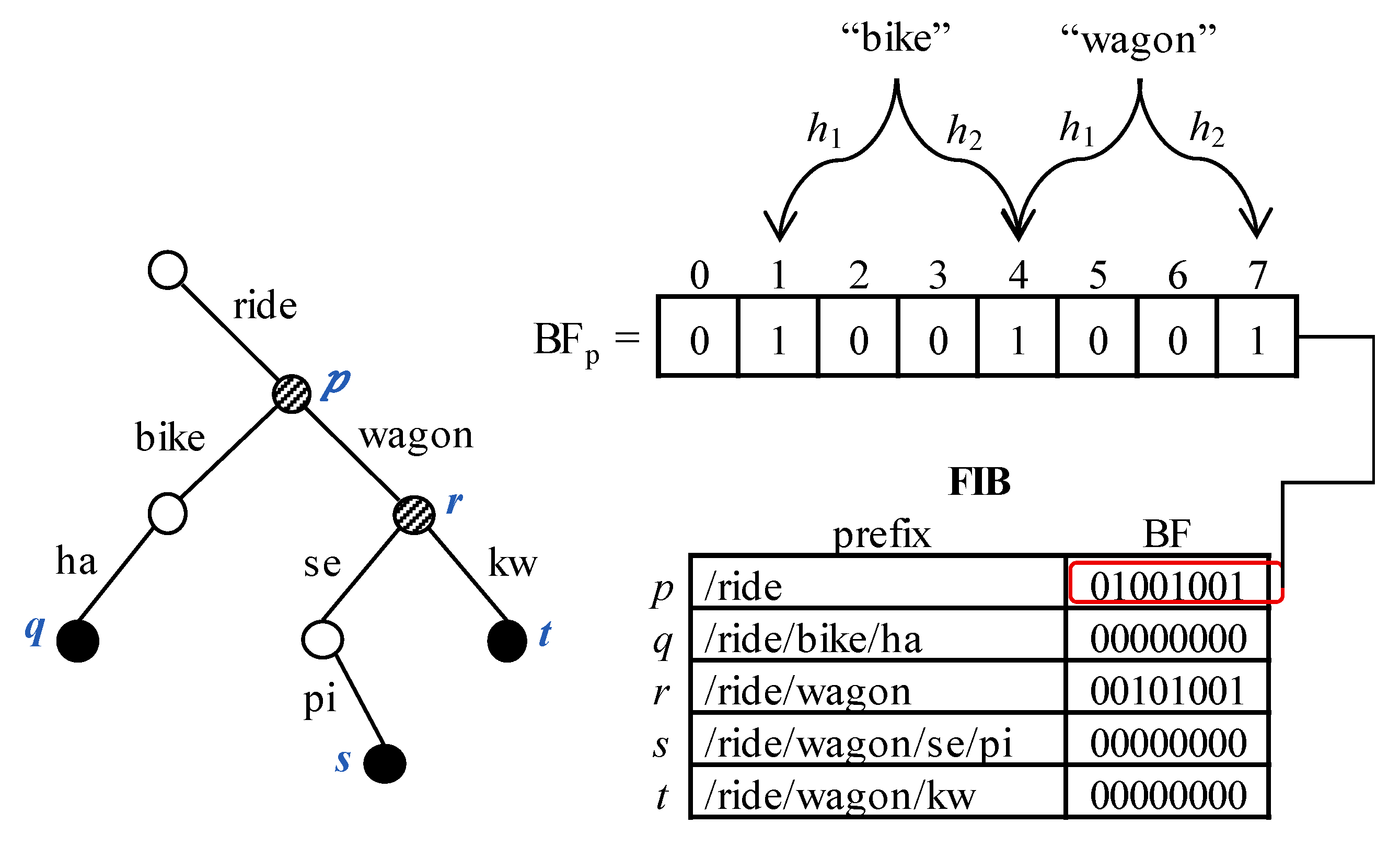

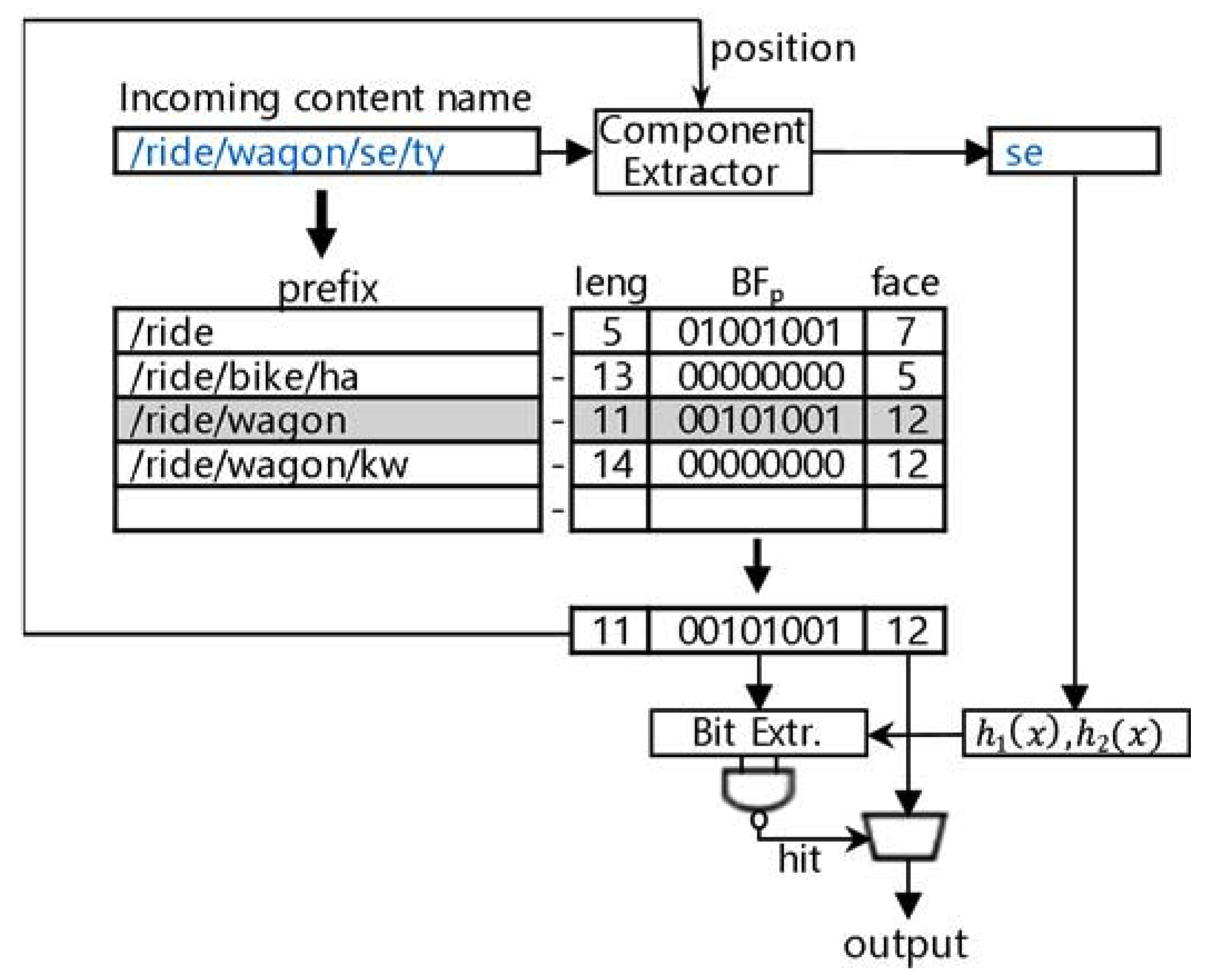

A cache miss occurs when there is no matching prefix in the cache or when the Bloom filter of the matching prefix asserts a positive. A positive assertion implies that there may be a longer matching prefix in the FIB that is not in the cache. However, a Bloom filter may assert a positive falsely. The false positive probability depends on the filter size m, the number of hash functions k, and the number of elements n contained in the filter. It is calculated as follows [26]:

$$ f = \left(1 - \left(1 - \frac{1}{m}\right)^{kn}\right)^{k} \approx \left(1 - e^{-kn/m}\right)^{k} $$

In our scheme, the number of elements n corresponds to the degree of a prefix. Hence, n is 1 for most (94%) non-leaf prefixes, as discussed above, and its mean value is 1.814. Table 3 summarizes the false positive probability when the filter size m is 4 or 8 and the number of hash functions k is 1, 2, or 3. In general, the false positive ratio decreases as m increases. However, it does not always decrease as k increases; for instance, it increases with k for n ≥ 2 and m = 4.
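The standard Bloom filter false positive probability, (1 - (1 - 1/m)^(kn))^k, or its approximation (1 - e^(-kn/m))^k, can be evaluated directly for the small filters considered here. Which form underlies Table 3 is not stated, so the numbers this sketch prints are illustrative, and the function names are hypothetical.

```python
import math


def bloom_fp_exact(m: int, k: int, n: int) -> float:
    """False positive probability (1 - (1 - 1/m)^(k*n))^k."""
    return (1.0 - (1.0 - 1.0 / m) ** (k * n)) ** k


def bloom_fp_approx(m: int, k: int, n: int) -> float:
    """Common approximation (1 - e^(-k*n/m))^k."""
    return (1.0 - math.exp(-k * n / m)) ** k


for m in (4, 8):
    for n in (1, 2):
        row = "  ".join(f"k={k}: {bloom_fp_exact(m, k, n):.4f}" for k in (1, 2, 3))
        print(f"m={m}, n={n}  {row}")
```

For m = 4 and n = 2, the first form gives 0.4375, 0.4673, and 0.5555 for k = 1, 2, and 3, in line with the observation that the false positive ratio grows with k in that setting; these are our own evaluations of the formula, not the entries of Table 3.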

Table 4 shows the cache hit ratio for various Bloom filter parameters m and k. For m = 4, the cache hit ratio is somewhat lower with k = 2 than with k = 1. For m = 8, the cache hit ratio increases as k grows; however, a filter with k = 3 gains little over one with k = 2.

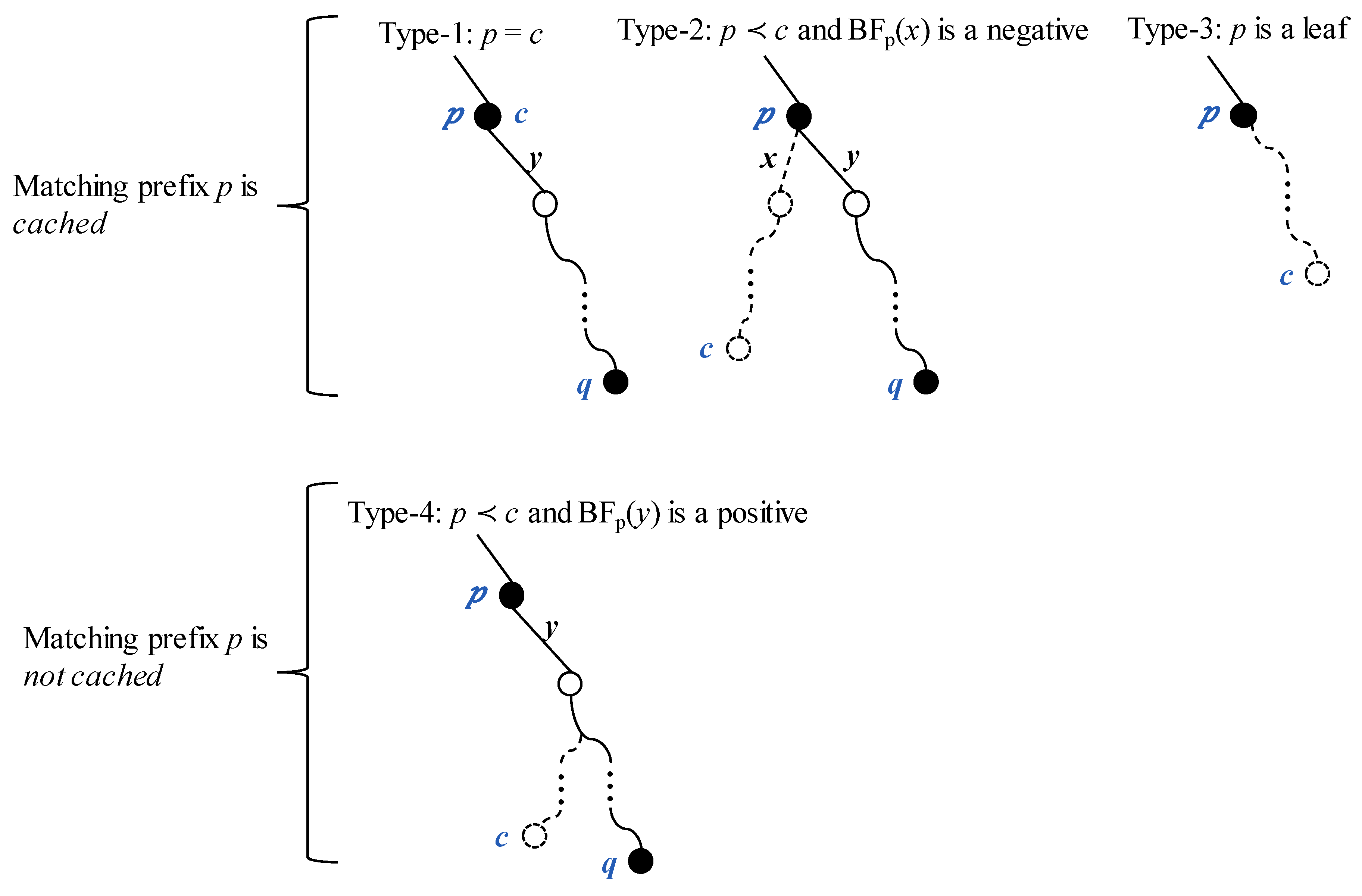

The matching type in the FIB is classified into four categories, as described in Section 3.2. In Type 1, the matching prefix is the same as the content name. In Type 2, the matching prefix is a non-leaf prefix whose Bloom filter asserts a negative. In Type 3, the matching prefix is a leaf prefix. In Type 4, the matching prefix is a non-leaf prefix whose Bloom filter asserts a positive.
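The classification can be expressed compactly as follows. This sketch assumes that Type 1 is checked first and that the filter of a non-leaf prefix is tested with the name component right after the prefix; an exact set of child components stands in for the Bloom filter, so it never produces false positives. The function names and the toy FIB are hypothetical.

```python
from typing import Dict, Set, Tuple

Name = Tuple[str, ...]


def child_components(fib: Set[Name]) -> Dict[Name, Set[str]]:
    """Exact branch sets, used here as a stand-in for each prefix's Bloom filter."""
    children: Dict[Name, Set[str]] = {p: set() for p in fib}
    for q in fib:
        for n in range(1, len(q)):
            if q[:n] in fib:
                children[q[:n]].add(q[n])
    return children


def matching_type(name: Name, lpm: Name, children: Dict[Name, Set[str]]) -> int:
    """Classify a FIB lookup result into Types 1-4."""
    if lpm == name:
        return 1  # matching prefix equals the content name
    if not children[lpm]:
        return 3  # leaf prefix
    if name[len(lpm)] in children[lpm]:
        return 4  # filter positive: a longer prefix may exist, so not cached
    return 2      # non-leaf prefix with a negative filter: cacheable


fib = {("ride",), ("ride", "wagon", "zo")}
ch = child_components(fib)
print(matching_type(("ride",), ("ride",), ch))                                   # 1
print(matching_type(("ride", "bike", "du"), ("ride",), ch))                      # 2
print(matching_type(("ride", "wagon", "zo", "gr"), ("ride", "wagon", "zo"), ch))  # 3
print(matching_type(("ride", "wagon", "kw"), ("ride",), ch))                     # 4
```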

Figure 10a shows the distribution of the matching types in the traces with α ranging from 0.6 to 1.1. Type 3 is the dominant matching type in all the traces, Types 2 and 4 account for a small portion, and very few packets are of Type 1.

Figure 10b shows the cache hit count for each type. The cache hit count increases with the cache size, especially for Type 3, because Type 3 packets make up a substantial part of the traces. There is no cache hit for Type 4 because Type 4 prefixes are not cached.

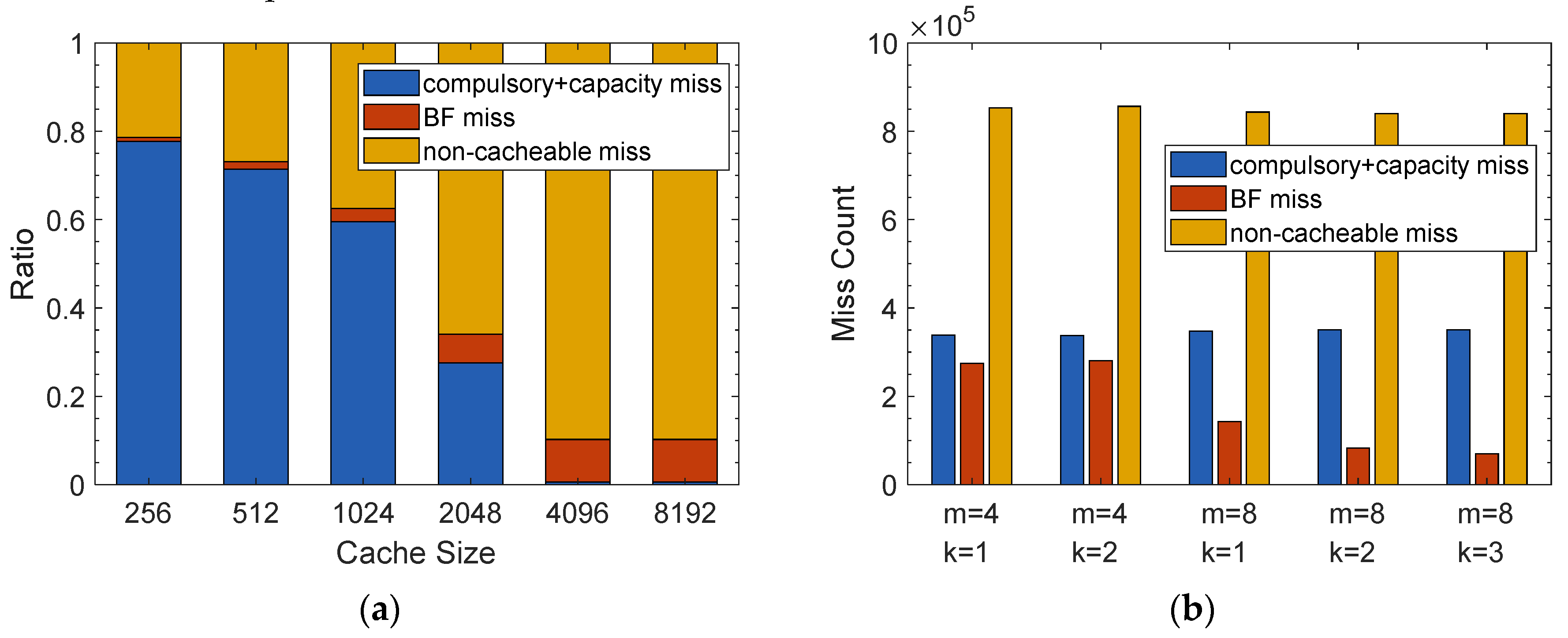

Conventional cache misses are classified into compulsory, capacity, and conflict misses. In our cache scheme, conflict misses do not occur because the cache memory is fully associative. Capacity and compulsory misses occur when there is no matching prefix in the cache because the prefix was evicted by replacement or was never loaded before, respectively. The Bloom filter-based cache scheme has two additional miss types: BF misses and non-cacheable misses. In a BF miss, there is a matching prefix in the cache, but its Bloom filter asserts a positive. A non-cacheable miss occurs when the matching prefix in the FIB is not cached because it is of Type 4. In this context, capacity misses do not include non-cacheable misses.
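The miss categories can be illustrated with a small fully associative LRU prefix cache. This sketch is our own simplified reading rather than the exact design of Section 3: an exact set of child components stands in for each Bloom filter so that the outcomes are deterministic, and with a real filter, false positives would only turn some hits into BF misses, never into wrong results. The class name, the LRU replacement policy, and the order in which miss types are checked are assumptions.

```python
from collections import OrderedDict
from typing import Dict, Optional, Set, Tuple

Name = Tuple[str, ...]


class GuardedPrefixCache:
    """Fully associative LRU prefix cache whose entries carry branch information."""

    def __init__(self, fib: Dict[Name, int], capacity: int) -> None:
        self.fib, self.capacity = fib, capacity
        # Exact child-component sets stand in for the per-prefix Bloom filters.
        self.children: Dict[Name, Set[str]] = {p: set() for p in fib}
        for q in fib:
            for n in range(1, len(q)):
                if q[:n] in fib:
                    self.children[q[:n]].add(q[n])
        self.cache: "OrderedDict[Name, int]" = OrderedDict()
        self.ever_loaded: Set[Name] = set()

    def _positive(self, prefix: Name, name: Name) -> bool:
        """Filter test with the name component that follows the prefix."""
        return len(name) > len(prefix) and name[len(prefix)] in self.children[prefix]

    @staticmethod
    def _longest(table: Dict[Name, int], name: Name) -> Optional[Name]:
        return next((name[:n] for n in range(len(name), 0, -1) if name[:n] in table), None)

    def lookup(self, name: Name) -> Tuple[Optional[int], str]:
        cached = self._longest(self.cache, name)
        if cached is not None and not self._positive(cached, name):
            self.cache.move_to_end(cached)             # refresh LRU position
            return self.cache[cached], "hit"
        lpm = self._longest(self.fib, name)            # fall back to the FIB
        if lpm is None:
            return None, "no route"
        if cached is not None:
            kind = "BF miss"
        elif self._positive(lpm, name):
            kind = "non-cacheable miss"                # Type 4
        elif lpm in self.ever_loaded:
            kind = "capacity miss"
        else:
            kind = "compulsory miss"
        if not self._positive(lpm, name):              # Type 4 is never cached
            self.cache[lpm] = self.fib[lpm]
            self.ever_loaded.add(lpm)
            if len(self.cache) > self.capacity:
                self.cache.popitem(last=False)         # evict the LRU entry
        return self.fib[lpm], kind


fib = {("ride",): 1, ("ride", "wagon"): 2, ("ride", "wagon", "zo", "gr"): 3}
cache = GuardedPrefixCache(fib, capacity=1)
for name in [("ride", "wagon", "zo", "fx"), ("ride", "bike", "du"), ("ride", "cy"),
             ("ride", "wagon", "kw"), ("ride", "bike", "du")]:
    print("/" + "/".join(name), cache.lookup(name))
```

With capacity 1, the five lookups yield a non-cacheable miss, a compulsory miss, a hit, a BF miss, and a capacity miss, in that order.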

Figure 11a shows the distribution of cache misses by type. For a small cache, capacity and compulsory misses account for a substantial portion of the total misses, but there are very few of them when the cache size is greater than 2048. BF misses and non-cacheable misses account for a relatively larger portion as the cache size increases. The actual miss counts for various Bloom filter configurations are shown in Figure 11b. As expected, compulsory and capacity misses do not change with the Bloom filter configuration. The BF miss count is lower for m = 8 than for m = 4. For m = 8, the BF miss counts are nearly the same for k = 2 and k = 3; likewise, for m = 4, they are similar for k = 1 and k = 2.

5. Conclusions

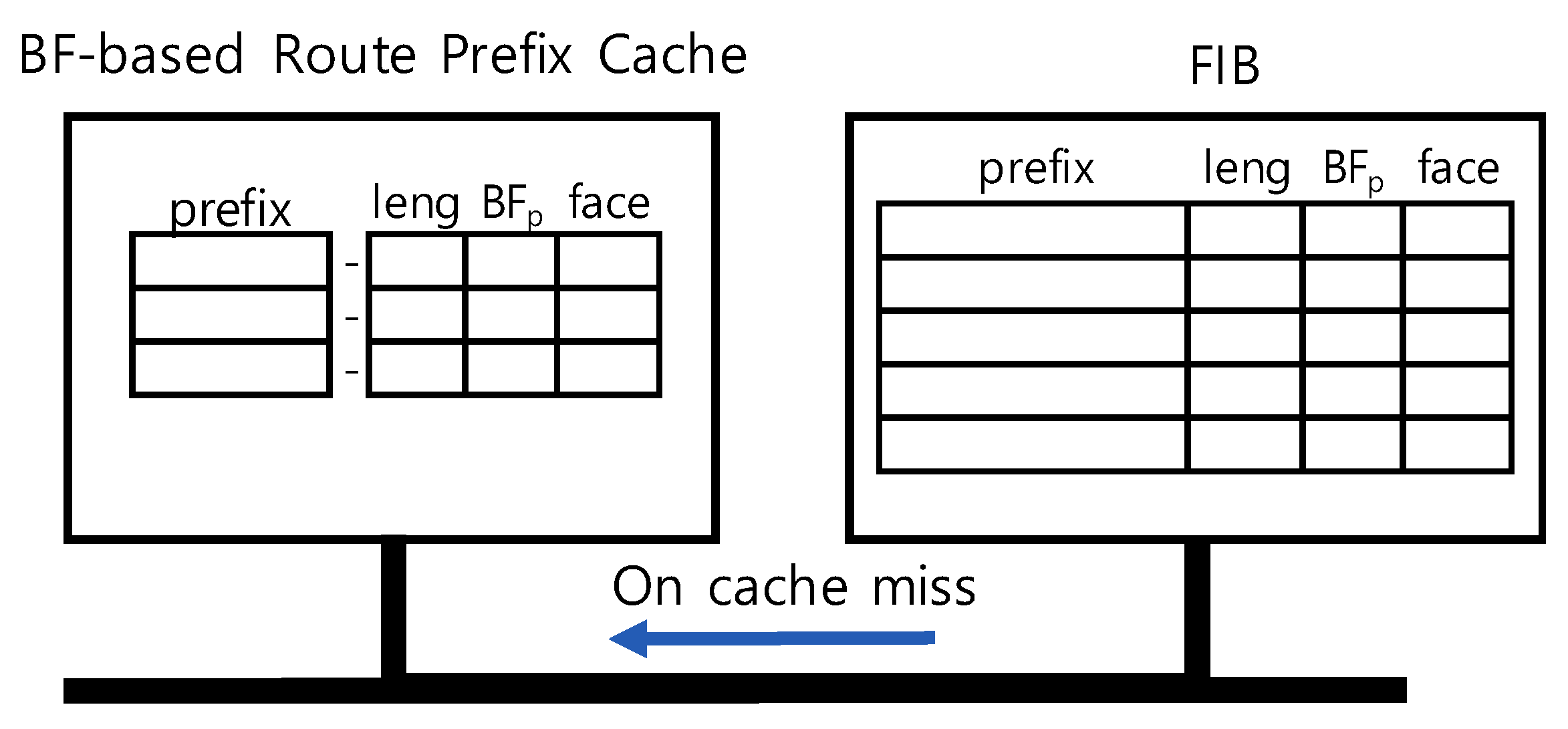

In this paper, we proposed a novel route prefix caching scheme for NDN. The fundamental idea is to use a Bloom filter to test whether there is a longer matching prefix in the FIB. A route prefix cache can reduce name lookup latency. In NDN, name lookup must find the longest matching prefix. Prefix caching is challenging because the matching result from a cache may be incorrect unless all the matching prefixes are already in the cache: if the longest matching prefix is not cached but a shorter one is, the cache can return an incorrect result.

To get around the problem, the content name itself can simply be cached instead of the matching prefix. However, in that scheme, a cache hit occurs only when the given name is exactly the same as the key; therefore, the cache hit ratio would be much lower than that of prefix caching schemes with the same capacity. Caching only leaf prefixes is another way to avoid incorrect prefix matching. In that scheme, non-leaf prefixes are never cached; hence, the caching effect is limited. Our design goal is to cache non-leaf prefixes without producing incorrect results. A Bloom filter is provided for each prefix to test whether there is a longer matching prefix.

We designed the Bloom filter-based prefix cache with two key considerations in mind. First, our design does not require much space: non-leaf prefix caching is made efficient just by adding a 4-bit or 8-bit Bloom filter to each prefix. Second, the miss penalty and hit time are not significantly affected by the Bloom filter. On a cache miss, the Bloom filter is fetched together with the matching prefix from the FIB, and delivering the additional 4-bit or 8-bit filter adds little to the miss penalty. Hashing for the Bloom filter affects the hit time, but only a single component is hashed, which requires little processing time.

The experimental results show that the Bloom filter-based prefix cache achieves a higher cache hit ratio than the other schemes, even with small filters and few hash functions. The false positive probability of a Bloom filter can be analyzed in terms of the filter size, the number of hash functions, and the number of elements. The cache misses caused by positive assertions of the Bloom filter were compared experimentally, and an 8-bit filter with two hash functions was found to be suitable for our prefix caching scheme.