Abstract

Chronic wounds, or wounds that are not healing properly, are a worldwide health problem that affects the global economy and population. With the aging of the population and the increasing number of obese and diabetic patients, the costs of chronic wound healing can be expected to rise even further. Wound assessment should be fast and accurate in order to reduce possible complications and thereby shorten the wound healing process. Contact methods often used by medical experts have drawbacks that are easily overcome by non-contact methods such as image analysis, where wound analysis is fully or partially automated. Two major tasks in image-based wound analysis are segmentation of the wound from the healthy skin and background, and classification of the most important wound tissues, such as granulation, fibrin, and necrosis. These tasks are necessary for further assessment, such as wound measurement or healing evaluation based on tissue representation. Researchers use various methods and algorithms for image-based wound analysis with the aim of improving accuracy rates and demonstrating the robustness of the proposed methods. Recently, neural networks and deep learning algorithms have driven considerable performance improvements across various fields, which has led to a significant rise in research papers in the field of wound analysis as well. The aim of this paper is to provide an overview of recent methods for non-contact wound analysis that could be used to develop an end-to-end solution for a fully automated wound analysis system incorporating all stages, from data acquisition, through segmentation and classification, to measurement and healing evaluation.

1. Introduction

The definition of chronic wounds (CW) varies across different fields of medicine, but they are often defined by the time required for the wound to heal. According to WoundSource, a broad definition would be, “A chronic wound is one that has failed to progress through the phases of healing in an orderly and timely fashion and has shown no significant progress toward healing in 30 days” [1]. Dadkhah et al. [2] define it as a wound that has not healed by 40% in four weeks, while Mukherjee et al. [3] and Gautam and Mukhopadhyay [4] define it as a wound that has remained unhealed for more than 6 or 12 weeks, respectively. Regardless of healing time, CW are wounds that do not heal despite medical treatment and should therefore be analyzed further. Overall, CW can take months or even years to heal, but if it is concluded that a wound is not healing properly, the treatment should be changed accordingly. Knowledge and fast decision making are crucial throughout the wound healing process, from the initial diagnosis to the final examination, because if wounds are not treated accurately and in an orderly manner, their severity can increase drastically at any time. Based on the healing period, wounds can be separated into two main categories: acute and chronic. Acute wounds heal gradually, within a time frame appropriate to the size and type of the wound, usually in a short period of time [5]. Chronic wounds can be divided by their cause, e.g., venous leg ulcers, diabetic foot ulcers, pressure ulcers, and burns [6]. In Chakraborty [7], CW are grouped similarly, but arterial ulcers are included instead of burns. Every type of CW has specific circumstances and should therefore be treated accordingly.

Chronic wounds affect populations and economies worldwide. The aging of the population, together with an increase in obesity and diabetes, contributes to a growing number of chronic wounds. According to [5], the annual expenses for the overall treatment of chronic wounds in the USA were estimated at $25 billion, affecting 6.5 million people. According to the same source, the prevalence of chronic wounds in the UK population is around 5.9%, which results in healthcare costs of £2.3–£3.1 billion per year. Germany and India have prevalence rates of 5.9% and 4.5%, respectively [8]. Worldwide, 24 million people were affected by chronic wounds in 2012 [7]. Overall cost data for other parts of the world, such as Africa or India, are not reported, but chronic wounds are likely to be a widespread problem there due to shortages of medical staff and equipment, especially in large rural areas [6].

Manual wound analysis is problematic because it is susceptible to the opinion of an individual; wound imaging, in contrast, has great potential to offer much more information than an assessment from a single point of view. Chronic wound analysis methods can be divided into contact and non-contact methods. Contact methods, such as manual planimetry using rulers, transparency tracing, color dye injection, and alginate molds, are considered traditional and were used mostly in the past [9]. They are usually very painful for patients as well as impractical for medical staff, and because of the irregular shape of wounds, they often lack accuracy and precision. Non-contact wound analysis has improved with the increase in computational power. Moreover, advances in data analysis have led to the fast-growing use of digital imaging in wound assessment. Wound imaging methods can be divided into digital camera imaging, hyperspectral imaging, thermal imaging, laser Doppler imaging, confocal microscopy, optical coherence tomography (OCT), near-infrared (NIR) reflectance spectroscopy imaging, ultrasound imaging, and other novel imaging techniques [6].

Zahia et al. [10] reviewed 82 scientific papers on image-based pressure wound analysis and identified four main wound imaging tasks: wound segmentation, wound measurement, tissue classification, and healing evaluation. 3D reconstruction, an important part of wound imaging, was not evaluated. Furthermore, the paper explains in detail the types of neural networks, their definition and usage, and their contribution to skin wound image analysis. All of the analyzed papers were published before 2018.

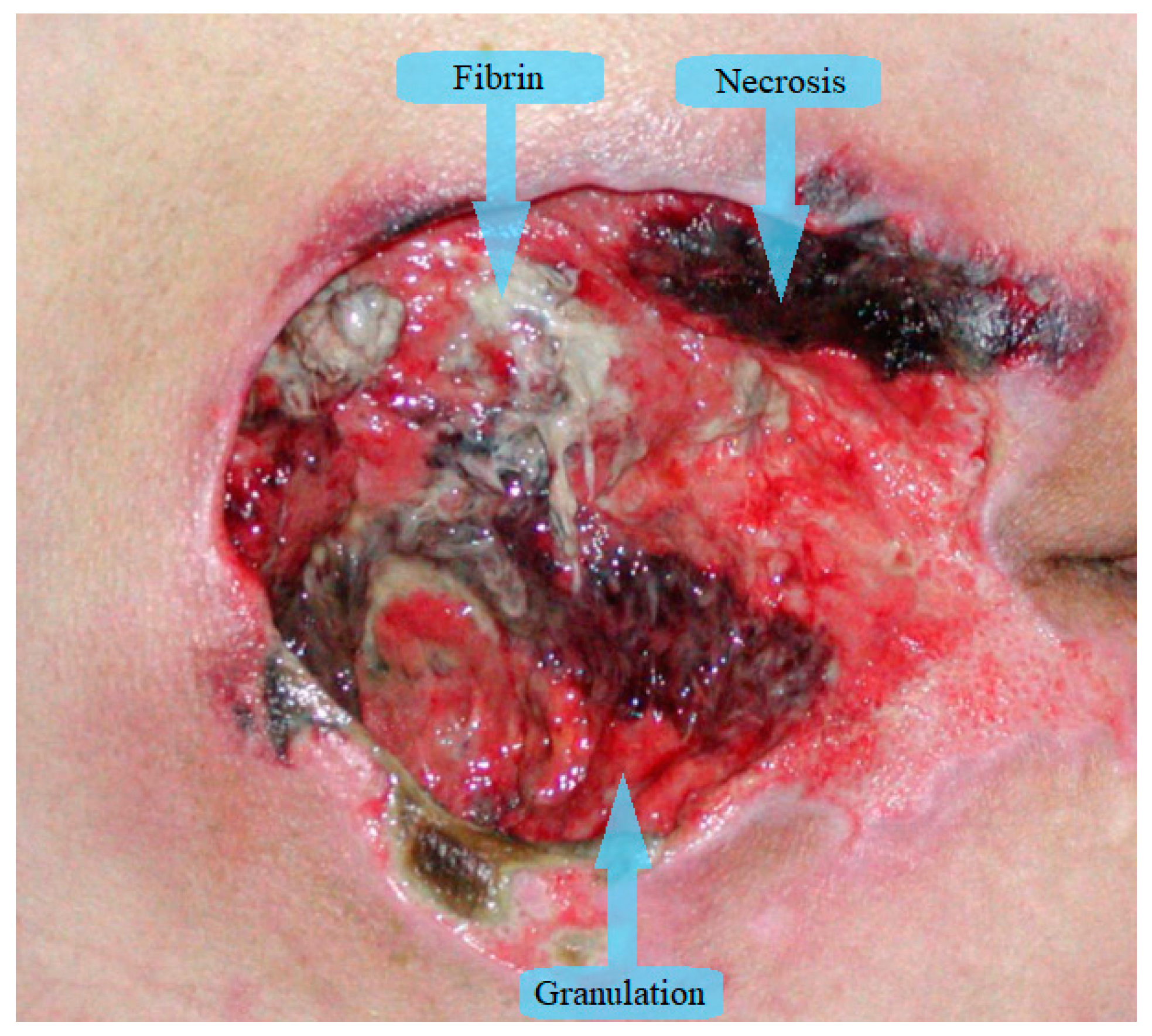

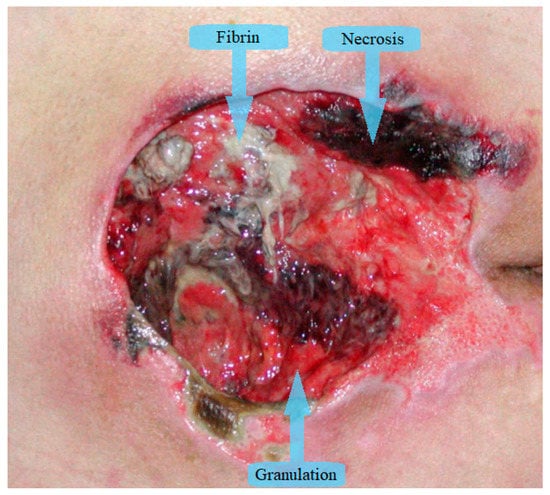

Wound segmentation separates the wound from the surrounding skin and background, wound measurement determines wound dimensions, tissue classification outlines the different wound tissues, and healing evaluation predicts the time necessary for the wound to heal. Most research papers on image-based wound assessment address wound segmentation and/or classification of wound tissues. The majority of papers divide wound tissues into three types: granulation, fibrin (slough), and necrosis (eschar), as can be seen in Figure 1 [11]. Some authors expand the number of tissue types for the purpose of increased accuracy. Rajathi et al. [12] added epithelial healing tissue, while Kumar and Reddy [13] expanded to eight tissue types, adding fascia, bone, muscle tissue, and tendon. In general, granulation is red tissue that is healing properly, fibrin (slough) is yellow, wet tissue that accumulates on the wound surface, and necrosis is black tissue that has died.

Figure 1.

Tissue types in chronic wounds.
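
The color description above suggests a very simple rule-based pixel classifier in the spirit of the red-yellow-black model. The following sketch labels a single RGB pixel by hue and brightness using Python's standard `colorsys` module. It assumes the pixel already lies inside a segmented wound region (reddish skin would otherwise be labeled granulation), and all thresholds are hypothetical values chosen for illustration; practical methods rely on much richer color and texture features.

```python
import colorsys

# Hypothetical thresholds, for illustration only.
DARK_VALUE = 0.2        # below this HSV value (brightness) a pixel is treated as black
RED_HUE_MAX = 20        # degrees; hues in [0, 20) or [330, 360) count as red
RED_HUE_MIN_WRAP = 330
YELLOW_HUE_MAX = 70     # hues in [20, 70) count as yellow

def classify_tissue_pixel(r, g, b):
    """Return 'necrosis', 'granulation', 'slough', or 'other' for an 8-bit RGB wound pixel."""
    h, _, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    hue = h * 360
    if v < DARK_VALUE:
        return "necrosis"       # black tissue
    if hue < RED_HUE_MAX or hue >= RED_HUE_MIN_WRAP:
        return "granulation"    # red tissue
    if hue < YELLOW_HUE_MAX:
        return "slough"         # yellow tissue (fibrin)
    return "other"

for px in [(200, 30, 30), (220, 200, 60), (20, 15, 10)]:
    print(px, classify_tissue_pixel(*px))
```

Fixed hue thresholds are exactly the kind of hardcoded rule that later work replaced with learned classifiers, since dark red granulation and black necrosis can overlap under poor lighting.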

In order to increase the objectivity of the results, computer-aided wound analysis should be automated as much as possible, without user interference, camera adjustment, placement of rulers or markers, or manual cropping of images. Similarly, test images should be acquired under different lighting conditions, since varying illumination is one of the significant problems affecting the robustness of a method.

Unfortunately, many challenges must be overcome in order to achieve such objectivity and robustness. Different wound types, such as burns or pressure, venous, and diabetic ulcers, each have typical locations where they appear, on flat or curved parts of the body, and typical sizes they can reach. Furthermore, wound surfaces can be very complex, with multiple types of tissue intertwined, which makes them very hard to separate. The skin surrounding the wound can also interfere with separation because of its various pigments; in particular, recently healed skin can still resemble parts of the wound in color. Depending on the camera used, wounds can be recorded in one or multiple shots. If a standard 2D camera is used and the wound is on a curved surface, a measurement from a single image will not be very precise. It is generally accepted that image-based methods have a maximum area measurement error of about 10%, depending on the wound location and the camera angle. This has been confirmed experimentally in several studies, including [14], where the authors also conclude that image-based measurement is a viable alternative to contact-based methods such as VisiTrak. To resolve the issue of curved surfaces, multiple images of the same wound must be taken from different angles, and multiple view geometry methods must then be used to reconstruct the wound surface and measure it precisely. Multiple view geometry approaches have limitations: they may fail to reconstruct arbitrary surfaces or may produce noisy 3D surfaces, in which case it is better to use a 3D camera. 3D cameras provide 3D point clouds, which means that precise measurement of curved surfaces is possible even from a single view. However, if multiple views with associated point clouds are available, a more detailed and precise reconstruction is possible through point cloud registration, regardless of the wound position and size.

Regardless of the camera used, the wound should be recorded from as close as the camera specifications allow in order to achieve the best possible resolution and sampling precision. To improve the generalization and robustness of the overall system, the lighting conditions for recording images should not favor a single type of lighting, especially when creating data samples for training machine learning methods. Furthermore, using diffuse light sources, i.e., avoiding directional light, should somewhat alleviate the influence of shadows from surface geometry and reflections from glossy parts of the wound.
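
Why a single 2D view underestimates the area of a wound on a curved body part can be shown with a small sketch. The following example triangulates a grid of 3D points (standing in for an RGB-D point cloud) sampled on a cylinder and compares the true surface area with the area of its projection onto the image plane. The cylinder radius, arc angle, length, grid resolution, and units are hypothetical values chosen for illustration, not data from the cited studies.

```python
import math

def triangle_area(a, b, c):
    """Area of a 3D triangle: half the norm of the cross product of two edge vectors."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    return 0.5 * math.sqrt(sum(x * x for x in cross))

def grid_mesh_area(points):
    """Surface area of a row-major grid of 3D points, two triangles per grid cell."""
    area = 0.0
    for r in range(len(points) - 1):
        for c in range(len(points[0]) - 1):
            p00, p01 = points[r][c], points[r][c + 1]
            p10, p11 = points[r + 1][c], points[r + 1][c + 1]
            area += triangle_area(p00, p01, p11) + triangle_area(p00, p11, p10)
    return area

def cylinder_patch(radius, half_angle, length, n=50):
    """Hypothetical wound patch on a cylinder (e.g., a leg) whose axis is parallel to y.

    The camera is assumed to look along -z, so projecting onto the image plane drops z.
    """
    pts = []
    for i in range(n + 1):
        y = length * i / n
        row = []
        for j in range(n + 1):
            theta = -half_angle + 2 * half_angle * j / n
            row.append((radius * math.sin(theta), y, radius * math.cos(theta)))
        pts.append(row)
    return pts

patch = cylinder_patch(radius=5.0, half_angle=math.radians(60), length=4.0)
surface = grid_mesh_area(patch)
projected = grid_mesh_area([[(x, y, 0.0) for (x, y, _) in row] for row in patch])
print(f"3D area: {surface:.2f} cm^2, projected 2D area: {projected:.2f} cm^2")
```

With these values the projected area is roughly 17% smaller than the surface area, which is why curved wounds call for multiple views or depth data.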

Typically, image analysis methods are tested only on a selected group of images, which is often not representative, so using highly diverse images for training and testing would result in more robust methods. Another issue is the small number of chronic wound images, especially annotated ones, in publicly available databases. The availability of annotated databases would save researchers in this field a lot of time, make it easier for other researchers to enter the field, and allow faster application of novel methods. The most commonly used wound image database is provided by the Medical Device Technical Consultancy Service (Medetec) [15] and is used by many researchers in this field [4,6,11,16].

The aim of this paper is to provide an overview of the most recent methods used in the various stages of non-contact wound analysis, which could be used to develop an end-to-end solution for a fully automated wound analysis system. This system would incorporate all stages, from data acquisition, through segmentation and classification, to measurement and healing evaluation.

Such a solution could even be integrated into Clinical Decision Support Systems (CDSS). A CDSS helps clinicians make decisions; it is a support system that uses knowledge gathered from two or more sources of patient data to generate specific advice. The system analyzes the available patient data and can be combined with electronic health records (EHR) [17]. Using the aggregated data, it assists with complex tasks and makes it easier and faster for clinicians to decide on important cases. A CDSS cannot replace the medical expert or make decisions on its own, but it can advise and draw on its knowledge base to help provide a better solution for the patient. In [18], a CDSS showed good results by improving the performance of medical practitioners. Therefore, integrating an automated wound assessment solution into a CDSS would be beneficial, because it would enhance the system with another valuable source of data. The system could then incorporate wound metrics and tissue prevalence into its decision-making process, or it could display the 3D wound model for follow-up comparison and further analysis.

The main contributions of this paper are as follows: (i) a detailed overview of papers published up to 2020 that deal with all types of wounds (including burns and melanoma) in the field of wound image analysis, along with the computation times of the proposed methods where available; (ii) an overview of the most used preprocessing and postprocessing methods; (iii) an overview of the most recent approaches to the wound image analysis tasks of wound segmentation, tissue classification, 3D reconstruction, wound measurement, and healing prediction, presented in the same order as in the studied papers.

The rest of the paper is organized as follows. Section 1.1 provides a brief historical overview, while Section 1.2 describes the methodology used for this paper. Section 2 explains basic concepts for assessing the accuracy of methods and gives an overview of algorithms, while Section 3 explains wound image acquisition along with preprocessing and postprocessing methods. Section 4 and Section 5 present the most recent wound segmentation and tissue classification methods, respectively. 3D reconstruction is evaluated in Section 6, and the important tasks of wound measurement and healing evaluation are explained in Section 7. Section 8 contains the discussion, and Section 9 concludes this review paper.

1.1. Brief Historical Overview

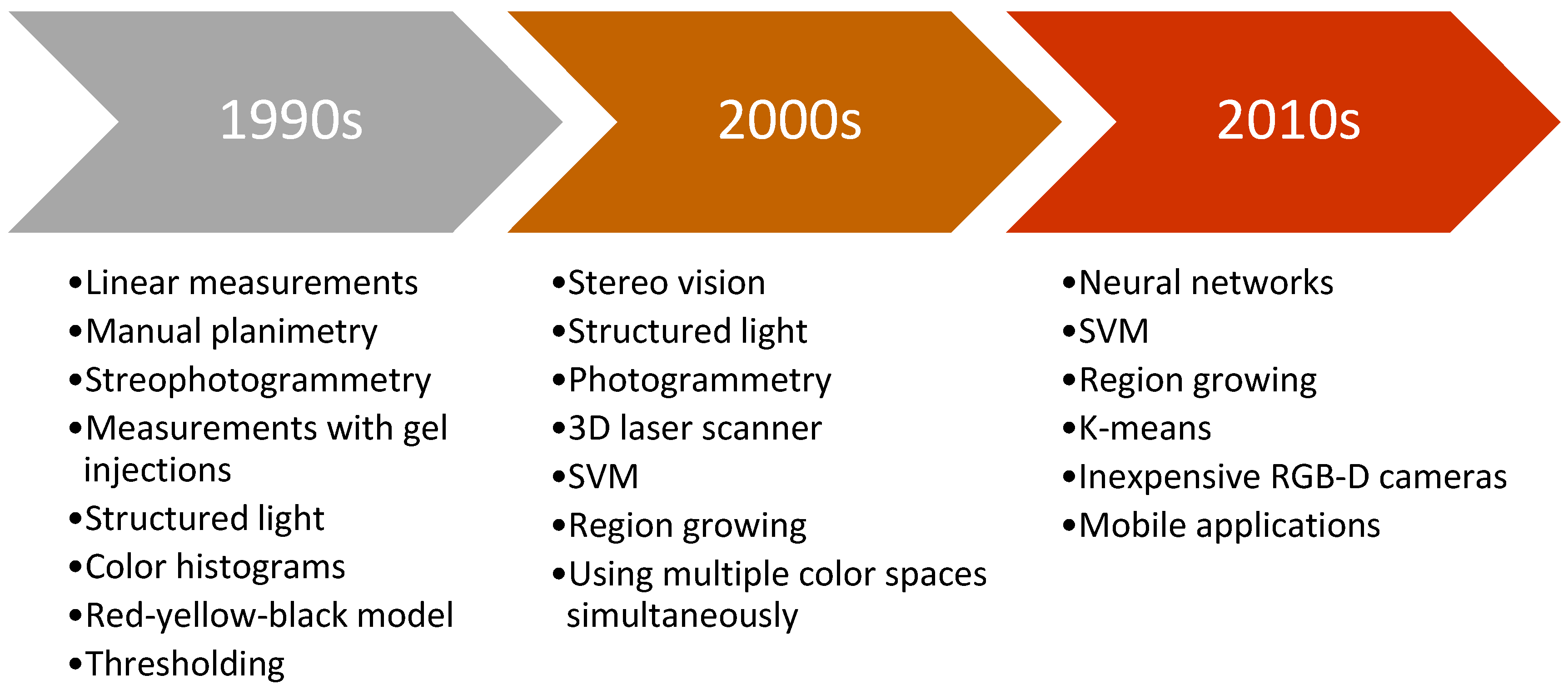

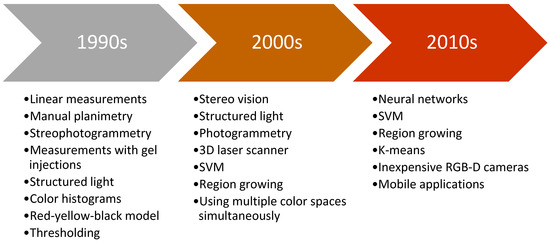

Chronic wounds, as one of the weak spots of medicine, need precise and fast treatment. Past improvements in technology and computing have contributed to developments in automation and computer vision. Due to the obvious shortcomings of contact-based methods, researchers have focused on developing automated non-contact methods for wound assessment. Objective and precise assessment of wound healing would help clinicians determine the most appropriate treatment. Figure 2 presents typical methods used for wound analysis in specific decades. It can be seen that, as technology advanced, researchers moved from simple features and hardcoded thresholds to soft computing methods and machine learning.

Figure 2.

Brief timeline of typical methods used by period.

The first researchers in this field analyzed wounds in 2D images using linear measurement, planimetry, or stereophotogrammetry, which had fair accuracy but were not suitable for medical centers due to bulky equipment and slow performance [19]. Typically, a ruler or some other scaling device was placed near the wound when the image was taken [20]. In a study of wound measurement on video images, [21] concluded that video is better than simple photography.

The first wound volume measurements were performed by injecting gels into the wound [22] or with noninvasive methods, such as a 3D laser imaging system, with notable accuracy [23]. Moreover, the Measurement of Area and Volume Instrument System (MAVIS) was introduced, which used the structured light principle to generate a 3D model of the wound. The method was noninvasive and showed good performance, but the system was large and expensive [24].

Wound tissue analysis was primarily based on digital images, using methods such as the red-yellow-black model [25] or color histograms to classify wound tissues in order to assess the wound healing process [26].

Furthermore, with the development of more advanced algorithms such as support vector machines (SVM), researchers in the field of wound analysis started using these methods for image segmentation [27]. Advances also occurred in wound measurement, where the Visitrak system, similar to digital planimetry, was used with very high accuracy [28], but more research focused on avoiding contact with the wound [29]. The improved MAVIS II performed 3D reconstruction with a handheld device based on stereo vision, with a maximum volume error of 6%, but the edge of the wound had to be traced manually [30].

More recent methods started to use standard digital cameras for stereo vision, where 3D reconstruction is performed from two images [31], or handheld RGB-D cameras [32]. These methods were faster and more robust, and their accuracy rates increased. The most recent novelty in wound analysis is the use of deep learning, which has shown promising results in certain tasks [33]. With the widespread adoption of mobile phones, some papers performed image analysis on pictures taken with a phone, with some degree of accuracy [34]. The biggest advantage of such systems is that the user, e.g., the patient, can photograph the wound and send the image to a data center, so that a clinician can inspect the wound remotely and even advise the patient on treatment.

Although all of the aforementioned systems perform some wound assessment tasks reasonably well, from a medical perspective, an end-to-end system that performs all tasks of wound analysis, including wound detection, segmentation, classification, 3D reconstruction, measurement, and healing evaluation, has not yet been reported. For such a system to be deployed in a medical center, it must be accurate, fast, safe for patients, easy to use, inexpensive, and robust enough to work under different image capturing conditions, skin types, and wound types. Moreover, the system should be as automatic as possible in order to reduce subjective input and increase measurement objectivity. Future research in the field of wound analysis should focus on developing such systems, which would assess the wound as a whole and provide all of the valuable information to clinicians.

1.2. Methodology

The body of knowledge used in this paper was collected from various online databases, including Wiley Online Library, ScienceDirect, BioMedical Engineering OnLine, SpringerLink, IOS Press Content Library, MAG Online Library, Sciendo, IEEE Xplore, Taylor & Francis Online, National Library of Medicine, and IGI Global. To assemble the bibliography of papers dealing with any aspect of wound assessment, the following search terms and their combinations were used: “image”, “analysis”, “assessment”, “management”, “wound”, “chronic”, “injury”, “segmentation”, “detection”, “diabetic”, “RGB-D camera”, “computer”, “neural network”, “evaluation”, “tissue”, “classification”, “3D reconstruction”, “measurement”, “healing”, “imaging”, “processing”, “automatic”, and “ulcer”.

A total of 201 papers dealing with some aspect of chronic wound analysis were obtained, whose keywords, abstract, or conclusion matched the aforementioned search terms. Considering only papers published from 2013 to 2020, a total of 79 papers were evaluated in this overview. Of these, 39 papers dealt with wound segmentation, 31 with tissue classification, 19 with measurement and healing, and only 8 with 3D reconstruction. Some papers dealt with more than one stage of wound analysis, so they were used in multiple sections of this review.

The segmentation section covers only papers that delineate the wound boundary, while the classification section includes papers that separate the tissues found inside the wound area. The measurement, 3D reconstruction, and healing evaluation sections consider papers in which segmentation and tissue classification are obligatory preceding stages. Measurement papers focus mainly on measuring wounds, while the 3D reconstruction papers considered focus on creating a 3D wound model. The healing evaluation papers considered are extensions of tissue classification and/or wound measurement.

Some authors, together with their co-authors, published more than one research paper in the field; their papers are summarized in separate paragraphs in the sections where their main contribution lies. The summary tables present the most recent papers of such authors, but not all of them. Entries in the summary tables are ordered by year of publication, as this is the most important inclusion criterion along with the topic of the research.

2. Performance Metrics and Algorithms Overview

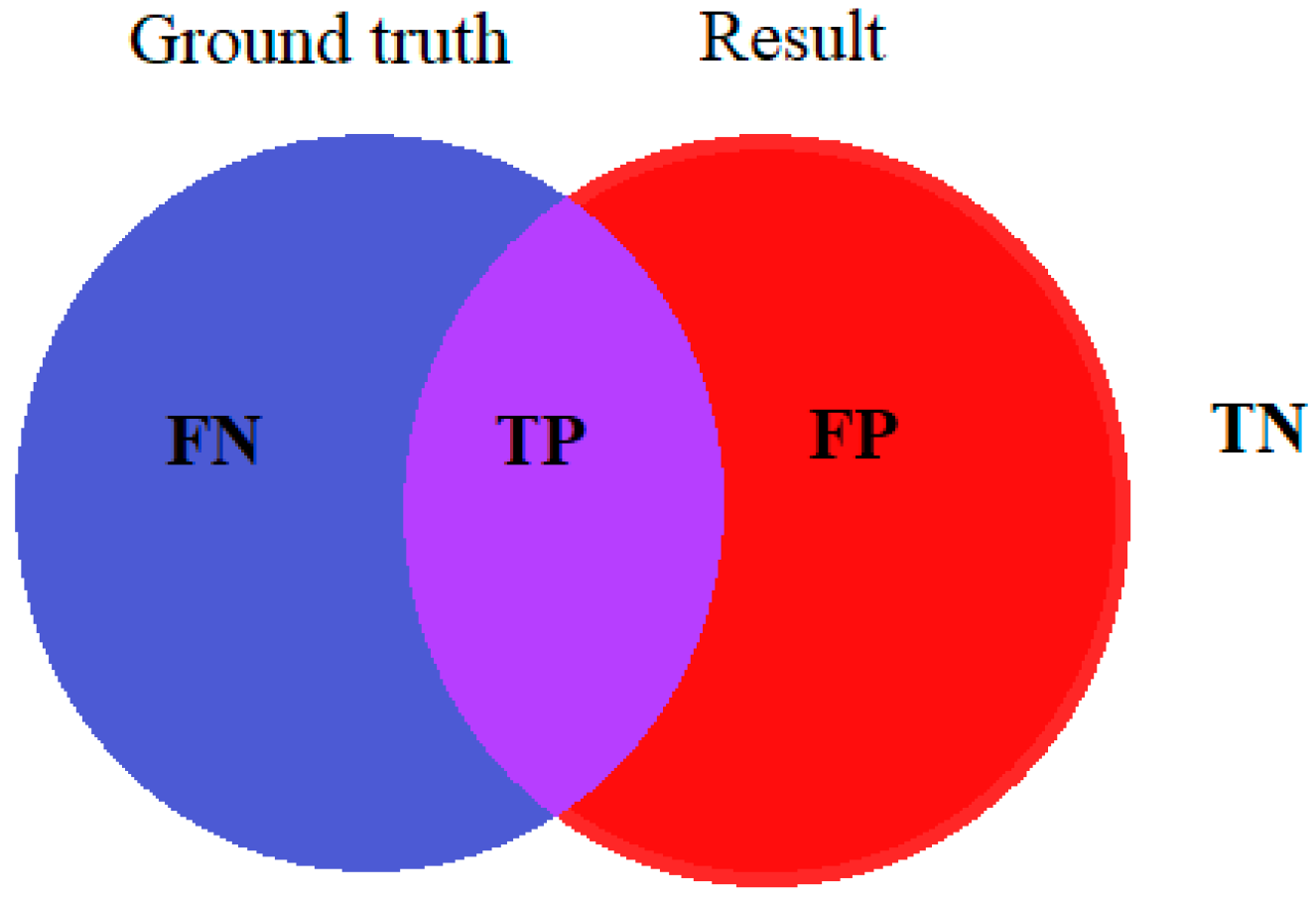

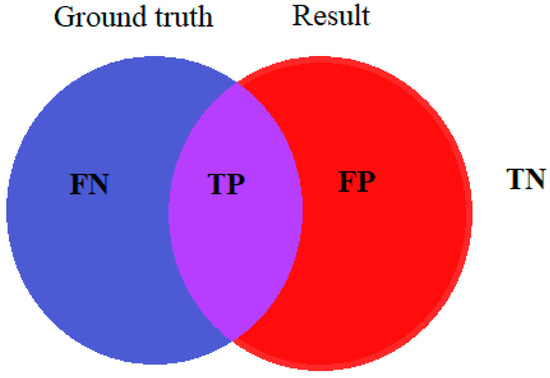

To calculate the accuracy of a particular method, the results of the method are compared with image ground truth, which is the original image typically labeled by medical experts. True positive (TP), true negative (TN), false positive (FP), and false negative (FN) are obtained by comparing the ground truth image with the resulting image of the given method. Figure 3 shows corresponding terms between the original wound labeled by medical experts and the result of the image processing algorithm.

Figure 3.

Terms for accuracy evaluation.

Taha and Hanbury [35] evaluated 20 different metrics for image segmentation in medical imaging. Their aim was to provide a standard for evaluating medical image analysis, but since metrics have different properties, selecting a suitable metric is not a trivial task. By comparing the results of a method with the ground truth, it is possible to evaluate metrics such as accuracy, sensitivity or recall, specificity, Dice similarity coefficient (DSC), and precision, presented by Equations (1)–(5), respectively.
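
For reference, the standard definitions of these metrics in terms of the TP, TN, FP, and FN counts from Figure 3 are given below, numbered in the order in which the metrics are listed above.

```latex
\begin{align}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN} \tag{1}\\
\text{Sensitivity (Recall)} &= \frac{TP}{TP + FN} \tag{2}\\
\text{Specificity} &= \frac{TN}{TN + FP} \tag{3}\\
\text{DSC} &= \frac{2\,TP}{2\,TP + FP + FN} \tag{4}\\
\text{Precision} &= \frac{TP}{TP + FP} \tag{5}
\end{align}
```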

In order to increase ground truth precision, labeling should be done by more than one expert so that the result is not susceptible to the opinion of a single individual [8]. Annotations directly affect the analysis results and their accuracy rates, so annotation should be as detailed as possible. The shape of the wound and the tissue distribution are usually irregular, which creates problems when annotating the wound and its tissue samples. Manual marking in a tool like Photoshop [36] can be time-consuming, so some researchers, such as [9], developed their own software for labeling specific tissue types. Some studies, such as Fauzi et al. [37], even calculate the agreement in ground truth labeling between three medical experts to determine the minimum, maximum, and mean ground truth agreement. Their classification achieved a result of 75.1%, which should be seen in light of the average agreement between the clinicians, which was between 67.4% and 84.3%.
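
The following plain-Python sketch computes the TP, TN, FP, and FN counts of Figure 3 and the metrics of Equations (1)–(5) from two binary masks, and, as one possible way to quantify inter-expert agreement, the minimum, maximum, and mean pairwise DSC between several annotations. Using DSC as the agreement measure is an assumption made here; the text does not state which measure Fauzi et al. [37] used. The example masks are made up.

```python
from itertools import combinations

def confusion_counts(pred, truth):
    """Count TP, TN, FP, FN between two binary masks given as nested lists of 0/1."""
    tp = tn = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif not p and not t:
                tn += 1
            elif p:
                fp += 1
            else:
                fn += 1
    return tp, tn, fp, fn

def safe_div(num, den):
    """Division that returns 0.0 for an empty denominator (e.g., no positive pixels)."""
    return num / den if den else 0.0

def segmentation_metrics(pred, truth):
    """Accuracy, sensitivity, specificity, DSC, and precision of pred against truth."""
    tp, tn, fp, fn = confusion_counts(pred, truth)
    return {
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "sensitivity": safe_div(tp, tp + fn),
        "specificity": safe_div(tn, tn + fp),
        "dsc": safe_div(2 * tp, 2 * tp + fp + fn),
        "precision": safe_div(tp, tp + fp),
    }

def expert_agreement(masks):
    """Min, max, and mean pairwise DSC between expert annotations (assumed agreement measure)."""
    scores = [segmentation_metrics(a, b)["dsc"] for a, b in combinations(masks, 2)]
    return min(scores), max(scores), sum(scores) / len(scores)

truth = [[0, 1, 1, 0],
         [0, 1, 1, 0],
         [0, 0, 0, 0]]
pred = [[0, 1, 1, 1],
        [0, 1, 0, 0],
        [0, 0, 0, 0]]
print(segmentation_metrics(pred, truth))
print(expert_agreement([truth, pred, truth]))
```

Note that accuracy stays high when most pixels are background, which is one reason overlap measures such as DSC are usually reported alongside it.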

An important metric in digital image analysis is the CPU and GPU computation time [36]. Computation time can be reduced significantly by cropping the wound region of the test image or by dividing the image into smaller parts (segments). The wound should be assessed by the most important factors that depict its state, such as wound size, volume, wound edge, infection, tissue structure, pain, etc. [38]. In imaging, visual data are discretized into image pixels, and image regions composed of repeated elements are called textures [39]. The most important features of a pixel are its color and, when neighboring pixels are considered, its texture; valuable information can be extracted from these features and used for classification and clustering [8]. Descriptors or features extracted from image segments help to distinguish wound pixels from non-wound pixels, or one tissue type from another. Researchers select the most useful features to improve the results of their methods, as in the study by Veredas et al. [40]. Maity et al. [9] even calculated 105 features for each pixel and used them for classification in their proposed method.
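
A minimal sketch of per-pixel color and texture features, assuming an RGB image stored as nested lists of (R, G, B) tuples: for each pixel, the mean color of its 3 × 3 neighborhood and the standard deviation of grayscale intensity in that neighborhood as a simple texture cue. The window size, the grayscale weights, and this particular feature set are illustrative assumptions, not the 105 features of Maity et al. [9].

```python
import statistics

def gray(rgb):
    """Grayscale intensity of an (R, G, B) tuple using BT.601 luma weights (assumed)."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b

def pixel_features(img, row, col, radius=1):
    """Mean R, G, B and grayscale standard deviation over a square window clipped at the borders."""
    h, w = len(img), len(img[0])
    window = [img[r][c]
              for r in range(max(0, row - radius), min(h, row + radius + 1))
              for c in range(max(0, col - radius), min(w, col + radius + 1))]
    mean_rgb = [sum(p[k] for p in window) / len(window) for k in range(3)]
    texture = statistics.pstdev([gray(p) for p in window])
    return mean_rgb + [texture]

img = [[(100, 50, 25)] * 3 for _ in range(3)]
print(pixel_features(img, 1, 1))  # uniform region: zero texture
```

Such feature vectors, computed for every pixel or superpixel, are what the supervised and unsupervised algorithms described next operate on.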

Algorithms for CW image analysis can be divided into supervised and unsupervised algorithms; we were not able to find examples of reinforcement learning algorithms being used for any stage of wound assessment. Supervised algorithms need predefined, human-labeled training data for image segmentation and classification. A database used for supervised learning is often divided into training and testing data, mostly in a 3:1 ratio, as in [12]. Typical examples of supervised learning are neural networks (NN), support vector machines (SVM), etc. In unsupervised algorithms, it is not known in advance which data belong to which cluster, so the task can be viewed as grouping raw data into clusters. Examples of unsupervised learning are k-means clustering, fuzzy c-means, etc. One of the reasons for the growth in image processing papers since 2017 is the use of deep neural networks, especially convolutional neural networks, which have shown high accuracy rates for classification [41,42,43]. A deep neural network, as a supervised learning method, needs a considerable database for training, but as mentioned earlier, the lack of a publicly available annotated CW image database is an ongoing problem. Researchers in this field typically create a small custom annotated database and then increase the number of training images by rotating, zooming, or shifting the original images [44]. In Zahia et al. [41], 22 original 1020 × 1020 input images were divided into 5 × 5 patches, yielding 380,000 small images for network training, each containing one of the studied tissue classes. The shortage of images needed for the learning phase of deep neural networks can be compensated for with a deep neural network previously trained on a large general-purpose database such as ImageNet. This procedure is called transfer learning, and it has shown good experimental results [45].
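
The data preparation steps described above can be sketched in plain Python, assuming 2D images stored as nested lists. The helpers below (names are hypothetical) cut an image into non-overlapping patches, augment an image with 90° rotations and a mirror image, and perform a seeded 3:1 train/test split. These are illustrative choices; the cited works may use other patch strides, transformations such as zooming or shifting, or other split procedures.

```python
import random

def extract_patches(img, size):
    """Split a 2D image into non-overlapping size x size patches; edge remainders are dropped."""
    patches = []
    for r in range(0, len(img) - size + 1, size):
        for c in range(0, len(img[0]) - size + 1, size):
            patches.append([row[c:c + size] for row in img[r:r + size]])
    return patches

def rotate90(img):
    """Rotate a 2D image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def augment(img):
    """Return the 4 rotations of an image and of its horizontal mirror (8 variants)."""
    variants = []
    for base in (img, [row[::-1] for row in img]):
        current = base
        for _ in range(4):
            variants.append(current)
            current = rotate90(current)
    return variants

def split_train_test(items, train_fraction=0.75, seed=0):
    """Shuffle a copy of items with a fixed seed and split it (3:1 by default)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]

img = [[r * 10 + c for c in range(10)] for r in range(10)]
patches = extract_patches(img, 5)
samples = [v for p in patches for v in augment(p)]
train, test = split_train_test(samples)
print(len(patches), len(samples), len(train), len(test))
```

In practice the split should be made per patient or per original image before augmentation, so that rotated copies of the same wound do not end up in both the training and the test set.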

3. Image Acquisition, Preprocessing, and Postprocessing

Most CW images are taken with a digital camera, a smartphone, or an RGB-D camera. New methods should be robust enough that images can be acquired under normal lighting conditions, without special adjustment of the wound, special instruments, or special capturing conditions. Wang et al. [46] developed an image capturing device in the shape of a box with two mirrors placed inside at an angle of 90°. The foot was placed on the surface of the box for image capture. This acquisition setup is not suitable for wounds on other parts of the body and cannot be used elsewhere without image capturing experience. As a rule, the user should not be particularly familiar with the proposed analysis method, so that they can be objective during wound image acquisition [37].

The preprocessing stage is fundamental for the majority of methods, because images must be properly adjusted for wound segmentation or tissue classification to be effective. The most commonly used methods are:

- Conversion of color spaces: Images captured by a camera or smartphone are typically saved in the RGB color space, which is not ideal for analyzing color features. Two colors that are visually close to the human eye may be further apart in RGB space than two other colors that are visually more distant [47]. Most of the reviewed studies convert images to one of the following color spaces:
  - HSV or HSI: Hue, saturation, and value (or intensity) color spaces, used in [3,37,48,49,50].
  - YCbCr: Luminance component with blue-difference and red-difference chroma components. Li et al. [51] concluded that there is a clear difference between the skin and the background in the Cr channel.
  - YDbDr: Similar in composition to YCbCr. Yadav et al. [52] and Chakraborty [11] showed that the contrast between wound and background is highest in this color space.
  - CIELab: Color space in which a numerical change in channel values roughly corresponds to the same amount of visually perceived change, used, e.g., in [46,53,54].
  - RYKW: Red-yellow-black color space for determining tissue types, used in [55].
  - L*u*v* (also called CIELUV): Color space that attempts perceptual uniformity, defined by the CIE as a transformation of the 1931 CIE XYZ color space, used in [56].
  - Grayscale: Conversion from an RGB image to grayscale is typically performed by Equation (6) and has been used in [41,57,58].

Some studies, such as Haider et al. [53], combine multiple color spaces: CIELab for wound detection and HSV for healing evaluation. Huang et al. [59] apply a white balance method in which the 5% brightest and darkest parts of the image are removed, while Navas et al. [60] use four different color spaces for color feature extraction: RGB, HSV, YCbCr, and YIQ. Dark red granulation tissue and black necrotic tissue have similar hue characteristics; therefore, in [5] and [37], the authors modified all three channels of the HSV color space for better segmentation.
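
The conversions listed above are per-pixel maps from RGB. A minimal sketch, assuming 8-bit RGB input: grayscale uses the ITU-R BT.601 luma weights (a common form of Equation (6), assumed here), Cr follows the full-range JPEG YCbCr definition, Db follows the standard YDbDr definition, and S comes from Python's `colorsys.rgb_to_hsv`. The two sample pixels are made-up values for illustration.

```python
import colorsys

def to_gray(r, g, b):
    """Luma with ITU-R BT.601 weights (assumed form of Equation (6))."""
    return 0.299 * r + 0.587 * g + 0.114 * b

def to_cr(r, g, b):
    """Red-difference chroma Cr of full-range (JPEG-style) YCbCr for 8-bit RGB."""
    return 128 + 0.5 * r - 0.418688 * g - 0.081312 * b

def to_db(r, g, b):
    """Blue-difference chroma Db of the YDbDr color space."""
    return -0.450 * r - 0.883 * g + 1.333 * b

def to_saturation(r, g, b):
    """S channel of HSV, in [0, 1]."""
    return colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)[1]

def channels(pixel):
    r, g, b = pixel
    return {"gray": to_gray(r, g, b), "Cr": to_cr(r, g, b),
            "Db": to_db(r, g, b), "S": to_saturation(r, g, b)}

# Hypothetical pixel values: reddish wound bed vs. light skin.
for name, px in [("wound", (180, 40, 50)), ("skin", (225, 185, 165))]:
    print(name, {k: round(v, 1) for k, v in channels(px).items()})
```

For the light skin pixel, Cr is about 150, inside the 135–160 skin range reported for [51], whereas the reddish wound pixel lies well above it; a gray pixel gives Cr = 128 and Db = 0, since both are color-difference channels.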

- Choosing the right color channel for further analysis: Researchers select the most convenient channel of a color space to achieve the best performance of the proposed method. Some of the channels used are:
  - Db channel (YDbDr color space): Manohar Dhane et al. [8] chose this channel by measuring the mean contrast between wound and background for all channels of 14 color spaces, as did [11], who found that the Db channel has the maximum contrast between wound and background.
  - Cr channel (YCbCr color space): Skin Cr values of 135–160 can, with certain modifications, easily be used for wound segmentation, as in [51].
  - S channel (HSV color space): Used by Gupta [49].

- Noise removal and image blurring: Image denoising is an important task in digital image analysis; its role is to remove white and black pixels caused by shadows, camera flash, etc., while preserving edges. Blurring an image aims to remove drastic changes in pixel values, which usually occur at border pixels, so that borders become fuzzy. Chakraborty and Gupta [61] tested 16 different filters for noise removal and concluded that the best one is an adaptive median filter. Other filters used in wound analysis papers include:
  - Median filter [49,60,62,63].
  - Gaussian filter (blur) [64].
  - Ordered statistics filter [8].

- Image cropping: Shenoy et al. [44] resized images to fit the input of their CNN architecture, while Zhao et al. [65] segmented the target wound region with an annotation app. This was done because, if the original images were fed into the network, it would learn mostly background features and would therefore not accurately learn wound features.

- Superpixel segmentation: Authors such as Biswas et al. [5] and Liu and Duan [66] divide the input image into groups of pixels with similar features, called superpixels. Superpixel segmentation is often performed using the simple linear iterative clustering (SLIC) algorithm.

- Color correction: Often performed using a combination of the gray world estimator and retinex theory, as in [8,52,63,67].

- Color homogenization: An anisotropic diffusion filter is used to homogenize colors while preserving edges, so that smoothing occurs only between edges, as in [8,52,63,67].

- Contrast equalization: The aim is to enhance the contrast in the image to bring out subtle differences, usually with contrast limited adaptive histogram equalization (CLAHE), as in [44].
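
Two of the simpler preprocessing steps above, gray world color correction and median filtering, can be sketched in plain Python as follows. The sketch assumes RGB images stored as nested lists of tuples, a 3 × 3 window clipped at the borders, and values capped at 255. It is not the combined gray world and retinex pipeline of [8,52,63,67], nor the adaptive median filter recommended in [61], which additionally enlarges the window until the median is not itself an impulse value.

```python
def gray_world(img):
    """Gray world white balance: scale each channel so its mean equals the mean of all channel means."""
    pixels = [p for row in img for p in row]
    means = [sum(p[k] for p in pixels) / len(pixels) for k in range(3)]
    target = sum(means) / 3
    gains = [target / m if m else 1.0 for m in means]
    return [[tuple(min(255.0, p[k] * gains[k]) for k in range(3)) for p in row] for row in img]

def median_filter(channel, radius=1):
    """Median filter on a single-channel image; border windows are clipped (upper median if even)."""
    h, w = len(channel), len(channel[0])
    out = []
    for r in range(h):
        out_row = []
        for c in range(w):
            window = sorted(channel[rr][cc]
                            for rr in range(max(0, r - radius), min(h, r + radius + 1))
                            for cc in range(max(0, c - radius), min(w, c + radius + 1)))
            out_row.append(window[len(window) // 2])
        out.append(out_row)
    return out

# A reddish color cast is neutralized, and a single impulse ("salt") pixel is removed.
balanced = gray_world([[(200, 100, 100)] * 2 for _ in range(2)])
noisy = [[10] * 5 for _ in range(5)]
noisy[2][2] = 255
print(balanced[0][0], median_filter(noisy)[2][2])
```

The median is preferred over averaging here because a single outlier cannot shift it, so isolated bright or dark pixels disappear while step edges are preserved.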

After the wound image analysis, most papers perform some type of postprocessing, such as noise removal, edge softening, or morphological operations, to make the segmented wound region look natural. To refine the output image, Biswas et al. [58] perform morphological operations such as hole filling and area-based filtering. Fauzi et al. [37] apply morphological closing and filling to remove noise and soften the edges, while Yadav et al. [52] smooth the object by sequential dilation and erosion with a diamond-shaped structuring element. Manohar Dhane et al. [8] also use the morphological operations of hole filling, area opening and closing, bridging, thickening, and thinning.
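
The morphological postprocessing described above can be sketched on a binary mask stored as nested lists of 0/1. The 3 × 3 square structuring element, the clipping of windows at the image border, and 4-connected flood fill are illustrative choices; Yadav et al. [52], for example, use a diamond-shaped element instead.

```python
from collections import deque

def _neighborhood(mask, r, c):
    """Values of the 3 x 3 window around (r, c), clipped at the image border."""
    h, w = len(mask), len(mask[0])
    return [mask[rr][cc]
            for rr in range(max(0, r - 1), min(h, r + 2))
            for cc in range(max(0, c - 1), min(w, c + 2))]

def dilate(mask):
    """3 x 3 binary dilation: a pixel becomes 1 if any neighbor is 1."""
    return [[int(any(_neighborhood(mask, r, c))) for c in range(len(mask[0]))]
            for r in range(len(mask))]

def erode(mask):
    """3 x 3 binary erosion: a pixel stays 1 only if all neighbors inside the image are 1."""
    return [[int(all(_neighborhood(mask, r, c))) for c in range(len(mask[0]))]
            for r in range(len(mask))]

def close(mask):
    """Morphological closing (dilation followed by erosion) bridges small gaps and holes."""
    return erode(dilate(mask))

def fill_holes(mask):
    """Set to 1 every background pixel that is not 4-connected to the image border."""
    h, w = len(mask), len(mask[0])
    outside = [[False] * w for _ in range(h)]
    queue = deque()
    for r in range(h):
        for c in range(w):
            if (r in (0, h - 1) or c in (0, w - 1)) and not mask[r][c]:
                outside[r][c] = True
                queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not mask[nr][nc] and not outside[nr][nc]:
                outside[nr][nc] = True
                queue.append((nr, nc))
    return [[0 if outside[r][c] else 1 for c in range(w)] for r in range(h)]

# A segmented wound with a one-pixel hole in the middle.
ring = [[1 if 2 <= r <= 4 and 2 <= c <= 4 and (r, c) != (3, 3) else 0 for c in range(7)]
        for r in range(7)]
filled = fill_holes(ring)
print(filled[3][3], close(ring) == filled)  # 1 True
```

Hole filling removes interior gaps of any size without changing the outline, whereas closing also smooths concave parts of the boundary, so the two are often combined.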

4. Wound Segmentation

Wound image segmentation, in which the wound is separated from the background and the other parts of the body, is a necessary step before further wound analysis such as tissue classification, wound measurement, 3D reconstruction, or healing evaluation. The goal of segmentation is to separate the image into two classes, namely wound and non-wound [48]. Some researchers, such as Biswas et al. [58], first segment the part of the body containing the wound and then separate the wound itself from the surrounding skin. Segmentation performance depends on the input image: if the wound in the image is too small or very dark, accurate segmentation cannot be assured [37].

As mentioned before, the authors in [58] divided wound segmentation into two stages. The first stage removed the image background, resulting in an image containing only the wound and the surrounding skin. This result was then fed to the second-stage classifier to delineate the wound. In each stage, an SVM classifier was trained on a combination of texture and color features with only two classes: background versus skin (wound and surrounding skin together) in the first stage, and wound versus surrounding skin in the second stage. Wound area segmentation is fully automated, with an accuracy of 71.98% on 10 test images. In the most recent work by Biswas et al. [5], the authors continued to work with superpixel image regions but with a different algorithmic approach. Building on the region growing algorithm of previous work by Ahmad Fauzi et al. [68], they combined 4D probability map generation with a superpixel region growing algorithm and achieved an overall accuracy of 79.2% on 30 images.

Dhane et al. [48] introduced a spectral clustering method for wound image segmentation. Using the S channel of the HSV color space, the image was converted into data points, from which a similarity graph was built with the Gaussian similarity function. After the similarity matrix was calculated, the points were clustered with the k-means method, yielding an encouraging accuracy of 86.73% on 105 images. In later research [8], the authors continued with fuzzy spectral clustering: a gray-based fuzzy similarity measure was used to construct the similarity matrix, and k-means was used to cluster the points. Wound segmentation on 70 images achieved an accuracy of 91.5%.
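The spectral clustering idea in [48] can be illustrated with a short NumPy sketch: S-channel values become graph nodes, edge weights come from a Gaussian similarity function, the eigenvectors of the normalized graph Laplacian give a low-dimensional embedding, and k-means clusters that embedding. The normalization used here (the symmetric variant of Ng, Jordan and Weiss), the bandwidth `sigma`, and the deterministic k-means initialization are assumptions, not details taken from [48]. Because the similarity matrix is n × n, in practice the method would be applied to a subsample of pixels or to superpixel means rather than to every pixel.

```python
import numpy as np

def hsv_saturation(rgb):
    """S channel of HSV for 8-bit RGB: (max - min) / max, defined as 0 where max == 0."""
    rgb = np.asarray(rgb, dtype=np.float64) / 255.0
    mx, mn = rgb.max(axis=-1), rgb.min(axis=-1)
    return np.where(mx > 0, (mx - mn) / np.where(mx > 0, mx, 1.0), 0.0)

def kmeans(x, k, iters=100):
    """Lloyd's k-means with deterministic farthest-point initialization."""
    centers = [x[0]]
    for _ in range(1, k):
        d = np.min([((x - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(x[np.argmax(d)])
    centers = np.array(centers)
    for _ in range(iters):
        labels = np.argmin(((x[:, None, :] - centers[None]) ** 2).sum(axis=-1), axis=1)
        new = np.array([x[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return np.argmin(((x[:, None, :] - centers[None]) ** 2).sum(axis=-1), axis=1)

def spectral_clustering(values, k=2, sigma=0.1):
    x = np.asarray(values, dtype=np.float64).reshape(-1, 1)
    W = np.exp(-((x - x.T) ** 2) / (2.0 * sigma ** 2))   # Gaussian similarity graph
    np.fill_diagonal(W, 0.0)
    d_inv_sqrt = 1.0 / np.sqrt(np.maximum(W.sum(axis=1), 1e-12))
    L_sym = np.eye(len(x)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    _, eigvecs = np.linalg.eigh(L_sym)                   # eigenvalues in ascending order
    U = eigvecs[:, :k]                                   # eigenvectors of the k smallest eigenvalues
    U /= np.maximum(np.linalg.norm(U, axis=1, keepdims=True), 1e-12)
    return kmeans(U, k)

# Hypothetical S values: five weakly saturated (skin-like) and five strongly saturated pixels.
saturation = np.array([0.10, 0.12, 0.11, 0.13, 0.09, 0.80, 0.82, 0.79, 0.81, 0.83])
print(spectral_clustering(saturation))   # first five and last five points fall into different clusters
```

In a real pipeline, `hsv_saturation` would supply the S values from the (subsampled) wound image instead of the hand-written array.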

Yadav et al. [52] used fuzzy c-means and k-means to segment five different types of chronic wounds in 77 images from the Medetec image database. An analysis of contrast in fifteen color spaces and their 45 color channels showed the highest contrast in the Db and Dr channels of the YDbDr color space. The proposed method achieved average accuracies of 75.23% in the Db channel and 72.54% in the Dr channel.
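A minimal sketch of clustering in a single YDbDr chroma channel is shown below. The conversion uses the standard SECAM YDbDr coefficients on RGB scaled to [0, 1]; the contrast analysis over fifteen color spaces in [52] is not reproduced, and plain two-cluster k-means on one channel is a simplification of the fuzzy c-means and k-means pipeline described there. The function names and pixel values are illustrative.

```python
import numpy as np

# RGB -> YDbDr (SECAM) coefficients, applied to RGB scaled to [0, 1].
RGB_TO_YDBDR = np.array([
    [ 0.299,  0.587,  0.114],
    [-0.450, -0.883,  1.333],
    [-1.333,  1.116,  0.217],
])

def rgb_to_ydbdr(rgb):
    """Convert an (..., 3) 8-bit RGB array to Y, Db, Dr channels."""
    return (np.asarray(rgb, dtype=np.float64) / 255.0) @ RGB_TO_YDBDR.T

def two_means_1d(values, iters=100):
    """Split a single channel into two clusters with Lloyd's k-means (k = 2)."""
    v = values.ravel()
    c = np.array([v.min(), v.max()], dtype=np.float64)   # initial centers at the extremes
    for _ in range(iters):
        labels = (np.abs(v - c[1]) < np.abs(v - c[0])).astype(int)
        new_c = np.array([v[labels == j].mean() if np.any(labels == j) else c[j]
                          for j in (0, 1)])
        if np.allclose(new_c, c):
            break
        c = new_c
    labels = (np.abs(v - c[1]) < np.abs(v - c[0])).astype(int)
    return labels.reshape(values.shape), c

img = np.array([[[200, 30, 30], [210, 40, 40]],        # reddish, wound-like pixels
                [[180, 170, 160], [190, 180, 170]]],   # pale, skin-like pixels
               dtype=np.uint8)
labels, centers = two_means_1d(rgb_to_ydbdr(img)[..., 2])   # cluster on the Dr channel
print(labels)   # reddish pixels -> cluster 0, pale pixels -> cluster 1
```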

Li et al. [51] proposed a deep neural network based on a modified MobileNet architecture, combined with traditional methods to remove the image backgrounds and semantically correct the segmentation results. A precision of 94.69% was achieved on 950 images obtained from the internet and from a hospital. The WoundSeg neural network proposed by X. Liu et al. [69] also adapted the MobileNet architecture, with different numbers of channels, alongside the VGG16 architecture. According to the same authors, networks with the MobileNet architecture are more efficient than those using VGG16, although with a slightly lower accuracy of 98.18%. MobileNet is also the CNN backbone in the most recent work by Li et al. [70], where a convolutional kernel enhanced with location information is added at the first convolution layer of the network, which improves segmentation accuracy. On 950 images, the precision was 95.02%.
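The efficiency argument for MobileNet over VGG16 can be made concrete with a weight count. MobileNet replaces standard convolutions with depthwise separable ones (a per-channel k × k depthwise convolution followed by a 1 × 1 pointwise convolution), while VGG16 uses standard 3 × 3 convolutions. The arithmetic below ignores biases and batch normalization, and the 256-channel layer is an arbitrary example rather than a layer from [51], [69], or [70].

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """Weights of a k x k depthwise convolution followed by a 1 x 1 pointwise convolution."""
    return k * k * c_in + c_in * c_out

std = standard_conv_params(3, 256, 256)         # 589824
sep = depthwise_separable_params(3, 256, 256)   # 2304 + 65536 = 67840
print(f"{std / sep:.1f}x fewer weights")        # 8.7x fewer weights
```

In general the separable layer needs a fraction 1/c_out + 1/k² of the standard layer's weights, so for 3 × 3 kernels the saving approaches a factor of 9 as the channel count grows.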

Filko et al. [32] also considered wound detection an important stage of wound analysis, whereas the other reviewed papers assume that the wound is detected by the user who captures the image. In further research, Filko et al. [71] defined the main goal of the detection phase as finding the center of the wound, which is performed with color histograms and the kNN algorithm. Segmentation is performed by first dividing the reconstructed 3D surface into surfels. These surfels are then grouped into larger smooth surfaces by a region growing process that uses geometry and color information to establish relations between neighboring surfels. Finally, the wound boundary is reconstructed by spline interpolation, and the wound is isolated as a separate 3D model using the Visualization Toolkit (VTK). The methods were tested on the Seymour II wound care model by VATA Inc.

Gholami et al. [64] compared seven different algorithms for wound segmentation: region-based methods (region growing and active contour without edges), edge-based methods (edge detection with morphological operations, the level set method preserving the distance function, livewire, and parametric active contour models, or snakes), and texture-based methods (the Houhou–Thiran–Bresson model). The methods were tested on 26 images, and the livewire method achieved the highest overall accuracy of 97.08%, but it required long user interaction and hence a longer overall computation time.

Table 1 presents research papers on wound segmentation. Operations such as dividing the input image into segments or regions of interest are listed in the preprocessing column, since only wound segmentation methods are considered in the segmentation column. Reported accuracy values are either averages or the highest value for a specific method, and computational times are the average, minimum, or maximum over all images or for the method that showed the best results. Performance metrics differ between papers, and not all papers report computational times.

Table 1.

Segmentation of chronic wounds on digital images.
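Because the papers in Table 1 report different metrics, it may help to see how the common ones relate. The sketch below computes pixel-wise accuracy, precision, sensitivity (recall), specificity, and the Dice similarity coefficient (DSC) from a predicted and a ground truth binary mask. It assumes pixel-level evaluation, which individual papers may not use, and the function name is illustrative.

```python
import numpy as np

def segmentation_metrics(pred, truth):
    """Pixel-wise metrics for binary masks (True = wound)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))
    tn = int(np.sum(~pred & ~truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))

    def ratio(num, den):
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": ratio(tp, tp + fp),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "dsc": ratio(2 * tp, 2 * tp + fp + fn),
    }

print(segmentation_metrics([1, 1, 1, 0], [1, 1, 0, 0]))
# {'accuracy': 0.75, 'precision': 0.6666666666666666, 'sensitivity': 1.0, 'specificity': 0.5, 'dsc': 0.8}
```

Note that accuracy is dominated by background pixels when the wound is small, which is one reason DSC and sensitivity are often reported alongside it.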

5. Tissue Classification

In the wound image analysis process, tissue classification is usually performed after wound segmentation so that only the tissues in the wound area itself are analyzed; the wound is therefore the only region of interest in this phase. Tissue separation is required for further wound analysis, e.g., healing evaluation. Some studies, such as Fauzi et al. [37], do not perform segmentation as a separate process but conduct it simultaneously with tissue classification. Other authors, such as Nizam et al. [86] and Maity et al. [9], assume that the wound boundary is known and focus only on the tissue classification task.

The separation of tissues by Ahmad Fauzi et al. [87] is based on a probability map that gives, for each pixel, the probability of belonging to each color category of the RYKW (red, yellow, black, white) model, calculated from distances in a modified HSV space. In the region growing algorithm used, the user must select an initial wound pixel, from which the algorithm grows the region based on the properties of that pixel. If the distance between a neighboring pixel's probability map and the mean probability map of the region is below a certain threshold, that neighbor is added to the growing region. In subsequent research, Ahmad Fauzi et al. [68] and Fauzi et al. [37] introduced, alongside the region growing algorithm, an optimal thresholding algorithm in which the wound is segmented by thresholding the difference matrix of the probability map while taking into account the pixel's tissue type and the strength of its probability. Ground truth agreement among three clinicians was taken into account, and an overall accuracy of 75.1% was observed on 80 wound images.
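The region growing rule described above can be sketched as follows: starting from a user-selected seed, a 4-connected neighbor joins the region when the distance between its probability vector and the region's mean probability vector is below a threshold. The Euclidean distance, 4-connectivity, and running-mean update are assumptions made for this sketch; [87] may define the distance and update differently. The function name and toy probability map are illustrative.

```python
import numpy as np
from collections import deque

def region_grow(prob_map, seed, threshold):
    """Grow a region from seed over an (H, W, C) per-pixel probability map.

    A 4-connected neighbour joins when the Euclidean distance between its
    probability vector and the current region mean is below threshold.
    """
    prob_map = np.asarray(prob_map, dtype=np.float64)
    h, w, _ = prob_map.shape
    region = np.zeros((h, w), dtype=bool)
    region[seed] = True
    total = prob_map[seed].copy()      # running sum of member probability vectors
    count = 1
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < h and 0 <= nc < w and not region[nr, nc]:
                if np.linalg.norm(prob_map[nr, nc] - total / count) < threshold:
                    region[nr, nc] = True
                    total += prob_map[nr, nc]
                    count += 1
                    queue.append((nr, nc))
    return region

prob = np.zeros((4, 4, 2))
prob[:, :2] = [0.9, 0.1]      # hypothetical, mostly "red" (granulation-like) pixels
prob[:, 2:] = [0.1, 0.9]      # hypothetical, mostly "yellow" (slough-like) pixels
print(region_grow(prob, seed=(0, 0), threshold=0.3).sum())   # 8: the left half
```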

Nizam et al. [86] focused on the tissue classification task, assuming that the wound boundary is known. With the fuzzy c-means clustering algorithm, each data point is assigned a membership value for each cluster, and further analysis determines its correspondence to a specific class, i.e., tissue. The analysis is divided into (i) unsure regions, (ii) sure regions, and (iii) epithelial and granulation tissue. On 600 wound images, the accuracy rates for images containing all four tissue types were 87.6%, 82.4%, 89.3%, and 82.0% for granulation, slough, eschar, and epithelial tissue, respectively.
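The membership-based reasoning in [86] rests on fuzzy c-means. A minimal NumPy sketch of the standard algorithm with fuzzifier m = 2 is given below; the deterministic farthest-point initialization and the one-dimensional toy data are assumptions for illustration, and the subsequent split into sure and unsure regions is not reproduced.

```python
import numpy as np

def _memberships(x, centers, m):
    """u_ij = 1 / sum_k (d_ij / d_ik) ** (2 / (m - 1))."""
    dist = np.linalg.norm(x[:, None, :] - centers[None, :, :], axis=2)
    dist = np.maximum(dist, 1e-12)                     # avoid division by zero
    ratio = (dist[:, :, None] / dist[:, None, :]) ** (2.0 / (m - 1.0))
    return 1.0 / ratio.sum(axis=2)

def fuzzy_c_means(x, c, m=2.0, iters=100, tol=1e-6):
    x = np.asarray(x, dtype=np.float64)
    if x.ndim == 1:
        x = x[:, None]
    # Deterministic farthest-point initialization of the cluster centers.
    centers = [x[0]]
    for _ in range(1, c):
        d = np.min([np.linalg.norm(x - ctr, axis=1) for ctr in centers], axis=0)
        centers.append(x[np.argmax(d)])
    centers = np.array(centers)
    for _ in range(iters):
        um = _memberships(x, centers, m) ** m
        new_centers = (um.T @ x) / um.sum(axis=0)[:, None]   # membership-weighted means
        shift = np.max(np.abs(new_centers - centers))
        centers = new_centers
        if shift < tol:
            break
    return _memberships(x, centers, m), centers

x = np.array([0.10, 0.12, 0.11, 0.80, 0.82, 0.79])
u, centers = fuzzy_c_means(x, c=2)
print(u.argmax(axis=1))   # hard labels: first three vs. last three points
```

Each row of `u` sums to 1; a low maximum membership (below some cutoff, for example a hypothetical 0.6) would mark a point as ambiguous, which is one way membership values could feed a sure/unsure split.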

Maity et al. [9] also focused only on the tissue classification task, without segmenting the wound. A deep neural network was trained on 105 features based on color, texture, statistics, entropy, etc. The network classified 250 images plus images from the Medetec database with a very high accuracy rate of 99%. This accuracy can be explained by the fact that only the manually delineated wound region of interest was considered, i.e., there was no segmentation of the wound from the surrounding skin and background.

Mukherjee et al. [3] used the HSI color space and its S channel, which showed high contrast at the boundary between wound and skin. The wound boundary was delineated with fuzzy divergence-based thresholding. Five color and ten texture features were extracted from each of 45 color channels, giving a total of 675 features, of which 50 were statistically significant. A supervised Bayesian classifier and an SVM classifier were used to learn and classify three different tissue types. The Bayesian classifier achieved an overall accuracy of 81.15%, whereas the SVM classifier achieved a better accuracy of 86.13%. The methods were tested on 74 wound images from the Medetec database.
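As a hedged illustration of a supervised Bayesian classifier, the sketch below implements Gaussian naive Bayes, one simple form of Bayesian classifier that assumes each feature is normally distributed and independent within a class. Whether [3] used this exact model is not claimed here; the class name, feature vectors, and tissue labels in the usage example are made up.

```python
import numpy as np

class GaussianNaiveBayes:
    """Bayes' rule with per-class, per-feature Gaussian likelihoods."""

    def fit(self, X, y):
        X = np.asarray(X, dtype=np.float64)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        self.means_ = np.array([X[y == k].mean(axis=0) for k in self.classes_])
        # A small variance floor keeps the likelihood finite for constant features.
        self.vars_ = np.array([X[y == k].var(axis=0) for k in self.classes_]) + 1e-9
        self.log_priors_ = np.log(np.array([np.mean(y == k) for k in self.classes_]))
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=np.float64)
        # Log-likelihood of every sample under every class, summed over independent features.
        ll = -0.5 * (np.log(2 * np.pi * self.vars_)[None, :, :]
                     + (X[:, None, :] - self.means_[None, :, :]) ** 2
                     / self.vars_[None, :, :]).sum(axis=2)
        return self.classes_[np.argmax(ll + self.log_priors_[None, :], axis=1)]

X = [[0.00, 0.00], [0.10, 0.20], [0.20, 0.10],   # hypothetical features of one tissue type
     [5.00, 5.00], [5.10, 4.90], [4.90, 5.20]]   # and of another
y = ["granulation"] * 3 + ["slough"] * 3
clf = GaussianNaiveBayes().fit(X, y)
print(clf.predict([[0.05, 0.10], [5.00, 5.05]]))  # ['granulation' 'slough']
```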

Chakraborty et al. [88] established a framework called the Tele-Wound Technology Network (TWTN) for wound analysis and data storage, focused on capturing wound images with a smartphone camera to help patients at remote locations receive better treatment promptly. The basic principle is that the user sends a chronic wound image to the Tele-Medical Hub, where it is saved on a server and further analyzed. Performance analysis was performed on 50 wound images from the Medetec database. The system uses k-means clustering and fuzzy c-means to segment the image, with accuracies of 93.45% and 96.25%, respectively, while tissues are classified with a Bayesian classifier with an accuracy of 87.11%. Within the system, a medical expert can easily access the patient's wound data, track wound healing progress, or even advise the patient to change the wound treatment. Patient clinical information and wound images are linked and globally accessible through a web application [89]. In similar research by the same authors, Chakraborty et al. [90] and Chakraborty [7], segmentation was based on fuzzy c-means and particle swarm optimization (PSO), respectively, while tissues were classified in both papers with linear discriminant analysis (LDA). The segmentation accuracy was 98.98% for fuzzy c-means and 98.93% for PSO, while the classification accuracy of LDA was 91.45% in [90] and 98% in [7]. In the most recent research by Chakraborty [11], classification was evaluated with four different algorithms: LDA with an accuracy rate of 85.67%, decision trees with 84.29%, naive Bayes with 78.66%, and random forest, which showed the highest accuracy rate of 93.75%. In all these papers, images from the Medetec database were used alongside images captured from the Indian population.

Another smartphone-based method was presented by L. Wang et al. [55] and extended by L. Wang et al. [34], where segmentation was performed with an augmented mean shift algorithm and classification with the RYKW color model and k-means clustering. In their most recent paper, L. Wang et al. [46] used a superpixel SVM classifier trained in two stages on a combination of color and texture features. Mean color, color histogram, and wavelet texture features performed best for separating the wound from the surrounding skin, while dominant color, color histogram, and bag-of-words (BoW) histogram features performed best for separating the background from the foot tissue. On 100 acquired foot ulcer images, the average sensitivity was 73.3% and the specificity 94.6%. A limitation of this work is that images can be captured only with a box specialized for this purpose, under controlled lighting, and designed only for feet, which limits the method's robustness and general applicability.

García-Zapirain et al. [33] proposed two different 3D CNN architectures, one for segmentation (region of interest (ROI) extraction) and one for tissue classification. Each has different input images (models) with its own pathway, and the pathways connect in fully connected layers at the end of the network. The inputs for wound segmentation are the image in the HSI and grayscale color spaces, while for tissue classification a linear combination of discrete Gaussians (LCDG) image and a prior visual appearance image are added as separate inputs to the network. The CNNs were trained and tested on 193 pressure ulcer images, achieving a DSC of 95% for wound segmentation and 92% for tissue classification. In similar research, Elmogy et al. [43] added the RGB image as an input to the CNN alongside the prior models, and tissue classification achieved a DSC of 92%. In another study by Elmogy et al. [91], the YCbCr image was added as an input model to the segmentation CNN, and in the classification network, the RGB image was used instead of the HSI image. This architecture gave a DSC of 93% on 100 images obtained from the healthcare services company IGURCO GESTON S. L. and the Medetec database. On a database from the same institution, another experiment was carried out by García-Zapirain et al. [92], where an LCDG pixel-wise probability is estimated from the grayscale image and the color probability is estimated from the color image with the Euclidean distance. Initial tissue classification is performed by choosing the vector element with the highest probability. A DSC segmentation accuracy of 90.4% was obtained on 24 images, but with a very high computational time of 4.84 min, which is not suitable for real-time applications. In another paper, Zahia et al. [41] used a new CNN-based approach in which the input image is divided into small 5 × 5 sub-images that serve as the network input.
Classification of three different tissue types gave an overall accuracy of 92.01%. In García-Zapirain et al. [93], the authors developed a mobile application for wound assessment that uses morphological reconstruction to prepare the image for decomposition, which is performed by toroidal vertical and horizontal decomposition (TVD and THD). Images were decomposed into different contrast layers, and pixels were separated into two classes using Otsu's thresholding method. Wound segmentation was tested on 40 images with an average time of 9.04 s.
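Otsu's method, used in [93] to split pixels into two classes, chooses the threshold that maximizes the between-class variance of the gray-level histogram. A minimal NumPy version for 8-bit images is sketched below; the toroidal decomposition that precedes it in [93] is not reproduced, and the function name and test image are illustrative.

```python
import numpy as np

def otsu_threshold(gray):
    """Otsu's threshold for an 8-bit image; pixels <= t form class 0, pixels > t class 1."""
    hist = np.bincount(np.asarray(gray, dtype=np.uint8).ravel(), minlength=256).astype(np.float64)
    p = hist / hist.sum()
    omega = np.cumsum(p)                       # probability of class 0 for each candidate t
    mu = np.cumsum(p * np.arange(256))         # cumulative mean up to t
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        between_var = np.where(denom > 0, (mu_total * omega - mu) ** 2 / denom, 0.0)
    return int(np.argmax(between_var))

gray = np.array([50] * 8 + [200] * 8, dtype=np.uint8).reshape(4, 4)
t = otsu_threshold(gray)
mask = gray > t                  # the two intensity levels end up in different classes
print(t, int(mask.sum()))        # 50 8
```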

Navas et al. [60] presented a three-stage segmentation process using the k-means algorithm. In the first stage, a preliminary segmentation groups adjacent pixels with similar color features, while in the second stage, thresholding strategies are applied and a preliminary ROI is obtained. The final stage clusters pixels with the k-means algorithm into two regions, the wound region and the surrounding skin region, after which 104 features are extracted from the segmented regions. For classification, a standard MLP neural network, an SVM, decision trees, and a naive Bayes classifier are trained specifically to classify, independently, the regions in the estimated wound area (four tissue classes) and in the estimated surrounding healing tissue area (two tissue classes). The MLP architecture showed the best performance, with a tissue separation accuracy rate of 91.9%. In further research, Veredas et al. [56] segmented the wound using color histograms and calculated the probability of belonging to a certain tissue class with Bayes' rule. With a very low runtime of 0.3214 s, the method obtained an accuracy rate of 87.77% on 322 wound images. In another study, Veredas et al. [40] compared three machine learning techniques (SVM, NN, and random forest), applied separately to the wound and the surrounding tissue areas, for classification. On 113 test images, the efficacy scores were very similar, with no clear winner. Even so, SVM showed a slightly better overall accuracy rate of 88.08%, but it used all 104 extracted features. Furthermore, SVM gave the best efficacy scores of 92.68% and 87.71% for detecting necrotic and slough tissues, respectively.
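The use of color histograms with Bayes' rule in [56] can be illustrated as follows: per-class color histograms built from labeled training pixels act as likelihoods P(color | tissue), class frequencies act as priors, and Bayes' rule gives the posterior P(tissue | color). The 8-level quantization per channel, the Laplace smoothing, the function names, and the toy training pixels are assumptions for this sketch, not details of [56].

```python
import numpy as np

BINS = 8  # hypothetical quantization: 8 levels per RGB channel -> 512 color bins

def color_index(rgb):
    """Map 8-bit RGB values to a single quantized color-bin index."""
    q = (np.asarray(rgb, dtype=np.int64) * BINS) // 256
    return q[..., 0] * BINS * BINS + q[..., 1] * BINS + q[..., 2]

def fit_histograms(pixels_by_class, alpha=1.0):
    """pixels_by_class: dict mapping class -> (N, 3) RGB array of labeled training pixels."""
    classes = sorted(pixels_by_class)
    counts = np.array([len(pixels_by_class[k]) for k in classes], dtype=np.float64)
    priors = counts / counts.sum()
    likelihoods = []
    for k in classes:
        h = np.bincount(color_index(pixels_by_class[k]), minlength=BINS ** 3).astype(np.float64)
        likelihoods.append((h + alpha) / (h.sum() + alpha * BINS ** 3))  # Laplace smoothing
    return classes, priors, np.array(likelihoods)

def posterior(rgb, classes, priors, likelihoods):
    """Bayes' rule: P(class | color) is proportional to P(color | class) * P(class)."""
    idx = color_index(rgb)
    joint = likelihoods[:, idx] * priors.reshape(-1, *([1] * idx.ndim))
    return joint / joint.sum(axis=0, keepdims=True)

training = {
    "granulation": np.array([[200, 30, 30], [210, 40, 35], [190, 25, 30]]),
    "necrosis": np.array([[20, 15, 10], [30, 20, 15], [25, 20, 20]]),
}
classes, priors, likelihoods = fit_histograms(training)
post = posterior(np.array([[210, 40, 35]]), classes, priors, likelihoods)
print(dict(zip(classes, post[:, 0].round(3))))   # granulation is the more probable class
```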

Bhavani and Jiji [78] segmented the ulcer contour with a region growing algorithm and separated four classes of ulcer image parts (severe region, background region, normal wound region, and skin region) using k-means. Based on the extracted color and texture features, the image is classified into these classes with the kNN algorithm. The wound was segmented with an average accuracy of 94.85% on 1770 wound images. In newer research, Rajathi et al. [12] analyzed varicose ulcer images. Segmentation was performed with an active contour method driven by a gradient descent algorithm, while a CNN was used for the actual tissue classification task. Testing on 1250 wound images yielded an accuracy rate of 99%.
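Once color and texture features have been extracted, the k-nearest neighbors rule used in [78] assigns each sample the majority label of its k closest training samples. The minimal sketch below assumes Euclidean distance and k = 3; the function name, feature values, and labels are hypothetical.

```python
import numpy as np
from collections import Counter

def knn_predict(train_X, train_y, query_X, k=3):
    """Majority vote among the k nearest training samples (Euclidean distance)."""
    train_X = np.asarray(train_X, dtype=np.float64)
    query_X = np.asarray(query_X, dtype=np.float64)
    dists = np.linalg.norm(query_X[:, None, :] - train_X[None, :, :], axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]
    return [Counter(train_y[j] for j in row).most_common(1)[0][0] for row in nearest]

train_X = [[0.9, 0.1], [0.8, 0.2], [0.85, 0.15], [0.2, 0.7], [0.1, 0.8], [0.15, 0.75]]
train_y = ["wound"] * 3 + ["skin"] * 3
print(knn_predict(train_X, train_y, [[0.82, 0.18], [0.12, 0.78]]))   # ['wound', 'skin']
```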

Shenoy et al. [44] used three CNNs with a modified VGG16 architecture, whose outputs are joined into a single result matrix for nine classes. The problem of a small database was overcome through data augmentation and transfer learning. On 1335 smartphone images, they achieved overall accuracies of 82%, 85%, and 83% for wound separation, granulation detection, and fibrin detection, respectively.
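Data augmentation, one of the techniques [44] used to compensate for a small database, can be as simple as generating flipped and rotated copies of each image together with its label mask. The sketch below produces the eight rotation and flip variants; which transformations [44] actually applied is not stated here, so this set and the function name are assumptions.

```python
import numpy as np

def dihedral_augment(image, mask):
    """Yield the 8 rotation/flip variants of an (H, W, C) image and its (H, W) label mask.

    The same transform is applied to both, so labels stay aligned with pixels.
    """
    for k in range(4):
        img_r = np.rot90(image, k, axes=(0, 1))
        msk_r = np.rot90(mask, k, axes=(0, 1))
        yield img_r, msk_r                      # rotation by k * 90 degrees
        yield img_r[:, ::-1], msk_r[:, ::-1]    # the same rotation followed by a horizontal flip

image = np.random.default_rng(0).integers(0, 256, size=(2, 3, 3), dtype=np.uint8)
mask = np.arange(6).reshape(2, 3)
pairs = list(dihedral_augment(image, mask))
print(len(pairs))   # 8
```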

Table 2 presents research papers on wound tissue classification, which may also include wound segmentation as a preprocessing step. Reported accuracy values are either averages or the highest value for a specific method, and computational times are the average, minimum, or maximum over all images or for the method that showed the best results. Performance metrics differ between papers, and not all papers report computational times.

Table 2.

Classification of chronic wounds tissues on digital images (* papers dealing with tissue classification without wound segmentation, ** papers in which wound segmentation is part of tissue classification task).

6. 3D Wound Reconstruction

Another aspect of wound analysis is reconstructing the wound as a 3D model. A precise non-contact wound measurement system often requires the ability to reconstruct the wound as a 3D model, which provides essential data about the severity of the wound [71] and the means for additional wound analysis. With the development of inexpensive RGB-D cameras, 3D modeling has become popular in many fields, including telemedicine. Many systems have been developed for wound assessment, such as the DERMA device [96], the wound measurement device (WMD) [97], Eykona [71], MAVIS [24], and the MEDical PHOtogrammetric System (MEDPHOS) [98]. The problems with these systems are their high price and difficulty of use. Recently, many published papers analyze images taken with smartphone cameras, owing to their prevalence and increasingly high image quality [46,50,81]. The worldwide use of smartphones makes these methods applicable in rural areas without sufficient medical care. Patients can save images on servers, so medical experts can access the patient data and images in real time, track changes in the patient's wound, advise the patient on healing progress, or even change the medical treatment, all remotely. For better evaluation and robustness of smartphone-based methods, different smartphone (camera) models should be evaluated [84].

Filko et al. [99] reconstructed the 3D model in two phases: scene fusion and mesh generation. Scene fusion is the process in which each captured RGB-D frame is integrated into a reconstructed volume. This phase is divided into two subphases: (i) registration, based on the iterative closest point (ICP) algorithm, and (ii) integration, based on the truncated signed distance function (TSDF). Finally, the 3D model of the wound and the surrounding skin is constructed with the marching cubes algorithm. In further research, Filko et al. [71] tested the performance of wound 3D reconstruction and measurement with three different RGB-D cameras: Orbbec Astra S, PrimeSense Carmine 1.09, and Microsoft Kinect v2. Comparing the measurements, they concluded that area measurement precision is better for the Astra and Carmine cameras, while volume measurement proved highly demanding for all three RGB-D cameras. Overall, the PrimeSense Carmine 1.09 camera demonstrated the best results in wound analysis because of better color-to-depth registration and a better response to glossy surfaces. The 3D reconstruction was performed in real time with a handheld camera; hence, the accuracy rates were not very high.
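The integration step of scene fusion keeps, for every voxel, a truncated signed distance to the observed surface and a weight, and fuses each new frame as a weighted running average; the surface lies at the zero crossing. The sketch below shows that update for voxels along a single camera ray, assuming unit per-frame weights, a projective (along-ray) distance, and a weight cap. It is a simplified illustration of the general TSDF technique, not the implementation of [99], and registration (ICP) and marching cubes are omitted; the function name is illustrative.

```python
import numpy as np

def integrate_depth(tsdf, weight, voxel_z, measured_depth, trunc, max_weight=64.0):
    """Fuse one depth observation into voxels lying along a single camera ray.

    voxel_z: depth of each voxel center along the ray; measured_depth: observed surface depth.
    Returns the updated truncated signed distances (in [-1, 1]) and weights.
    """
    sdf = measured_depth - voxel_z                 # positive in front of the surface
    valid = sdf > -trunc                           # ignore voxels far behind the surface
    d = np.clip(sdf / trunc, -1.0, 1.0)            # truncate and normalize
    w = valid.astype(np.float64)                   # unit weight per observation
    new_weight = weight + w
    fused = np.where(valid, (weight * tsdf + w * d) / np.maximum(new_weight, 1e-12), tsdf)
    return fused, np.minimum(new_weight, max_weight)

voxel_z = np.array([0.3, 0.5, 0.7])
tsdf, weight = np.zeros(3), np.zeros(3)
for depth in (0.5, 0.6):                      # two noisy observations of the same surface point
    tsdf, weight = integrate_depth(tsdf, weight, voxel_z, depth, trunc=0.25)
print(tsdf.round(2), weight)                  # fused distances approx. 0.9, 0.2, -0.6; weights 2
```

Averaging many frames in this way suppresses per-frame sensor noise, which is why handheld RGB-D scanning can still produce a usable surface.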

Using an industrial 3D scanner for ground truth acquisition and a standard digital camera for the proposed method, Treuillet et al. [31] obtained the 3D reconstruction from a set of image features matched between two different views. Epipolar geometry is initialized with robust sparse matches and then iteratively refined, and the 3D model is reconstructed by triangulation. The experimental results showed an error of less than 0.6 mm, i.e., a geometric error of 1.3%.

Wannous et al. [100] classified wound tissues in images taken from different camera angles and mapped the results onto the 3D wound model by triangulation. Each triangle is labeled according to the tissue with the highest score, and the surface area of each tissue type can then be computed by summing the areas of the triangles belonging to the same class. The final classification results were better when two or more classified images from different viewpoints were merged.
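Once every triangle of the reconstructed mesh carries a tissue label, the per-tissue surface area is the sum of the labeled triangle areas, each computed as half the norm of a cross product. The minimal sketch below assumes the mesh is given as a vertex array and a triangle index array; the function name, mesh, and labels are hypothetical.

```python
import numpy as np

def tissue_areas(vertices, triangles, labels):
    """Sum triangle areas per tissue label; each area is |(b - a) x (c - a)| / 2."""
    v = np.asarray(vertices, dtype=np.float64)
    t = np.asarray(triangles, dtype=np.int64)
    a, b, c = v[t[:, 0]], v[t[:, 1]], v[t[:, 2]]
    areas = 0.5 * np.linalg.norm(np.cross(b - a, c - a), axis=1)
    totals = {}
    for label, area in zip(labels, areas):
        totals[label] = totals.get(label, 0.0) + float(area)
    return totals

# A 2 x 1 rectangle (in cm) split into four triangles with hypothetical labels.
verts = [[0, 0, 0], [1, 0, 0], [2, 0, 0], [0, 1, 0], [1, 1, 0], [2, 1, 0]]
tris = [[0, 1, 4], [0, 4, 3], [1, 2, 5], [1, 5, 4]]
print(tissue_areas(verts, tris, ["granulation", "granulation", "slough", "slough"]))
# {'granulation': 1.0, 'slough': 1.0}
```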

7. Wound Measurement and Healing Prediction

Accurate wound measurements are essential in wound analysis, as they reflect the severity of the wound and its lengthy healing process. According to Marsh and Anghel [101], wound measurement techniques include manual metric measurement, mathematical models, manual and digital planimetry, stereophotogrammetry, and digital imaging methods. From changes in the wound metrics over a period of time, a numerical healing prediction factor can be calculated.

Fauzi et al. [37] placed a white label card of known dimensions beside the wound as a reference to compute the actual pixel size, i.e., the number of centimeters per pixel in the image. The white card was detected in the image from the RYKW probability map. The proposed algorithm outputs three measurements of the segmented wound, namely area, length (major diameter), and width (minor diameter), with accuracies of 75.0%, 87.0%, and 85.0%, respectively.
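The reference card turns pixel measurements into physical units: its known width divided by its width in pixels gives a scale in centimeters per pixel, lengths scale linearly with that factor, and areas scale with its square. The numbers below are hypothetical, the function names are illustrative, and the sketch assumes the card lies in the wound plane, roughly parallel to the camera sensor; any tilt or surface curvature introduces error.

```python
import numpy as np

def cm_per_pixel(card_width_cm, card_width_px):
    """Scale factor from a reference object of known physical width."""
    return card_width_cm / card_width_px

def wound_area_cm2(mask, scale):
    """Area of a binary wound mask: pixel count times the area of one pixel (scale ** 2)."""
    return np.count_nonzero(mask) * scale ** 2

scale = cm_per_pixel(5.0, 250)            # hypothetical 5 cm card spanning 250 px -> 0.02 cm/px
mask = np.ones((100, 120), dtype=bool)    # hypothetical segmented wound of 12,000 px
print(round(wound_area_cm2(mask, scale), 2), "cm^2")   # 4.8 cm^2
print(round(120 * scale, 2), "cm long")                # 2.4 cm long
```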

Gaur et al. [102] showed that depth information is vital for wound measurement and healing evaluation. First, the wound was segmented with the Sobel operator and morphological operations. Measurements were calculated with the help of a ruler, from which pixel lengths were converted into metric units. Measurements were performed on wound models, with accuracies of 70%, 80%, and 85% for depth, area, and volume, respectively, but not on real wounds, because of the inconvenience of measuring actual wounds on patients.

Other papers placed a ruler in the pictures to calculate wound measures, as in Gholami et al. [64], or a gauge pin, as in Treuillet et al. [31]. Authors such as Wannous et al. [100] developed more advanced techniques and calculated the metrics from the labeled 3D model, a mesh of triangles, with the help of a checkerboard placed near the wound.

Without any physical tool, and using the reconstructed 3D model, Filko et al. [99] calculated the wound perimeter, area, and volume. The perimeter was measured as the length of the connected points of the wound boundary, while a virtual skin over the wound was used for area and volume measurement. The area is calculated as the sum of the areas of the individual triangles in the virtual skin mesh, while the volume is calculated with the maximum unit normal component (MUNC) method and the divergence theorem (DTA).
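For a closed triangle mesh (for example, the wound bed closed by the virtual skin surface), the divergence theorem reduces the enclosed volume to a sum of signed tetrahedron volumes, V = (1/6) Σ v0 · (v1 × v2), taken over consistently oriented triangles; the perimeter is the length of the closed boundary polyline and the area is the sum of triangle areas. The sketch below is a generic illustration of these formulas, not the MUNC or DTA implementation of [99]; it assumes outward-facing triangle orientation, and the function names are illustrative.

```python
import numpy as np

def perimeter(boundary):
    """Length of a closed polyline through the ordered boundary points."""
    p = np.asarray(boundary, dtype=np.float64)
    return float(np.linalg.norm(np.roll(p, -1, axis=0) - p, axis=1).sum())

def mesh_area(vertices, triangles):
    """Sum of triangle areas."""
    v = np.asarray(vertices, dtype=np.float64)
    t = np.asarray(triangles, dtype=np.int64)
    cross = np.cross(v[t[:, 1]] - v[t[:, 0]], v[t[:, 2]] - v[t[:, 0]])
    return float(0.5 * np.linalg.norm(cross, axis=1).sum())

def mesh_volume(vertices, triangles):
    """Volume of a closed, outward-oriented mesh via the divergence theorem."""
    v = np.asarray(vertices, dtype=np.float64)
    t = np.asarray(triangles, dtype=np.int64)
    v0, v1, v2 = v[t[:, 0]], v[t[:, 1]], v[t[:, 2]]
    return float(np.einsum("ij,ij->i", v0, np.cross(v1, v2)).sum() / 6.0)

# Unit right tetrahedron with outward-facing triangles: volume 1/6.
verts = [[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]]
tris = [[0, 2, 1], [0, 1, 3], [0, 3, 2], [1, 2, 3]]
print(mesh_volume(verts, tris))                          # 0.16666666666666666
print(perimeter([[0, 0], [1, 0], [1, 1], [0, 1]]))       # 4.0
```

If the triangle orientation is reversed, the signed sum changes sign, so taking the absolute value is a common safeguard when orientation is uncertain.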

L. Wang et al. [55] developed an algorithm to calculate a healing score based on raw wound assessment results, such as the areas of red, yellow, and black tissue. The healing score was compared with the opinions of three clinicians on 19 pictures. The agreement between the algorithm's healing score and the clinicians' healing scores was measured with Krippendorff's alpha coefficient (KAC), and the two showed similar healing trends.

Table 3 presents research papers on 3D wound reconstruction, wound measurement, or wound healing prediction. These tasks require wound segmentation and/or tissue classification to be performed beforehand. Reported accuracy values are either averages or the highest value for a specific method, and computational times are averages. Performance metrics differ between papers, and not all papers report computational times.

Table 3.

3D reconstruction, measurement, and wound healing prediction of chronic wounds on digital images.

8. Discussion

As previously mentioned, the aim of this paper is to review recent methods for wound analysis that could lead to the development of an end-to-end solution for automatic wound analysis. Such a solution would be a rather complex system required to automatically detect and segment wounds from the background and the surrounding healthy tissue. Furthermore, the system should be able to classify the assortment of tissue types found on the wound surface and finally produce measurements, such as wound dimensions and the percentage of each detected tissue, which lead to healing evaluation and therapy suggestions. Additionally, the system could produce a detailed 3D reconstruction of the wound, which could be used for further analysis and for follow-up comparisons between examinations.

To produce good results in the wound segmentation and tissue classification stages, most of the reviewed studies apply some form of input data preprocessing. Input data in this field are usually visual data in the form of wound photographs, which are sometimes accompanied by corresponding depth images. Preprocessing usually involves noise removal and sometimes blurring to remove image encoding artefacts, reflections, etc., and thereby improve the segmentation or wound detection phases. Preprocessing also typically involves choosing the most appropriate color space. HSV is the traditional choice, whose performance has been proven in numerous fields including wound analysis, with CIE Lab being the second most used. Recently, other color spaces such as YCbCr and YDbDr, which originate from video transmission encoding, have also been used successfully for wound analysis, and researchers have shown that these color spaces are particularly suitable for distinguishing between wound and non-wound image areas [8,11]. When deep neural networks are used, preprocessing usually involves cropping or resizing the image to the size required by the particular network architecture [44,65]. Some wound analysis systems also use presegmentation as a preprocessing step, in which the input image is divided into superpixels, either regularized into a grid or of arbitrary shape, using an algorithm such as SLIC, MeanShift, or QuickShift. With these algorithms, researchers obtain a desired number of regions with uniform properties, which they can rapidly analyze to determine their role in the wound analysis process.

Separating the wound surface from the background, and specifically from the surrounding healthy tissue, can be a challenging task, especially if the surrounding tissue has recently healed, since its surface color can resemble that of the wound. If wound segmentation is conducted on 2D images, the underlying surface geometry is not available. In that case, segmentation is driven exclusively by the available visual features, color and texture, with color used more predominantly than texture because the wound surface often has a glossy appearance, which reduces the expressiveness of its texture properties. When the presegmentation algorithms mentioned above are applied, the main segmentation phase is facilitated by the availability of a desired number of regions with distinct visual properties. The segmentation procedure then reduces to choosing and generating suitable visual features and descriptors and performing wound/non-wound classification with one of the numerous available machine learning methods [40]. The exception to this type of pipeline is the application of deep neural networks, which are typically end-to-end solutions that output segmented images directly [36]. As in other fields of computer vision, deep neural networks are gradually becoming the predominant machine learning method in this field, both in the number of recent studies and in performance [9]. Nevertheless, the main obstacle to further application of deep neural networks in this field is the lack of publicly available annotated databases. This lack is somewhat understandable, since annotation should be conducted by medical experts, and wound images can be very complex, with multiple intertwined tissue types, so annotating even a single image takes a significant amount of time.
Developing a robust deep neural network for general application requires tens of thousands of precisely annotated images, which makes the creation of an annotated image database in this field a gargantuan task. However, techniques such as data augmentation and transfer learning [44] help in developing useful deep neural networks even with much smaller databases.

When 3D wound data in the form of a mesh or a point cloud are available, surface geometry information can be used in addition to visual information. Adding the third spatial dimension could facilitate segmentation and make it more precise, since it would be driven by local visual and geometric information at the same time. For now, the deep neural network architectures applied in this field do not use 3D spatial geometry information, but it is likely that deep neural networks designed for 3D point clouds, such as PointNet++ [110], will be adapted and used in this field in the future.

The vast majority of research in wound analysis is based on 2D images, which are acceptable if the wound is small and located on a locally flat area. However, because of the inherent measurement error of 2D images [14] and the spread of inexpensive 3D cameras, which are now being included in portable devices such as smartphones, it is plausible that the majority of future research will shift to 3D data analysis. Older research used expensive laser line scanners [96] or multiple view geometry [100] to reconstruct the wound surface. Such applications were often too expensive and impractical or were limited by the requirements and potential noisiness of the multiple view geometry approach. Modern 3D cameras are inexpensive and provide reliable measurements that could be used for precise 3D wound reconstruction [71].

Wound tissue classification is extremely important for determining the wound condition and therefore for proposing wound therapy. Consequently, there is a lot of research on this topic. The problem itself is rather simple: classification into, typically, three classes of wound tissue, namely granulation, fibrin (or slough), and necrosis. It is generally accepted that these tissue classes roughly correspond to the colors red, yellow, and black, but the problem is made difficult by imperfect and inconsistent recordings, image encoding artefacts, surface reflections, etc. Furthermore, wounds themselves can be very complex, with tissues permeating each other in such a way that even a seasoned medical expert could have trouble separating them precisely. All these problems make this topic non-trivial and therefore very interesting to research. Similarly to wound segmentation, wound tissue classification is basically about determining appropriate visual features, typically calculated over an area, and then applying machine learning. The machine learning methods used cover almost the whole range, from LDA [11] and PCA [46] to methods such as k-means [52], random forest [11], and SVM [40], which is arguably the pinnacle of classical machine learning techniques. Recently, deep neural networks have become the focus of research in this area as well [45]. Wound tissue classification relies on a successful wound segmentation phase, because segmentation enables the tissue classifier to focus solely on the wound area and to distinguish details and separate tissues typically found only on wounds. Wound segmentation, on the other hand, requires that the system detect and separate the wound as a whole. This division enables researchers to develop a more profound and complex classifier, which potentially would not give meaningful results, or would run too slowly, if applied, for example, to the whole input image.

Wound evaluation can be based on multiple sources of information, as in [107], but it is usually based mostly on wound dimensions (depth, circumference, area, and volume) and on the amount of specific tissue types present. Therefore, more precise measurements will lead to more precise wound evaluation. This means that wound measurement should rely on 3D cameras rather than on regular 2D cameras, which have an inherent error when imaging curved surfaces [103]. Furthermore, applying new machine learning techniques developed for 3D input data could result in more precise wound segmentation and tissue classification.
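The error of 2D area measurement on curved surfaces can be quantified with a simple geometric sketch. Assume a wound-sized patch on a cylinder, a crude stand-in for a lower leg, viewed orthographically along its depth axis. The Python code below does three things:

- It triangulates the patch.
- It sums the true 3D triangle areas, each computed as half the norm of the edge cross product.
- It compares that total with the areas of the same triangles after the depth coordinate is dropped.

The cylinder model, the orthographic view, and the chosen dimensions are assumptions for illustration only. A real camera adds perspective effects on top of this.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def triangle_area_3d(a: Vec3, b: Vec3, c: Vec3) -> float:
    """True area of a 3D triangle: half the norm of the edge cross product."""
    u = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
    v = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    return 0.5 * math.sqrt(sum(k * k for k in cross))


def triangle_area_projected(a: Vec3, b: Vec3, c: Vec3) -> float:
    """Area of the same triangle after dropping z (orthographic 2D view)."""
    return 0.5 * abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))


def cylinder_patch(radius: float, half_angle: float, length: float,
                   n: int) -> List[Triangle]:
    """Triangulate a cylinder patch (axis along y, viewed along z)."""
    def vertex(i: int, j: int) -> Vec3:
        theta = -half_angle + 2.0 * half_angle * i / n
        return (radius * math.sin(theta), length * j / n, radius * math.cos(theta))

    tris: List[Triangle] = []
    for i in range(n):
        for j in range(n):
            a, b = vertex(i, j), vertex(i + 1, j)
            c, d = vertex(i + 1, j + 1), vertex(i, j + 1)
            tris += [(a, b, c), (a, c, d)]   # split each quad into two triangles
    return tris


if __name__ == "__main__":
    tris = cylinder_patch(radius=5.0, half_angle=math.pi / 3, length=4.0, n=50)
    area_3d = sum(triangle_area_3d(*t) for t in tris)
    area_2d = sum(triangle_area_projected(*t) for t in tris)
    print(f"3D surface area: {area_3d:.1f} cm^2, projected 2D area: {area_2d:.1f} cm^2")
```

Take a 5 cm radius, a ±60° arc, and a 4 cm length. The true surface area is then about 41.9 cm², while the projected area is about 34.6 cm². The 2D estimate is therefore roughly 17% too small, and the error grows as the wound wraps further around the limb.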

9. Conclusions

Chronic wounds, i.e., wounds that are not healing properly, dramatically affect national healthcare systems, so quick and precise wound assessment is essential. Even though systems for the accurate segmentation and tissue classification of wounds exist, they are not combined with accurate wound measurement or healing evaluation. Consequently, no single system provides all the data needed for practical implementation in medical centers.

Nevertheless, this overview has shown that image analysis can handle wound segmentation and tissue classification tasks with some success. By combining different methods, modifying color spaces, or adjusting algorithms, some researchers have achieved performance good enough for practical medical use under certain restrictions.

The impact of any newly developed method is measured by its accuracy, speed, and robustness. A novel method must be applicable to all kinds of wounds and skin types, and it must be able to detect multiple wounds on different parts of the body. Furthermore, the acquisition of wound images should be robust to uncontrolled lighting conditions, should be performed without user intervention, and should not depend on physical accessories such as markers or rulers.
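Robustness to uncontrolled lighting is often approached with color constancy preprocessing. One of the simplest techniques, the gray-world assumption, scales each RGB channel so that all channel means become equal. It is sketched below in Python as one possible baseline, not as a method from the reviewed literature. The function name and the 8-bit value range are assumptions. The gray-world assumption, namely that the average scene color is achromatic, is often violated in wound images dominated by red tissue. In practice, it would therefore need to be combined with, or replaced by, more robust approaches.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def gray_world(image: List[List[RGB]]) -> List[List[RGB]]:
    """Gray-world colour constancy for an 8-bit RGB image.

    Each channel is multiplied by a gain so that its mean equals the mean of
    all three channel means; results are clipped to the 0-255 range.
    """
    n = sum(len(row) for row in image)
    means = [sum(px[k] for row in image for px in row) / n for k in range(3)]
    target = sum(means) / 3.0
    gains = [target / m if m > 0 else 1.0 for m in means]   # leave empty channels alone
    return [[(min(255.0, px[0] * gains[0]),
              min(255.0, px[1] * gains[1]),
              min(255.0, px[2] * gains[2])) for px in row]
            for row in image]


if __name__ == "__main__":
    # Two pixels of a neutral surface under a bluish light source.
    tinted = [[(100.0, 100.0, 150.0), (50.0, 50.0, 75.0)]]
    print(gray_world(tinted))   # each corrected pixel has equal R, G, and B
```

Because the gains are global, the correction preserves relative differences between pixels. It only removes a uniform color cast, which is the weakest form of lighting variation.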

With the development of high-resolution smartphone cameras and RGB-D cameras, increasing computational power, and more accurate algorithms, wound image analysis has gradually taken precedence in the field of wound assessment. Furthermore, given the improvements in automated wound analysis and global shortages of medical staff, the advent of artificial intelligence and robotic systems in wound care can be foreseen. Moreover, the overall impact of chronic wounds on national healthcare systems is increasing; hence, the number of research papers in this field is growing, and better and faster results can be expected.

While the future is always uncertain, it is the authors' opinion that future development will see the realization of an AI-driven automatic system for wound analysis. Such a system would consist of a robot manipulator with an attached high-precision industrial 3D camera and a high-resolution RGB camera. It would be able to autonomously detect wounds, record them from multiple views, and create precise 3D models of the segmented wound surface. Furthermore, it would classify tissues on the surface and precisely measure the wound. Based on these data, a therapy would be prescribed and a healing evaluation provided. Judging by the available research, such a system could be developed today with the right combination of hardware, tools, and algorithms.

In the future, a wound analysis system should be part of a larger clinical decision support system (CDSS), in which it would perform all the tasks necessary for wound assessment. Based on its results, the CDSS could advise the clinician in order to provide better and faster wound treatment.

Author Contributions

Conceptualization, D.F.; research and original draft preparation, D.M.; review, editing and revised draft preparation, D.F. All authors have read and agreed to the published version of the manuscript.

Funding

This paper was developed under the project “Methods for 3D reconstruction and analysis of chronic wounds” and was funded by the Croatian Science Foundation under number UIP-2019-04-4889.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chronic Wounds, Overview and Treatment. Available online: https://www.woundsource.com/patientcondition/chronic-wounds (accessed on 13 July 2020).

- Dadkhah, A.; Pang, X.; Solis, E.; Fang, R.; Godavarty, A. Wound size measurement of lower extremity ulcers using segmentation algorithms. In Proceedings of the Optical Biopsy XIV: Toward Real-Time Spectroscopic Imaging and Diagnosis, San Francisco, CA, USA, 15–17 February 2016; Volume 9703, p. 97031D.

- Mukherjee, R.; Manohar, D.D.; Das, D.K.; Achar, A.; Mitra, A.; Chakraborty, C. Automated tissue classification framework for reproducible chronic wound assessment. BioMed Res. Int. 2014, 2014, 851582.

- Gautam, G.; Mukhopadhyay, S. Efficient contrast enhancement based on local–global image statistics and multiscale morphological filtering. Adv. Comput. Commun. Paradig. 2017, 2, 229–238.

- Biswas, T.; Ahmad Fauzi, M.F.; Abas, F.S.; Logeswaran, R.; Nair, H.K.R. Wound Area Segmentation Using 4-D Probability Map and Superpixel Region Growing. In Proceedings of the 2019 IEEE International Conference on Signal and Image Processing Applications (ICSIPA), Kuala Lumpur, Malaysia, 17–19 September 2019; pp. 29–34.

- Mukherjee, R.; Tewary, S.; Routray, A. Diagnostic and prognostic utility of non-invasive multimodal imaging in chronic wound monitoring: A systematic review. J. Med. Syst. 2017, 41, 46.

- Chakraborty, C. Chronic wound image analysis by particle swarm optimization technique for tele-wound network. Wirel. Person. Commun. 2017, 96, 3655–3671.

- Manohar Dhane, D.; Maity, M.; Mungle, T.; Bar, C.; Achar, A.; Kolekar, M.; Chakraborty, C. Fuzzy spectral clustering for automated delineation of chronic wound region using digital images. Comput. Biol. Med. 2017, 89, 551–560.

- Maity, M.; Dhane, D.; Bar, C.; Chakraborty, C.; Chatterjee, J. Pixel-based supervised tissue classification of chronic wound images with deep autoencoder. Adv. Comput. Commun. Paradig. 2017, 2, 727–735.

- Zahia, S.; Garcia Zapirain, M.B.; Sevillano, X.; González, A.; Kim, P.J.; Elmaghraby, A. Pressure injury image analysis with machine learning techniques: A systematic review on previous and possible future methods. Artif. Intell. Med. 2020, 102, 101742.

- Chakraborty, C. Computational approach for chronic wound tissue characterization. Inform. Med. Unlocked 2019, 17, 100162.

- Rajathi, V.; Bhavani, R.R.; Wiselin Jiji, G. Varicose ulcer (C6) wound image tissue classification using multidimensional convolutional neural networks. Imaging Sci. J. 2019, 67, 374–384.

- Kumar, K.S.; Reddy, B.E. Wound image analysis classifier for efficient tracking of wound healing status. Sign. Image Process. Int. J. 2014, 5, 15–27.

- Chang, A.C.; Dearman, B.; Greenwood, J.E. A comparison of wound area measurement techniques: Visitrak versus photography. Eplasty 2011, 11, 158–166.

- Medical Device Technical Consultancy Service. Pictures of Wounds and Surgical Wound Dressings. Available online: http://www.medetec.co.uk/files/medetec-image-databases.html (accessed on 14 July 2020).

- Lu, H.; Li, B.; Zhu, J.; Li, Y.; Li, Y.; Xu, X.; He, L.; Li, X.; Li, J.R.; Serikawa, S. Wound intensity correction and segmentation with convolutional neural networks. Concurr. Comput. Pract. Exp. 2016, 22, 685–701.

- Musen, M.A.; Middleton, B.; Greenes, R.A. Clinical decision-support systems. In Biomedical Informatics Computer Applications in Health Care and Biomedicine, 4th ed.; Springer: London, UK, 2014.

- Garg, A.X.; Adhikari, N.K.J.; McDonald, H. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: A systematic review. J. Am. Med. Assoc. 2005, 293, 1223–1238.

- Langemo, D.K.; Melland, H.; Hanson, D.; Olson, B.; Hunter, S.; Henly, S.J. Two-dimensional wound measurement: Comparison of 4 techniques. Adv. Wound Care J. Prevent. Heal. 1998, 11, 337–343.

- Filko, D.; Davor, A.; Dubravko, H. WITA-Application for Wound Analysis and Management. In Proceedings of the International Conference on e-health Networking, Applications and Services (HealthCom), Lyon, France, 1–3 July 2010.

- Solomon, C.; Munro, A.R.; Van Rij, A.M.; Christie, R. The use of video image analysis for the measurement of venous ulcers. Br. J. Dermatol. 1995, 133, 565–570.

- Schubert, V.; Zander, M. Analysis of the measurement of four wound variables in elderly patients with pressure ulcers. Adv. Wound Care J. Prevent. Health 1996, 9, 29–36.

- Smith, R.B.; Rogers, B.; Tolstykh, G.P.; Walsh, N.E.; Davis, M.G.; Bunegin, L.; Williams, R.L. Three-dimensional laser imaging system for measuring wound geometry. Lasers Surg. Med. 1998, 23, 87–93.

- Plassmann, P.; Jones, T.D. MAVIS: A non-invasive instrument to measure area and volume of wounds. Med. Eng. Phys. 1998, 20, 332–338.

- Mekkes, J.R.; Westerhof, W. Image processing in the study of wound healing. Clin. Dermatol. 1995, 13, 401–407.

- Berriss, W.P.; Sangwine, S.J. A color histogram clustering technique for tissue analysis of healing skin wounds. In Proceedings of the 6th International Conference on Image Processing and Its Applications, Dublin, Ireland, 14–17 July 1997; pp. 693–697.

- Kolesnik, M.; Fexa, A. Segmentation of wounds in the combined color-texture feature space. In Proceedings of the Medical Imaging 2004: Image Processing, San Francisco, CA, USA, 14–19 February 2004; Volume 5370, p. 549.

- Sugama, J.; Matsui, Y.; Sanada, H.; Konya, C.; Okuwa, M.; Kitagawa, A. A study of the efficiency and convenience of an advanced portable Wound Measurement System (VISITRAK™). J. Clin. Nurs. 2007, 16, 1265–1269.

- Krouskop, T.A.; Baker, R.; Wilson, M.S. A noncontact wound measurement system. J. Rehabilit. Res. Dev. 2002, 39, 337–345.

- Plassmann, P.; Jones, C.D.; McCarthy, C. Accuracy and precision of the MAVIS-II wound measurement device. Wound Repair Regenerat. 2007, 15, A129.

- Treuillet, S.; Albouy, B.; Lucas, Y. Three-dimensional assessment of skin wounds using a standard digital camera. IEEE Trans. Med. Imaging 2009, 28, 752–762.

- Filko, D.; Nyarko, E.K.; Cupec, R. Wound detection and reconstruction using RGB-D camera. In Proceedings of the 2016 39th International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 30 May–3 June 2016.

- García-Zapirain, B.; Elmogy, M.; El-Baz, A.; Elmaghraby, A.S. Classification of pressure ulcer tissues with 3D convolutional neural network. Med. Biol. Eng. Comput. 2018, 56, 2245–2258.

- Wang, L.; Pedersen, P.C.; Strong, D.M.; Tulu, B.; Agu, E.; Ignotz, R. Smartphone-based wound assessment system for patients with diabetes. IEEE Trans. Biomed. Eng. 2015, 62, 477–488.

- Taha, A.A.; Hanbury, A. Metrics for evaluating 3D medical image segmentation: Analysis, selection, and tool. BMC Med. Imaging 2015, 15, 29.

- Ohura, N.; Mitsuno, R.; Sakisaka, M.; Terabe, Y.; Morishige, Y.; Uchiyama, A.; Okoshi, T.; Shinji, I.; Takushima, A. Convolutional neural networks for wound detection: The role of artificial intelligence in wound care. J. Wound Care 2019, 28, S13–S24.

- Fauzi, M.F.A.; Khansa, I.; Catignani, K.; Gordillo, G.; Sen, C.K.; Gurcan, M.N. Segmentation and management of chronic wound images: A computer-based approach. Chronic Wounds Wound Dress. Wound Healing 2018, 6, 115–134.

- Sirazitdinova, E.; Deserno, T.M. System design for 3D wound imaging using low-cost mobile devices. In Proceedings of the Medical Imaging 2017: Imaging Informatics for Healthcare, Research, and Applications, San Francisco, CA, USA, 15–16 February 2017; Volume 10138, p. 1013810.

- Kumar, C.V.; Malathy, V. Image processing based wound assessment system for patients with diabetes using six classification algorithms. In Proceedings of the International Conference on Electrical, Electronics, and Optimization Techniques (ICEEOT), Chennai, India, 3–5 March 2016; pp. 744–747.

- Veredas, F.J.; Luque-Baena, R.M.; Martín-Santos, F.J.; Morilla-Herrera, J.C.; Morente, L. Wound image evaluation with machine learning. Neurocomputing 2015, 164, 112–122.

- Zahia, S.; Sierra-Sosa, D.; García-Zapirain, B.; Elmaghraby, A. Tissue classification and segmentation of pressure injuries using convolutional neural networks. Comput. Methods Progr. Biomed. 2018, 159, 51–58.

- Goyal, M.; Reeves, N.D.; Rajbhandari, S.; Spragg, J.; Yap, M.H. Fully convolutional networks for diabetic foot ulcer segmentation. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 618–623.