Abstract

This paper represents the first survey on the application of AI techniques to the analysis of biomedical images for forensic human identification. Human identification is of great relevance in today’s society and, in particular, in medico-legal contexts. As a consequence, any technological advance introduced in this field can help meet the growing need for accurate and robust tools to establish and verify human identity. We first describe the importance and applicability of forensic anthropology in many identification scenarios. We then present the main trends in the application of computer vision, machine learning and soft computing techniques to the estimation of the biological profile, identification through comparative radiography and craniofacial superimposition, traumatism and pathology analysis, and facial reconstruction. The potential and limitations of the employed approaches are described, and we conclude with a discussion of methodological issues and future research.

1. Introduction

Forensic Sciences are the set of disciplines whose common objective is the materialization of evidence for legal purposes through a scientific methodology. In this sense, any science becomes ‘forensic’ when it serves the judicial procedure. Human identification (ID) [1], often the main task that the forensic sciences face, is crucial in a multitude of contexts of great importance to our society: from the identification of missing persons and the estimation of the age of unaccompanied migrants (whose rights could otherwise be violated) to crime analysis and mass disaster victim ID. In all these cases, personal identity is associated with the preservation and defense of Human Rights and is a tool to repair violations of these rights.

The most commonly used methods for human ID are DNA testing and fingerprint comparison through automated fingerprint identification systems (AFIS), mostly due to their high accuracy (over 99%). These methods are, however, expensive (AFIS costs can run into the millions of dollars depending on the participating agencies and the complexity of the system [2]) and time-consuming (DNA testing from bone can take weeks). Their main drawback, nevertheless, is their limited applicability: both require prior records, a trustworthy baseline, and material preserved well enough for DNA extraction or fingerprint comparison. In other words, these methods fail when there is not enough ante-mortem (AM) or post-mortem (PM) information available, either because of a lack of data (e.g., no second DNA sample for comparison) or because of the state of preservation of the body. While the skeleton usually survives both natural and non-natural decomposition processes (fire, salt, water, etc.), the soft tissue progressively degrades and is eventually lost. Therefore, techniques like DNA or fingerprint comparison are not suitable when such records do not exist or when bodies are poorly preserved. To carry out the ID under unfavorable circumstances (skeletonized, burned or degraded individuals, commingled or disarticulated remains, mass graves, etc.), methods based on Forensic Anthropology (FA) represent the main alternative at our disposal. FA studies the skeleton for its application to medico-legal issues [3], and encompasses techniques for skeleton-based forensic identification (SFI) such as craniofacial superimposition and comparative radiography. In fact, the experience of several practitioners in certain scenarios suggests that DNA analysis (around 3% of the IDs) and dactyloscopy (15–25%) are far less effective than SFI techniques (70–80%) [4]. SFI methods employed by forensic anthropologists, odontologists, and pathologists represent, in many cases, the victim’s last chance for ID.

In recent decades, artificial intelligence (AI) has made it possible to automate repetitive or tedious tasks (e.g., industrial processes or cleaning tasks), as well as to surpass human capacity in complex tasks (e.g., processing massive amounts of data to extract new knowledge, or defeating human champions at chess or Go). Recently, advances in machine learning (ML), under the terminological umbrella of deep learning (DL), have driven astonishing progress in image recognition, image restoration, image generation, speech recognition, and machine translation, among others. The medical field has been no exception: AI has provided tremendously useful tools for practitioners in parameter estimation, image segmentation, pathology classification, and image enhancement, to name a few representative scenarios. It is, however, surprising how FA has largely remained apart from these advances and, still today, in general terms, it is an essentially manual and technologically precarious discipline. This lack of technological development and of hybridization of AI with FA is noteworthy since, among other reasons, the human ID field, to which FA belongs, has a remarkable and increasing social and economic importance: the global human ID market reached $43.0 billion in 2019 and is projected to reach $83.9 billion by 2024 (https://www.bccresearch.com/market-research/biotechnology/human-identification-forensics-genealogy-and-security-applications-market-report.html).

Very few surveys focus, partially or entirely, on the application of AI techniques to particular SFI tasks and methodologies [5,6]. To the best of the authors’ knowledge, this is the first paper to tackle the broad subject of AI-based biomedical image analysis for FA-based human ID. Accordingly, we do not address other forensic ID techniques such as identification from biomolecular evidence (DNA), from latent prints (fingerprints, earprints), from methods of communication (e.g., handwriting), from podiatry and gait, or from personal effects. Facial recognition and identification [7,8] is not addressed either, despite its great importance and technological development, because facial images are not considered biomedical images (they are not visual representations of the interior of the human body, and they are not generally acquired for diagnostic or therapeutic purposes). In addition, exhaustive surveys on human ID based on facial images already exist [9,10,11].

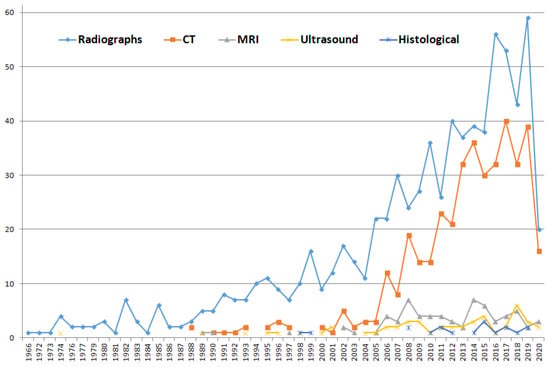

In this manuscript, we try to cover the ID of both deceased and living individuals employing hard tissues (bones) displayed through different biomedical imaging modalities (see Figure 1). The most popular biomedical imaging modalities employed for ID are X-ray images and CTs, probably for two reasons. First, X-ray imaging is the most commonly used medical imaging modality worldwide. As an example, 2.02 million chest X-ray examinations were performed in 2015/16 by the National Health Service of the United Kingdom [12], and 150 million are acquired annually in the US [13]. In the forensic domain, CT imaging is increasingly performed in forensic examinations, whereas most existing reports on PM MRI are based on small case samples [14]. Second, although PM CT and MRI have both proven to be useful diagnostic tools in forensic medicine, some studies note that CT is superior to MRI in the visualization of osseous and ligamentous injuries after trauma [15].

Figure 1.

Papers published using different biomedical image modalities for forensic ID. This figure was obtained by introducing the following queries in Scopus (search performed on 11 May 2020): (TITLE-ABS-KEY(forensic identification radiograph) OR (TITLE-ABS-KEY(forensic identification X-ray image))); (TITLE-ABS-KEY(forensic identification CT)); (TITLE-ABS-KEY(forensic identification MRI)); (TITLE-ABS-KEY(forensic identification ultrasound)); and TITLE-ABS-KEY(forensic identification histological image). These searches returned 771, 398, 68, 49 and 18 papers, respectively. The most commonly employed biomedical image modalities in this domain are X-ray images and computed tomography (CT) scans.

After a section devoted to the methodological background (Section 2), the paper focuses on SFI approaches that employ biomedical images (such as radiographs, CTs, MRIs, or 3D bone scans) for the ID of living and deceased individuals. Section 3 describes the different families of forensic ID methods and the AI-based techniques used in the literature. The manuscript concludes with Section 4, where conclusions, recommendations and future research lines are discussed.

2. Methodological Background

2.1. Forensic Anthropology and Human Identification

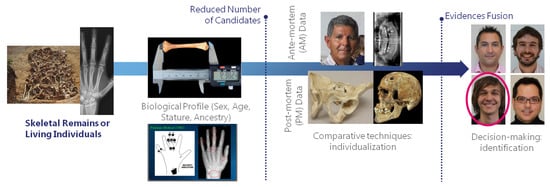

FA addresses the application of physical anthropology (devoted to the study of the human body) to legal cases, usually with a focus on the human skeleton. FA methods represent an alternative and counterpart to other ID approaches (like fingerprints and DNA), with a much broader range of applicability. According to the Scientific Working Group for Forensic Anthropology (SWGANTH), forensic anthropologists contribute to identification at two levels. The first level is through methods that establish positive identifications. The second level is through methods that contribute to identification by limiting the potential matches for the analyzed individual. In the first group, the SWGANTH includes comparative radiography and the comparison of surgical implants, while it assigns craniofacial superimposition, biological profiling, medical and/or dental records, abnormalities and pathological conditions, and comparative photography to the second level. While surgical implant identification simply involves locating the manufacturer’s symbol along with the device’s unique serial number, the remaining methods are considerably more complex to apply. The overall SFI pipeline is described in Figure 2. Below, we briefly describe the main FA methodologies for human ID that are relevant to this review.

Figure 2.

Skeleton-based forensic identification (SFI) pipeline. These techniques can be applied for positive identification and for the reduction of potential candidates (shortlisting) of both living and dead individuals. They are commonly employed when other ID techniques (e.g., DNA or fingerprint comparison) are not applicable, or in combination with them.

The estimation of the biological profile (BP) has been studied for more than 300 years, and nowadays it plays a crucial role in narrowing the range of potential matches during the ID process. BP involves the study of skeletal remains with the aim of finding characteristic traits that help establish the identity of the individual. It is a sequential process in which sex, age, stature and ancestry are estimated, in this particular order. The recommended approaches for each trait are the following:

- Sex estimation of adult individuals. The use of pelvic and skull morphological traits is recommended [16]. When this is not possible, the discriminant formulas for the postcranial skeleton proposed in [17] are recommended. Sex is the first characteristic to be estimated, since many formulas used in the remaining steps depend on sex.

- Sex estimation of subadults (i.e., children). Sexual characteristics are not fully developed, and therefore not discriminant, until after puberty. For this reason, estimating the sex of children is one of the main difficulties faced by FA when studying subadult individuals. However, some approaches have shown high potential, like the analysis of morphological features of the ilium, mainly the sciatic notch [18].

- Age estimation of dead adult individuals. The analysis of the degenerative processes of the pubic symphysis is recommended, preferably following the method proposed in [19]. This method should be combined with the analysis of canine root transparency (presented in [20]) according to the two-step procedure proposed in [21]. When this is not possible, the recommended methods are: the method proposed in [22] for the analysis of the auricular surface of the coxal bone; the method proposed in [23] for the sternal end of the fourth rib; and finally the analysis of cranial suture obliteration proposed in [24], which is the least reliable method but often the only one available.

- Age estimation of dead children. For individuals who have not yet reached maturity (subadults), the methods proposed by Scheuer and Black in 2004 [25] are recommended.

- Age estimation of the living. The hand and wrist development atlas introduced in [26], the dental development method proposed in [27], and the analysis of the ossification status of the sternal epiphysis of the clavicle presented in [28] are recommended for different age ranges. Age estimation of the living is especially relevant when determining the legal age of the person being examined (i.e., whether the person is at least 18 years old, so that age-dependent legal procedures are carried out appropriately in accordance with the rule of law).

- Stature estimation is performed using long bones. When the remains are contemporary and of Mediterranean origin, the formulas proposed in [29] for the femur and humerus, and those proposed in [30] for the tibia, are recommended. For remains from the North American population, the formulas proposed in [31] and the FORDISC computer program [32] should preferably be applied.

- Ancestry estimation is the least accurate element of the BP, given the low reliability of its results. It should only be used as an orientation criterion when there is good agreement between the human study groups and skeletal biology, with preference given to morphological criteria of the skull.
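Stature formulas such as those cited above are typically linear regression equations on the length of a long bone, specific to sex and population, which is one reason sex is estimated first. A minimal Python sketch of how such a formula is applied follows; the coefficients and standard errors are hypothetical placeholders, not the published values of [29], [30] or [31], and the ±2 standard error interval is an assumption of the sketch.

```python
# HYPOTHETICAL placeholder coefficients: (bone, sex) -> (slope, intercept_cm, standard_error_cm).
# They are NOT the published formulas of [29], [30] or [31].
HYPOTHETICAL_FORMULAS = {
    ("femur", "male"): (2.4, 60.0, 3.5),
    ("femur", "female"): (2.3, 60.0, 3.7),
}


def estimate_stature(bone: str, sex: str, length_cm: float) -> tuple[float, float, float]:
    """Return (point estimate, lower bound, upper bound) in cm.

    The point estimate is slope * bone length + intercept; the interval is
    an assumed +/- 2 standard errors around it.
    """
    slope, intercept, se = HYPOTHETICAL_FORMULAS[(bone, sex)]
    point = slope * length_cm + intercept
    return point, point - 2 * se, point + 2 * se


# Example: a 45 cm femur of an individual previously estimated as male.
est, lo, hi = estimate_stature("femur", "male", 45.0)
```

Using a real formula would only require replacing the table entries with the published coefficients for the relevant bone, sex and population.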

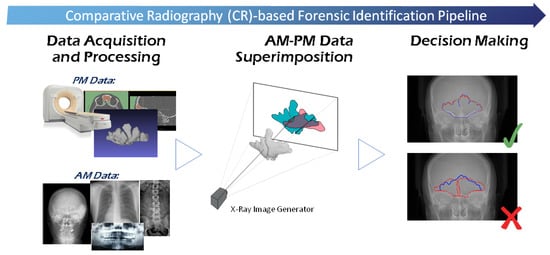

Comparative radiography (CR) involves the direct comparison of AM radiographs, generally acquired for medical reasons, with PM radiographs acquired solely for ID purposes, using specific and individualizing structures. Both radiographs are visually compared and evaluated for similarity in osseous shapes and densities to determine whether they belong to the same subject [33]. Several bones and cavities have been reported as useful for candidate shortlisting or positive identification based on their individuality and uniqueness. In particular, the structures most widely recognized as useful and reliable for identification are the teeth, frontal cranial bones, vertebrae, and clavicles, with dental-based ID being the most employed and discriminative technique. The AM and PM data most commonly employed in CR include radiographs [34], CT images [35], and 3D surface models [36]. The application of CR requires superimposing the AM and PM data for visual comparison, which is done by producing PM radiographs that simulate the AM ones in scope and projection. This is a time-consuming and error-prone trial-and-error process that relies completely on the skills and experience of the analyst. CR requires prior clinical images, which are not always available; when they are, however, this technique can be extremely accurate, reaching 100% reliability for certain bones [37]. The whole CR-based ID process is depicted in Figure 3.

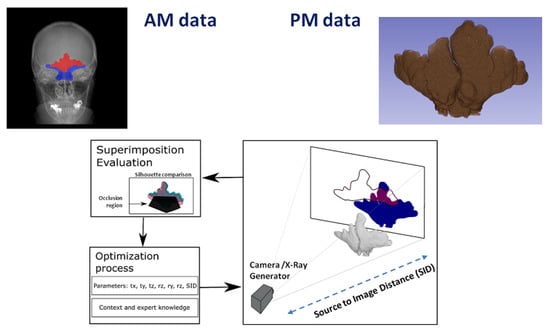

Figure 3.

General comparative radiography (CR)-based ID process. After data acquisition and processing (e.g., segmentation of anatomical regions of interest), and prior to decision making, the ante-mortem (AM) and post-mortem (PM) materials are registered so that their overlap is maximized. In this figure, this superimposition process is depicted using frontal sinuses in X-ray images.
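The registration step in Figure 3 can be illustrated with a deliberately simplified sketch. It assumes that the AM and PM structures (e.g., frontal sinuses) have already been segmented into binary masks of the same size and that only an integer 2D translation separates them; real CR must also account for projection, rotation and scale. Overlap is scored with the Dice coefficient, which is an assumption of this sketch rather than a measure prescribed by the cited works.

```python
import numpy as np


def dice(a: np.ndarray, b: np.ndarray) -> float:
    """Dice overlap between two boolean masks (1.0 means identical masks)."""
    total = a.sum() + b.sum()
    return 2.0 * np.logical_and(a, b).sum() / total if total else 1.0


def register_translation(am: np.ndarray, pm: np.ndarray, max_shift: int = 10):
    """Exhaustively search integer (dy, dx) shifts of the PM mask that maximize
    its Dice overlap with the AM mask. Returns (dy, dx, best_dice).

    np.roll wraps pixels around the border, so the masks are assumed to have
    an empty background margin of at least max_shift pixels.
    """
    best = (0, 0, dice(am, pm))
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            shifted = np.roll(pm, (dy, dx), axis=(0, 1))
            score = dice(am, shifted)
            if score > best[2]:
                best = (dy, dx, score)
    return best
```

A brute-force search is used only for clarity; practical registration would rely on an optimizer over a richer transformation model.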

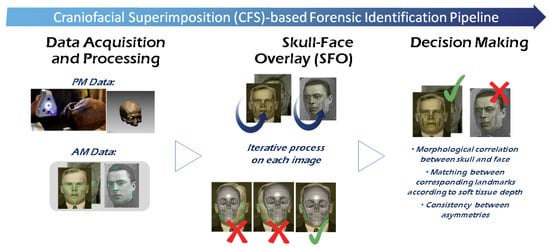

Craniofacial Superimposition (CFS) is probably the most challenging SFI method [38,39]. It involves superimposing an image of a skull on a number of AM face images of an individual and analyzing their morphological correspondence. This skull-face overlay (SFO) process is usually done by matching anatomical (anthropometric) landmarks located on the skull (craniometric) and on the face (cephalometric). Thus, unlike in CR, two objects of a different nature are compared (a face and a skull). CFS has been used for about a century, yet it is not a mature and fully accepted technique due to the absence of solid scientific approaches, significant reliability studies, and international standards. On the other hand, the technique is widely employed in developing countries because it is inexpensive to apply and the only AM data required are one or more photographs of the face. As with CR, the most recent comprehensive surveys of the CFS field distinguish three consecutive stages (Figure 4) in the whole CFS process [40,41]: (1) the acquisition and processing of the materials, i.e., the skull (or skull 3D model) and the AM facial photographs, including the location of landmarks on both; (2) the SFO process, which aims to achieve the best possible superimposition of the skull on a single AM photograph of a missing person and is repeated for each available photograph, yielding different overlays; and (3) decision making, which evaluates, based on the previous SFOs, the degree of support for the skull and the face belonging to the same person or not (exclusion). This decision is influenced by the morphological correlation between the skull and the face, the matching between corresponding landmarks according to soft tissue depth, and the consistency between asymmetries. These criteria can vary depending on the region and the pose [42].

Figure 4.

General Craniofacial Superimposition (CFS)-based ID process.
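The landmark-based SFO stage can be illustrated in a simplified form. The sketch below assumes that the craniometric landmarks have already been projected into the 2D image plane and paired with their cephalometric counterparts, and fits only a 2D similarity transform (scale, rotation, translation) in closed form with Umeyama's least-squares method. Actual SFO estimates a 3D-to-2D perspective projection of the skull and must account for soft tissue depth, so this illustrates the fitting idea only and is not an SFO method from the cited literature.

```python
import numpy as np


def fit_similarity_2d(src: np.ndarray, dst: np.ndarray):
    """Least-squares scale s, rotation R (2x2) and translation t such that
    dst[i] ~= s * R @ src[i] + t (Umeyama's closed-form solution).

    src: projected craniometric landmarks, shape (n, 2) (assumed already 2D).
    dst: corresponding cephalometric landmarks on the photograph, shape (n, 2).
    """
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_s, dst - mu_d
    cov = dst_c.T @ src_c / len(src)              # cross-covariance (dst vs. src)
    U, S, Vt = np.linalg.svd(cov)
    d = np.sign(np.linalg.det(U) * np.linalg.det(Vt))
    D = np.diag([1.0, d])                         # forbids reflections
    R = U @ D @ Vt
    s = np.trace(np.diag(S) @ D) / (src_c ** 2).sum(axis=1).mean()
    t = mu_d - s * R @ mu_s
    return s, R, t
```

The mean residual distance between transformed craniometric and cephalometric landmarks could then serve as a crude overlay-quality score, again as an illustrative assumption.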

Forensic facial approximation, also called facial reconstruction, is an ID technique for unknown skeletal remains, or corpses encountered in criminal investigations, based on estimating a face from a skull in order to obtain information about the deceased person’s identity. In [9], four different approaches are distinguished: (1) two-dimensional (2D) representation of the face over a photograph of the skull [43,44]; (2) three-dimensional (3D) manual construction of the face in clay or mastic over the skull or skull cast [43,45]; (3) computerized sculpting of the face using haptic feedback devices and a 3D scan of the skull [46,47]; and (4) computerized construction of the face using more complex computer-automated 3D routines [48,49,50,51]. All these approaches depend on soft tissue thickness measurements of the face.
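The dependence on soft tissue thickness can be made concrete with the simplest computational step shared by many approaches: placing an estimated skin point at a given distance from each craniometric landmark along the outward surface normal of the skull. In the sketch below, the landmark coordinates, normals and depths are hypothetical; real tissue-depth values come from reference tables that typically vary with sex, age, population and body build.

```python
import numpy as np


def approximate_skin_points(skull_pts, normals, tissue_depths_mm):
    """Estimated skin point = skull landmark + depth * unit outward normal.

    skull_pts: (n, 3) craniometric landmark coordinates in mm (hypothetical input).
    normals: (n, 3) outward surface normals at those landmarks (need not be unit length).
    tissue_depths_mm: (n,) soft tissue depths, e.g. taken from a reference table.
    """
    skull_pts = np.asarray(skull_pts, dtype=float)
    normals = np.asarray(normals, dtype=float)
    unit = normals / np.linalg.norm(normals, axis=1, keepdims=True)
    depths = np.asarray(tissue_depths_mm, dtype=float)[:, None]
    return skull_pts + depths * unit
```

Full computerized approaches then interpolate a continuous face surface through such points, which this sketch does not attempt.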

2.2. Artificial Intelligence and Forensic Human Identification

In the FA domain, AI methods can model and structure human experts’ knowledge, extract new knowledge from massive databases, and shorten ID times through the automation of certain tasks. They can also reduce human subjectivity and errors, and can help provide a greater scientific basis that favors the admissibility of expert evidence, given that courtroom forensic testimony is often criticized by defense lawyers as lacking a scientific basis. In this sense, the Daubert criteria [52] determine whether evidence is admissible in a court of law. An identification method fulfills the Daubert criteria when: (1) it is testable and peer reviewed; (2) it has known potential error rates; and (3) it is accepted by the forensic community. Finally, AI-based forensic ID approaches can help tackle the currently unmanageable number of open ID cases worldwide.
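The second Daubert criterion, known potential error rates, is in practice estimated on validation cases with known ground truth. A minimal sketch follows, assuming each comparison yields a binary decision (same individual or exclusion); the function and field names are illustrative only.

```python
import math
from dataclasses import dataclass


@dataclass
class ErrorRates:
    false_positive_rate: float  # share of different-person pairs wrongly declared a match
    false_negative_rate: float  # share of same-person pairs wrongly excluded


def error_rates(decisions, ground_truth) -> ErrorRates:
    """decisions and ground_truth are equal-length sequences of bools (True = same individual)."""
    if len(decisions) != len(ground_truth):
        raise ValueError("decisions and ground_truth must have the same length")
    fp = fn = positives = negatives = 0
    for decided_match, is_match in zip(decisions, ground_truth):
        if is_match:
            positives += 1
            fn += int(not decided_match)
        else:
            negatives += 1
            fp += int(decided_match)
    # A rate is undefined (NaN) when the validation set has no cases of that kind.
    fpr = fp / negatives if negatives else math.nan
    fnr = fn / positives if positives else math.nan
    return ErrorRates(fpr, fnr)
```

For forensic ID, the false positive rate (a wrong identification) is usually the more critical of the two.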

AI is a broad interdisciplinary field including ML, knowledge representation, automatic reasoning, natural language processing, robotics, and computer vision (CV), among others. Within this vast research field, the AI tools most commonly applied to FA-based ID problems belong to the subfields of CV, ML, and soft computing (SC). CV is the scientific discipline that deals with the automatic interpretation of images [53]. ML is the branch of AI that develops techniques allowing computers to learn directly from data [54]. The performance of ML-based AI systems (including DL approaches [55]) is reaching or even exceeding human level on an increasing number of complex tasks. SC (or computational intelligence) techniques [56] are widely used because they exploit the tolerance for imprecision and uncertainty to achieve tractability, robustness, and low computational cost when solving real-world problems. SC focuses on the design of hybrid intelligent systems that combine nature-inspired computational approaches to appropriately handle vague and incomplete data. Within SC, fuzzy sets and fuzzy systems are aimed at reasoning and knowledge representation under imprecision and uncertainty [57], while evolutionary algorithms and metaheuristics provide single- and multi-objective methods for optimization, search and ML, yielding high-quality solutions in a reasonable time [58].
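As an example of the metaheuristics mentioned above, a (1+1) evolution strategy is one of the simplest evolutionary algorithms: a single parent is mutated with Gaussian noise, the offspring replaces it if it is no worse, and the mutation step size is adapted with the 1/5 success rule. The sketch below minimizes a toy function; in an SFI setting the objective could instead be, for instance, a landmark misalignment error, which is an assumption here and not a method taken from the cited works.

```python
import math
import random


def one_plus_one_es(f, x0, sigma=1.0, iterations=2000, seed=0):
    """Minimize f with a (1+1) evolution strategy and 1/5-success-rule step control.

    On success sigma grows by exp(1/3); on failure it shrinks by exp(-1/12),
    so sigma is stationary when about 1 in 5 mutations succeeds.
    """
    rng = random.Random(seed)
    parent, f_parent = list(x0), f(x0)
    for _ in range(iterations):
        child = [xi + sigma * rng.gauss(0.0, 1.0) for xi in parent]
        f_child = f(child)
        if f_child <= f_parent:      # success: keep offspring, enlarge step
            parent, f_parent = child, f_child
            sigma *= math.exp(1.0 / 3.0)
        else:                        # failure: shrink step
            sigma *= math.exp(-1.0 / 12.0)
    return parent, f_parent


# Example: minimize the sphere function from (5, -3).
best, f_best = one_plus_one_es(lambda x: sum(v * v for v in x), [5.0, -3.0])
```

Population-based and multi-objective variants extend the same mutate-select loop.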

3. Forensic Human Identification through the Analysis of Biomedical Images

3.1. AI-Based Approaches for Biological Profile Estimation

The most common scenario in FA is to have direct access to the bones, which are extracted, cleaned and manipulated by the forensic anthropologist. However, radiological imaging, mainly CT scanning, is gaining popularity for BP estimation since it is a non-invasive approach (in the sense that the human expert does not need direct visual access to the bone) and it offers better possibilities for observation and metric calculations. Estimation of BP from X-ray images has been approached by three different scientific communities with three different purposes. In clinical medicine, biological age is important for diagnosing endocrinological diseases in adolescents or for optimally planning the timing of paediatric orthopaedic surgery. In legal medicine, in contrast, it is used to approximate unknown chronological age in criminal investigations or asylum-seeking procedures, where identification documents of children or adolescents are missing. Finally, physical and forensic anthropologists are interested in determining the sex, age, stature and ancestry of any human remains.

The largest international efforts have focused on developing accurate and objective automatic approaches for assessing whether living individuals have reached the threshold age that implies legal adulthood. Several computer-based proposals assist the assessment procedure by using radiological imaging methods. Usually, MRI or X-ray images of the hand/wrist are employed, and some AI-based proposals use SC and ML techniques such as fuzzy decision trees [59], random forests [60], or neural networks [61]. Recently, the proliferation of convolutional neural networks (ConvNets) has attracted interest in medical image analysis. DL techniques overcome many of the limitations of earlier approaches, which depend on manually designed image features, by automatically learning suitable features for image interpretation without direct human intervention during the training process.

Nevertheless, to our knowledge, no automatic approach addresses a complete forensic BP assessment covering different stages and the whole age range. Most published automatic methods operate only on X-ray scans of Caucasian subjects younger than 10 years, with a few approaches dealing with subjects younger than 18 years. The automation of age estimation in adults is much less technologically developed.
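The legal question of whether the threshold age has been reached is binary, while automatic methods usually output a point estimate with some error. One simple way to express the resulting uncertainty, assuming the true age is normally distributed around the estimate with a known standard deviation (a strong simplification), is the probability that the true age is at or above the threshold, $1 - \Phi((t - \hat{a})/\sigma)$:

```python
import math


def prob_age_at_least(threshold: float, estimate: float, sd: float) -> float:
    """P(true age >= threshold) = 1 - Phi((threshold - estimate) / sd).

    Assumes the true age ~ Normal(estimate, sd), all values in years; this
    Gaussian, unbiased error model is an illustrative assumption.
    """
    if sd <= 0:
        raise ValueError("sd must be positive")
    z = (threshold - estimate) / sd
    # 1 - Phi(z) written with the complementary error function.
    return 0.5 * math.erfc(z / math.sqrt(2.0))


# Example: an estimate of 20 years with a 1-year standard deviation.
p_adult = prob_age_at_least(18.0, 20.0, 1.0)
```

Whether such a probability is acceptable for a legal decision is a normative question outside the scope of the sketch.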

The following subsections focus on sex and age estimation. Table 1 and Table 2 give an overview of the main AI-based approaches employed in the literature for sex and age estimation, respectively. Some works (e.g., Pinto et al. [62], Abdullah et al. [63] or Pietka et al. [64,65,66]) probably fit the image/signal processing domain better than the AI research field. However, we consider them relevant and related at a computational level and, thus, they are also mentioned in the corresponding table or subsection. It is also important to note that other remarkable approaches (like [67]) are not covered in this paper, despite their use of advanced intelligent systems, since they do not actually use biomedical images (in [67], the author uses ConvNets to estimate the sex of individuals by detecting biometric traits in photographs of hands). Finally, previous studies have also applied AI-based approaches to BP estimation, like ML for sex estimation [68,69], but these employ measurements manually taken by forensic anthropologists rather than images, so they are not included in this survey either.

Table 1.

An overview of the literature on artificial intelligence (AI)-based approaches for sex estimation from skeletal data. References are ordered according to their publication date.

Table 2.

An overview of the literature on AI-based approaches for age estimation from skeletal data. References are ordered according to their publication date.

3.1.1. Sex Estimation from Skeletal Structures

Sex estimation is a fundamental pillar of the BP. If sex estimation is incorrect, the identification may be delayed. Current methods are mainly based on morphometric or morphological criteria [70,71]. The morphometric approach involves measurements of the hands, feet, and long bones of the upper and lower limbs. The morphological approach, on the other hand, is founded on sexual dimorphism, which is present to varying degrees in most bones of the human body. In particular, the most commonly used parts of the human body for sex estimation are the skull and the pelvis [62]. However, these methods are subject to human biases, require a high degree of expertise, are complex and time-consuming [72], and are not always applicable, mainly due to significant (chemical and/or physical) damage to the skeletal remains.
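Morphometric sex estimation, like the discriminant formulas recommended for the postcranial skeleton, typically reduces several measurements to a single discriminant score that is compared against a sectioning point. A minimal NumPy sketch of a two-class Fisher linear discriminant follows; the measurements are synthetic, and the learned weights are not any published formula.

```python
import numpy as np


def fit_fisher_lda(X: np.ndarray, y: np.ndarray):
    """Two-class Fisher linear discriminant.

    X: (n, p) measurements; y: (n,) labels in {0, 1} (e.g., 0 = female, 1 = male).
    Returns weights w and sectioning point c; predict class 1 when X @ w > c.
    """
    X0, X1 = X[y == 0], X[y == 1]
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    # Pooled within-class scatter matrix.
    Sw = np.cov(X0, rowvar=False) * (len(X0) - 1) + np.cov(X1, rowvar=False) * (len(X1) - 1)
    w = np.linalg.solve(Sw, mu1 - mu0)
    c = w @ (mu0 + mu1) / 2.0  # midpoint sectioning point (assumes equal priors)
    return w, c


# Synthetic example: three hypothetical measurements per individual.
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(0.0, 1.0, (200, 3)), rng.normal(3.0, 1.0, (200, 3))])
y = np.array([0] * 200 + [1] * 200)
w, c = fit_fisher_lda(X, y)
```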

AI techniques, especially DL approaches, offer a flexible and powerful solution for sex estimation from skeletal structures. Several researchers have applied different computational techniques, including DL, to sex estimation [62,63,73,74], mainly focusing on adults, since the adult skeleton is mature enough to show significant clues that help distinguish sex; such approaches may not be suitable for sex estimation in children [75]. Table 1 offers an overview of the main AI-based approaches employed in the literature for sex estimation.

Darmawan et al. [73] used a Hybrid Particle Swarm Artificial Neural Network technique for sex estimation. They used a dataset of left-hand X-ray images from an Asian population. Their dataset was small, and their results suggest that accuracy differs across age groups. Pinto et al. [62] introduced a methodology for the objective quantification of sexually dimorphic features in images of the skull and pelvis using the Wavelet Transform, a multi-scale mathematical tool that allows measuring shape variations hidden at different scales of resolution. This information can be used by experts to improve the accuracy of BP assessment and to describe geographic and temporal variations within and among populations. In [63], the authors presented an automated Haversian canal detection system based on bone histomorphology, which uses only bone fragments to estimate age and sex. The system has two parts. In the first, the authors manually analyzed the differences in the parameters of male and female bone samples. In the second, they applied microstructural image processing techniques to estimate sex. Bewes et al. [74] addressed the problem of sex estimation of skeletal remains by training a ConvNet with images of 900 skulls virtually reconstructed from hospital CT scans. When tested on previously unseen skull images, the network achieved 95% accuracy in sex estimation. In [77], the authors employed an ensemble of shallow multilayer perceptrons to perform sex estimation from six cranial measurements (cranial sagittal arc, cranial sagittal chord, apical sagittal arc, apical sagittal chord, occipital sagittal arc, and occipital sagittal chord). They tested their approach on 267 whole-skull CT scans (153 females and 114 males) from the Uighur ethnic group in northern China (females aged 18–88 and males aged 20–84). An accuracy above 94% was reported in all cases.
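To illustrate the multi-scale idea behind the wavelet-based quantification of Pinto et al. [62], the sketch below applies a multi-level orthonormal Haar transform to a 1D signal, such as a hypothetically sampled outline profile of a bone. The choice of the Haar wavelet and of a 1D signal are assumptions of this sketch; the wavelet family and representation used in [62] may differ. Each level separates the detail visible at one scale from a coarser approximation.

```python
import numpy as np


def haar_decompose(signal, levels: int):
    """Multi-level orthonormal Haar discrete wavelet transform.

    Returns [detail_1, ..., detail_levels, approximation], from finest to
    coarsest scale. The signal length must be divisible by 2**levels.
    """
    a = np.asarray(signal, dtype=float)
    coeffs = []
    for _ in range(levels):
        if len(a) % 2:
            raise ValueError("signal length must be divisible by 2**levels")
        even, odd = a[0::2], a[1::2]
        coeffs.append((even - odd) / np.sqrt(2.0))  # detail at this scale
        a = (even + odd) / np.sqrt(2.0)              # coarser approximation
    coeffs.append(a)
    return coeffs
```

Because the transform is orthonormal, the energy of each detail band can be read directly as the amount of shape variation present at that scale.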

Other authors, such as Kaloi and He [75], have focused on sex estimation in children. They proposed a technique called GDCNN (Gender Determination with ConvNets), in which left-hand radiographs of children across a wide age range (from 1 month to 18 years old) are examined to determine sex. To identify the area of attention (the part of the hand driving the decision), they used Class Activation Maps, finding that the lower part of the hand around the carpals (wrist) is more important than other regions for child sex estimation. They obtained an accuracy of 98%, identifying the sex of a child even using only half of the lower part of the hand, which is impressive considering the incompletely grown skeleton of children. In another contribution [78], the authors examined the effect of 3D descriptors on sex identification accuracy, and tested their multi-region representation on 100 head PM CT scans (54 male and 46 female subjects aged 5 to 85 years, from Southeast Asia). They obtained results comparable to the commonly reported sex prediction range (70–90%) of morphometric or morphological assessment by forensic anthropologists.
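Class Activation Maps, as used by Kaloi and He [75], are defined in their original formulation for networks that end with global average pooling followed by a linear classifier: the map for a class is the sum of the last convolutional feature maps weighted by that class's classifier weights. The NumPy sketch below assumes those arrays are already available; upsampling the map to the radiograph size is omitted, and the clipping and normalization are only for display.

```python
import numpy as np


def class_activation_map(feature_maps: np.ndarray, class_weights: np.ndarray) -> np.ndarray:
    """CAM(h, w) = sum_k class_weights[k] * feature_maps[k, h, w].

    feature_maps: (K, H, W) activations of the last convolutional layer.
    class_weights: (K,) weights of the final linear layer for the class of interest.
    Negative evidence is clipped and the map is scaled to [0, 1] for display.
    """
    cam = np.tensordot(class_weights, feature_maps, axes=(0, 0))  # -> (H, W)
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

High values in the resulting map mark the image regions, such as the carpal area, that contributed most to the predicted class.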

In conclusion, most works on sex estimation employ either morphological methods (which rely on the visual assessment of sexually dimorphic traits) or metric methods (based on the variability of male and female dimensions, mostly using statistical methods to derive models/equations). This field has not been extensively explored with AI approaches and, when it has, the proposals have generally focused on sex estimation in adult individuals.

3.1.2. Age Estimation from Skeletal Structures

In legal medicine, when identification documents of children or adolescents are missing, as may be the case in asylum-seeking procedures or criminal investigations, the estimation of physical maturation is used as an approximation of unknown chronological age. Some established radiological methods for estimating unknown age in children and adolescents are based on visual examination of bone ossification in X-ray images of the hand [26,79], although some proposals to automatically analyze the sternal end of the fourth rib in CTs have also been presented [80]. Ossification is best followed in the hand because of the large number of assessable bones visible in X-ray images of this anatomical region, and because aging does not progress simultaneously in all hand bones. Based on the level of ossification assessed by the radiologist, the most common methods for estimating the physical maturation of an individual are the Greulich-Pyle (GP) method [81] and the Tanner-Whitehouse (TW2) method [82,83]. The GP method is used by the majority of radiologists due to its simplicity and speed. It is based on comparing the X-ray image of the hand with an atlas of reference radiographs at various chronological ages: the patient’s radiograph is matched to the most similar image in the atlas. The TW2 method, instead, analyzes specific bones rather than the whole hand. In particular, it considers a set of specific regions of interest (ROIs), divided into epiphysis/metaphysis ROIs and carpal ROIs. Very recently, ConvNets have proven successful for bone age estimation, and AI-based applications have been published based on the GP method [84,85,86,87,88] and on the TW2 method [59,89,90,91]. Table 2 gives an overview of the main AI-based approaches employed in the literature for age estimation.
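The TW2-style pipeline described above (assign a maturity stage to each ROI, convert stages to scores, sum them, and map the total score to a bone age through a reference table) can be sketched as follows. All bone names, stage scores and score-to-age points are hypothetical placeholders; the real TW2 tables cover specific ROIs and are sex-specific.

```python
# HYPOTHETICAL stage-to-score tables for three ROIs (not the real TW2 values).
STAGE_SCORES = {
    "radius": {"D": 30, "E": 50, "F": 70},
    "ulna": {"D": 20, "E": 35, "F": 50},
    "capitate": {"D": 40, "E": 60, "F": 80},
}
# HYPOTHETICAL (total score, bone age in years) reference points, interpolated linearly.
SCORE_TO_AGE = [(90, 8.0), (145, 11.0), (200, 14.0)]


def tw2_style_bone_age(stages: dict[str, str]) -> float:
    """Sum the per-ROI stage scores and convert the total to a bone age."""
    total = sum(STAGE_SCORES[bone][stage] for bone, stage in stages.items())
    if total <= SCORE_TO_AGE[0][0]:
        return SCORE_TO_AGE[0][1]
    for (s0, a0), (s1, a1) in zip(SCORE_TO_AGE, SCORE_TO_AGE[1:]):
        if total <= s1:
            return a0 + (a1 - a0) * (total - s0) / (s1 - s0)
    return SCORE_TO_AGE[-1][1]  # clamp beyond the last reference point


# Example: radius at stage F, ulna and capitate at stage E.
age = tw2_style_bone_age({"radius": "F", "ulna": "E", "capitate": "E"})
```

In automated TW2 approaches, the stage assignment per ROI is the part a classifier learns; the scoring and table lookup stay deterministic.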

Larson et al. [85] used a ConvNet over a total of 14,036 clinical hand radiographs and their corresponding reports, obtained from two children’s hospitals, to train and validate the model. The RMS of a second test set composed of 1377 examinations from the publicly available GP Digital Hand Atlas [95,98] was compared with an existing automatic model, termed BoneXpert (BoneXpert automatically reconstructs the borders of 15 bones (including metacarpal and phalangeal bones, the distal radius, and the ulna) from radiographs of hands using a generative model (active appearance model), and then estimates the age from the shape, intensity, and texture scores derived from principal component analysis for each bone. However, it is important to remark that this software is used in clinical settings, to estimate skeleton maturity and detect abnormalities/diseases, and it is not used in forensic settings for age estimation.) [115]. The estimates of the model, the clinical report, and three human reviewers were within the 95% limits of agreement. RMS for the Digital Hand Atlas data set was 0.73 years, compared with 0.61 years of a previously reported model. Kim et al. [84] used a GP method-based DL technique to develop an automatic software system for bone age estimation. Using that software, they estimated the bone age from left-hand radiographs of 200 patients (3–17 years old) using first-rank bone age (only the software), computer-assisted bone age (two radiologists with software assistance), and GP atlas-assisted bone age (two radiologists with GP atlas assistance). The reference bone age was determined by the consensus of two experienced radiologists. The first-rank bone ages determined by the automatic software system showed a 69.5% of concordance rate and significant correlations with the reference bone age (r = 0.992; p < 0.001). 
The concordance rates also increased with the use of the automatic software for both reviewers, and the time the radiologists required to evaluate the X-ray images was reduced by 18.0% to 40.0%. Their results suggested that the automatic software system provided accurate bone age estimates and appeared to enhance efficiency by reducing evaluation times without compromising diagnostic accuracy. In [86], the authors created a DL system to automatically detect and segment the hand and wrist. They performed automated bone age assessment with a fine-tuned ConvNet over a set of 4278 female and 4047 male radiographs (chronological age of 5–18 years). Their model achieved 57.32% and 61.40% accuracy for the female and male cohorts, respectively, on held-out test images. Female test radiographs were assigned a bone age within 1 year in 90.39% of cases and within 2 years in 98.11% of cases; male test radiographs, within 1 year in 94.18% of cases and within 2 years in 99.0% of cases. Attention maps were also created to reveal which features the trained model uses to perform bone age assessment. Lee et al. [87] presented a DL approach to estimate age from a subject's hand X-ray images using a set of feature points on the hand. These points have to be defined to serve as a reference for cropping a region that is informative in terms of aging-induced morphological changes. Mutasa et al. [88], using a customized neural network architecture trained on 10,289 images of different skeletal ages, achieved test-set MAE values of 0.637 and 0.536 years. Their results support the hypothesis that purpose-built neural networks provide improved performance over networks derived from pre-trained imaging data sets.
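The agreement figures quoted above (RMS or RMSE, MAE, the percentage of cases within 1 or 2 years, and the 95% limits of agreement) are straightforward to compute from paired estimates. The following is a minimal NumPy sketch, assuming ages in years and the conventional Bland–Altman definition of the limits of agreement (mean difference ± 1.96 SD); the function name `bone_age_metrics` is hypothetical and not taken from any of the cited works.

```python
import numpy as np


def bone_age_metrics(predicted, reference, tolerances=(1.0, 2.0)):
    """Summarise agreement between predicted and reference bone ages (years).

    Returns MAE, RMSE, the fraction of cases within each tolerance, and the
    Bland-Altman 95% limits of agreement (mean difference +/- 1.96 SD).
    """
    predicted = np.asarray(predicted, dtype=float)
    reference = np.asarray(reference, dtype=float)
    diff = predicted - reference
    abs_err = np.abs(diff)
    metrics = {
        "mae": float(abs_err.mean()),
        "rmse": float(np.sqrt(np.mean(diff ** 2))),
    }
    for tol in tolerances:
        # Fraction of radiographs whose estimate lies within +/- tol years
        metrics[f"within_{tol:g}y"] = float(np.mean(abs_err <= tol))
    # Limits of agreement use the sample standard deviation of the differences
    bias = diff.mean()
    sd = diff.std(ddof=1)
    metrics["loa"] = (float(bias - 1.96 * sd), float(bias + 1.96 * sd))
    return metrics


# Toy example with three hypothetical cases
m = bone_age_metrics([10.0, 12.0, 15.0], [10.5, 11.0, 15.0], tolerances=(0.5, 1.0))
```

The `within_1y` and `within_2y` entries correspond to the "within 1 year" and "within 2 years" percentages reported for [86].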

Automated approaches reproducing the TW2 method can be mainly classified into those based on image processing and those based on knowledge-based techniques; a thorough review can be found in Mansourvar et al. [61]. Most image processing-based methods date back to the 2000s. These methods use hand radiographs of living individuals as the knowledge source for training classifiers. In [59], a computing-with-words-based classifier for skeletal maturity assessment is proposed. The proposal in [89] is based on a neural network and a fuzzy filter output, while in [90] a fuzzy inference system is used for age assessment. More recently, Spampinato et al. [91] proposed and tested several DL approaches. In particular, they employed several existing pre-trained ConvNets to assess skeletal bone age automatically, based on the TW2 method, using a dataset of about 1400 X-ray images. The results showed an average discrepancy between the manual and the automatic evaluation of about 0.8 years. They also designed a ConvNet trained from scratch, which proved to be the most effective and robust solution for assessing bone age across ethnic groups, age ranges, and genders. Furthermore, this was the first automated skeletal bone age assessment work tested on a public dataset.

The advent and proliferation of ConvNets applied to hand X-ray radiographs have facilitated new applications to age evaluation using other bones. The iliac crest apophysis is particularly well suited to forensic age diagnostics in the living, especially for determining the age thresholds of 14, 16, and 18 years. For this reason, Li et al. [113] developed a DL system to perform automatic bone age estimation based on 1875 clinical pelvic X-ray images, particularly for individuals between 10 and 25 years old. The system can handle samples even from individuals aged 19, 20, and 21 years. However, it may not be practical for determining ages over 22 years, because the mean ossification score changes little beyond that age. Compared with the existing cubic regression model, their ConvNet model achieves better average performance (MAE = 0.89 years and RMSE = 1.21 years). These errors are, nevertheless, somewhat higher than those of DL architectures based on left-hand X-ray images, whose MAE values range from 0.54 to 0.80 years [84,85,88,91]. Similarly, although their statistical analysis indicates a high positive correlation between estimated and real age (r = 0.916; p < 0.05), this correlation is lower than that reported for methods based on hand radiographs (r = 0.992; p < 0.001).

As an alternative to age estimation methods based on X-ray images, research on age estimation using MRI has gained considerable interest in recent years. The development of automatic MRI-based age estimation methods is motivated by the need to avoid exposure to ionizing radiation, to define new MRI-specific staging systems, and to reduce the subjective influence of the examiner [114]. Stern et al. [60] used random forests (RFs) to separately regress chronological age from intensity-based features extracted from 11 selected hand bones of adolescent subjects. A decision tree that excluded metacarpal and phalanx information for older subjects served as a heuristic fusion strategy for age estimation, which made the method ad hoc and dependent on parameter tuning. Stern and Urschler [109] explored the capability of RFs for information fusion by letting the forest decide internally from which bones to learn a subject's chronological age. In this way, they treated aging as a global developmental process without the need for heuristic fusion schemes, as in [60], or predefined nonlinear functions, as in [115]. Following the research trend of replacing handcrafted features in RFs with automatically learned ones, in [109] they also proposed a ConvNet architecture that combines age information from individual bones automatically by learning the features most relevant to age estimation directly. More recently, the authors of [114] presented a solution for automatic age estimation from 3D MRI scans of the hand. They evaluated ML methods, such as RFs and ConvNets, with different variants of the image information used as input for learning. Trained on a dataset of 328 MRI images, they compared the performance of the different input strategies and achieved state-of-the-art accuracy relative to previous MRI-based methods.
For estimating biological age, they obtained a mean absolute error of 0.37 ± 0.51 years for the age range of subjects ≤18 years, i.e., where bone ossification has not yet saturated. Finally, they adapted their best performing method to 2D images, and applied it to a dataset of X-ray images in order to validate their findings, showing that their method is in line with the state-of-the-art methods developed specifically for X-ray data.
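The idea in [109] of letting a single random forest decide which bones carry age information can be illustrated with scikit-learn's `RandomForestRegressor`. This is a sketch, not the authors' pipeline: the per-bone features below are synthetic sigmoid "ossification" curves with assumed onset ages (the helper `simulate_bone_features` is hypothetical), standing in for the intensity-based MRI features used in the original work.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

N_BONES = 11  # same number of hand bones as in [60]


def simulate_bone_features(ages, noise=0.05, seed=0):
    """Hypothetical stand-in for per-bone image features.

    Each bone follows a sigmoid 'ossification' curve centred on a different
    assumed onset age, so each bone is most informative in its own age range.
    """
    rng = np.random.default_rng(seed)
    onsets = np.linspace(12.0, 18.0, N_BONES)  # assumed onset ages (years)
    clean = 1.0 / (1.0 + np.exp(-(ages[:, None] - onsets[None, :])))
    return clean + noise * rng.standard_normal(clean.shape)


# Synthetic cohort (ages in years); not real data.
rng = np.random.default_rng(42)
train_age = rng.uniform(10.0, 20.0, size=400)
test_age = rng.uniform(10.0, 20.0, size=100)
X_train = simulate_bone_features(train_age, seed=1)
X_test = simulate_bone_features(test_age, seed=2)

# One forest receives all bones at once and chooses internally which
# bone features to split on, so no hand-crafted fusion rule is needed.
forest = RandomForestRegressor(n_estimators=200, random_state=0)
forest.fit(X_train, train_age)
pred_age = forest.predict(X_test)
mae_years = float(np.mean(np.abs(pred_age - test_age)))
importance_per_bone = forest.feature_importances_
```

Inspecting `importance_per_bone` then shows which (simulated) bones the forest relied on, which is the kind of implicit fusion that replaces a hand-tuned decision tree.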

In conclusion, bone age assessment is one of the most important topics in FA, especially for evaluating the biological maturity of children. It is usually performed by comparing an X-ray of the left hand and wrist with an atlas of reference bone images of known age. With the rise of DL, most works currently employ ConvNets to tackle the problem. Age estimation in adults remains a challenge, as does the development of approaches that integrate hybrid evidence to achieve more confident results (e.g., combining X-rays of the left hand and of the teeth with physical or psychological examinations to ensure that the subject has reached the legal age).

3.2. AI-Based Approaches for Traumatism and Pathology Analysis

In recent years, the success of ML in general, and DL in particular, in image classification has generated great interest in its application to medical image analysis in several relevant fields, including the detection of skin cancer [116], gastrointestinal lesions [117], diabetic retinopathy [118], mammographic lesions [119], and lung nodules [120]. Bone lesions, however, are a representative pathology that is of high interest but also rare [121]. To our knowledge, only a few works in orthopaedics apply DL to detect bone lesions or pathologies in X-ray images.

Olczak et al. [122] extracted 256,000 wrist, hand, and ankle radiographs from Danderyd's Hospital and labeled them according to four categories: fracture, laterality, body part, and exam view. They then evaluated the diagnostic accuracy of 5 openly available deep networks adapted to this task. All networks exhibited an accuracy of at least 90% when identifying laterality, body part, and exam view. The final accuracy for fractures was estimated at 83% for the best-performing network.

Chung et al. [123] evaluated the ability of AI techniques to detect and classify proximal humerus fractures using plain anteroposterior shoulder radiographs. The evaluated dataset was composed of 1891 images (one image per person) of normal shoulders (n = 515) and of 4 proximal humerus fracture types (greater tuberosity, 346; surgical neck, 514; 3-part, 269; 4-part, 247), classified by 3 specialists. They trained a ConvNet after augmenting the training dataset. The performance of the ConvNet in detecting and classifying proximal humerus fractures was measured by top-1 accuracy and compared with that of humans (28 general physicians, 11 general orthopedists, and 19 orthopedists specialized in the shoulder). Their results showed 96% accuracy for distinguishing normal shoulders from proximal humerus fractures, and 65–86% accuracy for classifying the fracture type.

In Gupta et al. [124], the authors address the problem of classifying bone lesions from X-ray images by increasing the small number of positive samples in the training set. They propose a generative data augmentation approach based on a cycle-consistent generative adversarial network that synthesizes bone lesions on images without pathology. They pose the generative task as an image-patch translation problem that they optimize specifically for distinct bones (humerus, tibia, and femur). In experimental results, they confirm that the described method mitigates the class imbalance problem in the binary classification task of bone lesion detection. They show that the augmented training sets enable the training of superior classifiers achieving better performance on a held-out test set. Additionally, they demonstrate the feasibility of transfer learning and apply a generative model that was trained on one body part to another.
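For reference, the standard CycleGAN objective on which cycle-consistent augmentation methods build is shown below. Here $X$ denotes image patches without pathology and $Y$ patches with lesions (our notation), $G: X \to Y$ synthesizes lesions, $F: Y \to X$ removes them, and $D_X$, $D_Y$ are the two discriminators; whether [124] modifies or extends these terms is not stated in this summary.

$$
\mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y) = \mathbb{E}_{y \sim p_Y}\left[\log D_Y(y)\right] + \mathbb{E}_{x \sim p_X}\left[\log\left(1 - D_Y(G(x))\right)\right]
$$

$$
\mathcal{L}_{\mathrm{cyc}}(G, F) = \mathbb{E}_{x \sim p_X}\left[\lVert F(G(x)) - x \rVert_1\right] + \mathbb{E}_{y \sim p_Y}\left[\lVert G(F(y)) - y \rVert_1\right]
$$

$$
G^*, F^* = \arg\min_{G, F}\, \max_{D_X, D_Y}\; \mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\mathrm{GAN}}(F, D_X, Y, X) + \lambda\, \mathcal{L}_{\mathrm{cyc}}(G, F)
$$

The cycle term is what allows training on unpaired healthy and lesion patches: a lesion synthesized on a healthy patch must be removable again, which is intended to keep $G$ from altering the surrounding anatomy.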

3.3. AI-Based Approaches for Comparative Radiography

Methodological approaches for CR-based identification are divided into three groups according to the dimensionality of the employed data: 2D-2D (radiograph-radiograph), 2D-3D (radiograph-CT or 3D surface image), and 3D-3D (CT-CT or 3D model). In general, the higher the dimensionality, the greater the accuracy and robustness of the methods. Within each group, methods can be further classified into manual and semi-automatic approaches. In this section, we focus on semi-automatic methods, in which some tasks of the identification process are automated by means of AI techniques.

3.3.1. 2D-2D Approaches for Comparative Radiography

The comparison of AM and PM radiographs is the most widespread approach in the forensic literature. To highlight the applicability of CR-based forensic ID, it is important to remark that X-ray imaging is the most commonly employed medical imaging modality [125]. In particular, chest X-rays are the most commonly performed radiology examination worldwide [126], because they produce images of the heart, lungs, airways, blood vessels, spine, and chest [127], and because of their diagnostic and treatment potential [126,128].

Several works semi-automatically compare different skeletal structures between AM and PM radiographs, including the frontal sinus [129,130], the cranial vault [131], and teeth [132,133,134]. These methods compare the silhouettes of skeletal structures in radiographs using geometric morphometric techniques: elliptical Fourier analysis [135] is used to compare AM and PM silhouettes and obtain a shortlist of the most probable PM matches for each AM case. All these methods require the segmentation of the skeletal structures in the AM and PM radiographs. In this regard, a few computational approaches either automate the segmentation with ad hoc rule-based methods, such as the automated dental identification system (ADIS) [136,137] for teeth comparison [138] or [139] for frontal sinus segmentation, or avoid it by directly comparing intensities, such as the computer-assisted decedent identification (CADI) system [138] for vertebrae comparison. However, the latter approach is sensitive to the time elapsed between the AM and PM radiographs and the consequent change in the intensities of the skeletal structures. CADI reduces this impact by manually selecting a region of interest around each vertebra, equalizing the pixels within these areas (e.g., with a histogram equalization filter), and finally comparing AM and PM vertebrae using the Jaccard similarity metric.
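Elliptical Fourier analysis represents a closed outline by the Fourier coefficients of its coordinates x(t) and y(t), with t the arc length along the contour. The sketch below implements the classic Kuhl–Giardina coefficient formulas in NumPy and ranks PM outlines by the Euclidean distance between raw descriptors. It omits the usual normalization for size, orientation, and starting point, so it assumes (our assumption) that AM and PM outlines are already comparably scaled and oriented; the helper names `efd_distance` and `shortlist` are hypothetical and not taken from the cited works.

```python
import numpy as np


def elliptical_fourier_descriptors(contour, order=10):
    """Kuhl-Giardina elliptical Fourier coefficients of a closed 2D contour.

    contour: (N, 2) array of ordered boundary points (the outline is closed
    automatically). Returns an (order, 4) array with rows (a_n, b_n, c_n, d_n).
    """
    pts = np.asarray(contour, dtype=float)
    closed = np.vstack([pts, pts[:1]])               # close the outline
    seg = np.diff(closed, axis=0)                    # segment vectors (dx, dy)
    seg_len = np.hypot(seg[:, 0], seg[:, 1])         # segment lengths (dt)
    keep = seg_len > 0                               # ignore repeated points
    seg, seg_len = seg[keep], seg_len[keep]
    t = np.concatenate([[0.0], np.cumsum(seg_len)])  # arc-length parameter
    T = t[-1]                                        # perimeter
    n = np.arange(1, order + 1)[:, None]             # harmonic numbers (column)
    phi = 2.0 * np.pi * n * t[None, :] / T           # shape (order, segments + 1)
    d_cos = np.cos(phi[:, 1:]) - np.cos(phi[:, :-1])
    d_sin = np.sin(phi[:, 1:]) - np.sin(phi[:, :-1])
    scale = T / (2.0 * np.pi ** 2 * n[:, 0] ** 2)
    dx_dt, dy_dt = seg[:, 0] / seg_len, seg[:, 1] / seg_len
    a_n = scale * (d_cos @ dx_dt)
    b_n = scale * (d_sin @ dx_dt)
    c_n = scale * (d_cos @ dy_dt)
    d_n = scale * (d_sin @ dy_dt)
    return np.column_stack([a_n, b_n, c_n, d_n])


def efd_distance(contour_a, contour_b, order=10):
    """Distance between descriptor sets (lower means more similar shapes)."""
    fa = elliptical_fourier_descriptors(contour_a, order)
    fb = elliptical_fourier_descriptors(contour_b, order)
    return float(np.linalg.norm(fa - fb))


def shortlist(am_contour, pm_contours, k=3, order=10):
    """Indices of the k PM outlines closest to the AM outline."""
    scores = [efd_distance(am_contour, pm, order) for pm in pm_contours]
    return list(np.argsort(scores)[:k])


theta = np.linspace(0.0, 2.0 * np.pi, 200, endpoint=False)
circle = np.column_stack([5.0 * np.cos(theta), 5.0 * np.sin(theta)])
ellipse = np.column_stack([8.0 * np.cos(theta), 3.0 * np.sin(theta)])
coeffs = elliptical_fourier_descriptors(circle, order=5)
# Hypothetical AM outline (a shifted circle) against two PM candidates
ranking = shortlist(circle + 1.0, [ellipse, circle], k=2)
```

Because the constant (DC) terms are not included, the descriptors are unaffected by translation, which is why the shifted AM circle still matches the PM circle best.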

3.3.2. 3D-2D Approaches for Comparative Radiography

In the manual approach, the comparison methodology requires generating simulated PM radiographs from a CT that replicate the AM radiographs [35,140,141], instead of acquiring real radiographs. Obtaining these simulated radiographs is a time-consuming, error-prone, and subjective task.

However, only a few automatic approaches have been proposed for the comparison of AM radiographs and PM 3D images [33,142,143]. These approaches use 3D laser range scanners to acquire 3D surface models of the skeletal structures: clavicles in [33,143] and patellae in [142]. They obtain a set of 2D projected images from these PM 3D surface models by rotating the 3D model. These 2D projections contain only the silhouette of the target skeletal structure. Finally, this set of PM projections is automatically compared with the manually segmented silhouette of the skeletal structure in the AM radiographs using elliptical Fourier analysis descriptors. The limitations of these methods lie in the predefined set of 2D projections and in the assumption that the parameters that modulate the perspective distortion are known.

Alternatively, Gómez et al. [144] developed an evolutionary image registration method that successfully solved this image comparison problem (see Figure 5). The AM data are clinical radiographs (2D images) of a particular bone that have to be compared against the actual PM bone (a 3D model). The promising results led them to recently design a methodology to fully automate the ID process by CR (see Figure 3). This method was tested with frontal sinuses, clavicles, and patellae, with very good performance. However, it showed the following drawbacks: (1) none of the considered projection models reproduced the perspective distortion of radiographs in which the X-ray generator was not perpendicular to the image receptor (e.g., in the Waters' projection of radiographs of frontal sinuses [145]); (2) the limited robustness of the evolutionary algorithm employed, Differential Evolution (DE), especially with clavicles and patellae, which in some runs led to poor superimpositions due to the stochastic nature of DE and the highly multimodal search space; and (3) the long time required to obtain a superimposition with DE (1800 s on average). This long runtime is due both to the high computational cost of each evaluation (0.25 s on average), which reveals the computationally expensive nature of the CR optimization problem, and to the high number of evaluations the optimizer requires to converge (on the order of 7200 evaluations, given these averages). They also tackled the problem of segmenting multiple organs (heart, lungs, and clavicles) in chest X-ray images using ConvNets [146], proposing several new deep architectures for this complex problem. Their best-performing proposal outperformed the competing methods on clavicles, with 0.884, 0.939, and 18.022 for the Jaccard Index, Dice Similarity Coefficient, and Hausdorff Distance metrics, respectively.
This performance is in line with the ability of humans to accurately delimit the contour of clavicles in X-ray images. The same authors also presented the first system for the automatic segmentation of frontal sinuses in skull radiographs [147] (see Figure 6), as well as the first complete, though preliminary, computer-aided CR-based ID support system using frontal sinuses [148]. This system, which integrates automatic segmentation and registration, ranks the candidates such that the true positive ID case is ranked first or second 70% of the time, and it can filter out 50% of the candidates while always keeping the true positive ID case within the sample.
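The three segmentation metrics quoted above can be computed from binary masks as in the following sketch, which uses NumPy and SciPy's `scipy.spatial.distance.directed_hausdorff`. The Hausdorff distance is taken in pixel units over all foreground pixels, an assumption, since contour pixels are also commonly used; masks are assumed to be non-empty. For a single pair of masks, Dice and Jaccard are linked by D = 2J/(1 + J), so a Dice coefficient of 0.939 corresponds to a Jaccard index of about 0.885; averages over many images need not satisfy this identity exactly.

```python
import numpy as np
from scipy.spatial.distance import directed_hausdorff


def segmentation_metrics(pred_mask, true_mask):
    """Jaccard index, Dice coefficient and symmetric Hausdorff distance (pixels)
    between two non-empty binary masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    inter = np.logical_and(pred, true).sum()
    union = np.logical_or(pred, true).sum()
    jaccard = inter / union
    dice = 2.0 * inter / (pred.sum() + true.sum())
    # Foreground pixel coordinates as float point sets
    p_pts = np.argwhere(pred).astype(float)
    t_pts = np.argwhere(true).astype(float)
    hausdorff = max(directed_hausdorff(p_pts, t_pts)[0],
                    directed_hausdorff(t_pts, p_pts)[0])
    return float(jaccard), float(dice), float(hausdorff)


def dice_from_jaccard(j):
    return 2.0 * j / (1.0 + j)


def jaccard_from_dice(d):
    return d / (2.0 - d)


# Two overlapping 4x4 squares on a 10x10 grid
pred = np.zeros((10, 10), dtype=bool)
pred[2:6, 2:6] = True
truth = np.zeros((10, 10), dtype=bool)
truth[3:7, 3:7] = True
jac, dice, hd = segmentation_metrics(pred, truth)
```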

Figure 5.

Schematic representation of the automatic comparison of anatomical structures in radiographic materials, using the frontal sinuses as an example. Once the AM (X-ray) and PM (CT) data are segmented (either automatically or manually), they are superimposed using an evolutionary image registration algorithm. Three main interconnected blocks are represented: (i) the transformation used to obtain a projection of the 3D model; in this example, the geometric transformation includes translation (tx, ty, and tz), rotation (rx, ry, and rz), and source-to-image distance (SID); (ii) the similarity metric that compares the PM projection with the AM segmentation, taking into account an occlusion region (i.e., where the frontal sinuses are occluded or not clearly defined); and (iii) the optimization process that estimates the nine parameters of the transformation, whose values are constrained only by the context and by expert knowledge of the X-ray acquisition protocol.

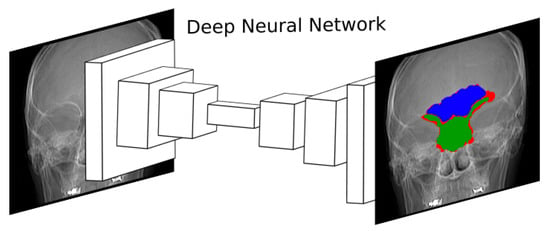

Figure 6.

Diagram showing the approach followed in [147]. A deep ConvNet is trained to segment frontal sinuses in skull radiographs. A real outcome of the network is displayed on the right, where the frontal sinus, the occlusion region, and the segmentation error are shown in blue, green, and red, respectively. The frontal sinus is an anatomical region with extremely diffuse boundaries and complex morphology. In fact, its lower limit overlaps other anatomical structures, which makes the segmentation process harder.

3.3.3. 3D-3D Approaches for Comparative Radiography

The CT-CT comparison approach is the most reliable one, since the 3D shapes can be compared directly [149,150,151,152]. A few computerized approaches have been proposed for comparing AM and PM 3D data of different skeletal structures, such as teeth [153,154,155], frontal sinuses [156], or lumbar vertebrae [157]. Applying these methods requires segmenting the 3D skeletal structures in both the AM and PM CTs (although the PM data could also be acquired with a 3D laser range scanner), registering them automatically, and measuring the quality of the match. However, 3D AM data (such as CT) are scarce compared with AM radiographs, which significantly reduces the applicability of these methods.
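For the registration step, when corresponding points are available (for example, homologous landmarks on the AM and PM surfaces), the least-squares rigid transformation has a closed-form solution via SVD, known as the Kabsch algorithm. The sketch below is a generic NumPy implementation, with the RMS residual used as a simple, assumed match-quality score; it is not the method of [153,154,155,156,157]. Iterative closest point (ICP) algorithms repeat this step after re-estimating correspondences by nearest-neighbour search when no correspondences are known.

```python
import numpy as np


def kabsch(source, target):
    """Least-squares rigid transform (R, t) such that target ~ source @ R.T + t.

    source, target: (N, 3) arrays of corresponding points.
    """
    src = np.asarray(source, dtype=float)
    tgt = np.asarray(target, dtype=float)
    mu_s, mu_t = src.mean(axis=0), tgt.mean(axis=0)
    H = (src - mu_s).T @ (tgt - mu_t)        # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Guard against reflections so that det(R) = +1
    d = 1.0 if np.linalg.det(Vt.T @ U.T) > 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_t - R @ mu_s
    return R, t


def registration_rmse(source, target, R, t):
    """RMS distance between transformed source points and target points."""
    residual = np.asarray(source) @ R.T + t - np.asarray(target)
    return float(np.sqrt(np.mean(np.sum(residual ** 2, axis=1))))


# Synthetic check: hypothetical PM landmarks rotated 20 degrees about z and shifted
rng = np.random.default_rng(7)
pm_points = rng.uniform(-30.0, 30.0, size=(25, 3))
angle = np.deg2rad(20.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([5.0, -2.0, 10.0])
am_points = pm_points @ R_true.T + t_true
R_est, t_est = kabsch(pm_points, am_points)
rmse = registration_rmse(pm_points, am_points, R_est, t_est)
```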

In conclusion, recent years have seen a breakthrough in automating forensic ID using CR. However, automation that integrates all the available information about a forensic case, using multiple superimposition procedures (with the same or different dimensionality) of multiple skeletal structures, is still a future goal. The automatic segmentation of certain structures, such as clavicles and frontal sinuses in radiographs, and the automatic superimposition of radiographs and 3D models have already been addressed. However, there are still no automatic solutions for analyzing and segmenting any type of skeletal structure in any type of image (radiographs or CTs), nor tools for the automatic location and classification of morphological patterns in radiographs and CTs.

3.3.4. Virtopsy

Virtopsy (or virtual autopsy) [158] is a minimally invasive procedure for performing an autopsy that employs radiological imaging methods routinely used in clinical medicine, such as CT and MRI, to determine the cause of death. Virtopsy is a multidisciplinary technology that combines forensic medicine and pathology, roentgenology, computer graphics, biomechanics, and physics.

Some contributions present preliminary studies on the possible application of ML to virtopsy [159,160,161], highlighting the importance and suitability of interactive ML methods [162], while others present simple image processing techniques to detect forensically relevant information in the images (e.g., the detection of metal objects embedded in a cadaver [163]). In contrast, the authors of [164] employ DL methods to detect and segment hemopericardium (i.e., blood in the pericardial sac of the heart) in post-mortem CTs to better identify cases with a possibly non-natural cause of death (the presence of hemopericardium often leads to the diagnosis of pericardial tamponade as the cause of death). Their best-performing deep network correctly classified all cases of hemopericardium in the validation images with only a few false positives, while most segmentation networks tended to underestimate the amount of blood in the pericardium. In addition, in [165], a semi-supervised DL pipeline is employed to localize and classify orthopedic implants in the lower extremities (specifically the femur) on a large database of whole-body post-mortem CT scans. For the localization component, Dice scores of 0.99, 0.96, and 0.98 and mean absolute errors of 3.2, 7.1, and 4.2 mm were obtained in the axial, coronal, and sagittal views, respectively. For the classification component, test cases were labeled with an accuracy above 97% (the recall for two of the classes was 1.00, but it fell to 0.82 and 0.65 for the other two). Despite these examples, we conclude that this field remains largely unexplored.

3.4. AI-Based Approaches for Craniofacial Superimposition

The following subsections review the main existing computer-aided CFS approaches for each of the three commonly identified CFS stages (see Figure 4), highlighting the automatic methods developed over the last 15 years. Additionally, Table 3 summarizes the main AI-based contributions to the CFS problem for each particular stage.

Table 3.

An overview of the literature on AI-based approaches to the CFS problem. The stage of the process, i.e., Acquisition and Processing of the Materials (APM), skull-face overlay (SFO) and SFO assessment and decision making (ADM), that is addressed using an AI method is indicated with a ✔.

3.4.1. Acquisition and Processing of the Materials

The computerized systems developed for the first stage of CFS are related to face enhancement and skull modelling procedures. Skull 2D images, live skull images (video superimposition), and, more frequently nowadays, skull 3D models can be used in CFS. With scanning devices such as laser range scanners, the forensic anthropologist can obtain a skull 3D model with sub-millimetre precision in a reasonable time [187]. Using a 3D model instead of a 2D image is recommended, because it is a more accurate representation of the real skull.

Since the first proposal to use a skull 3D model to tackle the CFS problem [166], 3D image reconstruction software has been necessary to build the 3D model by aligning the acquired views in a common coordinate frame. This image registration process consists of finding the best 3D rigid transformation (composed of a rotation and a translation) to align the acquired views of the object. In this sense, a method based on evolutionary algorithms was proposed [170,171] for the automatic alignment of skull range images. Different views of the skull to be modeled were acquired using a laser range scanner, and a two-step pair-wise range image registration technique was successfully applied to these images. The method can reconstruct the skull 3D model even without a turntable and when the views are wrongly scanned. Today's technology, i.e., current 3D acquisition devices and their software, automatically solves the alignment of the different acquisition views without the need for turntables or any additional device.

Finally, a different but related task was addressed in [175], where the authors proposed a new algorithm for the 3D open-model mesh simplification problem from an evolutionary multi-objective point of view. An open model is a surface with open ends. The problem consists of placing a given number of points so that the simplified mesh approximates the initial surface as accurately as possible. The algorithm considers two conflicting objectives: the accuracy and the simplicity of the simplified 3D mesh.
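In a multi-objective formulation such as the one in [175], the output is a set of trade-off solutions rather than a single mesh. The dominance test that defines this set can be sketched as follows, assuming both objectives are minimized (approximation error, and number of retained points as a proxy for simplicity); the candidate values are made up for illustration. This is only the Pareto filter, not the evolutionary algorithm itself.

```python
import numpy as np


def non_dominated(objectives):
    """Indices of Pareto-optimal rows, with every objective minimised.

    Row j dominates row i if it is no worse in all objectives and strictly
    better in at least one.
    """
    F = np.asarray(objectives, dtype=float)
    front = []
    for i, fi in enumerate(F):
        dominated = np.any(np.all(F <= fi, axis=1) & np.any(F < fi, axis=1))
        if not dominated:
            front.append(i)
    return front


# Hypothetical candidate meshes: (approximation error, number of points)
candidates = [[0.10, 1000], [0.30, 400], [0.50, 450], [0.90, 100]]
front = non_dominated(candidates)
```

The third candidate is discarded because the second one is both more accurate and simpler; the remaining three are genuine trade-offs among which an expert could choose.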

3.4.2. Skull-Face Overlay

Several proposals have been presented in the literature to perform the SFO task. The most natural way to deal with the SFO problem is to replicate the original scenario of the AM photograph, in which the living person was in a given pose somewhere inside the camera's field of view. For computer-aided automatic methods, the main goal of the SFO process is to replicate on the skull the pose and the remaining acquisition parameters of a given facial photograph. This is similar to the classic CV problem of recovering the pose of a 3D object from a photograph based on some reference points. Technically, we are given $n$ points with 3D positions $\mathbf{x}_1, \ldots, \mathbf{x}_n$ and target 2D positions $\mathbf{y}_1, \ldots, \mathbf{y}_n$. The goal is to find a projection $P$ such that every $P(\mathbf{x}_i)$ is as close as possible to $\mathbf{y}_i$.

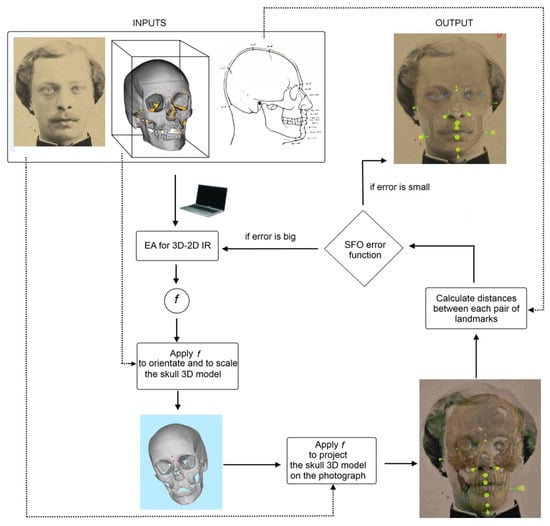

The first computer-aided approach for the SFO task was proposed by Nickerson et al. in 1991 [166]. Landmarks on the 3D skull mesh were selected to calculate, using real-coded genetic algorithms, the affine and perspective transformation (rotation, scaling, and translation) that maps them onto the landmarks of the 2D face image. Another automatic approach was presented in [169], which considered two different neural networks to implement an objective assessment of the symmetry between two nearly frontal 2D images (skull and facial image). In the last decade, several works have tackled SFO automation using evolutionary algorithms and fuzzy sets [173,174,177] (see Figure 7). These approaches overlay a 3D model of the skull on a facial photograph by minimizing the distance between pairs of landmarks while handling the imprecision introduced by facial landmark location [40,188]. The minimization process involves searching for the projection of the skull model that leads to the best possible matching between corresponding landmarks. More recently, Valsecchi et al. [184] proposed a novel automatic SFO algorithm called POSEST-SFO. Unlike prior approaches, POSEST-SFO solves a system of polynomial equations relating the distances between the points before and after the projection. It was tested on a synthetic data set composed of 9 CBCTs from 9 different subjects and 60 simulated photographs, i.e., 540 SFOs. The method is extremely fast, requiring only 78 milliseconds to perform a single SFO automatically. In the most realistic scenario, considering soft tissue thickness (the mean distance was employed) and ±5 pixels of error in the facial landmarks, the mean back-projection error was 2.0 mm and 3.2 mm in frontal and lateral photographs, respectively.
However, unlike previous publications [177,179], this algorithm does not address the sources of uncertainty, i.e., the articulation of the mandible, the estimation of the soft tissue thickness, and the intra- and inter-observer error in landmark location.
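To illustrate the landmark-based formulation (a projection P that brings projected skull landmarks as close as possible to the facial landmarks), the sketch below searches six pose parameters with SciPy's `differential_evolution`. It is a deliberately simplified stand-in, not the method of [173,174,177] nor POSEST-SFO: landmarks are crisp rather than fuzzy, soft tissue is ignored, a pinhole camera with a known focal length is assumed (`FOCAL_PX` is hypothetical), and the search bounds are illustrative.

```python
import numpy as np
from scipy.optimize import differential_evolution
from scipy.spatial.transform import Rotation

FOCAL_PX = 1000.0  # hypothetical: focal length assumed known, in pixels


def project(points_3d, params, focal=FOCAL_PX):
    """Rigidly transform 3D points, then apply a pinhole projection.

    params[:3]: Euler angles (x, y, z) in degrees; params[3:6]: translation in mm.
    """
    R = Rotation.from_euler("xyz", params[:3], degrees=True).as_matrix()
    cam = points_3d @ R.T + np.asarray(params[3:6])
    return focal * cam[:, :2] / cam[:, 2:3]


def mean_sq_error(params, skull_pts, face_pts):
    """Fitness: mean squared distance (px^2) between projected skull
    landmarks and the corresponding facial landmarks."""
    diff = project(skull_pts, params) - face_pts
    return float(np.mean(np.sum(diff ** 2, axis=1)))


def skull_face_overlay(skull_pts, face_pts, seed=0):
    """Search the 6 pose parameters with differential evolution.

    Returns the best parameters and the RMS landmark error in pixels.
    """
    bounds = [(-30.0, 30.0)] * 3 + [(-50.0, 50.0), (-50.0, 50.0), (400.0, 800.0)]
    result = differential_evolution(
        mean_sq_error, bounds, args=(skull_pts, face_pts),
        seed=seed, maxiter=300, tol=1e-6,
    )
    return result.x, float(np.sqrt(result.fun))


# Synthetic check: hypothetical skull landmarks (mm) and their exact projection
rng = np.random.default_rng(3)
skull = rng.uniform(-60.0, 60.0, size=(10, 3))
true_params = np.array([10.0, -15.0, 5.0, 12.0, -8.0, 600.0])
face = project(skull, true_params)
best_params, rms_px = skull_face_overlay(skull, face)
```

With real photographs, the residual would not reach zero: landmark placement error and soft tissue thickness are exactly the sources of uncertainty that the fuzzy approaches model explicitly.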

Figure 7.

Diagram showing the approach followed in [173,174,177]. An evolutionary algorithm (EA) iteratively guides the search for the transformation parameters of an image registration (IR) process, with the goal of obtaining the best possible skull-face overlay (SFO) for craniofacial identification. More specifically, the forensic expert marks fuzzy landmarks on the facial image and then marks crisp (precise) landmarks on the skull (a 3D model that can be rotated and manipulated easily and precisely). These crisp landmarks are fuzzified taking into account population studies of facial soft tissue depth. Finally, the 3D fuzzy landmarks are projected onto the 2D image, overlap measurements between the corresponding fuzzy sets are calculated, and all this information is integrated into the EA fitness function.

3.4.3. Skull-Face Overlay Assessment and Decision Making

The forensic expert has to determine the degree of support for the hypothesis that the skull and the facial photographs belong to the same person. This decision is made by analyzing the previously obtained SFOs and is influenced by several criteria for assessing the skull-face anatomical correspondence. Different authors have defined and classified these criteria into four families [168,189,190]: (1) analysis of the consistency of the bony and facial outlines/morphological curves; (2) assessment of anatomical consistency by positional relationship; (3) line location and comparison to analyze anatomical consistency; and (4) evaluation of the consistency of the soft tissue thickness between corresponding cranial and facial landmarks. This is a subjective process that relies on the forensic expert's skills and on the quantity and quality of the materials used.

Only a few works tackle the automation of the analysis of craniofacial correspondences within the framework of CFS ID [168,191,192]. Most of this literature was published more than 20 years ago, and the works are very basic and limited. In addition, they use neither skull 3D models nor computer techniques to perform the SFO. Besides, the employed shape analysis technique requires manual interaction. They provide a value that does not take into account the actual spatial relation between skull and face, since the employed methods are invariant to translation, scale, and rotation. Finally, these systems implement only a single group of the criteria for assessing craniofacial correspondence.

Recently, in [178,182,183], the authors presented a hierarchical system to evaluate the anatomical consistency of morphological criteria between the face and the skull, supporting the forensic expert's decision-making process. From a series of SFOs of the same individual, this computer-assisted decision support system (CADSS) provides the forensic expert with a quantitative output value indicative of the morphological matching consistency of a given CFS problem. This value is obtained by combining several skull-face anatomical criteria through fuzzy aggregation functions, evaluating the anatomical correspondence between the skull and the face at three different levels: criterion evaluation (level 3), SFO evaluation (level 2), and CFS evaluation (level 1). The sources of uncertainty and degrees of confidence involved in the process (bone preservation and 3D model quality, image quality, discriminatory power of each individual criterion, BP influence) were modeled and considered at each level of the system [183]. To compare the performance of real forensic practitioners with this CFS CADSS, the authors applied it to the same experimental dataset used in [193]. In that study, 26 participants from 17 different institutions were asked to deal with 14 identification scenarios, some of them involving the comparison of multiple candidates and unknown skulls, for a total of 60 SFO problems. The mean results of the 26 experts, the results of the three best experts, and the outcomes of the automatic CADSS are shown in Table 4. The CADSS can be considered the first automatic tool able to classify pairs of unknown faces and skulls as positive or negative cases with an accuracy similar to that of the best-performing forensic experts [182].
However, one of the conclusions reached was that the identification results of the CADSS could be strongly influenced by the poor quality of some SFOs.
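The three-level aggregation described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the CADSS of [178,182,183]. Each criterion evaluation is weighted by the product of a hypothetical discriminatory-power weight and a hypothetical confidence value, which stand in for bone, 3D model and image quality. Level 2 uses a weighted arithmetic mean, and level 1 uses an ordered weighted averaging (OWA) operator. The authors' actual fuzzy aggregation functions and weights are not reproduced here.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class CriterionEval:
    score: float       # level 3: degree of skull-face consistency for one criterion, in [0, 1]
    power: float       # hypothetical discriminatory-power weight of the criterion
    confidence: float  # hypothetical confidence derived from bone/model/image quality, in [0, 1]


def weighted_mean(values: Sequence[float], weights: Sequence[float]) -> float:
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    return sum(v * w for v, w in zip(values, weights)) / total


def owa(values: Sequence[float], weights: Sequence[float]) -> float:
    """Ordered weighted averaging: weights apply to the values sorted in descending order."""
    if len(values) != len(weights):
        raise ValueError("one OWA weight per value is required")
    return weighted_mean(sorted(values, reverse=True), weights)


def sfo_score(criteria: Sequence[CriterionEval]) -> float:
    """Level 2: aggregate the criterion evaluations of a single SFO."""
    return weighted_mean([c.score for c in criteria],
                         [c.power * c.confidence for c in criteria])


def cfs_score(sfos: Sequence[Sequence[CriterionEval]], owa_weights: Sequence[float]) -> float:
    """Level 1: aggregate the SFO scores of one skull-face candidate pair."""
    return owa([sfo_score(s) for s in sfos], owa_weights)


# Two hypothetical SFOs of the same skull-face pair.
sfo_a = [CriterionEval(0.9, 1.0, 1.0), CriterionEval(0.7, 0.5, 0.8)]
sfo_b = [CriterionEval(0.4, 1.0, 0.5), CriterionEval(0.6, 0.5, 1.0)]
print(round(cfs_score([sfo_a, sfo_b], [0.7, 0.3]), 3))  # 0.74
```

An OWA weight vector that favors the best-ranked SFO is more lenient than one that favors the worst. In this sketch that choice is a free design parameter, not something taken from the source.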

Table 4.

The table shows the mean value of the results of the 26 experts, the results of the three best experts, and the outcome of the CADSS presented in [182]. Detailed performance indicators are shown, such as the percentage of correct decisions, the number of positive and negative decisions given in each case, and the corresponding rate of true and false positives and true and false negatives. Ground truth refers to the real nature of each CFS case (P = Positive, N = Negative).
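The indicators listed in Table 4 follow the usual confusion-matrix definitions. The minimal sketch below computes them from per-case ground truth and decisions labelled P/N, as in the table. The example labels are invented for illustration and are not the data behind Table 4. The sketch also assumes that both classes are present, since otherwise some rates are undefined.

```python
from collections import Counter
from typing import Sequence


def decision_rates(truth: Sequence[str], decisions: Sequence[str]) -> dict:
    """Confusion-matrix indicators for binary CFS decisions labelled 'P' or 'N'."""
    counts = Counter(zip(truth, decisions))   # missing pairs count as 0
    tp, fn = counts[("P", "P")], counts[("P", "N")]
    tn, fp = counts[("N", "N")], counts[("N", "P")]
    return {
        "accuracy": (tp + tn) / len(truth),
        "tpr": tp / (tp + fn),   # true positive rate (sensitivity)
        "fnr": fn / (tp + fn),   # false negative rate
        "tnr": tn / (tn + fp),   # true negative rate (specificity)
        "fpr": fp / (tn + fp),   # false positive rate
    }


truth     = ["P", "P", "N", "N", "N"]
decisions = ["P", "N", "N", "P", "N"]
print(decision_rates(truth, decisions))
```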

A completely different approach from the previous ones is presented in [180]. The authors proposed a model for automated skull recognition that does not require superimposition. They used a publicly available dataset, IdentifyMe, consisting of 464 skull images, together with semi-supervised and unsupervised transform learning models. To further automate this process, in [181] they proposed a Shared Transform Model for learning discriminative representations. The model learns robust features while reducing the intra-class variations between skulls and digital face images. Experimental evaluation on the IdentifyMe dataset shows the efficacy of the proposed model, which achieves improved performance under the two protocols provided with the dataset.

3.4.4. 3D-3D Computer-Aided Approaches for Craniofacial Superimposition

In recent years, some authors have proposed 3D skull-3D face approaches to CFS, in contrast to the traditional (image- and video-based) and computer-aided 2D-3D approaches described above.

Duan et al. [176] proposed a novel ID method based on measuring the morphological correlation between a 3D skull and a 3D face. The mapping between skull and face is obtained using canonical correlation analysis (CCA). Unlike existing techniques, this method does not require an accurate relationship between skull and face. It only measures the correlation between them. To measure this correlation more reliably and improve identification capability, a region fusion strategy is adopted. Experimental results validate the proposed method and show that the region-based method significantly boosts matching accuracy. The correct recognition rate reaches 100% on a CT dataset.
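The core idea of scoring skull-face pairs through canonical correlation analysis can be illustrated with a minimal NumPy sketch. The sketch makes several assumptions. Skull and face are already described by fixed-length feature vectors, and the synthetic data share a 3D latent "morphology". Candidates are ranked by Euclidean distance between the canonical projections. The region fusion strategy and the exact correlation measure of [176] are not reproduced.

```python
import numpy as np


def _inv_sqrt(c: np.ndarray) -> np.ndarray:
    """Inverse square root of a symmetric positive-definite matrix."""
    w, v = np.linalg.eigh(c)
    return v @ np.diag(1.0 / np.sqrt(w)) @ v.T


def fit_cca(X, Y, k, reg=1e-6):
    """Top-k canonical directions between skull features X (n, p) and face features Y (n, q)."""
    mx, my = X.mean(axis=0), Y.mean(axis=0)
    Xc, Yc = X - mx, Y - my
    n = X.shape[0]
    cxx = Xc.T @ Xc / (n - 1) + reg * np.eye(X.shape[1])  # small ridge for numerical stability
    cyy = Yc.T @ Yc / (n - 1) + reg * np.eye(Y.shape[1])
    cxy = Xc.T @ Yc / (n - 1)
    kx, ky = _inv_sqrt(cxx), _inv_sqrt(cyy)
    u, rho, vt = np.linalg.svd(kx @ cxy @ ky)   # singular values = canonical correlations
    wx, wy = kx @ u[:, :k], ky @ vt.T[:, :k]
    return wx, wy, mx, my, rho[:k]


def identify(model, skulls, faces):
    """For each skull, index of the face whose canonical projection is closest."""
    wx, wy, mx, my, _ = model
    ps, pf = (skulls - mx) @ wx, (faces - my) @ wy
    dist = ((ps[:, None, :] - pf[None, :, :]) ** 2).sum(axis=-1)
    return dist.argmin(axis=1)


# Synthetic data: skull and face features driven by a shared 3D latent "morphology".
rng = np.random.default_rng(0)
Z = rng.normal(size=(200, 3))
X = Z @ rng.normal(size=(3, 10)) + 0.02 * rng.normal(size=(200, 10))
Y = Z @ rng.normal(size=(3, 8)) + 0.02 * rng.normal(size=(200, 8))
model = fit_cca(X[:150], Y[:150], k=3)          # train on 150 pairs
pred = identify(model, X[150:], Y[150:])        # identify 50 held-out skulls among 50 faces
print("rank-1 rate:", (pred == np.arange(50)).mean())
```

CCA needs no explicit skull-to-face geometric model. It only learns linear projections under which the two modalities are maximally correlated, which mirrors the point made in the text.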

In [186], another 3D-3D superimposition approach was presented as a contribution to computer-aided CFS. The proposed method emphasizes adherence to two important requirements: (1) maintaining the life size of the face image relative to the size of the skull, and (2) orienting the skull on an anthropological basis using selected feature points. The method first reconstructs the 3D face model from a given 2D face image using a mean simplified generic elastic model. It then registers the face model to a 3D skull along the jaw line using the analytical curvature B-spline (AC B-spline). The accuracy index of the registration is then evaluated to indicate the degree to which the face image corresponds to the skull. Superimpositions of positive and negative cases were conducted on a set of 3D skulls versus a set of 2D face images. The accuracy indices of the registration results suggest that the AC B-spline is more robust in 3D-3D superimposition than other existing methods. The full experimental results demonstrate the potential of the proposed method as an assistive tool for forensic scientists in craniofacial identification.

In conclusion, CFS is arguably the SFI approach that has benefited most from AI techniques in recent years. However, its main current limitation is the lack of large-scale empirical evidence supporting its use as a forensic ID method. This prevents many forensic experts and institutions from using it for ID.

3.5. AI-Based Approaches for Facial Reconstruction

Forensic facial reconstruction (or forensic facial approximation) is the process of recreating the face of an individual (whose identity is often not known) from his/her skeletal remains (Figure 8). Several works on the automation of facial reconstruction have been proposed during the last 15 years, giving rise to completely computerized and largely automated methods, often using CT scans as training sets [50,51,194,195,196,197,198].

Figure 8.

Forensic facial reconstruction is probably the most subjective, and one of the most controversial, techniques in the Forensic Anthropology (FA) field. In addition to remains involved in criminal investigations, facial reconstructions are created for remains believed to be of historical value and for remains of prehistoric hominids and humans. In particular, this figure displays a facial reconstruction of Homo heidelbergensis. Image taken from Wikimedia Commons.

Vandermeulen et al. [194] presented a fully automatic procedure for craniofacial reconstruction using a reference database of head CT scans. All reference images are automatically segmented into head volumes (enclosed by the external skin surface) and bone/skull volumes, both represented by a signed distance transform (sDT) map. The reference skull sDTs are non-linearly warped to the target skull sDT, and each warp is then applied to the corresponding reference skin sDT. A linear combination of the warped reference skin sDTs is proposed as the reconstruction of the external skin surface of the target subject. Results on a pilot reference database (N = 20) show the feasibility of this approach, although further investigation is required. First, metal streak artifacts need to be removed from the images, since they may distort the reconstructions to an unacceptable extent. Second, the warping procedure needs to be examined more carefully. This involves both fitting the references better to the target skull and providing a smooth extrapolation of the warping. Third, linear combinations other than the plain average need to be explored. Finally, a more extensive quantitative validation of the reconstructions needs to be carried out.
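The combination step can be illustrated in 2D with a minimal sketch. The sketch assumes that the reference skin sDTs have already been warped to the target, so the non-linear warping itself is omitted. It uses a brute-force signed distance, negative inside and positive outside (the sign convention is an assumption), and it takes the plain average as the linear combination. The reconstructed surface is the zero level set of the combined map. The two disk "heads" are hypothetical stand-ins for warped reference scans.

```python
import numpy as np


def signed_distance(mask: np.ndarray) -> np.ndarray:
    """Brute-force signed distance map of a boolean mask (negative inside, positive outside)."""
    pixels = np.argwhere(np.ones(mask.shape, dtype=bool))   # all coordinates, row-major order
    inside, outside = np.argwhere(mask), np.argwhere(~mask)

    def nearest(points):
        diff = pixels[:, None, :] - points[None, :, :]
        return np.sqrt((diff ** 2).sum(axis=-1)).min(axis=1)

    sdt = np.where(mask.ravel(), -nearest(outside), nearest(inside))
    return sdt.reshape(mask.shape)


def reconstruct_skin(warped_skin_sdts, weights=None):
    """Linear combination of (already warped) reference skin sDTs; returns the region inside the zero level set."""
    stack = np.stack(warped_skin_sdts)
    if weights is None:
        weights = np.full(len(warped_skin_sdts), 1.0 / len(warped_skin_sdts))  # plain average
    combined = np.tensordot(weights, stack, axes=1)
    return combined < 0


yy, xx = np.mgrid[0:33, 0:33]
small = (yy - 16) ** 2 + (xx - 16) ** 2 <= 6 ** 2    # hypothetical warped reference head 1
large = (yy - 16) ** 2 + (xx - 16) ** 2 <= 10 ** 2   # hypothetical warped reference head 2
recon = reconstruct_skin([signed_distance(small), signed_distance(large)])
print(small.sum(), recon.sum(), large.sum())
```

Averaging distance maps rather than binary masks yields an intermediate surface between the references. Other weight vectors passed to `reconstruct_skin` correspond to the "other linear combinations" mentioned in the text.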

Tu et al. [196] proposed automating the reconstruction process through a data-driven 3D generative model of the face. The model is built from a database of CT head scans and produces a reconstruction for a given skull. The reconstruction can be constrained using prior knowledge such as age and/or weight. To determine whether these reconstructions have merit, the authors proposed geometric methods for comparing them against a gallery of facial images. First, Active Shape Models, a specific type of deformable model [199], are used to automatically detect a set of facial landmarks on each image. These landmarks are associated with 3D points on the reconstruction. Direct comparison of the reconstruction is problematic. In general, the camera geometry used for image capture is unknown, and there are uncertainties associated with the reconstruction and landmark detection processes. The first comparison method uses constrained optimization to determine the optimal projection of the reconstruction onto each image. The residuals are then analyzed to produce a ranking of the gallery. The second method uses boosting to learn which points are both reliable and discriminating, resulting in a match/no-match classifier.
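The residual-based ranking idea of the first comparison method can be sketched as follows. This is a minimal illustration, and it makes two assumptions. It fits an affine camera by ordinary least squares instead of using the constrained optimization of [196]. It also uses synthetic 2D landmarks in place of Active Shape Model detections. For each gallery image, the best projection of the reconstruction's 3D landmarks is estimated, and the gallery is ranked by RMS residual.

```python
import numpy as np


def fit_affine_camera(points3d: np.ndarray, points2d: np.ndarray):
    """Least-squares 2x4 affine camera P with points2d ~ [points3d, 1] @ P.T; returns (P, RMS residual)."""
    homog = np.hstack([points3d, np.ones((points3d.shape[0], 1))])
    p_t, *_ = np.linalg.lstsq(homog, points2d, rcond=None)
    residual = homog @ p_t - points2d
    rms = float(np.sqrt((residual ** 2).sum(axis=1).mean()))
    return p_t.T, rms


def rank_gallery(points3d: np.ndarray, gallery):
    """Gallery indices ordered from best (lowest residual) to worst, plus the residuals."""
    residuals = np.array([fit_affine_camera(points3d, g)[1] for g in gallery])
    return np.argsort(residuals), residuals


rng = np.random.default_rng(1)
recon_landmarks = rng.normal(size=(12, 3))              # hypothetical 3D landmarks on the reconstruction
true_camera = rng.normal(size=(2, 4))
true_image = np.hstack([recon_landmarks, np.ones((12, 1))]) @ true_camera.T
gallery = [rng.normal(size=(12, 2)) for _ in range(4)]  # unrelated faces
gallery.insert(2, true_image)                            # the matching face sits at index 2
order, residuals = rank_gallery(recon_landmarks, gallery)
print(order)
```

Because the camera is estimated separately for every gallery image, the unknown capture geometry is absorbed into the fit. Only the remaining shape disagreement contributes to the ranking.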

Claes et al. [48] described the common pipeline of modern facial approximation software. First, an expert examines the unknown skull to determine the BP. Then, a virtual replica of the skull is produced and represented according to the modeling parameters. A craniofacial template encoding face, skull and soft tissue information is derived from a head database. Next, an admissible geometric transformation drives the adaptation of the craniofacial template onto the unknown skull, according to the “proximity” between the skulls. As a result, the template face is deformed into the predicted face associated with the unknown skull, linking information from both the database and the examination of the unknown skull. Finally, skin texture and hair are added to the reconstructed face.

Guyomarc’h et al. [50] developed Anthropological Facial Approximation in Three Dimensions (AFA3D), a computerized method for estimating facial shape based on CT scans of 500 French individuals. Facial soft tissue depths are estimated from age, sex, corpulence, and craniometric measurements, and projected using reference planes to obtain the global facial appearance. The position and shape of the eyes, nose, mouth, and ears are inferred from cranial landmarks through geometric morphometrics. The 100 estimated cutaneous landmarks are then used to warp a generic face to the target facial approximation. A re-sampling validation on a subsample showed an average accuracy of ≈4 mm for the overall face. The resulting approximation is an objective, probable facial shape. However, it is also synthetic (i.e., without texture) and therefore needs to be enhanced artistically before use in forensic cases. This facial approximation approach is integrated in the TIVMI software and is freely available for further testing.
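The depth-estimation step can be illustrated with a minimal sketch. An ordinary least-squares linear model predicts the soft tissue depth at one landmark from age, sex, a corpulence index and one craniometric measurement. The cutaneous point is then placed at that depth along a given direction. Several elements are assumptions rather than details of [50]: the linear form, the predictor encoding, and the use of a single projection direction instead of the reference planes. The synthetic coefficients are invented.

```python
import numpy as np


def fit_depth_model(predictors: np.ndarray, depths: np.ndarray) -> np.ndarray:
    """OLS coefficients [intercept, b1, ..., bk] for depth ~ intercept + predictors @ b."""
    design = np.hstack([np.ones((predictors.shape[0], 1)), predictors])
    coef, *_ = np.linalg.lstsq(design, depths, rcond=None)
    return coef


def predict_depth(coef: np.ndarray, x: np.ndarray) -> float:
    return float(coef[0] + x @ coef[1:])


def place_cutaneous_point(bone_point, direction, depth):
    """Move `depth` (mm) from a cranial landmark along the normalized projection direction."""
    d = np.asarray(direction, dtype=float)
    return np.asarray(bone_point, dtype=float) + depth * d / np.linalg.norm(d)


# Synthetic training set: columns = age (years), sex (0/1), corpulence index, cranial breadth (mm).
rng = np.random.default_rng(2)
features = np.column_stack([
    rng.uniform(20, 80, 100),
    rng.integers(0, 2, 100),
    rng.uniform(18, 35, 100),
    rng.uniform(125, 155, 100),
])
true_coef = np.array([2.0, 0.03, -0.8, 0.25, 0.01])     # invented, for illustration only
depths = true_coef[0] + features @ true_coef[1:]
coef = fit_depth_model(features, depths)
depth = predict_depth(coef, np.array([45.0, 1.0, 27.0, 140.0]))
print(place_cutaneous_point([0.0, 0.0, 0.0], [0.0, 0.0, 2.0], depth))
```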

De Buhan and Nardoni [51] combine classical features, such as the use of a skull/face database, with more original aspects: (1) a shape matching method is used to link the unknown skull to the database templates; and (2) the final face is seen as an elastic 3D mask that is deformed and adapted onto the unknown skull. In this method, the skull is considered as a whole surface rather than being restricted to a few anatomical landmarks, allowing a dense description of the skull/face relationship.

Liu and Li [197] employed a database of portrait photos to create many face candidates and then performed a superimposition. First, they built an effective autoencoder for image-based facial reconstruction, and second, they used a generative model for constrained face inpainting. Their experiments showed that the proposed pipeline was stable and accurate.

Imaizumi et al. [198] developed a software solution for 3D facial approximation from the skull based on head CT scans of 59 Japanese adult volunteers (40 males, 19 females). The positional relationship between the skull and the head surface shape was analyzed by creating anatomically homologous shape models. Before modeling, skull shapes were simplified by concealing the hollow structures of the skull. Surficial tissue thickness, represented by the distance between corresponding vertices of the simplified skull and the head surface, was calculated for each individual and averaged for each sex. The approximated head shapes of known individuals showed a relatively good resemblance in both the whole head and the facial parts. However, some errors were identified, particularly in areas of thick superficial tissue at the cheeks and of thicker tissue at the glabella, nose, mouth, and chin. In addition, the authors created reference models for CFS from the average skull and head surface models of each sex.
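Assuming homologous models, i.e., skull and head meshes with vertex-to-vertex correspondence across individuals, the thickness computation and per-sex averaging described for [198] reduce to a few array operations. The sketch below also builds an approximate head by offsetting a skull along hypothetical per-vertex outward normals. That last step is an illustrative assumption, not the authors' procedure, and the toy meshes are invented.

```python
import numpy as np


def tissue_thickness(skulls: np.ndarray, heads: np.ndarray) -> np.ndarray:
    """Per-vertex distance between homologous skull and head vertices.

    skulls, heads: arrays of shape (n_subjects, n_vertices, 3) in vertex correspondence.
    """
    return np.linalg.norm(heads - skulls, axis=-1)


def mean_thickness_by_sex(thickness: np.ndarray, sexes) -> dict:
    """Average the per-vertex thickness over the subjects of each sex."""
    sexes = np.asarray(sexes)
    return {str(s): thickness[sexes == s].mean(axis=0) for s in np.unique(sexes)}


def approximate_head(skull: np.ndarray, normals: np.ndarray, mean_thickness: np.ndarray) -> np.ndarray:
    """Offset each skull vertex by the mean thickness along its (normalized) outward normal."""
    unit = normals / np.linalg.norm(normals, axis=-1, keepdims=True)
    return skull + mean_thickness[:, None] * unit


# Toy data: 4 subjects, 3 homologous vertices; heads are skulls shifted along +z.
skulls = np.zeros((4, 3, 3))
offsets = np.array([4.0, 6.0, 5.0, 7.0])                 # hypothetical thickness per subject (mm)
heads = skulls + offsets[:, None, None] * np.array([0.0, 0.0, 1.0])
means = mean_thickness_by_sex(tissue_thickness(skulls, heads), ["F", "F", "M", "M"])
print({k: v.tolist() for k, v in means.items()})
```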

Recently developed computer-enabled tools have made it possible to estimate face shape from genetic sequences [200,201]. Known as “molecular photofitting” [202], these methods can complement facial approximation. They are especially useful for predicting features that have a limited tangible relationship to the skeletal structure. Among specific facial traits, red hair color and blue/brown iris color are regarded as accurately predictable from genes alone [203]. Approximately 70% accuracy has been reported for red hair prediction [204], whereas positive predictive values for iris color ranged from 66–100% for blue eyes and 70–100% for brown eyes [205,206,207,208,209]. Typically, positive predictive values were higher for brown eyes (>85%) than for blue eyes (>75%), with a drastic reduction in the same statistic for the so-called intermediate eye colors [206]. Predictive models for skin color are also being investigated, tested, and validated [210,211].

4. Discussion and Conclusions

In this article, we have reviewed some of the main works applying AI techniques to different biomedical image modalities (mainly X-ray images, CTs and MRIs, but also 3D surface scans of PM materials, i.e., bone remains) with the purpose of contributing to forensic human identification of deceased and living individuals. SFI is one of the main tools at our disposal when techniques like DNA analysis or fingerprint comparison cannot be applied, because there is no second sample to compare with or because the materials are so degraded that soft tissues are not preserved, among other reasons.

AI techniques have been applied with remarkable success to many challenging tasks, including healthcare and medical imaging. From this point of view, the marginal presence of AI in the daily practice of forensic anthropologists is highly surprising. Although some comprehensive tools are beginning to emerge [212], forensic experts still have no AI-based tools available to automate SFI tasks.