1. Introduction

Opinion Summarization is one of the most important problems in sentiment analysis and opinion mining areas currently. Given a large collection of review text documents about a particular product in automobiles or smartphones as input, state–of–the–art opinion summarization methods usually provide (1) a few main aspects, (2) pros and cons per aspect, and (3) extractive or abstractive summary in favor of/against each aspect.

In this article, an aspect is formally defined as

a focused topic about which a majority of online consumers mainly talk in the particular product. For instance, according to domain experts,

design,

function,

performance,

price,

quality, and

service are the main aspects in automobiles [

1]. Regardless of manual or automatic approaches, if we can precisely mine key aspects from a large corpus, we can easily measure pros and cons ratios per aspect. Nowadays, since data–based market research has become popular, the aspect detection problem has been studied actively.

To address this challenging problem of

automatically detecting a few main aspects from a large corpus, association rule mining–based, lexicon–based, syntactic–based, and machine learning–based approaches are presented as solutions [

2]. However, despite active research on the aspect detection problem, it is still not trivial to find the right aspects for each domain. To make matters worse, major aspects are different for each domain and differ slightly by different consumer groups even on the same domain. In addition, today demand for handling large–scale text data and the existence of lexical semantics (ambiguous words) make this problem even harder. In particular, to surmount the challenges for which conventional solutions against this problem no longer work, we propose a novel automatic method of mining main aspects through topic analysis.

Given a collection of text documents, a probabilistic topic model extracts major latent topics that are mainly spoken in the collection. A topic consists of relevant words, where each relevant word w has its probability value that indicates its importance (weight) of w within . For example, is represented as a set of relevant words like {bank:0.5, saving: 0.35, interest: 0.11, loan: 0.04}. Probabilistic Latent Semantic Analysis (pLSA) or Latent Dirichlet Allocation (LDA), well–known as topic models, extracts topics from large text documents by the number of topics (k) entered by a domain expert. Then, he/she manually determines the label (title) of each topic by looking at the relevant words and taking advantage of domain knowledge. For example, is very likely to be the word finance as the label if he/she guesses roughly with the topic words like ‘bank’, ‘saving’, ‘interest’, and ‘loan’. In other words, finance includes the comprehensive meaning of all words in .

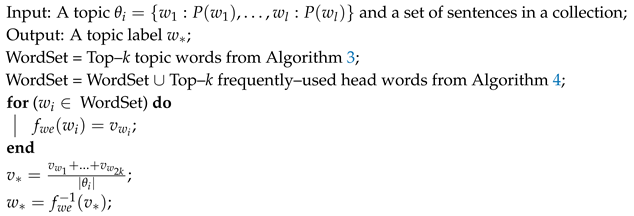

To mine aspects from large text documents,

Figure 1 presents the overall system of the proposed approach, where we first extract accurate topics that are mostly covered in the collection and then generate important aspects through clean topics. To acquire high–quality topics, we propose novel two approaches that improve the shortcomings of the existing topic models.

Firstly, we propose a new algorithm of estimating proper priors for a given topic model. The Dirichlet priors that yield the strong association of all words in a topic (topic coherence) are chosen while the topic model with different prior values is executing iteratively. In particular, we propose the word embedding–based measure to compute the average topic coherence value. We also propose a new method of automatically identifying the number of true topics in advance, where the number of true topics means the number of topics originally in a given data that is unknown. Empirically, the number of topics that yields the highest topic coherence value is chosen while the topic model with different numbers of topics is executing iteratively.

Secondly, we propose a novel Topic Cleaning (

) method that correct raw topics output by the existing topic model through topic splitting and merging processes. To split and merge incorrect topics, we propose a new post–processing method based on the word embedding and unsupervised clustering techniques in which we consider word2vec [

3], GloVe [

4], fastText [

5], ELMo [

6], and BERT [

7] as the word embedding model and Density–based Spatial Clustering of Applications with Noise (DBSCAN) [

8] as the unsupervised clustering method.

Next, we propose three automatic methods of automatically labelling clean topics after topic splitting and merging. Each topic is given a label (title) of the topic that represents the overall meaning of the topic as a single keyword. It is also assigned sub–labels that assist the semantics of the topic. Our proposed methods are based on (1) topic words, (2) head words obtained by dependency parsing of the sentences related to a given topic, or (3) a hybrid of topic and head words.

To the best of our knowledge, the proposed approach is the first study to (1) develop word embedding–based topic coherence measure, (2) to correct dirty topics generated by an existing topic model using the word embedding and unsupervised clustering techniques, and (3) to generate main and sub aspects based on clean topics and dependency parsing of topic sentences. In addition, to find top–k sentences relevant with a given topic, we propose a new probabilistic model based on Maximum Likelihood Estimation (MLE). Our experimental results show that the proposed approach outperforms LDA as the baseline method.

The remainder of this article is organized as follows—in

Section 2, we introduce both background knowledge and existing methods related to this work. In particular, we discuss the novelty of our method, in addition to main differences between previous studies and our work. In

Section 3, we describe the details of the proposed method and explain/analyze experimental set–up and results in

Section 4. We also discuss how the results can be interpreted in perspective of previous studies and limitations of the work in

Section 5. Finally, we summarize our work and future direction in

Section 6.

2. Background

In this section, we discuss the problem motivation and recent studies related to the proposed method.

2.1. Motivation

The proposed method deals with three specific sub problems—(1) Automatic and empirical prior estimation problem, (2) topic cleaning problem, and (3) automatic topic labelling problem. The final aim is to automatically find main aspects based on the solutions that handle such three problems. In next subsection, we will describe the detailed things of each problem.

2.1.1. Automatic Prior Knowledge Estimation

Since topic models use the Bayesian probability model, the prior probabilities and should be given as the input parameter. Here, is the distribution of topics in a document and is the distribution of words in a topic.

Previously, the default values have been widely used as the priors or arbitrarily determined by domain experts. However, such approaches have the following disadvantages.

First, the more accurate the priors for a given data is, the faster the convergence is. However, we cannot expect this benefit if we use the existing methods.

Second, it is hard to manually find the exact priors from a large–sized data set.

Third, the topic model with inaccurate priors is likely to extract dirty topics, which prevent us from understanding the nature of the given data set.

As such, it is significant to grasp proper priors in advance given a data set, in order to obtain good topic results. According to a recent study in Reference [

9], there exists prior knowledge suitable for a domain and the accuracy of topic models will be greatly improved if the domain–optimized prior knowledge is used in the initial step.

To optimize and , some traditional topic models use non–parametric hierarchical prior distribution. The non–parametric hLDA is based on the Chinese Process to detect the number of clusters. However, as the main disadvantage, it is considerably affected by larger clusters. In addition, note that text data are usually ambiguous and noise big data so that the mathematically–based model cannot be applied simply. In practice, to handle such noise data, the most realistic approach is to use an empirical method that automatically determine the priors by considering all combinations of and values, which is not covered in the existing studies.

2.1.2. Topic Cleaning

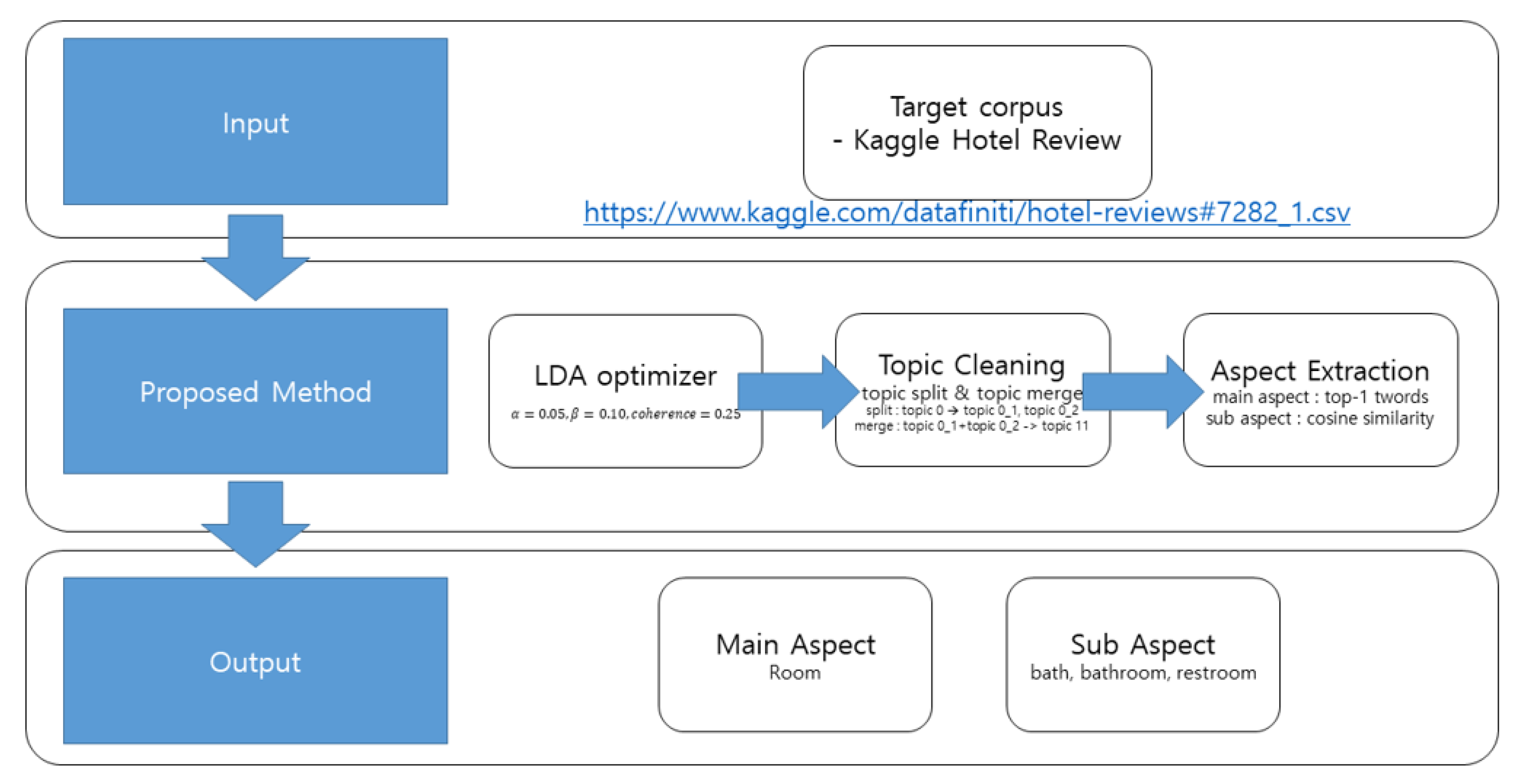

Figure 2 shows an example of topics

,

, …, and

. The labels of

,

, …, and

are words ‘service’, ‘clean’, …, and ‘price’. Each topic is represented as the probability distribution of words relevant with the topic label. The topic words such as ‘room’ and ‘bellboy’ are relevant with the topic label ‘service’. The probability value of the word ‘room’ is

= 0.02 and that of the word ‘bellboy’ is

= 0.01. The sum of the probability values of all words in

is 1. This is

= 1, where

V is a set of vocabularies (unique words) in the collection of text documents. In addition, as the weight of

is

, the sum of the weights of all topics should be 1, i.e.,

.

If we grasp the correct topics using any topic model, we can easily infer main aspects through the topic labels. Unfortunately, compared to topic results summarized by human evaluators who go over all text documents in a collection, pLSA or LDA has mostly failed to show satisfactory results in real applications. The main reasons are as follows:

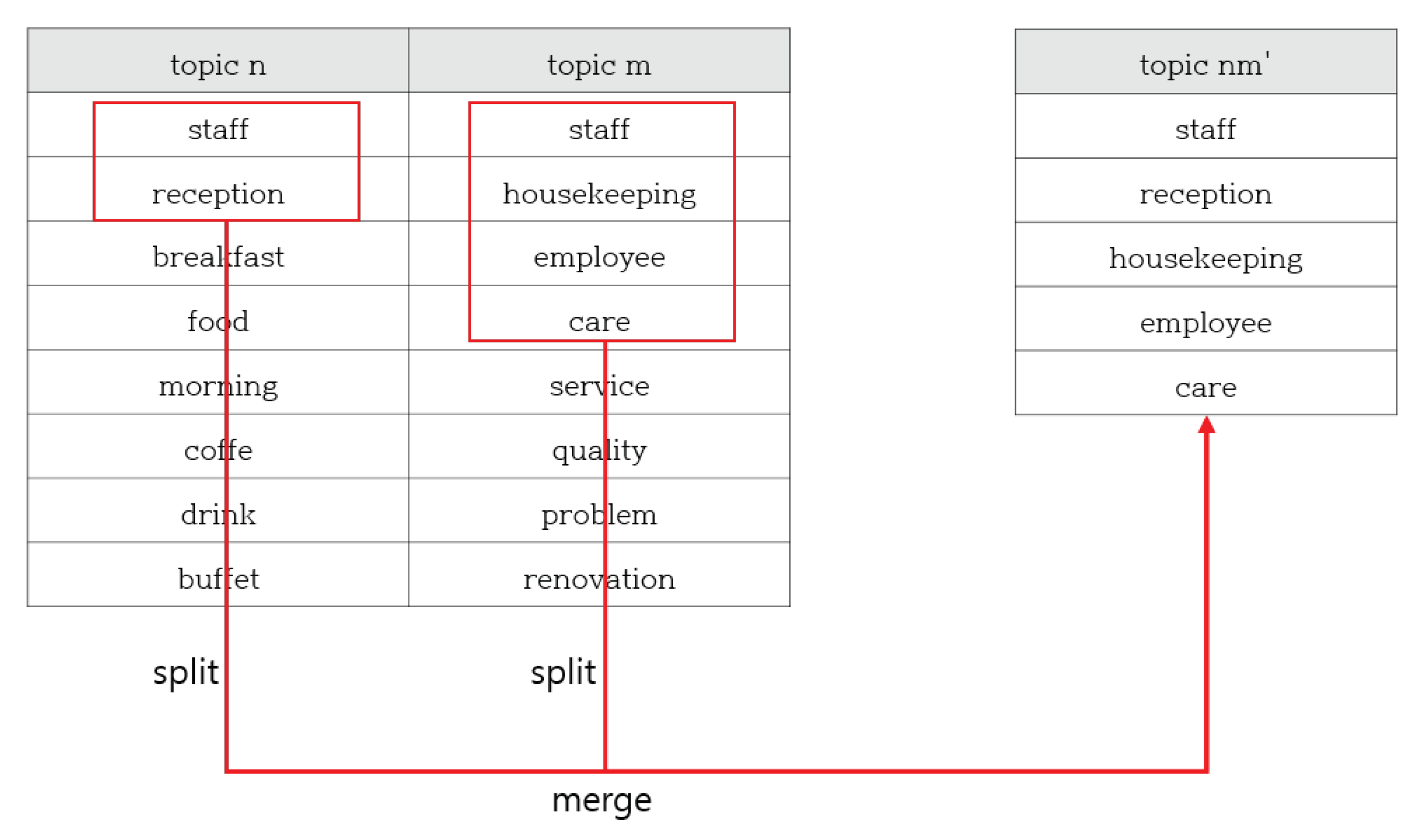

Often, the results of topic models are dirty. A topic is mixed with two or more semantic groups of topic words with different meanings. To better understand topic results, this dirty topic should be split to two or more correct topics, each of which consists of only words representing the unified meaning, as shown in

Table 1.

There exist redundant topics with similar meaning in topic result. These duplicate topics should be merged to a single topic, as shown in

Table 2. After topic splitting and merging, the topic results will be more accurate and better understood. Besides, meaningless topics in which most words have stop words (e.g., ‘a’, ‘the’, ‘in’, etc.) should be removed from the topic result.

2.1.3. Automatic Topic Labelling

In topic result, a domain expert manually labels each topic by looking at the topic words and taking advantage of domain knowledge. In general, this task of manually labelling topics is both labor–intensive and time–consuming. It is also hard to know the exact meaning of a topic by observing only words in the topic. If topic words are ambiguous or the semantics among topic words are significantly variant, there is a high likelihood of incorrect interpretation by reflecting the expert’s subjective opinion. Through the existing topic model, we can simply understand the superficial tendency hidden in review documents. For these reasons, an automatic yet accurate topic labelling method is required for practical use.

2.2. Related Work

The most similar existing problem is the aspect–based opinion summarization that has been actively studied in the field of sentiment analysis since 2016. However, the existing aspect extraction problems are radically different from our problem in this article. The existing problems are represented as multi–classification of a candidate word to one of aspects, where an aspect is not a focused topic mainly talked in a large collection but some feature that describes any product in part (e.g., in the sentence “the battery of Samsung Galaxy 9 is long”, the word ‘battery’ is one of features).

The existing aspect extraction problem is largely catogorized to two different problems of extracting

explicit and

implicit aspects from review documents. A recent study [

10] introduces the explicit aspect extraction problem, where aspect words are extracted and then clustered [

11,

12,

13,

14], and the implicit aspect extraction problem, where aspects that are not explicitly mentioned in text strings are assigned to one of the pre-defined aspects [

15,

16].

Extracting explicit aspects is relatively easier than implicit aspects. To address the explicit aspect problem, the most popular approach is based on the association rule mining technique that finds the frequent noun and noun phrase set in text documents [

17]. Furthermore, based on the frequent-based method, Popescu et al. used Point-wise Mutual Information (PMI) [

18], while Ku et al. used the TF/IDF method [

19], to find main aspects from candidate noun phrases. Besides, Moghaddam and Ester removed noise terms by using additional pattern rules in addition to the frequency-based aspect extraction method [

20]. Even though these approaches are simple and effective, they are limited because it is difficult to find low-frequency aspects and implicit aspects. Ding et al. presented a holistic lexicon-based approach by which low-frequency aspects can be detected. In their method, the authors first exploited opinion bearing words from other sentences and reviews and then found a noun closest to the opinion bearing words [

21]. Rather than using the opinion lexicon like Ding et al.’s method, Turney first proposed the syntactic–based approach based on POS tags [

22] and Zhuang et al. used the dependency parsing to find all possible aspect candidates from review documents [

23]. In recent years, learning–based approaches have been widely used. Zhai et al. proposed a semi–supervised learning method based on Expectation–Maximization (EM) with sharing words (e.g., battery, battery life, and battery power) and lexical similarity based on WordNet to label the aspect of the extracted word [

24]. In addition, Lu et al. proposed a multi–aspect sentence labelling method in which each sentence in a review document is labelled to an aspect. The authors modified the existing topic model with minimal seed words to encourage a correspondence between topics and aspects, and then predict overall multi–aspect rating scores [

25]. To analyze people’s opinions and sentiments toward certain aspects, References [

26,

27,

28] attempted to learn emotion and knowledge information based on a sentic Long Short-Term Memory (LSTM)–based model that extends LSTM with a hierarchical attention mechanism. Unlike their approaches, the proposed method focuses on finding main aspects automatically using clean topics. To improve the performance of text classification, Reference [

29] proposed a capsule network using dynamic routing that can learn hierarchically. Because the capsule network integrates multiple sub layers layer, text classification is improved from single label text classification to multi–label one.

By and large, most recent aspect extraction methods are grouped to three categories: (1) Unsupervised methods, (2) Supervised methods, and (3) Semi-supervised methods. The unsupervised methods are based on Dependency Parsing [

30], Association Rule Mining [

31], Mutual/ Association [

32], Hierarchy [

33], Ontology [

34], Generative Model [

35,

36], Co-occurrence [

37], Rule-based [

38], and Clustering [

39]. The supervised methods are based on Association Rule Mining [

40], Hierarchy with Co-occurrence [

41], Co-occurrence [

42], Classification [

43], Fuzzy Rule [

44], Rule-based [

45], and Conditional Random Fields [

46]. The semi-supervised methods are based on Association Rule Mining [

47], Clustering [

48], and Topic Model [

49].

Rather than traditional topic models, Reference [

50] used only word2vec to find topics. All vocabularies are mapped to word embedding spaces and clustered to topics, each of which is a group of relevant words in the corpus. In contrast, the proposed method basically uses some traditional topic model because the traditional topic models are likely to work well to obtain brief summary from large documents. In the approach, a topic model is first applied to find topics and then main aspects are exploited from the clean topics. To clean the topics, various word embedding methods such as word2vec, fastText, GloVe, ELMo, and BERT are used. Reference [

51] extracted some topics from the embedding space using only word–level embedding, while the proposed method takes advantage of both word–level and sentence–level embedding to detect main topics from a given large corpus.

All existing approaches based on any topic model [

25,

35,

36,

49] are quite different from ours in that the Bayesian network of the original topic model is modified and prior knowledge such as pre–defined aspects [

25], explicit aspects [

35], opinion bearing words [

36], aspect dependent sentiment lexicon [

52], and cannot/must–links [

49] are used. Alternatively, we do not change any existing topic model directly and do not use any prior knowledge like opinion words. In addition, to enforce a direct correspondence between aspects and topics, we propose optimal starting points for learning, topic cleaning, and aspect labelling based on topic structures.

3. The Proposed Approaches

Our proposed method consists of two steps. The first step is to extract raw topics from a large collection of text documents through a given topic model like pLSA or LDA and the second step is to automatically generate each topic label that is one of main aspects in our context. If we use one of the existing topic models, the topic result is poor. As we already pointed out in the introduction section, one of the reasons is that the existing models are likely to fall into local optima because (unknown domain–optimized) prior knowledge such as Dirichlet priors and the number of topics are not used in the initial step. Another reason is that raw topics are incomplete and even dirty because of the topic split and merge problems.

The goal of the proposed method in the first step is to (1) identify the best priors for a given topic model; (2) execute the topic model with the priors; and (3) clean raw topics by removing meaningless topics, splitting ambiguous topics, and merging redundant topics with similar meaning. Then, the second step of the proposed method aims at automatically generating topic labels, each of which is an aspect in the collection. In next sections, we will describe the detailed algorithms for each proposed method.

3.1. Automatic Identification of Domain-Optimized Priors and Number of True Topics

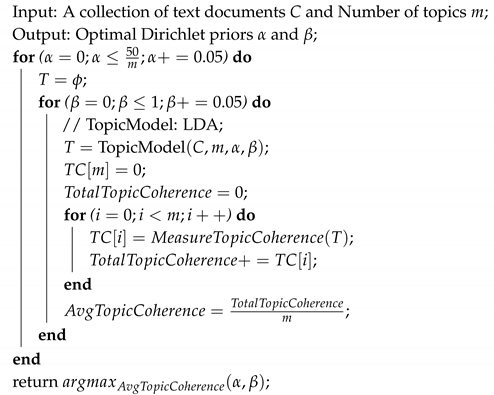

To address the problem of automatically identifying initial priors suitable to a given domain, we focus on an empirical approach instead of mathematical optimization solutions. Dirichlet priors ( and ) and the number of topics are the priors for a given topic model. and adjust the distribution of topics per document and the probability distribution of words per topic, respectively. If increases, the number of topics per document increases and if increases, the probability values of a few words within the topic increase. In existing topic models, the prior probabilities such as and are determined either by default ( and ) or arbitrarily decided by domain experts. However, we propose Algorithm 1, where the average topic coherence is measured while increasing the values of and by 0.05, and the values of and which maximize the average topic coherence value are returned. To measure the topic coherence value (corresponding to Function in Line 11), to the best of our knowledge, we propose a new topic coherence measure based on word embedding for the first time. Given a topic , where is a word, (1) converts each word to p–dimensional word embedding vector through a domain–customized word embedding model () which is one of word2vec, GloVe, fastText, ELMo, and BERT; (2) creates all possible combinations of vector pairs—e.g., ; (3) computes , the similarity of each pair through ; and (4) returns the topic coherence value , where is the number of pairs in S.

Similarly, the optimal number of topics is computed using the above function. A topic model is individually executed with each different number of topics. Next, the proposed method computes the average topic coherence value from the topic results and then finds the number of topics with the maximum topic coherence value.

3.2. Cleaning Dirty Topics

In our point of view, the proposed method is based on the hypothesis that one topic forms one semantic cluster in the word embedding space and a dirty topic is a mixture of multiple topics, so it contains multiple semantic clusters. In the pre-processing step, we use

by learning simple neural networks like Continuous Bag of Words (CBOW) and Skip–gram from a domain given as input. Suppose that the topic result is

and other topics after a topic model is executed. In addition,

, where

is a word. For

,

, where

is the

p–dimensional word embedding vector of

. In this way, the vectors corresponding to all words in

like

,

,

,

, and

are created and then projected to the

p–dimensional word embedding vector space. Similar vectors will be located close to the vector space, while disjoint vectors will be far away from the vector space.

| Algorithm 1: The empirical approach for identifying best priors |

![Applsci 10 03831 i001 Applsci 10 03831 i001]() |

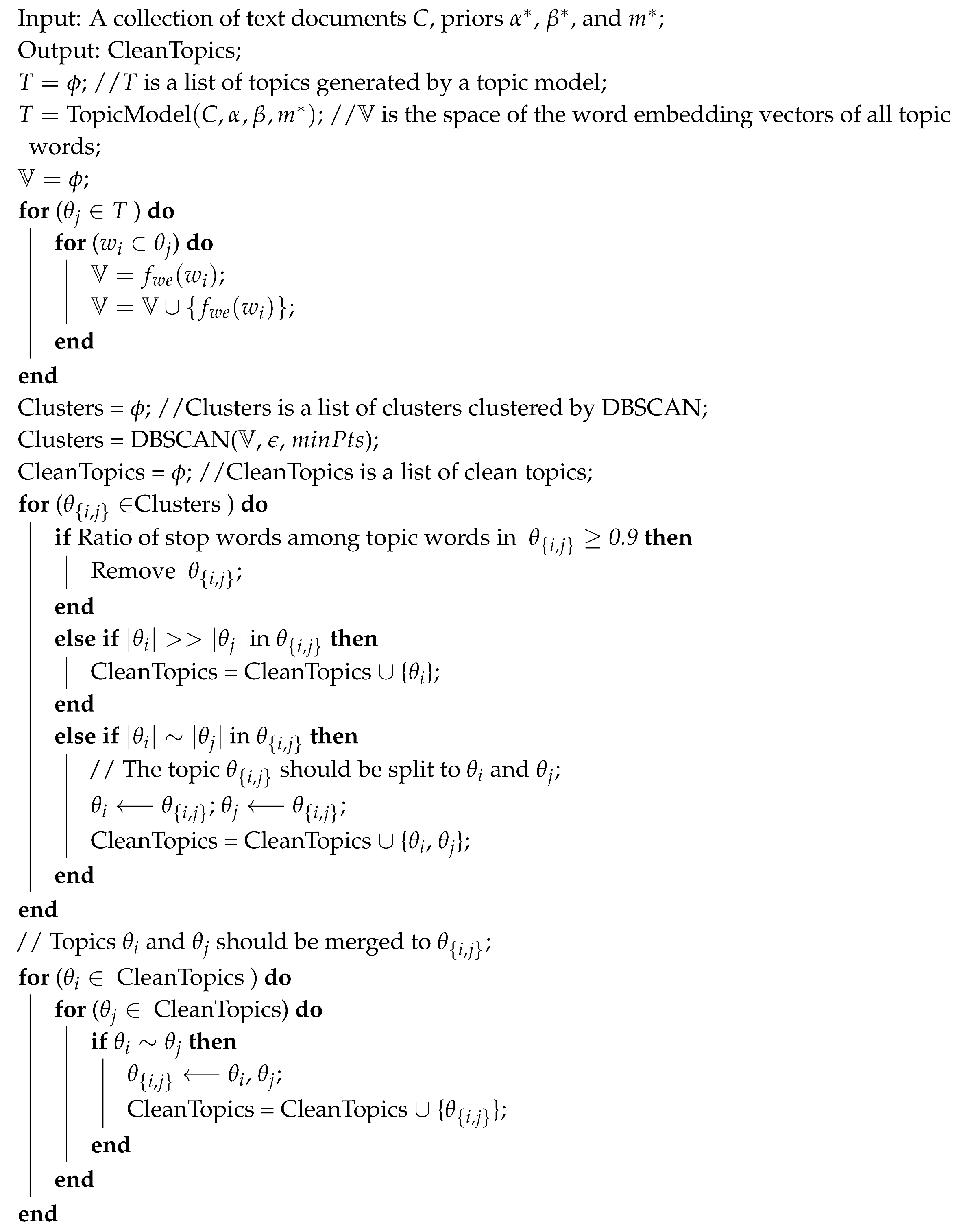

Next, the proposed method clusters the word embedding vectors using the unsupervised clustering method. In this problem, the unsupervised clustering method should be used because the number of true clusters is not known in advance. In the experiment, we used DBSCAN as the unsupervised clustering method. If a remaining cluster mostly has stop words or does not represent the united meaning (Line 14 in Algorithm 2), it is removed in the topic result. On the one hand, if there is one giant cluster and several small clusters in the clustering result (Line 16 in Algorithm 2), the main stream of is a giant cluster and the remaining clusters are noise. Therefore, the topic words in the remaining small clusters except the giant cluster are removed in . For example, let us assume that the clustering result is {, , , } and {}. In this case, the original is changed to the clean . On the other hand, if the clustering result is divided into approximately half (Line 18 in Algorithm 2), it is a topic split problem. That is, this means that there are two clusters with different meanings within a single topic. After the split process is completed, the merge process is performed for each pair of split topics (Line 22 to 27 in Algorithm 2).

3.3. Automatic Generation of Main Aspects from Clean Topics

To mine main aspects in the collection, we view each of clean topics to an aspect. The next problem is how automatically but yet accurately we can generate an aspect (topic label/title) corresponding to each topic. Please note that labelling each topic is not difficult because the topic is clean (or has a unified meaning) through Algorithm 2. Based on topic information that we can only use, we propose three new methods for detecting main aspects.

| Algorithm 2: The proposed topic cleaning method |

![Applsci 10 03831 i002 Applsci 10 03831 i002]() |

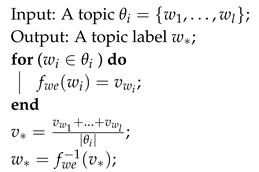

The first method is based on topic words. As an example, see a topic

. For each word

,

, where

is the domain–customized word embedding model. In this way, the vectors of all topic words in

(i.e.,

,

, and

) are created and then projected to the word embedding vector space. Then, a new vector

that are the most relevant with

,

, and

is computed by

, where

is the number of vectors in

and + is the element–wise addition between vectors. Finally, a vocabulary

close to

is chosen in the domain–customized word embedding model, where

is considered as an aspect explaining

to the best. In Line 6 of Algorithm 3,

is the inverse of

. The reason why we use the inverse function is that

is the closest to

when the value of

is the smallest.

| Algorithm 3: The first aspect auto–generation method |

![Applsci 10 03831 i003 Applsci 10 03831 i003]() |

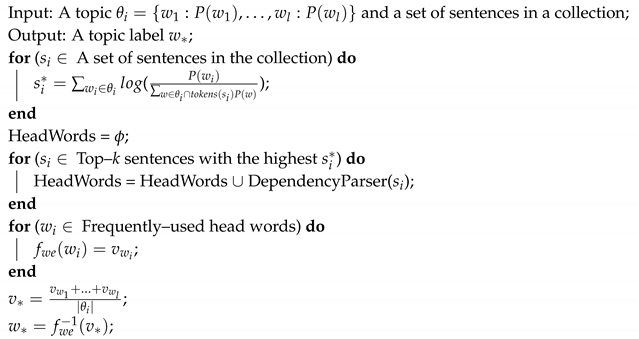

The second method is based on head words extracted by natural language processing techniques. In the pre-processing step, all text documents in a collection are segmented to a set of sentences. Now, given a topic

, the goal of the second method is to find the most relevant top–

k sentences. Given

and a sentence

, the probability value that

is relevant with

is first computed by Equation (7) and then only top–k sentences with the highest

value are selected from a total of sentences in the collection.

where

, where

is a probability of the

i–th topic word computed by a topic model and

is one probability of topic words appearing in both the topic

and the

i–th sentence

.

By Bayes’ theorem, Equation (

1) is converted to Equation (

2). The

in Equation (

3) is the normalized term so it can replaced by

. Since

is treated as a constant, it can be omitted because it does not significantly affect the results. If we replace

in Equation (

3) with the term for each word, it is represented as

in Equation (

5). Finally, we obtain Equation (

7) by taking a log on Equation (

5).

Then, for

top–

k sentences {

, …,

}, the dependency parsing is carried out to find head words from

. In the dependency parsing, there exist governor and dependent words in a given sentence. Let us assume one sentence like “I saw a beautiful flower on the road”. In this case, the word ‘beautiful’ is the dependent and the word ‘flower’ is the governor. Throughout this article, we call governors head words for convenience. If some head words appear frequently cross top–

k sentences, such words can be considered to be key words representing the topic

. Then, such head words

,

, …are converted to the vectors

,

, …through the domain–customized word embedding model. The next step is similar to the first method.

is computed by averaging element-wise sum of the vectors. Finally, a vocabulary

close to

is chosen in the domain-customized word embedding model, where

is considered as an aspect describing

to the best. Algorithm 4 is the pseudo code for the second aspect auto–generation method.

| Algorithm 4: The second aspect auto–generation method |

![Applsci 10 03831 i004 Applsci 10 03831 i004]() |

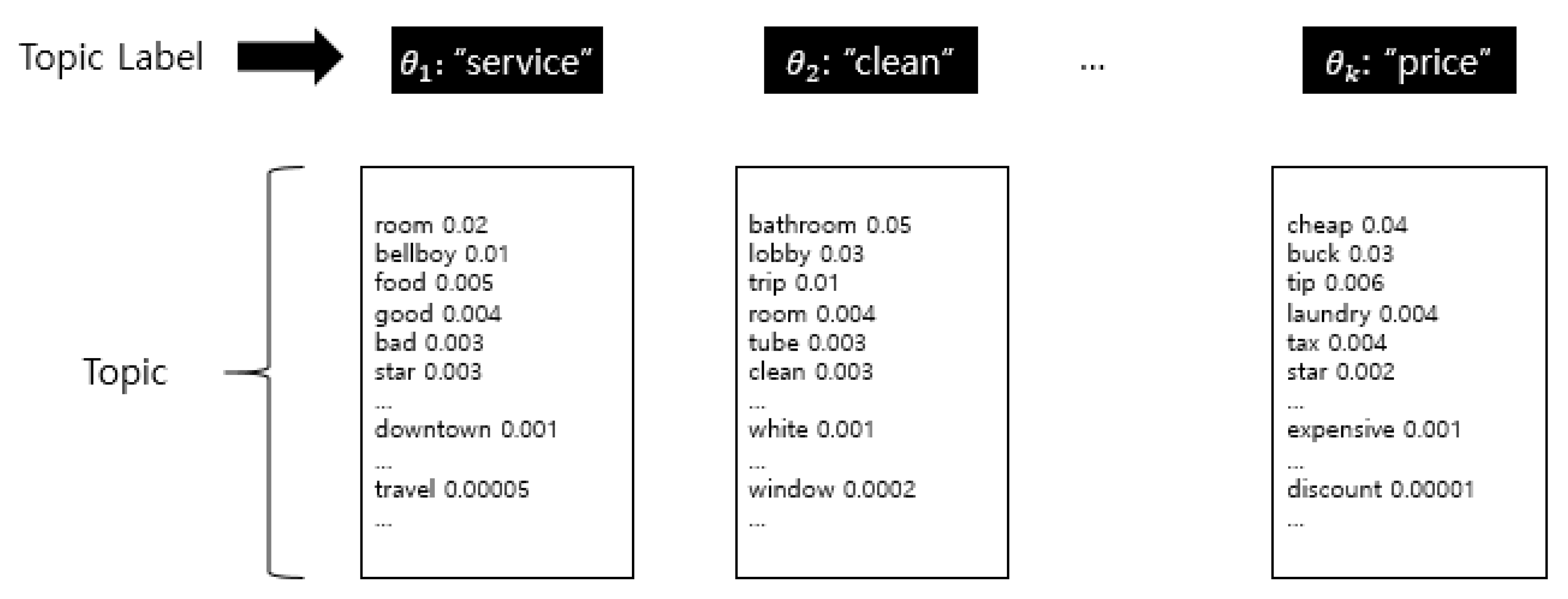

The third method is a hybrid of the first and second methods. In other words, the third method is working based on the consideration of both word-level approach (from the first method) and sentence-level approach (from the second method). Technically, it selects both top–

k topic words from the first method and top–

k head words from the second method. Then, the words are converted to the vectors and

is computed by averaging element-wise sum of the vectors. Finally, a vocabulary

close to

is chosen in the domain-customized word embedding model, where

is considered as an aspect explaining the topic

to the best. Algorithm 5 is the pseudo code for the third aspect auto–generation method.

| Algorithm 5: The third aspect auto-generation method |

![Applsci 10 03831 i005 Applsci 10 03831 i005]() |

3.4. Automatic Generation of Sub-Aspects

Given a clean topic , using one of Algorithms 3–5, the main aspect of is extracted. Specifically, the top–k topic words within are translated to the word embedding vectors and then projected to the word embedding space. Since the top–k topic words are relevant one another, the center of all the vectors is a vector () that represents semantically well. Then, the main aspect () of is the word corresponding to the center vector from the vocabulary dictionary. To find top–k sub–aspects most relevant to , the cosine similarity between and each topic word within is computed and finally the top–k topic words with the highest cosine similarity values are selected as the sub–aspects.

4. Experimental Validation

In this section, we discuss the results of the proposed methods for the defined three problems.

4.1. Evaluation of Best Priors and Number of True Topics

In the experiment, we used about 10,000 review documents about hotels in US, where the total numbers of words, vocabularies, and sentences are 282,934, 12,777, and 40,819, respectively [

53]. As a topic model, we executed Gibbs sampling–based LDA [

54] and the numbers of topics and of words per topic are 10 and 50, respectively.

Table 3 shows the reason why we selected ten topics as the output of LDA. As the number of topics increases to 3, 5, 7, and 10, we measured the values of Rouge–1 and the numbers of split and merge topics, to see how clean the topic result is. The Rouge–1 is the most well–known metric used to quantify how similar two sets are. In this set–up, for a clean topic

, we first selected the top 100 review documents that are the most relevant with

retrieved by the LDA, and then several human evaluators made a list of important and meaningful keywords after they went over the documents. We denote such a list by

X as the solution set to

. We also had another set

Y that is a list of topic words in

. Now the Rouge–1 metric is calculated by

.

Y set cleaned by the proposed method is very similar to the solution set if the value of the Rouge–1 is close to 1. In addition, if the numbers of split and merge topics are low in the topic result post-processed by the proposed method, the topic result is more clean than the raw topics generated by the existing LDA. While all Rouge–1 results are little different, the total number of the split and merge topics is small when the number of topics is ten. As a result, we selected 10 as the number of topics in all experiments. Unexpectedly, it turns out that only 1∼2 topics have three or more groups with different meanings when the number of topics is 3 but each topic has two groups with different meanings when the number of topics is 7.

For lexical semantics, we focus on word embedding including word2vec, fastText, GloVe, ELMo, and BERT to surmount the shortcomings of both one-hot encoding and WordNet–based methods.

Table 4 supports our claim that using best priors makes topic result to be more clean. In most empirical experiments using LDA, the default

and

. According to our manual investigation, the Rouge–1 results are the worst when we used the default values. In contrast, when ‘

and

, the Rouge–1 result is the best, indicating that the topic result is close to the solution set and the fastText is the best among different word embedding models. Please note that

Table 4 summarizes the results of the fastText that we selected as the best word embedding model. We can also observe that the word2vec, GloVe, and fastText are the models re–trained with our own dataset show better Rouge–1 results than ELMo and BERT that are not re–trained with our own dataset.

To evaluate Algorithm 1, we measured topic coherence values according to different

and

values. Because of the result in

Table 4, we selected fastText as the best word embedding method.

Figure 3 clearly shows that the average topic coherence value is about 0.2 when

and

. The topic coherence value is the highest in all priors. These results indicate that topic words are closely relevant one another in the particular prior values.

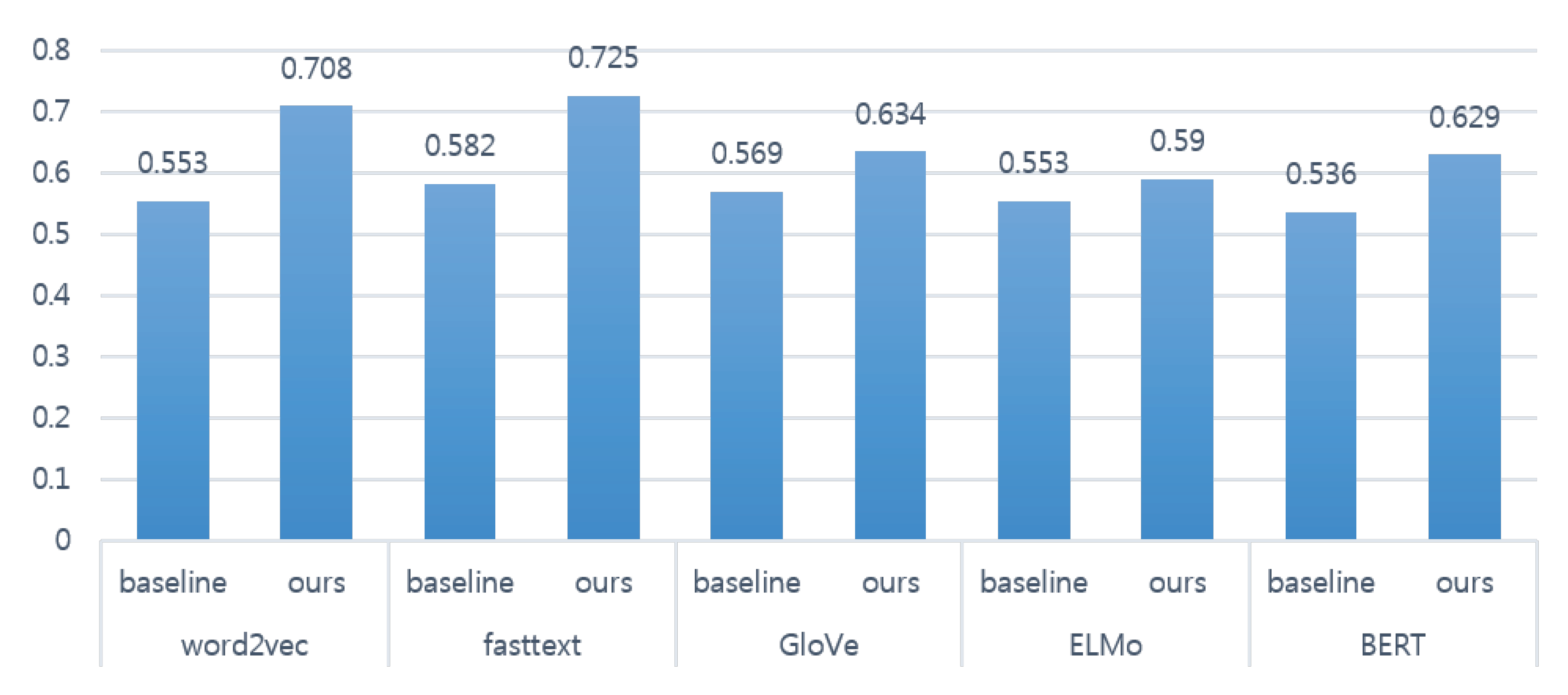

4.2. Evaluation of Clean Topics

Figure 4 shows the Rouge–1 results of the baseline and proposed methods. Each Rouge–1 result is the average of all topic Rouge–1 values. We can indirectly know that the words in each topic are closely relevant each other and the topic words represent the same meaning. The baseline method generates topics by LDA, while the proposed method (Algorithm 2) cleans incomplete topics generated by LDA. The best case is to use fastText in the proposed method. The Rouge–1 values of the baseline and proposed methods are 0.725 and 0.582. Our proposed method improves up to 25% compared to the baseline method. Furthermore, the proposed method outperforms the baseline method in all cases of using different word embedding models.

Table 5 compares the baseline and proposed methods by counting the number of topics which must be split and merged, and meaningless topics output by each method. For this evaluation, we hired three human evaluators who had nothing to do with our research. After going over the topic results and the review documents, they manually checked to see how many dirty topics are in the output. If the number of the split, merge, and meaningless topics are small, the output by either the baseline method or the proposed method will be more clean.

Table 5 clearly shows that the topic results output by the proposed method are more clean than by the baseline method.

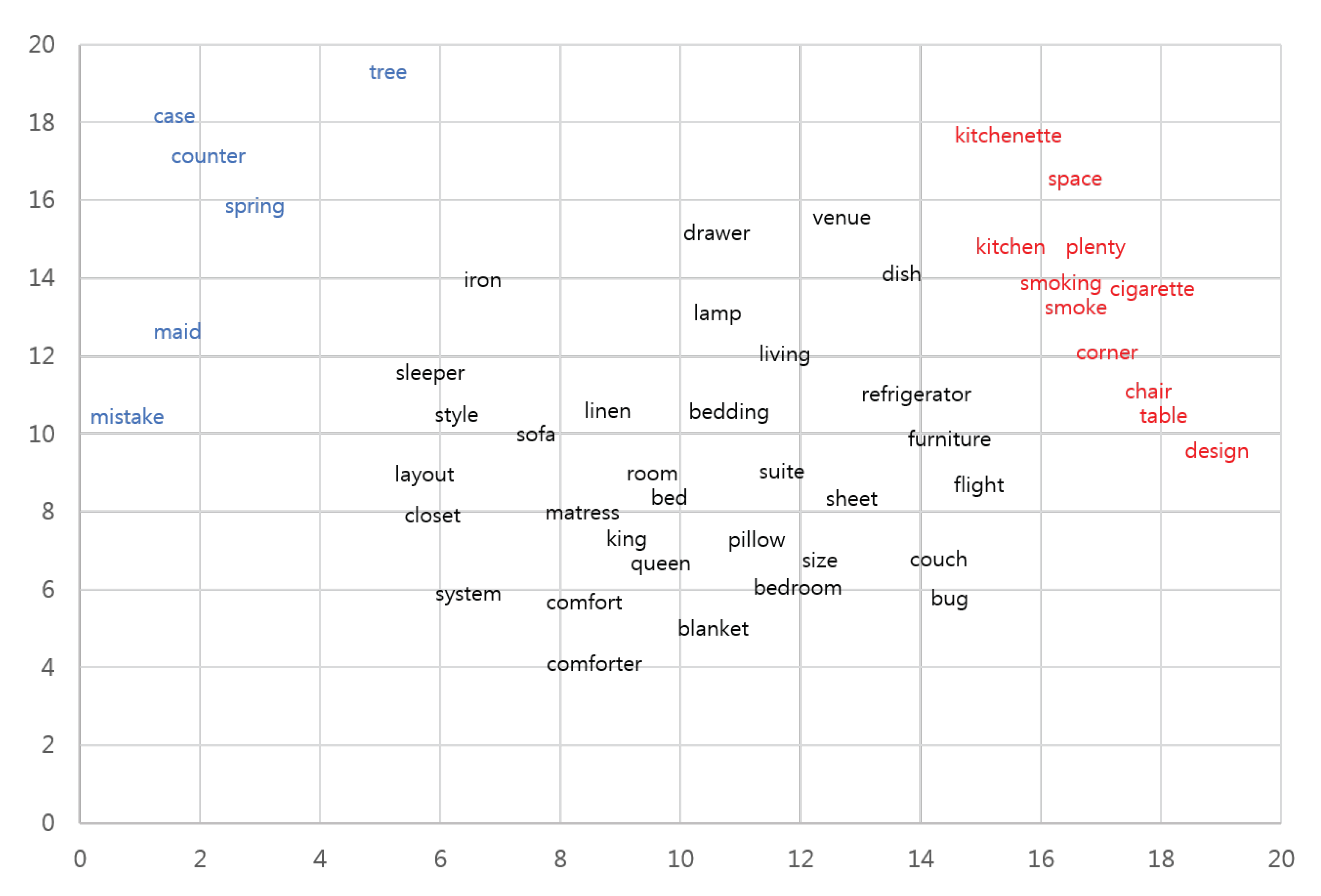

Figure 5 shows a clustering result of a topic after DBSCAN is performed. This shows a gigantic cluster (in black) and two small clusters (in blue and red). In this figure, we view the words belonging to the small clusters to the noises in the topic. Thus, we remove the small clusters except for the giant cluster. Because of space limitation, we leave out another cases in which there are 2∼3 clusters with different meanings but the numbers of words in those clusters are equal. In this case, the topic is split into 2 or 3 topics.

Figure 6 depicts a real example of the topic split and merge problems. Through word embedding and DBSCAN, a topic including clusters with different meanings is divided to two or more topics. On the other hand, the small clusters will be combined if it turns out that they have the same meaning at the topic merge stage. If each of the sub topics is meaningless, then it is removed. In a nutshell, we can split and merge raw topics by LDA through word embedding and unsupervised clustering like DBSCAN in order to make us clearly understand the semantics of the topics generated by LDA.

4.3. Evaluation of Main Aspects and Sub-Aspects

After topics are clean, we view each clean topic to an aspect. The goal of the proposed method is to automatically generate one word as an aspect which corresponds to a clean topic. If the number of the clean topics post–processed by the proposed method is five, we can figure out almost five stuffs about which most users mainly talked in the collection. Each aspect is a feature that describe a product or not. In some time, an aspect is a story not related to the product features but interested by most users.

Table 6 shows the main and sub aspects per topic. The main aspect is more general than sub aspects that describe/support the details of the main aspect. In Algorithms 3–5, given a topic

, a word with the lowest

is chosen as the main aspect. To automatically generate the sub aspects, the top–5 words with the highest probability value are first selected and then each word is converted to a vector from a word embedding model. Next, the average embedding is calculated from the five word embedding vectors. Finally, find the top–3 words that are close to the average embedding vector in the collection.

Table 6 shows each topic identifier, main aspect, three sub aspects, a sentence, and a score. To evaluate the auto–generated aspects, we hired 30 volunteers who were college students. For a topic, each human evaluator carefully and manually went over top–100 review documents that are arranged with the topic by LDA. After he/she understood the content of the topic, he/she scored between 1 and 5 on the Ricardo scale in which 1 means that these aspects have nothing to do with the topic and 5 means that these aspects are very relevant with the topic. The score of the table is the average of the scores determined by the 30 human evaluators. In addition, the column ‘Top sentence’ of the table is one sentence that summarizes the top–100 review documents relevant with the topic. They extracted the sentence after they completely understood the top–100 documents. By and large, it appears that the main and sub aspects are very relevant with the semantics of all topics. Interestingly, some aspects are the same. For example, the main aspect of topics 1 and 8 is ‘hotel’ but the meanings of the aspects are slightly different because topic 1 says the hotel rating, while topic 8 is relevant with the location. As shown in the table, because main topics of our dataset are drink, hotel, staff, room, night, and breakfast, we conjecture that many users are likely to be interested in them as the main aspects.

To automatically generate the aspect of each topic, we proposed three different auto-labelling methods. One is based on topic words; another is on head words; and the other is on a hybrid of topic and head words.

Table 7 illustrates the results of the three methods. The second column means the average of the scores evaluated by human evaluators. The experimental result shows that the second proposed method of auto-generating the aspects of the topics is better than the other individual methods.

5. Discussion

In this section, we interpret the results of the proposed methods in perspective of previous studies and of the working hypotheses. We also discuss limitations of the work and future research directions.

In this work, the final goal of our research is to automatically label topics, each of which is considered to be an aspect about which a lot of review writers talk in a particular product. To achieve this goal, we should tackle three sub problems that are real challenges for data–driven market research.

The first sub problem is to find the proper priors of a given data set. The previous studies showed that there exist priors suitable for each particular data set so that the topic model used by inaccurate priors can provide dirty topics as the result. In turn, the topic labelling method is considerably affected by such inaccurate topic results.

Table 4 shows the topic coherence scores by means of fastText, the best of variant word embedding models, according to different values of three priors—

,

, and # of topics. As we already discussed in

Section 2.1.1, because real text data are not clean, the existing statistically-based prior estimation approach does not work well. Therefore, the empirical approach like Algorithm 1 actually helps in order to find the priors suitable for a specific data.

The second sub problem is to clean dirty topics usually resulting from the traditional topic models. If such mixed and redundant topics are corrected through the proposed topic merge and split algorithms, labelling each topic is easy and we can clearly understand the nature of the given data. To validate our claim, we did additional experiments in which we downloaded 186 review posts about K5 cars in one Korean web site—Bobaedream and then compare split topics to the original topics through topic coherence measure.

Table 8 shows the ratio of original topics to split topics, indicating that the average score of topic coherence is improved by up to 25% by the split topics more than by the original topics.

To see the effectiveness of the merged topics, we first collected all pairs of two split topics. For example, for each pair of two split topics—

and

, Algorithm 2 merges

to

if

, where the similarity score between

and

is computed by word embedding. In this way, the merged topics are the

predicted result performed by Algorithm 2. When

and

are combined, human evaluators find both top–

k sentences relevant with

and both top–

k sentences relevant with

. Then, human evaluators manually judged whether the meaning of the most sentences relevant with

is similar (different) to (from) that of

. Through this manual investigation, the

actual result is obtained. Using both actual and predicted results, we consider the confusion matrix to compute average recall, precision, and

–score.

Figure 7 shows the results of the merged topics. Specifically, the

–score is 0.91 and the accuracy value is 0.93. Thus, this implies that the merged topics obtained by Algorithm 2 are more consistent than the original topics obtained by the traditional topic model.

The third sub problem is to automatically find main and sub aspects that are the label of each topic. As shown in

Table 6, the proposed three methods work well to detect main aspects from review data about hotels. For example, most review documents mainly talk about hotel breakfast, hotel interior, service, room, location, and landscape.

Table 7 shows the average of the scores evaluated by human evaluators. The score of Algorithm 4 is 4.4 that is close to 5 as the perfect score. This survey result means that the proposed main aspect detection method based on clean topics work well. Until now, all previous methods have not considered clean topics to finally detect main aspects from large review text documents.

The approaches proposed in this article might be affected by the quality of the raw review data. As the input data, review documents, including advertisements or spam, make it difficult to extract the main aspects. In addition, it will be hard to find important topics from review documents that contain little text or non-text such as emoticons and abbreviations. Therefore, it is expected that the proposed approaches will achieve a higher performance if the best technique capable of both collecting high-quality review data and filtering unnecessary data is employed. In this case, there is still room to study in our future work.

6. Conclusions

In data-driven market research, given a collection of large review posts about a particular product, it is important to automatically find main aspects that are mainly talked by most customers. For each aspect, the automatic method that quickly and easily grasps the public’s preferences for the product and by finding specific reasons they like or dislike will provide useful information to customers who want to purchase the product or company marketers who want to sell the product. In particular, finding main aspects automatically is the essential task in market research.

To address this problem, we focus first on utilizing the traditional topic model that extracts topics from large review text documents. In our standpoint, a topic is represented as an aspect. However, one obstacle of the existing topic models is that the topic results are often dirty. In other words, there exist ambiguous topics, redundant topics, and meaningless topics. To improve the dirty topics, we propose an automatic method (Algorithm 1) that estimates priors that fit the input data and a novel topic cleaning method (Algorithm 2) that both split ambiguous topics and merge duplicate topics. Moreover, based on the cleaned topics, we propose novel word–level (Algorithm 3), sentence–level (Algorithm 4), and hybrid (Algorithm 5) methods that automatically label each topic as an aspect. All algorithms are based on word embedding model. In Algorithm 1, various word embedding models are used to measure topic coherence. In Algorithm 2, word embedding models are used to merge redundant topics. In Algorithms 3–5, to find the center word among relevant words in each topic, word embedding models are used.

In a collection of review text documents about hotels, the experimental results show that Algorithm 1 using fastText is the best word embedding model with priors and . The rouge–1 score of Algorithm 1 is 0.725, while that of the baseline method with default priors is 0.582, improving up to 25%. In addition, comparing original topics to split topics, Algorithm 2 improves to about 25% on average. In case of merged topics rather than original topics, the average –score is about 0.91. These results clearly show that the topics cleaned by Algorithm 2 is more useful than the original topics in order to label topics. Finally, human evaluators judges how useful the proposed three methods of automatically labelling topics are. In particular, the survey scores of the sentence-level method are about 4.4 on average that is close to 5 as the perfect score. As a case study, main aspects about the hotel data set are breakfast, interior, service, location, and landscaping. All of these results indicate the proposed methods provide a simple, fast, and automatic way to mine main aspects from a large review data.

To the best of our knowledge, this work is the first study to consider (1) an empirical method that is effective in estimating the priors from ambiguous and noisy text data, (2) a topic clean method that splits and merges ambiguous and redundant topics, and (3) word-level and sentence-level topic labelling methods that are based on various word embedding models.

In our future work, we plan to apply the proposed method to various domains including hotels, restaurants, rent-a-car, and so forth, and improve the accuracy of the proposed method. We will also propose a new abstractive summarization method based on clean topic information. Furthermore, we will re-implement the proposed method to MapReduce-based method for processing Big Data.