Statistical Error Propagation Affecting the Quality of Experience Evaluation in Video on Demand Applications

Abstract

1. Introduction

- We evaluated Goodness of Fit (GoF) tests and determined that the measured latency (delay) distributions are lognormal in shape; this result informed how we configured NetEm.

- We determined the correlation between PLR and jitter measurements. Loss and jitter are the independent variables in this study, so any correlation between them affects how measurement error propagates to the dependent variable (QoE).
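A lognormal GoF check of the kind described above can be sketched as follows. This is only an illustration: the article does not state which GoF tests were used, so the Kolmogorov-Smirnov statistic is an assumed choice here, and the delay samples are synthetic values with hypothetical parameters rather than measured data. Because the lognormal parameters are estimated from the same sample, standard KS critical values would be too lenient; the statistic is best read as a relative measure of fit.

```python
import math
import random


def normal_cdf(z):
    """Standard normal CDF, written with the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def fit_lognormal(delays):
    """Estimate (mu, sigma) of log(delay) from the sample mean and std."""
    logs = [math.log(d) for d in delays]
    mu = sum(logs) / len(logs)
    var = sum((x - mu) ** 2 for x in logs) / (len(logs) - 1)
    return mu, math.sqrt(var)


def ks_statistic_lognormal(delays, mu, sigma):
    """Largest gap between the empirical CDF and the fitted lognormal CDF."""
    xs = sorted(delays)
    n = len(xs)
    d_max = 0.0
    for i, x in enumerate(xs, start=1):
        f = normal_cdf((math.log(x) - mu) / sigma)
        # Check the gap just after and just before each step of the ECDF.
        d_max = max(d_max, i / n - f, f - (i - 1) / n)
    return d_max


# Synthetic delays in ms (hypothetical parameters, not measured data).
rng = random.Random(0)
delays_ms = [rng.lognormvariate(3.0, 0.5) for _ in range(2000)]

mu_hat, sigma_hat = fit_lognormal(delays_ms)
d_stat = ks_statistic_lognormal(delays_ms, mu_hat, sigma_hat)
print(f"mu={mu_hat:.3f} sigma={sigma_hat:.3f} KS D={d_stat:.4f}")
```

A small statistic indicates that the empirical delay distribution stays close to the fitted lognormal curve; the fitted `mu_hat` and `sigma_hat` are the kind of parameters an emulator's delay distribution would then be configured from.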

2. Related Work

Uncertainty Analysis

3. Methodology

3.1. Capturing QoS Measurements

3.2. Sampling Error in Packet Loss Ratio

3.3. Sampling Error in Jitter

3.4. Correlation of Packet Loss and Jitter

3.5. QoE Models for Video on Demand Applications

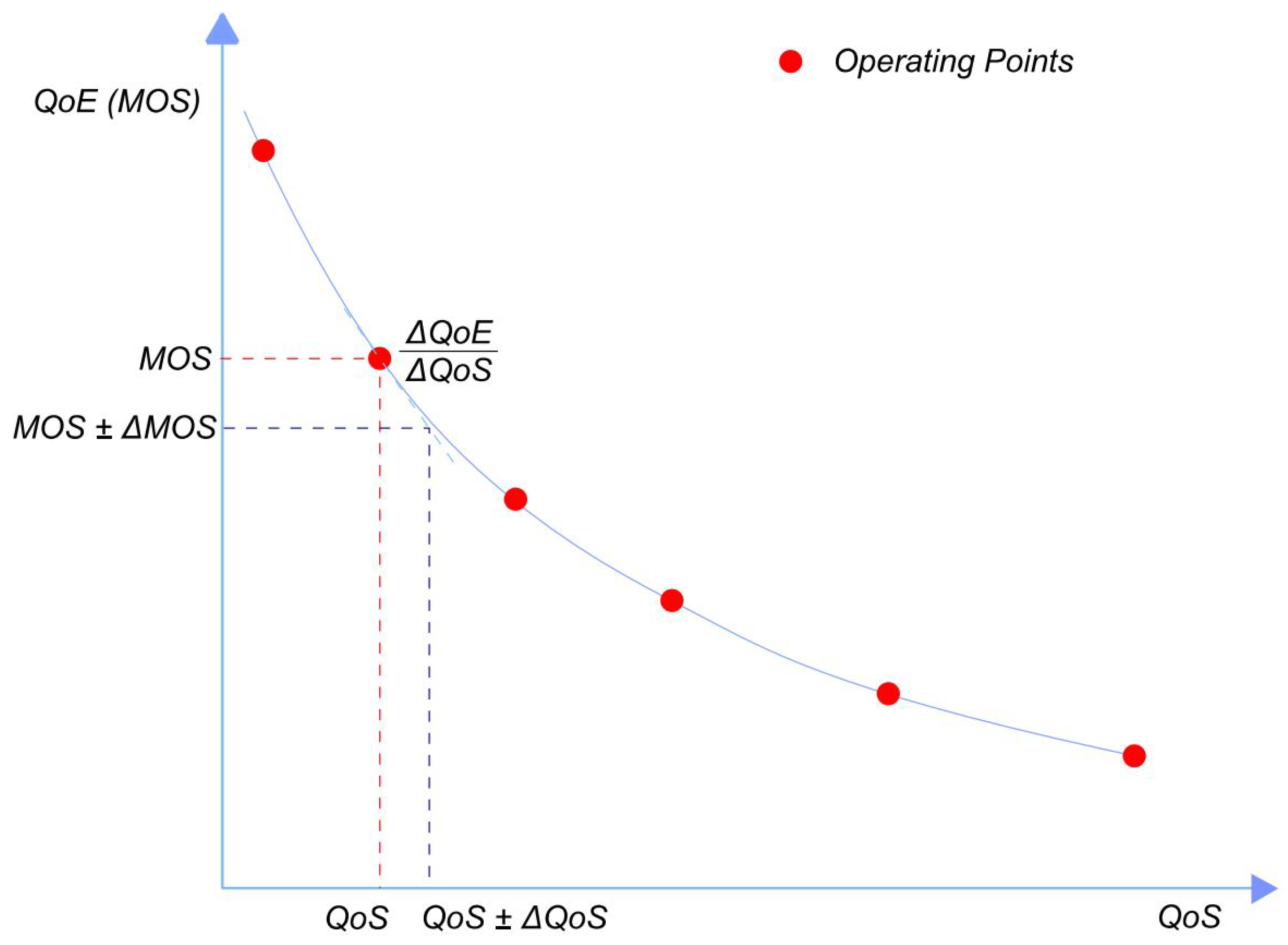

3.6. Our Approach to Evaluating Uncertainty in QoE due to Statistical Errors in QoS Measurements

3.7. Using Confidence Intervals to Model Uncertainty in QoE

4. Results

4.1. Statistical Error Propagation in QoE considering Correlation between PLR and Jitter

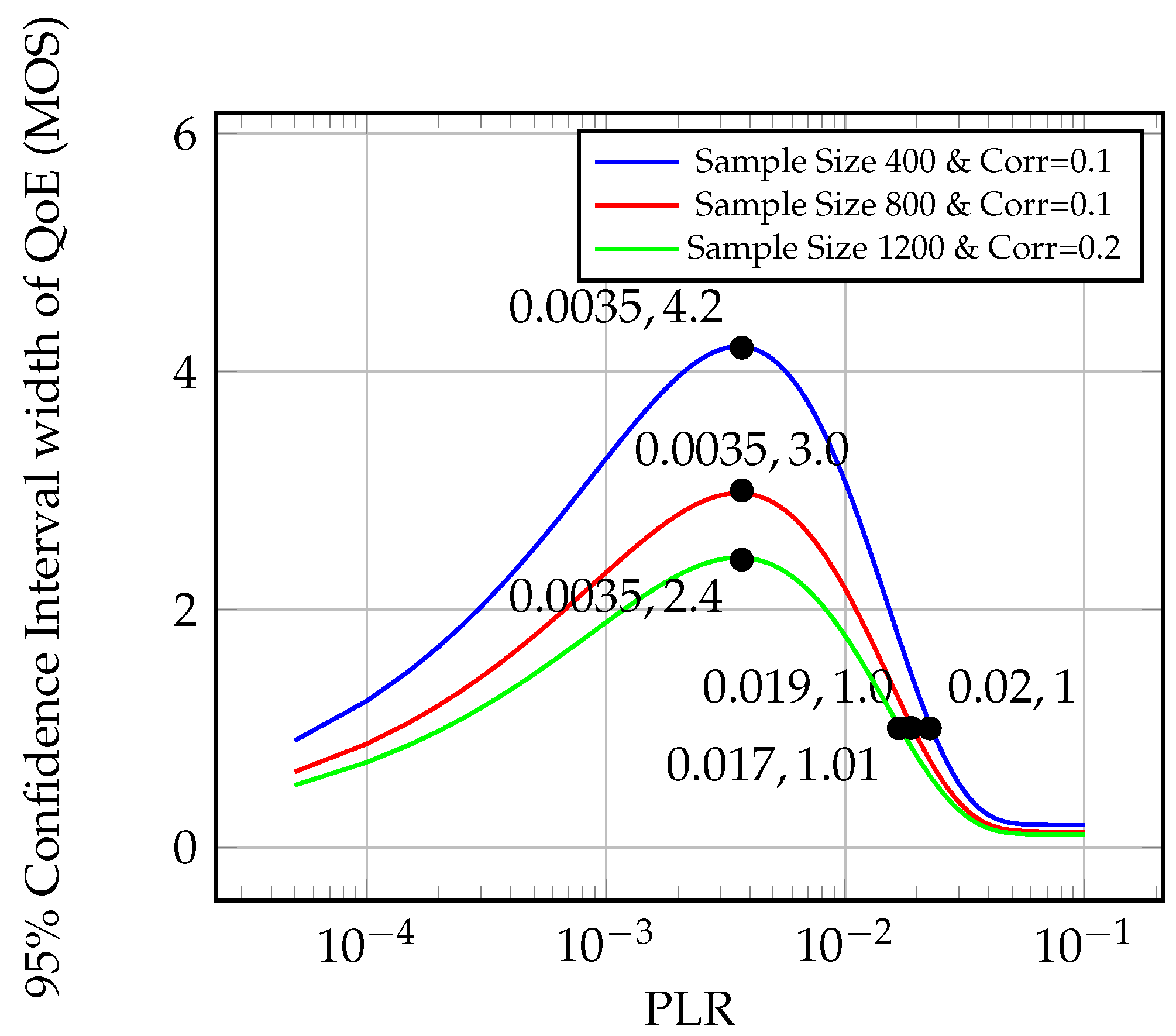

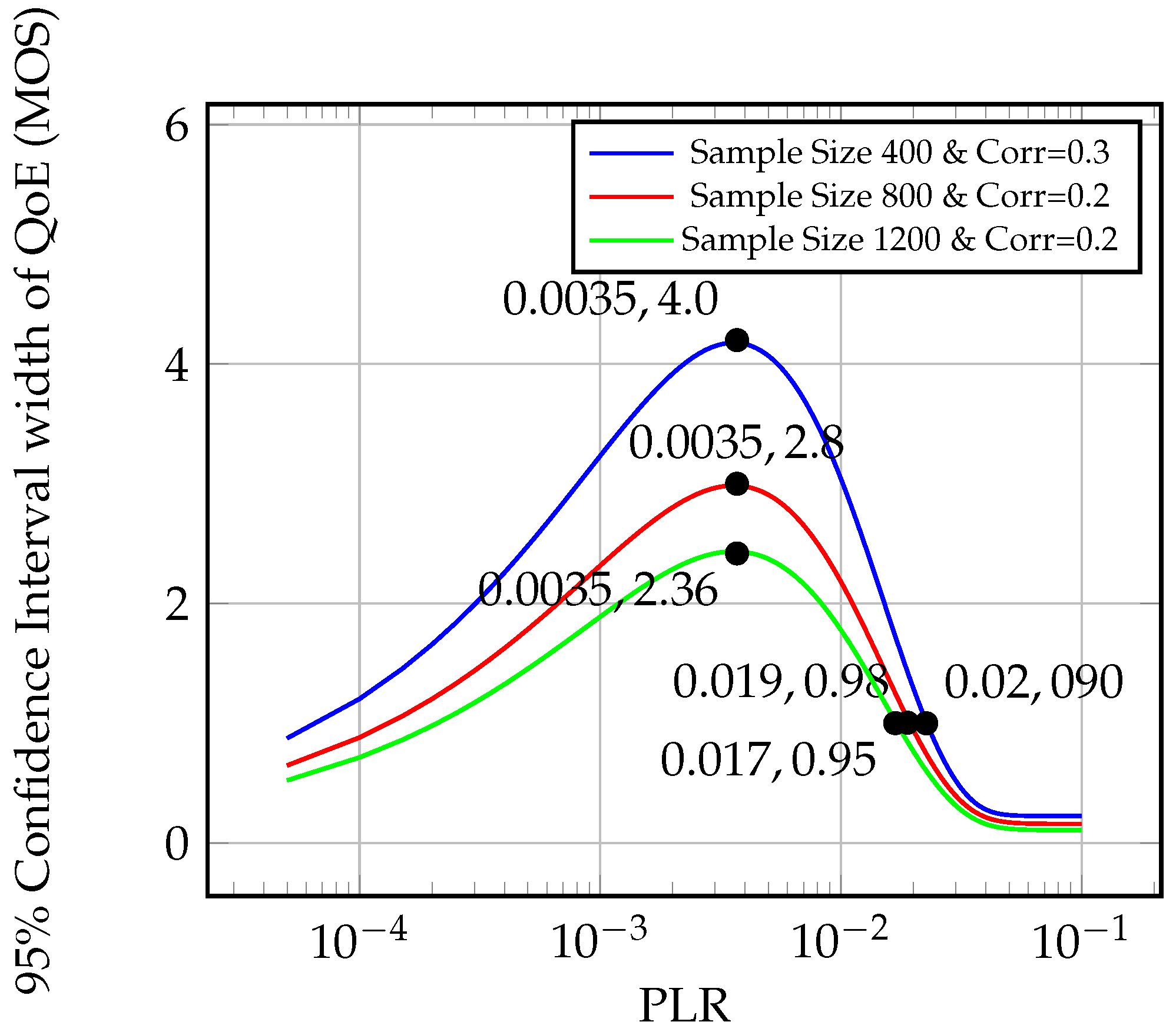

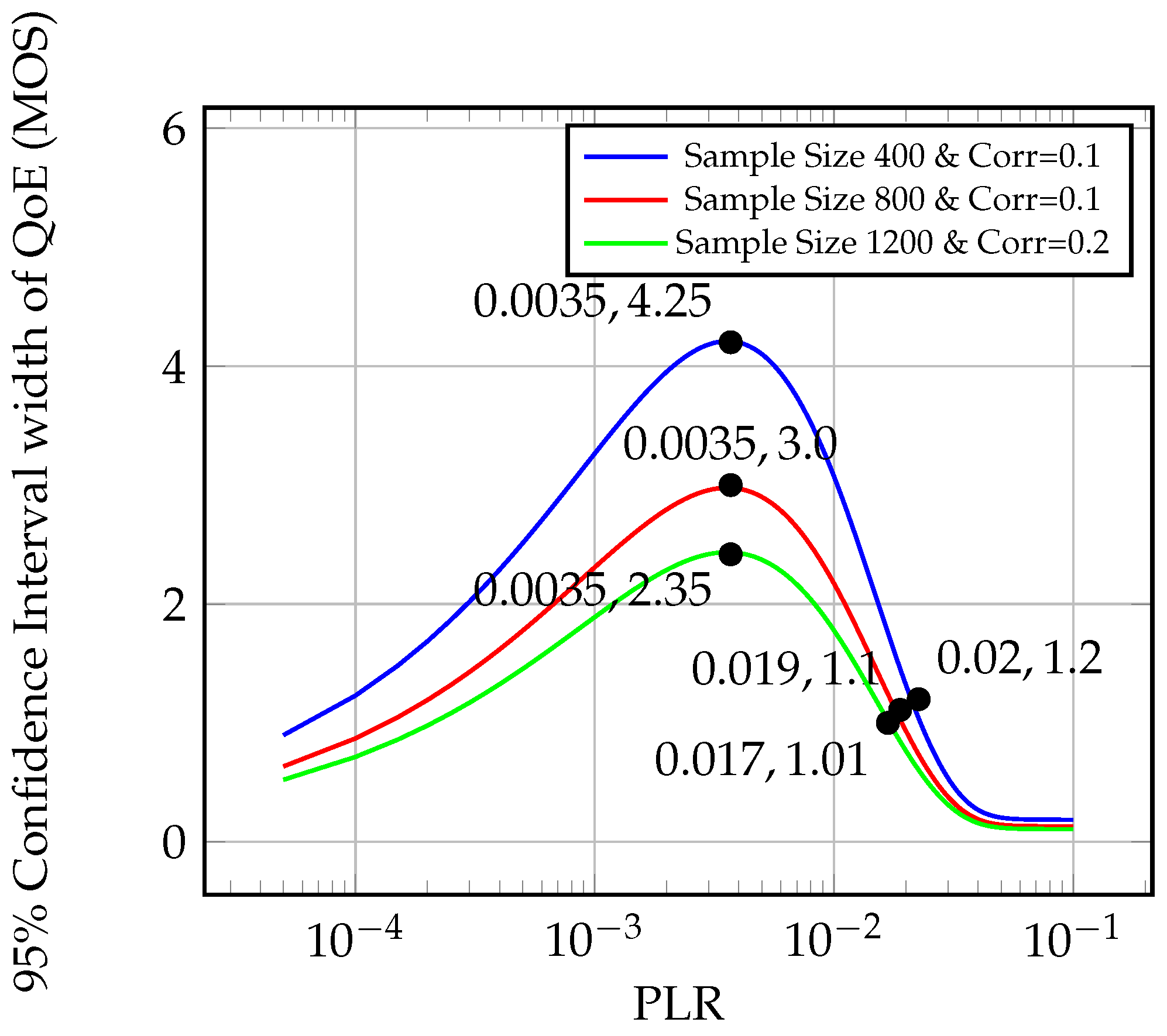

- Uncertainty in QoE (measured as CI width) peaks between PLR = 0.001 and PLR = 0.01. This is significant for network and service operators because the mean PLR written into most SLAs falls in the same range, 0.001 < PLR < 0.01 [35].

- On either side of this peak, the CI width in QoE diminishes rapidly as PLR increases or decreases.

- This shape is constant regardless of the jitter value, from very small (10 ms) to very large (80 ms) jitter values.

- The shape is also consistent regardless of the value of PCC, from very small (10%) to relatively large (30%).

- The absolute predicted 95% CI width in QoE decreases with increasing sample size. However, for a sample size of 800 (the UK sampling guideline), the CI width in QoE is large and peaks at around 3 units of MOS.
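The dependence of CI width on PLR and sample size can be illustrated with a short first-order calculation. This is a sketch under stated assumptions, not the authors' procedure: the coefficients tabulated in this article for the PLR operating points are read here as the sensitivity of QoE to PLR, losses are assumed independent so that the PLR standard error is binomial, and the jitter and correlation terms are left out. The names `SENSITIVITY`, `plr_standard_error`, and `qoe_ci_width` are hypothetical.

```python
import math

# PLR operating point -> coefficient tabulated in this article, interpreted
# here as dQoE/dPLR (an assumption of this sketch).
SENSITIVITY = {
    0.0005: -562.7,
    0.001: -519.8,
    0.005: -293.4,
    0.01: -155.2,
    0.05: -1.6,
}

Z_95 = 1.96  # two-sided 95% normal quantile


def plr_standard_error(plr, n):
    """Binomial standard error of a loss ratio estimated from n probes,
    assuming independent losses (an assumption of this sketch)."""
    return math.sqrt(plr * (1.0 - plr) / n)


def qoe_ci_width(plr, n):
    """First-order propagated 95% CI width in QoE, in MOS units."""
    return 2.0 * Z_95 * abs(SENSITIVITY[plr]) * plr_standard_error(plr, n)


for n in (800, 8000):
    widths = {p: qoe_ci_width(p, n) for p in SENSITIVITY}
    peak = max(widths, key=widths.get)
    row = ", ".join(f"{p}: {w:.2f}" for p, w in widths.items())
    print(f"n={n}: {row} (peak at PLR={peak})")
```

Under these assumptions the n = 800 case peaks at the PLR = 0.005 operating point with a width of roughly 2.9 MOS, and the width shrinks in proportion to the inverse square root of the sample size.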

4.2. Propagated Uncertainty in QoE due to Sub-Optimal Performance of NetEm

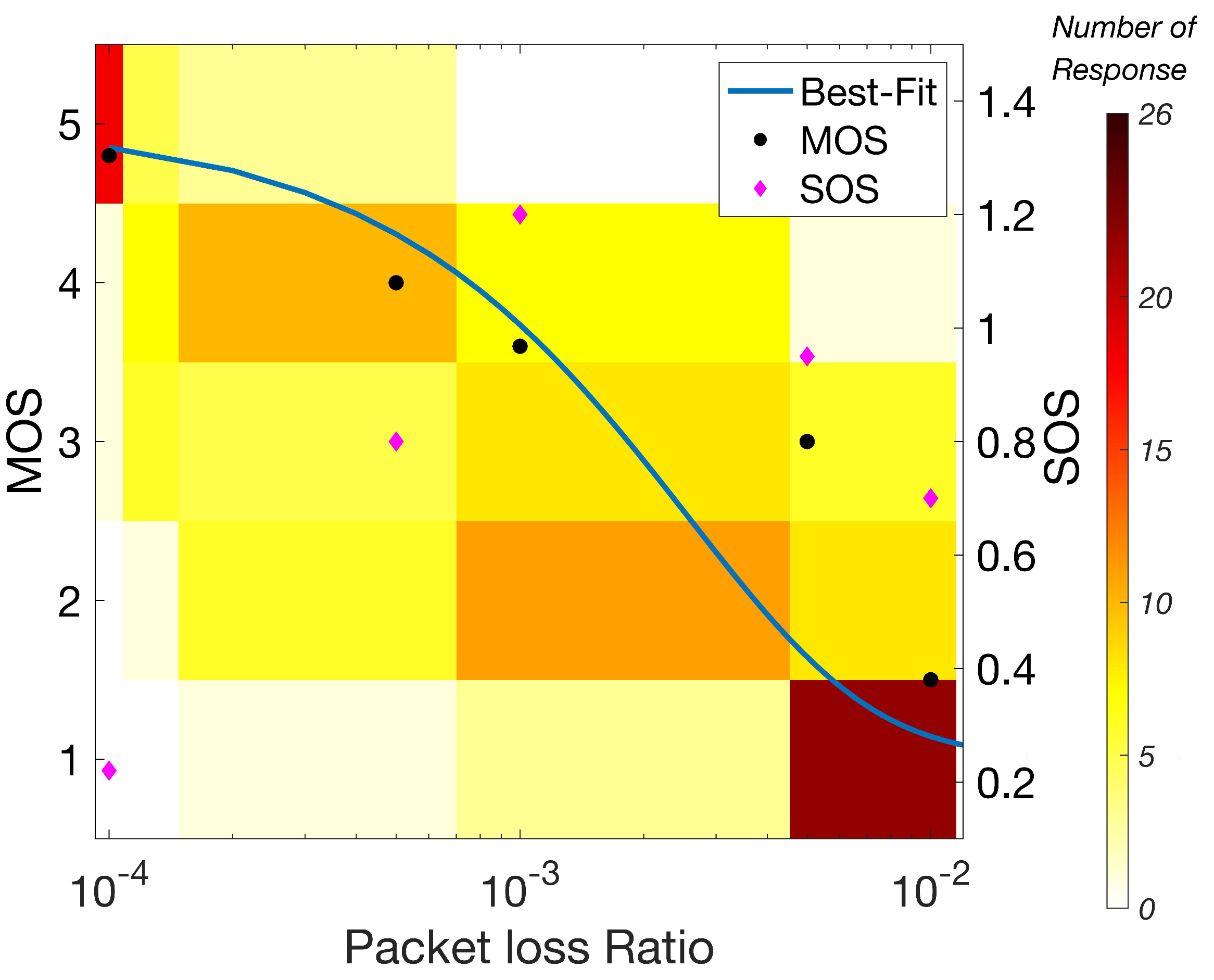

4.3. Variation in QoE Evaluation Due to Perception

5. Conclusions

Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACR | Absolute Category Rating |
| CI | Confidence Interval |
| GoF | Goodness of Fit |
| ITU-T | International Telecommunication Union, Telecommunication Standardization Sector |
| MOS | Mean Opinion Score |
| OTT | Over-the-Top |
| PCC | Pearson Correlation Coefficient |
| PLR | Packet Loss Ratio |
| QoE | Quality of Experience |
| QoS | Quality of Service |
| SLA | Service Level Agreement |
| SOS | Standard deviation of Opinion Score |
| SRCC | Spearman Rank Correlation Coefficient |
| SSE | Sum of Squared estimate of Errors |
| UDP | User Datagram Protocol |
| TCP | Transmission Control Protocol |
| VoD | Video-on-Demand |

References

1. Watson, A. Number of Netflix Paying Streaming Subscribers Worldwide from 3rd Quarter 2011 to 4th Quarter 2019. Available online: https://www.statista.com/statistics/250934/quarterly-number-of-netflix-streaming-subscribers-worldwide (accessed on 12 February 2020).

2. Telefonica: One Step Ahead in E2E QoE Assurance. Available online: https://www.huawei.com/uk/industry-insights/outlook286/customer-talk/hw_279053 (accessed on 24 August 2019).

3. Wireline Video QoE Using U-vMOS. Available online: https://ovum.informa.com/~/media/informa-shop-window/tmt/files/whitepapers/huawei_wireline_video_online.pdf (accessed on 20 February 2020).

4. Witbe QoE Snapshot #5: What Is the Best Neighborhood in London to Watch Videos on Mobile Devices? Available online: http://www.witbe.net/2019/05/14/snapshot5 (accessed on 1 March 2020).

5. Timotijevic, T.; Leung, C.M.; Schormans, J. Accuracy of measurement techniques supporting QoS in packet-based intranet and extranet VPNs. IEE Proc. Commun. 2004, 151, 89–94.

6. Lan, H.; Ding, W.; Gong, J. Useful Traffic Loss Rate Estimation Based on Network Layer Measurement. IEEE Access 2019, 7, 33289–33303.

7. Parker, B.; Gilmour, S.G. Optimal design of measurements on queueing systems. Queueing Syst. 2015, 79, 365–390.

8. Farrance, I.; Frenkel, R. Uncertainty of Measurement: A Review of the Rules for Calculating Uncertainty Components through Functional Relationships. Clin. Biochem. Rev. 2012, 33, 49–75.

9. Bell, S. Beginner’s Guide to Uncertainty of Measurements; National Physical Laboratory: Teddington, UK, 2013; pp. 1–10.

10. Harding, B.; Tremblay, C.; Cousineau, C. Standard errors: A review and evaluation of standard error estimators using Monte Carlo simulations. Quant. Methods Psychol. 2014, 10, 107–123.

11. Roshan, M. Experimental Approach to Evaluating QoE. Ph.D. Thesis, Queen Mary University of London, London, UK, 2018.

12. Khorsandroo, S.; Noor, R.; Khorsandroo, S. A generic quantitative relationship between quality of experience and packet loss in video streaming services. In Proceedings of the 2012 Fourth International Conference on Ubiquitous and Future Networks (ICUFN), Phuket, Thailand, 4–6 July 2012; pp. 352–356.

13. Saputra, Y.M. The effect of packet loss and delay jitter on the video streaming performance using H.264/MPEG-4 Scalable Video Coding. In Proceedings of the 2016 10th International Conference on Telecommunication Systems Services and Applications (TSSA), Denpasar, Indonesia, 6–7 October 2016; pp. 1–6.

14. Pal, D.; Vanijja, V. Effect of network QoS on user QoE for a mobile video streaming service using H.265/VP9 codec. Procedia Comput. Sci. 2017, 111, 214–222.

15. Ickin, S.; Vogeleer, K.D.; Fiedler, M. The effects of Packet Delay Variation on the perceptual quality of video. In Proceedings of the IEEE Local Computer Network Conference, Denver, CO, USA, 10–14 October 2010; pp. 663–668.

16. Koukoutsidis, I. Public QoS and Net Neutrality Measurements: Current Status and Challenges Toward Exploitable Results. J. Inf. Policy 2015, 5, 245–286.

17. McDonald, J.H. Handbook of Biological Statistics. Available online: http://www.biostathandbook.com/confidence.html (accessed on 6 May 2020).

18. Briggs, W. Uncertainty: The Soul of Modelling, Probability and Statistics; Springer: Berlin/Heidelberg, Germany, 2016; pp. 140–200.

19. Video Quality of Service (QOS) Tutorial. Available online: https://www.cisco.com/c/en/us/support/docs/quality-of-service-qos/qos-video/212134-Video-Quality-of-Service-QOS-Tutorial.pdf (accessed on 1 January 2020).

20. Verma, S. Flow Control for Video Applications, in Internet Congestion Control; Morgan Kaufmann Publishers: Burlington, MA, USA, 2015; pp. 173–180.

21. Xu, Z.; Zhang, A. Network Traffic Type-Based Quality of Experience (QoE) Assessment for Universal Services. Appl. Sci. 2019, 9, 4107.

22. Orosz, P.; Skopko, T.; Nagy, Z. A No-reference Voice Quality Estimation Method for Opus-based VoIP Services. Int. J. Adv. Telecommun. 2014, 7, 12–21.

23. Hossfeld, T.; Heegaard, P.E.; Varela, M. QoE beyond the MOS: Added Value Using Quantiles and Distributions. In Proceedings of the 2015 Seventh International Workshop on Quality of Multimedia Experience (QoMEX), Pylos-Nestoras, Greece, 26–29 May 2015; pp. 1–6.

24. Seufert, M. Fundamental Advantages of Considering Quality of Experience Distributions over Mean Opinion Scores. In Proceedings of the 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), Berlin, Germany, 5–7 June 2019; pp. 1–6.

25. Mondragon, R.; Moore, A.; Pitts, J.; Schormans, J. Analysis, simulation and measurement in large-scale packet networks. IET Commun. 2009, 3, 887–905.

26. Sommers, J.; Barford, P.; Duffield, N. Improving accuracy in end-to-end packet loss measurement. In Proceedings of the 2005 Conference on Applications, Technologies, Architectures, and Protocols for Computer Communications, Philadelphia, PA, USA, 22–26 August 2005; pp. 157–168.

27. Nguyen, H.X.; Roughan, M. Rigorous Statistical Analysis of Internet Loss Measurements. IEEE Trans. Netw. 2013, 21, 734–745.

28. Wahid, A.A.; Schormans, J. Bounding the maximum sampling rate when measuring PLP in a packet buffer. In Proceedings of the 2013 5th Computer Science and Electronic Engineering Conference (CEEC), Colchester, UK, 17–18 September 2013; pp. 115–118.

29. ITU. ITU-T Rec. G.1032, Influence Factors on Gaming Quality of Experience; ITU: Geneva, Switzerland, 2017.

30. Ahn, S.; Fessler, J.A. Standard Errors of Mean, Variance, and Standard Deviation Estimators. 2003. Available online: https://web.eecs.umich.edu/fessler/papers/files/tr/stderr.pdf (accessed on 15 December 2019).

31. Jurgelionis, A.; Laulajainen, J.-P.; Hirvonen, M.; Wang, A.I. An Empirical Study of NetEm Network Emulation Functionalities. In Proceedings of the 20th International Conference on Computer Communications and Networks (ICCCN), Maui, HI, USA, 31 July–4 August 2011.

32. ITU. ITU-T Rec. P.910, Subjective Video Quality Assessment Methods for Multimedia Applications; ITU: Geneva, Switzerland, 2018.

33. ITU. ITU-R Rec. BT.500, Methodologies for the Subjective Assessment of the Quality of Television Images; ITU: Geneva, Switzerland, 2019.

34. PSN Quality of Service (QoS) Testing and Reporting. Available online: https://www.gov.uk/government/publications/psn-quality-of-service-qos-testing-and-reporting/psn-quality-of-service-qos-testing-and-reportingtest-requirements (accessed on 10 December 2019).

35. ITU. Telecommunication Development Sector. Quality of Service Regulation Manual; ITU: Geneva, Switzerland, 2017.

36. Hossfeld, T.; Schatz, R.; Egger, S. SOS: The MOS is not enough! In Proceedings of the 2011 Third International Workshop on Quality of Multimedia Experience, Mechelen, Belgium, 7–9 September 2011.

37. Lübke, R.; Büschel, P.; Schuster, D.; Schill, A. Measuring accuracy and performance of network emulators. In Proceedings of the 2014 IEEE International Black Sea Conference on Communications and Networking (BlackSeaCom), Odessa, Ukraine, 27–30 May 2014; pp. 63–65.

| Service Provider | PCC between Jitter and PLR | SRCC between Jitter and PLR |
|---|---|---|
| A | 0.22 | 0.18 |
| B | 0.19 | 0.16 |
| C | 0.28 | 0.19 |
| D | 0.35 | 0.25 |
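The two coefficients in the table above can be computed from paired jitter and PLR measurements as in the following sketch. The sample values are hypothetical and are not data from the article; the functions `pearson`, `average_ranks`, and `spearman` are illustrative helpers written with the standard library only. SRCC is computed as the PCC of the ranks, with tied values sharing an average rank.

```python
import math


def pearson(xs, ys):
    """Pearson Correlation Coefficient (PCC) of two equal-length samples."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def average_ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman Rank Correlation Coefficient (SRCC): PCC of the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))


# Hypothetical per-interval measurements (not data from the article).
jitter_ms = [12.0, 15.5, 11.0, 30.2, 18.4, 22.1, 14.3, 40.0]
plr = [0.001, 0.002, 0.001, 0.004, 0.009, 0.003, 0.0, 0.006]

print(f"PCC={pearson(jitter_ms, plr):.2f} SRCC={spearman(jitter_ms, plr):.2f}")
```

PCC measures linear association, while SRCC measures monotonic association and is less sensitive to outliers, which is one reason the two columns can differ for the same provider.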

| PLR Operating Point | $\partial \mathrm{QoE} / \partial \mathrm{PLR}$ (MOS) |
|---|---|
| 0.0005 | −562.7 |
| 0.001 | −519.8 |
| 0.005 | −293.4 |
| 0.01 | −155.2 |
| 0.05 | −1.6 |

| Jitter Operating Point (ms) | $\partial \mathrm{QoE} / \partial \mathrm{Jitter}$ (MOS/ms) |
|---|---|
| 10 | −0.06 |
| 20 | −0.05 |
| 40 | −0.03 |
| 80 | −0.01 |
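The role of the per-operating-point coefficients in error propagation can be sketched with the standard first-order formula for two correlated inputs. This is an illustration under assumptions rather than the authors' exact computation: the tabulated coefficients are read as the partial derivatives of QoE with respect to PLR and jitter, the function name `propagated_se` is hypothetical, and the jitter standard error of 2 ms is an invented value used only to make the example concrete.

```python
import math


def propagated_se(dq_dplr, dq_djit, se_plr, se_jit, rho):
    """First-order propagated standard error of QoE for correlated inputs.

    var(QoE) ~ (dQ/dp * se_p)^2 + (dQ/dj * se_j)^2
               + 2 * rho * (dQ/dp) * (dQ/dj) * se_p * se_j
    """
    var = ((dq_dplr * se_plr) ** 2
           + (dq_djit * se_jit) ** 2
           + 2.0 * rho * dq_dplr * dq_djit * se_plr * se_jit)
    return math.sqrt(var)


# Operating point PLR = 0.005, jitter = 20 ms, using the tabulated coefficients.
DQ_DPLR = -293.4   # MOS per unit PLR
DQ_DJIT = -0.05    # MOS per ms
SE_PLR = 0.00036   # 0.036% absolute standard error, expressed as a ratio
SE_JIT = 2.0       # ms, hypothetical value for illustration only

for rho in (0.0, 0.1, 0.3):
    se = propagated_se(DQ_DPLR, DQ_DJIT, SE_PLR, SE_JIT, rho)
    print(f"rho={rho:.1f}: SE(QoE)={se:.3f} MOS, 95% CI width={2 * 1.96 * se:.3f} MOS")
```

Because both coefficients are negative, a positive correlation between PLR and jitter makes the cross term positive, so the propagated uncertainty grows with the correlation coefficient.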

| PLR Configuration (%) | Absolute Standard Error in PLR (%) | Relative Standard Error in PLR | Propagated Standard Error in QoE (MOS) | Propagated CI Width of QoE (MOS) |
|---|---|---|---|---|
| 0.05 | 0.016 | 0.320 | 0.10 | 0.40 |
| 0.10 | 0.015 | 0.150 | 0.10 | 0.40 |
| 0.50 | 0.036 | 0.072 | 0.12 | 0.45 |
| 1.00 | 0.074 | 0.074 | 0.23 | 0.50 |
| 5.00 | 0.160 | 0.032 | 0.02 | 0.10 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wahab, A.; Ahmad, N.; Schormans, J. Statistical Error Propagation Affecting the Quality of Experience Evaluation in Video on Demand Applications. Appl. Sci. 2020, 10, 3662. https://doi.org/10.3390/app10103662
