1. Introduction

The particle filter [

1] is an important probabilistic method for state tracking and estimation [

2,

3], being widely utilized in robotics. For instance, particle filters are widely applied in mobile robots’ self-localization [

4,

5] and visual tracking [

6,

7]. Three major factors greatly affect the performance of particle filters; namely, measurement model, state space, and motion model. The likelihood function in a measurement model is used for calculating the weights of particles and contributes to accurate state estimation. The particle filter distributes particles in the state space and performs estimation in the state space. The state space affects the number of particles that determines the computational cost of estimation. In addition, the motion model is applied in the state space for state tracking and enables the particle state update to the next state.

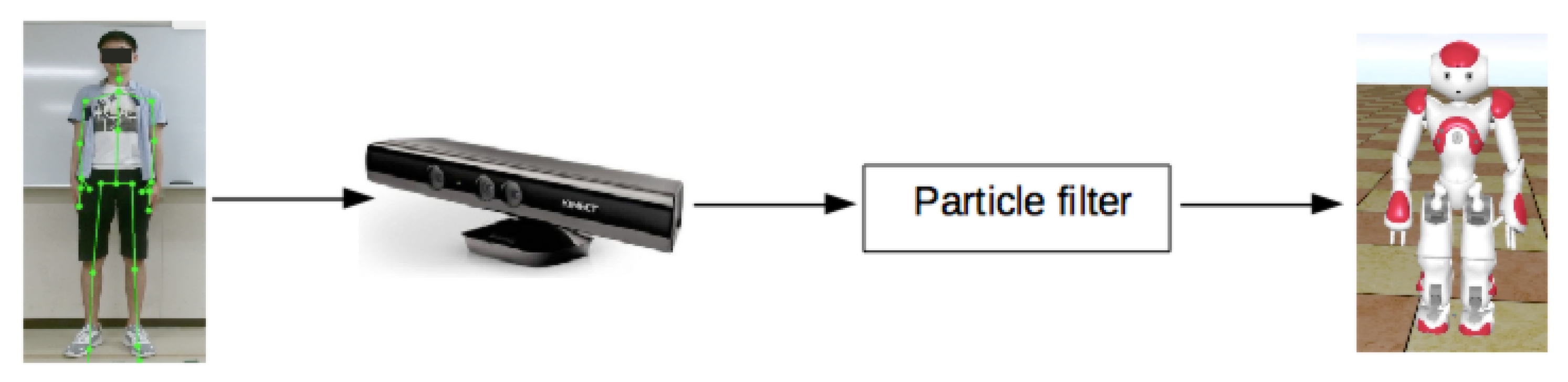

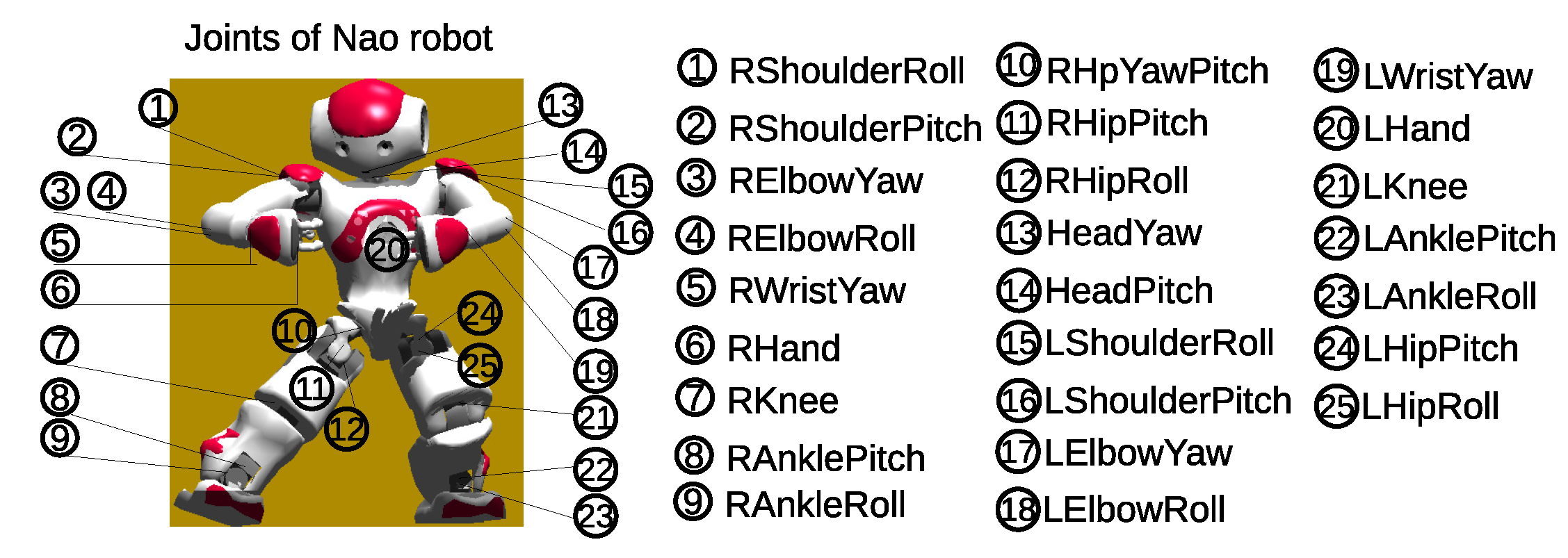

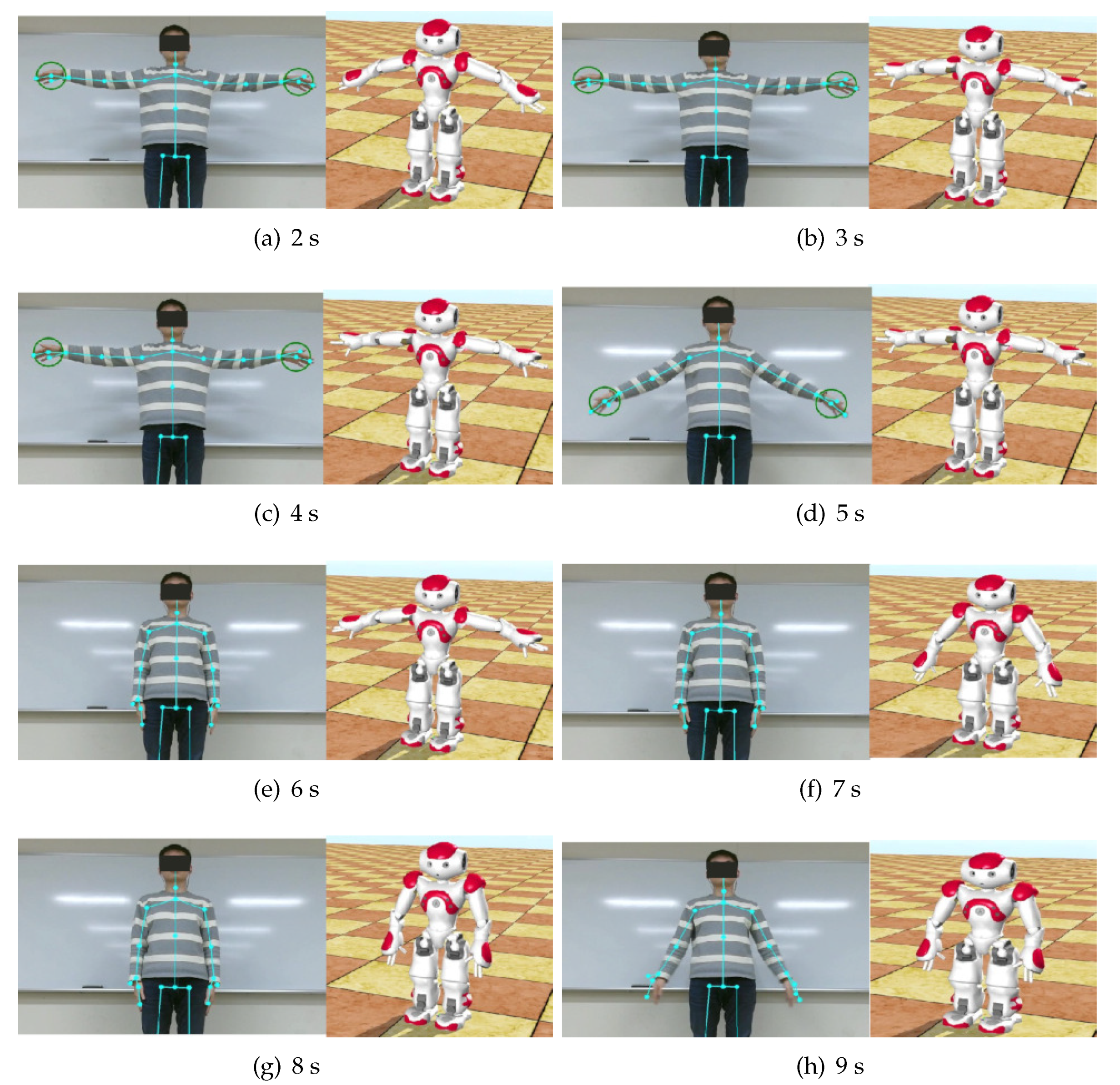

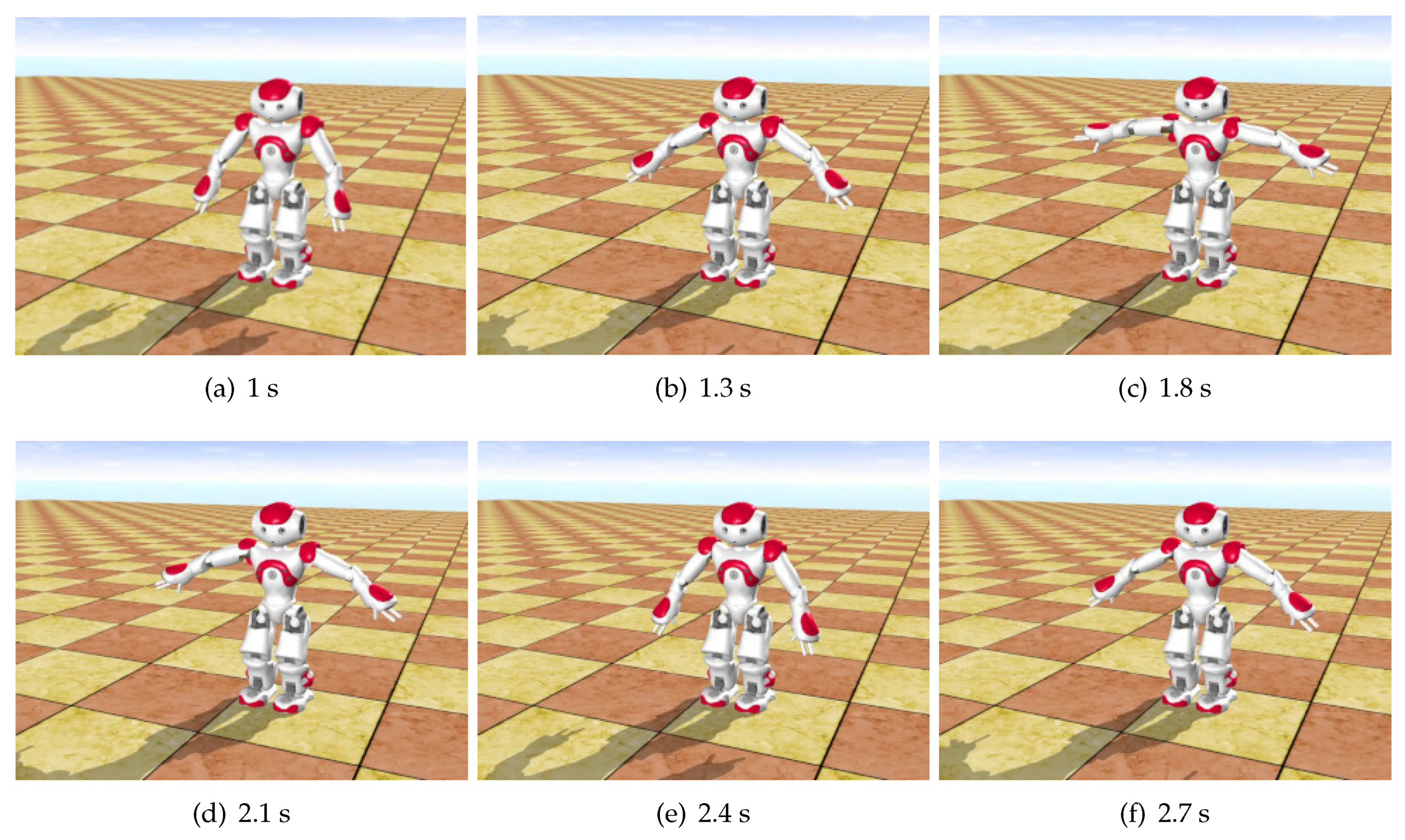

Figure 1 shows a real-time human motion imitation system using a particle filter with data acquired from a Microsoft Kinect sensor. The joint angles of the humanoid robot are estimated in real time to mirror the human motions. Note that a humanoid robot usually comprises dozens of joints, such as the NAO humanoid robot shown in

Figure 2, which has 25 actuated joints (degrees of freedom—DOFs). Conventionally, a particle filter would be applied to the 25-dimensional (25D) state space, including all the joints, to estimate the corresponding angles in the humanoid robot for imitation. This high-dimensional state space demands increasing the number of particles to ensure adequate accuracy. Consequently, the computational cost becomes relatively high, as state estimation with a particle filter is very complex for high-dimensional state spaces.

Usually, a particle filter applied to a high-dimensional state space requires many particles to perform accurate estimation, and the computational cost increases exponentially. The computational cost becomes a serious problem for humanoid robots given their usual structure with many DOFs. Hence, reducing the state space efficiently helps to constrain the computational cost of the particle filter. Such reduction can be achieved by replacing the high-dimensional state space with a low-dimensional and more efficient state space via various methods [

8].

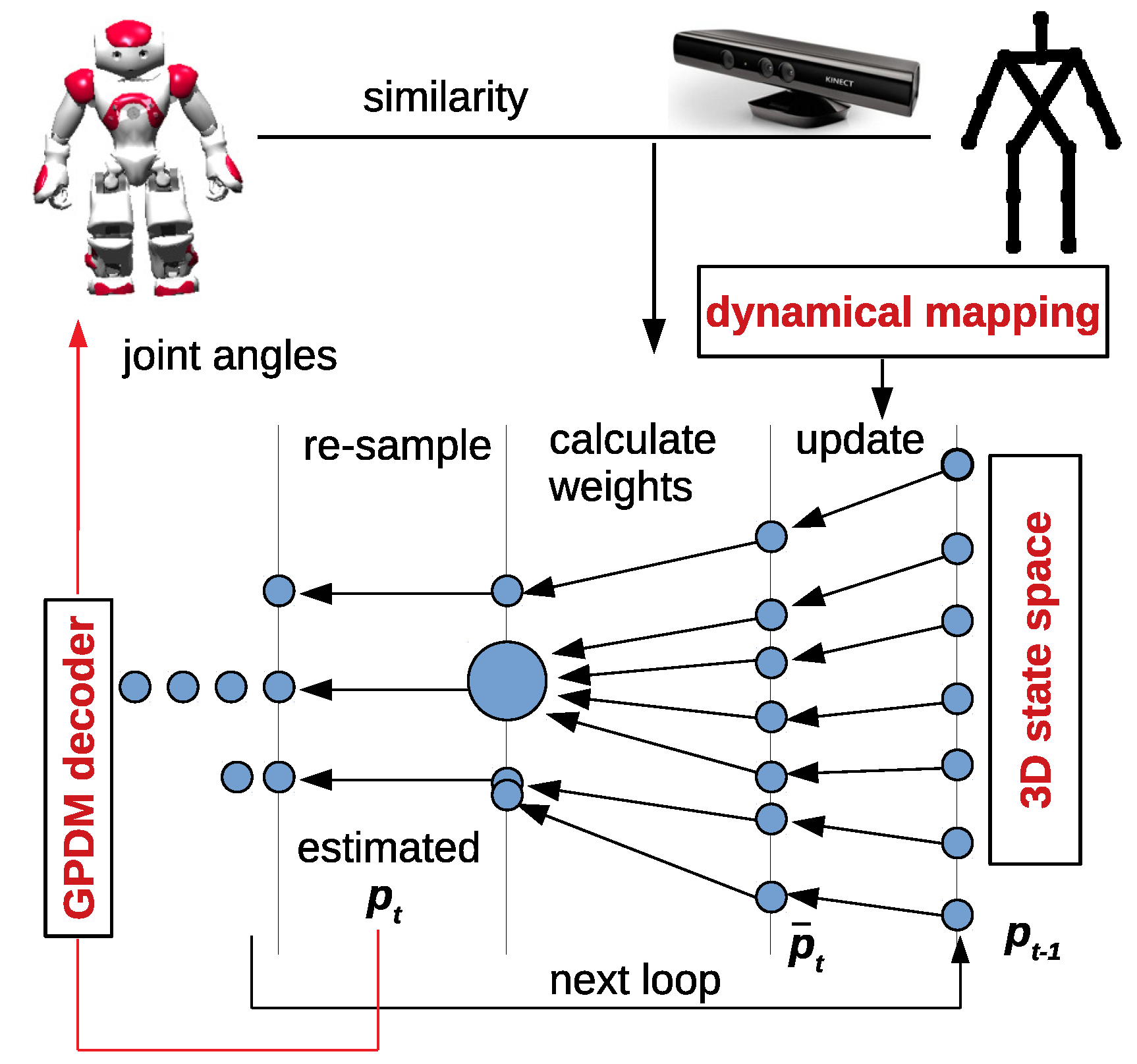

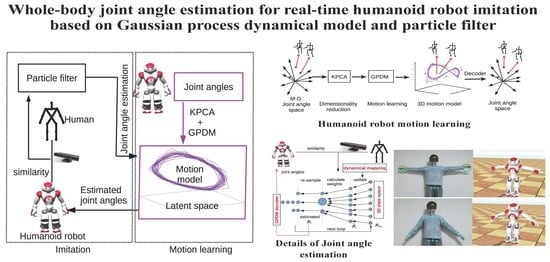

The goal of our work is to construct a real-time humanoid robot imitation system with low computational cost using a particle filter and a Gaussian process dynamical model (GPDM). One of the contributions of the work lies in combining a particle filter and GPDM. The high-dimensional state space is replaced by a GPDM learned, low-dimensional, latent state space to reduce the computational cost of state estimation using a particle filter. A GPDM learned model defines the motion model of the particle filter.

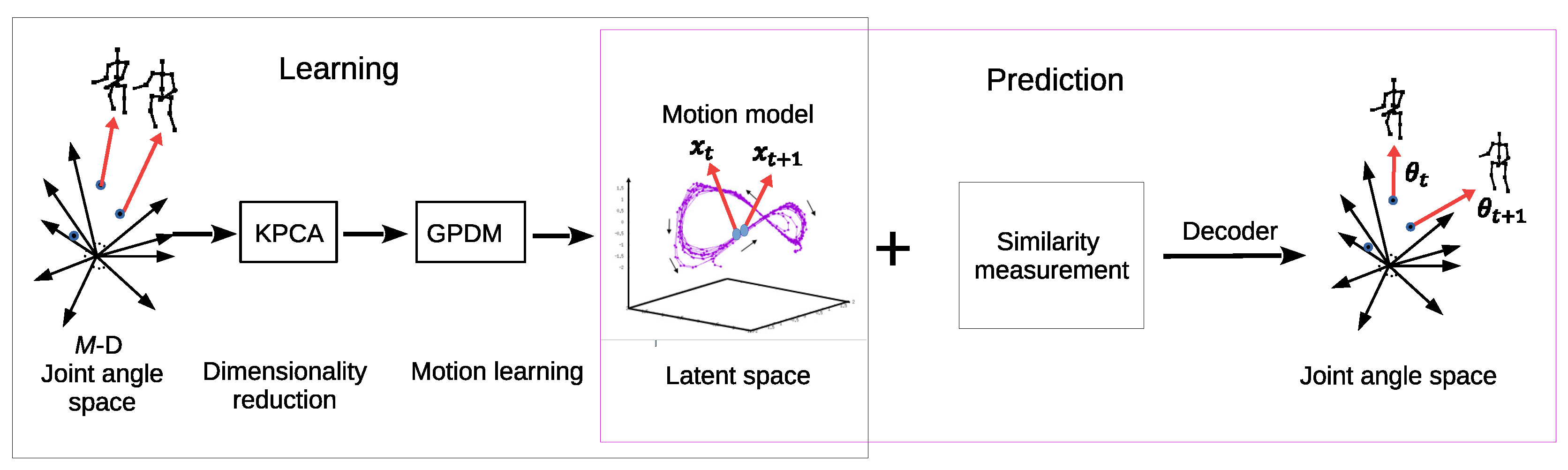

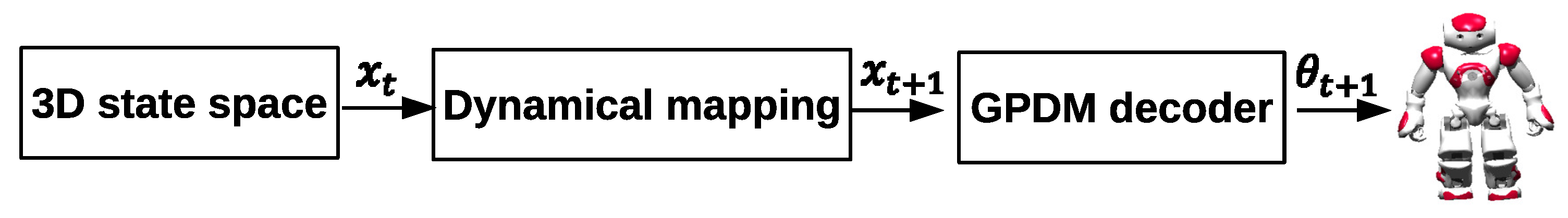

The architecture of the proposed system is illustrated in

Figure 3. Motion data of a humanoid robot are collected as sequences of joint angles. A low-dimensional latent space and a motion model are constructed based on the motion data. Then, a human demonstrator performs a motion in front of a motion capture system. Our system explores an appropriate set of joint angles for the humanoid robot to imitate the demonstrated motion in real time.

Principal component analysis (PCA) [

9] is an efficient and simple method for dimensionality reduction of data. Although it enables learning in low-dimensional latent space, it cannot provide a motion model for particle state tracking and may not be suitable for the nonlinear behavior of humanoid robot joints. Alternatively, kernel PCA [

10] allows efficiently learning nonlinear data. Still, it also lacks a motion model for state tracking. Hence, a dynamical motion model should be constructed to realize particle state tracking in the latent state space.

Gaussian process dynamical models (GDPMs) [

11] are efficient for motion learning and suitable for dynamical motion modeling in the latent state space. Urtasunet et al. [

12] and Kim et al. [

13] proved that human dynamical motion models can be constructed in a low-dimensional latent space. Humanoid robot dynamical motion models were learned in the research by Mi and Takahashi [

14] by using kernel PCA-GPDM. Compared with conventional neural network methods, GPDM has advantages over learning motion models with modest amounts of training data. It is efficient in motion learning and the time cost is much less.

In this study, the main function of GPDM is to construct a compact state space and build motion models. Then, we replace the state space of particle filter with kernel PCA-GPDM learned compact latent state space, and efficient humanoid robot motion models based on GPDMs are applied to state tracking in the learned latent state space based on a particle filter.

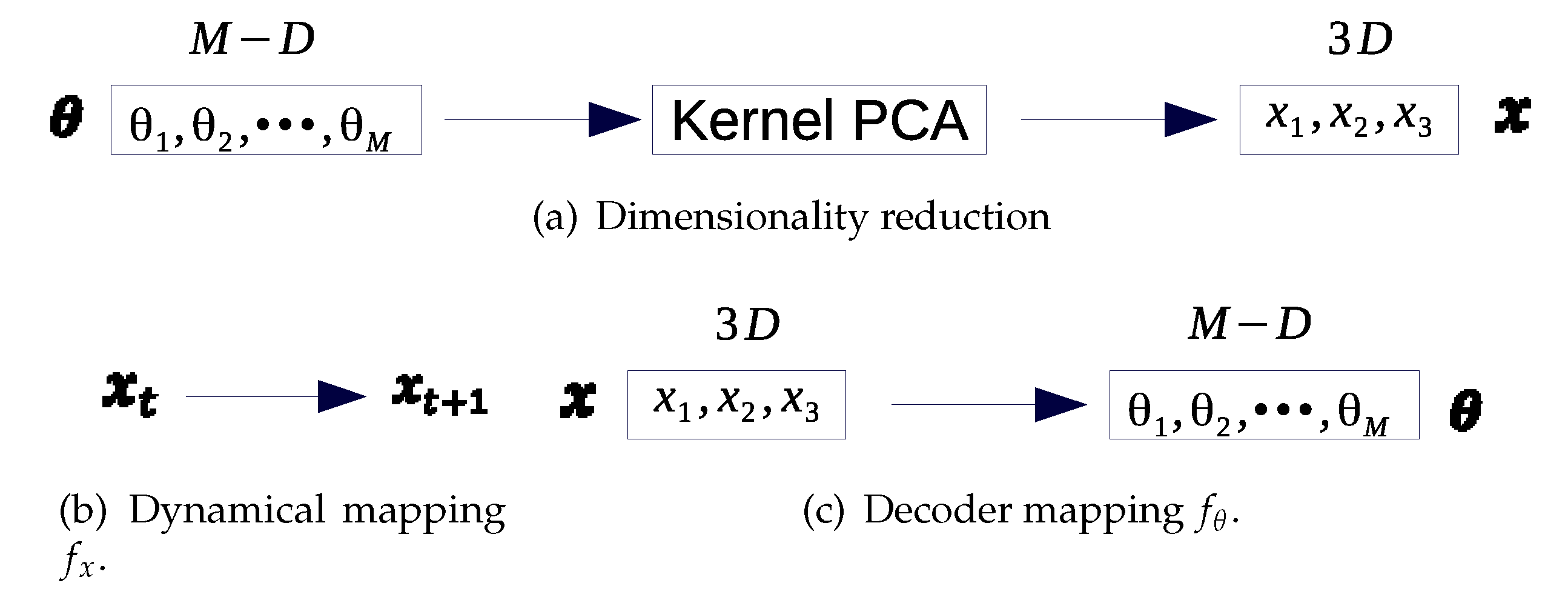

Following the architecture shown in

Figure 3, we use a particle filter and GPDM learning to realize high-dimensional humanoid robot state estimation in a reduced 3D latent space at low computational cost.

Figure 4 details the humanoid robot motion learning and prediction system. The high-dimensional space of humanoid robot joint angles is first reduced to a 3D state space using kernel PCA. Then, a 3D dynamical motion model is learned by GPDM. A decoder is used to reconstruct the humanoid robot joint angles to ensure that the learned 3D latent variables can be mapped onto the joint angle space, such that the learned motion models can be applied to particle-filter state estimation. A 3D latent state space is obtained for reducing the computational cost of robot state estimation. The learned 3D dynamical motion models are applied to the particle filter for particle state tracking. Specifically, a prediction system with similarity measurements based on the dynamical motion models is proposed for humanoid robot state estimation.

The main contributions of this paper can be summarized as follows.

We construct a real-time humanoid robot imitation system with low computational cost based on particle filter and GPDM. The high-dimensional state space is replaced by the GPDM learned compact state space and the GPDM model defines the motion model of the particle filter.

A prediction system with similarity measurement for state tracking of the particle filter is proposed to improve state estimation accuracy.

Experiments demonstrate that the proposed particle filter can estimate the joint angles of a humanoid robot efficiently using the GPDM in the 3D state space for real-time imitation of human motion.

2. Related Works

Mi and Takahashi [

15] performed robot state estimation with particle filter in a 3D state space. However, the conventional particle filter suffers the problem of a high computational cost when a particle filter is applied in high-dimensional state space. Su et al. [

6] combined a particle filter and a visual significance model to solve the sudden movement tracking problem. By embedding a visual significance model into particle filter, their method was efficient in handling abrupt motion tracking problems. However, their particle filter method was also applied in a 3D state space so that it suffers the same problem as the conventional particle filter. In addition, their method is not suitable for estimating high-dimensional humanoid robot joint angles. Zhang [

7] proposed a novel correction particle filter (CPF) for robust visual tracking. Similarly to the method in [

6], the CPF method was efficient at handling partial occlusion and scale variation for object tracking; however, it is not suitable for constructing a real-time humanoid robot imitation system.

Cao et al. [

16] proposed an approach to detect 2D pose of people in an image. Mehta et al. [

17] presented a real-time method to capture the full global 3D skeletal pose of a human using a single RGB camera. Those studies used a convolutional neural network (CNN) method to estimate human pose. Unlike those studies, we aimed to estimate a humanoid robot’s pose instead of a human’s pose. There is no necessity to build complex neural networks to capture human poses for the purpose of estimating humanoid robot joint angles. A RGB-D camera, such as Kinect, is more convenient for us to capture human poses.

Capecci et al. [

18] used a RBG-D camera to extract human motion features and developed a hidden semi-Markov model (HSMM) based approach to evaluate the performance of people affected by motor disabilities. They proposed a HSMM based method to model human motions for the purpose of evaluating the correctness of rehabilitation exercises. Unlike their research, for the purpose of constructing a real-time imitation system with low computational cost, we combine GPDM and particle filter to estimate high-dimensional humanoid robot joint angles. We first model humanoid robot motions in a compact state space using GPDM. Then, the estimation is performed in a GPDM learned compact state space, and finally, we restore the joint angles from the compact state space to the high-dimensional humanoid robot joint angle space.

Kondo and Takahashi [

8] proved that a high-dimensional state space can be replaced by a low-dimensional latent space learned by dimension reduction. They utilized an autoencoder, a type of artificial neural network, to acquire a 3D state space from the original high-dimensional space of the humanoid robot joint angles. A particle filter was then applied to the resulting 3D state space, achieving state estimation of the humanoid robot with a relatively low computational cost. Unfortunately, the autoencoder constructed the 3D state space to replace the high-dimensional space without the required motion models for particle state tracking. Therefore, they assumed that motion in the 3D space was random according to a Gaussian distribution. An appropriate motion model realizes efficient state tracking from current state

to next state

.

Humanoid robot dynamical motion models were learned in the research by Mi and Takahashi [

14] by using kernel PCA-GPDM. In their research, they only constructed 3D humanoid robot motion models, but the learned motion models were not verified. In this paper, we combine the particle filter and GPDM to realize real-time humanoid robot imitation with low computational cost.

3. Humanoid Robot State Estimation Based on a Particle Filter

In this section, we first introduce a humanoid robot imitation system based on particle filter with neither dimension reduction of the state space nor motion model construction. Then, we highlight the drawbacks of this system, motivating the use of GPDM.

We used the NAO humanoid robot manufactured by Softbank Robotics for the experiments of state estimation in the imitation system. A Microsoft Kinect V2 sensor was used for acquiring human motion data. The joint angles of the humanoid robot were estimated by a particle filter, as the robot imitated human motion. Our experimental procedure is close to that by Kondo et al. [

8] and Takahashi and Sakakibara [

19]. The humanoid robot imitates human motions using the particle filter system, as shown in

Figure 1. Human skeleton joint data are obtained from the Kinect sensor, and the robot joint angles are estimated using the particle filter. The state space of the particle filter system has 25 dimensions, corresponding to the 25 actuated joints of the robot.

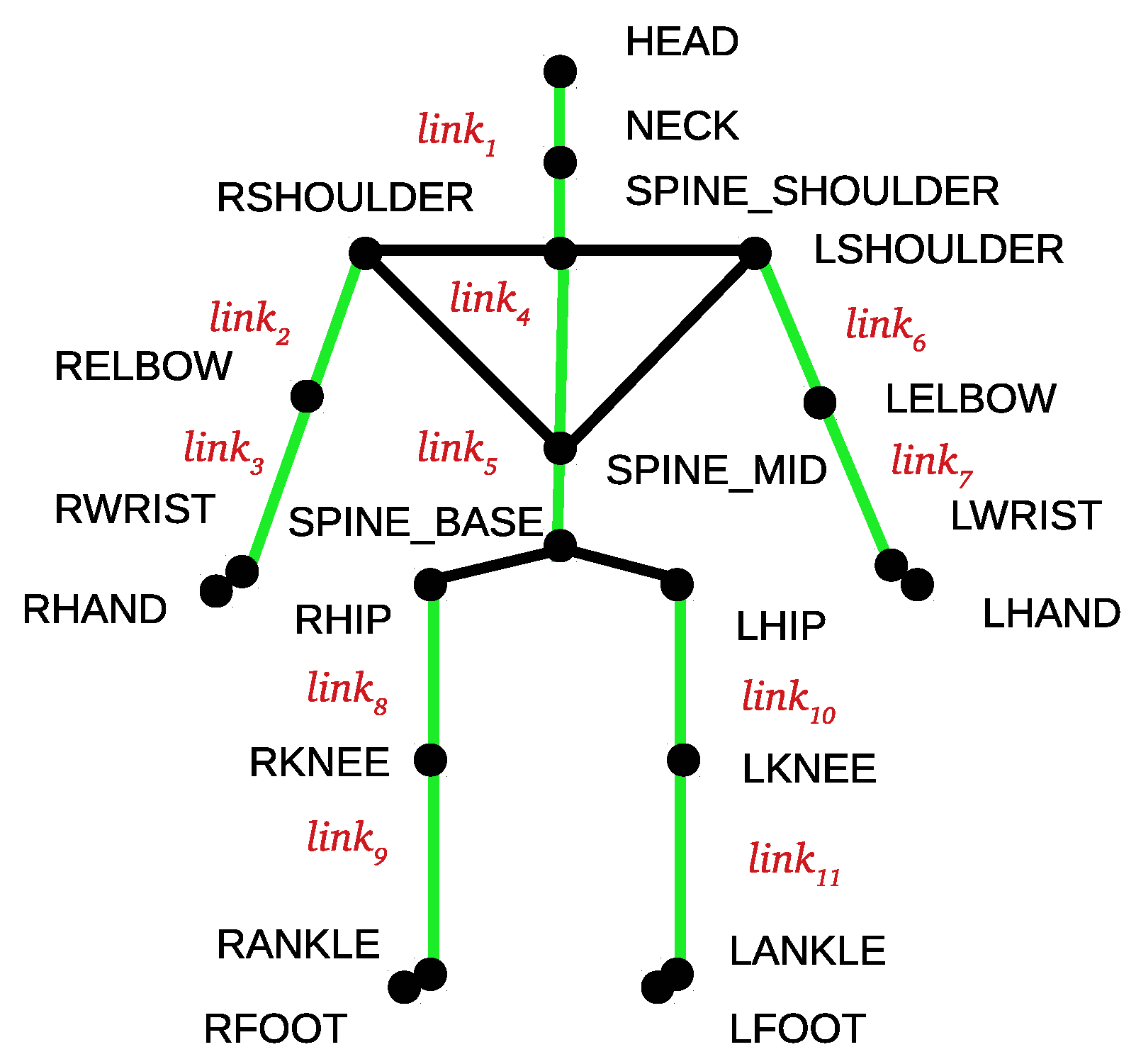

The human skeleton joints detected by the Kinect sensor are used for calculating the particle weights during the estimation of the humanoid robot joint angles. The joints of the human skeleton model are shown in

Figure 5. The elements in vector

are shown as green lines and correspond to the model links, which were used to calculate the similarity between the human and robot poses. The similarity is used for calculating the particle weights. Link

joins HEAD and SPINE_SHOULDER, and the other links, similarly join other skeleton joints to resemble the human body structure. The links of the humanoid robot are defined similarly, and the corresponding vector is denoted as

. Pose similarity

between the human and robot is calculated by a cosine similarity measurement:

where

is the number of links. Then, weight

w of a particle is calculated by the following likelihood function:

The particles are defined in a 25D joint angle space, and the particle set is denoted as

, where

Q is the number of particles. Each particle is given by

, where

M is number of the humanoid robot joints. The estimation process using particle filter is detailed in Algorithm 1.

A motion model is also important for particle state tracking in the particle filter. However, it is difficult to determine an analytical function for the 25D space of the robot joint angles. Instead, a simple motion model can be defined as

where

is the sampling time and

denotes Gaussian noise with zero mean and variance

. Below, we replace the motion model with one based on GPDM.

The joint angles of the humanoid robot can be estimated as the weighted mean of the particles:

where

is the weight of particle

q at time

t.

| Algorithm 1: Particle filter for humanoid robot joint estimation |

- 1:

Initialize particle sets and ; - 2:

for to Q do - 3:

Update particles based on motion model: ; - 4:

Calculate particle weights: ; - 5:

; - 6:

end for - 7:

Clear set ; - 8:

for to Q resample particles do - 9:

Draw q from with probability ; - 10:

Add to ; - 11:

end for - 12:

Return ;

|

The 25D state space leads to a very high computational cost of the particle filter. This hinders estimation of joint angles for humanoid robot imitation in real time.

Figure 6 shows an example sequence of humanoid robot joint angle estimation in real time using this implementation of the particle filter. As it is difficult to estimate 25 joint angles in real time directly, we estimated the joint angles of only the left and right arms, reducing the state space to 12 dimensions. In this case, the number of particles

. Even when only estimating 12 joint angles, a delay exists, as seen in

Figure 6. From

Figure 6c–e, the human motion takes about 2 s, whereas the humanoid robot needs approximately 4 s to follow the movement, with a substantial lag of approximately 2 s. Note that the computational cost would be even higher when estimating the 25 joint angles.

To reduce the computation time, we use GPDM learning to construct a reduced 3D state space with a humanoid robot dynamical motion model. Then, the humanoid robot joint angles are estimated in the learned 3D state space at low computational cost.

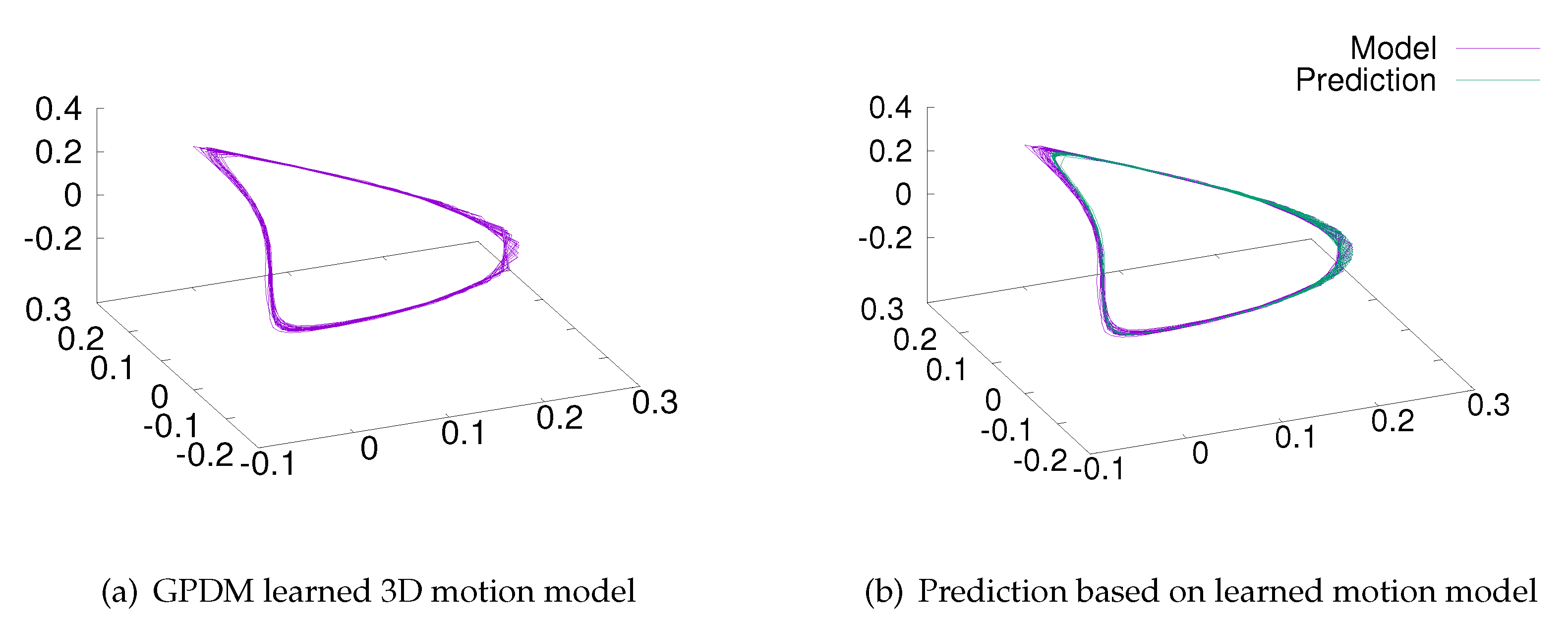

5. Motion Learned Using Gaussian Process Dynamical Model

We obtained the humanoid robot joint angles using the particle filter estimation detailed in

Section 3. The humanoid robot was supposed to wave its hands, as shown in

Figure 6. The learning data set

X comprised 500 frames of the humanoid robot joint angles selected from 1000 frames of continuous motion data.

The learning hyperparameters were initially set to

,

, and

(for more details, please see [

14]). The GPDM learned 3D motion models are illustrated in

Figure 8a. According to [

11], if learned trajectories are smooth, prediction based on the learned motion models tends to be accurate. We used the GPDM [

21] to make predictions, as Gaussian process prediction [

11] retrieves a dynamical mapping for state transition and GPDM decoder mapping to reconstruct the humanoid robot joint angles.

For dynamical mapping

in the 3D state space, state

is predicted from

as follows:

Similarly, for GPDM decoder mapping

, given latent state

, humanoid robot joint angle

can be reconstructed as

In dynamical prediction mapping

and decoder mapping

,

and

represent the corresponding variances.

and

are kernel functions.

and

are kernel matrix.

Figure 8b depicts the modeled and predicted 3D latent variables. The predicted latent variables are consistent and mostly overlap with the learned GPDM for motion. Hence, dynamical mapping

and the learned GPDM motion model are accurate.

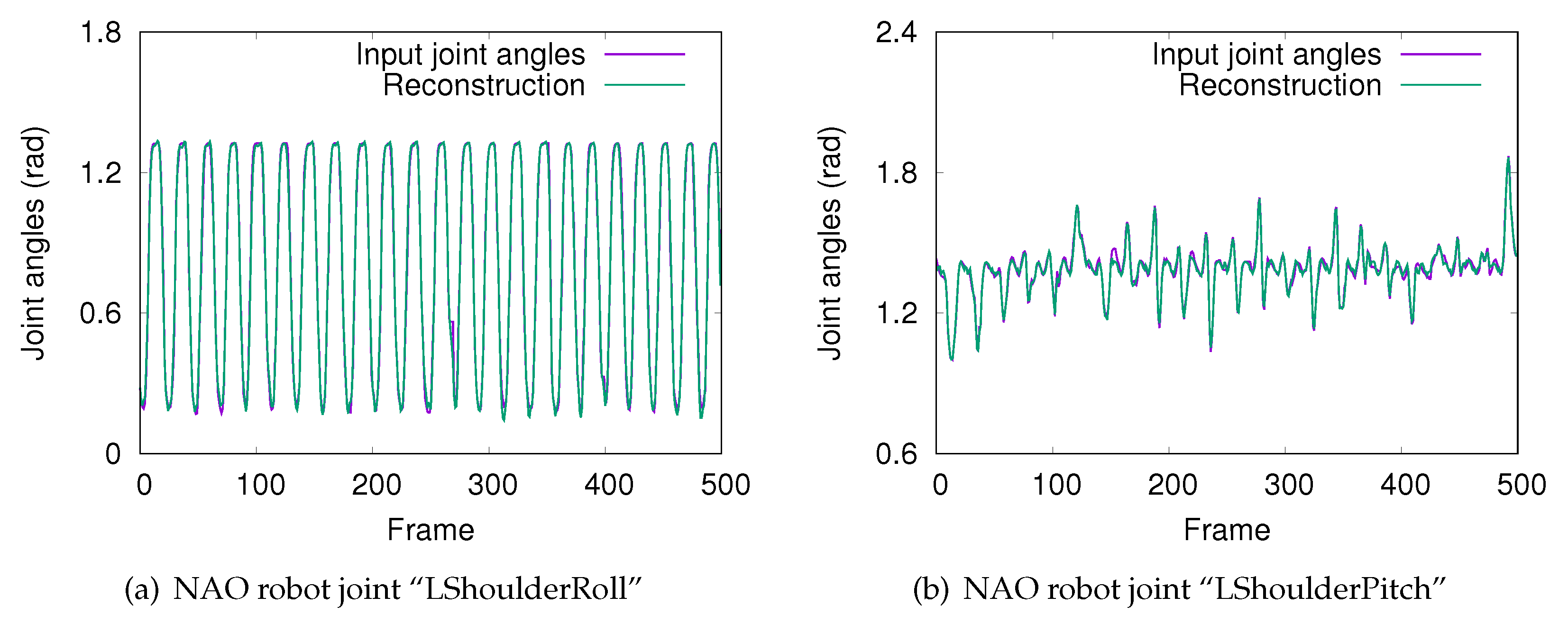

Figure 9 shows the angles from two joints of the humanoid robot reconstructed using GPDM decoder

, demonstrating the accuracy of decoder

.

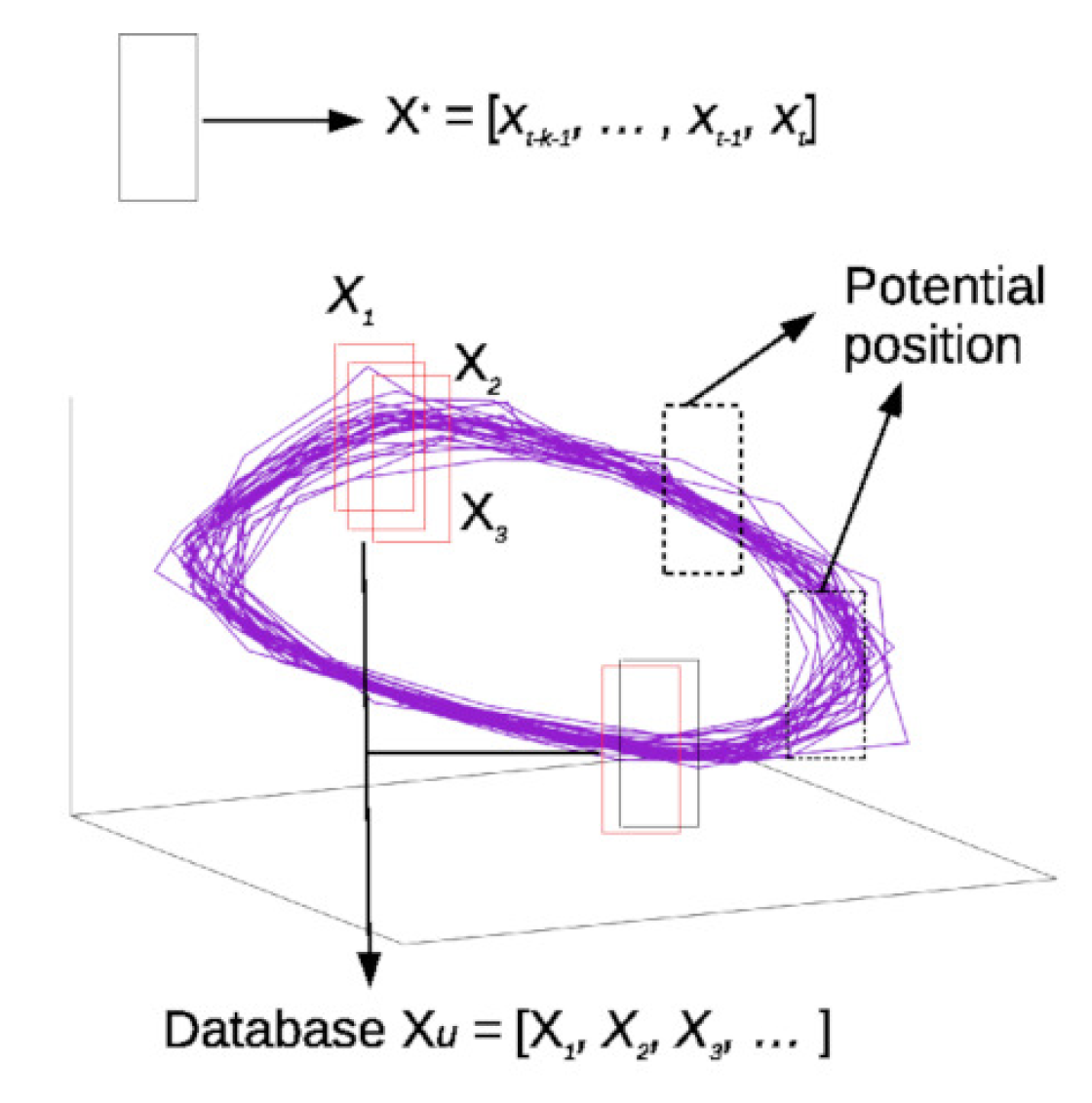

6. Joint Angle Prediction Using Gaussian Process Dynamical Motion Models

State

is used to predict

through the learned dynamical mapping,

.

Figure 10 shows the proposed state transition system for real-time robot imitation. The dynamical mapping enables state transition, and the GPDM decoder reconstructs the humanoid robot joint angles. Hence, we can predict the humanoid robot joint angles in real time by map

.

In addition, we devised a similarity measurement module to improve the prediction accuracy of the humanoid robot joint angles, as the accuracy from discrete state

is limited. The similarity measurement determines the position of

in the learned motion model. A sequence of states

is used instead of discrete state

, with

k being the size of

. The learned motion model is divided to form database

(as shown in

Figure 11), where the size of each

(

) is also

k. A cosine distance function is adopted to calculate the similarity between

and

:

Then, input

is updated with the

retrieving the highest similarity. Thus, the joint angle is predicted with an updated

, reducing the uncertainty of discrete state

, and thus, improving the prediction accuracy.

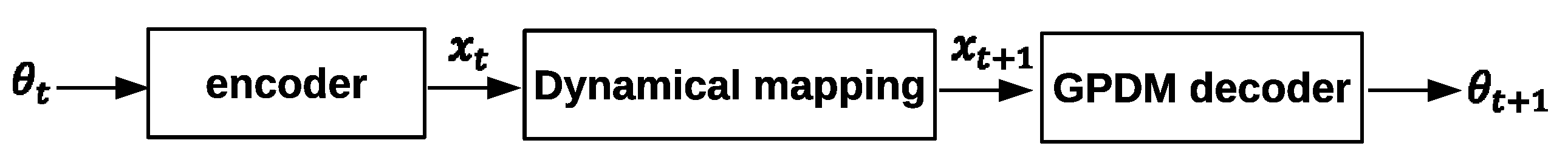

To verify the prediction system, we collected the humanoid robot joint angles from the same motion as learning data to form a test dataset. The test dataset contained 500 frames of humanoid robot joint angles.

Figure 12 illustrates the encoding of humanoid robot joint angle

into

. Based on the GPDM learned motion models, we made predictions using the dynamical mapping to obtain

. Finally, the humanoid robot joint angles in state

were reconstructed. Note that the GPDM does not define an encoder mapping, which is obtained by a feed-forward neural network, where the learning dataset of humanoid robot joint angles,

, is the input, and the GPDM learned motion model,

X, is the output. We perform relearning for map

as the encoder. Note that we divide the learning dataset in to training set (70%), validation set (15%), and testing set (15%) in learning encoder mapping.

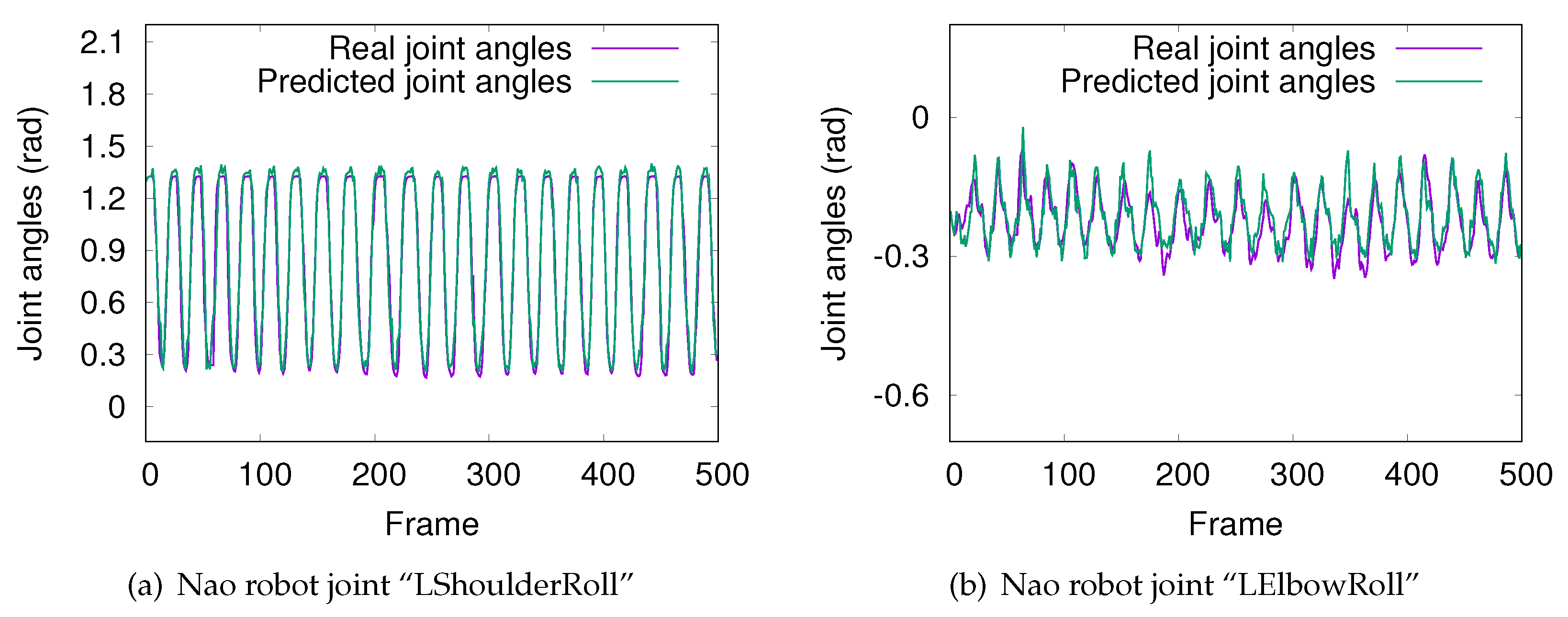

We set

and performed predictions of the humanoid robot joint angles in real time to verify the prediction system. We selected two important humanoid robot joint angles (LShoulderRoll and LElbowRoll) and obtained the accurate predictions shown in

Figure 13. For further verification, we applied the predicted joint angles to a simulated NAO humanoid robot. We found that the predicted joint angles without using the proposed similarity measurement elicit unnatural motions of the humanoid robot. Errors occur by the predicted joint angles being beyond the range of humanoid robot motion.

Figure 13a,b shows the predicted joint angles using the proposed similarity measurement. The predicted joint angles with the similarity measurement module appear to be smooth and are consistent with the real joint angles. The predicted joint angles with the proposed similarity measurement make the robot wave its hands naturally, as shown in

Figure 14, verifying the effectiveness of using this measurement.

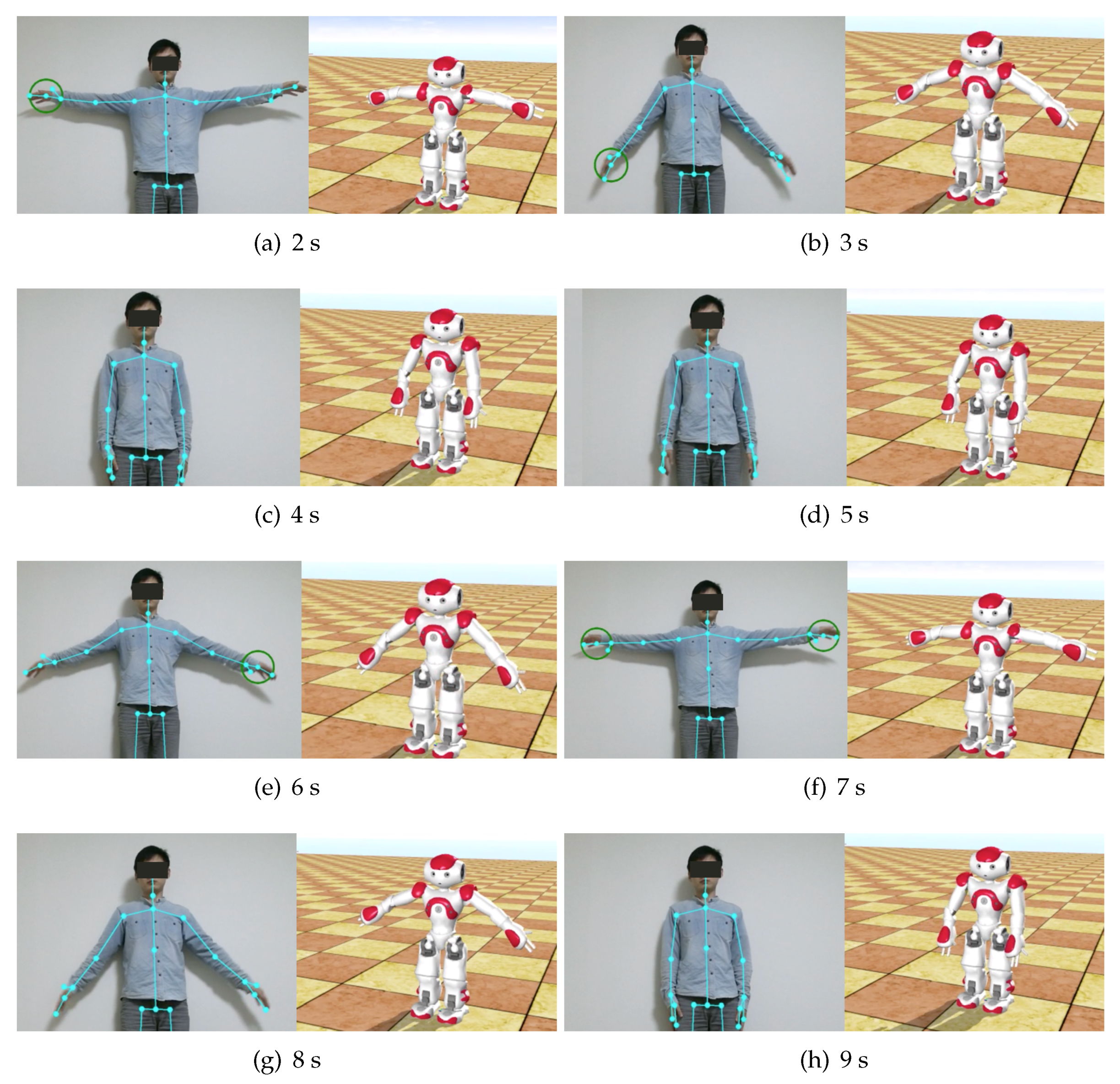

7. Humanoid Robot Joint Angle Estimation Using a Gaussian Process Dynamical Motion Model and Particle Filter

The proposed particle filter was then applied to the learned 3D state space shown in

Figure 8a. In the conventional particle filter detailed in

Section 3, each particle is defined as

, with

M being number of the humanoid robot joint angles. Using the GPDM, the particles are distributed over the 3D state space, and each particle is given by

.

Table 1 shows the differences between conventional particle filter method and our proposed system. The high-dimensional state space is replaced by a 3D state space. The computational cost is greatly reduced when replacing the high-dimensional state space by the 3D GPDM learned state space.

Figure 15 shows the particle filter estimation system using the learned GPDM, where particles are distributed in the 3D state space. The current state,

, can be obtained from the previous state,

, using learned GPDM dynamical mapping

in the 3D state space. Once the humanoid robot states are estimated in the 3D state space, the joint angles are reconstructed using GPDM decoder

.

Figure 16 shows a sequence of real-time joint angle estimation using the GPDM and particle filter. This real-time estimation outperforms that using only the particle filter, illustrated in

Figure 6. The experiment results of real-time joint angle estimation using only particle filter in

Figure 6 shows that it delayed about 2 s when the Nao robot imitated human motions. This is because the computational cost is relatively high when a particle filter performs estimation in high-dimensional state space. It cannot be avoided that the system takes much time to estimate humanoid robot joint angles in high-dimensional state space and delay exists. By combining a particle filter and a GPDM, we reduced the high-dimensional joint angle space into the GPDM learned 3D state space, and the computational cost was greatly reduced. The GPDM motion model was also adopted to make accurate the estimation of humanoid robot joint angles. The imitation experiments show that the humanoid robot moves almost simultaneously with the human, and the delay is reduced greatly by applying the estimation in the 3D state space. These results verify that the learned GPDM enable efficient humanoid robot state estimation in the 3D state space with low computational cost for real-time imitation of human motion.