Business Methodology for the Application in University Environments of Predictive Machine Learning Models Based on an Ethical Taxonomy of the Student’s Digital Twin

Abstract

1. Introduction

- Students can find countless university options through digital channels;

- Universities must provide the best teaching experience, now also in digital format;

- Students value data privacy;

- Immediate and simplified access to information is essential.

1.1. Opportunities in University Educational Institutions

- Consolidation

- Standardization and adjustment

- Chaos management

- Discovery of new paradigms

1.2. Opportunities in AI Project Management

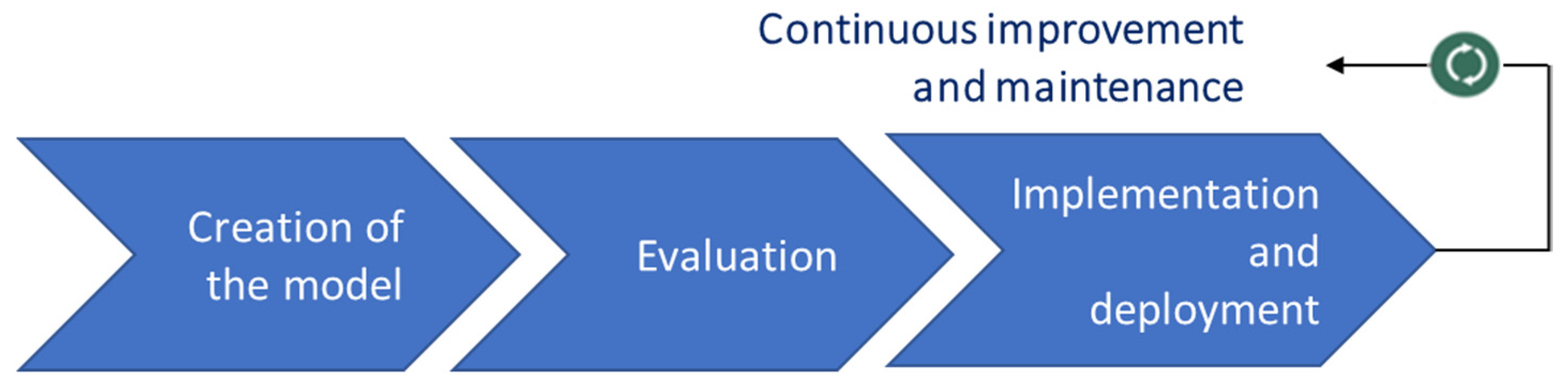

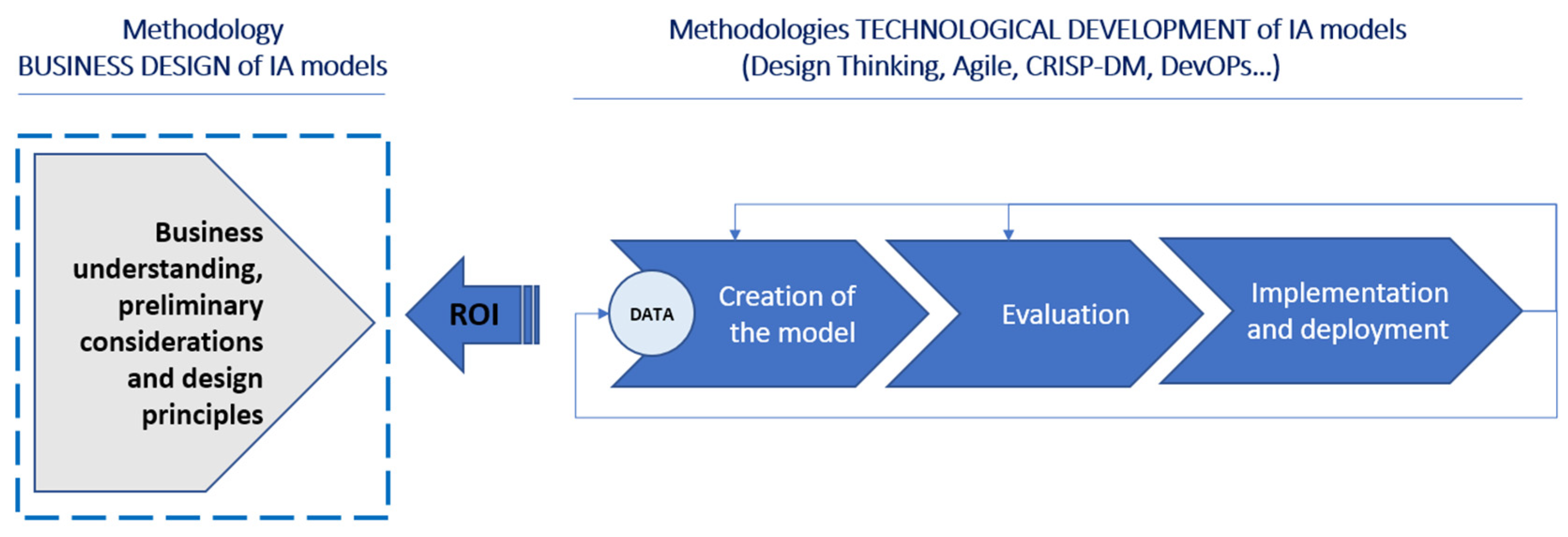

- Firstly, because artificial intelligence, as a science, requires us to follow the scientific method (Rech and Althoff 2004). The scientific method is an ordered set of steps, from formulating a hypothesis to analyzing results and drawing conclusions, used mainly to discover new knowledge. If the starting hypothesis proves incorrect, the steps are repeated iteratively;

- Secondly, because machine learning projects are data-intensive. Data mining has traditionally used the CRISP-DM methodology (Cross Industry Standard Process for Data Mining), which starts with understanding the business, continues with understanding and preparing the data, and then moves on to modeling (Moine 2016). If the evaluation of the model is positive, the model is deployed. If not, the understanding of the business must be rethought and the cycle started again, in an iterative process that can always surface needs and opportunities suited to an innovative approach.
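The iterative cycle described in the second point can be sketched as a simple loop. This is a minimal illustration only, not part of CRISP-DM itself: the function `run_crisp_dm`, the `evaluate` callback, the score threshold, and the iteration budget are all hypothetical names and values introduced here.

```python
from typing import Callable, Dict, List

# Phases named in the text, in the order CRISP-DM visits them before deployment.
PHASES: List[str] = [
    "business_understanding",
    "data_understanding",
    "data_preparation",
    "modeling",
    "evaluation",
]


def run_crisp_dm(evaluate: Callable[[int], float],
                 target_score: float,
                 max_iterations: int = 5) -> Dict[str, object]:
    """Repeat the cycle until the evaluation passes or the budget runs out.

    `evaluate(iteration)` is a hypothetical stand-in for running every phase
    once and returning the model's evaluation score for that cycle.
    """
    scores: List[float] = []
    for iteration in range(1, max_iterations + 1):
        score = evaluate(iteration)
        scores.append(score)
        if score >= target_score:
            # Positive evaluation: the model is deployed.
            return {"deployed": True, "iterations": iteration, "scores": scores}
        # Negative evaluation: go back to business understanding and retry.
    return {"deployed": False, "iterations": max_iterations, "scores": scores}


# Made-up evaluation in which each rethink of the problem improves the score.
result = run_crisp_dm(lambda i: 0.5 + 0.1 * i, target_score=0.75)
print(result["deployed"], result["iterations"])
```

The iteration budget is a design choice of this sketch: it makes explicit that a project may end without a deployable model, which is itself a valid business outcome.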

1.3. Taxonomies

- Remember: memorizing and storing data and concepts;

- Understand: enabling knowledge to be explained and discussed;

- Apply: putting into practice what has been learned and being able to demonstrate a hypothesis;

- Analyze: contrasting the information and content used;

- Evaluate: assessing what has been learned with a critical approach;

- Create: generating new contributions based on their ability to construct and generate original knowledge. This is the highest level of the hierarchy to which a student should aspire.
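Because the six levels form an ordered hierarchy, they can be represented as an ordered enumeration. The sketch below is illustrative only: the names `BloomLevel` and `highest_consecutive_level` are hypothetical, and the rule that a level only counts once every lower level has been reached is an assumption made here, not a claim from the taxonomy's authors.

```python
from enum import IntEnum
from typing import Iterable, Optional


class BloomLevel(IntEnum):
    """The six levels listed above, from lowest to highest."""
    REMEMBER = 1
    UNDERSTAND = 2
    APPLY = 3
    ANALYZE = 4
    EVALUATE = 5
    CREATE = 6


def highest_consecutive_level(achieved: Iterable[BloomLevel]) -> Optional[BloomLevel]:
    """Return the highest level reached without skipping a lower one.

    Assumption of this sketch: the hierarchy is cumulative, so a gap at a
    lower level stops the climb.
    """
    achieved_set = set(achieved)
    reached: Optional[BloomLevel] = None
    for level in BloomLevel:  # iterates in definition order, lowest first
        if level not in achieved_set:
            break
        reached = level
    return reached


# A student who has evidence for three levels but has skipped "Apply".
student_levels = [BloomLevel.REMEMBER, BloomLevel.UNDERSTAND, BloomLevel.ANALYZE]
print(highest_consecutive_level(student_levels).name)
```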

1.4. Digital Twins

2. Materials and Methods

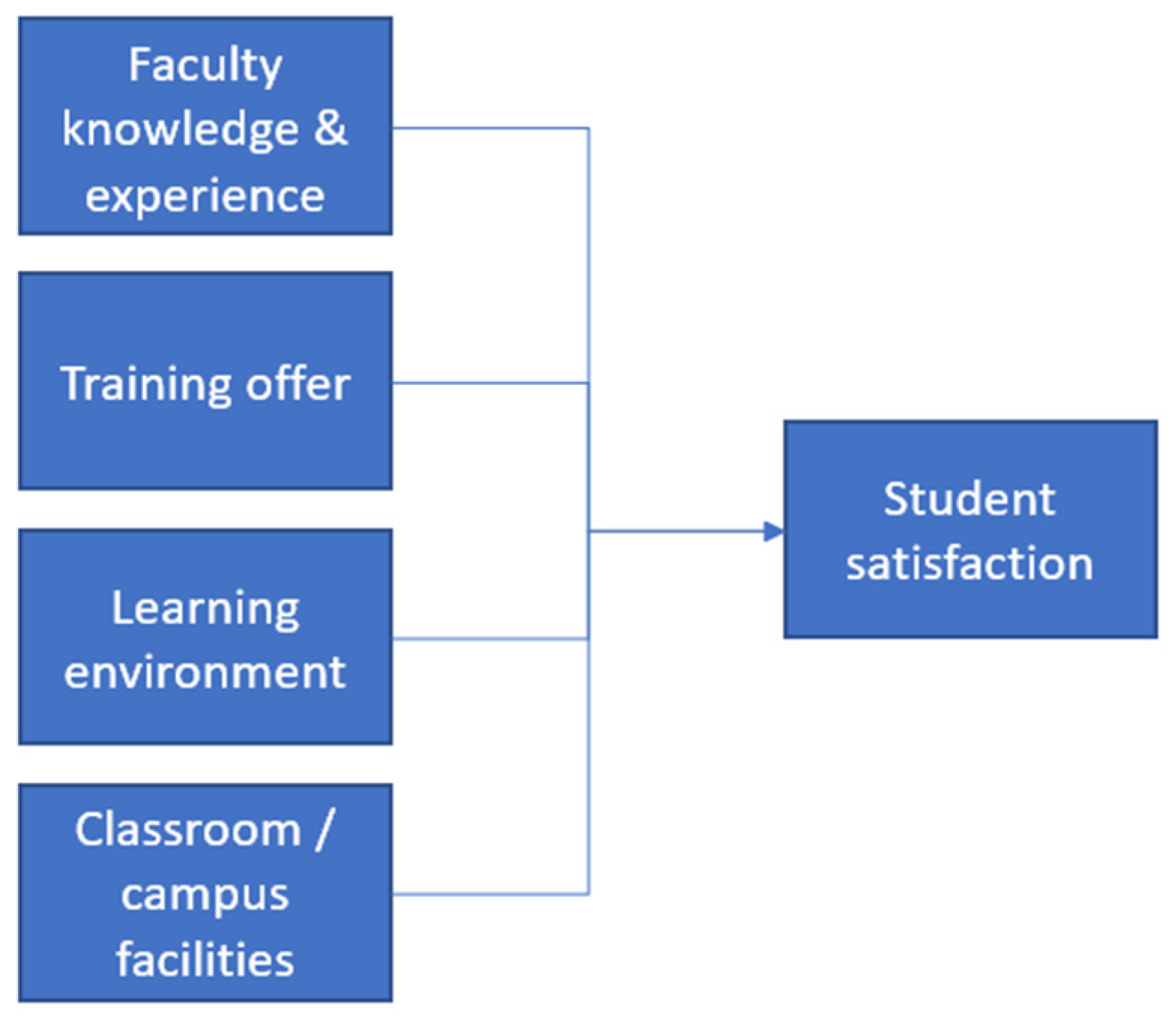

- Design a process map that defines the relationships between the student and a university in order to apply the methodology in the specific use case of satisfaction with learning;

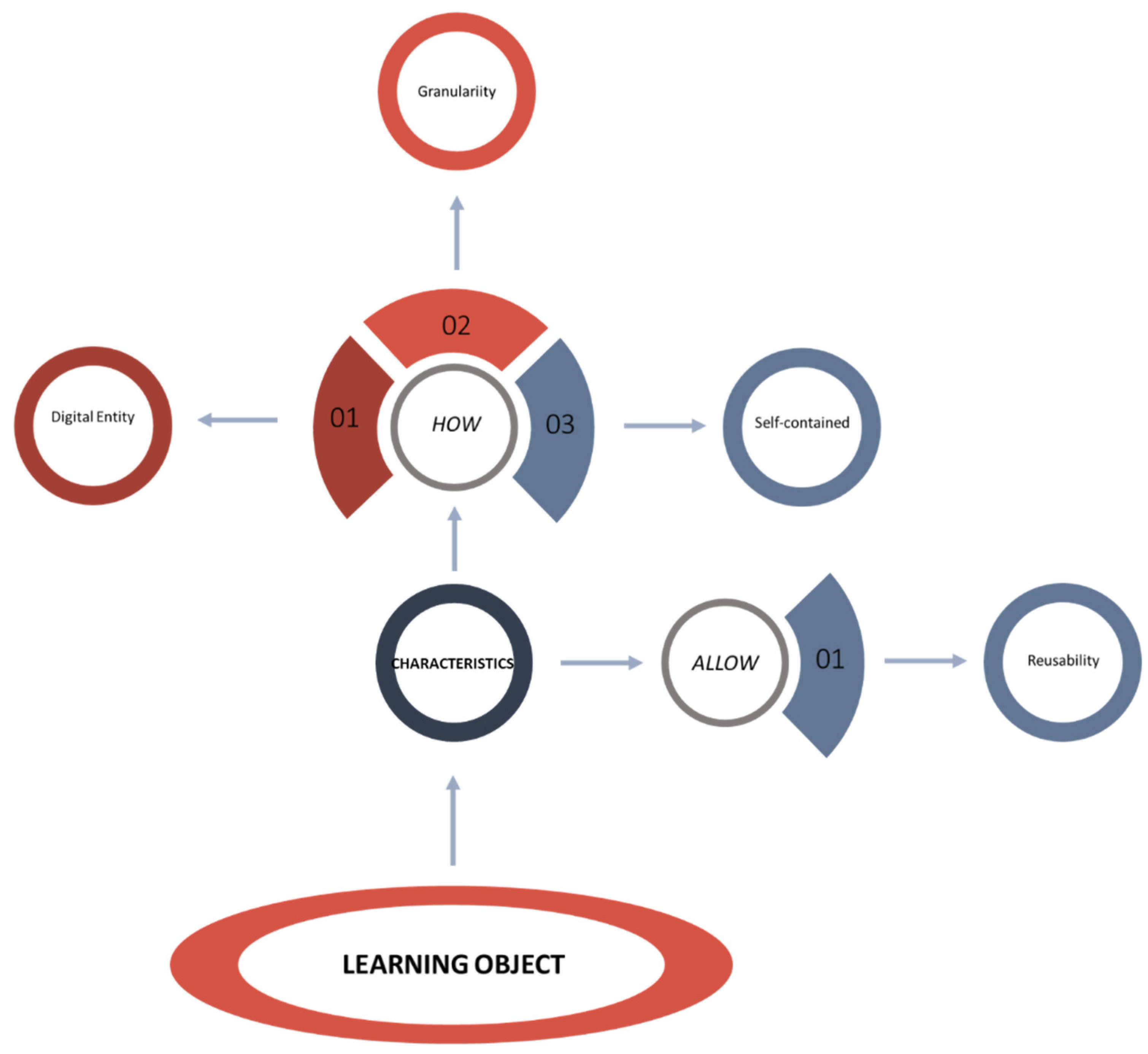

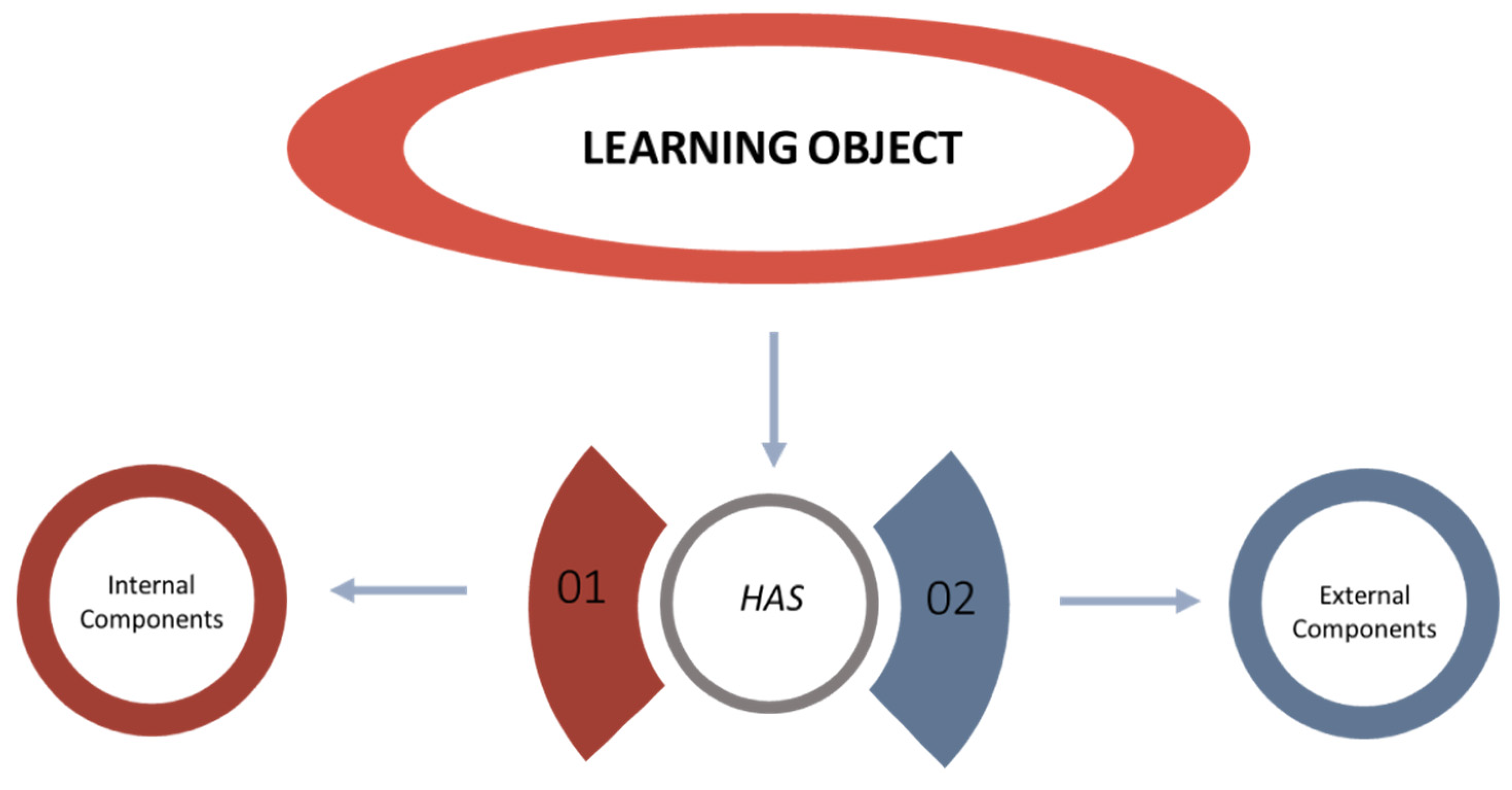

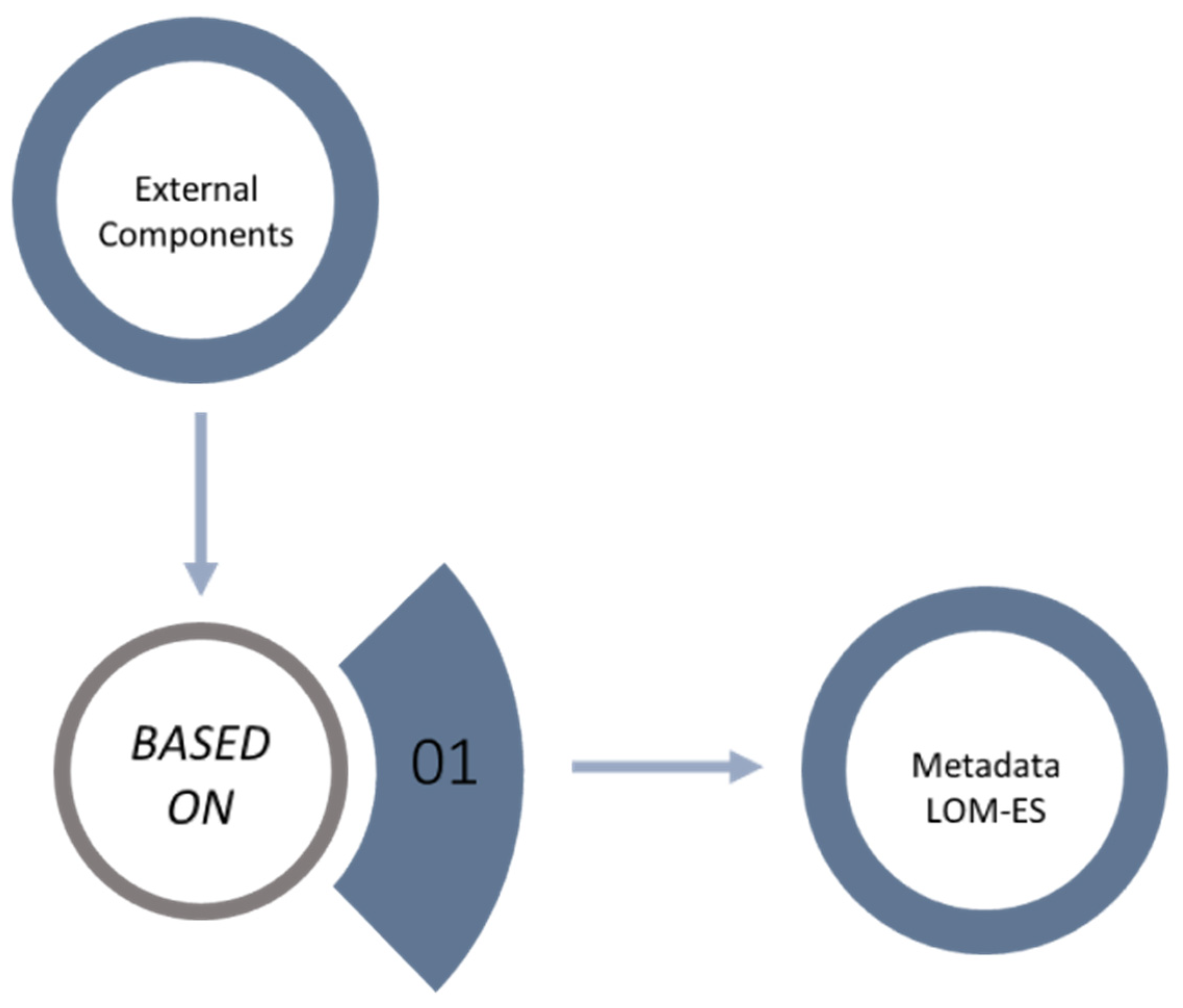

- Apply the advanced taxonomy based on learning objects that allows structuring and standardizing the relevant data of the student’s digital twin and their satisfaction in their relationship with the university.

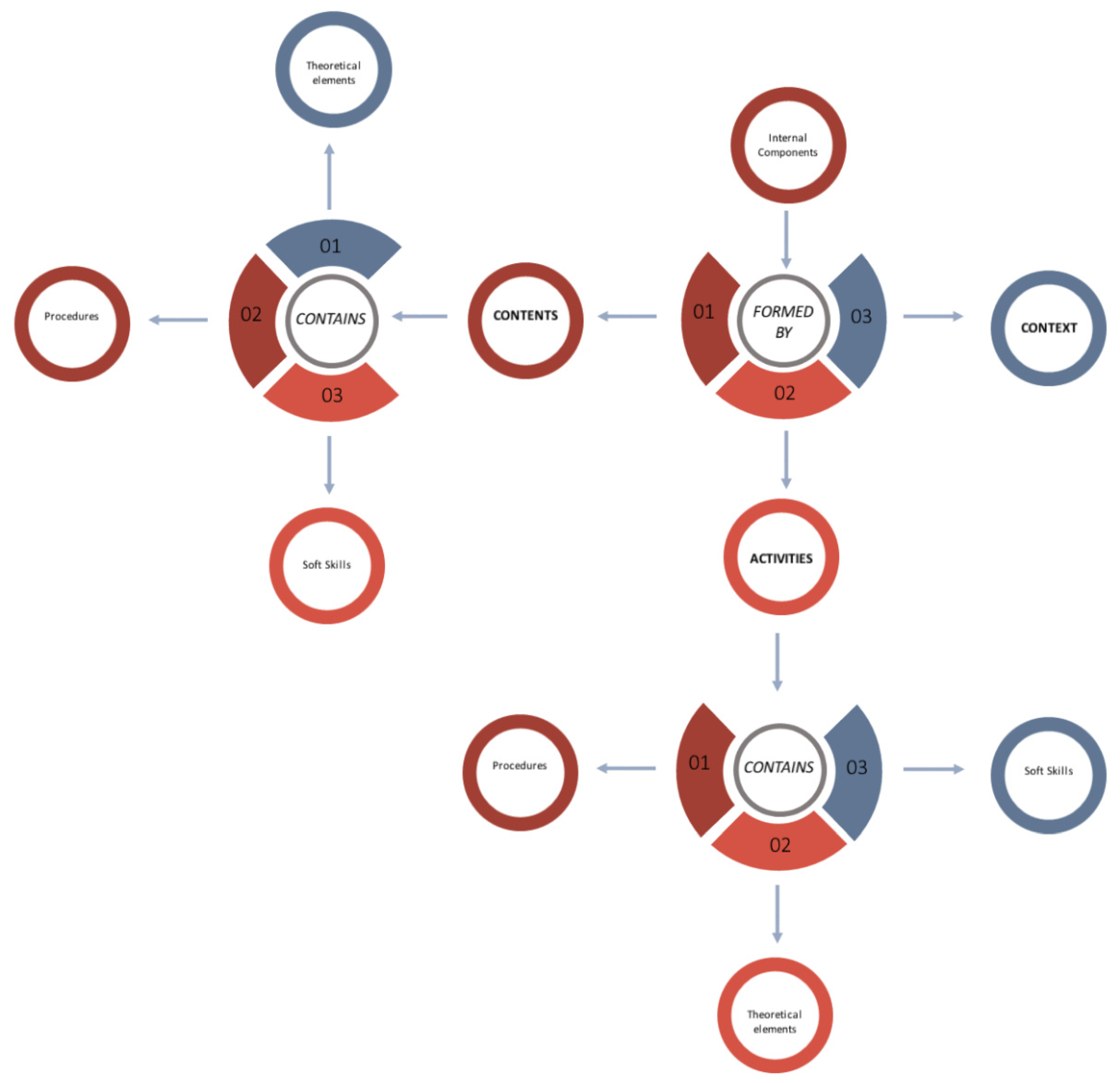

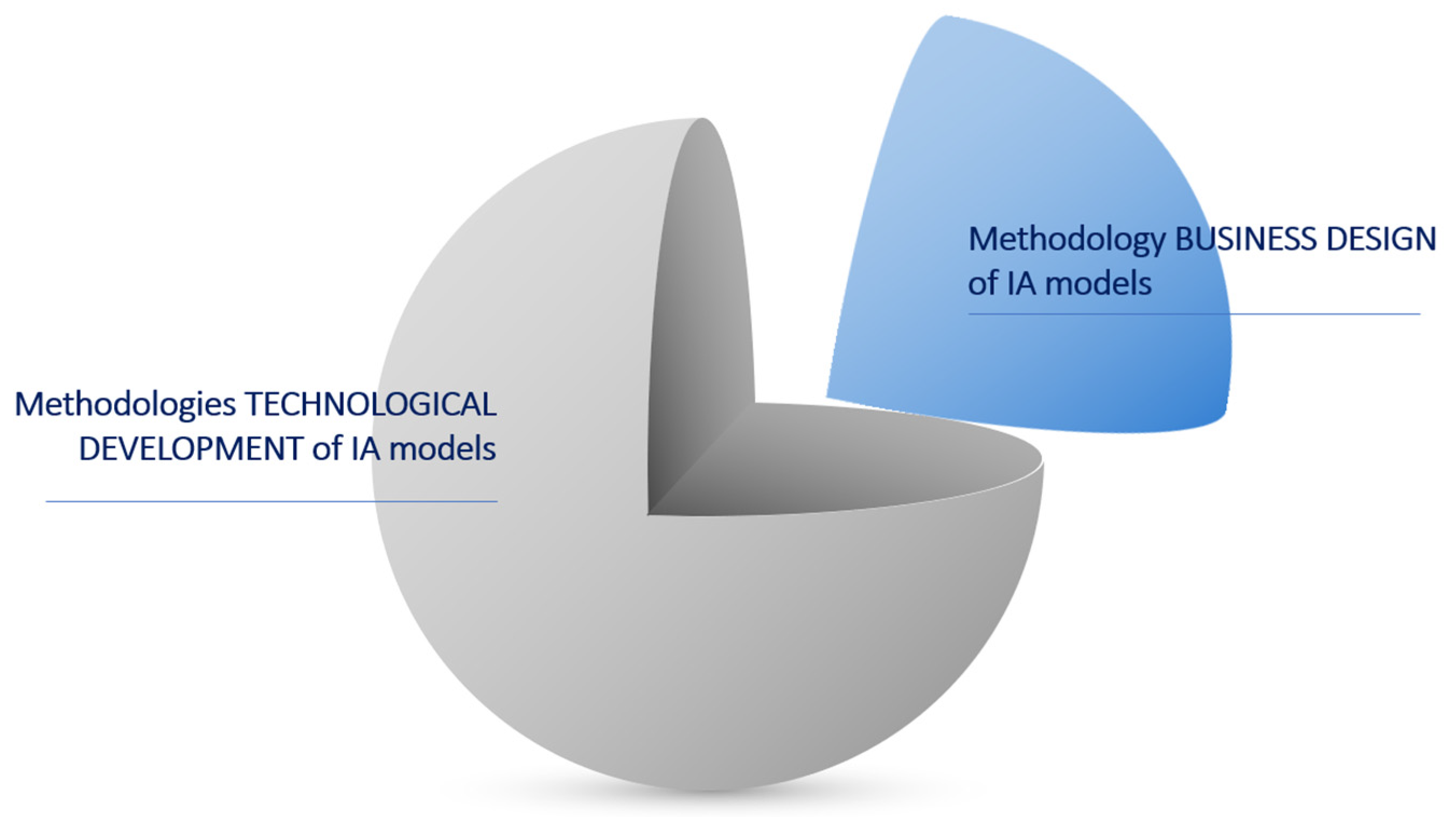

2.1. Context for Technological Development of an AI Model

2.2. Context for Business Design of AI Models

- Creating AI hypotheses and assessing which ones provide the most value in a prototype design;

- Identifying potential AI capabilities that support the required functionality;

- Determining which capabilities already exist in off-the-shelf solutions, which can be sourced from public assets (open source), and which must be developed in-house. The sourcing model decision impacts the cost and availability of AI models. Generally, more strategic functionalities require less commoditized AI capabilities;

- Determining the incorporation of such model functionalities in specific development cycles based on prioritized capability planning and cost-benefit balance;

- Giving personality to the cognitive system, which will modulate how the system will respond, how it will adapt its responses to the different contexts (place and time of the interaction, type of user...), the style and tone of the language (formal, informal...);

- Considering all data sources, even those that seem absurd: starting with the most obvious ones, extending the search to public data sources and to unstructured and non-standard data types (images, videos, phone calls...), and then filtering and prioritizing.

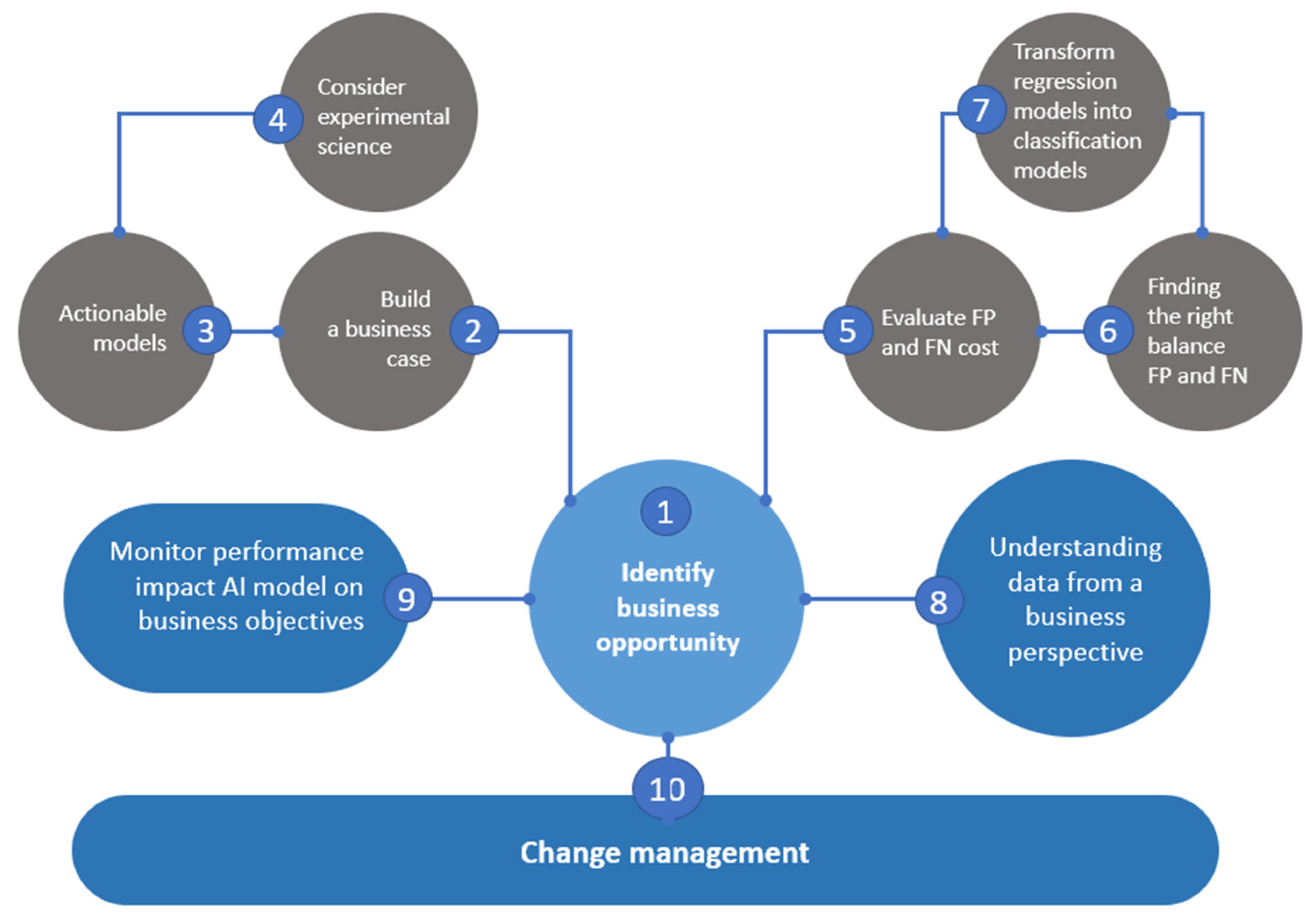

2.3. Methodology for the Prevalidation from a Business Point of View of Projects Based on Supervised Machine Learning Models in the Field of University Students’ Experiences

- 1. Identify the business opportunity before data and models

- Retention and graduation rates;

- Program completion times;

- Monitoring of academic performance;

- Registration for information sessions or campus tours;

- Personalized academic contacts with the student;

- Content and marketing materials that attract and engage learners more effectively than others;

- Patterns of student participation: how long it takes a student to complete a full interaction with the university, barriers to continuous student participation, etc.

- 2. Build the business case

- First, the use case is selected on the basis of the university’s process map, onto which all student contacts, and the possible use cases arising from these interactions, are fitted.

- Secondly, all potential use cases that can be addressed from an AI point of view are placed on the process map and prioritized and selected. This selection of the specific use case allows refining the scope, functional requirements, and appropriate capabilities to make the AI model viable.

- Big Data infrastructure (data collection, storage, and processing), with a planned and sustainable architecture roadmap;

- The use of tools for data exploration, data integration, and advanced real-time analytics will be commonplace;

- A Data Governance policy will be in place and systematically applied for the development of advanced use cases with analytical, predictive, and prescriptive models;

- Relevant structured and unstructured data and information sources shall exist on a dedicated, flexible, robust, and scalable technological infrastructure in cloud/on-premises environments;

- In the case of deployment of cloud solutions, these will tend to be initially hybrid infrastructures, with a tendency to migrate to multi-cloud (hyperscale) solutions.

- Website: the design and interface of the website are critical in determining how students perceive the university. A university’s website is its ultimate brand statement and an important component of the student experience, and it can greatly influence a student’s decision to apply to the university and the university’s subsequent appeal to that student.

- The website should therefore be analyzed from the student’s perspective to see how they feel about using the platform as a tool for participation, and the aspects that need improvement should be worked on to achieve an attractive and easy-to-navigate information environment.

- Improving the student’s digital experience is decisive, because digital aspects largely dominate the decision-making of new generations of students. Some of the lines of work rest on the following pillars:

- Content quality control, thorough content inventory, and problem reporting. High-quality content is critical to giving students, staff, alumni, and the general public the information they need online, and to doing so without friction.

- Improving the accessibility of web portals to remove all barriers that prevent students from accessing essential information online.

- Prioritization of web sections and digital content, based on the analysis of student behavior and interaction and the identification of actions that have the greatest impact on improving the user experience.

- Social networks play a very important role in students’ perception of universities. Institutions now make greater use of social media platforms such as Facebook, Twitter, YouTube, and Instagram to market their programs and interact with students. For prospective students, the way a university responds to their questions or comments makes a difference.

- 3. Define actionable models

- 4. Consider Machine Learning as an experimental science

- 5. Evaluate the cost of false positives or false negatives

- 6. Find the right balance between false positives and false negatives

- 7. Transform regression models into classification models

- 8.

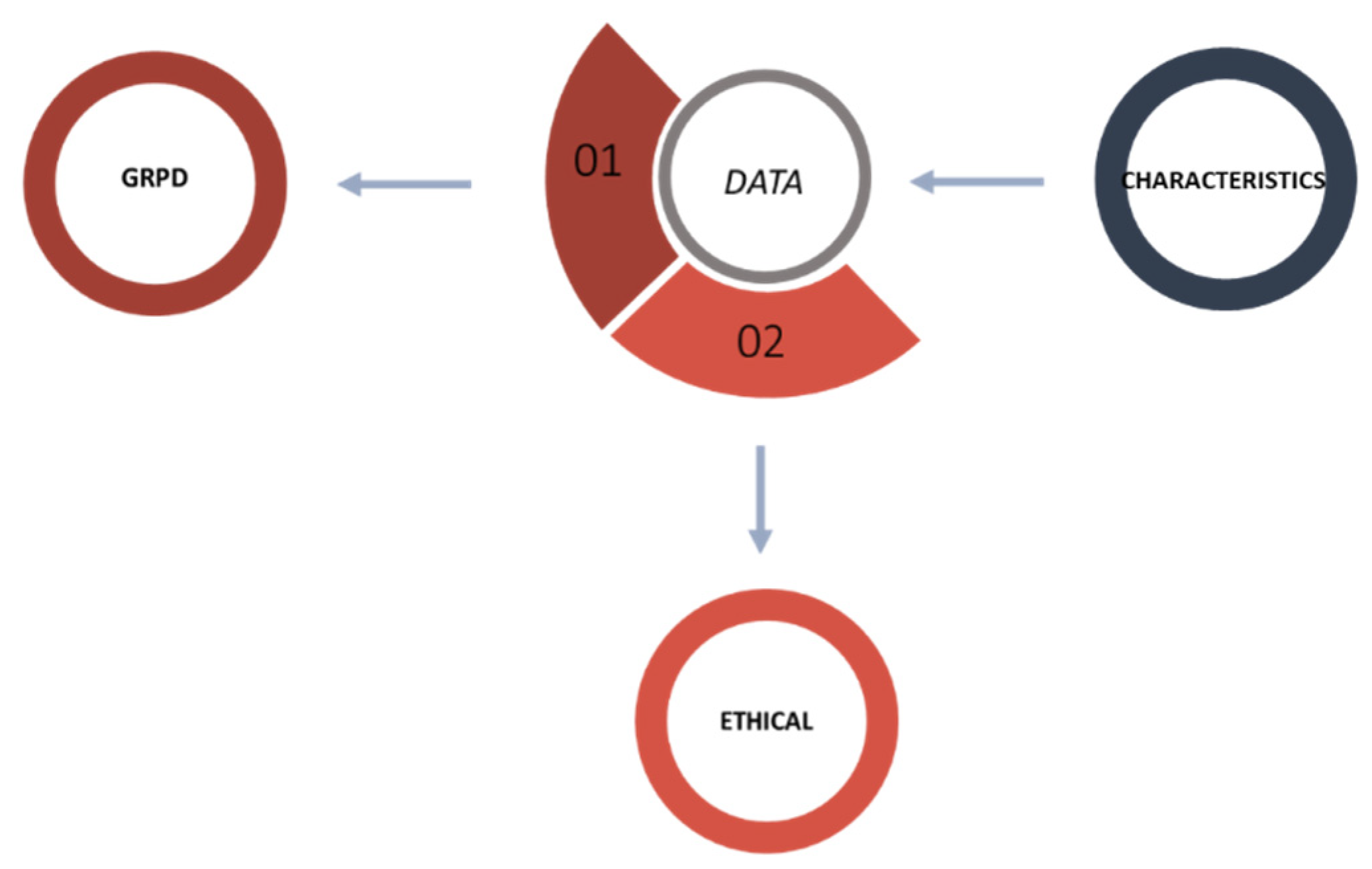

- Understand data from a business perspective

- Establish privacy policies, standards, and secure data collection processes across the university;

- Include the ethical component in the Data Governance Model;

- Comply with applicable regional, national and international data privacy laws;

- Develop and implement data privacy training programs;

- Respond promptly to breaches or privacy incidents and be prepared for them;

- Catalog who has access to student data, and collect data transparently;

- Review data privacy policies and procedures regularly;

- Create an action plan to improve them periodically: data privacy laws are constantly changing.

- The data generated by digital platforms contain valuable information on what is working, what is not working, and areas for optimization. Access to student engagement data can help the university communicate with students and, for example, trigger an automated chatbot response that provides meaningful information (such as a link to a specific degree program) when the student needs it.

- Another relevant use case could be the reduction in university dropouts during the first year. By collecting student data from the LMS (Learning Management System), such as grades and attendance records, and combining it with their demographic data, a student dropout profile can be constructed, and the stages in their university career at which they typically drop out can be determined.

- With this pattern, it will be possible to target communication efforts towards those students who fit this profile at the most at-risk stages of their university career and thus help them to orientate themselves appropriately towards graduation.

- Data are only useful if they are accurate. Therefore, use only clean data that have been validated, especially concerning the following two dimensions:

- Student data: collected from digital interactions, such as clicks and website visits, and then analyzed to determine how and when to interact to improve the student’s digital experience;

- Student engagement: student data enables relevant communications at the right time, in the right place, and on the right platforms.
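The dropout use case described above (combining LMS grades and attendance to build a dropout profile and locate the riskiest stage) can be sketched with plain Python. Everything below is a hedged illustration on made-up records: the `StudentRecord` fields, the 0-10 grade scale, and the rule "at or below the dropouts' averages" are assumptions of this sketch, and demographic data is left out for brevity. Any real use would need the validated data and the privacy and ethics controls listed earlier in this step.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class StudentRecord:
    student_id: str
    avg_grade: float             # assumed 0-10 scale
    attendance: float            # fraction of sessions attended, 0-1
    dropout_term: Optional[int]  # term in which the student left, else None


def riskiest_term(history: List[StudentRecord]) -> Optional[int]:
    """Term in which most historical dropouts happened, or None if no dropouts."""
    counts = Counter(r.dropout_term for r in history if r.dropout_term is not None)
    return counts.most_common(1)[0][0] if counts else None


def dropout_profile(history: List[StudentRecord]) -> Optional[Dict[str, float]]:
    """Average grade and attendance of the students who dropped out."""
    dropped = [r for r in history if r.dropout_term is not None]
    if not dropped:
        return None
    n = len(dropped)
    return {
        "avg_grade": sum(r.avg_grade for r in dropped) / n,
        "attendance": sum(r.attendance for r in dropped) / n,
    }


def fits_profile(student: StudentRecord, profile: Dict[str, float]) -> bool:
    """Simplistic rule: at or below the dropouts' averages on both measures."""
    return (student.avg_grade <= profile["avg_grade"]
            and student.attendance <= profile["attendance"])


# Made-up historical records, for illustration only.
history = [
    StudentRecord("A", 4.0, 0.50, dropout_term=1),
    StudentRecord("B", 5.0, 0.60, dropout_term=1),
    StudentRecord("C", 8.0, 0.90, dropout_term=None),
    StudentRecord("D", 4.5, 0.55, dropout_term=2),
]
profile = dropout_profile(history)
current = StudentRecord("E", 4.0, 0.40, dropout_term=None)
print(riskiest_term(history), fits_profile(current, profile))
```

A fixed rule like this is only a starting point for targeting communication; in practice the profile would more likely come from a supervised model evaluated as described in the other steps of the methodology.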

- 9. Monitor the impact of the AI model’s performance on business objectives.

- 10. Change management
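Steps 5 to 7 above can be illustrated with a short sketch: weighing false positives against false negatives by assigning each a business cost, choosing the decision threshold that minimizes total cost, and turning a regression output into a class. The function names, the cost values, the candidate thresholds, and the toy scores are all hypothetical; the costs in particular would have to come from the business case.

```python
from typing import List, Sequence


def total_cost(scores: Sequence[float], labels: Sequence[int],
               threshold: float, cost_fp: float, cost_fn: float) -> float:
    """Business cost of classifying as positive every score >= threshold."""
    false_pos = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    false_neg = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    return false_pos * cost_fp + false_neg * cost_fn


def best_threshold(scores: Sequence[float], labels: Sequence[int],
                   cost_fp: float, cost_fn: float,
                   candidates: Sequence[float]) -> float:
    """Candidate threshold with the lowest total cost (first one wins ties)."""
    return min(candidates,
               key=lambda t: total_cost(scores, labels, t, cost_fp, cost_fn))


def to_class(predicted_value: float, cutoff: float) -> str:
    """Turn a regression output (e.g. a predicted satisfaction score) into a class."""
    return "satisfied" if predicted_value >= cutoff else "at_risk"


# Toy model scores and true labels (1 = positive case), for illustration only.
scores: List[float] = [0.9, 0.8, 0.6, 0.4, 0.3, 0.1]
labels: List[int] = [1, 1, 0, 1, 0, 0]
candidates = [0.2, 0.35, 0.5, 0.7]

# When a missed positive is five times as costly as a false alarm,
# a lower threshold is preferred; with the costs reversed, a higher one is.
fn_expensive = best_threshold(scores, labels, cost_fp=1, cost_fn=5, candidates=candidates)
fp_expensive = best_threshold(scores, labels, cost_fp=5, cost_fn=1, candidates=candidates)
print(fn_expensive, fp_expensive, to_class(6.2, cutoff=7.0))
```

Making the two costs explicit parameters keeps the trade-off a business decision rather than a modeling default.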

3. Results

4. Discussion

5. Conclusions

- (1) Expansion of the use cases for the application of the methodology to the student lead funnel. Some additional cases could be the profiling of potential students to classify leads into homogeneous groups according to their intrinsic characteristics, or the prioritization of potential students to optimize and accelerate the conversion of students who have shown interest in joining the university and have applied but have not formalized their enrolment;

- (2) Expansion of the methodology based on the development of a complete Learning Analytics ecosystem, which makes it possible to learn the key factors for courses, accompany students, obtain early warnings of abandonment or lack of interest, understand how students interact, improve pedagogically, and differentiate the university in its core business;

- (3) Incorporating data from users who are not yet university students but potential students (discovery stage) and analyzing the implications in the field of data processing;

- (4) Development of new taxonomies applied to continuous training environments, micro-studies, and courses for adaptation to the labor market, given at university level but without the consideration of a degree/master’s degree;

- (5) Development of non-university scenarios such as early childhood education, primary education, and continuous training environments in companies and self-learning environments.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Abdelmaaboud, Abdelhamid K., Ana Isabel Polo Peña, and Abeer A. Mahrous. 2020. The influence of student-university identification on student’s advocacy intentions: The role of student satisfaction and student trust. Journal of Marketing for Higher Education, 1–23.

- AENOR. 2013. UNE-ISO 21500:2013 Directrices para la Dirección y Gestión de UNE-ISO 21500:2013. Available online: https://www.aenor.com/normas-y-libros/buscador-de-normas/une?c=N0050883 (accessed on 1 February 2021).

- Anderson, Lorin, and David Krathwohl. 2001. A Taxonomy for Learning, Teaching and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives. New York: Longman.

- Baccarini, David. 1999. The Logical Framework Method for Defining Project Success. ResearchGate. Available online: https://www.researchgate.net/publication/259268056_The_Logical_Framework_Method_for_Defining_Project_Success (accessed on 1 February 2021).

- Baumann, Chris, Greg Elliott, and Suzan Burton. 2012. Modeling customer satisfaction and loyalty: Survey data versus data mining. Journal of Services Marketing 26: 148–57.

- Bloom, Benjamin S. 1956. Taxonomy of Educational Objectives. Vol. 1: Cognitive Domain. New York: McKay.

- Butt, Babar Zaheer, and Kashif ur Rehman. 2010. A study examining the students satisfaction in higher education. Procedia Social and Behavioral Sciences 2: 5446–50.

- Castillo, Jose Miguel. 2009. Prospecting the future with AI. International Journal of Interactive Multimedia and Artificial Intelligence. Available online: http://www.ijimai.org/journal/sites/default/files/IJIMAI1-2-1.pdf (accessed on 1 February 2021).

- Castrillón, Eucario Parra. 2011. Propuesta de metodología de desarrollo de software para objetos virtuales de aprendizaje-MESOVA. Revista Virtual Universidad Católica del Norte 34: 113–37.

- Cheng, Min-Yuan, Li-Chuan Lien, Hsing-Chih Tsai, and Pi-Hung Chen. 2012. Artificial Intelligence Approaches to Dynamic Project Success Assessment Taxonomic. Life Science Journal 9: 5156–63.

- Churches, Andrew. 2009. Taxonomía de Bloom para la era Digital. Available online: https://eduteka.icesi.edu.co/articulos/TaxonomiaBloomDigital (accessed on 1 February 2021).

- Cuervo, Mauro Callejas. 2011. Objetos de aprendizaje, un estado del arte. Entramado 7: 176–89.

- Diez-Gutierrez, Enrique Javier, and Katherine Galardo-Espinoza. 2020. Educar y Evaluar en Tiempos de Coronavirus: La Situación en España. Multidisciplinary Journal of Educational Research 10: 102–34.

- Eurofound. 2018. Game Changing Technologies: Exploring the Impact on Production Processes and Work. Luxembourg: Publications Office of the European Union.

- García, Alejandro Rodríguez, and Ana Rosa Arias Gago. 2019. Uso de metodologías activas: Un estudio comparativo entre profesores y maestros. Brazilian Journal of Development 5: 5098–111.

- Gartner. 2021. Top Business Trends Impacting Higher Education in 2021. Available online: https://www.gartner.com/document/3997318 (accessed on 3 May 2021).

- Ginns, Paul, Michael Prosser, and Simon Barrie. 2007. Students’ perceptions of teaching quality in higher education: The perspective of currently enrolled students. Studies in Higher Education 32: 603–15.

- Green, Heather J., Michelle Hood, and David L. Neumann. 2015. Predictors of Student Satisfaction with University Psychology Courses: A Review. Psychology Learning & Teaching 14: 131–46.

- Interrupción y Respuesta Educativa. 2021. UNESCO. Available online: https://es.unesco.org/covid19/educationresponse (accessed on 3 May 2021).

- i-SCOOP. 2021. Digital Twins-Rise of the Digital Twin in Industrial IoT and Industry 4.0. Available online: https://www.i-scoop.eu/internet-of-things-guide/industrial-internet-things-iiot-saving-costs-innovation/digital-twins/ (accessed on 1 February 2021).

- Kuik, Kyra. 2020. Cómo medir la interacción del estudiante digital. Siteimprove. Available online: https://siteimprove.com/es-es/blog/como-medir-la-interaccion-del-estudiante-digital/ (accessed on 3 May 2021).

- Las nuevas Tecnologías en el Desarrollo Académico Universitario. 2019. Inter-Cambios. Dilemas y Transiciones de la Educación Superior, 4.

- Lasa, Nekane Balluerka, Juana Gómez Benito, Dolores Hidalgo Montesinos, Arantxa Gorostiaga Manterola, José Pedro Espada Sánchez, José Luis Padilla García, and Miguel Ángel Santed Germán. 2020. Las Consecuencias Psicológicas de la COVID-19 y el Confinamiento. País Vasco: Servicio de Publicaciones de la Universidad del País Vasco.

- Lowendahl, Jan-Martin. 2019. Scaling Higher Education—Scale Is Different in the Digital Dimension. Stamford: Gartner.

- Malouff, John M., Lena Hall, Nicola S. Schutte, and Sally E. Rooke. 2010. Use of Motivational Teaching Techniques and Psychology Student Satisfaction. Psychology Learning & Teaching 9: 39–44.

- Moine, Juan Miguel. 2016. Análisis Comparativo de Metodologías para la Gestión de Proyectos de Minería de Datos. CIC Digital. Available online: https://digital.cic.gba.gob.ar/handle/11746/3516 (accessed on 3 March 2021).

- Morgan, Glenda, Jan-Martin Lowendahl, Robert Yanckello, Tony Sheehan, and Terri-Lynn Thayer. 2021a. Top Business Trends Impacting Higher Education in 2021. Stamford: Gartner. Available online: https://www.gartner.com/en/documents/3997318/top-business-trends-impacting-higher-education-in-2021 (accessed on 5 June 2021).

- Morgan, Glenda, Jan-Martin Lowendahl, Robert Yanckello, Tony Sheehan, and Terri-Lynn Thayer. 2021b. Top Technology Trends Impacting Higher Education in 2021. Stamford: Gartner. Available online: https://www.gartner.com/document/3997314 (accessed on 5 June 2021).

- O’Sullivan, Jamie, Dominic O’Sullivan, and Ken Bruton. 2020. A case-study in the introduction of a digital twin in a large-scale smart manufacturing facility. Procedia Manufacturing 51: 1523–30.

- Oldfield, Brenda M., and Steve Baron. 2000. Student perceptions of service quality in a UK university business and management faculty. Quality Assurance in Education 8: 85–95.

- Petruzzellis, Luca, Angela Maria D’Uggento, and Salvatore Romanazzi. 2006. Student satisfaction and quality of service in Italian universities. Managing Service Quality: An International Journal 16: 349–64.

- Project Management Institute. 2019. AI Innovators: Cracking the Code on Project Performance. Available online: https://www.pmi.org/-/media/pmi/documents/public/pdf/learning/thought-leadership/pulse/ai-innovators-cracking-the-code-project-performance.pdf (accessed on 3 May 2021).

- CYD. 2021. Ranking Universidades CYD 2021. Available online: https://www.fundacioncyd.org/resultados-del-ranking-cyd-2021/ (accessed on 3 May 2021).

- Rech, Jorg, and Klaus-Dieter Althoff. 2004. Artificial Intelligence and Software Engineering: Status and Future Trends. KI 18: 5–11.

- Reier Forradellas, Ricardo Francisco, and Luis Miguel Garay Gallastegui. 2021. Digital Transformation and Artificial Intelligence Applied to Business: Legal Regulations, Economic Impact and Perspective. Laws 10: 70.

- Rodríguez, Martín Caeiro. 2019. Recreando la taxonomía de Bloom para niños artistas. Hacia una educación artística metacognitiva, metaemotiva y metaafectiva. Artseduca 24: 65–84.

- Sánchez Medina, Irlesa Indira. 2014. Estado del arte de las metodologías y modelos de los Objetos Virtuales de Aprendizaje (OVAS) en Colombia. Entornos 28: 93.

- Sheehan, Tony, Robert Yanckello, Terri-Lynn Thayer, Glenda Morgan, and Jan-Martin Lowendahl. 2020. Use Gartner Reset Scenarios to Move from Survival to Renewal for Higher Education. Available online: https://www.gartner.com/doc/3991632 (accessed on 3 March 2021).

- Silva Quiroz, Juan, and Daniela Maturana Castillo. 2017. Una propuesta de modelo para introducir metodologías activas en educación superior. Innovación Educativa (México, DF) 17: 117–31.

- Silvius, A. J. Gilbert, and Ronald Batenburg. 2009. Future Development of Project Management Competences. Paper presented at the 2009 42nd Hawaii International Conference on System Sciences, Big Island, HI, USA, January 5–8.

- UNESCO. 2021. Un año después del inicio de la crisis #COVID19: Estudiantes y. Available online: https://es.unesco.org/covid19/educationresponse/learningneverstops (accessed on 3 May 2021).

- Villarreal-Villa, Sandra Margarita, Jesus Enrique Garcia Guiliany, Hugo Hernández-Palma, and Ernesto Steffens-Sanabria. 2019. Competencias docentes y transformaciones en la educación en la era digital. Formación Universitaria 12: 3–14.

- Wang, Xiaojin, and Jing Huang. 2006. The relationships between key stakeholders’ project performance and project success: Perceptions of Chinese construction supervising engineers. International Journal of Project Management 24: 253–60.

- WeWork and Brightspot Strategy. 2021. El impacto del COVID-19 en la experiencia de los estudiantes universitarios. Ideas (es-ES). Available online: https://www.wework.com/es-ES/ideas/research-insights/research-studies/the-impact-of-covid-19-on-the-university-student-experience#full-report (accessed on 3 May 2021).

- Yanckello, Robert. 2021. Education Digital Transformation and Innovation Primer for 2021. Stamford: Gartner.

- Yanckello, Robert, Jan-Martin Lowendahl, Terri-Lynn Thayer, and Glenda Morgan. 2019. Higher Education Ecosystem 2030: Classic U. Stamford: Gartner.

- Yanckello, Robert, Terri-Lynn Thayer, Jan-Martin Lowendahl, Kelly Calhoun Williams, Tony Sheehan, and Glenda Morgan. 2020. Predicts 2021: Education—Unprecedented Disruption Creates Shifting Landscape. Stamford: Gartner.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Garay Gallastegui, L.M.; Reier Forradellas, R.F. Business Methodology for the Application in University Environments of Predictive Machine Learning Models Based on an Ethical Taxonomy of the Student’s Digital Twin. Adm. Sci. 2021, 11, 118. https://doi.org/10.3390/admsci11040118