Digital Detection of Suicidal Ideation: A Scoping Review to Inform Prevention and Psychological Well-Being

Abstract

1. Introduction

2. Materials and Methods

2.1. Information Sources and Searching

2.2. Study Selection

2.3. Data Extraction and Analysis

3. Results

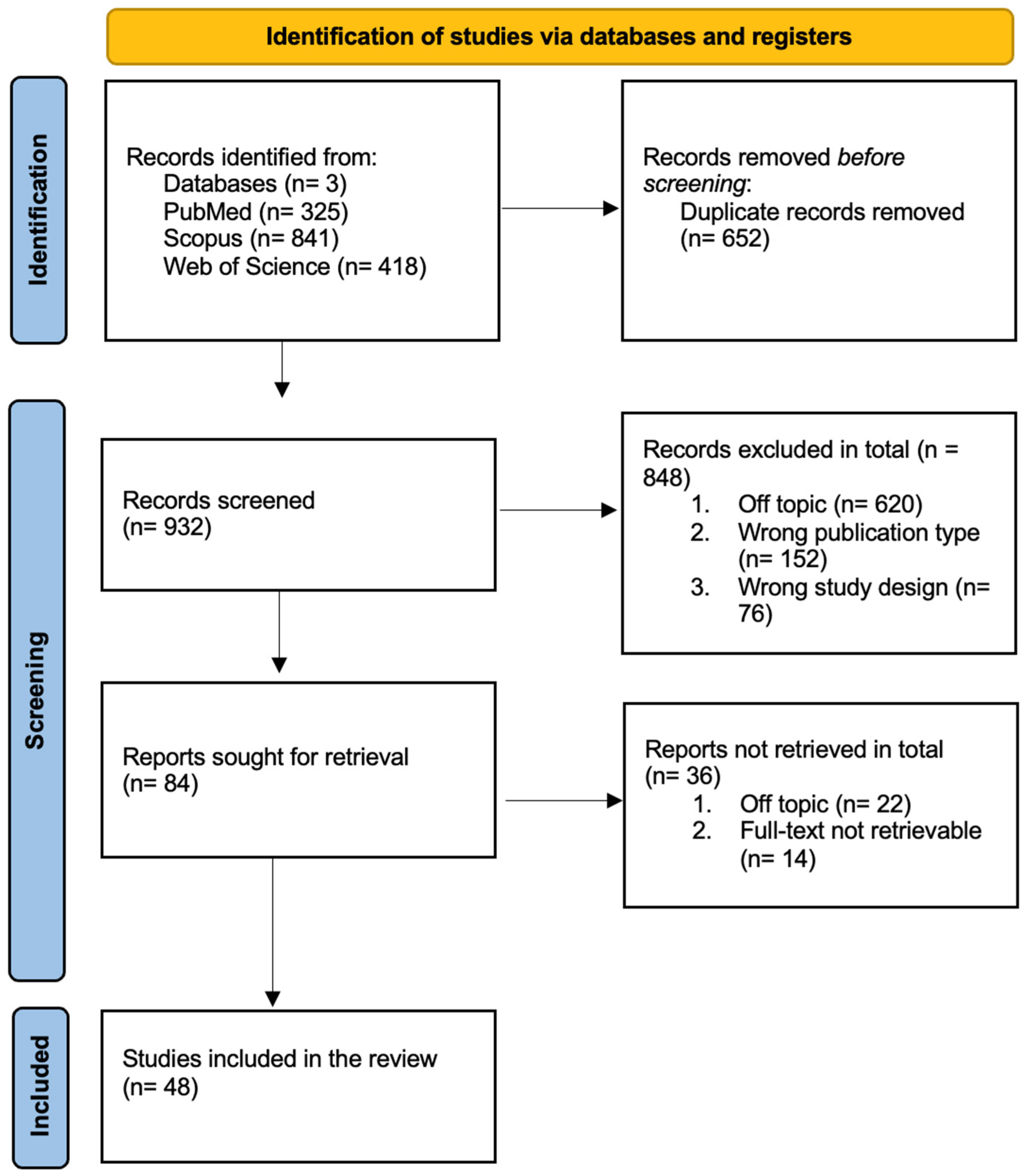

3.1. Search Results

3.2. Study Characteristics

3.3. Purpose of the Analysis

3.4. Type of Analysis

3.5. Traditional Methods

3.6. AI-Based Methods

3.7. Content Analysis Main Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Author, Year of Publication, Journal, Purpose of the Analysis, Type of Analysis, Sample Characteristics and Main Findings

| First Author, Year, Title | Journal | Aim | Interpretative/Predictive | Type of Analysis, Method | Platform, No. of Posts, Language | Main Results |

| Scottye J Cash et al. Adolescent Suicide Statements on MySpace (Cash et al., 2013) | Cyberpsychol Behav Soc Netw | To investigate how teenagers comment on their suicidal thoughts and plans on MySpace. | Interpretative | Traditional Content analysis mixed method | MySpace 64 English | Content analysis identified key themes in comments related to potential suicidality. More than half of the comments expressed suicidal ideation only, without specific context. Breakups and other relationship problems were the second most prevalent theme. Substance abuse and mental health issues were less common but nonetheless important. There were no statistically significant gender or age-group differences. |

| Judith Horne et al. Doing being ‘on the edge’: managing the dilemma of being authentically suicidal in an online forum (Horne & Wiggins, 2009) | Sociol Health Illn | This study looks at how members of an online forum for “suicidal thoughts” develop their authenticity in their introductory postings and how other forum members react to them. | Interpretative | Traditional Discursive analysis | Two forums 329 English | Four practices—narrative framing, getting “beyond” despair, exhibiting logic, and not overtly seeking assistance—are used by participants to establish their authenticity, according to the analysis. Additionally, identities were constructed as being psychologically “on the edge” of life and death in both the original and later entries. |

| Mirtaheri, S.L. et al. A Self-Attention TCN-Based Model for Suicidal Ideation Detection from Social Media Posts (Mirtaheri et al., 2023) | Expert Systems with Applications | In this research, we offer a novel machine-learning approach that uses deep learning and natural language processing to identify suicidality risk in social media posts. | Predictive | AI-based methods | Reddit and Twitter 278,698 English | Suicidal social media posts tend to contain expressions of despair, such as “want to die,” “feel like,” “need help,” and “don’t know.” Semantic analysis suggests that the posts are predominantly associated with sadness, followed by feelings such as joy and anger, pointing towards a rich emotional landscape. Curiously, because of apparently positive wording, the majority of posts with suicidal ideation were labeled as positive, although their overall context is negative. In addition, polarity and subjectivity scores show a heavy dominance of negative sentiment. These findings can facilitate early detection of suicidal ideation using automatic language processing. |

| Ali A et al. Young People’s Reasons for Feeling Suicidal. (Ali & Gibson, 2019) | Crisis | The purpose of this study was to determine the causes of suicidal thoughts among young people in posts published on Tumblr. | Interpretative | Traditional Braun and Clarke’s thematic analysis | Tumblr 210 English | The study identifies loneliness and social isolation as risk factors among young people, together with a desire for a sense of belonging. Stigma, especially around gender, sexual orientation, and social norms, also contributes to higher suicide rates among marginalized groups. Additionally, self-blame combined with social pressure tends to create feelings of inadequacy and failure. Most young people described feelings of helplessness and an inability to alter their situation. Though mental illness was mentioned less often, a few attributed their suicidality to specific diagnoses, sometimes as a justification for emotional pain. |

| Wang Z et al. A Study of Users with Suicidal Ideation on Sina Weibo (Wang et al., 2018) | Telemed J E Health | This study examined whether and how suicidal ideation is discussed on Weibo in order to classify the themes of negative posts published by users with suicidal ideation (USI) and identify the traits of people who might be at risk of suicide. It also looked into the possibility of using social media to identify USI and establish an early warning system for them. | Interpretative | Mixed Method Braun and Clarke’s thematic analysis and Machine Learning | Sina Weibo 10,537 + 1 million Chinese | The three most frequently used personal pronouns were “I,” “you,” and “myself.” The majority of negative posts revealed the causes of the authors’ unpleasant emotions, which included fatigue, misunderstanding, and irritation. The second most frequent theme was simply expressing sadness. The other three themes were significantly less frequent. About 2.5% of the posts mentioned suicidal tendencies, and just 1.1% explained why the author was considering suicide. |

| Carlyle, K. E. et al. Suicide conversations on Instagram™: contagion or caring? (Carlyle et al., 2018) | Journal of Communication in Healthcare | Studying suicide-related content on Instagram is essential because of the platform’s extensive use and the paucity of research on its effects, which suggests that its possible role in suicide contagion warrants further investigation. | Interpretative | Traditional Quantitative content analysis | Instagram 500 English | The most prevalent graphics were text-based, and the majority of posts mentioned despair (88.4%) and self-harm (60.8%). Posts that discussed self-harm, loneliness, or seeking assistance received considerably more likes. WHO guidelines on suicide contagion were rarely followed. Engagement was higher for posts that expressed suicidal intent or that received encouraging responses. |

| Beriña M. J. et al. A Natural Language Processing Model for Early Detection of Suicidal Ideation in Textual Data (Beriña & Palaoag, 2024) | Journal of Information Systems Engineering and Management | Enhancing the automatic detection and reporting of suicide messages is the aim of this research. | Predictive | AI-based methods | Reddit 5000 + 5000 English | The research established clear language differences between suicidal and non-suicidal messages. Suicidal messages included distress- and negative-related words, while non-suicidal messages were positive and optimistic. The trend supported successful classification by AI, with the CNN-LSTM model performing the best and achieving 94% accuracy. |

| Lim Y.Q. et al. Characteristics of Multiclass Suicide Risks Tweets through Feature Extraction and Machine Learning Techniques (Lim & Loo, 2023) | JOIV International Journal on Informatics Visualization | The application of a machine learning framework that can analyze multiclass categorization of suicide risks from social media postings with in-depth examination of linguistic features that aid in suicide risk identification is suggested in this study. | Interpretative | AI-based methods | Twitter 690 English | Language analysis showed that individual features like PoS tags had no impact, while TF-IDF and sentiment scores were best at differentiating levels of risk. Medium-risk tweets used ideation-related terms, while high-risk tweets used more extreme terms like “kill.” No single feature was sufficient in isolation, but all features combined improved model performance. |

| García-Martínez C Exploring the Risk of Suicide in Real Time on Spanish Twitter: Observational Study. (García-Martínez et al., 2022) | JMIR Public Health Surveill | The goal was to investigate the emotional content of Spanish-language Twitter posts and how it relates to the degree of suicide risk at the time the tweet was written. | Predictive | Mixed Method | Twitter 2509 Spanish | Out of 2509 tweets, 8.61% were identified by experts as exhibiting suicidality. Suicide risk was highly correlated with emotions like sadness, decreased happiness, overall risk, and intensity of suicidal ideation. Risk intensity was higher in tweets with defeat, rejection, helplessness, desire for escape, lack of support systems, and frequent suicidal ideation. Multivariate analysis identified intensity of suicidal ideation, along with emotional components including fear and valence, as robust predictors of risk severity. The model accounted for 75% of the variance. |

| Lao C et al. Analyzing Suicide Risk From Linguistic Features in Social Media: Evaluation Study. (Lao et al., 2022) | JMIR Form Res. | This project intends to investigate whether computerized language analysis could be used to evaluate and better comprehend an individual’s risk of suicide on social media. | Predictive | AI-based methods | Reddit 866 English | According to the study, at-risk users (low, moderate, and severe) exhibited less clout and more denial, first-person singular pronoun usage, and authenticity than non-risk users. Within at-risk groups, the Kruskal–Wallis test revealed no discernible differences. The Random Forest model performed comparatively well at identifying users who were at risk, with AUCs ranging from 0.67 to 0.68. Features such as negativity and authenticity were crucial to the model’s accuracy. The SVM model, by contrast, did not perform well, especially for the group that was not at risk. |

| Metzler H. et al. Detecting Potentially Harmful and Protective Suicide-Related Content on Twitter: Machine Learning Approach. (Metzler et al., 2022) | J Med Internet Res. | This work uses machine learning and natural language processing techniques to categorize vast amounts of social media data based on traits found to be either helpful or detrimental in media effects research on suicide prevention. | Predictive | AI-based methods | Twitter 3202 English | Deep learning models pretrained over large-scale datasets, i.e., BERT and XLNet, outperformed traditional methods in the classification of suicide-related tweets with high F1-scores (up to 0.83 for binary classification). They worked well for suicidal ideation, coping, prevention, and actual suicide cases but could not deal with subjective language like sarcasm and metaphor. Surprisingly, approximately 75% of suicide-related tweets were about actual suicides. |

| Ptaszynski M. Looking for Razors and Needles in a Haystack: Multifaceted Analysis of Suicidal Declarations on Social Media-A Pragmalinguistic Approach. (Ptaszynski et al., 2021) | Int J Environ Res Public Health. | We examine the terminology used by suicidal users on the Reddit social media network. | Interpretative | Traditional LIWC | Reddit ND English | The applicability and limitations of LIWC were analyzed further. We found that although LIWC is a useful and easy-to-administer tool, its lack of any kind of contextual processing makes it unsuitable as a tool in psychological and linguistic research. We thus conclude that the shortcomings of LIWC can be addressed by creating a number of high-performance AI classifiers trained on annotations of similar LIWC categories, which we plan to investigate in future work. |

| M. I. Mobin et al. Social Media as a Mirror: Reflecting Mental Health Through Computational Linguistics (Mobin et al., 2024) | IEEE Access | This study uses artificial intelligence and machine learning techniques to examine people’s social media posts in order to assess their mental health, with a focus on indicators that could point to a suicide risk. | Predictive | AI-based methods | Reddit 2016 English | The study shows that suicidal users write longer posts with words like “kill” or “die,” whereas depressed users write short, slang-filled comments. Models such as LDA, Word2Vec, and TF-IDF detected pessimism and self-harm as major topics, with suicidal posts showing greater behavioral intensity. Transfer learning showed a high overlap between suicidal ideation and depression, underscoring the need for early detection and intervention. |

| Agarwal, D. et al. Dwarf Updated Pelican Optimization Algorithm for Depression and Suicide Detection from Social Media. (Agarwal et al., 2025) | Psychiatric Quarterly | By tackling the issues of variability and model generalization, this study offers a unique method for detecting depression and suicide using social media (SADDSM). | Predictive | AI-based methods | Twitter ND English | Using deep learning approaches (DBN, RNN, enhanced LSTM) tuned with the DU-POA algorithm, the SADDSM model obtained high precision (~95%). Because of TF-IDF, improved Word2Vec, and style-based features, it performed better than previous models. Despite encouraging results, the study identified difficulties such as privacy concerns, misinterpretation of posts, and high computational needs. To facilitate medical intervention, future work will try to incorporate text, audio, and video data and use models like Random Forest and SVM for multiclass and real-time detection. |

| Liu X et al. Online Suicide Identification in the Framework of Rhetorical Structure Theory (RST). (X. Liu & Liu, 2021) | Healthcare | The goal was to investigate the rhetorical distinctions between Chinese social media users who did not have suicidal thoughts and those who did. | Interpretative | Traditional Rhetorical Structure Theory (RST) | Online Chinese platform 10,111 + 3740 Chinese | The study contrasted microblog posts from suicide and non-suicide groups (both with 4 men and 11 women). The suicide group contained significantly more posts and rhetorical relations than the non-suicide group. While “Joint” was utilized as the most common rhetorical relation in both groups, variations came in others like “Antithesis,” “Background,” and “Purpose.” When word count was controlled, the differences were only marginally significant. |

| Alghazzawi D. et al. Explainable AI-based suicidal and non-suicidal ideations detection from social media text with enhanced ensemble technique (Alghazzawi et al., 2025) | Sci Rep. | The work’s goal is to develop a binary-label text classification system that can distinguish between thoughts of suicide and those that are not. | Predictive | AI-based methods | Reddit 12,638 Portuguese translated into English | The study found that explicit and personal terminology reflects genuine suicidal ideation, whereas vague or hyperbolic terminology suggests false intent. An ensemble model boosted with Explainable AI (XAI) achieved high precision and good AUC-ROC, especially for false ideation detection. The use of multiple classifiers ensured generalizability, while cross-validation and data balancing ensured robustness. The study emphasizes the need to personalize explanations to users and retrain models to keep pace with changing language trends. |

| Benjachairat, P. et al. Classification of suicidal ideation severity from Twitter messages using machine learning (Benjachairat et al., 2024) | International Journal of Information Management Data Insights | Text categorization models for predicting the intensity of suicidal thoughts are presented in this study. | Predictive | Mixed Method | Twitter 20,138 Thai | The study employs Twitter data to train a machine learning model that recognizes suicidal ideation levels, to assist the CHUOSE app, using CBT principles to process user messages. While not a replacement for clinical therapy, the app may help with treatment and be guided by clinicians as a therapeutic tool. Context understanding and coverage of CBT should be enhanced. |

| Shukla, S.S.P. et al. Enhancing suicidal ideation detection through advanced feature selection and stacked deep learning models (Shukla & Singh, 2025) | Applied Intelligence | Since social media and other communication platforms are often used for emotional expression and might reveal notable behavioral changes, it is essential for suicide prevention to identify suicidal ideation on these platforms. | Predictive | AI-based methods | Twitter and Reddit 30,816 Reddit + 9119 Twitter English | The hybrid approach proposed is 97% accurate on Twitter data, outperforming previous models. PBFS feature selection in conjunction with BERT, LLM, and MUSE embeddings for enhanced semantic understanding is employed. Stacked architecture from BiGRU-Attention, CNN, and XGBoost boosted the classification performance. The model generalized well but has the drawback of being text-based only, and multimodal work needs to be explored in the future. |

| Bouktif S. et al. Explainable Predictive Model for Suicidal Ideation During COVID-19: Social Media Discourse Study (Bouktif et al., 2025) | J Med Internet Res. | Using machine and deep learning algorithms on social media data, this study sought to find trends and indications linked to suicidal thoughts. | Predictive | AI-based methods | Reddit 1338 + 1816 English | The study examined 3154 Reddit posts, of which 42.4% were tagged suicidal. A BERT + CNN + LSTM model reported 94% precision, 95% recall, and 93.65% accuracy, with robust performance validated through 10-fold cross-validation. Analysis showed significant shifts in suicidal language over the course of the COVID-19 pandemic, with XAI tools (LIME and SHAP) identifying salient terms such as “COVID,” “life,” and “die” as robust predictors of suicidal ideation. |

| Allam H. et al. AI-Driven Mental Health Surveillance: Identifying Suicidal Ideation Through Machine Learning Techniques (Allam et al., 2025) | Big Data and Cognitive Computing | The article’s goal is to use internet language as a monitoring and early intervention tool while utilizing AI and machine learning to enhance suicide prevention. | Predictive | AI-based methods | Twitter 20,000 English | The model achieved 85% accuracy, 88% precision, and 83% recall in identifying suicidal posts. It picked up notable emotional markers like “hurt,” “lost,” and “better without.” The balanced performance was 0.85 for F1-score and 0.93 for PR-AUC, but higher recall could capture more severe cases. |

| Meng, X. et al. Mining Suicidal Ideation in Chinese Social Media: A Dual-Channel Deep Learning Model with Information Gain Optimization (Meng et al., 2025) | Entropy | Using a fine-grained text enhancement technique, our model is made to process Chinese data and grasp the subtleties of text locale, context, and logical structure. | Predictive | AI-based methods | Sina Weibo ND Chinese | The study found that deep learning models using BERT embeddings outperform traditional models in identifying suicidal ideation on Chinese social media. Combining information gain and attention mechanisms focused the model on significant indicators, whereas small convolution kernels enhanced identification and reduced complexity. Linguistic analysis showed that suicidal users use more verbs and first-person pronouns, which is consistent with the success of the model. |

| Abdulsalam, A. et al. Detecting Suicidality in Arabic Tweets Using Machine Learning and Deep Learning Techniques (Abdulsalam et al., 2024) | Arab J Sci Eng | Use machine learning and deep learning techniques to automatically categorize tweets in Arabic that contain suicidal thoughts (as opposed to non-suicidal ones). | Predictive | AI-based methods | Twitter 5719 Arabic | According to the study, several terms associated with death and self-harm were frequently used in suicidal tweets, including “I want to die” and “I will kill myself.” These tweets were usually between two and twenty words long. The language employed was characterized by emotional turmoil and a clear intention to harm oneself. Furthermore, the number of suicidal tweets decreased around 5 PM after peaking at around 10 PM. The timing and content of these tweets provide important information for enhancing suicidal ideation detection systems. |

| Ahadi S.A. et al. Detecting suicidality from Reddit Posts Using a Hybrid CNN-LSTM Model (Ahadi et al., 2024) | JUCS—Journal of Universal Computer Science | A multi-layered classification model that combines a convolutional neural network (CNN) and a long short-term memory (LSTM) network is used in this paper to propose an integrated framework for identifying suicidal ideation on social media. | Predictive | AI-based methods | Reddit 59,996 English | The study designed a hybrid CNN-LSTM classifier to detect suicidal ideation in text on social media. There was enhanced accuracy, robustness, and reduced overfitting with stacked and bagging ensembles. The best performance was obtained using TF-IDF embeddings, RReLU activation, and the Adam optimizer, boosting performance by up to 15%. Further study is needed to tackle data imbalance, model interpretability, and ethical issues. |

| Boonyarat, P. et al. Leveraging enhanced BERT models for detecting suicidal ideation in Thai social media content amidst COVID-19 (Boonyarat et al., 2024) | Information Processing and Management | The study’s two goals were to identify emotions (ER) and detect suicidal ideation (SID) via binary classification (whether suicidal ideation was present in tweets or not). | Predictive | AI-based methods | Twitter 2400 Thai | The model detected high levels of suicidal ideation and negative emotions in Thai Twitter data, indicating long-standing psychological distress after the COVID-19 pandemic. The results point to long-term mental health effects of pandemic lockdowns, with frustration, hopelessness, and emotional suffering seen among users. The findings are consistent with international trends and emphasize the need for continuous monitoring of mental health. Limitations include the absence of ground truth and the restriction to Twitter data; the authors intend to expand to other platforms. |

| Narynov, S. et al. Applying natural language processing techniques for suicidal content detection in social media (Narynov et al., 2020) | Journal of Theoretical and Applied Information Technology | The goal of this project is to create and assess computer techniques for identifying and assessing depressive and suicidal content in texts from social networks. | Predictive | AI-based methods | Vkontakte microblogging platform ND Russian | Texts were lemmatized and stop-words eliminated prior to vectorization using TF-IDF and Word2Vec. Various classifiers were trained, the optimum result being produced by the Random Forest classifier using TF-IDF at 96%. ROC curve validation and cross-validation were able to establish its accuracy in labeling suicidal and depressive posts. |

| Rezapour, M. Contextual evaluation of suicide-related posts. (Rezapour, 2023) | Humanit Soc Sci Commun | The purpose of this study was to find mental stresses associated with suicide and investigate the posts’ contextual characteristics, particularly those that are incorrectly labeled. | Predictive | AI-based methods | Reddit 22,498 English | Our findings suggest that while machine learning algorithms can identify most of the potentially offending posts, they can also introduce bias and some human touch is required to minimize the bias. We feel that there will be some posts that will be very difficult or impossible for algorithms to accurately label on their own, and they do require human empathy and insight. |

| Desmet, B. et al. Online suicide prevention through optimised text classification (Desmet & Hoste, 2018) | Information Sciences | This study aims to investigate supervised text classification to model and detect suicidality in Dutch-language forum posts as an alternative to the keyword search methods typically used online. | Predictive | AI-based methods | Dutch-language forum ND Dutch | The study showed that automatic text classification can efficiently classify suicide-related and high-risk content with high accuracy, which makes it applicable to prevention in real-life scenarios. While recall was excellent for detecting relevant content, it was low for severity, largely because of implicit references to suicide. Optimization using feature selection and genetic algorithms improved model performance. However, the system’s reliance on labeled data and its restriction to Dutch are significant limitations. Future work involves text normalization and cross-lingual transfer to enhance recall and broaden applicability. |

| Grant RN et al. Automatic extraction of informal topics from online suicidal ideation. (Grant et al., 2018) | BMC Bioinformatics. | By examining social media posts and identifying at-risk individuals using expert-derived indicators and language traits, the study seeks to understand factors connected to suicide. | Interpretative | AI-based methods | Reddit 131,728 English | The cluster analysis was able to identify key themes of suicidal thoughts, self-injury, and abuse that mapped onto expert-identified risk factors. More specific risks like drug abuse and stressors like school pressure, body image, and medical issues were also revealed, though some, like gun ownership, were less represented. |

| Jere S. et al. Detection of Suicidal Ideation Based on Relational Graph Attention Network with DNN Classifier (Jere & Patil, 2023) | International Journal of Intelligent Systems and Applications in Engineering | In order to detect suicidal ideation by analyzing Twitter posts, a model based on a Relational Graph Attention Network (RGAT) in conjunction with a DNN classifier will be developed and evaluated. This model will integrate natural language processing techniques to identify at-risk individuals early and outperform current models. | Predictive | AI-based methods | Reddit 233,372 English | The study proved that automatic text classification can accurately pinpoint suicide-related content and can be used for prevention. While high recall was established on relevant posts, it was low on severity due to implicit references. Feature selection and genetic algorithms increased performance. The limitations are based on labeled data and Dutch focus. Future work aims to increase recall via cross-lingual transfer and normalization of the text. |

| Luo J Exploring temporal suicidal behavior patterns on social media: Insight from Twitter analytics. (Luo et al., 2020) | Health Informatics J. | 1. Content analysis was used to identify suicide risk variables in tweets; 2. Suicidality was quantitatively measured to identify temporal patterns of suicidal behavior; and 3. A method for examining temporal patterns of suicidal behavior on Twitter was proposed. | Predictive | AI-based methods | Twitter 716,899 English | The study identified 13 latent suicide-related topics in tweets using NMF topic modeling. Depression, school, low mood, and loneliness were among the significant risk factors. Temporal analysis showed suicide-related activity on weekends for a few topics and on Monday/Tuesday for others. Every topic showed distinct weekly patterns, supporting the view of suicidality as a time-varying concept. These results could be applied in formulating targeted suicide prevention interventions based on behavioral patterns. |

| Slemon A. et al. Reddit Users’ Experiences of Suicidal Thoughts During the COVID-19 Pandemic: A Qualitative Analysis of r/Covid19_support Posts. (Slemon et al., 2021) | Frontiers in Public Health | To investigate the ways in which members of the r/COVID19_support group on Reddit talk about having suicidal thoughts during the COVID-19 pandemic. | Interpretative | Traditional Braun and Clarke’s thematic analysis | Reddit 83 English | The study determined that employment and financial concerns, pre-existing mental illness, and isolation, each heightened by the pandemic, amplified suicidal ideation. Despite online relationships, users still felt isolated. The semi-anonymity of Reddit allowed open discussion of mental illness, suggesting possible facilitated integration, but conscientious implementation is required. |

| Sarsam, S. M. et al. A lexicon-based approach to detecting suicide-related messages on Twitter (Sarsam et al., 2021) | Biomedical Signal Processing and Control | This study investigated how emotions in Twitter posts can be used to identify information related to suicide. | Predictive | AI-based methods | Twitter 4987 English | The study identified that suicide tweets were more angry, sad, and negative in sentiment, while non-suicide tweets were more angry and cheerful. The YATSI classifier, using a semi-supervised approach, was better than the existing methods with high accuracy and 94% agreement with expert labels, indicating improved detection of suicide tweets. |

| Sawhney R et al. Robust suicide risk assessment on social media via deep adversarial learning. (Sawhney et al., 2021) | Journal of the American Medical Informatics Association | To enhance the model’s capacity for generalization, we provide Adversarial Suicide assessment Hierarchical Attention (ASHA), a hierarchical attention model that uses adversarial learning. | Predictive | AI-based methods | Reddit 500 English | The study finds that ASHA outperforms deep and standard models in correctly detecting temporal trends and varied risk levels. Adversarial training acts as a regularizer and reduces overfitting. All of the model components (ordinal loss, sequential modeling, and adversarial learning) contribute to improved performance. ASHA also picks up on subtle suicidal signals such as misspellings and indirect speech and aligns more closely with human judgment than previous models. |

| Gu Y et al. Suicide Possibility Scale Detection via Sina Weibo Analytics: Preliminary Results. (Gu et al., 2023) | Int J Environ Res Public Health | We developed a suicide risk-prediction model that uses machine-learning algorithms to extract text patterns from Sina Weibo data in order to forecast four aspects of the Suicide Possibility Scale (SPS): hopelessness, suicidal thoughts, poor self-evaluation, and hostility. | Predictive | AI-based methods | Sina Weibo 37,474 English | The research employed 121 linguistic features, most of which were taken from SCLIWC and the Moral Foundations Dictionary, to forecast the four SPS dimensions. The model showed satisfactory reliability for Hopelessness (0.72) and Hostility (0.81), with moderate predictive validity (Pearson correlation of approximately 0.35). Overall, linguistic analysis of Weibo posts demonstrated satisfactory predictive power. |

| Guidry JPD et al. Pinning Despair and Distress: Suicide-Related Content on Visual Social Media Platform Pinterest. (Guidry et al., 2021) | Crisis. | This study’s goal was to examine Pinterest posts on suicide. | Interpretative | Traditional quantitative content analysis | Pinterest 500 English | Most suicide-related Pinterest posts were from individuals and attracted more interaction than organizational posts. Commenters commonly identified themselves as having suicidal thoughts; more than half of the comments were positive, but 24.1% were negative or bullying. WHO guideline information was more common in images than in captions but generated less interaction. Emotional and informational support were mostly conveyed in images, although only instrumental support, which was infrequent, was linked to higher interaction. |

| Rabani, S.T. et al. Detecting suicidality on social media: Machine learning at rescue (Rabani et al., 2023) | Egyptian Informatics Journal | The aim of this research is to create a multi-class machine learning classifier to recognize varying degrees of suicidal risk in social media messages. | Predictive | AI-based methods | Twitter and Reddit 19,915 English | The study found that the XGB-EFASRI model performed best among the machine learning models in identifying suicidal ideation (accuracy of 96.33%) by capturing both linear and nonlinear patterns of features. Content analysis revealed that high-risk posts contained explicit suicidal intent and extreme despair, moderate-risk posts contained emotional distress without explicit suicidal language, and no-risk posts contained general awareness or information. Topic modeling confirmed clear semantic variations by risk level, with topics such as loneliness, emptiness, and hopelessness being characteristic of high-risk material. |

| Anika, S et al. Analyzing Multiple Data Sources for Suicide Risk Detection: A Deep Learning Hybrid Approach (Anika et al., 2024) | International Journal of Advanced Computer Science and Applications | This study’s goal is to automatically identify suicide risk in user-generated content from social media sites by utilizing Natural Language Processing (NLP) and Sentiment Analysis techniques, especially through sophisticated deep learning models. | Predictive | AI-based methods | Twitter and Reddit 241,193 English | Content analysis revealed linguistic differences between suicidal and non-suicidal posts, visualized as word clouds of frequent words. Skip-Gram word embeddings captured informative word relationships best. The BiGRU-CNN model outperformed the other models in suicidality prediction, with good accuracy on both the Reddit (93.07%) and Twitter (92.47%) corpora. The model effectively captured emotional and contextual information in text and generalized well across platforms. Deep learning algorithms consistently surpassed traditional machine learning classifiers in handling the nuances of suicidal language. |

| Priyamvada, B. et al. Stacked CNN—LSTM approach for prediction of suicidal ideation on social media (Priyamvada et al., 2023) | Multimedia Tools and Applications | The goal of the study is to determine which machine learning and deep learning models are more effective at identifying suicidal ideation signals in Twitter messages. | Predictive | AI-based methods | Twitter 5126 + 4833 English | Content analysis revealed clear lexical divergence between suicidal and non-suicidal tweets: suicidal tweets showed repetitive use of question marks and expressions of despair, while non-suicidal tweets showed positivity and joy. The classification phase compared several machine learning models, among which XGBoost outperformed the other traditional methods with ~89% accuracy. Among the deep learning models using Word2Vec embeddings, the Stacked CNN—2 Layer LSTM model provided the highest accuracy (93.92%). The hybrid CNN-LSTM architecture helped mitigate overfitting and improved stability and predictive capability. Overall, combining CNN and LSTM models was superior to using either alone. |

| Aldhyani, T.H.H. et al. Detecting and Analyzing Suicidal Ideation on Social Media Using Deep Learning and Machine Learning Models (Aldhyani et al., 2022) | International Journal of Environmental Research and Public Health | The aim of this work is to propose an experimental research-based approach to create a system for detecting suicidal thoughts. | Predictive | AI-based methods | Reddit 232,074 + 116,037 English | The study analyzed Reddit posts to predict suicidal ideation using textual characteristics and psychometric LIWC-22 characteristics. Posts indicating suicidal ideation had higher scores on authenticity, anxiety, depression, negativity, and despondency, whereas non-suicidal posts had higher scores on attention, perception, sociality, and cognitive processes. The CNN–BiLSTM model achieved 95% accuracy using textual characteristics, outperforming XGBoost, while XGBoost outperformed CNN–BiLSTM with LIWC characteristics. Statistical testing confirmed that individuals at risk of suicide show clear indications of psychological distress. Word cloud visualization showed the most frequently repeated words expressing suicidal ideation. |

| Chatterjee, M. et al. Suicide ideation detection from online social media: A multi-modal feature based technique (Chatterjee et al., 2022) | International Journal of Information Management Data Insights | The primary goal of this study is to assess online social media in order to enable early diagnosis of suicidal thinking. | Predictive | AI-based methods | Twitter and Reddit 188,704 English | Emotional, linguistic, and temporal features were identified as important predictors of suicidal ideation on social media. Individuals posting suicidal ideation were frequently sad, anxious, or depressed and used more negative language and emoticons. Temporal analysis revealed that 73% of the suicidal users were active between 6 p.m. and 6 a.m., suggesting that nighttime use was correlated with depressive states of mind. Sentiment, emoticon use, and personality features were systematically extracted from each post to identify implicit emotional signals. When all extracted features were used, Logistic Regression achieved the highest classification accuracy, followed by SVM. |

| Renjith, S. et al. An ensemble deep learning technique for detecting suicidal ideation from posts in social media platforms (Renjith et al., 2022) | Journal of King Saud University—Computer and Information Sciences | The goal of the project is to develop a hybrid deep learning model that will enhance the automatic classification of Reddit texts that contain suicidal thoughts. The posts are analyzed using LSTM, CNN, and an attention mechanism. | Predictive | AI-based methods | Reddit 69,600 English | Severe Risk posts often used negative words, self-referential words, and death-related words, while No Risk posts contained more positive words. Word clouds and n-gram frequencies visualized the distinctions between the posts. |

| Saha, S. et al. An Investigation of Suicidal Ideation from Social Media Using Machine Learning Approach (Saha et al., 2023) | Baghdad Science Journal | This study’s goal is to use machine learning to assess factors of suicidal behavior in people. | Predictive | AI-based methods | Twitter ND English | The study compared different machine learning models to determine suicidal ideation from social media posts. SVM yielded the maximum accuracy (88.6%), and Gaussian Naive Bayes yielded the highest precision (77.3%). Suicidal posts had more despair-related words, self-injury-related words, and hopelessness-related words, and non-suicidal posts had more positive words. |

| Chadha, A. A Hybrid Deep Learning Model Using Grid Search and Cross-Validation for Effective Classification and Prediction of Suicidal Ideation from Social Network Data (Chadha & Kaushik, 2022) | New Generation Computing | The goal of this study is to create an effective learning model that can recognize people who are displaying suicidal thoughts by precisely evaluating social media data. | Predictive | AI-based methods | Reddit 10,000 + 10,000 English | The authors used 20,000 Reddit posts to classify suicidal vs. non-suicidal content. After preprocessing with lemmatization and word vectorization, they tested CNN, CNN-LSTM, and CNN-LSTM with attention (ACL). The best F1 score (90.82%) and precision (87.36%) were achieved using ACL with GloVe embeddings and hyperparameter tuning, while the highest recall (94.94%), corresponding to the fewest missed suicidal posts, was achieved using the random embedding version. |

| Liu, J. et al. Detecting Suicidal Ideation in Social Media: An Ensemble Method Based on Feature Fusion (J. Liu et al., 2022) | International Journal of Environmental Research and Public Health | The final objective is to use a hybrid technique that integrates linguistic, psychological, and semantic data to develop an efficient and customized model that can correctly identify suicidal ideation in posts. | Predictive | AI-based methods | Weibo 2272 + 37,950 Chinese | The experiment showed that, among individual feature sets, suicide risk factor features were the best predictors of suicidal ideation in Weibo posts, with an F1-score of 72.77%. Merging different aspects of content, particularly basic statistics, suicide-related words, and word embeddings, also improved performance. The best content-based model (BSC + RFS + WEC) had an accuracy of 80.15% and an F1-score of 78.60% after feature selection, which eliminated noise and highlighted key patterns. |

| Ramírez-Cifuentes, D et al. Detection of suicidal ideation on social media: Multimodal, relational, and behavioral analysis (Ramírez-Cifuentes et al., 2020) | Journal of Medical Internet Research | Suicide risk assessment was the main application of this study, which investigated the identification of mental health issues on social media. | Predictive | Mixed Method | Twitter 98,619 English | The study emphasizes the necessity of text features to measure suicide risk on social media. Through manually constructed suicide-specific vocabulary (SPV), researchers improved model accuracy by eliminating meaningless text. Self-references, posting frequency, and time intervals between posts were strong predictors (p < 0.001). Models trained on generic control users performed better than models that used controls with similar suicidal content. Merging text analysis with relational, behavioral, and image data provided more accurate and understandable predictions. |

| Tadesse, M.M. et al. Detection of suicide ideation in social media forums using deep learning (Tadesse et al., 2020) | Algorithms | The project aims to compare a hybrid prediction model based on deep learning (LSTM + CNN) with more conventional machine learning models in order to detect suicidal ideation in Reddit postings. | Predictive | AI-based methods | Reddit 3549 + 3652 English | The study compared frequent n-grams in suicide-indicative and non-suicidal Reddit posts; suicidal posts signaled hopelessness, anxiety, guilt, and self-focus, whereas non-suicidal posts signaled positivity and social interaction. Rhetorical questions and death-related words signaling distress were prevalent in suicidal posts. The classification experiments indicated that deep learning models, specifically an LSTM-CNN hybrid model using word embeddings, outperformed standard machine learning baselines. This model achieved the highest accuracy (93.8%) and F1 score (92.8%) in suicidal ideation detection. Tuning of features and parameters, such as ReLU activation and max-pooling, also improved model performance. |

| Roy, A. et al. A machine learning approach predicts future risk to suicidal ideation from social media data (Roy et al., 2020) | npj Digital Medicine | By examining publicly available Twitter data, the aim was to create an algorithm known as the “Suicide Artificial Intelligence Prediction Heuristic (SAIPH)” that can forecast the likelihood of suicidal thoughts in the future. | Predictive | AI-based methods | Twitter 283 + 3,518,494 English | Neural network models correctly predicted various psychological states with over 70% AUC. Using tweets, they detected suicidal ideation (SI) events with high accuracy (up to 0.90 AUC) and were better than sentiment analysis. Models also detected individuals who had a history of suicide attempts or plans and were stable across sex and age groups. Temporal patterns revealed risk scores are highest surrounding SI events, which suggests early warning potential. Regional SI scores were highly correlated with county-level suicide rates, particularly among youths. |

| Rabani, S.T. et al. Detection of suicidal ideation on Twitter using machine learning & ensemble approaches (Rabani et al., 2020) | Baghdad Science Journal | In order to help with the early detection and prevention of this severe mental health condition, this research suggests a methodology and experimental strategy that makes use of social media to more thoroughly analyze suicidal ideation. | Predictive | AI-based methods | Twitter 18,756 English | Content analysis was guided by mental health professionals who categorized tweets as suicidal or non-suicidal depending on words and context. Only tweets with at least 75% inter-annotator agreement were used, to ensure annotation quality. Features were extracted with TF-IDF, and less important features were removed using information gain. The highest-performing model, Random Forest, recorded a 98.5% accuracy rate in detecting suicidal content. |
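The studies in this appendix report accuracy, precision, recall, and F1 score, which are related but not interchangeable; for example, a model can reach high accuracy on imbalanced data while still missing many at-risk posts (low recall). The following minimal sketch shows how these metrics are commonly derived from the four confusion-matrix counts of a binary classifier. The function name and the example counts are hypothetical illustrations and are not taken from any reviewed study.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Compute binary-classification metrics from confusion-matrix counts.

    The positive class is assumed to be 'suicidal ideation present'.
    tp: true positives, fp: false positives,
    fn: false negatives (missed at-risk posts), tn: true negatives.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts for illustration only: 1000 posts, 90 of them at-risk.
metrics = classification_metrics(tp=80, fp=20, fn=10, tn=890)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these illustrative counts, accuracy is high mainly because most posts are not at-risk, whereas precision and recall describe performance on the at-risk class itself. This is one reason why headline accuracy figures from different studies are difficult to compare directly.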

Appendix B. Glossary for Readers Related to Main Terminology Used in AI Qualitative Analysis

| Preprocessing | the stage of preparing and cleaning raw data—for example, by removing errors, missing values, or noise—to make it ready for analysis or use in machine learning models. |

| Lowercasing | process of converting all letters in a text to lowercase |

| Tokenization | process used to divide text into smaller units of information, such as words, phrases, or symbols. |

| Stopword removal | process of eliminating common words that provide little meaningful information. |

| Stemming | the reduction of words to their root or base form, usually by removing suffixes and sometimes prefixes. |

| Lemmatization | process applied to words to transform them into a base or dictionary representation known as a lemma, through the application of linguistic rules. |

| Part-of-speech tagging | categorization of words in a text into their grammatical categories, such as nouns, verbs, and adjectives. |

| Word embedding | representation of words as real-valued vectors that capture their contextual, semantic, and syntactic properties. These vectors reflect the degree of similarity between words, allowing models to recognize related meanings based on proximity in vector space. |

| TF-IDF | statistical method that converts text into numerical vectors by weighting each word according to its frequency within a document and its rarity across the whole collection of documents. |

| Sentiment analysis | process of detecting and categorizing opinions or emotions expressed in text, usually as positive, negative, or neutral. |

| LIWC | tool for text analysis that quantifies the psychological, emotional, and structural elements of language through predefined word categories. |

| VADER | rule-based sentiment analysis tool designed for social media text that combines a lexicon with intensity measures to capture the polarity of sentiment. |

| SentiWordNet | lexical resource in which each WordNet synset is assigned numerical scores for positive, negative, and neutral sentiment. |

| LSTM | type of recurrent neural network designed to capture long-term dependencies in sequential data |

| CNN | neural network architecture that uses convolutional layers to extract hierarchical features from data |

| LSTM-CNN | hybrid neural network that combines the LSTM layer for capturing sequential dependencies within text with the CNN layers for extracting feature patterns of a local nature. |

| BERT | transformer-based language model that uses both left and right contexts to generate contextualized word embeddings. |
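Several glossary entries above (lowercasing, tokenization, stopword removal, stemming, TF-IDF) describe steps that are typically chained together before a classifier is trained. The following minimal sketch illustrates that chain using only the Python standard library. The stopword list, the suffix-stripping stemmer, and the two example posts are simplified assumptions made for illustration; the reviewed studies used their own tools and settings, and library implementations of TF-IDF often apply smoothing that this sketch omits.

```python
import math
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption; real lists are much longer).
STOPWORDS = {"i", "the", "a", "an", "is", "am", "and", "to", "of", "so"}


def naive_stem(token: str) -> str:
    """Strip a few common suffixes; a crude stand-in for a real stemmer."""
    for suffix in ("ing", "ed", "s"):
        if suffix == "s" and token.endswith("ss"):
            continue  # keep words such as 'hopeless' intact
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text: str) -> list[str]:
    """Lowercase, tokenize, remove stopwords, and stem a text."""
    tokens = re.findall(r"[a-z']+", text.lower())       # lowercasing + tokenization
    tokens = [t for t in tokens if t not in STOPWORDS]  # stopword removal
    return [naive_stem(t) for t in tokens]              # stemming


def tf_idf(docs: list[list[str]]) -> list[dict[str, float]]:
    """Weight each term by term frequency times inverse document frequency."""
    n_docs = len(docs)
    # Number of documents in which each term appears at least once.
    doc_freq = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


# Two invented example posts, for illustration only.
posts = ["I feel hopeless and alone", "Walking the dogs, feeling hopeful"]
docs = [preprocess(p) for p in posts]
weights = tf_idf(docs)
print(docs)
print(weights[0])
```

In this sketch, a term that appears in every document receives a weight of zero, while terms specific to one document receive positive weights. This is the sense in which TF-IDF emphasizes words that distinguish one text from the others.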

References

- Abdulsalam, A., Alhothali, A., & Al-Ghamdi, S. (2024). Detecting suicidality in Arabic tweets using machine learning and deep learning techniques. Arabian Journal for Science and Engineering, 49(9), 12729–12742.

- Agarwal, D., Singh, V., Singh, A. K., & Madan, P. (2025). Dwarf updated pelican optimization algorithm for depression and suicide detection from social media. Psychiatric Quarterly, 96, 529–562.

- Ahadi, S. A., Jazayeri, K., & Tebyani, S. (2024). Detecting suicidality from reddit posts using a hybrid CNN-LSTM model. Journal of Universal Computer Science, 30(13), 1872–1904.

- Aldhyani, T. H. H., Alsubari, S. N., Alshebami, A. S., Alkahtani, H., & Ahmed, Z. A. T. (2022). Detecting and analyzing suicidal ideation on social media using deep learning and machine learning models. International Journal of Environmental Research and Public Health, 19(19), 12635.

- Alghazzawi, D., Ullah, H., Tabassum, N., Badri, S. K., & Asghar, M. Z. (2025). Explainable AI-based suicidal and non-suicidal ideations detection from social media text with enhanced ensemble technique. Scientific Reports, 15(1), 1111.

- Ali, A., & Gibson, K. (2019). Young people’s reasons for feeling suicidal: An analysis of posts to a social media suicide prevention forum. Crisis, 40(6), 400–406.

- Allam, H., Davison, C., Kalota, F., Lazaros, E., & Hua, D. (2025). AI-driven mental health surveillance: Identifying suicidal ideation through machine learning techniques. Big Data and Cognitive Computing, 9(1), 16.

- Anika, S., Dewanjee, S., & Muntaha, S. (2024). Analyzing multiple data sources for suicide risk detection: A deep learning hybrid approach. International Journal of Advanced Computer Science and Applications, 15(2), 675–683.

- Badian, Y., Ophir, Y., Tikochinski, R., Calderon, N., Klomek, A. B., Fruchter, E., & Reichart, R. (2023). Social media images can predict suicide risk using interpretable large language-vision models. The Journal of Clinical Psychiatry, 85(1), 50516.

- Belli, G., Trentarossi, B., Romão, M. E., Baptista, M. N., Barello, S., & Visonà, S. D. (2025). Suicide notes: A scoping review of qualitative studies to highlight methodological opportunities for prevention. OMEGA—Journal of Death and Dying.

- Benjachairat, P., Senivongse, T., Taephant, N., Puvapaisankit, J., Maturosjamnan, C., & Kultananawat, T. (2024). Classification of suicidal ideation severity from Twitter messages using machine learning. International Journal of Information Management Data Insights, 4(2), 100280.

- Beriña, J. M., & Palaoag, T. D. (2024). A natural language processing model for early detection of suicidal ideation in textual data. Journal of Information Systems Engineering and Management, 10(6s), 422–432.

- Birk, R. H., & Samuel, G. (2022). Digital phenotyping for mental health: Reviewing the challenges of using data to monitor and predict mental health problems. Current Psychiatry Reports, 24(10), 523–528.

- Boonyarat, P., Liew, D. J., & Chang, Y.-C. (2024). Leveraging enhanced BERT models for detecting suicidal ideation in Thai social media content amidst COVID-19. Information Processing & Management, 61(4), 103706.

- Bouktif, S., Khanday, A. M. U. D., & Ouni, A. (2025). Explainable predictive model for suicidal ideation during COVID-19: Social media discourse study. Journal of Medical Internet Research, 27, e65434.

- Cai, Z., Mao, P., Wang, Z., Wang, D., He, J., & Fan, X. (2023). Associations between problematic internet use and mental health outcomes of students: A meta-analytic review. Adolescent Research Review, 8(1), 45–62.

- Carlyle, K. E., Guidry, J. P. D., Williams, K., Tabaac, A., & Perrin, P. B. (2018). Suicide conversations on Instagram™: Contagion or caring? Journal of Communication in Healthcare, 11(1), 12–18.

- Cash, S. J., Thelwall, M., Peck, S. N., Ferrell, J. Z., & Bridge, J. A. (2013). Adolescent suicide statements on MySpace. Cyberpsychology, Behavior, and Social Networking, 16(3), 166–174.

- Chadha, A., & Kaushik, B. (2022). A hybrid deep learning model using grid search and cross-validation for effective classification and prediction of suicidal ideation from social network data. New Generation Computing, 40(4), 889–914.

- Chatterjee, M., Kumar, P., Samanta, P., & Sarkar, D. (2022). Suicide ideation detection from online social media: A multi-modal feature based technique. International Journal of Information Management Data Insights, 2(2), 100103.

- Desmet, B., & Hoste, V. (2018). Online suicide prevention through optimised text classification. Information Sciences, 439–440, 61–78.

- Falcone, T., Dagar, A., Castilla-Puentes, R. C., Anand, A., Brethenoux, C., Valleta, L. G., Furey, P., Timmons-Mitchell, J., & Pestana-Knight, E. (2020). Digital conversations about suicide among teenagers and adults with epilepsy: A big-data, machine learning analysis. Epilepsia, 61(5), 951–958.

- García-Martínez, C., Oliván-Blázquez, B., Fabra, J., Martínez-Martínez, A. B., Pérez-Yus, M. C., & López-Del-Hoyo, Y. (2022). Exploring the risk of suicide in real time on Spanish Twitter: Observational study. JMIR Public Health and Surveillance, 8(5), e31800.

- Grant, R. N., Kucher, D., León, A. M., Gemmell, J. F., Raicu, D. S., & Fodeh, S. J. (2018). Automatic extraction of informal topics from online suicidal ideation. BMC Bioinformatics, 19, 211.

- Gu, Y., Chen, D., & Liu, X. (2023). Suicide possibility scale detection via Sina Weibo analytics: Preliminary results. International Journal of Environmental Research and Public Health, 20(1), 466.

- Guidry, J. P. D., O’Donnell, N. H., Miller, C. A., Perrin, P. B., & Carlyle, K. E. (2021). Pinning despair and distress-suicide-related content on visual social media platform Pinterest. Crisis, 42(4), 270–277.

- Horne, J., & Wiggins, S. (2009). Doing being “on the edge”: Managing the dilemma of being authentically suicidal in an online forum. Sociology of Health and Illness, 31(2), 170–184.

- Huang, Y.-P., Goh, T., & Liew, C. L. (2007, December 10–12). Hunting suicide notes in Web 2.0—Preliminary findings. Ninth IEEE International Symposium on Multimedia Workshops (ISMW 2007) (pp. 517–521), Taichung, Taiwan.

- Jere, S., & Patil, A. P. (2023). Detection of suicidal ideation based on relational graph attention network with DNN classifier. International Journal of Intelligent Systems and Applications in Engineering, 11(10s), 321–332.

- Karbeyaz, K., Akkaya, H., Balci, Y., & Urazel, B. (2014). İntihar notlarinin analizi: Eskişehir deneyimi. Nöro Psikiyatri Arşivi, 51, 275–279.

- Lao, C., Lane, J., & Suominen, H. (2022). Analyzing suicide risk from linguistic features in social media: Evaluation study. JMIR Formative Research, 6(8), e35563.

- Lim, Y. Q., & Loo, Y. L. (2023). Characteristics of multi-class suicide risks tweets through feature extraction and machine learning techniques. JOIV: International Journal on Informatics Visualization, 7(4), 2297.

- Liu, J., Shi, M., & Jiang, H. (2022). Detecting suicidal ideation in social media: An ensemble method based on feature fusion. International Journal of Environmental Research and Public Health, 19(13), 8197.

- Liu, X., & Liu, X. (2021). Online suicide identification in the framework of rhetorical structure theory (RST). Healthcare, 9(7), 847.

- Liu, X., Liu, X., Sun, J., Yu, N. X., Sun, B., Li, Q., & Zhu, T. (2019). Proactive suicide prevention online (PSPO): Machine identification and crisis management for Chinese social media users with suicidal thoughts and behaviors. Journal of Medical Internet Research, 21(5), e11705.

- Loch, A. A., & Kotov, R. (2025). Promises and pitfalls of internet search data in mental health: Critical review. JMIR Mental Health, 12, e60754.

- Luo, J., Du, J., Tao, C., Xu, H., & Zhang, Y. (2020). Exploring temporal suicidal behavior patterns on social media: Insight from Twitter analytics. Health Informatics Journal, 26(2), 738–752.

- Meng, X., Cui, X., Zhang, Y., Wang, S., Wang, C., Li, M., & Yang, J. (2025). Mining suicidal ideation in Chinese social media: A dual-channel deep learning model with information gain optimization. Entropy, 27(2), 116.

- Metzler, H., Baginski, H., Niederkrotenthaler, T., & Garcia, D. (2022). Detecting potentially harmful and protective suicide-related content on Twitter: Machine learning approach. Journal of Medical Internet Research, 24(8), e34705.

- Mirtaheri, S. L., Greco, S., & Shahbazian, R. (2023). A self-attention TCN-based model for suicidal ideation detection from social media posts. Available online: https://ssrn.com/abstract=4410421 (accessed on 26 July 2025).

- Mobin, M. I., Suaib Akhter, A. F. M., Mridha, M. F., Hasan Mahmud, S. M., & Aung, Z. (2024). Social media as a mirror: Reflecting mental health through computational linguistics. IEEE Access, 12, 130143–130164.

- Narynov, S., Kozhakhmet, K., Mukhtarkhanuly, D., & Saparkhojayev, N. (2020). Applying natural language processing techniques for suicidal content detection in social media. Journal of Theoretical and Applied Information Technology, 15(21), 3390–3404.

- Niederkrotenthaler, T., Braun, M., Pirkis, J., Till, B., Stack, S., Sinyor, M., Tran, U. S., Voracek, M., Cheng, Q., Arendt, F., Scherr, S., Yip, P. S. F., & Spittal, M. J. (2020). Association between suicide reporting in the media and suicide: Systematic review and meta-analysis. BMJ, 368, m575.

- Ouzzani, M., Hammady, H., Fedorowicz, Z., & Elmagarmid, A. (2016). Rayyan—A web and mobile app for systematic reviews. Systematic Reviews, 5(1), 210.

- Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., … Moher, D. (2021). The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ, 372, n71.

- Priyamvada, B., Singhal, S., Nayyar, A., Jain, R., Goel, P., Rani, M., & Srivastava, M. (2023). Stacked CNN—LSTM approach for prediction of suicidal ideation on social media. Multimedia Tools and Applications, 82(18), 27883–27904.

- Ptaszynski, M., Zasko-Zielinska, M., Marcinczuk, M., Leliwa, G., Fortuna, M., Soliwoda, K., Dziublewska, I., Hubert, O., Skrzek, P., Piesiewicz, J., Karbowska, P., Dowgiallo, M., Eronen, J., Tempska, P., Brochocki, M., Godny, M., & Wroczynski, M. (2021). Looking for razors and needles in a haystack: Multifaceted analysis of suicidal declarations on social media—A pragmalinguistic approach. International Journal of Environmental Research and Public Health, 18(22), 11759.

- Rabani, S. T., Khan, Q. R., & Ud Din Khanday, A. M. (2020). Detection of suicidal ideation on Twitter using machine learning & ensemble approaches. Baghdad Science Journal, 17(4), 1328–1339.

- Rabani, S. T., Ud Din Khanday, A. M., Khan, Q. R., Hajam, U. A., Imran, A. S., & Kastrati, Z. (2023). Detecting suicidality on social media: Machine learning at rescue. Egyptian Informatics Journal, 24(2), 291–302.

- Ramírez-Cifuentes, D., Freire, A., Baeza-Yates, R., Puntí, J., Medina-Bravo, P., Velazquez, D. A., Gonfaus, J. M., & Gonzàlez, J. (2020). Detection of suicidal ideation on social media: Multimodal, relational, and behavioral analysis. Journal of Medical Internet Research, 22(7), e17758.

- Renjith, S., Abraham, A., Jyothi, S. B., Chandran, L., & Thomson, J. (2022). An ensemble deep learning technique for detecting suicidal ideation from posts in social media platforms. Journal of King Saud University—Computer and Information Sciences, 34(10), 9564–9575.

- Rezapour, M. (2023). Contextual evaluation of suicide-related posts. Humanities and Social Sciences Communications, 10(1), 895.

- Roy, A., Nikolitch, K., McGinn, R., Jinah, S., Klement, W., & Kaminsky, Z. A. (2020). A machine learning approach predicts future risk to suicidal ideation from social media data. npj Digital Medicine, 3(1), 78.

- Saha, S., Dasgupta, S., Anam, A., Saha, R., Nath, S., & Dutta, S. (2023). An investigation of suicidal ideation from social media using machine learning approach. Baghdad Science Journal, 20, 1164–1181.

- Sarsam, S. M., Al-Samarraie, H., Alzahrani, A. I., Alnumay, W., & Smith, A. P. (2021). A lexicon-based approach to detecting suicide-related messages on Twitter. Biomedical Signal Processing and Control, 65, 102355.

- Sawhney, R., Joshi, H., Gandhi, S., Jin, D., & Shah, R. R. (2021). Robust suicide risk assessment on social media via deep adversarial learning. Journal of the American Medical Informatics Association, 28(7), 1497–1506.

- Shukla, S. S. P., & Singh, M. P. (2025). Enhancing suicidal ideation detection through advanced feature selection and stacked deep learning models. Applied Intelligence, 55(5), 303.

- Slemon, A., McAuliffe, C., Goodyear, T., McGuinness, L., Shaffer, E., & Jenkins, E. K. (2021). Reddit users’ experiences of suicidal thoughts during the COVID-19 pandemic: A qualitative analysis of r/COVID19_support posts. Frontiers in Public Health, 9, 693153.

- Tadesse, M. M., Lin, H., Xu, B., & Yang, L. (2020). Detection of suicide ideation in social media forums using deep learning. Algorithms, 13(1), 7.

- Trentarossi, B., Romão, M. E., Baptista, M. N., Barello, S., Visonà, S. D., & Belli, G. (2025, November 19). Digital detection of suicidal ideation: A scoping review to inform prevention and psychological well-being. Available online: https://osf.io/myjdp (accessed on 26 July 2025).

- Tricco, A. C., Lillie, E., Zarin, W., O’Brien, K. K., Colquhoun, H., Levac, D., Moher, D., Peters, M. D. J., Horsley, T., Weeks, L., Hempel, S., Akl, E. A., Chang, C., McGowan, J., Stewart, L., Hartling, L., Aldcroft, A., Wilson, M. G., Garritty, C., … Straus, S. E. (2018). PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Annals of Internal Medicine, 169(7), 467–473.

- Walling, M. A. (2021). Suicide contagion. Current Trauma Reports, 7(4), 103–114.

- Wang, Z., Yu, G., Tian, X., Tang, J., & Yan, X. (2018). A study of users with suicidal ideation on Sina Weibo. Telemedicine and E-Health, 24(9), 702–709.

- Wong, P. W. C., & Li, T. M. H. (2017). Suicide communications on Facebook as a source of information in suicide research: A case study. Available online: https://www.researchgate.net/publication/318850597 (accessed on 25 July 2025).

- World Health Organization. (2025). Suicide. Available online: https://www.who.int/news-room/fact-sheets/detail/suicide (accessed on 25 July 2025).

- Wu, Y.-J., Outley, C., Matarrita-Cascante, D., & Murphrey, T. P. (2016). A systematic review of recent research on adolescent social connectedness and mental health with internet technology use. Adolescent Research Review, 1(2), 153–162.

| Concept | Keywords/Search Terms |
|---|---|
| 1. Suicidal Ideation | “suicid* ideation” OR “suicide intention” OR “suicid* thoughts” |
| 2. Digital Context | “social media” OR “online post*” OR “internet forum*” OR “digital platform*” |
| 3. Research Activity | “analysis” OR “study” OR “evaluation” OR “assessment” OR “investigation” OR “examination” |
| 4. Final Boolean Search | (“suicid* ideation” OR “suicide intention” OR “suicid* thoughts”) AND (“social media” OR “online post*” OR “internet forum*” OR “digital platform*”) AND (“analysis” OR “study” OR “evaluation” OR “assessment” OR “investigation” OR “examination”) |
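The final Boolean search in the table above follows a regular pattern: terms within a concept are joined with OR, and the concept groups are joined with AND. The following minimal sketch reproduces that pattern so the string can be regenerated if a term is added or removed. The dictionary keys and the function name are hypothetical labels chosen for illustration, the code uses straight rather than typographic quotation marks, and individual databases may require different truncation or phrase-search syntax.

```python
# Search terms copied from the search-strategy table; the dictionary keys
# are illustrative labels, not part of the original strategy.
CONCEPTS = {
    "suicidal_ideation": ["suicid* ideation", "suicide intention", "suicid* thoughts"],
    "digital_context": ["social media", "online post*", "internet forum*",
                        "digital platform*"],
    "research_activity": ["analysis", "study", "evaluation", "assessment",
                          "investigation", "examination"],
}


def build_query(concepts: dict[str, list[str]]) -> str:
    """Join terms with OR inside each concept, then join concepts with AND."""
    groups = []
    for terms in concepts.values():
        quoted = " OR ".join(f'"{term}"' for term in terms)
        groups.append(f"({quoted})")
    return " AND ".join(groups)


query = build_query(CONCEPTS)
print(query)
```

Keeping the concept groups in a single structure makes the strategy easier to audit and to adapt when the same search is run on more than one database.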

| Inclusion Criteria |
|---|
| 1. Language: publications in English |
| 2. Studies concerning the analysis of suicidal ideation online |

| Exclusion Criteria |
|---|
| 1. Studies not related to online posts |
| 2. Studies related only to specific categories |
| 3. Not journal articles |
| 4. Literature reviews, letters or opinion papers |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Trentarossi, B.; Romão, M.E.; Barello, S.; Baptista, M.N.; Visonà, S.D.; Belli, G. Digital Detection of Suicidal Ideation: A Scoping Review to Inform Prevention and Psychological Well-Being. Behav. Sci. 2025, 15, 1601. https://doi.org/10.3390/bs15121601
