ChatGPT in Learning: Assessing Students’ Use Intentions through the Lens of Perceived Value and the Influence of AI Literacy

Abstract

1. Introduction

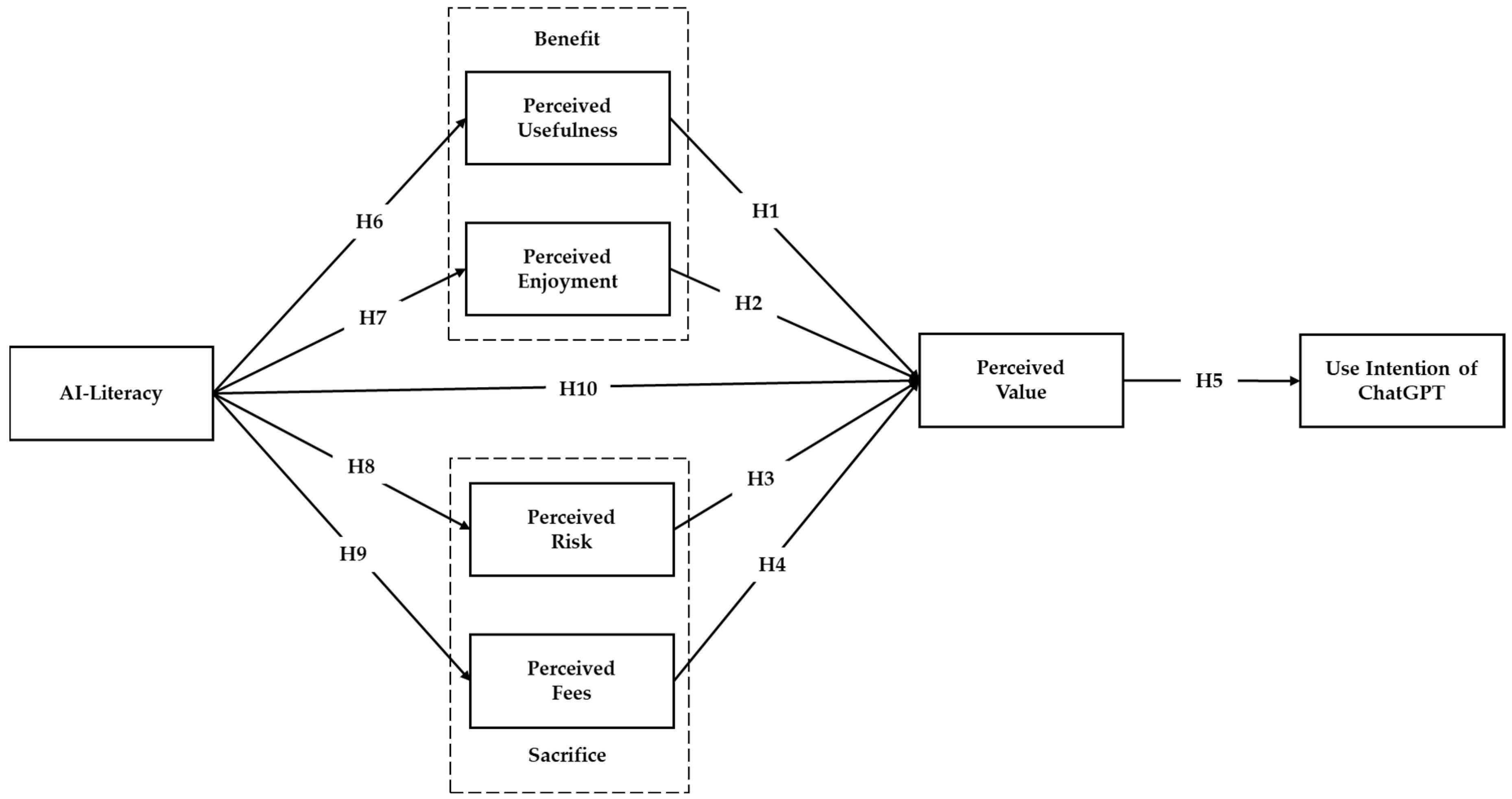

2. Theoretical Foundation

2.1. Value-Based Adoption Model (VAM)

2.1.1. Perceived Benefits and Perceived Value

2.1.2. Perceived Sacrifice and Perceived Value

2.1.3. Perceived Value and ChatGPT Use Intention

2.2. The Role of AI Literacy

2.2.1. AI Literacy and Perceived Benefits

2.2.2. AI Literacy and Perceived Sacrifice

2.2.3. AI Literacy and Perceived Value

3. Method

3.1. Data Collection and Participants

3.2. Data Analysis and Measurement

4. Results

4.1. Measurement Model Analysis

4.2. Structural Model Analysis
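Taken together, the ten hypotheses in the path-coefficient table (H1–H10) specify the structural model below. This is a sketch, not the authors' notation: the abbreviations (PU, PE, PR, PF, PV, UI, AIL for perceived usefulness, enjoyment, risk, fees, value, use intention, and AI literacy) and the disturbance terms ζ are introduced here for illustration, and the hatted values are the standardized estimates reported in that table.

```latex
\begin{aligned}
\mathrm{PU} &= \beta_{6}\,\mathrm{AIL} + \zeta_{1}, & \hat\beta_{6} &= 0.513\\
\mathrm{PE} &= \beta_{7}\,\mathrm{AIL} + \zeta_{2}, & \hat\beta_{7} &= 0.541\\
\mathrm{PR} &= \beta_{8}\,\mathrm{AIL} + \zeta_{3}, & \hat\beta_{8} &= -0.137\\
\mathrm{PF} &= \beta_{9}\,\mathrm{AIL} + \zeta_{4}, & \hat\beta_{9} &= 0.232\\
\mathrm{PV} &= \beta_{1}\,\mathrm{PU} + \beta_{2}\,\mathrm{PE} + \beta_{3}\,\mathrm{PR}
            + \beta_{4}\,\mathrm{PF} + \beta_{10}\,\mathrm{AIL} + \zeta_{5},
  & (\hat\beta_{1},\hat\beta_{2},\hat\beta_{3},\hat\beta_{4},\hat\beta_{10})
  &= (0.433,\,0.226,\,0.054,\,0.172,\,0.158)\\
\mathrm{UI} &= \beta_{5}\,\mathrm{PV} + \zeta_{6}, & \hat\beta_{5} &= 0.748
\end{aligned}
```

Written this way, AI literacy enters perceived value both directly (β10) and indirectly through the four benefit and sacrifice constructs, while use intention depends on perceived value alone.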

5. Discussion and Implications

5.1. Perceived Usefulness and Perceived Value

5.2. Perceived Enjoyment and Perceived Value

5.3. Perceived Risk and Perceived Value

5.4. Perceived Fees and Perceived Value

5.5. Perceived Value and ChatGPT Use Intention

5.6. AI Literacy and Perceived Usefulness

5.7. AI Literacy and Perceived Enjoyment

5.8. AI Literacy and Perceived Risk

5.9. AI Literacy and Perceived Fees

5.10. AI Literacy and Perceived Value

6. Conclusions, Limitations, and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Features | n | % |
|---|---|---|
| Gender | | |
| Male | 109 | 16.1 |
| Female | 567 | 83.9 |
| Age | | |
| ≤18 | 103 | 15.2 |
| 19–20 | 294 | 43.5 |
| 21–22 | 170 | 25.1 |
| 23–24 | 41 | 6.1 |
| ≥25 | 68 | 10.1 |
| Education Level | | |
| Undergraduate | 619 | 91.6 |
| Graduate | 57 | 8.4 |
| Academic Major | | |
| Health sciences | 118 | 17.5 |
| Agriculture | 193 | 28.6 |
| Humanities | 44 | 6.5 |
| Social sciences | 23 | 3.4 |
| Pure sciences | 60 | 8.9 |
| Computer science | 238 | 35.2 |
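Each percentage in the demographic table is a category's share of the 676 respondents (109 + 567 in the gender rows; the age, education, and major groupings also sum to 676). The sketch below recomputes the shares from the counts; the dictionary layout and the `percentages` helper are illustrative assumptions, not part of the study's materials.

```python
# Counts transcribed from the demographic table. Each grouping is assumed to
# partition the same sample, so its own total is used as the denominator.
DEMOGRAPHICS = {
    "Gender": {"Male": 109, "Female": 567},
    "Age": {"≤18": 103, "19–20": 294, "21–22": 170, "23–24": 41, "≥25": 68},
    "Education Level": {"Undergraduate": 619, "Graduate": 57},
    "Academic Major": {
        "Health sciences": 118, "Agriculture": 193, "Humanities": 44,
        "Social sciences": 23, "Pure sciences": 60, "Computer science": 238,
    },
}


def percentages(groups, decimals=1):
    """Return {feature: {category: (n, pct)}}, with pct rounded to `decimals` places."""
    out = {}
    for feature, counts in groups.items():
        total = sum(counts.values())
        out[feature] = {
            category: (n, round(100 * n / total, decimals))
            for category, n in counts.items()
        }
    return out


if __name__ == "__main__":
    for feature, rows in percentages(DEMOGRAPHICS).items():
        print(f"{feature} (n = {sum(n for n, _ in rows.values())})")
        for category, (n, pct) in rows.items():
            print(f"  {category:<16}{n:>5}{pct:>7.1f}")
```

Running it reproduces every percentage in the table to one decimal place.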

| Construct | Indicator (In) | Standardized Indicator Loadings | α | CR | AVE | R² | R² Adjusted | Q² |
|---|---|---|---|---|---|---|---|---|
| Perceived Usefulness | In 1 | 0.91 | 0.892 | 0.892 | 0.822 | 0.263 | 0.262 | 0.259 |
| | In 2 | 0.90 | | | | | | |
| | In 3 | 0.89 | | | | | | |
| Perceived Enjoyment | In 1 | 0.91 | 0.896 | 0.896 | 0.827 | 0.292 | 0.291 | 0.289 |
| | In 2 | 0.92 | | | | | | |
| | In 3 | 0.89 | | | | | | |
| Perceived Risk | In 1 | 0.89 | 0.857 | 0.971 | 0.601 | 0.019 | 0.017 | 0.011 |
| | In 2 | 0.76 | | | | | | |
| | In 3 | 0.84 | | | | | | |
| | In 4 | 0.68 | | | | | | |
| | In 5 | 0.72 | | | | | | |
| Perceived Fees | In 1 | 0.89 | 0.802 | 0.803 | 0.835 | 0.045 | 0.052 | 0.049 |
| | In 2 | 0.91 | | | | | | |
| | In 3 | 0.92 | | | | | | |
| Perceived Value | In 1 | 0.72 | 0.862 | 0.889 | 0.712 | 0.624 | 0.621 | 0.282 |
| | In 2 | 0.90 | | | | | | |
| | In 3 | 0.90 | | | | | | |
| | In 4 | 0.87 | | | | | | |
| Use Intention | In 1 | 0.90 | 0.914 | 0.918 | 0.796 | 0.560 | 0.560 | 0.254 |
| | In 2 | 0.91 | | | | | | |
| | In 3 | 0.93 | | | | | | |
| | In 4 | 0.82 | | | | | | |
| AI Literacy | In 1 | 0.75 | 0.929 | 0.936 | 0.611 | | | |
| | In 2 | 0.80 | | | | | | |
| | In 3 | 0.82 | | | | | | |
| | In 4 | 0.81 | | | | | | |
| | In 5 | 0.77 | | | | | | |
| | In 6 | 0.81 | | | | | | |
| | In 7 | 0.83 | | | | | | |
| | In 8 | 0.77 | | | | | | |
| | In 9 | 0.75 | | | | | | |
| | In 10 | 0.72 | | | | | | |
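The AVE and CR columns summarize the indicator loadings. A minimal sketch of the standard formulas, AVE = Σλ²/k and composite reliability ρc = (Σλ)² / ((Σλ)² + Σ(1 − λ²)), applied to the loadings above is shown below; the helper names are illustrative. Because the loadings are printed to two decimals, recomputed AVE values agree with the table only approximately (about 0.794 against 0.796 for use intention). The table's CR column may have been produced by a different estimator, for example ρA, which PLS-SEM software also reports, so ρc computed this way should not be expected to reproduce it.

```python
from math import fsum

# Standardized loadings transcribed from the measurement-model table.
LOADINGS = {
    "Perceived Usefulness": [0.91, 0.90, 0.89],
    "Perceived Enjoyment": [0.91, 0.92, 0.89],
    "Perceived Risk": [0.89, 0.76, 0.84, 0.68, 0.72],
    "Perceived Fees": [0.89, 0.91, 0.92],
    "Perceived Value": [0.72, 0.90, 0.90, 0.87],
    "Use Intention": [0.90, 0.91, 0.93, 0.82],
    "AI Literacy": [0.75, 0.80, 0.82, 0.81, 0.77, 0.81, 0.83, 0.77, 0.75, 0.72],
}


def ave(loadings):
    """Average variance extracted: the mean of the squared standardized loadings."""
    return fsum(x * x for x in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability (rho_c), assuming uncorrelated indicator errors."""
    total = fsum(loadings)
    error_variance = fsum(1 - x * x for x in loadings)  # 1 - lambda^2 per indicator
    return total * total / (total * total + error_variance)


if __name__ == "__main__":
    for construct, lam in LOADINGS.items():
        # 0.5 is the usual rule-of-thumb floor for convergent validity.
        flag = "ok" if ave(lam) >= 0.5 else "below 0.5"
        print(f"{construct:<22} AVE={ave(lam):.3f} ({flag})  "
              f"rho_c={composite_reliability(lam):.3f}")
```

Every construct clears the 0.5 AVE floor on the recomputed values.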

| Constructs | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| 1. Perceived Usefulness | 0.906 | | | | | | |
| 2. Perceived Enjoyment | 0.761 (0.801) | 0.909 | | | | | |
| 3. Perceived Risk | −0.095 (0.072) | −0.079 (0.082) | 0.774 | | | | |
| 4. Perceived Fees | 0.274 (0.324) | 0.313 (0.368) | −0.044 (0.091) | 0.912 | | | |
| 5. Perceived Value | 0.729 (0.813) | 0.693 (0.771) | −0.030 (0.081) | 0.397 (0.495) | 0.844 | | |
| 6. Use Intention | 0.653 (0.721) | 0.583 (0.642) | −0.032 (0.075) | 0.233 (0.270) | 0.741 (0.831) | 0.898 | |
| 7. AI Literacy | 0.513 (0.553) | 0.541 (0.538) | −0.137 (0.120) | 0.232 (0.263) | 0.535 (0.582) | 0.520 (0.554) | 0.782 |
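The discriminant-validity table appears to follow the usual Fornell–Larcker layout: its diagonal entries match the square roots of the AVE values in the measurement table (for example, √0.822 ≈ 0.906), and each off-diagonal cell pairs an inter-construct correlation with a second value in parentheses that is read here as the HTMT ratio. Under that reading, which is an assumption because the accompanying text is not included here, the sketch below checks both criteria. The short construct codes and helper names are hypothetical, and 0.85 (conservative) and 0.90 (lenient) are the commonly used HTMT cutoffs.

```python
# Square roots of AVE (diagonal) and (correlation, HTMT) pairs (lower triangle),
# transcribed from the discriminant-validity table. Codes are illustrative:
# PU usefulness, PE enjoyment, PR risk, PF fees, PV value, UI use intention,
# AIL AI literacy.
SQRT_AVE = {"PU": 0.906, "PE": 0.909, "PR": 0.774, "PF": 0.912,
            "PV": 0.844, "UI": 0.898, "AIL": 0.782}

PAIRS = {
    ("PU", "PE"): (0.761, 0.801),
    ("PU", "PR"): (-0.095, 0.072), ("PE", "PR"): (-0.079, 0.082),
    ("PU", "PF"): (0.274, 0.324), ("PE", "PF"): (0.313, 0.368),
    ("PR", "PF"): (-0.044, 0.091),
    ("PU", "PV"): (0.729, 0.813), ("PE", "PV"): (0.693, 0.771),
    ("PR", "PV"): (-0.030, 0.081), ("PF", "PV"): (0.397, 0.495),
    ("PU", "UI"): (0.653, 0.721), ("PE", "UI"): (0.583, 0.642),
    ("PR", "UI"): (-0.032, 0.075), ("PF", "UI"): (0.233, 0.270),
    ("PV", "UI"): (0.741, 0.831),
    ("PU", "AIL"): (0.513, 0.553), ("PE", "AIL"): (0.541, 0.538),
    ("PR", "AIL"): (-0.137, 0.120), ("PF", "AIL"): (0.232, 0.263),
    ("PV", "AIL"): (0.535, 0.582), ("UI", "AIL"): (0.520, 0.554),
}


def fornell_larcker_violations(sqrt_ave, pairs):
    """Pairs whose |correlation| is not below the sqrt(AVE) of both constructs."""
    return [(a, b) for (a, b), (r, _) in pairs.items()
            if abs(r) >= min(sqrt_ave[a], sqrt_ave[b])]


def htmt_violations(pairs, threshold=0.85):
    """Pairs whose HTMT ratio reaches the chosen threshold."""
    return [pair for pair, (_, htmt) in pairs.items() if htmt >= threshold]


if __name__ == "__main__":
    print("Fornell-Larcker violations:", fornell_larcker_violations(SQRT_AVE, PAIRS))
    print("HTMT >= 0.85:", htmt_violations(PAIRS))
    print("HTMT >= 0.90:", htmt_violations(PAIRS, threshold=0.90))
```

On the transcribed values neither check flags any pair; the largest HTMT entry (0.831, perceived value and use intention) stays below the conservative cutoff.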

| H | Independent Variables | Path | Dependent Variables | β | SE | t | p |
|---|---|---|---|---|---|---|---|
| H1 | Perceived Usefulness | → | Perceived Value | 0.433 | 0.049 | 8.830 | <0.001 * |
| H2 | Perceived Enjoyment | → | Perceived Value | 0.226 | 0.048 | 4.727 | <0.001 * |
| H3 | Perceived Risk | → | Perceived Value | 0.054 | 0.050 | 1.172 | 0.241 |
| H4 | Perceived Fees | → | Perceived Value | 0.172 | 0.030 | 5.801 | <0.001 * |
| H5 | Perceived Value | → | Use Intention | 0.748 | 0.022 | 33.70 | <0.001 * |
| H6 | AI Literacy | → | Perceived Usefulness | 0.513 | 0.035 | 14.54 | <0.001 * |
| H7 | AI Literacy | → | Perceived Enjoyment | 0.541 | 0.032 | 18.82 | <0.001 * |
| H8 | AI Literacy | → | Perceived Risk | −0.137 | 0.093 | 1.473 | 0.141 |
| H9 | AI Literacy | → | Perceived Fees | 0.232 | 0.043 | 5.429 | <0.001 * |
| H10 | AI Literacy | → | Perceived Value | 0.158 | 0.034 | 4.600 | <0.001 * |
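Two relationships tie the path-coefficient table back to the measurement table. First, the reported p-values are consistent with a two-tailed test that treats the bootstrap t statistic as standard normal: t = 1.172 (H3) and t = 1.473 (H8) give p ≈ 0.241 and p ≈ 0.141. Second, for an endogenous construct with a single incoming standardized path (use intention, and the four constructs predicted only by AI literacy), R² equals the squared path coefficient. The sketch below computes both; the sample size N = 676 is taken from the demographic table, and the helper names are illustrative.

```python
from math import erfc, sqrt

N = 676  # assumed sample size: total of the demographic table

# (beta, reported t) transcribed from the path-coefficient table.
PATHS = {
    "H1": (0.433, 8.830), "H2": (0.226, 4.727), "H3": (0.054, 1.172),
    "H4": (0.172, 5.801), "H5": (0.748, 33.70), "H6": (0.513, 14.54),
    "H7": (0.541, 18.82), "H8": (-0.137, 1.473), "H9": (0.232, 5.429),
    "H10": (0.158, 4.600),
}

# Endogenous constructs that receive exactly one hypothesized path.
SINGLE_PREDICTOR = {
    "Use Intention": "H5", "Perceived Usefulness": "H6",
    "Perceived Enjoyment": "H7", "Perceived Risk": "H8", "Perceived Fees": "H9",
}


def two_tailed_p(t):
    """Two-tailed p-value under a standard normal approximation: 2 * (1 - Phi(|t|))."""
    return erfc(abs(t) / sqrt(2))


def implied_r2(beta):
    """With one standardized predictor, R^2 is the squared path coefficient."""
    return beta * beta


def adjusted_r2(r2, n, k=1):
    """Adjusted R^2 for n observations and k predictors."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


if __name__ == "__main__":
    for h, (beta, t) in PATHS.items():
        p = two_tailed_p(t)
        p_text = "<0.001" if p < 0.001 else f"{p:.3f}"
        print(f"{h:<4} beta={beta:+.3f}  t={t:6.3f}  p={p_text}")
    for construct, h in SINGLE_PREDICTOR.items():
        r2 = implied_r2(PATHS[h][0])
        print(f"{construct:<21} R2={r2:.3f}  adjusted={adjusted_r2(r2, N):.3f}")
```

Squaring β reproduces the tabulated R² for perceived usefulness (0.263), perceived risk (0.019), and use intention (0.560), and gives 0.293 for perceived enjoyment against a tabulated 0.292, a gap within the rounding of β. For perceived fees it gives R² ≈ 0.054 and adjusted R² ≈ 0.052; the measurement table lists the same adjusted value next to an R² of 0.045.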

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Al-Abdullatif, A.M.; Alsubaie, M.A. ChatGPT in Learning: Assessing Students’ Use Intentions through the Lens of Perceived Value and the Influence of AI Literacy. Behav. Sci. 2024, 14, 845. https://doi.org/10.3390/bs14090845