Emotional Evaluations from Partners and Opponents Differentially Influence the Perception of Ambiguous Faces

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Stimuli

2.3. Procedure

2.4. EEG Recording and Data Preprocessing

2.5. Data Analysis

3. Results

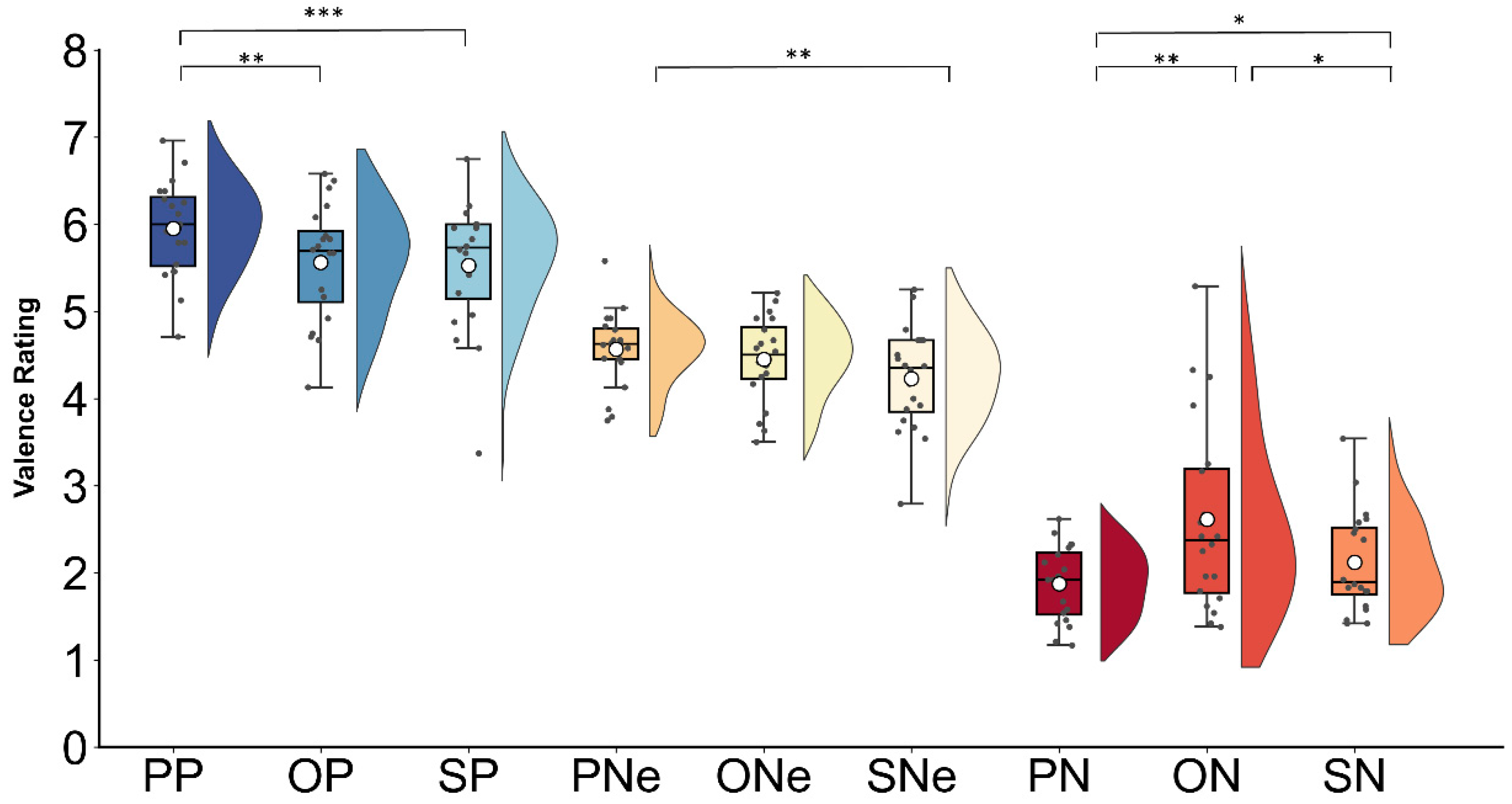

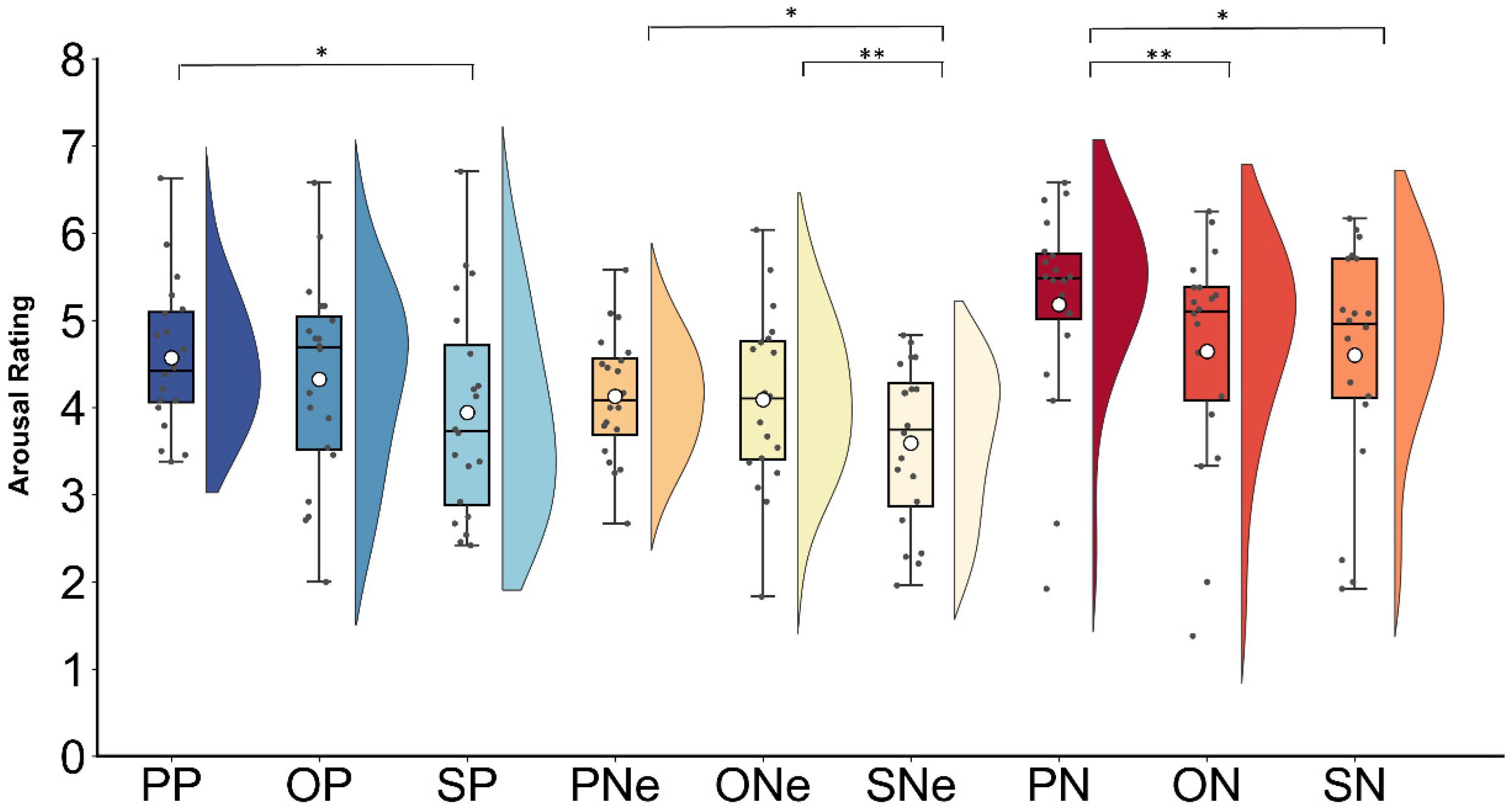

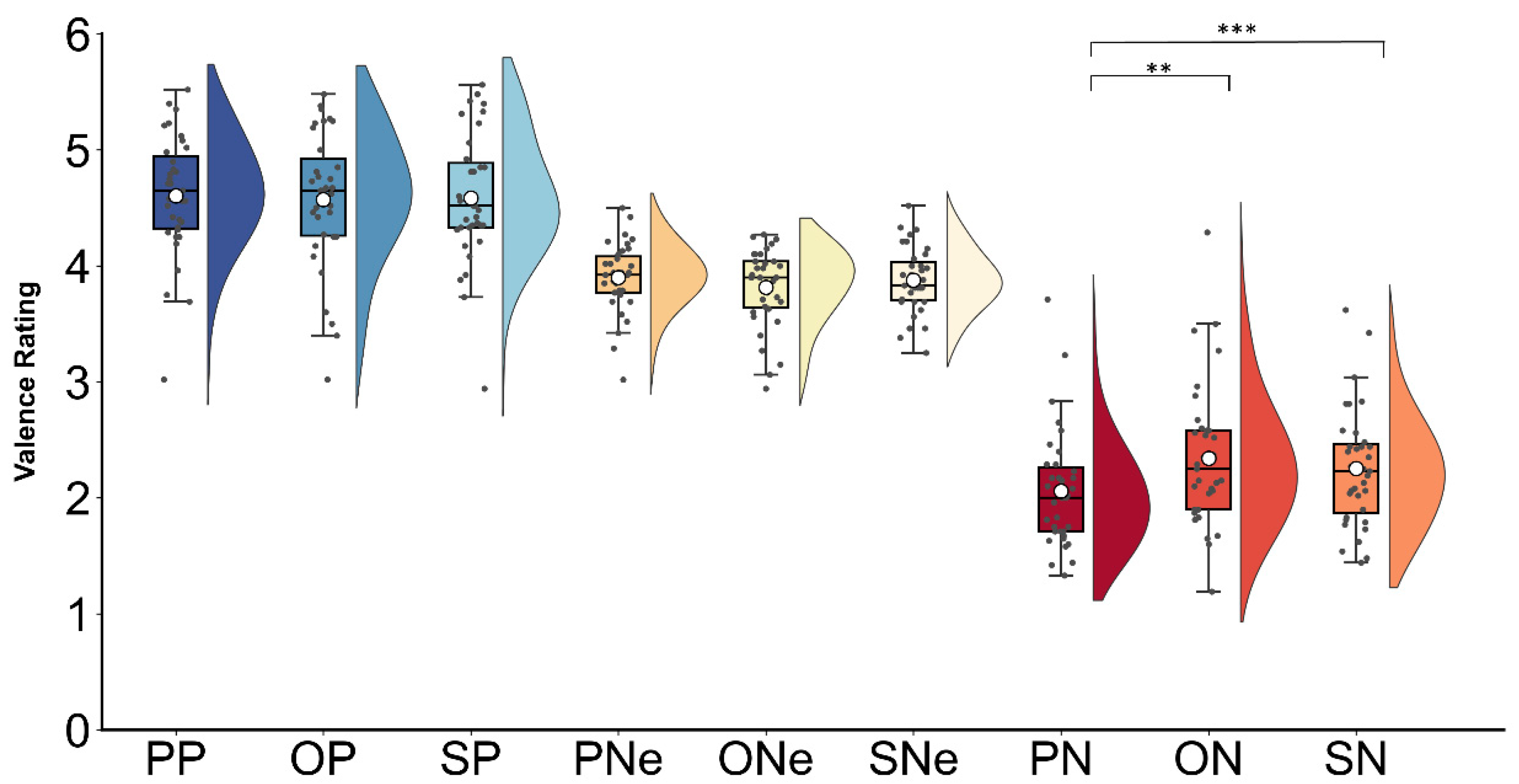

3.1. Behavioral Results

3.2. ERP Results

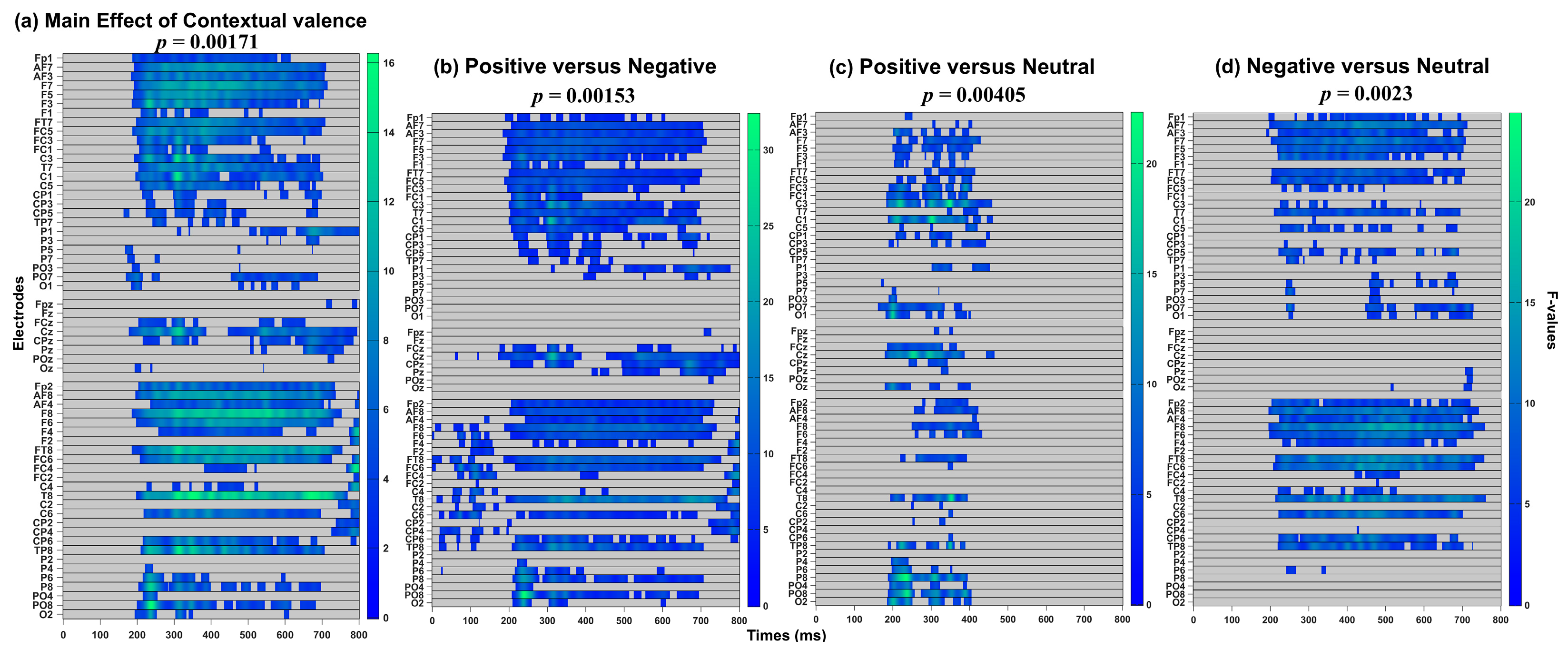

3.2.1. Exploratory Analysis over All Electrodes (0–800 ms)

3.2.2. P1 Component over Occipito-Temporal Sites (90–130 ms)

3.2.3. N170 Component over Occipito-Temporal Sites (140–190 ms)

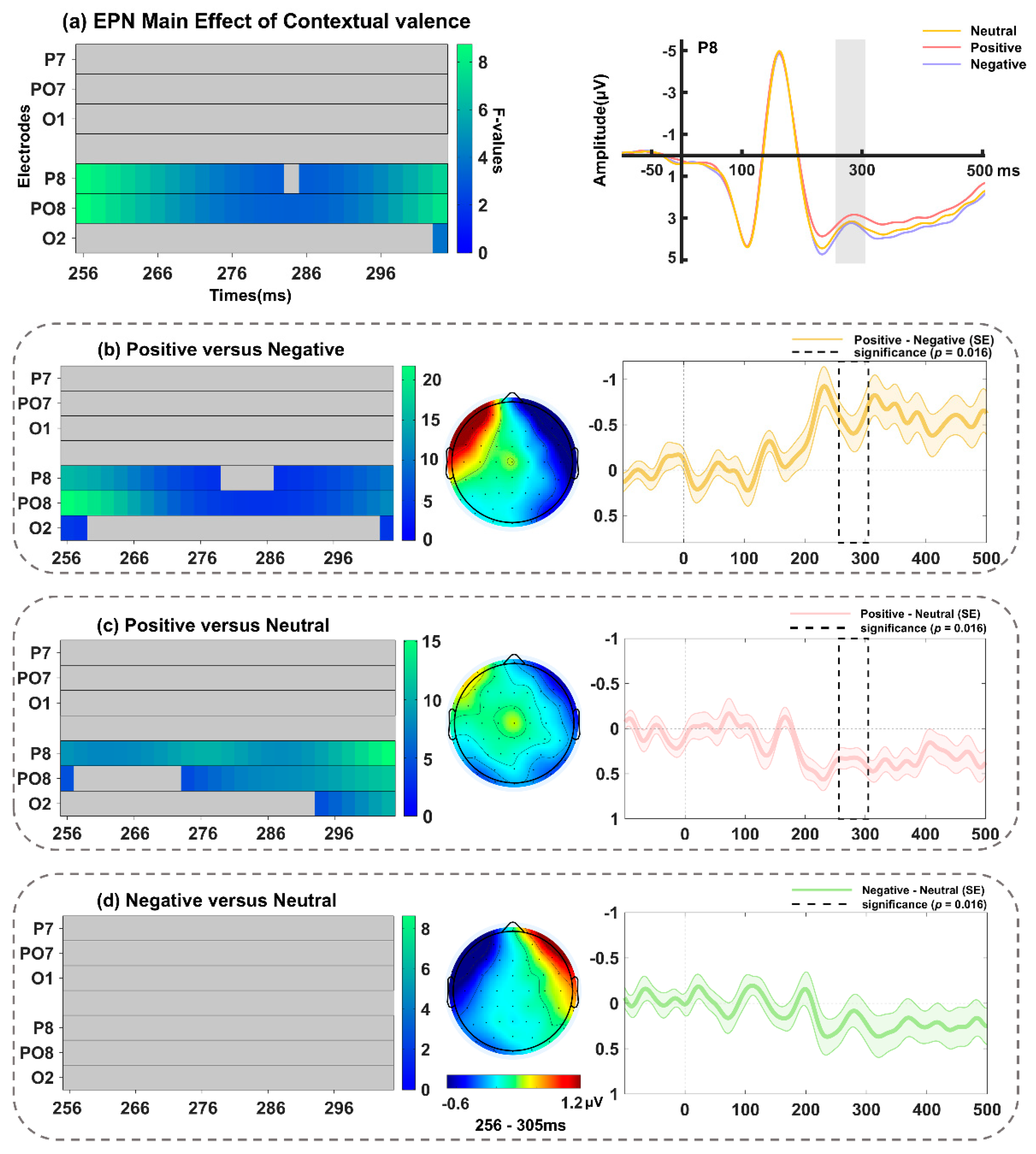

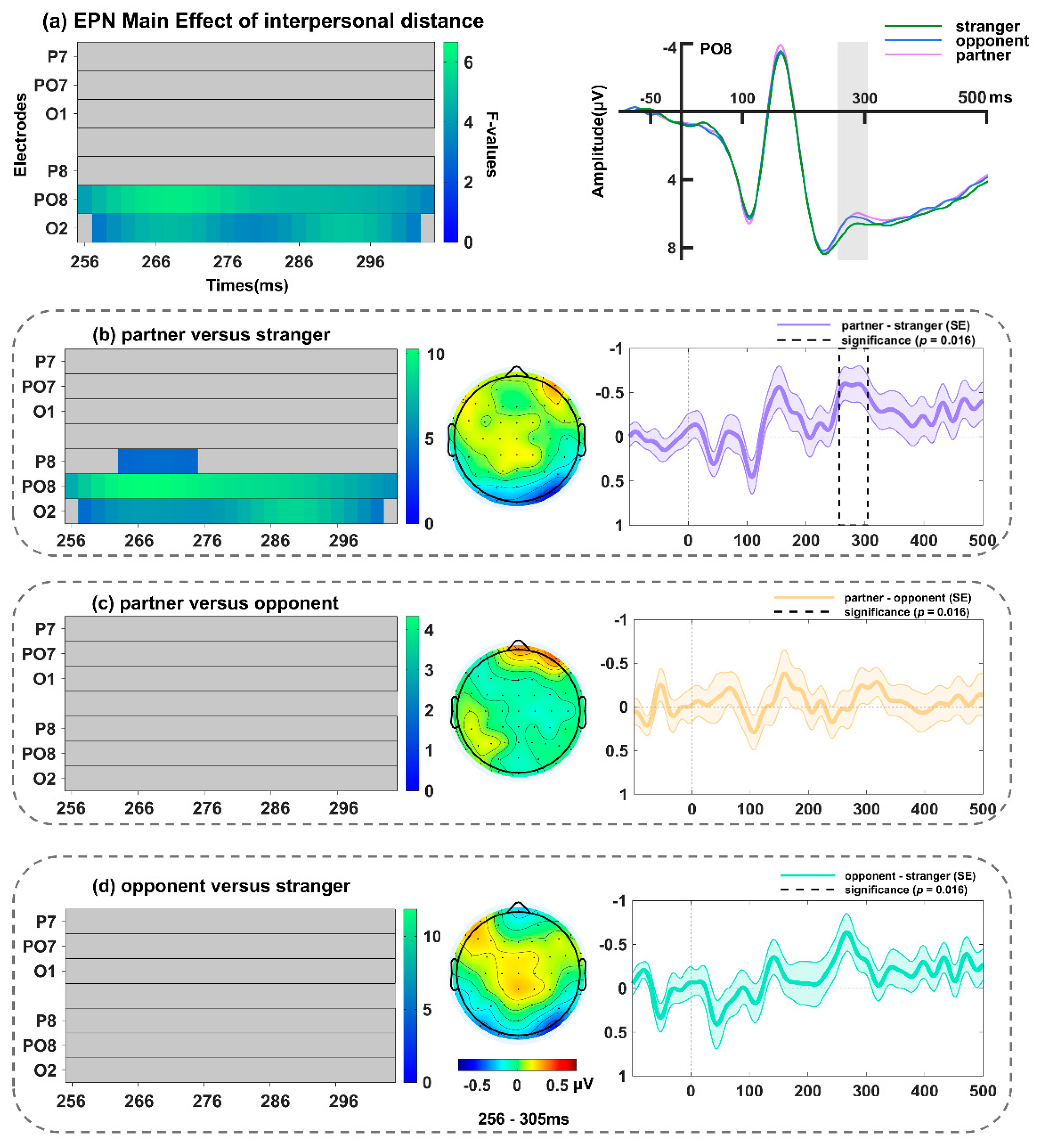

3.2.4. EPN Component over Occipito-Temporal Sites (256–305 ms)

3.2.5. LPP Component over Centro-Parietal Sites (400–600 ms)

4. Discussion

4.1. Effect of Contextual Valence

4.2. Effect of Interpersonal Distance

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Test | Timing | Electrodes | Peak |
|---|---|---|---|
| Contextual valence | 164–768 ms | Fp2, F4, C4, P4, O1, O2, F8, T8, P7, P8, Oz, FC6, CP5, CP6, AF4, FC4, PO3, PO4, F6, C6, P5, P6, AF8, FT8, TP8, PO7, PO8, Fpz | PO8 at 238 ms [F(2, 68) = 16.24, p = 0.00188] |
| Contextual valence | 178–800 ms | Fp1, F3, F4, C3, C4, P3, O1, F7, T7, P7, Cz, Pz, Oz, FC1, FC2, CP1, CP2, FC5, FC6, CP5, CP6, FCz, F1, F2, C1, C2, P1, AF3, AF4, FC3, FC4, CP3, CP4, PO3, F5, F6, C5, C6, P5, AF7, AF8, FT7, TP7, PO7, Fpz, CPz, POz | FC4 at 792 ms [F(2, 68) = 14.89, p = 0.00171] |
| Interpersonal distance | N/A | N/A | N/A |
| Contextual valence × Interpersonal distance | N/A | N/A | N/A |

| ERPs | Test | Timing | Electrodes | Peak |
|---|---|---|---|---|
| P1 (90–130 ms) | Contextual valence | 98–122 ms | O1, P7, PO7 | P7 at 112 ms (F(2, 68) = 7.17, p = 0.0155) |
| P1 (90–130 ms) | Interpersonal distance | 90–130 ms | O1, P7, PO7 | PO7 at 120 ms (F(2, 68) = 7.27, p = 0.00928) |
| N170 (140–190 ms) | Contextual valence | N/A | N/A | N/A |
| N170 (140–190 ms) | Interpersonal distance | N/A | N/A | N/A |
| EPN (256–305 ms) | Contextual valence | 256–304 ms | P8, PO8, O2 | PO8 at 256 ms (F(2, 68) = 8.72, p = 0.01391) |
| EPN (256–305 ms) | Interpersonal distance | 256–304 ms | PO8, O2 | PO8 at 268 ms (F(2, 68) = 6.08, p = 0.01997) |
| LPP (400–600 ms) | Contextual valence | 446–600 ms | CP1, CPz, C1, Cz | Cz at 544 ms (F(2, 68) = 9.68, p = 0.0118) |
| LPP (400–600 ms) | Interpersonal distance | 420–554 ms | CP1, CP2, CPz, C1, C2, Cz | CP2 at 540 ms (F(2, 68) = 6.77, p = 0.01128) |
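
The component rows above pair a time window with a small set of electrode sites. As a rough illustration of what a window-and-site mean amplitude looks like in practice, the following sketch averages an ERP over the samples inside a window and over a list of channels. It is not the authors' analysis pipeline, which this excerpt does not describe. The function name `mean_amplitude`, the dictionary-of-lists data layout, the 2 ms sample spacing, and the toy voltages are all assumptions made for the example; only the windows and site names are copied from the contextual-valence rows of the table.

```python
from statistics import fmean

# Component windows (ms) from the "ERPs" column and sites from the
# contextual-valence rows of the table. N170 is omitted because the
# table lists no sites for it (N/A).
COMPONENTS = {
    "P1": ((90, 130), ["O1", "P7", "PO7"]),
    "EPN": ((256, 305), ["P8", "PO8", "O2"]),
    "LPP": ((400, 600), ["CP1", "CPz", "C1", "Cz"]),
}


def mean_amplitude(erp, times_ms, window, picks):
    """Average voltage over the picked channels and the samples in window.

    erp      -- dict: channel name -> list of voltages, one per sample
    times_ms -- list of sample times in ms, same length as each channel list
    window   -- (start_ms, end_ms), inclusive on both ends
    picks    -- channel names to average over
    """
    start, end = window
    idx = [i for i, t in enumerate(times_ms) if start <= t <= end]
    if not idx:
        raise ValueError("no samples fall inside the window")
    return fmean(erp[ch][i] for ch in picks for i in idx)


# Toy data (hypothetical): flat waveforms sampled every 2 ms.
times_ms = list(range(-200, 800, 2))
n = len(times_ms)
toy_erp = {"O1": [1.0] * n, "P7": [2.0] * n, "PO7": [3.0] * n}

window, sites = COMPONENTS["P1"]
p1_mean = mean_amplitude(toy_erp, times_ms, window, sites)
```

With real data, one such value per participant and condition would typically feed a repeated-measures test; the point-wise statistics reported in the tables operate on individual time points and electrodes instead.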


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ran, D.; Zhang, Y.; Hao, B.; Li, S. Emotional Evaluations from Partners and Opponents Differentially Influence the Perception of Ambiguous Faces. Behav. Sci. 2024, 14, 1168. https://doi.org/10.3390/bs14121168
