Personalized Teaching Questioning Strategies Study Based on Learners’ Cognitive Structure Diagnosis

Abstract

1. Introduction

2. Literature Review

2.1. Teacher Questioning

2.2. Technology and Teacher Questioning

2.3. Cognitive Diagnostic Model Application

3. Theoretical Underpinnings

4. PTQ Strategies Based on Learner’s Cognitive Structure Diagnosis

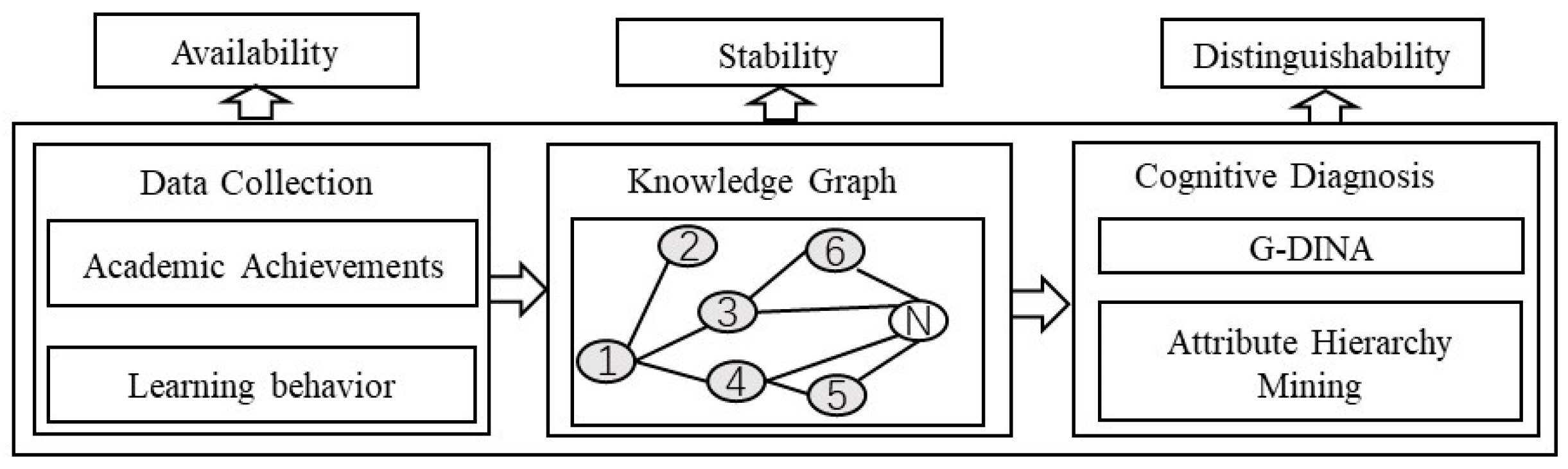

4.1. Learners’ Cognitive Structure Diagnosis

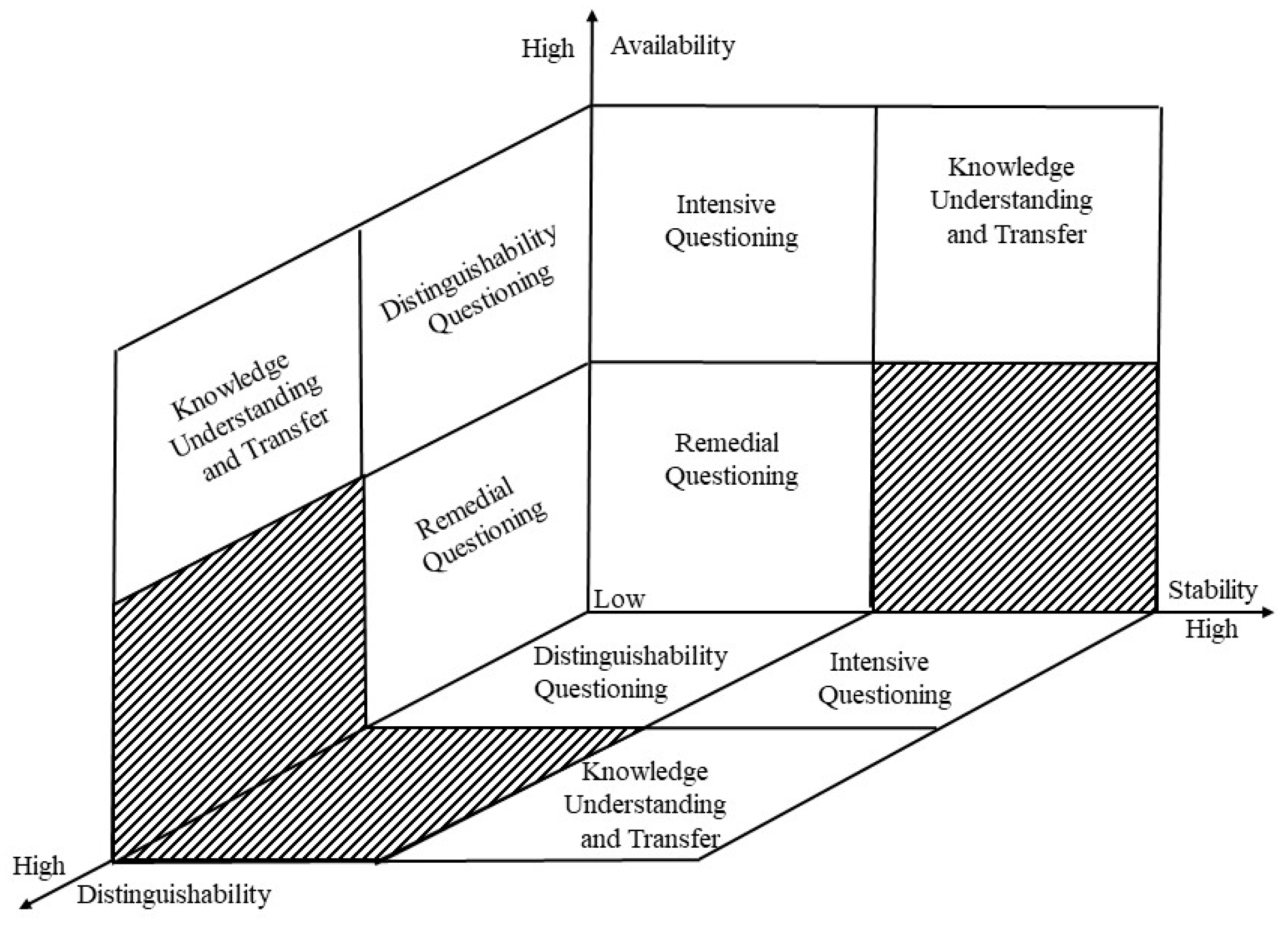

4.2. Personalized Questions Based on Diagnostic Results

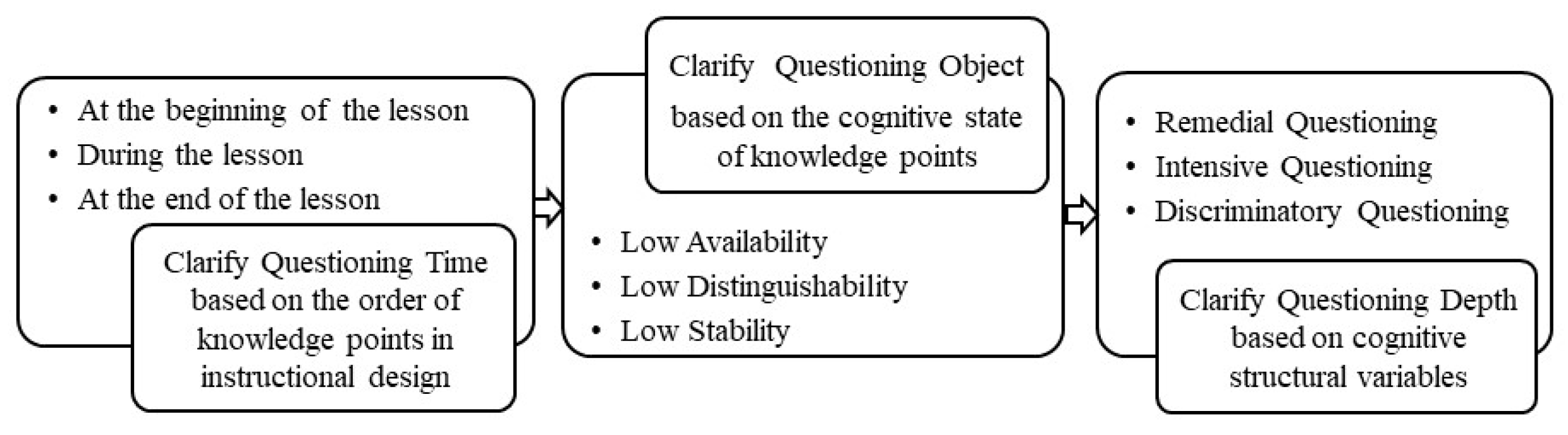

4.3. PTQ Practice

5. Methodology

5.1. Participants

5.2. Experimental Procedures

5.3. Data Collection

6. Data Analysis

6.1. The Analysis of Pre- and Post-Test Data

6.2. Time Series Analysis of Experimental and Control Groups

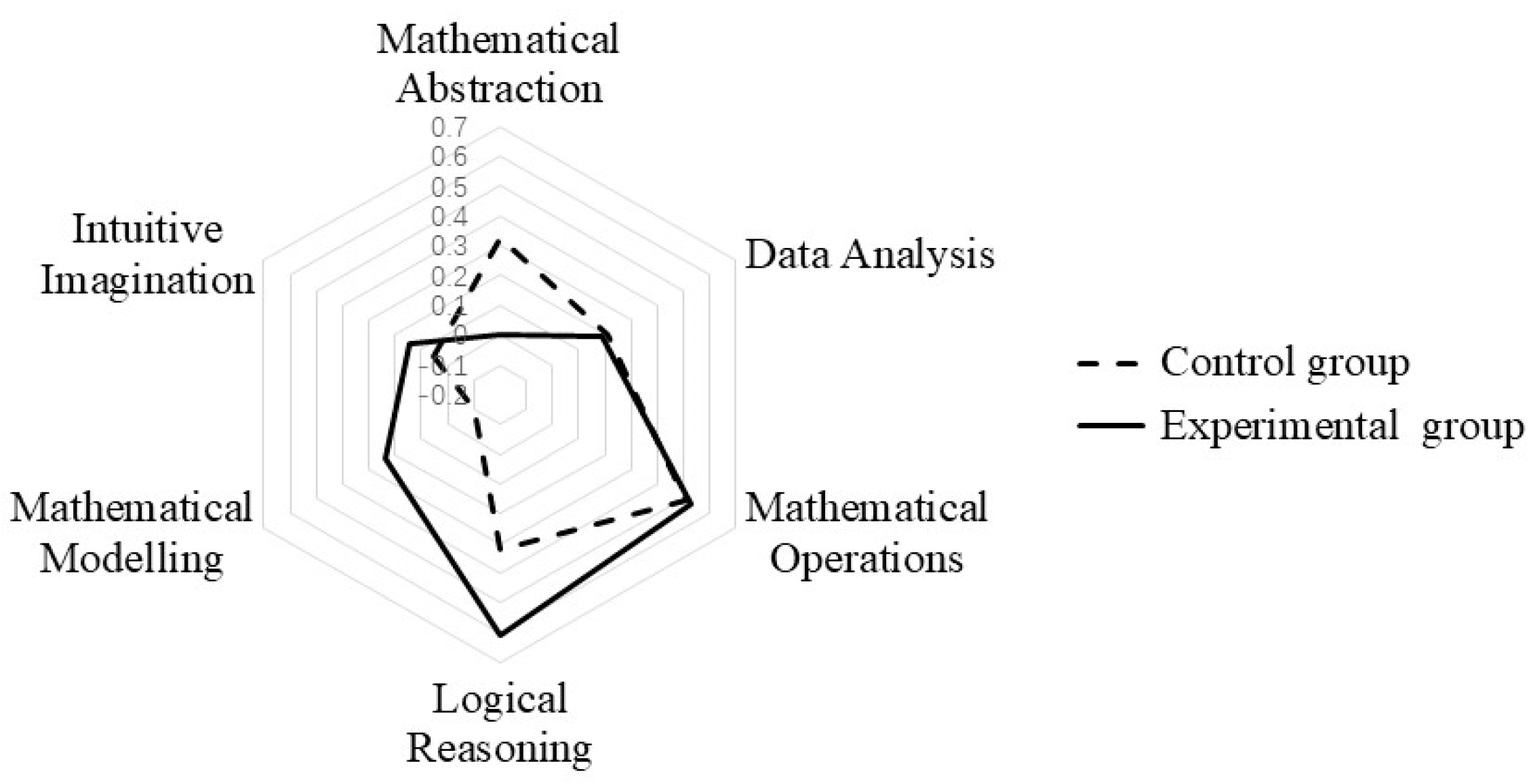

6.3. Comparative Analysis of Subject Literacy

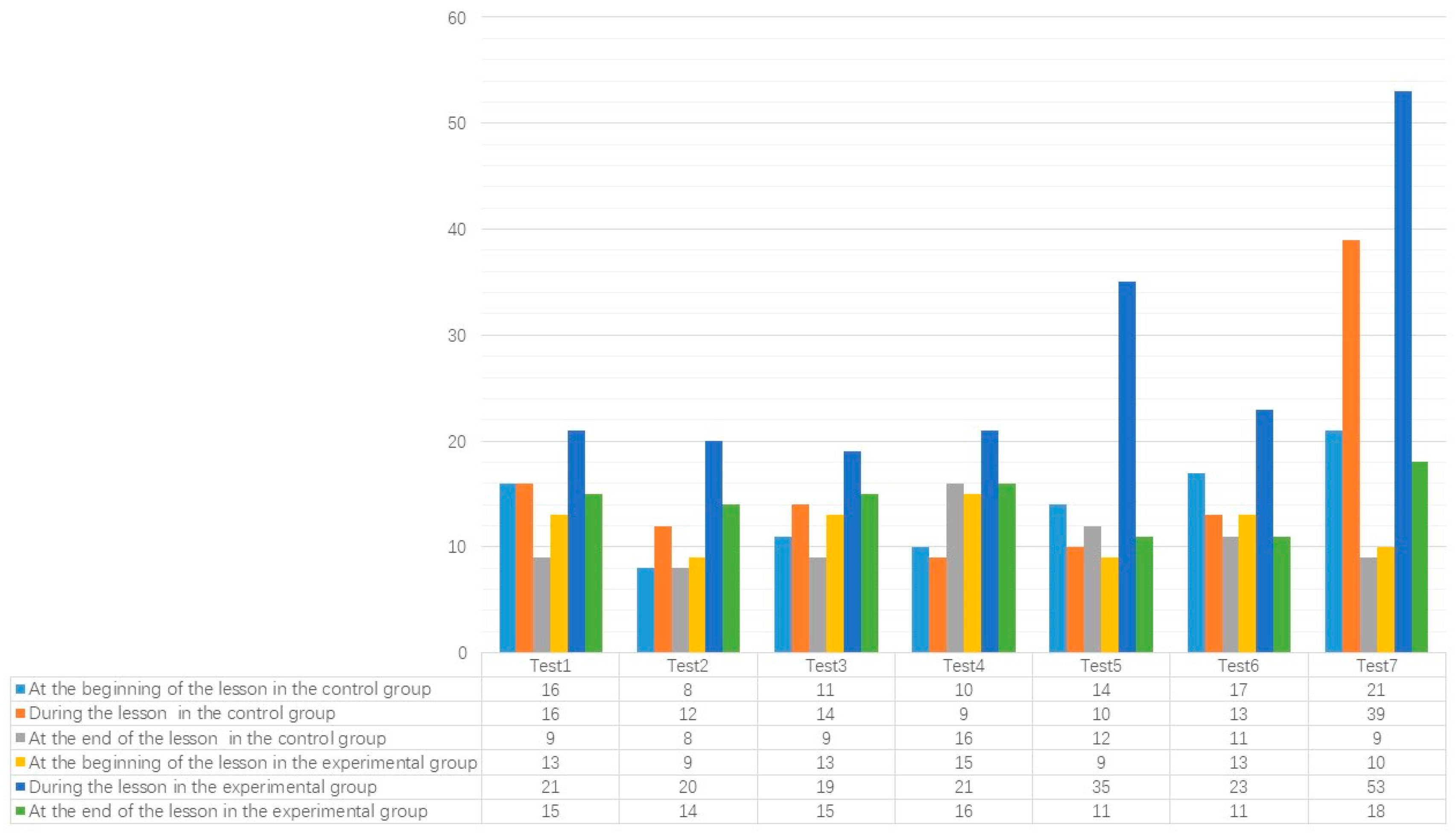

6.4. Comparison of the Number of Questions Asked in the Experimental and Control Groups

6.5. Comparison of Question Depth

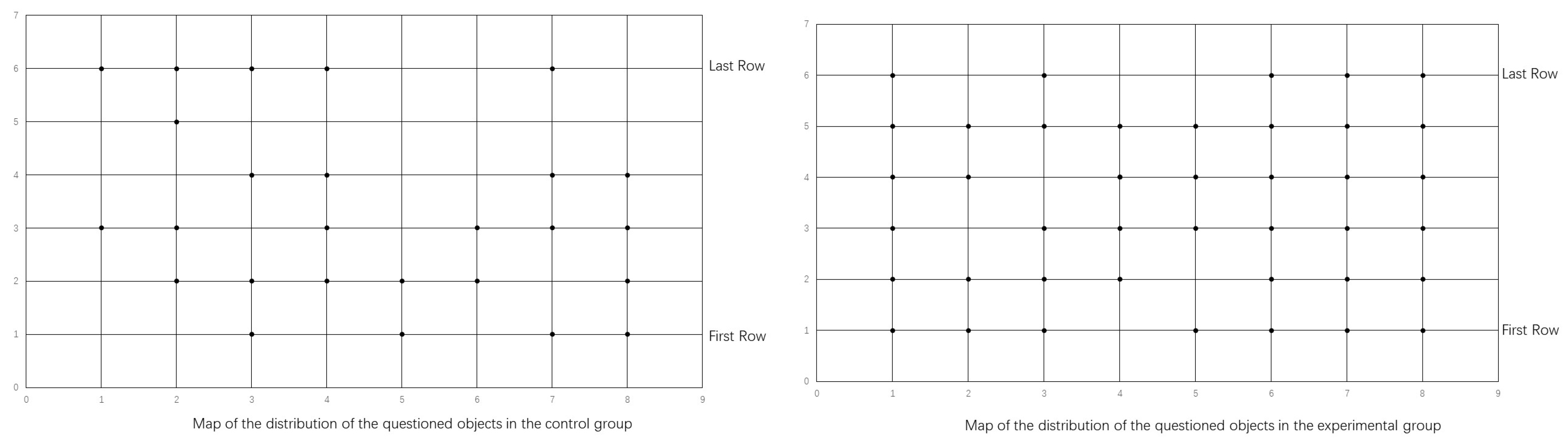

6.6. Distribution of Learners Asked in Class in the Control and Experimental Group

7. Discussion

7.1. PTQ Strategies Based on Cognitive Structure Diagnosis

7.2. The Effectiveness of PTQ Strategies Based on Cognitive Structure Diagnosis

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | Experimental Group M | Experimental Group SD | Control Group M | Control Group SD | t | p |
|---|---|---|---|---|---|---|
| Pre-test | 96.9388 | 6.42070 | 94.1667 | 9.18679 | 1.725 | 0.088 |
| Test1 | 98.0612 | 3.92857 | 96.4167 | 5.13160 | 1.770 | 0.080 |
| Test2 | 86.3878 | 15.73719 | 83.6042 | 10.93450 | 1.010 | 0.315 |
| Test3 | 88.3469 | 8.86837 | 90.3229 | 12.32300 | −0.908 | 0.366 |
| Test4 | 93.4694 | 8.72521 | 92.7917 | 9.94658 | 0.357 | 0.722 |
| Test5 | 87.9592 | 10.21836 | 80.5833 | 21.49204 | 2.151 | 0.035 |
| Test6 | 86.5918 | 9.87193 | 76.4375 | 13.67231 | 4.186 | 0.000 |
| Test7 | 94.0816 | 5.92254 | 89.2708 | 14.43951 | 2.155 | 0.034 |
| Post-test | 93.3673 | 5.62618 | 88.0833 | 12.99932 | 2.589 | 0.012 |
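For readers who want to check how a t statistic in a table like this follows from group means and standard deviations, the following is a minimal sketch. The group sizes are not stated in this section, so `N_EXP = 49` and `N_CTRL = 48` are assumptions made only for illustration, and the section also does not say which t-test variant was used. The sketch therefore computes both the pooled-variance (Student) and the unequal-variance (Welch) statistic. The function names are hypothetical.

```python
import math


def pooled_t(m1, s1, n1, m2, s2, n2):
    """Student's t statistic using a pooled variance estimate."""
    pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2)
    std_err = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / std_err


def welch_t(m1, s1, n1, m2, s2, n2):
    """Welch's t statistic, which does not assume equal variances."""
    std_err = math.sqrt(s1**2 / n1 + s2**2 / n2)
    return (m1 - m2) / std_err


# ASSUMED group sizes: not given in this section, chosen for illustration only.
N_EXP, N_CTRL = 49, 48

# Means and standard deviations copied from the Pre-test and Test6 rows.
pre_pooled = pooled_t(96.9388, 6.42070, N_EXP, 94.1667, 9.18679, N_CTRL)
pre_welch = welch_t(96.9388, 6.42070, N_EXP, 94.1667, 9.18679, N_CTRL)
test6_pooled = pooled_t(86.5918, 9.87193, N_EXP, 76.4375, 13.67231, N_CTRL)
test6_welch = welch_t(86.5918, 9.87193, N_EXP, 76.4375, 13.67231, N_CTRL)

print(f"Pre-test: pooled t = {pre_pooled:.3f}, Welch t = {pre_welch:.3f}")
print(f"Test6:    pooled t = {test6_pooled:.3f}, Welch t = {test6_welch:.3f}")
```

Under these assumed sizes, the pooled statistic for the Pre-test row and the Welch statistic for the Test6 row both come out close to the tabulated t values. This is only a consistency check and does not establish which variant the authors actually ran.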

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, Y.; Huang, T.; Wang, H.; Geng, J. Personalized Teaching Questioning Strategies Study Based on Learners’ Cognitive Structure Diagnosis. Behav. Sci. 2023, 13, 660. https://doi.org/10.3390/bs13080660
