Framework for Classification of Fattening Pig Vocalizations in a Conventional Farm with High Relevance for Practical Application

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Animals and Housing

2.2. Behavioral Data Collection

2.3. Recording of Audio Data

2.4. Acoustic Data Processing

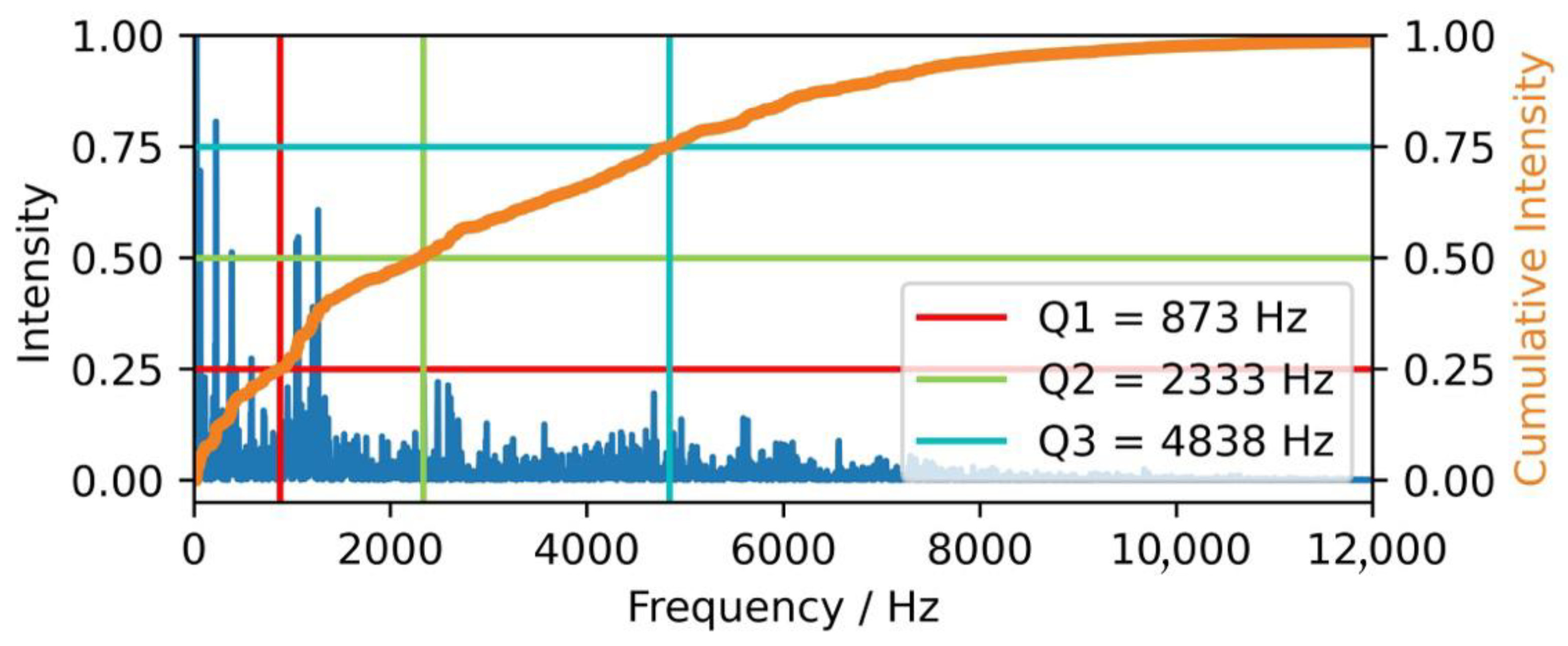

2.4.1. Frequency-Based Features—Quantiles of the Frequency Spectrum

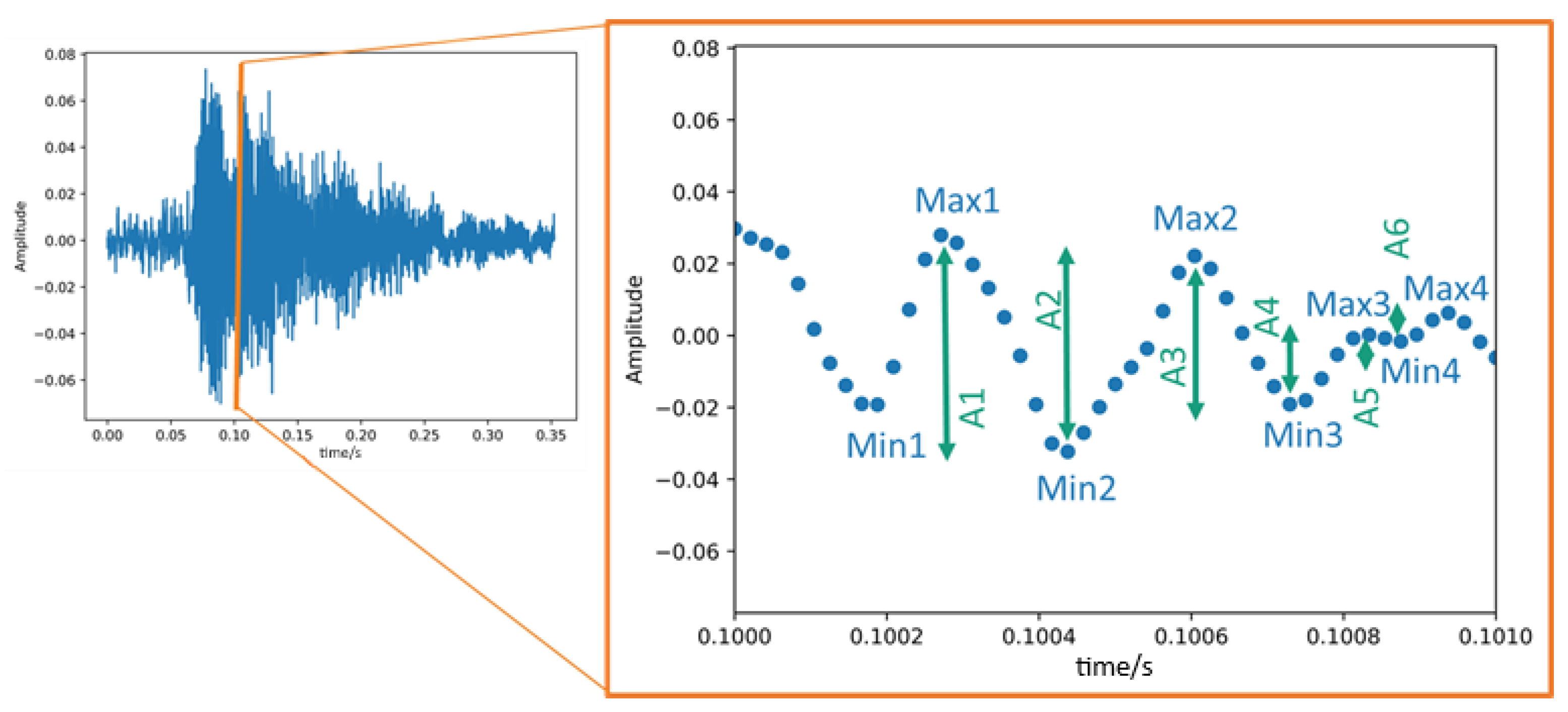

2.4.2. Time-Based Features—Measures of the Amplitude Changes

2.4.3. Mathematical Analysis of the Results

3. Results

3.1. Classification of Pig Sounds

3.2. Results of Behavioral Observations

3.3. Analysis of Acoustic Data Set Regarding Behavioral Framework

3.4. Analysis of Acoustic Data Set Regarding Acoustic Features

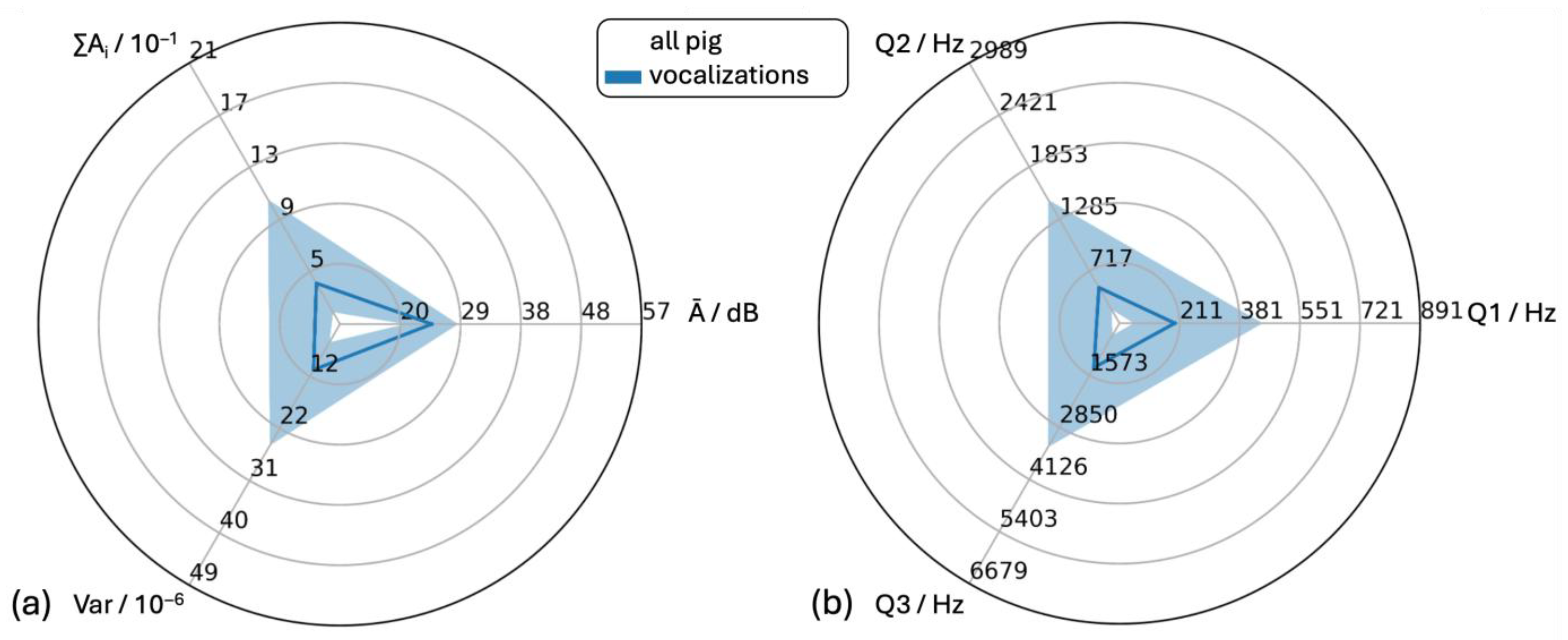

3.4.1. All Vocalizations

3.4.2. “Negative” and “Positive/Neutral” Vocalizations

3.4.3. “Oral Manipulation” and “Aversive Physical Contact”

3.4.4. “Alert” (Level 4)

3.4.5. “Sneezing” and “Coughing” (Level 4)

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| q1 | First quartile (25%) of the distribution |
| q2 | Second quartile (50%) of the distribution |
| q3 | Third quartile (75%) of the distribution |
| Var | Variance of the time signal |
| dB | Decibel |
| Ā | Mean level of the individual amplitude modulations |
| ∑Ai | Cumulative amplitude modulation |
| Q1 | First quartile (25%) of the cumulative frequency signal |
| Q2 | Second quartile (50%) of the cumulative frequency signal |
| Q3 | Third quartile (75%) of the cumulative frequency signal |
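The abbreviations separate two kinds of quartiles: lowercase q1, q2 and q3 summarize how a feature is distributed across many sounds, while uppercase Q1, Q2 and Q3 are frequencies taken from the cumulative frequency signal of a single sound. The sketch below computes Q1, Q2, Q3 and Var for one sound. It assumes that the "cumulative frequency signal" is the running sum of the power spectrum of one FFT over the whole sound, so that Qp is the frequency below which a fraction p of the spectral energy lies; the actual windowing, frequency resolution and amplitude scaling used in the study are not given here, and the function names are hypothetical.

```python
import numpy as np


def spectral_quartiles(signal: np.ndarray, sample_rate: float) -> tuple[float, float, float]:
    """Return (Q1, Q2, Q3): frequencies in Hz below which 25 %, 50 % and 75 %
    of the sound's spectral energy lie.

    Assumption: the "cumulative frequency signal" is taken to be the running
    sum of the power spectrum of a single real FFT over the whole sound.
    """
    power = np.abs(np.fft.rfft(signal)) ** 2              # power per frequency bin
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    cumulative = np.cumsum(power)
    total = cumulative[-1]
    if total <= 0:
        raise ValueError("signal has no spectral energy")
    cumulative = cumulative / total                       # normalise to 0..1
    # First bin whose cumulative energy reaches each target fraction.
    idx = np.searchsorted(cumulative, [0.25, 0.50, 0.75])
    q1, q2, q3 = freqs[idx]
    return float(q1), float(q2), float(q3)


def time_variance(signal: np.ndarray) -> float:
    """Var: variance of the time-domain samples."""
    return float(np.var(signal))
```

For a pure 1 kHz tone all three quartiles fall on 1000 Hz; for two equal-amplitude tones at 500 Hz and 2 kHz, Q1 lands on 500 Hz and Q3 on 2 kHz, so the spread between Q1 and Q3 indicates how widely the energy of a sound is distributed over frequency.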


| Sound classification | Subclass | Observed behavior and sound | Definition |
|---|---|---|---|
| Vocalizations | Positive/neutral | Grunting | Grunting by a pig, often as a contact call during social interaction |
| | | Playing behavior | A pig or a group of pigs scampering around the pen while vocalizing (barking) |
| | Negative | Conflict over resources | Agonistic behavior at the feeder, drinking nipple or enrichment material |
| | | Fight | Agonistic behavior between two pigs without a recognizable resource conflict, e.g., a rank fight |
| | | Oral manipulation | A pig manipulates another pig with its mouth/nose, e.g., ear nibbling, tail nibbling/biting, belly nosing |
| | | Aversive physical contact | A pig steps aversively on another sitting or lying pig or mounts a standing pig |
| | Alert | Alert | Pigs bark and abruptly stop their previous behavior, stand still, raise their heads and erect their ears |
| Others | | Coughing | Explosive expiratory movement generated by the respiratory muscles |
| | | Sneezing | Explosive expulsion of air through the nose |
| | | Ear shaking | Rapid, repeated movement of the head from side to side; the ears hit the ipsilateral half of the pig's face whenever the direction of movement changes |
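The ethogram is hierarchical: each observed behavior or sound belongs to a subclass, and the acoustic analysis reports results both for a whole subclass (e.g., Negative) and for individual behaviors within it (e.g., Oral manipulation). The following hypothetical sketch encodes that hierarchy as a lookup table and rolls observation labels up to the subclass level. Treating "Others" (coughing, sneezing, ear shaking) as a top-level class without a subclass is an assumption based on the layout of the table; the names `ETHOGRAM` and `count_by_subclass` are illustrative only.

```python
from collections import Counter
from typing import Optional

# Hypothetical encoding of the ethogram: behavior/sound -> (sound classification, subclass).
# Assumption: "Others" has no subclass, so its sounds keep their own label when rolled up.
ETHOGRAM: dict[str, tuple[str, Optional[str]]] = {
    "Grunting": ("Vocalizations", "Positive/neutral"),
    "Playing behavior": ("Vocalizations", "Positive/neutral"),
    "Conflict over resources": ("Vocalizations", "Negative"),
    "Fight": ("Vocalizations", "Negative"),
    "Oral manipulation": ("Vocalizations", "Negative"),
    "Aversive physical contact": ("Vocalizations", "Negative"),
    "Alert": ("Vocalizations", "Alert"),
    "Coughing": ("Others", None),
    "Sneezing": ("Others", None),
    "Ear shaking": ("Others", None),
}


def count_by_subclass(labels: list[str]) -> Counter:
    """Count observations per subclass; unknown labels raise KeyError."""
    return Counter(ETHOGRAM[label][1] or label for label in labels)
```

For example, two "Fight" events and one "Oral manipulation" event both count towards "Negative", whereas a "Sneezing" event is counted under its own label.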

| Feature | Statistic | Vocalizations | Negative | Positive/Neutral | Oral Manipulation | Aversive Physical Contact | Alert | Sneezing | Coughing |
|---|---|---|---|---|---|---|---|---|---|
| Number of sounds | | 1167 | 296 | 380 | 45 | 123 | 51 | 319 | 81 |
| ∑Ai/10⁻¹ | Mean | 14.55 | 25.70 | 2.99 | 13.81 | 27.33 | 24.88 | 20.46 | 3.42 |
| | q1 | 1.80 | 2.71 | 1.09 | 2.68 | 3.12 | 6.09 | 5.18 | 1.57 |
| | median | 4.00 | 5.63 | 1.68 | 5.41 | 6.94 | 11.82 | 8.88 | 2.41 |
| | q3 | 10.34 | 15.88 | 2.83 | 12.08 | 15.30 | 23.27 | 20.48 | 4.10 |
| Ā/dB | Mean | 24.25 | 27.44 | 19.37 | 27.43 | 28.30 | 21.63 | 27.78 | 24.65 |
| | q1 | 20.31 | 23.62 | 16.58 | 23.89 | 24.73 | 19.57 | 26.18 | 22.55 |
| | median | 24.42 | 28.27 | 19.37 | 27.87 | 29.24 | 21.88 | 28.08 | 24.42 |
| | q3 | 28.50 | 31.41 | 21.77 | 31.24 | 31.93 | 23.00 | 29.44 | 26.37 |
| Var/10⁻⁶ | Mean | 39.64 | 71.25 | 14.06 | 39.96 | 74.01 | 145.22 | 34.53 | 10.62 |
| | q1 | 6.42 | 7.32 | 5.04 | 6.52 | 8.29 | 28.43 | 8.57 | 5.10 |
| | median | 11.30 | 16.63 | 7.86 | 13.22 | 17.18 | 69.32 | 14.11 | 6.95 |
| | q3 | 24.69 | 36.34 | 12.56 | 25.39 | 36.71 | 132.71 | 32.94 | 12.29 |
| Q1/Hz | Mean | 396.26 | 421.32 | 147.83 | 407.81 | 429.94 | 373.63 | 744.50 | 239.58 |
| | q1 | 81.03 | 103.08 | 65.81 | 80.39 | 99.25 | 282.17 | 155.39 | 64.31 |
| | median | 97.07 | 238.31 | 97.98 | 260.06 | 288.49 | 370.87 | 449.46 | 172.28 |
| | q3 | 445.43 | 630.80 | 232.76 | 452.41 | 659.25 | 442.52 | 1314.86 | 374.86 |
| Q2/Hz | Mean | 1017.67 | 1073.32 | 319.41 | 1034.70 | 1084.53 | 652.31 | 2003.6 | 693.39 |
| | q1 | 298.78 | 341.38 | 238.27 | 361.91 | 398.70 | 479.62 | 1078.14 | 437.36 |
| | median | 538.36 | 870.07 | 304.47 | 888.47 | 947.00 | 581.77 | 1936.33 | 599.37 |
| | q3 | 1494.33 | 1461.60 | 339.66 | 1517.47 | 1378.16 | 761.64 | 2808.91 | 906.52 |
| Q3/Hz | Mean | 2113.61 | 2287.67 | 663.51 | 2454.58 | 2271.59 | 997.44 | 4127.72 | 1529.76 |
| | q1 | 593.67 | 1048.97 | 348.58 | 958.05 | 1176.08 | 704.80 | 2945.18 | 1246.30 |
| | median | 1386.88 | 1803.91 | 399.03 | 2268.11 | 2105.52 | 789.55 | 4244.95 | 1554.74 |
| | q3 | 3339.61 | 3448.64 | 786.19 | 3754.37 | 3255.79 | 1207.50 | 5142.95 | 1785.54 |
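Each feature block in the table above reports the mean together with the quartiles q1, median and q3 of that feature across all sounds of a class. A minimal sketch of this summary with NumPy follows, assuming the per-sound feature values are already available as plain lists per class; `np.percentile` uses linear interpolation by default, which may differ from the quantile definition the authors used, and the helper names are hypothetical.

```python
import numpy as np


def describe(values) -> dict[str, float]:
    """Mean and quartiles (q1, median, q3) of one feature across sounds."""
    v = np.asarray(values, dtype=float)
    if v.size == 0:
        raise ValueError("no values to summarise")
    # NumPy's default (linear) interpolation; the study's quantile method is not stated.
    q1, median, q3 = np.percentile(v, [25, 50, 75])
    return {"mean": float(v.mean()), "q1": float(q1),
            "median": float(median), "q3": float(q3)}


def describe_by_class(features: dict[str, list[float]]) -> dict[str, dict[str, float]]:
    """Apply `describe` to the per-sound values of every sound class."""
    return {label: describe(values) for label, values in features.items()}
```

Reporting quartiles alongside the mean matters for these data: for most features the mean lies well above the median (e.g., ∑Ai across all vocalizations: mean 14.55 versus median 4.00), and for Var of "Alert" sounds the mean (145.22) even exceeds q3 (132.71), which points to right-skewed distributions in which a few extreme sounds pull the mean upwards.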


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nicolaisen, T.J.; Bollmann, K.E.; Hennig-Pauka, I.; Fischer, S.C.L. Framework for Classification of Fattening Pig Vocalizations in a Conventional Farm with High Relevance for Practical Application. Animals 2025, 15, 2572. https://doi.org/10.3390/ani15172572
