1. Introduction

Precision breeding represents a critical driver of economic efficiency and genetic advancement in modern animal husbandry, with its impact being particularly profound within the global swine industry [1]. The relentless pursuit of accelerated genetic improvement is fueled by the need to enhance economically important traits such as growth rate, feed efficiency, and disease resistance to meet growing consumer demand sustainably [2,3]. Genomic selection (GS) has emerged as the cornerstone of this endeavor, revolutionizing the field by leveraging whole-genome marker information to predict phenotypic values with greater accuracy [4]. By enabling earlier and more precise selection decisions, GS has surpassed traditional pedigree-based methods, becoming an indispensable tool for modern breeding programs [5]. However, despite these advances, a significant and persistent challenge remains: conventional genomic prediction methods are approaching a performance plateau, limiting the potential for further accelerating genetic gain and indicating a pressing need for more powerful analytical approaches [6,7].

The primary limitation of existing methodologies lies in their inherent inability to fully decipher the complex, non-additive genetic architecture that governs most economically relevant traits [7]. Conventional methods, such as Genomic Best Linear Unbiased Prediction (GBLUP) and various Bayesian approaches, have proven effective in capturing additive genetic effects [4,8]. Yet, they often operate on simplifying assumptions, such as a common variance for all SNP effects, which hinders their capacity to model the intricate web of epistatic interactions between genes and the long-range dependencies that exist within genomic sequences [4]. Consequently, a substantial portion of the heritable variation remains uncaptured, creating a clear methodological gap [6]. While the field has explored more sophisticated techniques, including machine learning methods like Lasso and random forests, and even early deep learning architectures like convolutional and recurrent neural networks, these models have also shown limitations [7,9,10]. They may offer improvements in handling high dimensionality, but often fall short of comprehensively modeling the hierarchical and spatially ordered nature of genomic information (i.e., the linear arrangement of markers along a chromosome), thus failing to fully exploit the rich data encoded in an organism’s DNA [11,12].

This research addresses this methodological void by introducing a novel framework built upon the Transformer architecture, a deep learning model that has shown revolutionary success in natural language processing [13]. We posit that the self-attention mechanism at the core of the Transformer is uniquely suited to overcome the limitations of prior models by effectively capturing complex contextual relationships and long-range dependencies within sequential data, making it an ideal candidate for genomic analysis [14,15]. Furthermore, we leverage self-supervised learning (SSL), a paradigm that enables models to learn rich, effective representations directly from unlabeled genomic data [16,17]. This is particularly advantageous in genomics, where unlabeled SNP data are abundant, while corresponding high-quality phenotype data are often scarce and expensive to acquire [16].

Therefore, we advance the central scientific hypothesis that an encoder-only Transformer model, when first pre-trained on the unlabeled SNP sequences from the datasets used in this study via a self-supervised masked prediction task, can learn the fundamental syntax of the genome, including linkage disequilibrium patterns, local haplotypes, and complex interactive effects [15,18]. We hypothesize that this pre-learned knowledge provides a robust initialization, enabling the model, after fine-tuning on specific labeled datasets, to predict phenotypic values for key economic traits with substantially greater accuracy than both established baseline methods and a Transformer model trained from scratch. The main contributions of this work are thus threefold: the development of an innovative methodology applying a self-supervised, pre-trained Transformer to genomic prediction in pigs; a comprehensive demonstration of its superior predictive performance against a suite of existing models; and an exploration of the model’s interpretability to gain novel insights into the genomic features driving its predictions [19,20]. This approach promises a powerful new tool for genomic selection, potentially unlocking faster and more substantial genetic gains in swine production.

2. Materials and Methods

To systematically validate the effectiveness of the proposed self-supervised pre-trained Transformer model in addressing the challenges of complex non-additive effects and long-range dependencies, we designed the following experimental protocol. This section first introduces the key data resources used in the study, including genotype and phenotype data from multiple swine populations (Section 2.1). To ensure the transparency and reproducibility of our research, we then detail the specific model architecture (Section 2.2), the two-stage training strategy (Section 2.3), and the complete experimental design, including baseline models and evaluation metrics (Section 2.4).

2.1. Dataset

2.1.1. Data Source and Description

This study utilized two publicly available swine population datasets. For improved clarity and traceability, the datasets were renamed to reflect their origin and core content. The first dataset is the PIC Genomic Dataset (PIC-GD), which was made public by PIC (a Genus Company, Hampshire, UK) to serve as a common resource for the scientific community, particularly for comparing and benchmarking genomic prediction methods [21]. The data originate from a single PIC nucleus pig line and include 3534 animals. Its components include high-density genotype data from the Illumina PorcineSNP60 chip, filtered to 52,842 SNPs, and a complete pedigree file. Crucially, the dataset provides recorded phenotypic values for five distinct purebred traits. According to the data provider, these traits exhibit a wide range of heritabilities (from 0.07 to 0.62), which provides an excellent basis for evaluating the model’s performance on traits with varying degrees of genetic control. All pedigree and fixed-effect information necessary for traditional genetic evaluation was also included.

The second dataset is the Huazhong Agricultural University Piglet Mortality at Birth dataset (HZA-PMB) [22]. This dataset was established to characterize the genetic and genomic basis of sow reproductive traits. The data were collected from multi-breed populations of Yorkshire, Landrace, and Duroc sows. The prediction targets for this dataset are the recorded phenotypic values for piglet mortality at birth across the first three parities. Each parity is treated as a distinct, albeit related, trait. These traits exhibit low heritability (estimated between 0.063 and 0.114) [22]. All individuals were genotyped using the Illumina PorcineSNP60 BeadChip.

For both datasets, the provided heritability estimates are critical, as they represent the proportion of phenotypic variance attributable to additive genetic effects and thus serve as a theoretical performance benchmark for linear models like GBLUP (Table 1).

2.1.2. Data Preprocessing

The raw SNP data underwent a standard quality control (QC) procedure. SNPs were removed if their call rate was below 95% (missing rate > 5%), their minor allele frequency (MAF) was less than 1%, or if they significantly deviated from Hardy–Weinberg equilibrium (HWE p < ). Furthermore, individuals with more than 10% missing genotypes were excluded from the analysis. After QC, the remaining SNPs were numerically encoded, where 0, 1, and 2 represented homozygous for the major allele, heterozygous, and homozygous for the minor allele, respectively. This encoding process is not strand-aware, as the input SNP data was already standardized to reference and alternative alleles. The very few missing genotypes remaining post-QC (0.1%) were imputed using the mean genotype of the respective SNP locus.
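The QC, encoding, and imputation steps above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the pipeline used in the study: genotypes are assumed to arrive as 0/1/2 minor-allele counts with `None` for missing calls, the SNP filters are applied before the individual filter (the text does not state the order), and the Hardy–Weinberg test is omitted because its threshold is not given. The functions `qc_and_impute`, `call_rate`, and `minor_allele_freq` are hypothetical names.

```python
from typing import List, Optional, Sequence, Tuple

# Genotypes coded 0/1/2 (copies of the minor allele); None marks a missing call.
Genotype = Optional[int]


def call_rate(column: Sequence[Genotype]) -> float:
    """Fraction of individuals with a non-missing call at one SNP."""
    return sum(g is not None for g in column) / len(column)


def minor_allele_freq(column: Sequence[Genotype]) -> float:
    """Allele frequency from the 0/1/2 codes, folded so it is at most 0.5."""
    called = [g for g in column if g is not None]
    p = sum(called) / (2 * len(called))
    return min(p, 1.0 - p)


def qc_and_impute(
    matrix: List[List[Genotype]],
    min_call_rate: float = 0.95,    # drop SNPs with > 5% missing calls
    min_maf: float = 0.01,          # drop SNPs with MAF < 1%
    max_ind_missing: float = 0.10,  # drop individuals with > 10% missing genotypes
) -> Tuple[List[int], List[List[float]]]:
    """Filter SNPs, then individuals, then mean-impute the remaining gaps.

    matrix holds one row per individual and one column per SNP.
    The Hardy-Weinberg filter is omitted (threshold not given in the text).
    Returns the indices of retained SNPs and the imputed genotype matrix.
    """
    n_snps = len(matrix[0])
    columns = [[row[j] for row in matrix] for j in range(n_snps)]
    # `and` short-circuits, so the MAF is computed only for SNPs passing the call-rate filter.
    keep_snps = [
        j for j, col in enumerate(columns)
        if call_rate(col) >= min_call_rate and minor_allele_freq(col) >= min_maf
    ]
    if not keep_snps:
        return [], []
    rows = [[row[j] for j in keep_snps] for row in matrix]
    # Individual filter, evaluated on the retained SNPs only.
    rows = [r for r in rows if sum(g is None for g in r) / len(r) <= max_ind_missing]
    # Mean imputation per SNP, computed over the retained individuals.
    means = []
    for j in range(len(keep_snps)):
        called = [r[j] for r in rows if r[j] is not None]
        means.append(sum(called) / len(called) if called else 0.0)
    imputed = [[means[j] if g is None else float(g) for j, g in enumerate(r)] for r in rows]
    return keep_snps, imputed
```

On full-size data, dedicated tools such as PLINK (`--geno`, `--maf`, `--hwe`, `--mind`) are the usual choice; the sketch only makes the thresholds and their order explicit. Note that mean imputation produces non-integer values, which matters for any downstream step that expects discrete codes.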

To prepare the data for the Transformer model, each individual’s complete SNP sequence was converted into a sequence of non-overlapping 6-mer tokens (each token spanning six consecutive SNPs), up to a maximum length of 10,240 tokens. A special [CLS] token was prepended to each sequence to serve as an aggregate representation for downstream prediction tasks. Sequences shorter than this maximum length were padded with a special [PAD] token to ensure uniform input size [15].
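A minimal sketch of the 6-mer tokenization follows. It assumes a hypothetical vocabulary layout that the text does not specify: ids 0 to 2 for [PAD], [CLS], and [MASK], followed by the 3^6 = 729 possible 6-mers, each read as a base-3 number. Two further assumptions are labeled in the code: mean-imputed (non-integer) genotypes are rounded to the nearest code with Python's `round()` (which rounds halves to even), and trailing SNPs that do not fill a complete 6-mer are dropped. The `max_len` limit is assumed to include the [CLS] token.

```python
from typing import List, Sequence

# Hypothetical vocabulary layout: the source does not give token ids.
PAD_ID, CLS_ID, MASK_ID = 0, 1, 2
N_SPECIAL = 3
K = 6                              # SNPs per token (non-overlapping 6-mers)
VOCAB_SIZE = N_SPECIAL + 3 ** K    # 3 genotype codes per SNP -> 729 possible 6-mers
MAX_LEN = 10_240                   # maximum sequence length stated in the text


def kmer_to_id(kmer: Sequence[int]) -> int:
    """Read six 0/1/2 codes as a base-3 number, offset past the special tokens."""
    value = 0
    for g in kmer:
        if g not in (0, 1, 2):
            raise ValueError(f"genotype code must be 0, 1 or 2, got {g}")
        value = value * 3 + g
    return N_SPECIAL + value


def tokenize(genotypes: Sequence[float], max_len: int = MAX_LEN) -> List[int]:
    """[CLS] + one token per complete 6-mer, right-padded with [PAD] to max_len."""
    codes = [int(round(g)) for g in genotypes]   # assumption: imputed values are rounded
    n_full = len(codes) // K                      # assumption: a trailing partial 6-mer is dropped
    tokens = [CLS_ID] + [kmer_to_id(codes[i * K:(i + 1) * K]) for i in range(n_full)]
    if len(tokens) > max_len:
        raise ValueError(f"{len(tokens)} tokens exceed max_len={max_len}")
    return tokens + [PAD_ID] * (max_len - len(tokens))
```

With the 52,842 SNPs reported for PIC-GD, this layout yields 8,807 tokens plus [CLS], comfortably within the 10,240-token limit; the post-QC count would be somewhat lower.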

2.2. Model Architecture

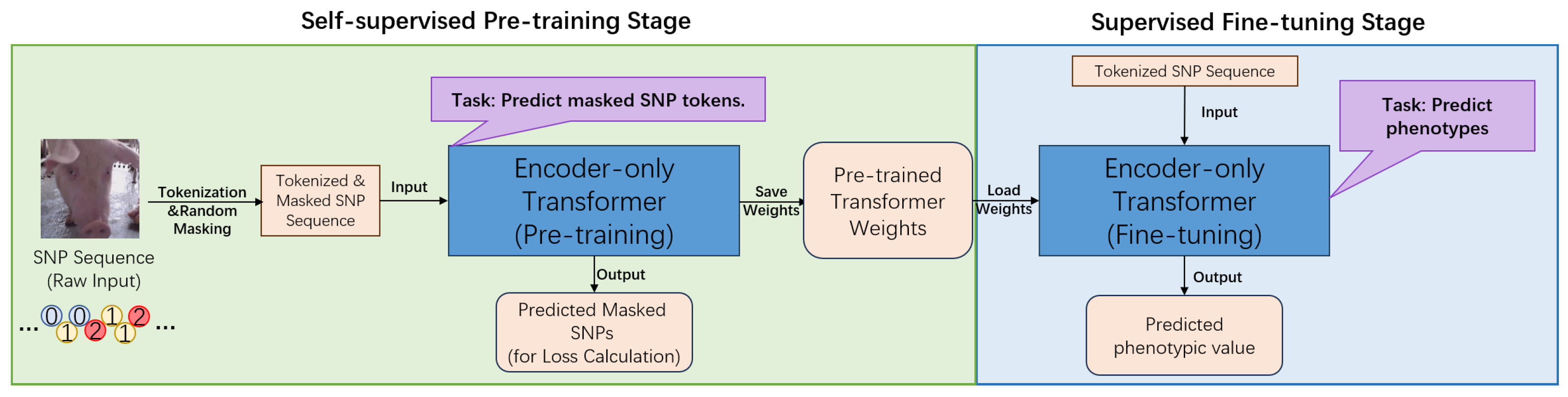

The core of this research is a novel computational framework centered on an encoder-only Transformer architecture [13], specifically designed to address the challenges of genomic prediction in swine (Figure 1 and Figure 2). This framework conceptualizes SNP sequences as a form of biological language [15,18], allowing the model to learn the complex mapping from genotype to phenotype. Its architecture is engineered to overcome the limitations of conventional methods in capturing non-additive effects and long-range dependencies within the genome [13,14].

The model begins by transforming the tokenized SNP data into a high-dimensional vector space through a multi-component input representation layer. This involves learnable token embeddings for each entry of the vocabulary (the 6-mer SNP tokens and the special tokens), which are combined with position embeddings that inform the model of the order of the tokens along the chromosome. This representation serves as the input for the model’s core engine: a stack of twelve identical Transformer encoder layers. Each layer is composed of two primary sub-modules. The first, a multi-head self-attention mechanism, is crucial for modeling complex genetic architecture. It allows the model to weigh the importance of every other token in a sequence when processing a given token, thereby capturing both local and distant interactions, such as epistasis [13]. This is followed by a fully connected feed-forward network, which provides further non-linear transformation to enhance the model’s expressive power.
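To make the attention computation concrete, the sketch below implements single-head scaled dot-product self-attention, softmax(QKᵀ/√d_k)V, in plain Python on nested lists. It covers one head only: the model described here uses multiple heads per layer, and a full encoder layer also adds residual connections, layer normalization, and the feed-forward sub-layer, none of which are shown. The projection matrices are illustrative inputs, not learned weights.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matmul(a: Sequence[Sequence[float]], b: Sequence[Sequence[float]]) -> Matrix:
    """Plain matrix product of an (n x m) and an (m x p) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Sequence[Sequence[float]]) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head attention over token embeddings x (one row per token).

    Returns the attended outputs and the (n x n) attention weights, where
    weights[i][j] is how much token i attends to token j.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights
```

Each row of `weights` sums to 1 and describes how strongly one token attends to every token in the sequence, so any pair of distant tokens can interact within a single layer; these are the quantities inspected later for interpretability.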

A key innovation of our framework is its two-stage training paradigm, which uses different task-specific output heads. In the initial self-supervised pre-training phase, a simple linear head is added to predict randomly masked 6-mer tokens from the genotype data of both datasets, used without phenotype labels. This task compels the model to learn the intrinsic patterns of the swine genome, such as linkage disequilibrium and allele frequencies, without any phenotypic information [15]. For the subsequent supervised fine-tuning stage, this pre-training head is replaced with a regression head. This head uses the aggregated representation from the special [CLS] token to predict final phenotypic values for specific economic traits using labeled data. This pre-training and fine-tuning approach represents a significant novelty, as it enables the model to develop a deep understanding of genomic structure, leading to demonstrably superior prediction accuracy compared to baseline models like GBLUP or a Transformer trained from scratch [7,23].

Ultimately, the framework is designed to deliver both methodological and applied contributions to the field of computational breeding. Methodologically, it introduces a new, powerful, and scalable deep learning model that leverages large-scale unlabeled data, offering a novel paradigm for genomic prediction that can be adapted to other species. From an applied perspective, the enhanced prediction accuracy directly translates to the potential for higher genetic gain. By enabling more precise selection decisions, this model can help accelerate genetic improvement in swine-breeding programs, leading to significant productivity and economic benefits for the industry. Furthermore, interpretability analyses of the model’s attention patterns may offer new insights into the genetic architecture of complex traits by highlighting novel genomic regions of interest [19,24].

2.3. Training Strategy

The model’s training is conducted in a two-stage paradigm: a self-supervised pre-training phase followed by a supervised fine-tuning phase. This approach is designed to first let the model learn robust representations of genomic architecture from large-scale data and then adapt this knowledge to specific prediction tasks [11,16].

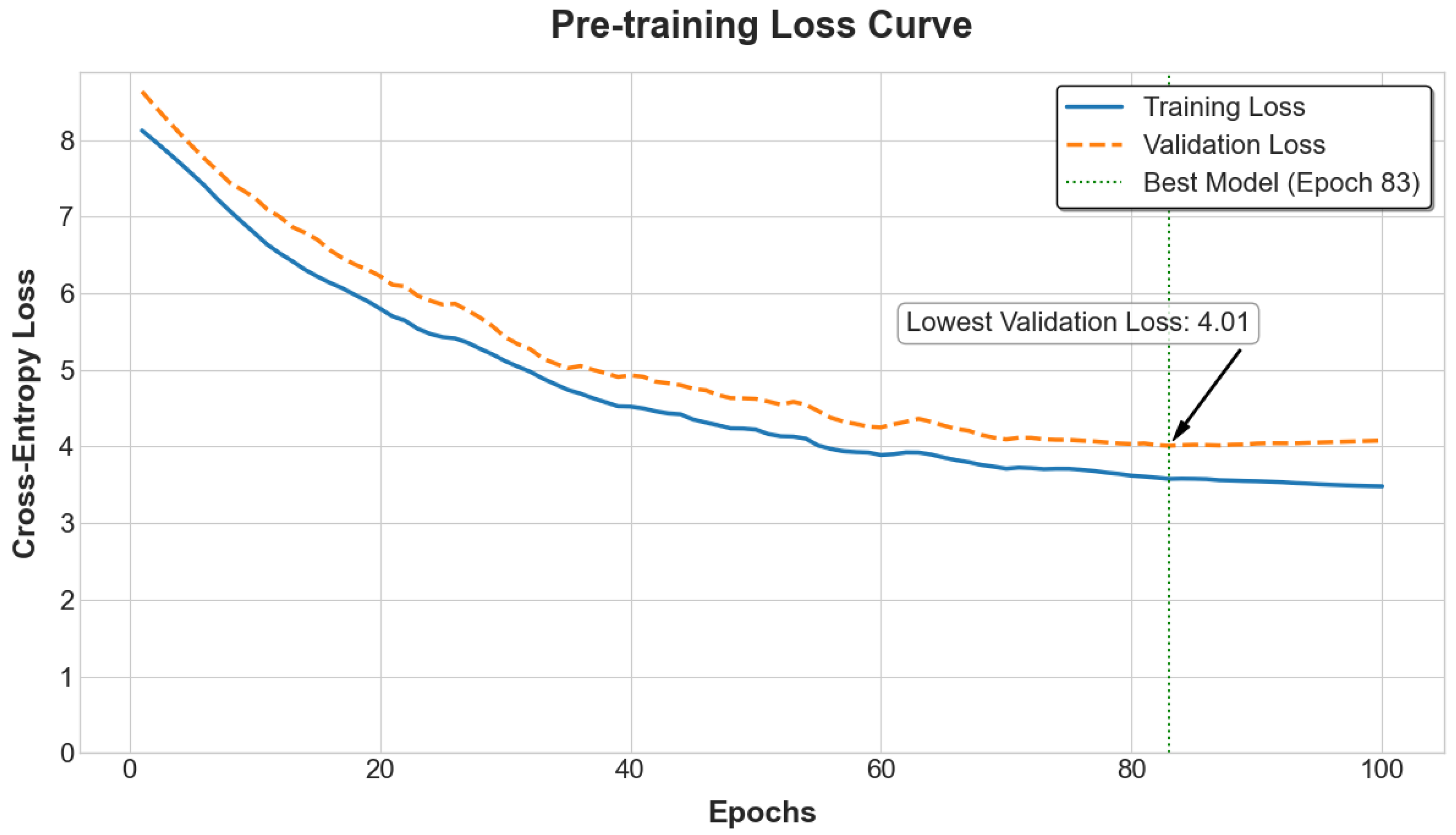

The self-supervised pre-training phase employs a masked 6-mer prediction (M6P) objective, a critical methodological shift from masking individual SNPs (Figure 3). This strategy is predicated on the biological principle that SNPs are often inherited in blocks (haplotypes). By tokenizing the genome into non-overlapping 6-mer sequences, the model is tasked with learning local haplotype patterns directly, which also reduces the input sequence length sixfold for computational efficiency [15,17]. During this stage, 15% of the 6-mer tokens in each sequence are randomly selected for prediction. Of these, 80% are replaced with a special [MASK] token, 10% are replaced with a random 6-mer token from the vocabulary, and the remaining 10% are left unchanged [15]. This pre-training uses the complete genotype data from both the PIC Genomic Dataset (PIC-GD) and the Huazhong Agricultural University Piglet Mortality at Birth dataset (HZA-PMB), without relying on any phenotype labels. This allows the model to learn a generalized understanding of swine genomic structure from diverse genetic backgrounds. The model is trained to predict the original 6-mer tokens at the selected positions using a cross-entropy loss function, with the AdamW optimizer and a learning-rate schedule consisting of a warm-up followed by decay [13].
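The 15% selection and 80/10/10 corruption rule, together with a cross-entropy computed only at the selected positions, can be sketched as follows. Token ids follow a hypothetical layout (0 to 2 for [PAD], [CLS], and [MASK], then the 729 possible 6-mers), and the `IGNORE = -100` label for unselected positions is an assumed convention borrowed from common BERT-style implementations. The sketch selects exactly round(0.15 × n) eligible tokens per sequence and never corrupts [CLS] or [PAD].

```python
import math
import random
from typing import List, Sequence, Tuple

PAD_ID, CLS_ID, MASK_ID = 0, 1, 2      # hypothetical ids (not specified in the source)
N_SPECIAL = 3
VOCAB_SIZE = N_SPECIAL + 3 ** 6        # special tokens + 729 possible 6-mers
IGNORE = -100                          # label for positions excluded from the loss


def mask_tokens(tokens: Sequence[int], rng: random.Random,
                mask_prob: float = 0.15) -> Tuple[List[int], List[int]]:
    """BERT-style corruption: select 15% of 6-mer tokens, then 80/10/10 mask/random/keep."""
    inputs, labels = list(tokens), [IGNORE] * len(tokens)
    eligible = [i for i, t in enumerate(tokens) if t >= N_SPECIAL]   # skip special tokens
    for i in rng.sample(eligible, round(mask_prob * len(eligible))):
        labels[i] = tokens[i]                  # target: the original 6-mer
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK_ID
        elif r < 0.9:
            inputs[i] = rng.randrange(N_SPECIAL, VOCAB_SIZE)   # random 6-mer token
        # otherwise the token is left unchanged but is still predicted
    return inputs, labels


def masked_cross_entropy(logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean cross-entropy over selected positions only (labels != IGNORE)."""
    total, count = 0.0, 0
    for row, y in zip(logits, labels):
        if y == IGNORE:
            continue
        m = max(row)
        log_norm = m + math.log(sum(math.exp(v - m) for v in row))   # stable log-sum-exp
        total += log_norm - row[y]
        count += 1
    return total / count if count else 0.0
```

Passing a seeded `random.Random(42)` makes the masking reproducible, in line with the fixed-seed policy described in the experimental design. Keeping 10% of selected tokens unchanged prevents the model from learning that only [MASK] positions matter.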

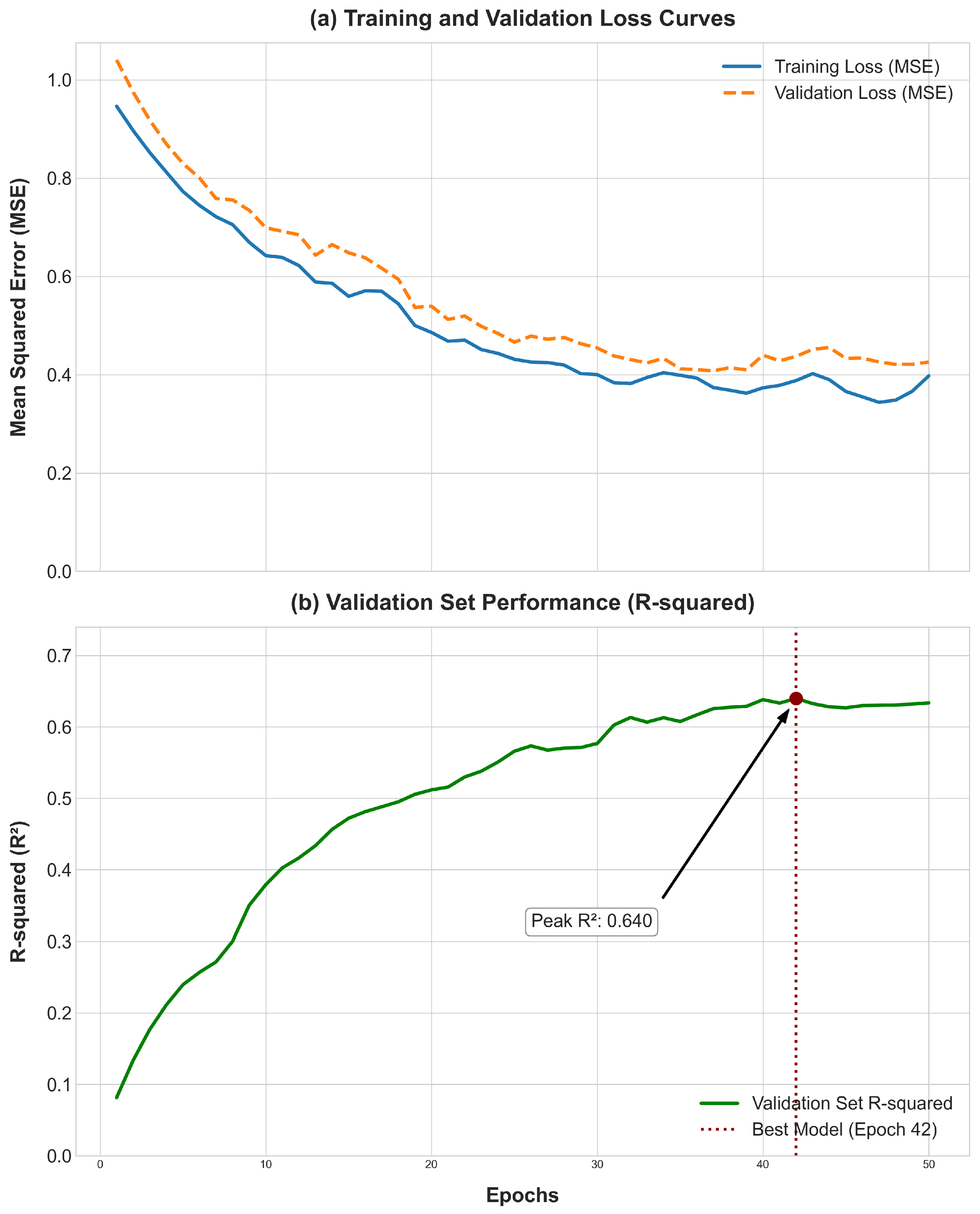

In the supervised fine-tuning phase, the pre-trained model is adapted for the regression task of predicting phenotypic values. The weights of the Transformer encoder learned during pre-training are used to initialize the model (Table 2). This transfer learning strategy leverages the learned genomic knowledge for specific downstream tasks, such as predicting the phenotypic values in the PIC-GD or HZA-PMB dataset [16]. For this stage, only individuals with both genotype data and corresponding phenotypic records are used. The data are split into training, validation, and test sets, using pedigree information from the PIC-GD dataset to prevent data leakage by ensuring that closely related individuals are not distributed across different sets [2,25]. The model is then trained to minimize the Mean Squared Error (MSE) between its predictions and the observed values. A smaller learning rate is typically used with the AdamW optimizer at this stage to preserve the knowledge acquired during pre-training. The validation set is used for model selection, based on the coefficient of determination (R²) and the MSE, and for an early stopping strategy to prevent overfitting [26].

2.4. Experimental Design

To systematically evaluate the performance of our proposed pre-trained Transformer model, a comprehensive experimental design was established. The model’s efficacy was benchmarked against several baseline models chosen to represent a spectrum of current techniques in the field. These included Genomic Best Linear Unbiased Prediction (GBLUP), which serves as the industry-standard linear model [4,5]; traditional machine learning algorithms, specifically Lasso and LightGBM (LGBM), to represent non-deep learning approaches capable of handling high-dimensional data [6,9]; and a Transformer model trained entirely from scratch. This “from-scratch” Transformer, possessing an identical architecture to our proposed model but without the self-supervised pre-training phase, was included specifically to quantify the contribution of the pre-training strategy to the final prediction performance [16]. All experiments were conducted on a high-performance computing cluster equipped with two NVIDIA A6000 GPUs (48 GB of memory each). The self-supervised pre-training phase required approximately 72 h, while fine-tuning for each trait was completed in about 4 h, with a peak GPU memory usage of around 50 GB during pre-training.

To assess the model’s ability to predict phenotypic values for future generations, a forward validation strategy based on pedigree information was employed. This approach mimics the real-world application of genomic selection, where data from ancestral populations are used to predict the genetic merit of younger individuals. Specifically, we partitioned the dataset according to parent–offspring relationships, ensuring a temporal split. The oldest 80% of individuals constituted the training set, the subsequent 10% formed the validation set, and the youngest 10% of individuals were held out as the final test set. This across-generation validation scheme prevents any information from descendants being used to train or tune the model, thus providing an unbiased estimate of the model’s forward predictive power. This setup is inherently more challenging than a random split, as it directly evaluates the model’s ability to generalize to new, non-overlapping generations, a scenario where the reliance on close relatives for prediction is minimized.
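A minimal sketch of a pedigree-based forward split is shown below. The text orders animals by age along parent–offspring relationships; here, pedigree generation depth (founders = 0, others = 1 + the deeper parent) is assumed as the ordering key, with ties kept in input order. Birth date would be an equally valid key if available. Because every ancestor has a strictly smaller depth than its descendants, no descendant can land in an earlier partition than its ancestors. The functions `generation_depth` and `forward_split` are hypothetical names.

```python
from typing import Dict, List, Optional, Tuple

Pedigree = Dict[str, Tuple[Optional[str], Optional[str]]]   # animal -> (sire, dam)


def generation_depth(pedigree: Pedigree) -> Dict[str, int]:
    """Founders (unknown parents) get depth 0; others get 1 + the deeper parent's depth."""
    depth: Dict[str, int] = {}

    def visit(animal: Optional[str]) -> int:
        if animal is None or animal not in pedigree:
            return -1                      # unknown parent
        if animal not in depth:
            sire, dam = pedigree[animal]
            depth[animal] = 1 + max(visit(sire), visit(dam))
        return depth[animal]

    for animal in pedigree:
        visit(animal)
    return depth


def forward_split(pedigree: Pedigree, train_frac: float = 0.8,
                  val_frac: float = 0.1) -> Tuple[List[str], List[str], List[str]]:
    """Oldest 80% -> train, next 10% -> validation, youngest 10% -> test."""
    depth = generation_depth(pedigree)
    ordered = sorted(pedigree, key=lambda a: depth[a])   # stable sort: ties keep input order
    n_train = int(len(ordered) * train_frac)
    n_val = int(len(ordered) * val_frac)
    return ordered[:n_train], ordered[n_train:n_train + n_val], ordered[n_train + n_val:]
```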

Optimal configurations for each model were identified through a systematic grid search. For each hyperparameter combination, models were trained on the training set, and their performance was assessed on the validation set using the coefficient of determination (R²). The parameter set yielding the highest R² on the validation set was selected for the final model configuration. The test set was excluded entirely during all training and tuning phases and was used only once for the final, unbiased performance assessment. To ensure exact reproducibility of our results, a fixed random seed (seed = 42) was used for all stochastic processes, including model weight initialization and the random masking operations during pre-training.
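The selection rule (train each configuration, keep the one with the highest validation R²) and the two reported metrics can be sketched as follows. The one-feature ridge model in the usage part is a hypothetical stand-in for any of the compared models; it is deterministic, so no seeding is needed in this toy case.

```python
import itertools
from typing import Callable, Dict, List, Sequence, Tuple


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error between observed and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """1 - SS_res / SS_tot: share of observed variance explained by the predictions."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def grid_search(grid: Dict[str, Sequence], fit_predict: Callable[[dict], List[float]],
                y_val: Sequence[float]) -> Tuple[dict, float]:
    """Try every combination in `grid`; keep the one with the highest validation R2."""
    keys = sorted(grid)
    best_params, best_r2 = None, float("-inf")
    for values in itertools.product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        score = r2_score(y_val, fit_predict(params))
        if score > best_r2:
            best_params, best_r2 = params, score
    return best_params, best_r2


# Toy usage with a hypothetical one-feature ridge model (no intercept).
x_train, y_train = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
x_val, y_val = [5.0, 6.0], [10.0, 12.0]


def ridge_fit_predict(params: dict) -> List[float]:
    lam = params["lam"]
    w = sum(x * y for x, y in zip(x_train, y_train)) / (sum(x * x for x in x_train) + lam)
    return [w * x for x in x_val]


best, score = grid_search({"lam": [0.0, 1.0, 10.0]}, ridge_fit_predict, y_val)
```

Note that R² computed this way can be negative when predictions are worse than the validation mean, which is why the search starts from negative infinity rather than zero.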

The model’s training objective was to minimize the Mean Squared Error (MSE) loss function between the predicted and observed phenotypic values. The final predictive performance of all models on the test set was quantified using two primary metrics: the coefficient of determination (R

2), to measure the proportion of variance in the observed phenotypic values that is predictable from the model, and the MSE, to quantify the overall magnitude of prediction error [

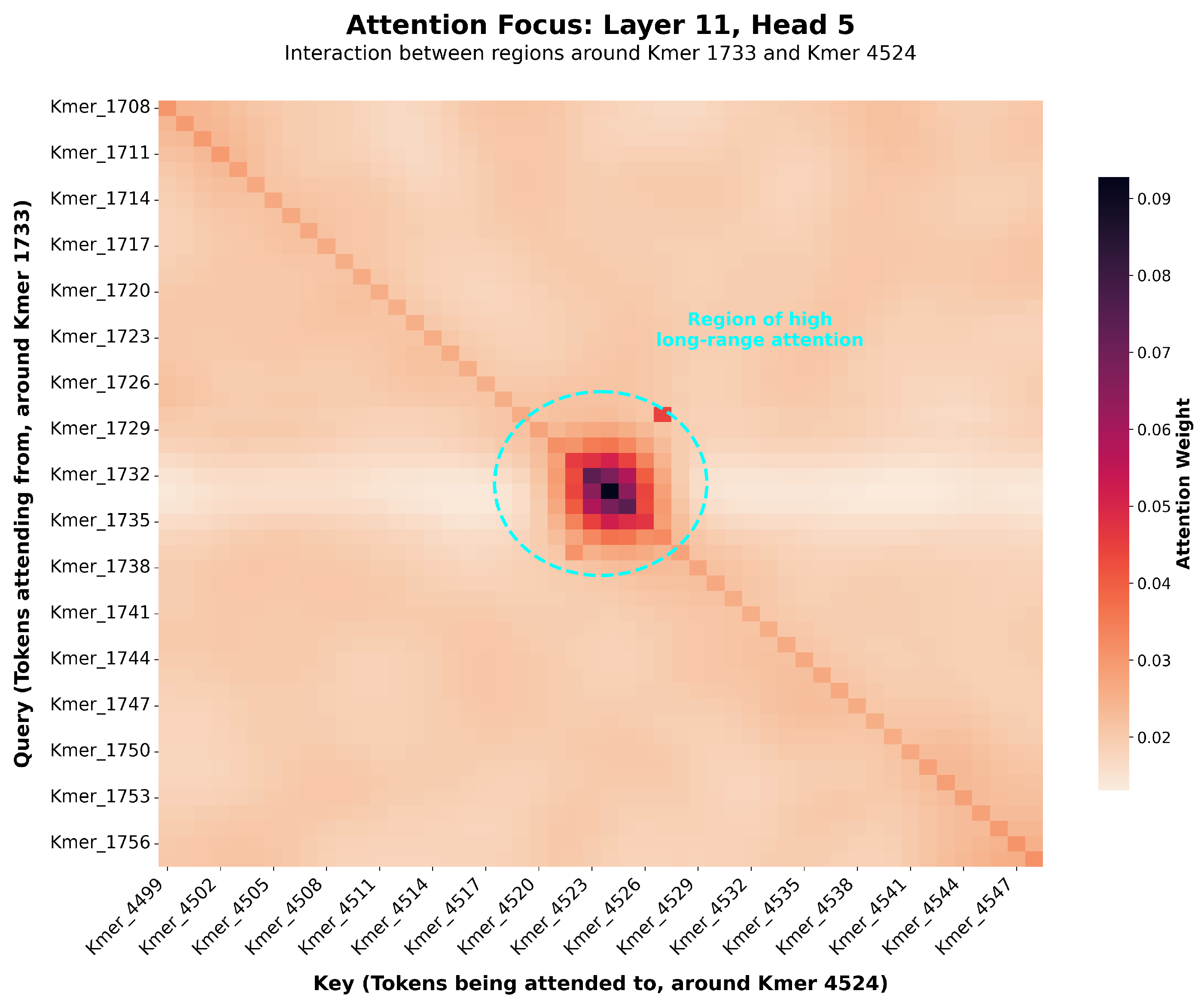

25]. Finally, to gain insight into the model’s decision-making process, we analyzed the Transformer’s attention weights to identify specific SNP regions the model deemed most important for prediction, aiming to uncover potentially novel, biologically relevant genomic loci [

19,

20].
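One way to turn attention weights into candidate SNP regions is sketched below: average the [CLS] token's attention over heads (or layers), rank the 6-mer tokens, and map each token back to the six SNP indices it covers. Which layers and heads were aggregated, and how, is not stated in the text, so simple averaging is an assumption, as is the convention that position 0 is [CLS] and position p ≥ 1 covers SNPs 6(p − 1) to 6p − 1. Padding positions are assumed to carry zero weight through the attention mask.

```python
from typing import List, Sequence, Tuple

K = 6   # SNPs per token


def top_attention_regions(cls_attention: Sequence[Sequence[float]],
                          top_k: int = 3) -> List[Tuple[int, int, float]]:
    """Rank 6-mer tokens by the [CLS] query's attention, averaged over rows.

    cls_attention: one row per head (or layer); row[p] is the weight that [CLS]
    places on position p, with position 0 being [CLS] itself.
    Returns (first_snp, end_snp_exclusive, mean_weight) for the top_k tokens.
    """
    n_rows, n_pos = len(cls_attention), len(cls_attention[0])
    mean_w = [sum(row[p] for row in cls_attention) / n_rows for p in range(n_pos)]
    # Skip position 0 ([CLS]); break ties in favour of the earlier token.
    ranked = sorted(range(1, n_pos), key=lambda p: (-mean_w[p], p))
    return [((p - 1) * K, p * K, mean_w[p]) for p in ranked[:top_k]]
```

Raw attention is only one view of importance; gradient-based attributions can rank the same tokens differently, which is why the text treats attention as a starting point for biological follow-up.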

4. Discussion

This study was designed to address a critical “methodological gap” in genomic prediction: the inherent limitation of conventional methods like GBLUP in capturing the complex non-additive effects and long-range dependencies that govern quantitative traits [4,7]. To overcome this, we proposed and validated a novel framework centered on a self-supervised, pre-trained Transformer model [13,15,16]. This discussion will first interpret our findings by directly addressing the core research hypothesis, then summarize the study’s primary methodological and applied contributions, explore the model’s potential for generating biological insights, and finally, acknowledge its limitations and outline promising avenues for future research.

4.1. Performance and Comparison with Baselines

Our central scientific hypothesis was that a Transformer model, pre-trained on genomic data in a self-supervised manner, could learn the complex, non-linear mapping from genotype to phenotype [15,18]. A key aspect of our study design was the use of recorded phenotypes as the prediction target. This strategy directly addresses the ultimate goal of a breeding program: to select animals that will exhibit superior performance. We posited that by capturing non-linear signals present in the raw phenotype (signals arising from non-additive genetic effects that are inherently missed by linear models such as GBLUP), our approach would yield superior predictive accuracy. Crucially, we tested this hypothesis under a realistic forward validation scenario, designed to assess the model’s ability to predict the phenotypic outcomes of future generations from ancestral data [9,12].

The experimental results provide strong evidence for this hypothesis. As shown in Table 3, our pre-trained Transformer model consistently achieved the highest prediction accuracy (measured by R²) on the held-out test set of the youngest individuals. A key observation is the performance of the industry-standard GBLUP model [4,33]. For traits with known heritability (h²), GBLUP’s predictive accuracy (R²) is theoretically capped by h², as it primarily models the additive genetic component of phenotypic variance [4]. Our results align with this expectation; for instance, for trait T5 (h² = 0.62), GBLUP achieved an R² of 0.4605, while for trait T3 (h² = 0.40), it achieved an R² of 0.2755.

The outperformance of our Transformer model under these conditions is therefore particularly telling. For trait T5, our model reached an R² of 0.5527, and for T3, an R² of 0.3291. At the same time, we note that the performance gains, while consistent, are more modest for the low-heritability traits (MORT1 to MORT3). As detailed in Table 3, the standard deviations from our replicated runs confirm a stable, albeit smaller, advantage over baseline models. This is an expected outcome, as much of the phenotypic variation in these traits is driven by non-genetic factors, which fundamentally limits the predictive ceiling for any model relying solely on genomic data. Nevertheless, our model’s ability to consistently outperform all baselines on these challenging traits underscores its enhanced capacity to capture the available genetic signals. This performance gap (the R² of the Transformer minus the R² of GBLUP) suggests that the Transformer is successfully capturing a substantial portion of the non-additive genetic variance (dominance and epistasis) that contributes to the final phenotype but is invisible to linear models. This ability to learn and generalize these robust, heritable features, from local haplotypes to complex interactions, enables the model to more accurately predict the phenotypic outcome of a new generation based on its ancestors, thus excelling where kinship-based linear methods are inherently limited [13]. The crucial comparison with the “Transformer (no pre-train)” model further isolates and quantifies the substantial, additional benefit derived from the self-supervised pre-training phase [16,17].
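Using only the values quoted above for T5 and T3, the R² gaps and the share of h² reached by each model work out as follows (ratios rounded to three decimals):

```latex
\begin{aligned}
\text{T5 } (h^2 = 0.62):&\quad \Delta R^2 = 0.5527 - 0.4605 = 0.0922,
&\quad \frac{R^2_{\mathrm{GBLUP}}}{h^2} = \frac{0.4605}{0.62} \approx 0.743,
&\quad \frac{R^2_{\mathrm{Transformer}}}{h^2} = \frac{0.5527}{0.62} \approx 0.891,\\
\text{T3 } (h^2 = 0.40):&\quad \Delta R^2 = 0.3291 - 0.2755 = 0.0536,
&\quad \frac{R^2_{\mathrm{GBLUP}}}{h^2} = \frac{0.2755}{0.40} \approx 0.689,
&\quad \frac{R^2_{\mathrm{Transformer}}}{h^2} = \frac{0.3291}{0.40} \approx 0.823.
\end{aligned}
```

In relative terms, the Transformer improves on GBLUP by about 20.0% for T5 (0.0922/0.4605) and about 19.5% for T3 (0.0536/0.2755); in both cases its R² still lies below the corresponding h².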

4.2. Role of Self-Supervised Pre-Training

This research delivers both a methodological and an applied contribution to the field of computational breeding [3].

The methodological contribution lies in successfully introducing and validating the “pre-training and fine-tuning” paradigm, a cornerstone of modern natural language processing, for genomic selection in swine [15,16]. We demonstrate that leveraging genomic data in a self-supervised, unlabeled fashion is a powerful strategy for building superior predictive models [16,34]. This work provides a new, scalable, and transferable analytical framework that can be adapted for other species (e.g., cattle, poultry, crops) and other omics data types [18,23].

The applied contribution is of direct practical significance. The enhanced prediction accuracy translates directly to the potential for higher genetic gain [1,4]. By enabling more precise estimation of phenotypic values, our model allows breeders to make more accurate and earlier selection decisions [2]. This can accelerate the rate of genetic improvement for key economic traits, shortening breeding cycles and ultimately delivering significant economic benefits to the swine industry [1,35].

4.3. Interpretation and Biological Insights

While deep learning models are often termed “black boxes” [6,36], our framework offers new avenues for exploring the genetic architecture of complex traits. The self-attention mechanism, in particular, provides a direct window into how the model weighs the relationships between different genomic regions when predicting a phenotype.

To investigate the biological plausibility of the learned representations, we cross-referenced the genomic regions assigned high attention weights by our model with the Pig QTLdb, a public database of quantitative trait loci [27]. As a specific example, for the piglet mortality phenotype in the HZA-PMB dataset, the model identified a high-attention region on chromosome 13. This finding is highly consistent with published research, in which QTLs associated with the number of stillborn piglets have been identified on pig chromosomes 5 and 13 [28,29]. This alignment of the model’s attention with known QTLs strongly suggests that our model is not merely capturing statistical noise but is learning to identify genomic loci with known biological relevance to the phenotypic trait. Because our model operates on the complete genotype-to-phenotype map, these high-attention regions may represent not only loci with strong additive effects but also hubs involved in the epistatic networks that linear models cannot detect. This not only validates the model’s effectiveness but also demonstrates its potential as a powerful hypothesis-generation tool for discovering novel candidate genetic regions and interactions. While our primary analysis here focuses on attention weights, we acknowledge that other post-hoc interpretability techniques, such as SHAP or Integrated Gradients, offer complementary approaches to quantifying feature importance and represent a valuable direction for future work [19,24].
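Cross-referencing high-attention windows with QTL intervals reduces to an interval-overlap test once both are expressed in the same physical coordinates. The sketch below assumes closed base-pair intervals and that token indices have already been mapped to chromosome positions via the SNP chip map; it does not assume any particular Pig QTLdb export format. The `Interval` type, the functions, and all coordinates and labels used with them are hypothetical and for illustration only.

```python
from typing import Dict, List, NamedTuple


class Interval(NamedTuple):
    chrom: str
    start: int   # base pairs, inclusive
    end: int     # base pairs, inclusive
    label: str


def overlaps(a: Interval, b: Interval) -> bool:
    """Closed intervals on the same chromosome overlap if neither ends before the other starts."""
    return a.chrom == b.chrom and a.start <= b.end and b.start <= a.end


def annotate(regions: List[Interval], qtls: List[Interval]) -> Dict[str, List[str]]:
    """Map each high-attention region to the labels of the QTL intervals it overlaps."""
    return {r.label: [q.label for q in qtls if overlaps(r, q)] for r in regions}
```

For genome-wide QTL lists, sorting intervals by chromosome and start (or using an interval tree) avoids the quadratic scan, but the overlap rule stays the same.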

4.4. Limitations and Future Directions

Despite the promising results, this study has several limitations that could be addressed, paving the way for future work.

First, while our model shows significant advantages, the absolute predictive accuracy for low-heritability traits such as piglet mortality remains modest. This is expected, as these traits are strongly influenced by complex non-additive genetic effects and, crucially, by large environmental factors not captured by SNP data alone [37]. The phenotypic variance is the sum of genetic and environmental variance, so any model using only genomic data can at best explain the genetic (non-environmental) share of that variance. This highlights a general challenge in the field and an area for future improvement. A major avenue for improving predictive accuracy, especially for low-heritability traits, is the integration of multi-omics data and, critically, environmental data. Fusing genomics with transcriptomics, epigenomics, or even structured environmental variables (e.g., farm, season, diet) can provide a more holistic view of the factors driving the final phenotype [37].
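The ceiling argument can be written with the standard quantitative-genetics decomposition of phenotypic variance into additive (A), dominance (D), epistatic (I), and environmental (E) components, assuming no genotype-by-environment interaction and no genotype–environment covariance:

```latex
\sigma^2_P = \sigma^2_G + \sigma^2_E = \left(\sigma^2_A + \sigma^2_D + \sigma^2_I\right) + \sigma^2_E,
\qquad
h^2 = \frac{\sigma^2_A}{\sigma^2_P} \;\le\; H^2 = \frac{\sigma^2_G}{\sigma^2_P},
\qquad
R^2_{\text{genotype only}} \le H^2 .
```

A genotype-only predictor can at best recover the genetic variance, so its expected R² cannot exceed the broad-sense heritability H², while a purely additive model such as GBLUP targets the additive variance and is bounded by h². The difference H² − h² is the headroom available to models that capture dominance and epistasis; for the mortality traits, where h² lies between 0.063 and 0.114, both ceilings remain low whenever the environmental share is large.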

Second, the interpretability of deep learning models, including ours, remains an active area of research. While the attention mechanism provides valuable insights into the model’s decision-making process by identifying high-attention genomic regions, more advanced post-hoc interpretability techniques, such as SHAP or Integrated Gradients, could offer a more granular and quantitative understanding of individual feature contributions [19]. A comparative analysis of different interpretability methods will be valuable to further demystify the model’s “black box” nature.

Third, we acknowledge that the computational cost of the self-supervised pre-training phase is substantial, requiring significant GPU resources. This might pose a barrier for some research groups. However, a key advantage of the “pre-train, fine-tune” paradigm is that the most intensive step needs to be performed only once.

Looking ahead, we propose several exciting research directions based on these limitations:

Integration of Multi-omics and Environmental Data: Beyond the structured environmental variables discussed above, fusing genomics with transcriptomics or epigenomics can provide a more dynamic view of gene regulation and further improve accuracy for low-heritability traits [3].

Advanced Pre-training Objectives: Exploring alternative self-supervised tasks beyond masked prediction, such as contrastive learning, may enable the model to learn even more robust and informative genomic representations [16].

Advanced Interpretability and Biological Validation: Future work will focus on implementing and comparing advanced interpretability methods like SHAP. The insights gained will be systematically cross-validated with known biological information, such as QTL databases and gene ontologies. This will not only enhance the credibility of the model for applied users but also position it as a powerful hypothesis-generation tool for discovering novel candidate genes and biological pathways.

5. Conclusions

This study addresses the critical breeding challenge of enhancing genetic gain in swine [1] by tackling the fundamental problem of accurate phenotype prediction from genomic data. We fill a key methodological gap where existing methods like GBLUP fall short in capturing complex non-additive effects that contribute to phenotypic outcomes [4,7]. We successfully developed and validated a novel framework based on a self-supervised, pre-trained Transformer [13,16]. Experimental validation on real-world swine genomic datasets (PIC-GD and HZA-PMB) demonstrates that our model substantially outperforms baselines, including GBLUP and a Transformer trained from scratch [9,23]. This confirms our central scientific hypothesis that pre-training on the genomic data itself enables the model to learn intrinsic genomic structures, thereby boosting performance in the downstream task of phenotype prediction by capturing non-linear genetic signals [15,17,18].

The contributions of this research are twofold. As a methodological contribution, we provide a new, scalable deep learning framework for predicting complex phenotypes from high-dimensional genomic data [11,38]. As an applied contribution, our model directly serves to increase genetic gain in swine by delivering more accurate phenotype predictions, providing a powerful tool for more effective selection decisions in breeding programs [3,4].

Despite its strong performance, this work has limitations, including the need to validate its generalizability across more diverse populations and other species [39,40] and the remaining challenge of model interpretability [6,36]. Future work should focus on integrating multi-omics and environmental data to build more holistic predictive models [3], and on advancing interpretability techniques to uncover the key genomic regions and interactions driving phenotypic variation, thereby bridging the gap between predictive power and biological insight [19,20].