Deep Learning Methods for Automatic Identification of Male and Female Chickens in a Cage-Free Flock

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Setup

2.2. Poultry Image Data Collection

2.3. Image Labeling and Data Pre-Processing

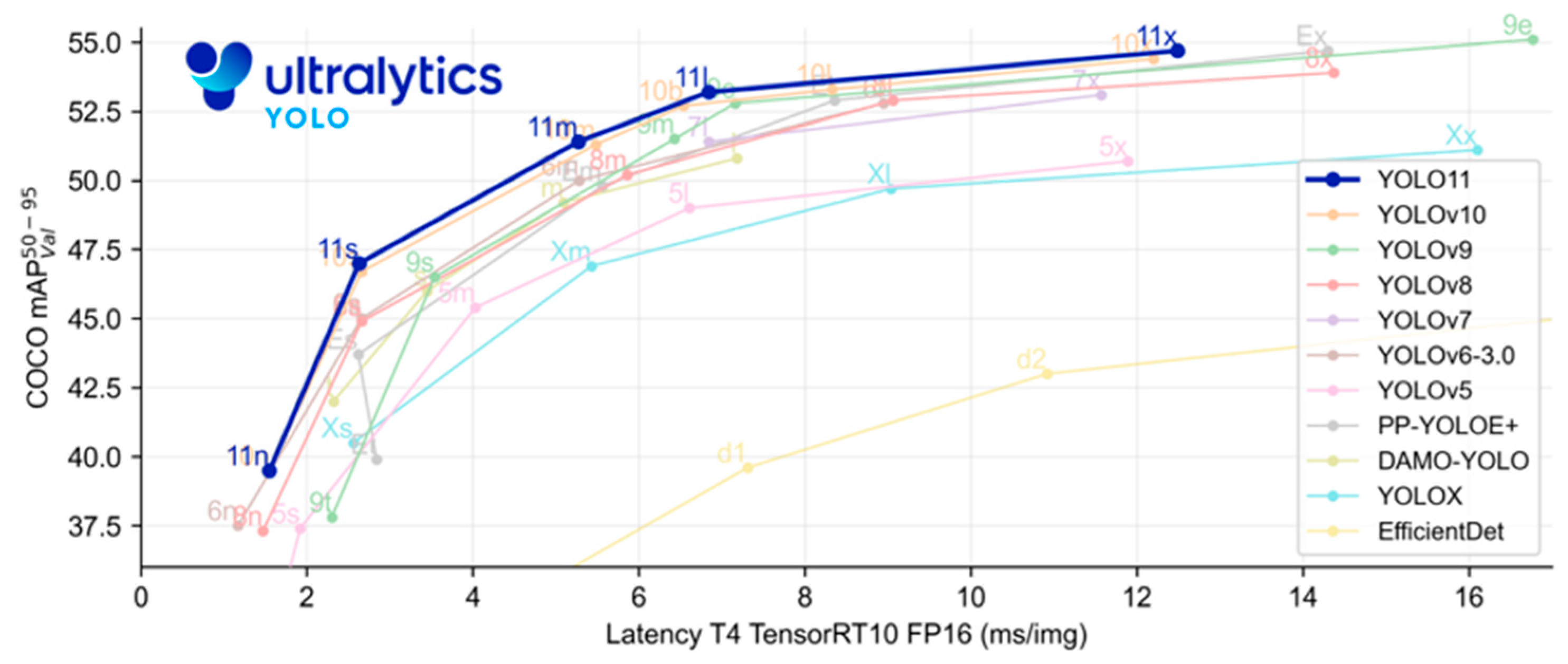
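The models in Section 2.4 are Ultralytics YOLO variants, so the annotations presumably end up in the YOLO text format. That format uses one `.txt` file per image and one line per object, `class x_center y_center width height`, with coordinates normalized by image width and height. The sketch below converts a pixel-space box to such a line. The hen/rooster class ids, the `PixelBox` type and the frame size are illustrative assumptions, not details taken from the article.

```python
from dataclasses import dataclass

# Hypothetical class-id mapping (assumption); the article does not state the ids it used.
CLASS_IDS = {"hen": 0, "rooster": 1}


@dataclass(frozen=True)
class PixelBox:
    """An axis-aligned box in pixel coordinates, origin at the top-left corner."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def to_yolo_line(box: PixelBox, img_w: int, img_h: int) -> str:
    """Return 'class x_center y_center width height', each coordinate normalized to [0, 1]."""
    if not (0 <= box.x_min < box.x_max <= img_w and 0 <= box.y_min < box.y_max <= img_h):
        raise ValueError(f"degenerate or out-of-frame box: {box}")
    x_c = (box.x_min + box.x_max) / 2 / img_w
    y_c = (box.y_min + box.y_max) / 2 / img_h
    w = (box.x_max - box.x_min) / img_w
    h = (box.y_max - box.y_min) / img_h
    return f"{CLASS_IDS[box.label]} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    # Made-up rooster box in a 1280 x 720 frame.
    print(to_yolo_line(PixelBox("rooster", 100, 200, 300, 400), 1280, 720))
    # -> 1 0.156250 0.416667 0.156250 0.277778
```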

2.4. Model Architecture: YOLOv5u vs. YOLOv11

2.5. Model Evaluation Metrics
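The tables in this article report precision, recall and F1-score, which follow standard definitions: P = TP/(TP+FP), R = TP/(TP+FN) and F1 = 2PR/(P+R). A predicted box counts as a true positive when its intersection over union (IoU) with a not-yet-matched ground-truth box of the same class reaches a threshold, 0.50 for mAP@0.50. The sketch below assumes greedy, highest-confidence-first matching, a common convention; Ultralytics, the toolkit behind the compared models, may differ in details. For a hen/rooster detector it would be run once per class.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds: list[tuple[float, Box]], gts: list[Box],
                     iou_thr: float = 0.5) -> tuple[int, int, int]:
    """Greedily match (score, box) predictions of one class to ground truths of that class.

    Each ground truth can be matched at most once (assumed greedy convention).
    Returns (TP, FP, FN).
    """
    matched: set[int] = set()
    tp = fp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):  # highest confidence first
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            if j not in matched and (v := iou(box, gt)) >= best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
        else:
            fp += 1  # no unmatched ground truth overlaps enough
    return tp, fp, len(gts) - len(matched)  # unmatched ground truths are misses


def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Precision, recall and their harmonic mean; 0.0 where a denominator is zero."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    # Made-up example: two rooster boxes, three rooster predictions.
    gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
    preds = [(0.9, (0, 0, 10, 10)), (0.8, (1, 1, 11, 11)), (0.7, (50, 50, 60, 60))]
    counts = match_detections(preds, gts)
    print(counts, precision_recall_f1(*counts))
```

In this example the second prediction overlaps an already matched box, so it becomes a false positive rather than a second true positive.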

3. Results and Discussions

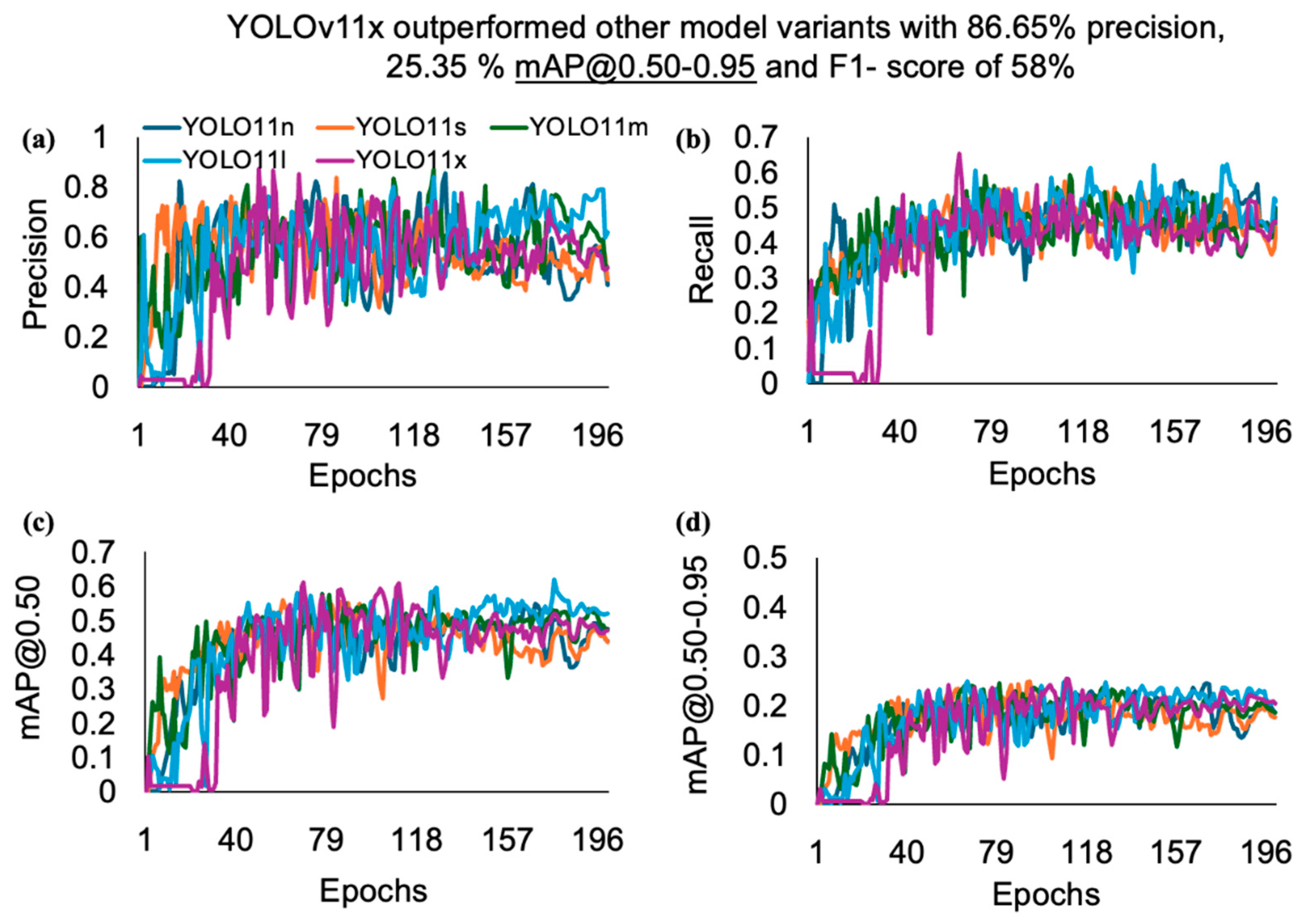

3.1. Performance Metrics Comparison: Hen and Rooster Detection (Comb Size)

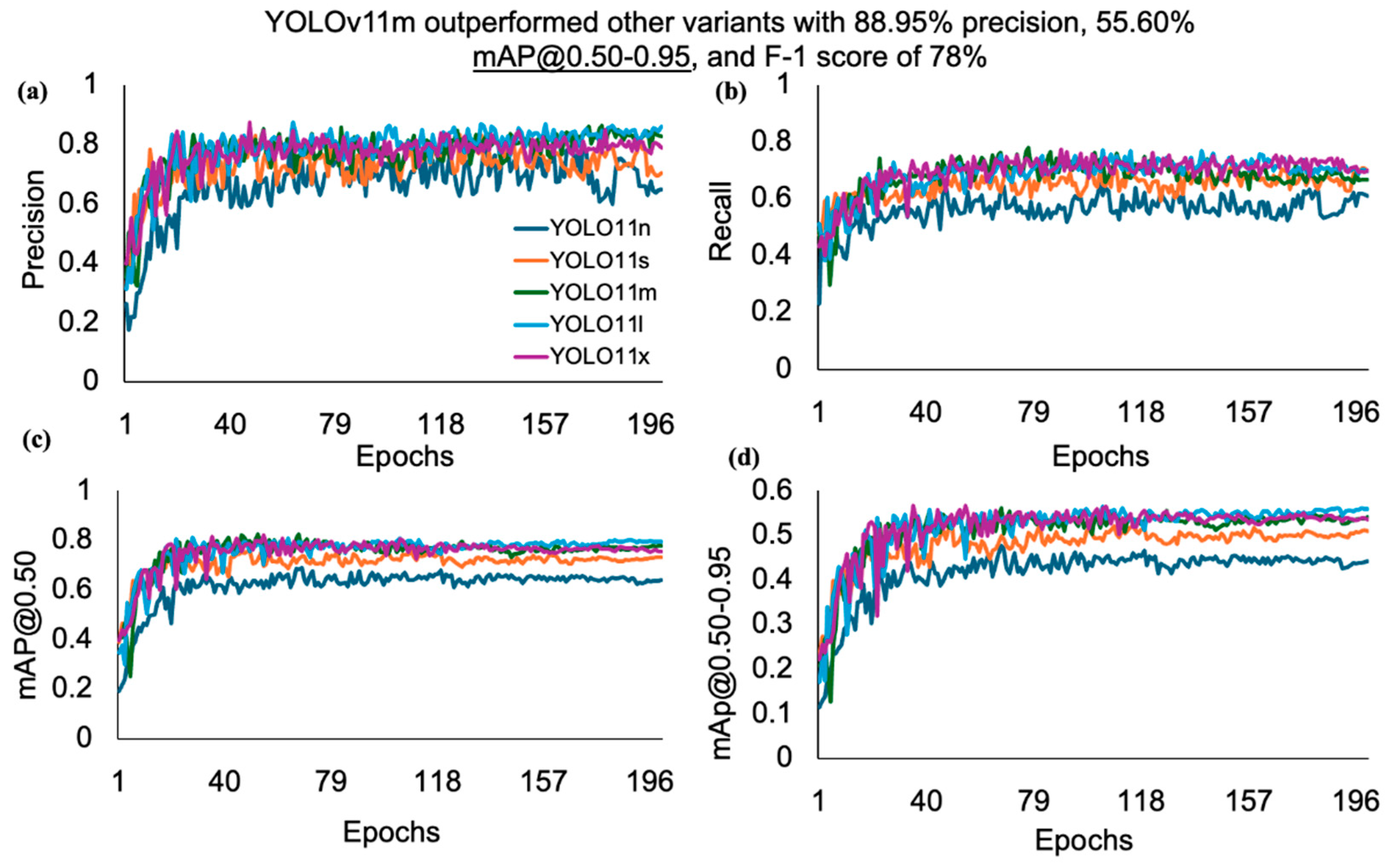
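The mAP columns presumably follow the usual COCO-style convention. Average precision (AP) is the area under one class's precision-recall curve at a fixed IoU threshold. mAP@0.50 averages AP over classes at IoU 0.50, and mAP@0.50–0.95 additionally averages over ten thresholds from 0.50 to 0.95 in steps of 0.05. The sketch uses all-point interpolation. To our understanding, Ultralytics samples the precision envelope at 101 recall points, so this sketch can differ slightly from the reported numbers.

```python
def average_precision(scores: list[float], is_tp: list[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class at one IoU threshold.

    scores: confidence of each detection; is_tp: whether each detection was matched
    to a ground truth at that IoU threshold; num_gt: ground-truth boxes of the class.
    """
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls: list[float] = []
    precisions: list[float] = []
    for i in order:  # sweep the confidence threshold from high to low
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: precision at each point becomes the best precision at any higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Area under the resulting step-shaped curve (only recall increases contribute).
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


# IoU thresholds 0.50, 0.55, ..., 0.95 averaged by mAP@0.50-0.95.
IOU_THRESHOLDS = [round(0.50 + 0.05 * k, 2) for k in range(10)]


def map_50_95(ap_by_class: dict[str, list[float]]) -> float:
    """ap_by_class[c][t] is the AP of class c at IOU_THRESHOLDS[t].

    Averages over thresholds first, then over classes.
    """
    if not ap_by_class:
        return 0.0
    for name, aps in ap_by_class.items():
        if len(aps) != len(IOU_THRESHOLDS):
            raise ValueError(f"{name}: expected {len(IOU_THRESHOLDS)} AP values, got {len(aps)}")
    per_class = [sum(aps) / len(aps) for aps in ap_by_class.values()]
    return sum(per_class) / len(per_class)


if __name__ == "__main__":
    # Made-up detections for one class: TP, FP, TP, FP against two ground-truth boxes.
    print(average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, False], 2))
```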

3.2. Performance Metrics Comparison: Rooster Detection (Body Size)

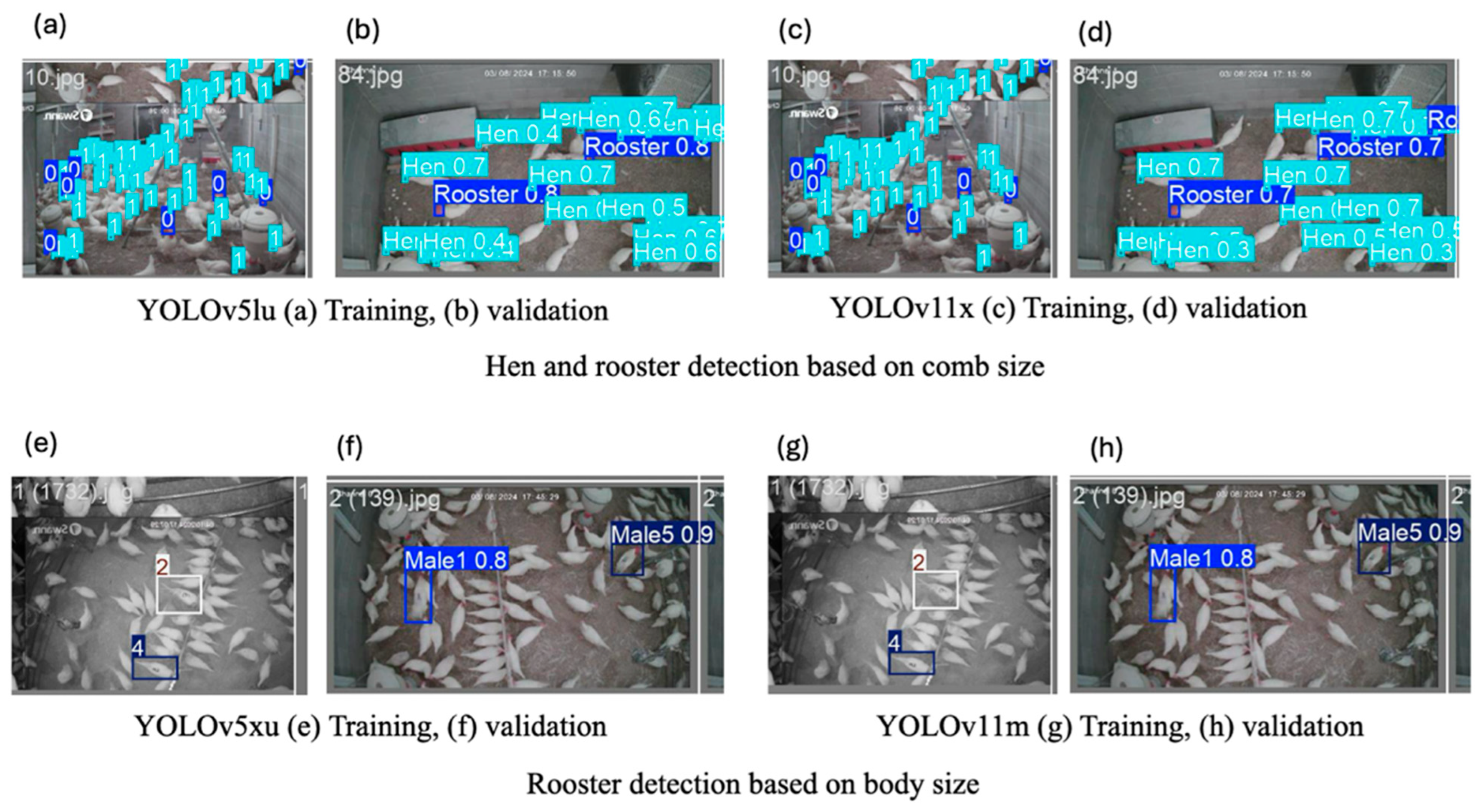

3.3. Training and Validation Prediction Results

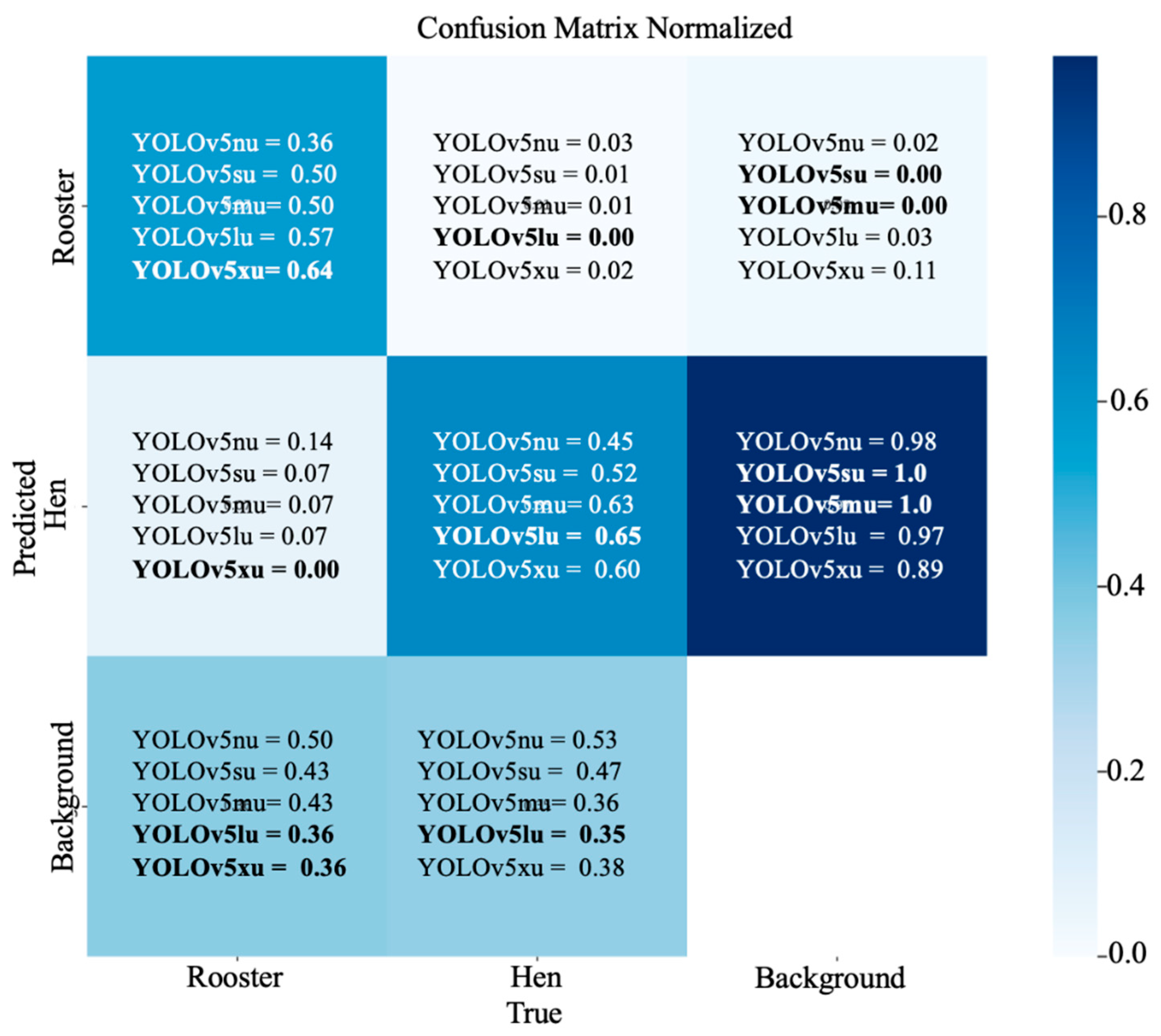

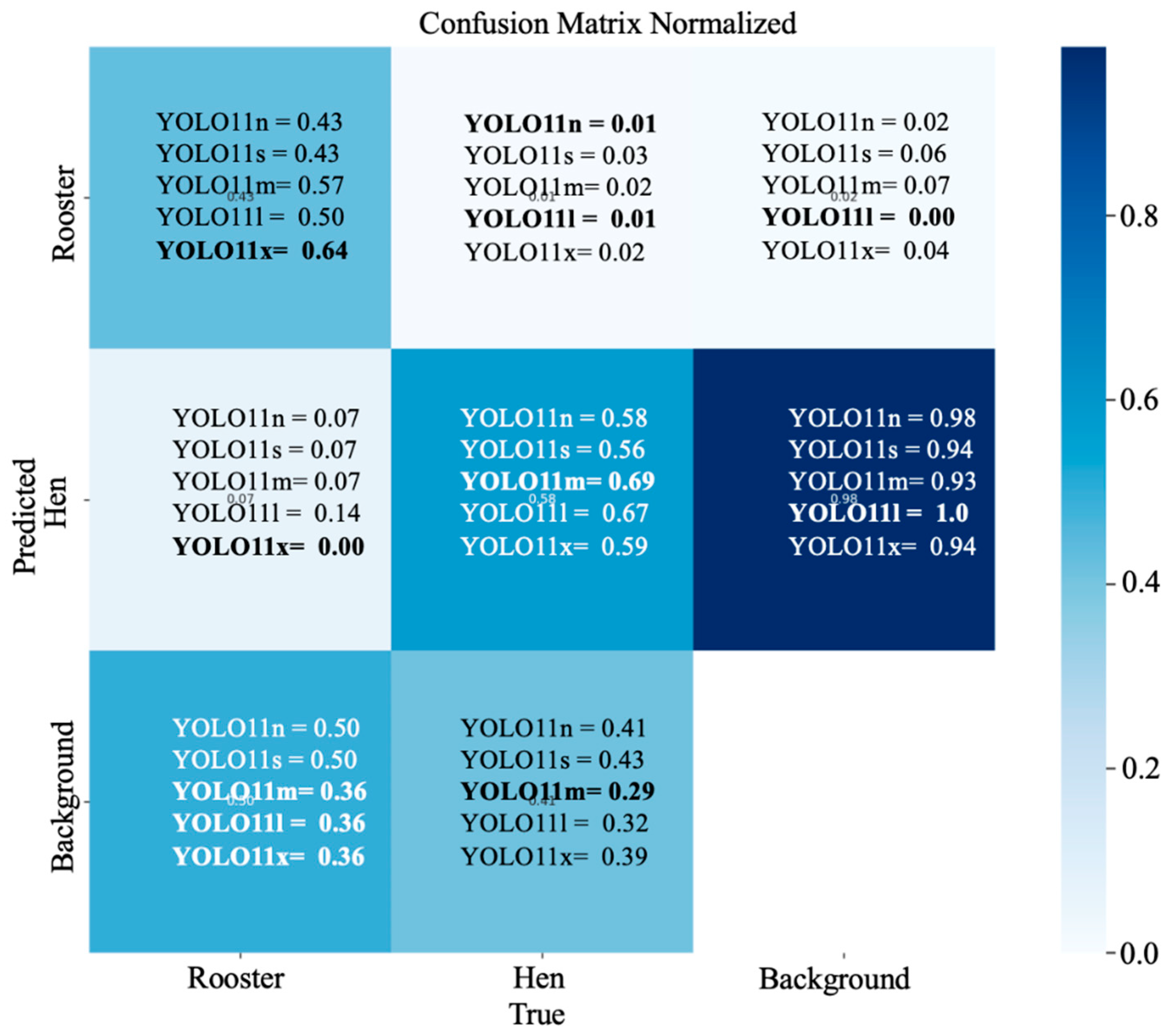

3.4. Confusion Matrix Analysis: Hen and Rooster Detection

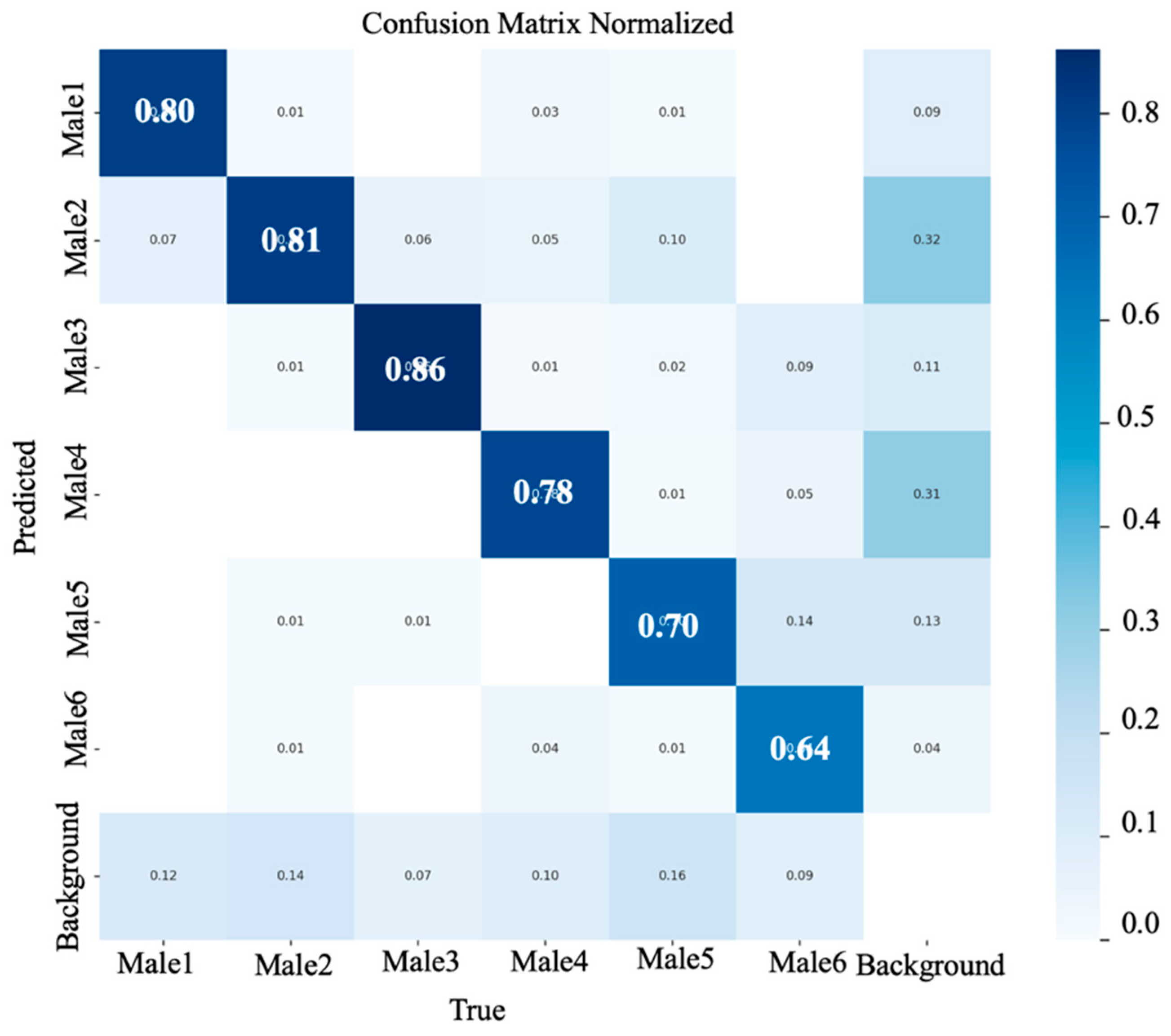
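A detection confusion matrix, as produced by Ultralytics, has one row and one column per class plus a "background" entry that collects missed objects and spurious boxes. The sketch below reads per-class precision and recall from such a matrix. It assumes rows are true classes and columns are predictions (Ultralytics' own plot is, to our understanding, transposed, with predictions on the vertical axis). The counts are invented for illustration and are not the article's results.

```python
# Assumed orientation: rows = true class, columns = predicted class.
# "background" collects missed objects (true object, no prediction)
# and spurious detections (prediction, no object).
LABELS = ["hen", "rooster", "background"]


def per_class_precision_recall(cm: list[list[int]],
                               labels: list[str]) -> dict[str, tuple[float, float]]:
    """Precision = diagonal / column total, recall = diagonal / row total, per object class."""
    n = len(labels)
    result: dict[str, tuple[float, float]] = {}
    for k, name in enumerate(labels):
        if name == "background":
            continue  # background is not a detectable class
        tp = cm[k][k]
        predicted_as_k = sum(cm[i][k] for i in range(n))
        truly_k = sum(cm[k][j] for j in range(n))
        result[name] = (
            tp / predicted_as_k if predicted_as_k else 0.0,
            tp / truly_k if truly_k else 0.0,
        )
    return result


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    cm = [
        [90, 5, 5],    # true hens: 90 correct, 5 called roosters, 5 missed
        [10, 40, 10],  # true roosters: 10 called hens, 40 correct, 10 missed
        [8, 2, 0],     # background: 8 false hen boxes, 2 false rooster boxes
    ]
    for name, (p, r) in per_class_precision_recall(cm, LABELS).items():
        print(f"{name}: precision={p:.3f} recall={r:.3f}")
```

Reading the off-diagonal cells separately (hens called roosters versus roosters called hens) shows which direction of confusion dominates, which a single precision or recall value hides.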

3.5. Confusion Matrix Analysis: Rooster Detection

4. Applications and Benefits of Automatic Hen and Rooster Detection

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Models | Precision (%) | Recall (%) | mAP@0.50 (%) | mAP@0.50–0.95 (%) | F1-Score (%) |
|---|---|---|---|---|---|
| YOLOv5nu | 83.08 | 53.25 | 55.93 | 24.72 | 53.00 |
| YOLOv5su | 83.08 | 53.17 | 55.93 | 24.72 | 61.00 |
| YOLOv5mu | 87.24 | 64.55 | 61.33 | 25.54 | 65.00 |
| YOLOv5lu | 87.69 | 56.33 | 60.08 | 25.68 | 65.00 |
| YOLOv5xu | 87.38 | 68.58 | 58.73 | 24.25 | 58.00 |
| YOLOv11n | 85.33 | 57.70 | 54.83 | 24.52 | 55.00 |
| YOLOv11s | 83.54 | 57.46 | 55.44 | 24.83 | 58.00 |
| YOLOv11m | 86.81 | 59.28 | 57.56 | 24.48 | 59.00 |
| YOLOv11l | 83.91 | 62.24 | 61.94 | 24.81 | 62.00 |
| YOLOv11x | 86.65 | 65.34 | 60.99 | 25.35 | 58.00 |

| Models | Precision (%) | Recall (%) | mAP@0.50 (%) | mAP@0.50–0.95 (%) | F1-Score (%) |
|---|---|---|---|---|---|
| YOLOv5nu | 79.07 | 61.73 | 62.79 | 43.19 | 61.00 |
| YOLOv5su | 83.18 | 69.58 | 72.84 | 49.39 | 70.00 |
| YOLOv5mu | 86.49 | 76.39 | 80.05 | 54.75 | 74.00 |
| YOLOv5lu | 86.78 | 76.06 | 81.63 | 56.13 | 73.00 |
| YOLOv5xu | 88.95 | 77.72 | 82.29 | 56.08 | 78.00 |
| YOLOv11n | 81.30 | 65.89 | 68.42 | 47.66 | 64.00 |
| YOLOv11s | 83.50 | 71.97 | 75.82 | 53.23 | 71.00 |
| YOLOv11m | 88.95 | 78.77 | 82.56 | 55.60 | 78.00 |
| YOLOv11l | 87.53 | 77.20 | 81.30 | 56.52 | 78.00 |
| YOLOv11x | 87.43 | 77.53 | 82.02 | 56.70 | 74.00 |
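The tables report an F1-score without stating how it was obtained. If it is meant as the harmonic mean 2PR/(P+R) of the tabulated precision and recall (an assumption), recomputing it gives a higher value for every row. For YOLOv5nu in the first table, 2 × 83.08 × 53.25 / (83.08 + 53.25) ≈ 64.90, against 53.00 reported. One possible reason, which the text does not confirm, is that the F1 column was read at a different confidence threshold or epoch than precision and recall. The script below only reproduces this arithmetic from the tabulated values.

```python
# (precision %, recall %, reported F1 %) copied from the two tables above.
TABLES = {
    "first table": {
        "YOLOv5nu": (83.08, 53.25, 53.00), "YOLOv5su": (83.08, 53.17, 61.00),
        "YOLOv5mu": (87.24, 64.55, 65.00), "YOLOv5lu": (87.69, 56.33, 65.00),
        "YOLOv5xu": (87.38, 68.58, 58.00), "YOLOv11n": (85.33, 57.70, 55.00),
        "YOLOv11s": (83.54, 57.46, 58.00), "YOLOv11m": (86.81, 59.28, 59.00),
        "YOLOv11l": (83.91, 62.24, 62.00), "YOLOv11x": (86.65, 65.34, 58.00),
    },
    "second table": {
        "YOLOv5nu": (79.07, 61.73, 61.00), "YOLOv5su": (83.18, 69.58, 70.00),
        "YOLOv5mu": (86.49, 76.39, 74.00), "YOLOv5lu": (86.78, 76.06, 73.00),
        "YOLOv5xu": (88.95, 77.72, 78.00), "YOLOv11n": (81.30, 65.89, 64.00),
        "YOLOv11s": (83.50, 71.97, 71.00), "YOLOv11m": (88.95, 78.77, 78.00),
        "YOLOv11l": (87.53, 77.20, 78.00), "YOLOv11x": (87.43, 77.53, 74.00),
    },
}


def harmonic_f1(p: float, r: float) -> float:
    """F1 as the harmonic mean of precision and recall (assumed definition), in the inputs' units."""
    return 2 * p * r / (p + r) if p + r else 0.0


if __name__ == "__main__":
    for table, rows in TABLES.items():
        for model, (p, r, f1_reported) in rows.items():
            print(f"{table:12s} {model:9s} reported={f1_reported:5.2f} "
                  f"2PR/(P+R)={harmonic_f1(p, r):5.2f}")
```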


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Paneru, B.; Bist, R.B.; Yang, X.; Dhungana, A.; Dahal, S.; Chai, L. Deep Learning Methods for Automatic Identification of Male and Female Chickens in a Cage-Free Flock. Animals 2025, 15, 1862. https://doi.org/10.3390/ani15131862
