Federated Multi-Label Learning (FMLL): Innovative Method for Classification Tasks in Animal Science

Abstract

Simple Summary

1. Introduction

- (i) The paper presents Federated Multi-Label Learning (FMLL), a first-of-its-kind method that combines federated learning principles with the Binary Relevance (BR) multi-label learning technique and uses the REPTree algorithm as the base learner, addressing classification tasks in which an instance may belong to several classes simultaneously.

- (ii) FMLL contributes to animal science by offering a novel methodology for classifying diverse animal datasets. It enables more accurate and efficient classification of animals based on various attributes, helping researchers and practitioners to better understand and manage animal populations.

- (iii) FMLL harnesses federated learning principles, allowing multiple nodes to collaboratively train a model using their own local data. This distributes the computational load across nodes to improve efficiency and preserves privacy and data security, both of which are crucial in animal science research, where large volumes of sensitive data may be involved.

- (iv) The proposed approach adopts the BR strategy to handle the multi-label nature of the data. By classifying instances that belong to multiple classes, FMLL improves the understanding of complex relationships and characteristics within animal species datasets.

- (v) FMLL pioneers the use of the Reduced-Error Pruning Tree (REPTree) classifier within federated learning, the first such use in the literature. REPTree was chosen for its effectiveness in multi-label classification tasks. This choice enhances both the accuracy and the interpretability of the classification results.

- (vi) The effectiveness of FMLL is empirically validated through experiments on three diverse animal science datasets: Amphibians, Anuran-Calls-(MFCCs), and HackerEarth-Adopt-A-Buddy. These experiments demonstrate the applicability and efficacy of FMLL in real-world scenarios.

- (vii) FMLL improved classification accuracy across the animal datasets when compared with existing state-of-the-art methods. For instance, on the Amphibians dataset, FMLL achieved an average accuracy improvement of 10.92%. This improvement highlights the practical relevance of FMLL for multi-label classification tasks in animal science.

2. Related Works

3. Materials and Methods

3.1. Proposed Approach

3.2. Formal Description

| Algorithm 1: Federated Multi-Label Learning (FMLL) |
| 1. Client Learning Process |
| Inputs: $D$: dataset; $n$ = number of nodes (equal to the number of class labels) |
| Outputs: $M_j$: local models for each label |
| 1.1. Data Preparation |
| Begin |
| for $j$ = 1 to $n$ |
| foreach $(x, Y)$ in $D$ // Generate binary datasets |
| if ($l_j \in Y$) $D_j = D_j \cup \{(x, 1)\}$ |
| else $D_j = D_j \cup \{(x, 0)\}$ |
| endif |
| end foreach |
| Store ($D_j$) // Store local data at the node $j$ |
| end for |
| 1.2. Local Model Training |
| for $j$ = 1 to $n$ |
| $M_j$ = REPTree($D_j$) // Train local models at each node in parallel |
| Send ($M_j$) // Send to the central server |
| end for |
| End |
| 2. Server Aggregation Process |
| Inputs: $M_j$: local models from each client for each class label; $n$ = number of nodes (equal to the number of class labels); $T$: test set that will be predicted |
| Outputs: $G$: global model; $P$: predicted labels for test set |
| 2.1. Model Aggregation |
| Begin |
| $G$ = Ø |
| for $j$ = 1 to $n$ |
| Receive ($M_j$) // Receive local models from each client |
| $G = G \cup M_j$ // Aggregate the models to form the global model |
| end for |
| 2.2. Classification |
| foreach $x$ in $T$ |
| for $j$ = 1 to $n$ |
| $M_j = G[j]$ |
| $P_j(x) = M_j(x)$ // using the global model |
| end for |
| end foreach |
| End |
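The two phases of Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation: REPTree is replaced here by a one-split decision stump purely to keep the example dependency-free, and every function name (`to_binary`, `train_stump`, `client_learning`, `server_aggregate`, `fmll_predict`) as well as the toy data are hypothetical. Sending and receiving models is simulated by passing a dictionary.

```python
def to_binary(dataset, label):
    """Binary Relevance step: relabel each instance as 1/0 for one label."""
    return [(x, 1 if label in labels else 0) for x, labels in dataset]


def train_stump(binary_data):
    """Stand-in for REPTree (assumption): the single split with fewest errors."""
    best_errors, best_model = len(binary_data) + 1, None
    for f in range(len(binary_data[0][0])):
        for threshold in sorted({x[f] for x, _ in binary_data}):
            for left, right in ((0, 1), (1, 0)):
                errors = sum(
                    (left if x[f] <= threshold else right) != y
                    for x, y in binary_data
                )
                if errors < best_errors:
                    best_errors = errors
                    best_model = (f, threshold, left, right)
    return best_model


def predict_stump(model, x):
    """Apply a stump: `left` output if the feature is <= threshold, else `right`."""
    f, threshold, left, right = model
    return left if x[f] <= threshold else right


def client_learning(dataset, labels):
    """Phase 1: node j builds the binary dataset for label j and trains locally."""
    local_models = {}
    for label in labels:
        local_data = to_binary(dataset, label)          # 1.1 data preparation
        local_models[label] = train_stump(local_data)   # 1.2 local training
    return local_models                                 # simulates Send()


def server_aggregate(received_models):
    """Phase 2.1: collect the per-label local models into the global model G."""
    global_model = {}
    for label, model in received_models.items():
        global_model[label] = model
    return global_model


def fmll_predict(global_model, x):
    """Phase 2.2: the predicted label set is every label whose model outputs 1."""
    return {
        label for label, model in global_model.items()
        if predict_stump(model, x) == 1
    }


# Hypothetical toy data: (feature vector, set of labels).
dataset = [
    ([1.0, 0.2], {"green_frog"}),
    ([2.0, 0.9], {"green_frog", "newt"}),
    ([8.0, 0.8], {"newt"}),
    ([9.0, 0.1], set()),
]
labels = ["green_frog", "newt"]
global_model = server_aggregate(client_learning(dataset, labels))
print(fmll_predict(global_model, [1.5, 0.85]))
```

In a real deployment each node would hold only its own data and a pruned decision tree would replace the stump; the sketch only shows how the per-label models are trained independently and then combined at the server.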

4. Experimental Studies

4.1. Dataset Description

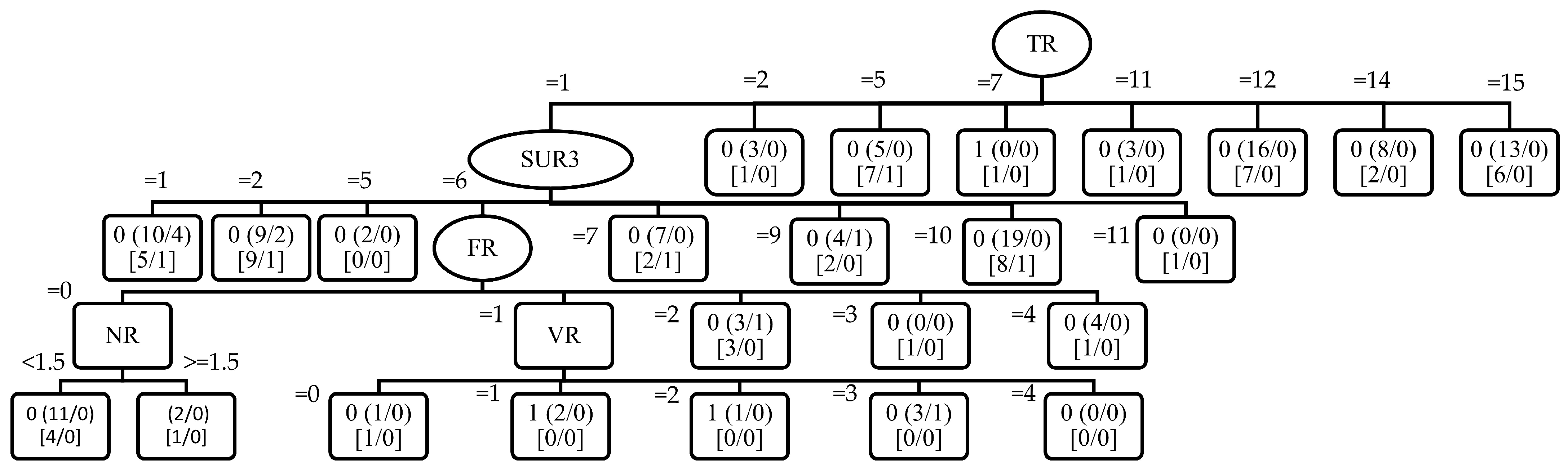

4.1.1. Amphibians

4.1.2. Anuran-Calls-(MFCCs)

4.1.3. HackerEarth-Adopt-A-Buddy

4.2. Results

- True Positive (TP) is the number of positive instances that the classifier correctly predicts as positive.

- True Negative (TN) is the number of negative instances that the classifier correctly predicts as negative.

- False Positive (FP) is the number of negative instances that the classifier incorrectly predicts as positive.

- False Negative (FN) is the number of positive instances that the classifier incorrectly predicts as negative.
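To show how these four counts feed the evaluation metrics named in this paper (accuracy, precision, recall, and F-score), the sketch below assumes the standard textbook definitions; the function name `classification_metrics` and the example counts are hypothetical and are not results from the study.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, recall and F-score from confusion counts.

    Each ratio falls back to 0.0 when its denominator is zero.
    """
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f_score = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f_score": f_score,
    }


# Hypothetical counts for a single label.
print(classification_metrics(tp=40, tn=45, fp=5, fn=10))
```

Under Binary Relevance, these counts would typically be collected per label and then averaged across labels, although the averaging scheme is an assumption here.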

5. Discussion

6. Conclusions and Future Work

- (i) Introduction of FMLL (with BR and REPTree) as a novel approach to classification in animal science, applicable to diverse real-world scenarios.

- (ii) Distribution of the computational cost over several clients, together with data security that preserves privacy in collaborative learning environments.

- (iii) Effective handling of multi-label data within the FMLL framework using the BR strategy.

- (iv) Pioneering use of the REPTree classifier in federated learning, enhancing accuracy and interpretability.

- (v) Empirical validation of FMLL on various animal-based datasets, demonstrating its applicability and efficacy in the field.

- (vi) Superiority of FMLL in multi-label classification tasks, evidenced by higher accuracy, precision, recall, and F-score values than those of state-of-the-art methods.

- (vii) Practical relevance of FMLL across taxonomic levels, showing its reliability in addressing multi-label classification problems in animal research.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| AI | Artificial intelligence |

| ANN | Artificial neural network |

| ADA | AdaBoost |

| AMFSC | Amendable multi-function sensor control method |

| AR | Additive regression |

| BR | Binary relevance |

| CC | Classifier chains |

| CNN | Convolutional neural network |

| CPPS | Cyber-physical production system |

| DAGs | Dual attention gates |

| DeepFedWT | Federated deep learning framework |

| DPLA | Differential privacy Laplace mechanism |

| DT | Decision tree |

| ECC | Ensemble of classifier chains |

| EIAs | Environmental impact assessments |

| ETo | Estimation of the evapotranspiration |

| FCLOpt | Federated contrastive learning optimization |

| FedAAR | Federated learning framework for animal activity recognition |

| FedAvg | Federated averaging |

| FELIDS | Federated learning-based intrusion detection system |

| FL | Federated learning |

| FMLL | Federated multi-label learning |

| FPR | False positive rate |

| FTL | Federated transfer learning |

| GBT | Gradient-boosted tree |

| GIS | Geographic information system |

| GNN | Graph neural network |

| HFL | Horizontal federated learning |

| IDS | Intrusion detection system |

| IIoT | Industrial Internet of things |

| IoT | Internet of things |

| KNN | K-nearest neighbors |

| LCPL | Local classifier per level |

| LCPN | Local classifier per node |

| LP | Label powerset |

| LR | Logistic regression |

| LSM | Landslide susceptibility map |

| LSTM | Long short-term memory |

| MAE | Mean absolute error |

| MetaMIML | Meta learning based multi-instance multi-label learning |

| ML | Machine learning |

| MLC | Multi-label classification |

| ML-CookGAN | Multi-label generative adversarial network |

| ML-kNN | Multi-label k-nearest neighbors |

| MMVFL | Multi-participant multi-class vertical federated learning |

| MSRAN | Multi-scale residual attention network |

| PC | Pairwise coupling |

| PMDT | Partially monotonic decision tree |

| PRC | Precision-recall curve |

| RAkEL | Random k-labelsets |

| RBF | Radial basis function |

| RC | Random committee |

| RD | Regression by discretization |

| REPTree | Reduced error pruning tree |

| RF | Random forests |

| RMSE | Root mean square error |

| RNN | Recurrent neural network |

| ROC | Receiver operating characteristic |

| RTF-REPTree | Rotational forest and reduced error pruning trees |

| SCADA | Supervisory control and data acquisition |

| SSA | Sparrow search algorithm |

| SVM | Support vector machines |

| TNR | True negative rate |

| TPR | True positive rate |

| VFL | Vertical federated learning |

References

- Li, R.; Gao, L.; Wu, G.; Dong, J. Multiple Marine Algae Identification Based on Three-Dimensional Fluorescence Spectroscopy and Multi-Label Convolutional Neural Network. Spectrochim. Acta Part A 2024, 311, 123938. [Google Scholar] [CrossRef]

- Swaminathan, B.; Jagadeesh, M.; Vairavasundaram, S. Multi-Label Classification for Acoustic Bird Species Detection Using Transfer Learning Approach. Ecol. Inf. 2024, 80, 102471. [Google Scholar] [CrossRef]

- Celniak, W.; Wodziński, M.; Jurgas, A.; Burti, S.; Zotti, A.; Atzori, M.; Müller, H.; Banzato, T. Improving the Classification of Veterinary Thoracic Radiographs through Inter-Species and Inter-Pathology Self-Supervised Pre-Training of Deep Learning Models. Sci. Rep. 2023, 13, 19518. [Google Scholar] [CrossRef]

- Ahsan, M.M.; Alam, T.E.; Haque, M.A.; Ali, M.S.; Rifat, R.H.; Nafi, A.A.N.; Hossain, M.M.; Islam, M.K. Enhancing Monkeypox Diagnosis and Explanation through Modified Transfer Learning, Vision Transformers, and Federated Learning. Inf. Med. Unlocked 2024, 45, 101449. [Google Scholar] [CrossRef]

- van Schaik, G.; Hostens, M.; Faverjon, C.; Jensen, D.B.; Kristensen, A.R.; Ezanno, P.; Frössling, J.; Dórea, F.; Jensen, B.-B.; Carmo, L.P.; et al. The DECIDE Project: From Surveillance Data to Decision-Support for Farmers and Veterinarians. Open Res. Eur. 2023, 3, 82. [Google Scholar] [CrossRef]

- Shah, K.; Kanani, S.; Patel, S.; Devani, M.; Tanwar, S.; Verma, A.; Sharma, R. Blockchain-Based Object Detection Scheme Using Federated Learning. Secur. Priv. 2022, 6, e276. [Google Scholar] [CrossRef]

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282. [Google Scholar]

- Ogundokun, R.O.; Misra, S.; Maskeliunas, R.; Damasevicius, R. A review on federated learning and machine learning approaches: Categorization, application areas, and blockchain technology. Information 2022, 13, 263. [Google Scholar] [CrossRef]

- Abreha, H.G.; Hayajneh, M.; Serhani, M.A. Federated learning in edge computing: A systematic survey. Sensors 2022, 22, 450. [Google Scholar] [CrossRef]

- Shaheen, M.; Farooq, M.S.; Umer, T.; Kim, B.-S. Applications of federated learning; taxonomy, challenges, and research trends. Electronics 2022, 11, 670. [Google Scholar] [CrossRef]

- Hassanin, M.; Radwan, I.; Khan, S.; Tahtali, M. Learning discriminative representations for multi-label image recognition. J. Vis. Commun. Image Represent. 2022, 83, 103448. [Google Scholar] [CrossRef]

- Alfaro, R.; Allende-Cid, H.; Allende, H. Multilabel text classification with label-dependent representation. Appl. Sci. 2023, 13, 3594. [Google Scholar] [CrossRef]

- Mei, S. A Multi-label learning framework for predicting chemical classes and biological activities of natural products from biosynthetic gene clusters. J. Chem. Ecol. 2023, 49, 681–695. [Google Scholar] [CrossRef]

- Zhu, C.; Liu, Y.; Miao, D.; Dong, Y.; Pedrycz, W. Within-cross-consensus-view representation-based multi-view multi-label learning with incomplete data. Neurocomputing 2023, 557, 126729. [Google Scholar] [CrossRef]

- Mo, L.; Zhu, Y.; Zeng, L. A Multi-label based physical activity recognition via cascade classifier. Sensors 2023, 23, 2593. [Google Scholar] [CrossRef]

- Suh, J.H. Multi-label prediction-based fuzzy age difference analysis for social profiling of anonymous social media. Appl. Sci. 2024, 14, 790. [Google Scholar] [CrossRef]

- Han, R.; Wang, Z.; Guo, Y.; Wang, X.; A, R.; Zhong, G. Multi-label prediction method for lithology, lithofacies and fluid classes based on data augmentation by cascade forest. Adv. Geo Energy Res. 2023, 9, 25–37. [Google Scholar] [CrossRef]

- Hou, J.; Zeng, H.; Cai, L.; Zhu, J.; Chen, J.; Ma, K.-K. Multi-label learning with multi-label smoothing regularization for vehicle re-identification. Neurocomputing 2019, 345, 15–22. [Google Scholar] [CrossRef]

- Zhang, M.L.; Li, Y.K.; Liu, X.Y.; Geng, X. Binary relevance for multi-label learning: An overview. Front. Comput. Sci. 2018, 12, 191–202. [Google Scholar] [CrossRef]

- Akshay, E.; Sugumaran, V.; Elangovan, M. Single point cutting tool fault diagnosis in turning operation using reduced error pruning tree classifier. Struct. Durab. Health Monit. 2022, 16, 255–270. [Google Scholar] [CrossRef]

- Clunie, C.; Batista-Mendoza, G.; Cedeño-Moreno, D.; Calderón-Gómez, H.; Mendoza-Pittí, L.; Russell, C.; Vargas-Lombardo, M. Use of data mining strategies in environmental parameters in poultry farms, a case Study. In Proceedings of the 9th International Conference, Guayaquil, Ecuador, 13–16 November 2023; pp. 81–94. [Google Scholar] [CrossRef]

- Kumar, A.R.S.; Goyal, M.K.; Ojha, C.S.P.; Singh, R.D.; Swamee, P.K. Application of artificial neural network, fuzzy logic and decision tree algorithms for modelling of streamflow at Kasol in India. Water Sci. Technol. 2013, 68, 2521–2526. [Google Scholar] [CrossRef]

- Lin, C.-N.; Huang, W.-S.; Huang, T.-H.; Chen, C.-Y.; Huang, C.-Y.; Wang, T.-Y.; Liao, Y.-S.; Lee, L.-W. Adding value of MRI over CT in predicting peritoneal cancer index and completeness of cytoreduction. Diagnostics 2021, 11, 674. [Google Scholar] [CrossRef]

- Haron, N.H.; Mahmood, R.; Amin, N.M.; Ahmad, A.; Jantan, S.R. An Artificial Intelligence Approach to Monitor and Predict Student Academic Performance. J. Adv. Res. Appl. Sci. Eng. Technol. 2024, 44, 105–119. [Google Scholar] [CrossRef]

- Dhade, P.; Shirke, P. Federated learning for healthcare: A comprehensive review. Eng. Proc. 2023, 59, 230. [Google Scholar] [CrossRef]

- Da Silva, F.R.; Camacho, R.; Tavares, J.M.R.S. Federated learning in medical image analysis: A systematic survey. Electronic 2024, 13, 47. [Google Scholar] [CrossRef]

- Prasad, V.K.; Bhattacharya, P.; Maru, D.; Tanwar, S.; Verma, A.; Singh, A.; Tiwari, A.K.; Sharma, R.; Alkhayyat, A.; Țurcanu, F.-E.; et al. Federated learning for the internet-of-medical-things: A survey. Mathematics 2023, 11, 151. [Google Scholar] [CrossRef]

- Yaqoob, M.M.; Nazir, M.; Khan, M.A.; Qureshi, S.; Al-Rasheed, A. hybrid classifier-based federated learning in health service providers for cardiovascular disease prediction. Appl. Sci. 2023, 13, 1911. [Google Scholar] [CrossRef]

- Žalik, K.R.; Žalik, M. A review of federated learning in agriculture. Sensors 2023, 23, 9566. [Google Scholar] [CrossRef]

- Friha, O.; Ferrag, M.A.; Shu, L.; Maglaras, L.; Choo, K.K.R.; Nafaa, M. FELIDS: Federated learning-based intrusion detection system for agricultural Internet of Things. J. Parallel Distrib. Comput. 2022, 165, 17–31. [Google Scholar] [CrossRef]

- Yu, J.; Chen, Y.; Wang, Z.; Liu, J.; Huang, B. Food risk entropy model based on federated learning. Appl. Sci. 2022, 12, 5174. [Google Scholar] [CrossRef]

- Li, A.; Markovic, M.; Edwards, P.; Leontidis, G. Model pruning enables localized and efficient federated learning for yield forecasting and data sharing. Expert Syst. Appl. 2024, 242, 122847. [Google Scholar] [CrossRef]

- Fedorchenko, E.; Novikova, E.; Shulepov, A. Comparative review of the intrusion detection systems based on federated learning: Advantages and open challenges. Algorithms 2022, 15, 247. [Google Scholar] [CrossRef]

- Lazzarini, R.; Tianfield, H.; Charissis, V. Federated learning for IoT intrusion detection. AI 2023, 4, 509–530. [Google Scholar] [CrossRef]

- Ashraf, M.M.; Waqas, M.; Abbas, G.; Baker, T.; Abbas, Z.H.; Alasmary, H. FedDP: A privacy-protecting theft detection scheme in smart grids using federated learning. Energies 2022, 15, 6241. [Google Scholar] [CrossRef]

- Park, J.; Lim, H. Privacy-preserving federated learning using homomorphic encryption. Appl. Sci. 2022, 12, 734. [Google Scholar] [CrossRef]

- Abimannan, S.; El-Alfy, E.-S.M.; Hussain, S.; Chang, Y.-S.; Shukla, S.; Satheesh, D.; Breslin, J.G. Towards federated learning and multi-access edge computing for air quality monitoring: Literature review and assessment. Sustainability 2023, 15, 13951. [Google Scholar] [CrossRef]

- Supriya, Y.; Gadekallu, T.R. Particle swarm-based federated learning approach for early detection of forest fires. Sustainability 2023, 15, 964. [Google Scholar] [CrossRef]

- Chen, D.; Yang, P.; Chen, I.-R.; Ha, D.S.; Cho, J.-H. SusFL: Energy-Aware Federated Learning-based Monitoring for Sustainable Smart Farms. arXiv 2024, arXiv:2402.10280. [Google Scholar] [CrossRef]

- Mao, A.; Huang, E.; Gan, H.; Liu, K. FedAAR: A novel federated learning framework for animal activity recognition with wearable sensors. Animals 2022, 12, 2142. [Google Scholar] [CrossRef]

- Huang, Y.; Yang, X.; Guo, J.; Cheng, J.; Qu, H.; Ma, J.; Li, L. A High-Precision Method for 100-Day-Old Classification of Chickens in Edge Computing Scenarios Based on Federated Computing. Animals 2022, 12, 3450. [Google Scholar] [CrossRef]

- Berghout, T.; Benbouzid, M.; Bentrcia, T.; Lim, W.H.; Amirat, Y. Federated Learning for Condition Monitoring of Industrial Processes: A Review on Fault Diagnosis Methods, Challenges, and Prospects. Electronics 2023, 12, 158. [Google Scholar] [CrossRef]

- Wu, S.; Xue, H.; Zhang, L. Q-Learning-Aided Offloading Strategy in Edge-Assisted Federated Learning over Industrial IoT. Electronics 2023, 12, 1706. [Google Scholar] [CrossRef]

- Bemani, A.; Björsell, N. Low-Latency Collaborative Predictive Maintenance: Over-the-Air Federated Learning in Noisy Industrial Environments. Sensors 2023, 23, 7840. [Google Scholar] [CrossRef]

- Kaleem, S.; Sohail, A.; Tariq, M.U.; Asim, M. An Improved Big Data Analytics Architecture Using Federated Learning for IoT-Enabled Urban Intelligent Transportation Systems. Sustainability 2023, 15, 15333. [Google Scholar] [CrossRef]

- Alohali, M.A.; Aljebreen, M.; Nemri, N.; Allafi, R.; Duhayyim, M.A.; Alsaid, M.I.; Alneil, A.A.; Osman, A.E. Anomaly Detection in Pedestrian Walkways for Intelligent Transportation System Using Federated Learning and Harris Hawks Optimizer on Remote Sensing Images. Remote Sens. 2023, 15, 3092. [Google Scholar] [CrossRef]

- Xu, C.; Mao, Y. An Improved Traffic Congestion Monitoring System Based on Federated Learning. Information 2020, 11, 365. [Google Scholar] [CrossRef]

- Fachola, C.; Tornaría, A.; Bermolen, P.; Capdehourat, G.; Etcheverry, L.; Fariello, M.I. Federated Learning for Data Analytics in Education. Data 2023, 8, 43. [Google Scholar] [CrossRef]

- Sengupta, D.; Khan, S.S.; Das, S.; De, D. FedEL: Federated Education Learning for generating correlations between course outcomes and program outcomes for Internet of Education Things. IoT 2024, 25, 101056. [Google Scholar] [CrossRef]

- Guo, S.; Zeng, D. Pedagogical Data Federation toward Education 4.0. In Proceedings of the 6th International Conference on Frontiers of Educational Technologies; Association for Computing Machinery, New York, NY, USA, 5–8 June 2020; pp. 51–55. [Google Scholar] [CrossRef]

- Zhang, T.; Liu, H.; Tao, J.; Wang, Y.; Yu, M.; Chen, H.; Yu, G. Enhancing Dropout Prediction in Distributed Educational Data Using Learning Pattern Awareness: A Federated Learning Approach. Mathematics 2023, 11, 4977. [Google Scholar] [CrossRef]

- Huang, G.; Zhao, X.; Lu, Q. A New Cross-Domain Prediction Model of Air Pollutant Concentration Based on Secure Federated Learning and Optimized LSTM Neural Network. Environ. Sci. Pollut. Res. 2022, 30, 5103–5125. [Google Scholar] [CrossRef] [PubMed]

- Idoje, G.; Dagiuklas, T.; Muddesar, I. Federated Learning: Crop Classification in a Smart Farm Decentralised Network. Smart Agric. Technol. 2023, 5, 100277. [Google Scholar] [CrossRef]

- Abu-Khadrah, A.; Ali, A.M.; Jarrah, M. An Amendable Multi-Function Control Method Using Federated Learning for Smart Sensors in Agricultural Production Improvements. ACM Trans. Sens. Netw. 2023, in press. [CrossRef]

- Jiang, G.; Fan, W.; Li, W.; Wang, L.; He, Q.; Xie, P.; Li, X. DeepFedWT: A Federated Deep Learning Framework for Fault Detection of Wind Turbines. Measurement 2022, 199, 111529. [Google Scholar] [CrossRef]

- Campos, E.M.; Saura, P.F.; González-Vidal, A.; Hernández-Ramos, J.L.; Bernabé, J.B.; Baldini, G.; Skarmeta, A. Evaluating Federated Learning for Intrusion Detection in Internet of Things: Review and Challenges. Comput. Netw. 2022, 203, 108661. [Google Scholar] [CrossRef]

- Wu, Y.; Zeng, D.; Wang, Z.; Shi, Y.; Hu, J. Distributed Contrastive Learning for Medical Image Segmentation. Med. Image Anal. 2022, 81, 102564. [Google Scholar] [CrossRef] [PubMed]

- Rey, V.; Sánchez, P.M.S.; Celdrán, A.H.; Bovet, G. Federated Learning for Malware Detection in IoT Devices. Comput. Netw. 2022, 204, 108693. [Google Scholar] [CrossRef]

- Novikova, E.; Doynikova, E.; Golubev, S. Federated Learning for Intrusion Detection in the Critical Infrastructures: Vertically Partitioned Data Use Case. Algorithms 2022, 15, 104. [Google Scholar] [CrossRef]

- Geng, D.; He, H.; Lan, X.; Liu, C. Bearing Fault Diagnosis Based on Improved Federated Learning Algorithm. Computing 2021, 104, 1–19. [Google Scholar] [CrossRef]

- Wang, Z.; Gai, K. Decision Tree-Based Federated Learning: A Survey. Blockchains 2024, 2, 40–60. [Google Scholar] [CrossRef]

- Tonellotto, N.; Gotta, A.; Nardini, F.M.; Gadler, D.; Silvestri, F. Neural Network Quantization in Federated Learning at the Edge. Inf. Sci. 2021, 575, 417–436. [Google Scholar] [CrossRef]

- Anaissi, A.; Suleiman, B.; Alyassine, W. A personalized federated learning algorithm for one-class support vector machine: An application in anomaly detection. In Proceedings of the International Conference on Computational Science, London, UK, 21–23 June 2022; pp. 373–379. [Google Scholar] [CrossRef]

- Deng, Z.; Han, Z.; Ma, C.; Ding, M.; Yuan, L.; Ge, C.; Liu, Z. Vertical Federated Unlearning on the Logistic Regression Model. Electronics 2023, 12, 3182. [Google Scholar] [CrossRef]

- Markovic, T.; Leon, M.; Buffoni, D.; Punnekkat, S. Random Forest Based on Federated Learning for Intrusion Detection. In Proceedings of the IFIP International Conference on Artificial Intelligence Applications and Innovations, Crete, Greece, 17–20 June 2022; pp. 132–144. [Google Scholar] [CrossRef]

- Liu, Z.; Wang, L.; Chen, K. Secure efficient federated knn for recommendation systems. In Advances in Natural Computation, Fuzzy Systems and Knowledge Discovery; Springer: Cham, Switzerland, 2021; pp. 1808–1819. [Google Scholar] [CrossRef]

- Jiang, C.; Yin, K.; Xia, C.; Huang, W. FedHGCDroid: An Adaptive Multi-Dimensional Federated Learning for Privacy-Preserving Android Malware Classification. Entropy 2022, 24, 919. [Google Scholar] [CrossRef] [PubMed]

- Zhong, J.; Wu, Y.; Ma, W.; Deng, S.; Zhou, H. Optimizing Multi-Objective Federated Learning on Non-IID Data with Improved NSGA-III and Hierarchical Clustering. Symmetry 2022, 14, 1070. [Google Scholar] [CrossRef]

- Che, L.; Wang, J.; Zhou, Y.; Ma, F. Multimodal Federated Learning: A Survey. Sensors 2023, 23, 6986. [Google Scholar] [CrossRef]

- Liu, Z.; Duan, S.; Wang, S.; Liu, Y.; Li, X. MFLCES: Multi-Level Federated Edge Learning Algorithm Based on Client and Edge Server Selection. Electronics 2023, 12, 2689. [Google Scholar] [CrossRef]

- Le, D.-D.; Tran, A.-K.; Dao, M.-S.; Nguyen-Ly, K.-C.; Le, H.-S.; Nguyen-Thi, X.-D.; Pham, T.-Q.; Nguyen, V.-L.; Nguyen-Thi, B.-Y. Insights into Multi-Model Federated Learning: An Advanced Approach for Air Quality Index Forecasting. Algorithms 2022, 15, 434. [Google Scholar] [CrossRef]

- Feng, S.; Yu, H.; Zhu, Y. MMVFL: A Simple Vertical Federated Learning Framework for Multi-Class Multi-Participant Scenarios. Sensors 2024, 24, 619. [Google Scholar] [CrossRef] [PubMed]

- Sajid, N.A.; Rahman, A.; Ahmad, M.; Musleh, D.; Basheer Ahmed, M.I.; Alassaf, R.; Chabani, S.; Ahmed, M.S.; Salam, A.A.; AlKhulaifi, D. Single vs. Multi-Label: The Issues, Challenges and Insights of Contemporary Classification Schemes. Appl. Sci. 2023, 13, 6804. [Google Scholar] [CrossRef]

- Suri, J.S.; Bhagawati, M.; Paul, S.; Protogerou, A.D.; Sfikakis, P.P.; Kitas, G.D.; Khanna, N.N.; Ruzsa, Z.; Sharma, A.M.; Saxena, S.; et al. A Powerful Paradigm for Cardiovascular Risk Stratification Using Multiclass, Multi-Label, and Ensemble-Based Machine Learning Paradigms: A Narrative Review. Diagnostics 2022, 12, 722. [Google Scholar] [CrossRef] [PubMed]

- Kumar, S.; Kumar, N.; Dev, A.; Naorem, S. Movie Genre Classification Using Binary Relevance, Label Powerset, and Machine Learning Classifiers. Multimed. Tools Appl. 2023, 82, 945–968. [Google Scholar] [CrossRef]

- Raza, A.; Rustam, F.; Siddiqui, H.U.R.; Diez, I.d.l.T.; Garcia-Zapirain, B.; Lee, E.; Ashraf, I. Predicting Genetic Disorder and Types of Disorder Using Chain Classifier Approach. Genes 2023, 14, 71. [Google Scholar] [CrossRef]

- Yoo, J.; Jin, Y.; Ko, B.; Kim, M.-S. k-Labelsets Method for Multi-Label ECG Signal Classification Based on SE-ResNet. Appl. Sci. 2021, 11, 7758. [Google Scholar] [CrossRef]

- Rocha, V.F.; Varejão, F.M.; Segatto, M.E.V. Ensemble of Classifier Chains and Decision Templates for Multi-Label Classification. Knowl. Inf. Syst. 2022, 64, 643–663. [Google Scholar] [CrossRef]

- Romero-del-Castillo, J.A.; Mendoza-Hurtado, M.; Ortiz-Boyer, D.; García-Pedrajas, N. Local-Based K Values for Multi-Label K-Nearest Neighbors Rule. Eng. Appl. Artif. Intell. 2022, 116, 105487. [Google Scholar] [CrossRef]

- Chada, N.K.; Hoel, H.; Jasra, A.; Zouraris, G.E. Improved Efficiency of Multilevel Monte Carlo for Stochastic PDE through Strong Pairwise Coupling. J. Sci. Comput. 2022, 93, 62. [Google Scholar] [CrossRef]

- Read, J.; Bifet, A.; Holmes, G.; Pfahringer, B. Scalable and Efficient Multi-Label Classification for Evolving Data Streams. Mach. Learn. 2012, 88, 243–272. [Google Scholar] [CrossRef]

- Nadeem, M.I.; Ahmed, K.; Li, D.; Zheng, Z.; Naheed, H.; Muaad, A.Y.; Alqarafi, A.; Abdel Hameed, H. SHO-CNN: A Metaheuristic Optimization of a Convolutional Neural Network for Multi-Label News Classification. Electronics 2023, 12, 113. [Google Scholar] [CrossRef]

- Shakeel, M.; Nishida, K.; Itoyama, K.; Nakadai, K. 3D Convolution Recurrent Neural Networks for Multi-Label Earthquake Magnitude Classification. Appl. Sci. 2022, 12, 2195. [Google Scholar] [CrossRef]

- Pang, Y.; Qin, X.; Zhang, Z. Specific Relation Attention-Guided Graph Neural Networks for Joint Entity and Relation Extraction in Chinese EMR. Appl. Sci. 2022, 12, 8493. [Google Scholar] [CrossRef]

- Park, M.; Tran, D.Q.; Lee, S.; Park, S. Multilabel Image Classification with Deep Transfer Learning for Decision Support on Wildfire Response. Remote Sens. 2021, 13, 3985. [Google Scholar] [CrossRef]

- Hüllermeier, E.; Fürnkranz, J.; Mencia, E.L. Conformal Rule-Based Multi-Label Classification. Lect. Notes Comput. Sci. 2020, 12325, 290–296. [Google Scholar] [CrossRef]

- Qiu, S.; Wang, M.; Yang, Y.; Yu, G.; Wang, J.; Yan, Z.; Domeniconi, C.; Guo, M. Meta Multi-Instance Multi-Label Learning by Heterogeneous Network Fusion. Inf. Fusion 2023, 94, 272–283. [Google Scholar] [CrossRef]

- Verma, S.; Singh, S.; Majumdar, A. Multi-Label LSTM Autoencoder for Non-Intrusive Appliance Load Monitoring. Electr. Power Syst. Res. 2021, 199, 107414. [Google Scholar] [CrossRef]

- Liu, Z.; Niu, K.; He, Z. ML-CookGAN: Multi-Label Generative Adversarial Network for Food Image Generation. ACM Trans. Multimed. Comput. Commun. Appl. 2023, 19, 85. [Google Scholar] [CrossRef]

- Saha, S.; Saha, M.; Mukherjee, K.; Arabameri, A.; Ngo, P.T.T.; Paul, G.C. Predicting the Deforestation Probability Using the Binary Logistic Regression, Random Forest, Ensemble Rotational Forest, REPTree: A Case Study at the Gumani River Basin, India. Sci. Total Environ. 2020, 730, 139197. [Google Scholar] [CrossRef] [PubMed]

- Ajin, R.S.; Saha, S.; Saha, A.; Biju, A.; Costache, R.; Kuriakose, S.L. Enhancing the Accuracy of the REPTree by Integrating the Hybrid Ensemble Meta-Classifiers for Modelling the Landslide Susceptibility of Idukki District, South-Western India. Photonirvachak 2022, 50, 2245–2265. [Google Scholar] [CrossRef]

- Al-Mukhtar, M.; Srivastava, A.; Khadke, L.; Al-Musawi, T.; Elbeltagi, A. Prediction of Irrigation Water Quality Indices Using Random Committee, Discretization Regression, REPTree, and Additive Regression. Water Resour. Manag. 2023, 38, 343–368. [Google Scholar] [CrossRef]

- Alsultanny, Y. Machine Learning by Data Mining REPTree and M5P for Predicating Novel Information for PM10. Cloud Comput. Data Sci. 2020, 1, 40–48. [Google Scholar] [CrossRef]

- Saha, S.; Sarkar, R.; Roy, J.; Saha, T.K.; Bhardwaj, D.; Acharya, S. Predicting the Landslide Susceptibility Using Ensembles of Bagging with RF and REPTree in Logchina, Bhutan. In Impact of Climate Change, Land Use and Land Cover, and Socio-Economic Dynamics on Landslides; Sarkar, R., Shaw, R., Pradhan, B., Eds.; Springer: Singapore, 2022; pp. 231–247. [Google Scholar] [CrossRef]

- Mandal, K.; Saha, S.; Mandal, S. Predicting the Landslide Susceptibility in Eastern Sikkim Himalayan Region, India Using Boosted Regression Tree and REPTree Machine Learning Techniques. In Applied Geomorphology and Contemporary Issues; Mandal, S., Maiti, R., Nones, M., Beckedahl, H.R., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 683–707. [Google Scholar] [CrossRef]

- Prajapati, J.B. Analysis of Age Sage Classification for Students’ Social Engagement Using REPTree and Random Forest. In Proceedings of the International Conference on Computational Intelligence in Data Science, Virtual Event, 24–26 March 2022; pp. 44–54. [Google Scholar] [CrossRef]

- Elbeltagi, A.; Srivastava, A.; Al-Saeedi, A.H.; Raza, A.; Abd-Elaty, I.; El-Rawy, M. Forecasting Long-Series Daily Reference Evapotranspiration Based on Best Subset Regression and Machine Learning in Egypt. Water 2023, 15, 1149. [Google Scholar] [CrossRef]

- Mrabet, H.; Alhomoud, A.; Jemai, A.; Trentesaux, D. A Secured Industrial Internet-of-Things Architecture Based on Blockchain Technology and Machine Learning for Sensor Access Control Systems in Smart Manufacturing. Appl. Sci. 2022, 12, 4641. [Google Scholar] [CrossRef]

- Olaleye, T.O. Opinion Mining Analytics for Spotting Omicron Fear-Stimuli Using REPTree Classifier and Natural Language Processing. Int. J. Res. Appl. Sci. Eng. Technol. 2022, 10, 995–1005. [Google Scholar] [CrossRef]

- Li, Q.; Wu, Z.; Cai, Y.; Han, Y.; Yung, C.M.; Fu, T.; He, B. Fedtree: A federated learning system for trees. In Proceedings of the 6th Machine Learning and Systems, Miami Beach, FL, USA, 8 June 2023; pp. 1–15. [Google Scholar]

- Zheng, Y.; Xu, S.; Wang, S.; Gao, Y.; Hua, Z. Privet: A Privacy-Preserving Vertical Federated Learning Service for Gradient Boosted Decision Tables. IEEE Trans. Serv. Comput. 2023, 16, 3604–3620. [Google Scholar] [CrossRef]

- Maddock, S.; Cormode, G.; Wang, T.; Maple, C.; Jha, S. Federated Boosted Decision Trees with Differential Privacy. In Proceedings of the CCS, Nagasaki, Japan, 30 May–2 June 2022; pp. 2249–2263. [Google Scholar] [CrossRef]

- Yamamoto, F.; Ozawa, S.; Wang, L. eFL-Boost: Efficient Federated Learning for Gradient Boosting Decision Trees. IEEE Access 2022, 10, 43954–43963. [Google Scholar] [CrossRef]

- Fu, F.; Shao, Y.; Yu, L.; Jiang, J.; Xue, H.; Tao, Y.; Cui, B. Vf2boost: Very fast vertical federated gradient boosting for cross-enterprise learning. In Proceedings of the SIGMOD, Xi’an, China, 20–25 June 2021; pp. 563–576. [Google Scholar] [CrossRef]

- Li, Q.; Wu, Z.; Wen, Z.; He, B. Privacy-preserving gradient boosting decision trees. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 784–791. [Google Scholar] [CrossRef]

- Zhao, L.; Ni, L.; Hu, S.; Chen, Y.; Zhou, P.; Xiao, F.; Wu, L. InPrivate Digging: Enabling Tree-based Distributed Data Mining with Differential Privacy. In Proceedings of the IEEE Conference on Computer Communications, Honolulu, HI, USA, 16–19 April 2018; pp. 2087–2095.

- Li, X.; Hu, Y.; Liu, W.; Feng, H.; Peng, L.; Hong, Y.; Ren, K.; Qin, Z. OpBoost: A vertical federated tree boosting framework based on order-preserving desensitization. arXiv 2022, arXiv:2210.01318.

- Zhao, J.; Zhu, H.; Xu, W.; Wang, F.; Lu, R.; Li, H. SGBoost: An Efficient and Privacy-Preserving Vertical Federated Tree Boosting Framework. IEEE Trans. Inf. Forensics Secur. 2022, 18, 1022–1036.

- Cheng, K.; Fan, T.; Jin, Y.; Liu, Y.; Chen, T.; Papadopoulos, D.; Yang, Q. SecureBoost: A Lossless Federated Learning Framework. IEEE Intell. Syst. 2021, 36, 87–98.

- Chen, W.; Ma, G.; Fan, T.; Kang, Y.; Xu, Q.; Yang, Q. SecureBoost+: A high performance gradient boosting tree framework for large scale vertical federated learning. arXiv 2021, arXiv:2110.10927.

- Le, N.K.; Liu, Y.; Nguyen, Q.M.; Liu, Q.; Liu, F.; Cai, Q.; Hirche, S. FedXGBoost: Privacy-preserving XGBoost for federated learning. arXiv 2021, arXiv:2106.10662.

- Law, A.; Leung, C.; Poddar, R.; Popa, R.A.; Shi, C.; Sima, O.; Zheng, W. Secure collaborative training and inference for XGBoost. In Proceedings of the 2020 Workshop on Privacy-Preserving Machine Learning in Practice, Virtual Event, 9 November 2020; pp. 21–26.

- Wang, Z.; Yang, Y.; Liu, Y.; Liu, X.; Gupta, B.B.; Ma, J. Cloud-based federated boosting for mobile crowdsensing. arXiv 2020, arXiv:2005.05304.

- Zhang, J.; Zhao, X.; Yuan, P. Federated security tree algorithm for user privacy protection. J. Comput. Appl. 2020, 40, 2980.

- Li, Q.; Wen, Z.; He, B. Practical Federated Gradient Boosting Decision Trees. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 4642–4649.

- Yang, M.W.; Song, L.Q.; Xu, J.; Li, C.; Tan, G. The tradeoff between privacy and accuracy in anomaly detection using federated XGBoost. arXiv 2019, arXiv:1907.07157.

- Liu, Y.; Ma, Z.; Liu, X.; Ma, S.; Nepal, S.; Deng, R. Boosting Privately: Privacy-Preserving Federated Extreme Boosting for Mobile Crowdsensing. arXiv 2019, arXiv:1907.10218.

- Yao, H.; Wang, J.; Dai, P.; Bo, L.; Chen, Y. An efficient and robust system for vertically federated random forest. arXiv 2022, arXiv:2201.10761.

- Han, Y.; Du, P.; Yang, K. FedGBF: An efficient vertical federated learning framework via gradient boosting and bagging. arXiv 2022, arXiv:2204.00976.

- Wu, Y.; Cai, S.; Xiao, X.; Chen, G.; Ooi, B.C. Privacy preserving vertical federated learning for tree-based models. arXiv 2020, arXiv:2008.06170.

- Liu, Y.; Liu, Y.; Liu, Z.; Liang, Y.; Meng, C.; Zhang, J.; Zheng, Y. Federated Forest. IEEE Trans. Big Data 2020, 8, 843–854.

- Zhang, K.; Song, X.; Zhang, C.; Yu, S. Challenges and future directions of secure federated learning: A survey. Front. Comput. Sci. 2022, 16, 165817.

- Banabilah, S.; Aloqaily, M.; Alsayed, E.; Malik, N.; Jararweh, Y. Federated learning review: Fundamentals, enabling technologies, and future applications. Inf. Process. Manag. 2022, 59, 103061.

- Blachnik, M.; Sołtysiak, M.; Dąbrowska, D. Predicting Presence of Amphibian Species Using Features Obtained from GIS and Satellite Images. ISPRS Int. J. Geo Inf. 2019, 8, 123.

- Colonna, J.G.; Gama, J.; Nakamura, E.F. A comparison of hierarchical multi-output recognition approaches for anuran classification. Mach. Learn. 2018, 107, 1651–1671.

- Kaggle. HackerEarth ML Challenge: Adopt a Buddy. Available online: https://www.kaggle.com/datasets/mannsingh/hackerearth-ml-challenge-pet-adoption (accessed on 16 March 2024).

- Witten, I.H.; Frank, E.; Hall, M.A. Data Mining: Practical Machine Learning Tools and Techniques, 3rd ed.; Morgan Kaufmann: Cambridge, MA, USA, 2016; pp. 1–664.

- Pan, W. Predicting Presence of Amphibian Species Using Feature Selection. In Proceedings of the 6th IEEE Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 4–6 March 2022; pp. 1823–1826.

| Year | Ref. | FL Type | Dataset | Aggregation Algorithm | ML Algorithm | Evaluation Metric | Contribution |

|---|---|---|---|---|---|---|---|

| 2023 | [52] | Centralized | Air pollutants and meteorological data | FedAvg | LSTM, SSA, and DPLA | MAE, RMSE, R-squared | Cross-domain prediction of air pollutant concentration |

| 2023 | [53] | Decentralized | Air dataset | FedAvg | CNN | Accuracy, precision, recall, F-score, and confusion matrix | Predicting chickpea crops for smart farming |

| 2023 | [54] | Centralized | Crop and soil dataset | Federated learning | AMFSC | Analysis rate, control rate | Agricultural production improvement |

| 2022 | [30] | Centralized | CSE-CICIDS2018, MQTTset, and InSDN | Cyber-physical production system (CPPS)-based aggregation | CNN, recurrent neural networks, and deep neural networks | Accuracy, precision, recall, F-score | Intrusion detection to enhance the security of agricultural IoT infrastructures |

| 2022 | [55] | Centralized | Wind turbines data | FedAvg | MSRAN and deep network | Precision, recall, and F-score | Fault detection in wind turbines |

| 2022 | [56] | Centralized | ToN_IoT | FedAvg and Fed+ | Multinomial logistic regression | Accuracy, precision, recall, F-score, FPR | Intrusion detection for IoT |

| 2022 | [57] | Centralized | SpineSagT2Wdataset3 | Federated contrastive learning optimization (FCLOpt) | Dual attention gates (DAGs) and U-Net | Accuracy | Federated learning-based vertebral body segment framework (FLVBSF) |

| 2022 | [58] | Centralized | N-BaIoT | Mini-batch and multi-epoch aggregation, derived from FedAvg | Multilayer perceptron and autoencoder | Accuracy, F-score | Federated learning for IoT malware detection |

| 2022 | [59] | Centralized | SWAT 2015 | SCADA server-based aggregation | GBDT with Paillier HE | Accuracy | Intrusion detection for IoT prioritizing data confidentiality |

| 2021 | [60] | Decentralized | CWRU | FA-FedAvg | CNN | Accuracy | Bearing fault diagnosis |

[Table with columns Sample, X, and Y; cell contents not recoverable from the source.]

[Table with repeated X and Y column pairs; cell contents not recoverable from the source.]

| ID | Ref. | Dataset Name | #Features | #Instances | #Labels | #Classes | Source | Link (accessed on 16 March 2024) |

|---|---|---|---|---|---|---|---|---|

| 1 | [124] | Amphibians | 23 | 189 | 7 | 2,2,2,2,2,2,2 | UCI | https://archive.ics.uci.edu/dataset/528/amphibians |

| 2 | [125] | Anuran-Calls-(MFCCs) | 22 | 7195 | 3 | 4,8,10 | UCI | https://archive.ics.uci.edu/dataset/406/anuran+calls+mfccs |

| 3 | [126] | HackerEarth-Adopt-A-Buddy | 11 | 18,834 | 2 | 3,4 | Kaggle | https://www.kaggle.com/datasets/mannsingh/hackerearth-ml-challenge-pet-adoption |
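
Because FMLL handles multiple labels through the Binary Relevance strategy, each dataset in the table above is decomposed into one single-label problem per label (7, 3, and 2 problems, respectively), all sharing the same features. The following is a minimal sketch of that decomposition only. The in-memory row layout and the helper name `binary_relevance_split` are assumptions made for illustration and are not taken from the original implementation.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical row layout: (feature values, one value per label).
Row = Tuple[Sequence[float], Sequence[str]]


def binary_relevance_split(
    rows: List[Row], label_names: Sequence[str]
) -> Dict[str, List[Tuple[Sequence[float], str]]]:
    """Return one single-label dataset per label, all sharing the same features."""
    problems: Dict[str, List[Tuple[Sequence[float], str]]] = {
        name: [] for name in label_names
    }
    for features, labels in rows:
        if len(labels) != len(label_names):
            raise ValueError("each row needs exactly one value per label")
        for name, value in zip(label_names, labels):
            problems[name].append((features, value))
    return problems


# Toy rows shaped like the Anuran-Calls data (3 labels); feature values are illustrative.
toy_rows = [
    ([1.0, 0.15], ["Leptodactylidae", "Adenomera", "AdenomeraAndre"]),
    ([0.9, 0.30], ["Hylidae", "Hypsiboas", "HypsiboasCordobae"]),
]
problems = binary_relevance_split(toy_rows, ["Family", "Genus", "Species"])
print({name: len(data) for name, data in problems.items()})
```

Each resulting dataset can then be given to any single-label learner. In the setting described here that learner would be a REPTree per label, which this sketch does not implement.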

| Dataset Attributes | Task | Study Domain | Feature Types | #Instances | #Features | #Views |

|---|---|---|---|---|---|---|

| Multivariate | Classification | Biology | Integer, real, nominal | 189 | 23 | 6457 |

| Feature Name | Min | Max | Mean | Mode | Standard Deviation |

|---|---|---|---|---|---|

| SR | 30 | 500,000 | 9633.2275 | 300 | 46,256.0783 |

| NR | 1 | 12 | 1.5661 | 1 | 1.5444 |

| OR | 25 | 100 | 90.8689 | 100 | 19.0996 |

| No | Attribute | Type | Description |

|---|---|---|---|

| 1 | ID | Integer | Identification number (unused in classification) |

| 2 | MV | Categorical | Motorway (unused in classification) |

| 3 | SR | Numerical | Surface of water reservoir (m²) |

| 4 | NR | Numerical | Number of water reservoirs in habitat (the greater the number of reservoirs, the higher the probability that some of them will be suitable for amphibian breeding) |

| 5 | TR | Categorical | Type of water reservoirs (including reservoirs with natural features, lately formed reservoirs, settling ponds, reservoirs situated near residential areas, technological water reservoirs, etc.) |

| 6 | VR | Categorical | Vegetation presence within the reservoirs (including absence of vegetation, sparse patches at the edges, densely overgrown areas, abundant vegetation within the reservoir, reservoirs entirely overgrown, etc.) |

| 7 | SUR1 | Categorical | Surroundings 1 (the predominant land cover types surrounding the water reservoir) |

| 8 | SUR2 | Categorical | Surroundings 2 (the second most prevalent types of land cover surrounding the water reservoir) |

| 9 | SUR3 | Categorical | Surroundings 3 (the third most predominant types of land cover surrounding the water reservoir) |

| 10 | UR | Categorical | Use of water reservoirs (unused by humans, recreational and scenic use, economic utilization, technological purposes) |

| 11 | FR | Categorical | The presence of fishing (limited or occasional fishing, intensive fishing, breeding reservoirs) |

| 12 | OR | Numerical | Degree of access from reservoir edges to undeveloped areas: no access, limited access, moderate access, extensive access to open space |

| 13 | RR | Ordinal | Minimum distance from the water reservoir to roads categorized as: <50 m, 50–100 m, 100–200 m, 200–500 m, 500–1000 m, >1000 m |

| 14 | BR | Ordinal | Building development, as the minimum distance to buildings: <50 m, 50–100 m, 100–200 m, 200–500 m, 500–1000 m, >1000 m |

| 15 | MR | Categorical | Maintenance status of the reservoir (clean, slightly littered, heavily or very heavily littered) |

| 16 | CR | Categorical | Type of shore (natural or concrete) |

| 17 | Green frogs | Categorical | Presence of green frogs (label 1) |

| 18 | Brown frogs | Categorical | Presence of brown frogs (label 2) |

| 19 | Common toad | Categorical | Presence of common toad (label 3) |

| 20 | Fire-bellied toad | Categorical | Presence of fire-bellied toad (label 4) |

| 21 | Tree frog | Categorical | Presence of tree frog (label 5) |

| 22 | Common newt | Categorical | Presence of common newt (label 6) |

| 23 | Great crested newt | Categorical | Presence of great crested newt (label 7) |

| Dataset Attributes | Task | Study Domain | Feature Type | #Instances | #Features | #Views |

|---|---|---|---|---|---|---|

| Multivariate | Classification, clustering | Biology | Real | 7195 | 22 | 5692 |

| Feature Name | Min | Max | Mean | Mode | Standard Deviation |

|---|---|---|---|---|---|

| MFCCs_1 | −0.2512 | 1.0000 | 0.9899 | 1.0000 | 0.0690 |

| MFCCs_2 | −0.6730 | 1.0000 | 0.3236 | 1.0000 | 0.2187 |

| MFCCs_3 | −0.4360 | 1.0000 | 0.3112 | 1.0000 | 0.2635 |

| MFCCs_4 | −0.4727 | 1.0000 | 0.4460 | 1.0000 | 0.1603 |

| MFCCs_5 | −0.6360 | 0.7522 | 0.1270 | No | 0.1627 |

| MFCCs_6 | −0.4104 | 0.9642 | 0.0979 | No | 0.1204 |

| MFCCs_7 | −0.5390 | 1.0000 | −0.0014 | No | 0.1714 |

| MFCCs_8 | −0.5765 | 0.5518 | −0.0004 | No | 0.1163 |

| MFCCs_9 | −0.5873 | 0.7380 | 0.1282 | No | 0.1790 |

| MFCCs_10 | −0.9523 | 0.5228 | 0.0560 | No | 0.1271 |

| MFCCs_11 | −0.9020 | 0.5230 | −0.1157 | No | 0.1868 |

| MFCCs_12 | −0.7994 | 0.6909 | 0.0434 | No | 0.1560 |

| MFCCs_13 | −0.6441 | 0.9457 | 0.1509 | No | 0.2069 |

| MFCCs_14 | −0.5904 | 0.5757 | −0.0392 | No | 0.1525 |

| MFCCs_15 | −0.7172 | 0.6689 | −0.1017 | No | 0.1876 |

| MFCCs_16 | −0.4987 | 0.6707 | 0.0421 | No | 0.1199 |

| MFCCs_17 | −0.4215 | 0.6812 | 0.0887 | No | 0.1381 |

| MFCCs_18 | −0.7593 | 0.6141 | 0.0078 | No | 0.0847 |

| MFCCs_19 | −0.6807 | 0.5742 | −0.0495 | No | 0.0825 |

| MFCCs_20 | −0.3616 | 0.4678 | −0.0532 | No | 0.0942 |

| MFCCs_21 | −0.4308 | 0.3898 | 0.0373 | No | 0.0795 |

| MFCCs_22 | −0.3793 | 0.4322 | 0.0876 | No | 0.1234 |

| Label | Class | #Instances |
|---|---|---|
| Family | Bufonidae | 68 |
| | Dendrobatidae | 542 |
| | Hylidae | 2165 |
| | Leptodactylidae | 4420 |
| Genus | Adenomera | 4150 |
| | Ameerega | 542 |
| | Dendropsophus | 310 |
| | Hypsiboas | 1593 |
| | Leptodactylus | 270 |
| | Osteocephalus | 114 |
| | Rhinella | 68 |
| | Scinax | 148 |
| Species | AdenomeraAndre | 672 |
| | AdenomeraHylaedactylus | 3478 |
| | Ameeregatrivittata | 542 |
| | HylaMinuta | 310 |
| | HypsiboasCordobae | 1121 |
| | HypsiboasCinerascens | 472 |
| | LeptodactylusFuscus | 270 |
| | OsteocephalusOophagus | 114 |
| | Rhinellagranulosa | 68 |
| | ScinaxRuber | 148 |
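
The class counts above are strongly imbalanced, which matters when reading accuracy figures. As a rough illustration that is not part of the original analysis, the snippet below sums the Family counts and reports the share of the largest class, which is the accuracy a trivial majority-class predictor would reach on that label. The variable names are illustrative.

```python
# Family-level class counts, copied from the table above.
family_counts = {
    "Bufonidae": 68,
    "Dendrobatidae": 542,
    "Hylidae": 2165,
    "Leptodactylidae": 4420,
}

total = sum(family_counts.values())
majority_class = max(family_counts, key=family_counts.get)
majority_share = family_counts[majority_class] / total

print(total, majority_class, f"{majority_share:.1%}")
# 7195 Leptodactylidae 61.4%
```

The total matches the 7195 instances of the Anuran-Calls-(MFCCs) dataset, and the same check can be repeated for the Genus and Species counts.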

| Dataset Attributes | Task | Study Domain | Feature Type | #Instances | #Features | #Views |

|---|---|---|---|---|---|---|

| Multivariate | Classification | Biology | Integer, real, nominal, temporal | 18,834 | 11 | 5605 |

| No. | Attribute | Type | Description |

|---|---|---|---|

| 1 | pet_id | Integer | A unique identifier assigned to each animal up for adoption. |

| 2 | issue_date | Temporal | The date when the pet was officially taken in by the shelter. |

| 3 | listing_date | Temporal | The date and time when the pet became available for adoption at the shelter. |

| 4 | condition | Categorical | The health or physical state of the pet upon arrival at the shelter. |

| 5 | color_type | Categorical | The color pattern or combination exhibited by the pet. |

| 6 | length | Real | The measured length of the pet, typically in meters. |

| 7 | height | Real | The measured height of the pet, typically in centimeters. |

| 8 | X1 | Integer | A value related to the pet. |

| 9 | X2 | Integer | Another value related to the pet. |

| 10 | breed_category | Categorical | The category or classification of the pet’s breed. |

| 11 | pet_category | Categorical | The category or species classification of the pet. |

| Feature Name | Min | Max | Mean | Mode | Standard Deviation |

|---|---|---|---|---|---|

| length | 0.0000 | 1.0000 | 0.5026 | 0.0800 | 0.2887 |

| height | 5.0000 | 50.0000 | 27.4488 | 21.4000 | 13.0198 |

| X1 | 0.0000 | 19.0000 | 5.3696 | 0.0000 | 6.5724 |

| X2 | 0.0000 | 9.0000 | 4.5773 | 1.0000 | 3.5178 |

| Amphibians | Accuracy | Precision | TNR | ROC | PRC | Recall | F-Score |

|---|---|---|---|---|---|---|---|

| Green frogs | 68.78 | 0.694 | 0.688 | 0.715 | 0.682 | 0.688 | 0.689 |

| Brown frogs | 78.31 | 0.613 | 0.783 | 0.503 | 0.665 | 0.783 | 0.688 |

| Common toad | 71.43 | 0.712 | 0.714 | 0.621 | 0.653 | 0.714 | 0.674 |

| Fire-bellied toad | 70.37 | 0.669 | 0.704 | 0.576 | 0.612 | 0.704 | 0.650 |

| Tree frog | 65.61 | 0.639 | 0.655 | 0.638 | 0.627 | 0.656 | 0.631 |

| Common newt | 69.84 | 0.658 | 0.698 | 0.528 | 0.603 | 0.698 | 0.619 |

| Great crested newt | 88.36 | 0.790 | 0.884 | 0.539 | 0.818 | 0.884 | 0.834 |

| Average | 73.24 | 0.682 | 0.732 | 0.589 | 0.666 | 0.732 | 0.684 |
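
The Average row can be reproduced as the unweighted mean of the per-label values, so every label counts equally regardless of its class balance. The following small check covers the accuracy column, using the seven values from the table above. The helper name `unweighted_mean` is illustrative.

```python
# Per-label accuracy (%) for the Amphibians dataset, copied from the table above.
accuracy = {
    "Green frogs": 68.78,
    "Brown frogs": 78.31,
    "Common toad": 71.43,
    "Fire-bellied toad": 70.37,
    "Tree frog": 65.61,
    "Common newt": 69.84,
    "Great crested newt": 88.36,
}


def unweighted_mean(values):
    """Arithmetic mean in which every label contributes equally."""
    values = list(values)
    return sum(values) / len(values)


average = unweighted_mean(accuracy.values())
print(f"{average:.2f}")  # 73.24
```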

| Anuran-Calls-(MFCCs) | Accuracy | Precision | TNR | ROC | PRC | Recall | F-Score |

|---|---|---|---|---|---|---|---|

| Family | 95.75 | 0.957 | 0.980 | 0.978 | 0.964 | 0.957 | 0.957 |

| Genus | 94.19 | 0.941 | 0.991 | 0.979 | 0.943 | 0.942 | 0.941 |

| Species | 93.55 | 0.935 | 0.992 | 0.983 | 0.935 | 0.936 | 0.935 |

| Average | 94.50 | 0.944 | 0.988 | 0.980 | 0.947 | 0.945 | 0.944 |

| HackerEarth-Adopt-A-Buddy | Accuracy | Precision | TNR | ROC | PRC | Recall | F-Score |

|---|---|---|---|---|---|---|---|

| Breed_category | 85.43 | 0.856 | 0.927 | 0.965 | 0.938 | 0.854 | 0.850 |

| Pet_category | 86.80 | 0.869 | 0.928 | 0.946 | 0.928 | 0.868 | 0.865 |

| Average | 86.12 | 0.863 | 0.928 | 0.956 | 0.933 | 0.861 | 0.858 |

| Method | Accuracy |

|---|---|

| Gradient-Boosted Trees (GBT) [124] | 64.18 |

| Random Forest (RF) [124] | 57.54 |

| AdaBoost (ADA) [124] | 60.01 |

| Decision Tree (DT) [124] | 58.37 |

| Partially Monotonic Decision Tree (PMDT) [128] | 71.50 |

| Average | 62.32 |

| Proposed (FMLL with BR and REPTree) | 73.24 |
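
The margin over each baseline is easier to read as a difference in percentage points. The short script below is only an illustrative recomputation from the values in the table above. The variable names are not from the original work.

```python
# Accuracy (%) on the Amphibians dataset, copied from the table above.
baselines = {
    "GBT": 64.18,
    "RF": 57.54,
    "ADA": 60.01,
    "DT": 58.37,
    "PMDT": 71.50,
}
proposed = 73.24

# Mean of the five baselines and the per-baseline gain of the proposed method.
baseline_mean = sum(baselines.values()) / len(baselines)
gains = {name: proposed - acc for name, acc in baselines.items()}

print(f"baseline mean: {baseline_mean:.2f}")
for name, gain in gains.items():
    print(f"{name}: +{gain:.2f} points")
print(f"vs. mean: +{proposed - baseline_mean:.2f} points")
```

On these values the margin over the baseline average is 10.92 points, while the margin over the strongest baseline (PMDT) is 1.74 points.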

| Method | Precision | Recall | F-Score |
|---|---|---|---|
| Species | | | |
| KNN-Flat | 0.690 | 0.720 | 0.700 |
| RBF-SVM-Flat | 0.850 | 0.540 | 0.660 |
| Polynomial-SVM-Flat | 0.710 | 0.760 | 0.740 |
| Tree-Flat | 0.490 | 0.500 | 0.500 |
| KNN-LCPL | 0.691 | 0.719 | 0.705 |
| KNN-Hierarchical-LCPN | 0.690 | 0.720 | 0.700 |
| RBF-SVM-Hierarchical-LCPN | 0.840 | 0.540 | 0.650 |
| Polynomial-SVM-Hierarchical-LCPN | 0.680 | 0.710 | 0.700 |
| Tree-Hierarchical-LCPN | 0.570 | 0.560 | 0.560 |
| KNN-Hierarchical-LCPL | 0.690 | 0.720 | 0.700 |
| RBF-SVM-Hierarchical-LCPL | 0.830 | 0.520 | 0.640 |
| Polynomial-SVM-Hierarchical-LCPL | 0.690 | 0.740 | 0.720 |
| Tree-Hierarchical-LCPL | 0.470 | 0.500 | 0.490 |
| Proposed (FMLL with BR and REPTree) | 0.935 | 0.936 | 0.935 |
| Family | | | |
| KNN-LCPL | 0.713 | 0.820 | 0.763 |
| Proposed (FMLL with BR and REPTree) | 0.957 | 0.957 | 0.957 |
| Genus | | | |
| KNN-LCPL | 0.663 | 0.731 | 0.695 |
| Proposed (FMLL with BR and REPTree) | 0.941 | 0.942 | 0.941 |
| Species + Family + Genus | | | |
| KNN-LCPL | 0.689 | 0.757 | 0.721 |
| Proposed (FMLL with BR and REPTree) | 0.944 | 0.945 | 0.944 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ghasemkhani, B.; Varliklar, O.; Dogan, Y.; Utku, S.; Birant, K.U.; Birant, D. Federated Multi-Label Learning (FMLL): Innovative Method for Classification Tasks in Animal Science. Animals 2024, 14, 2021. https://doi.org/10.3390/ani14142021
