An Efficient Time-Domain End-to-End Single-Channel Bird Sound Separation Network

Abstract

Simple Summary

1. Introduction

- We built a mixed-species bird sound dataset for bird sound separation.

- We were the first to introduce a separation network with good separation performance for mixed-species bird sound.

- We improved the efficiency of the separation network by using a simplified transformer structure.

- We used two performance metrics and five efficiency metrics to compare two speech separation networks with our proposed bird sound separation network on our self-built bird sound dataset.

2. Materials and Methods

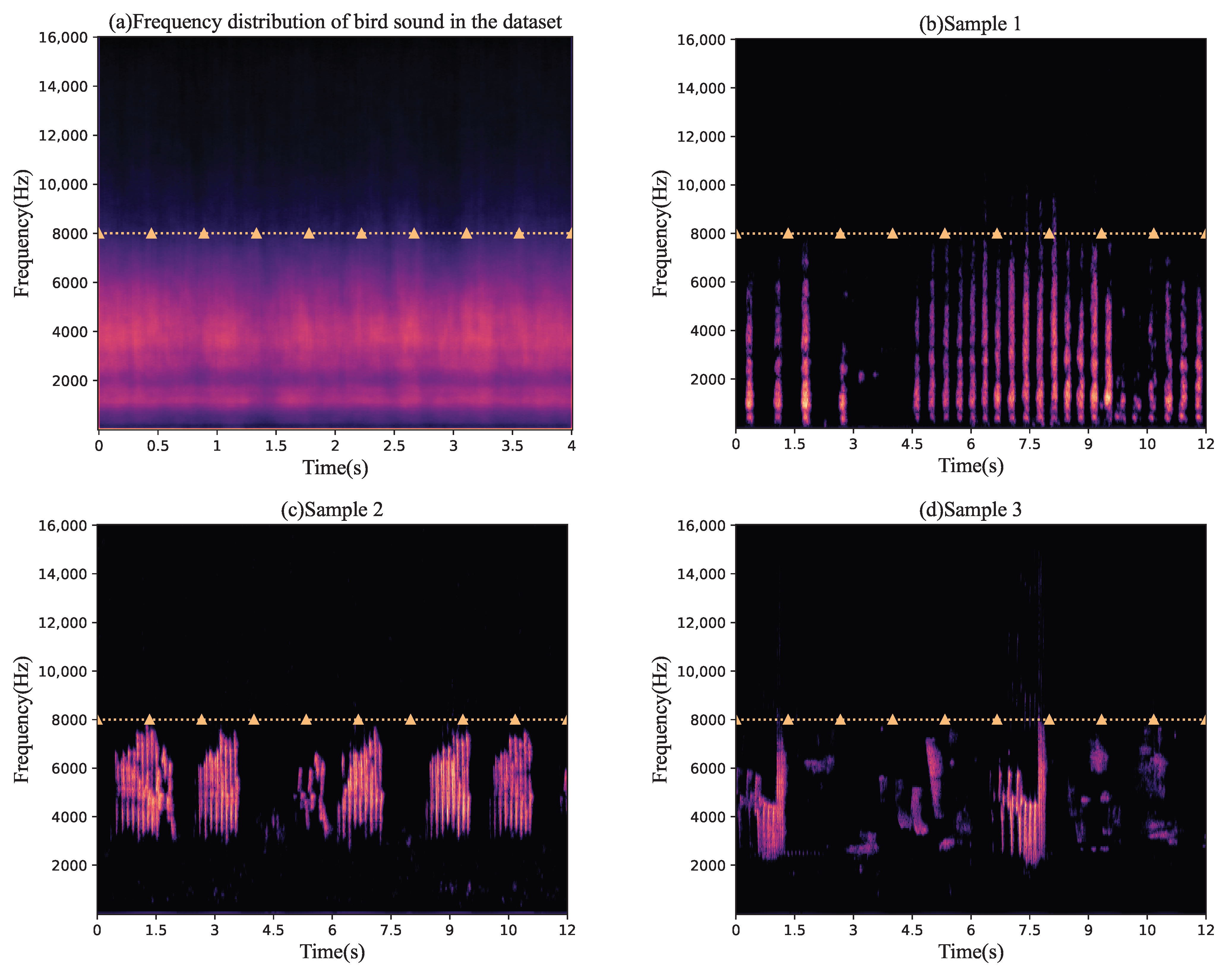

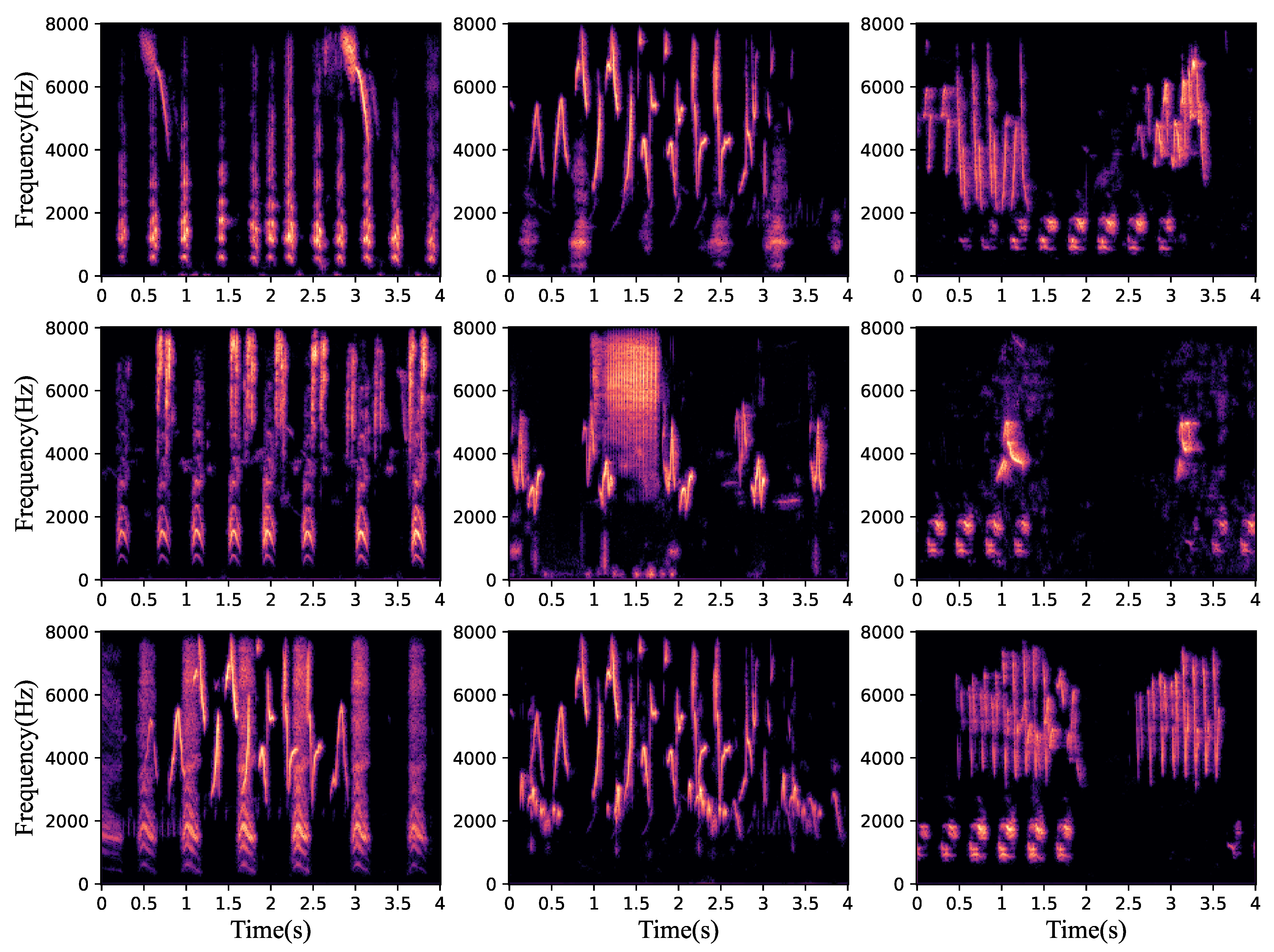

2.1. Data

2.2. Data Preprocessing

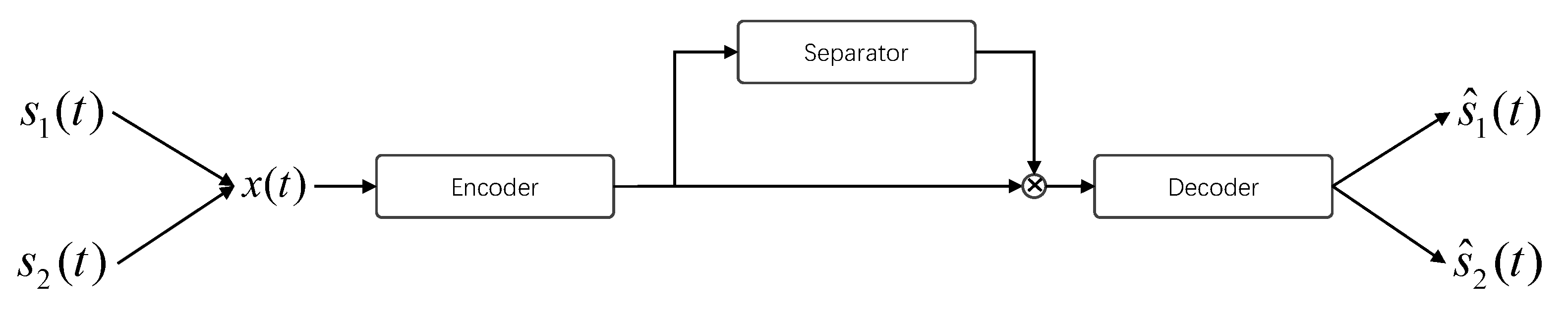

2.3. Methodology

2.3.1. Encoder

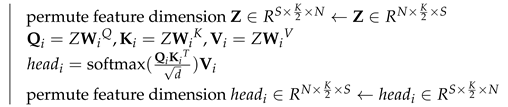

2.3.2. Separator

- 1.

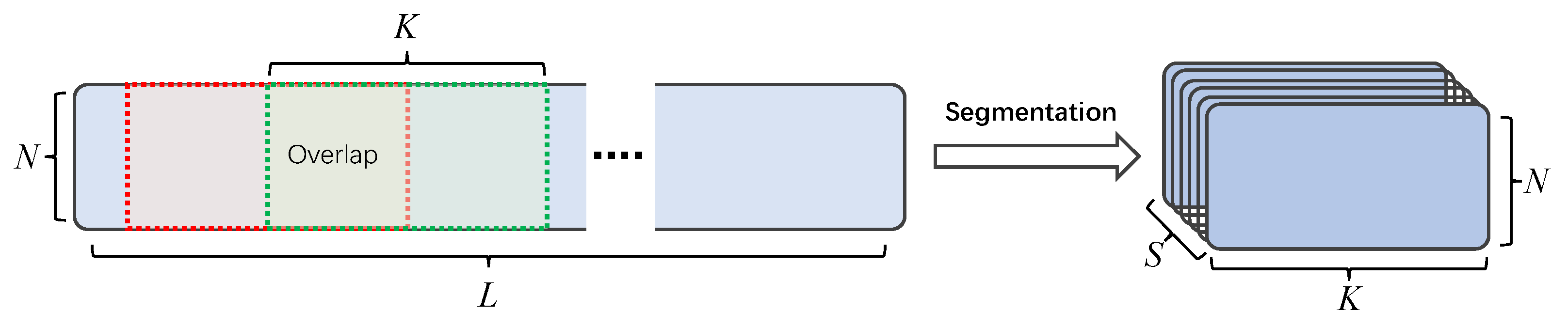

- Segmentation

- 2.

- DPTTNet block

| Algorithm 1: DPTTNet block forward. |

Input: Output: fori = 1 to hdo  end |

- 3.

- Dual-path block processing

- 4.

- Overlap-Add
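The segmentation and overlap-add steps listed above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names `segment` and `overlap_add`, the chunk length and hop size, the zero-padding of the tail, and the averaging of overlapped samples are all assumptions, chosen so that the round trip reproduces the input exactly.

```python
def segment(x, chunk, hop):
    """Split a 1-D sequence into overlapping chunks of length `chunk`.

    Consecutive chunks start `hop` samples apart; the tail is zero-padded
    so that the last chunk is full (hypothetical padding scheme).
    """
    n = len(x)
    if n <= chunk:
        n_chunks = 1
    else:
        # Ceiling division: number of hops needed to cover the remainder.
        n_chunks = 1 + -(-(n - chunk) // hop)
    padded_len = (n_chunks - 1) * hop + chunk
    padded = list(x) + [0.0] * (padded_len - n)
    return [padded[i * hop: i * hop + chunk] for i in range(n_chunks)]


def overlap_add(chunks, hop, length):
    """Merge overlapping chunks back into a sequence of `length` samples.

    Overlapping samples are summed and then divided by the number of
    chunks covering them (an assumption made here for exact inversion).
    """
    chunk = len(chunks[0])
    total = (len(chunks) - 1) * hop + chunk
    acc = [0.0] * total
    count = [0] * total
    for i, c in enumerate(chunks):
        for j, value in enumerate(c):
            acc[i * hop + j] += value
            count[i * hop + j] += 1
    return [a / k for a, k in zip(acc[:length], count[:length])]


if __name__ == "__main__":
    signal = [float(i) for i in range(1, 11)]
    chunks = segment(signal, chunk=4, hop=2)   # 50% overlap
    restored = overlap_add(chunks, hop=2, length=len(signal))
    print(len(chunks), restored == signal)
```

In a dual-path separator, the processing blocks would operate on the chunked representation between these two calls; here the chunks are passed through unchanged to show only the bookkeeping.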

2.3.3. Decoder

2.4. Evaluation Methods

- Number of executed floating point operations (FLOPs);

- Number of trainable parameters;

- Memory allocation required on the device for a single pass;

- Time for completing each process.
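Two of the efficiency metrics listed above, trainable parameter count and time per pass, can be measured along the following lines. This is a hedged sketch using only the standard library: `count_parameters` and `time_single_pass` are hypothetical helpers, the parameter shapes in the usage example are invented for illustration, and the warm-up and repeat counts are assumptions. Timing GPU code would additionally require synchronizing the device before reading the clock.

```python
import math
import statistics
import time


def count_parameters(shapes):
    """Total number of scalar parameters given each tensor's shape."""
    return sum(math.prod(shape) for shape in shapes)


def time_single_pass(fn, *args, warmup=2, repeats=5):
    """Median wall-clock time in seconds of one call to `fn(*args)`.

    A few warm-up calls are discarded so that one-off setup costs
    do not distort the measurement.
    """
    for _ in range(warmup):
        fn(*args)
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


if __name__ == "__main__":
    # Hypothetical layer: a 1-D convolution weight (64, 1, 4) plus a bias (64,).
    print(count_parameters([(64, 1, 4), (64,)]))
    # Stand-in workload; a real measurement would call the network's forward pass.
    print(time_single_pass(sum, list(range(100_000))))
```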

2.5. Experimental Setup

3. Results

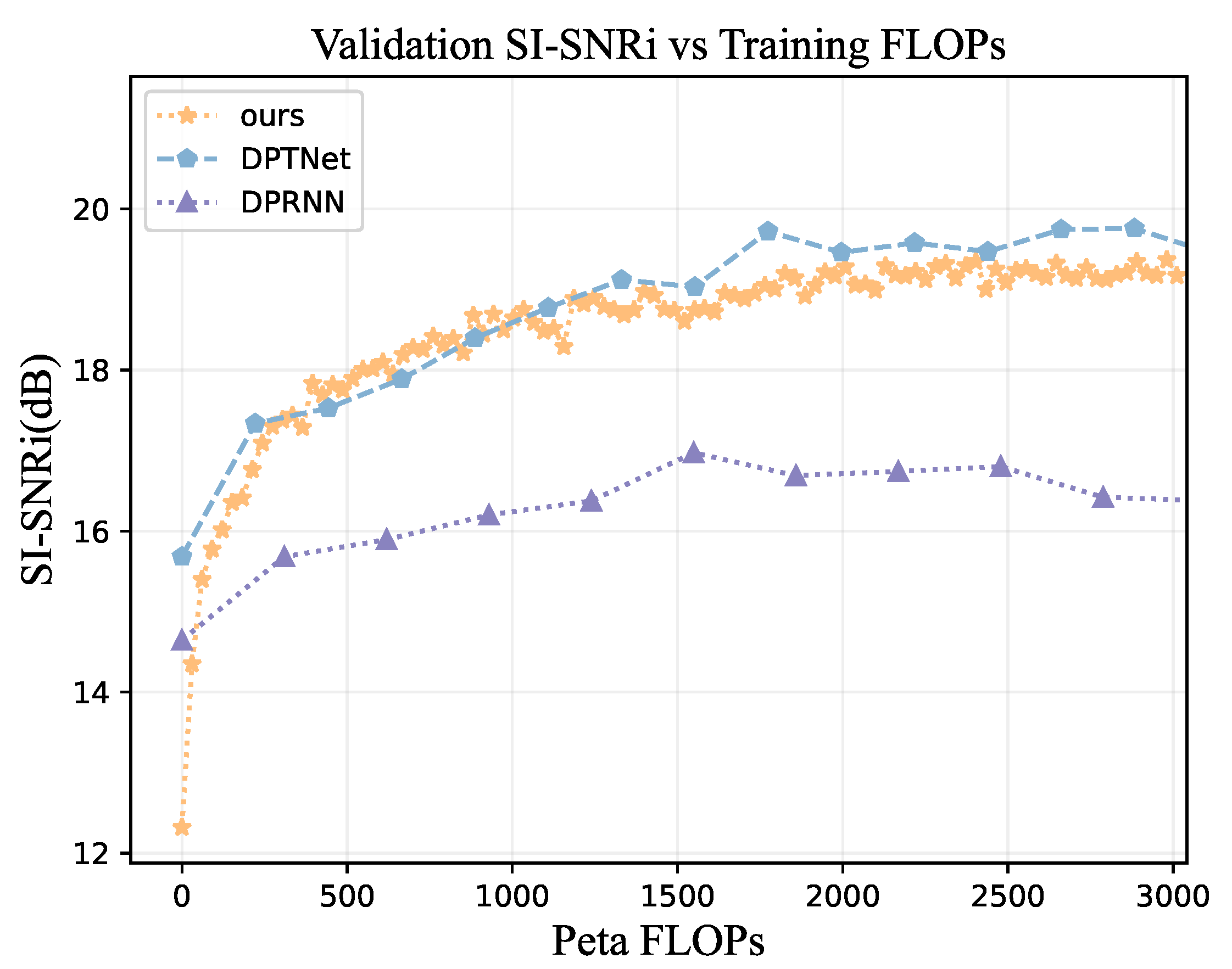

3.1. Separation Performance Analysis

3.2. Computational Resource Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Environment | Setup (Training) | Setup (Testing) |
|---|---|---|
| GPU | NVIDIA GeForce RTX 3080 Ti 12 GB | NVIDIA GeForce RTX 3060 12 GB |
| CPU | Intel(R) Core(TM) i7-11700F | Intel(R) Core(TM) i5-12400F |
| RAM | 32 GB | 16 GB |
| Operating system | Windows 10 64-bit | Windows 10 64-bit |
| Software environment | Python 3.8.10, PyTorch 1.11, CUDA 11.6 | Python 3.8.10, PyTorch 1.11, CUDA 11.6 |

| Network | SI-SNRi (dB) | SDRi (dB) | Params (M) |
|---|---|---|---|
| DPRNN | 19.3 | 20.0 | 2.6 |
| DPTNet | 21.5 | 22.1 | 2.6 |
| Ours | 19.3 | 20.1 | 0.4 |
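The SI-SNRi column reports the improvement in scale-invariant signal-to-noise ratio of the separated estimate over the unprocessed mixture. The sketch below uses the standard SI-SNR definition with zero-mean signals; it is not the authors' evaluation code, and the function names, the `eps` constant, and the synthetic sinusoids in the usage example are assumptions.

```python
import math


def si_snr(estimate, target, eps=1e-8):
    """Scale-invariant SNR in dB between an estimate and a clean target."""
    n = len(target)
    mean_e = sum(estimate) / n
    mean_t = sum(target) / n
    e = [v - mean_e for v in estimate]
    t = [v - mean_t for v in target]
    # Project the estimate onto the target to obtain the scaled target part.
    dot = sum(a * b for a, b in zip(e, t))
    scale = dot / (sum(b * b for b in t) + eps)
    s_target = [scale * b for b in t]
    e_noise = [a - s for a, s in zip(e, s_target)]
    signal_energy = sum(s * s for s in s_target)
    noise_energy = sum(v * v for v in e_noise) + eps
    return 10.0 * math.log10(signal_energy / noise_energy + eps)


def si_snr_improvement(estimate, target, mixture):
    """SI-SNRi: gain of the estimate over the unprocessed mixture, in dB."""
    return si_snr(estimate, target) - si_snr(mixture, target)


if __name__ == "__main__":
    n = 800
    target = [math.sin(2 * math.pi * 5 * i / n) for i in range(n)]
    other = [math.sin(2 * math.pi * 13 * i / n) for i in range(n)]
    mixture = [a + b for a, b in zip(target, other)]
    # Hypothetical separator output: the interferer attenuated to 10%.
    estimate = [a + 0.1 * b for a, b in zip(target, other)]
    print(round(si_snr_improvement(estimate, target, mixture), 2))
```

Because the estimate is projected onto the target before the ratio is taken, rescaling the estimate leaves the score unchanged, which is why the metric suits networks whose output gain is arbitrary.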

| Network | FLOPs (G), 8 kHz | FLOPs (G), 16 kHz | FLOPs (G), 32 kHz | CPU Time (s), 8 kHz | CPU Time (s), 16 kHz | CPU Time (s), 32 kHz | GPU Time (ms), 8 kHz | GPU Time (ms), 16 kHz | GPU Time (ms), 32 kHz |
|---|---|---|---|---|---|---|---|---|---|
| DPRNN | 30.374 | 60.016 | 119.302 | 1.066 | 2.047 | 3.598 | 31.994 | 54.994 | 109.167 |
| DPTNet | 21.742 | 42.961 | 85.402 | 1.106 | 2.256 | 4.407 | 29.839 | 52.001 | 107.494 |
| Ours | 2.943 | 5.893 | 11.651 | 0.141 | 0.316 | 0.673 | 8.167 | 13.507 | 30.506 |
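To read the efficiency figures above as relative savings, each baseline value can be divided by the corresponding value for the proposed network. The short sketch below does this for the 16 kHz columns only; the numbers are copied from the table, while the dictionary layout and the `reduction_factors` helper are illustrative assumptions.

```python
# 16 kHz values copied from the efficiency table above.
RESULTS_16K = {
    "DPRNN": {"flops_g": 60.016, "cpu_s": 2.047, "gpu_ms": 54.994},
    "DPTNet": {"flops_g": 42.961, "cpu_s": 2.256, "gpu_ms": 52.001},
    "Ours": {"flops_g": 5.893, "cpu_s": 0.316, "gpu_ms": 13.507},
}


def reduction_factors(results, reference="Ours"):
    """Ratio of each baseline's cost to the reference network's cost."""
    ref = results[reference]
    return {
        name: {metric: value / ref[metric] for metric, value in metrics.items()}
        for name, metrics in results.items()
        if name != reference
    }


if __name__ == "__main__":
    for name, factors in reduction_factors(RESULTS_16K).items():
        summary = ", ".join(f"{m}: {f:.1f}x" for m, f in factors.items())
        print(f"{name} vs Ours -> {summary}")
```

A factor above 1 means the baseline costs that many times more than the proposed network on the given metric.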

| Model | FLOPs (G) | CPU Time (s) | GPU Time (ms) | F/B GPU Memory (GB) |
|---|---|---|---|---|
| DPRNN | 60.016 | 2.047 | 54.994 | 0.890/1.390 |
| DPTNet | 42.961 | 2.256 | 52.001 | 0.844/1.996 |
| Ours | | | | |

| TCK | TCS | CPU Time (s) | SI-SNRi (dB) | SDRi (dB) |
|---|---|---|---|---|
| 16 | 8 | 0.185 | 16.6 | 17.5 |
| 8 | 4 | 0.245 | 18.8 | 19.6 |
| 4 | 2 | 0.316 | 19.3 | 20.1 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, C.; Chen, Y.; Hao, Z.; Gao, X. An Efficient Time-Domain End-to-End Single-Channel Bird Sound Separation Network. Animals 2022, 12, 3117. https://doi.org/10.3390/ani12223117
